Data Structures and Algorithms (DSA) form the backbone of computer science, providing essential methods for problem-solving and data management. Algorithms outline sequential steps to tackle complex issues, while data structures organize and store data efficiently. Mastering DSA is crucial for building optimized software and solving challenging computational problems.

In this DSA Roadmap, we provide a structured approach to help you learn data structures and algorithms from scratch. Whether you’re a beginner or looking to strengthen your skills, this DSA roadmap offers a comprehensive guide to mastering DSA concepts step by step.

If you’re looking for a structured, guided approach to mastering DSA, Scaler’s DSA Course offers a comprehensive curriculum, expert mentorship, and hands-on practice to help you achieve your goals.

What is Data Structure?

Before diving into the DSA roadmap, let’s briefly understand the essence of data structures and algorithms.

A data structure is like a specialized container used in software to store and organize data efficiently, making it easy to access and manipulate. Think of data structures as different storage boxes, each designed for a unique purpose: arrays function like shelves with numbered slots, while linked lists resemble a chain of connected boxes. Choosing the right data structure is essential for efficient data management and quick retrieval, enabling faster and more effective software development.

What is an Algorithm?

An algorithm is a systematic approach to solving a problem or performing a calculation. Whether implemented in hardware or software, algorithms consist of a precise sequence of instructions that execute specific operations sequentially.

5 Easy Steps for Learning DSA from Scratch

The roadmap is designed to take you from fundamental concepts to practical application, ensuring a comprehensive understanding of DSA principles. Each step builds upon the previous one, gradually increasing in complexity and depth.

Typical Duration: The duration to complete this roadmap may vary depending on factors such as prior knowledge, learning pace, and time commitment. However, a rough estimate suggests an average completion time of 4 to 6 months for dedicated learners.

Here is an overview of the steps that we are going to discuss –

- Pick a programming language to learn.

- Learn time and space complexity analysis.

- Learn the fundamentals of each data structure and algorithm.

- Practice consistently.

- Compete in coding contests and hackathons.

Step 1. Start by Picking Any Programming Language of Your Choice

Mastering a programming language you’re comfortable with is essential for excelling in Data Structures and Algorithms (DSA) interviews. Since every DSA problem is ultimately solved in code, a deep understanding of your chosen programming language is crucial for success.

Think of it as learning a new language: before writing essays, we first learn the alphabet, grammar, and punctuation. Similarly, in programming, you need a strong grasp of the basics before tackling data structures and algorithms. Here’s how to get started:

- Choose a Language: Start with a language you feel comfortable with, such as Python, Java, C, or C++. Familiarity and ease of use are key.

- Master the Fundamentals: Before diving into coding, focus on the foundational elements of the language:

- Syntax and data types

- Variables and operators

- Conditional statements and loops

- Functions and how they work

- Understand Object-Oriented Programming (OOP): OOP concepts like classes, inheritance, and polymorphism are extremely useful for organizing code efficiently.

A solid foundation in these basics will make it much easier to approach DSA concepts confidently and effectively.

Step 2. Learn Time and Space Complexity Analysis

A strong understanding of time and space complexities is essential for anyone preparing for a DSA (Data Structures and Algorithms) interview. These concepts help assess the efficiency of algorithms, which is a frequent topic in interviews. Here’s a breakdown:

- Time Complexity: Measures how long an algorithm takes to execute as the input size grows.

- Space Complexity: Describes the amount of memory an algorithm uses based on input size.

Mastering these concepts is key because interviewers often ask candidates to analyze and optimize algorithms for both time and space efficiency.

To prepare effectively:

- Familiarize yourself with evaluating time and space complexities for common algorithms, such as sorting and searching algorithms.

- Practice identifying the most suitable algorithm for specific problems based on their time and space complexities.

This foundational knowledge will ensure you’re well-equipped to handle complexity-related questions during interviews.

Time Complexity Analysis

- Time complexity describes the relationship between the input size and the time taken by an algorithm to complete.

- Notations:

- Big O notation (O()): An asymptotic upper bound on running time; most often used to describe the worst case.

- Omega notation (Ω()): An asymptotic lower bound on running time; often used to describe the best case.

- Theta notation (Θ()): Both an upper and a lower bound, i.e., a tight bound.

- Little o notation (o()): An upper bound that is not tight.

Space Complexity Analysis

- Space complexity quantifies the amount of memory space required by an algorithm to execute as a function of the input size.

- Notations:

- Big O notation (O()): An asymptotic upper bound on memory usage; most often used to describe the worst case.

- Omega notation (Ω()): An asymptotic lower bound on memory usage; often used to describe the best case.

- Theta notation (Θ()): Both an upper and a lower bound, i.e., a tight bound.

- Little o notation (o()): An upper bound that is not tight.
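To make the trade-off between time and space concrete, here is a minimal Python sketch of two ways to check a list for duplicates. The function names are illustrative choices, not part of any library. The first version uses almost no extra memory but compares every pair; the second spends extra memory on a set to finish in a single pass.

```python
def has_duplicate_quadratic(items):
    """Compare every pair: O(n^2) time, O(1) extra space."""
    n = len(items)
    for i in range(n):
        for j in range(i + 1, n):
            if items[i] == items[j]:
                return True
    return False


def has_duplicate_linear(items):
    """Remember seen values in a set: O(n) average time, O(n) extra space."""
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False
```

Both functions return the same answers; they differ only in how much time and memory they need as the input grows.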

Step 3. Learn the Basics of Data Structure and Algorithms

Gaining a solid grasp of the foundations is crucial for getting good at DSA (Data Structures and Algorithms) interview questions. Complex algorithmic problems require the use of data structures like arrays, linked lists, stacks, queues, trees, and graphs as fundamental building blocks. Optimizing your solutions requires an understanding of the time and space complexity of various operations on these data structures.

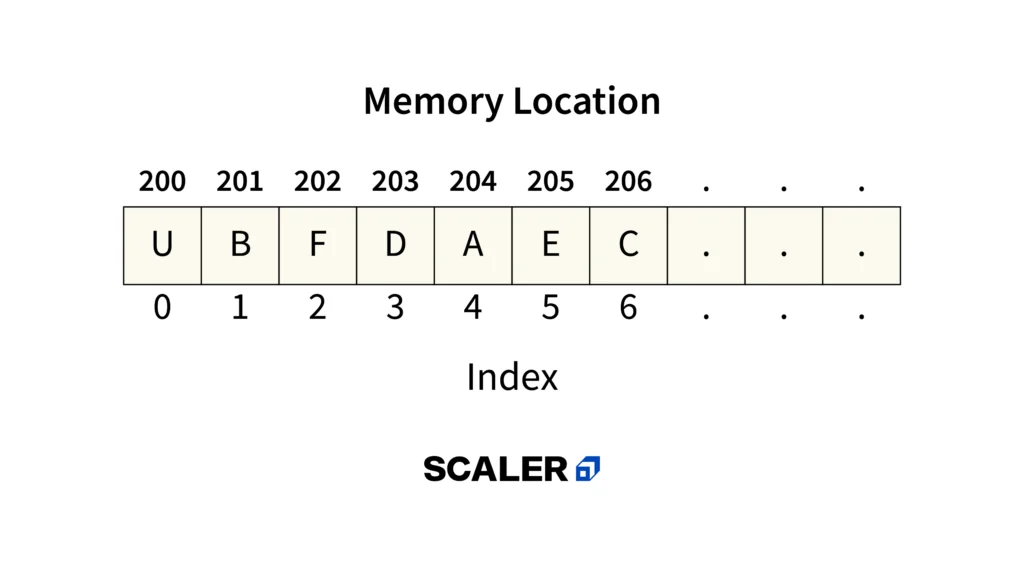

a) Array

The array is the most fundamental data structure and one of the most important. It is a linear data structure: a collection of elements of the same type stored in contiguous memory. Each element has an index, and because the memory is contiguous, any element can be accessed by its index in constant time.

The following are some array-related subjects you should study:

- Rotation of Array: The rotation of an array refers to the circular shifting of its elements; for example, a right circular shift shifts the last element to become the first element and moves every other element one point to the right.

- Rearranging an array: Changing the order of the elements so that they satisfy a given condition, for example moving all negative numbers ahead of the positive ones.

- Range queries: Operations that act on a range of elements in the array, such as finding the sum or the minimum of the elements between two indices.

- Multidimensional arrays are made up of multiple dimensions. The most popular type is the two-dimensional array or matrix.

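- Kadane’s algorithm: Finds the maximum sum of a contiguous subarray in linear time.

- Dutch national flag algorithm: Sorts an array that contains three kinds of values (commonly 0, 1, and 2) in a single pass.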
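The last two topics are short enough to sketch in full. The Python below is a minimal illustration, assuming a non-empty list for Kadane’s algorithm and a list holding only 0, 1, and 2 for the Dutch national flag algorithm; the function names are our own.

```python
def max_subarray_sum(nums):
    """Kadane's algorithm: largest sum of a non-empty contiguous subarray."""
    best = current = nums[0]
    for value in nums[1:]:
        # Either extend the running subarray or start fresh at this value.
        current = max(value, current + value)
        best = max(best, current)
    return best


def dutch_national_flag(nums):
    """Sort a list containing only 0, 1 and 2 in place, in one pass."""
    low, mid, high = 0, 0, len(nums) - 1
    while mid <= high:
        if nums[mid] == 0:
            nums[low], nums[mid] = nums[mid], nums[low]
            low += 1
            mid += 1
        elif nums[mid] == 1:
            mid += 1
        else:
            # Move the 2 to the end; re-check the value swapped into mid.
            nums[mid], nums[high] = nums[high], nums[mid]
            high -= 1
    return nums
```

For example, `max_subarray_sum([-2, 1, -3, 4, -1, 2, 1, -5, 4])` returns 6, the sum of the subarray `[4, -1, 2, 1]`.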

b) String

A string is a sequence of characters and can be thought of as a special kind of array. It has a few distinctive features: in C, for example, a null character marks the end of the string. Strings also support special operations, such as concatenation, which joins two strings into one.

Here are some essential string concepts for you to be aware of:

- Substring and subsequence: A substring is a contiguous section of a string. A subsequence is obtained by deleting zero or more characters from the string without changing the order of the remaining characters.

- Rotation and reversing a string: Rotating a string shifts its characters circularly, so characters pushed off one end reappear at the other. Reversing a string swaps the characters’ positions so that the first becomes the last, the second becomes the second last, and so forth.

- Binary string: A string composed of only two kinds of characters, typically 0 and 1.

- Palindrome: A string that reads the same forward and backward.

- Lexicographical order: Also known as dictionary order; strings are compared character by character using the characters’ code values (for example, ASCII).

- Pattern searching is the process of looking for a specific pattern within a string. It is a string-related advanced topic.
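Two of these ideas, palindromes and subsequences, can be checked with a few lines of Python. This is only a sketch with illustrative function names; for substrings, Python’s built-in `in` operator already does the job.

```python
def is_palindrome(text):
    """Two-pointer check: compare characters moving inward from both ends."""
    left, right = 0, len(text) - 1
    while left < right:
        if text[left] != text[right]:
            return False
        left += 1
        right -= 1
    return True


def is_subsequence(small, big):
    """True if `small` can be formed by deleting characters from `big`."""
    remaining = iter(big)
    # `ch in remaining` advances the iterator, so order is preserved.
    return all(ch in remaining for ch in small)
```

With these, `is_subsequence("ace", "abcde")` is true, while `"ace" in "abcde"` is false because the characters are not contiguous.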

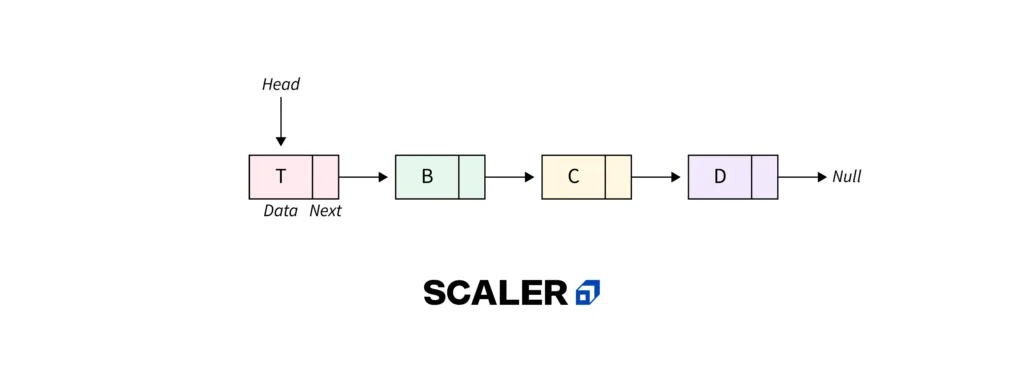

c) Linked Lists

Like the data structures above, the linked list is a linear data structure, but it is laid out differently from an array. Its nodes are not stored in consecutive memory locations. Instead, each node can live anywhere in memory, and the node before it stores a pointer to it. As a result, a node cannot be accessed directly by index; you have to follow the pointers from the head. In return, the linked list is dynamic: its size can change at any time.

Topics that you should learn are:

- Singly Linked List: In this type of linked list, every node only points to the node after it.

- A circular linked list is one in which the final node points back to the list’s starting point.

- Doubly Linked List: Each node contains two pointers: one to the node after it and one to the node before it.
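A singly linked list is small enough to sketch directly. The class and method names below are illustrative assumptions; the sketch shows insertion at the head and in-place reversal, a very common interview exercise.

```python
class Node:
    def __init__(self, value, next_node=None):
        self.value = value
        self.next = next_node


class SinglyLinkedList:
    def __init__(self):
        self.head = None

    def push_front(self, value):
        """Insert at the head in O(1): no elements need to be shifted."""
        self.head = Node(value, self.head)

    def reverse(self):
        """Reverse the list in place by flipping each next pointer."""
        prev, current = None, self.head
        while current is not None:
            following = current.next
            current.next = prev
            prev, current = current, following
        self.head = prev

    def to_list(self):
        """Walk the pointers from the head and collect the values."""
        values, current = [], self.head
        while current is not None:
            values.append(current.value)
            current = current.next
        return values
```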

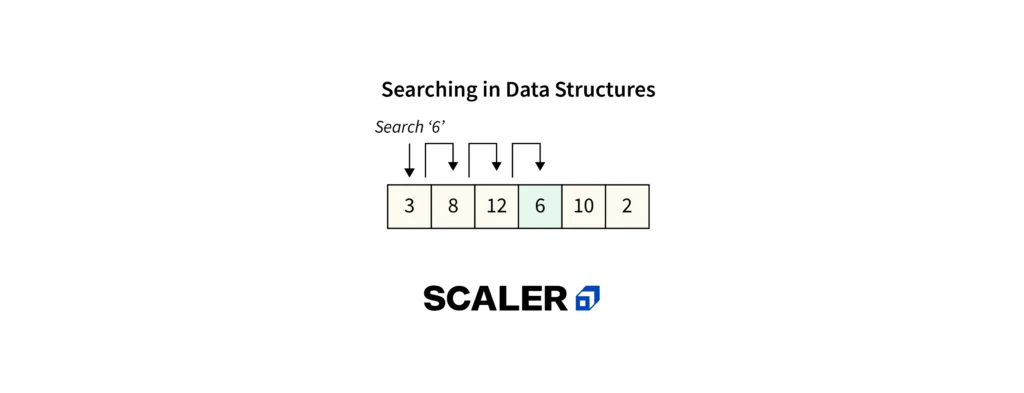

d) Searching Algorithms

To locate a particular element in an array, text, linked list, or other type of data structure, searching algorithms are used.

The most widely used algorithms for searching are:

- Linear Search: This searching algorithm iteratively searches from one end to the other for the element.

- Binary Search: This algorithm works on sorted data. It repeatedly splits the search range into two halves and keeps only the half that can contain the target.

- Ternary Search: Here the sorted array is split into three segments, and the values at the two partition points determine which segment can contain the target.

In addition to these, there exist additional search algorithms such as

- Jump Search

- Interpolation Search

- Exponential Search
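The two most common searches can be sketched as follows. The function names are illustrative, and `binary_search` assumes its input is already sorted in ascending order. Both return the index of the target, or -1 if it is absent.

```python
def linear_search(items, target):
    """Scan from one end to the other: O(n) time."""
    for index, value in enumerate(items):
        if value == target:
            return index
    return -1


def binary_search(sorted_items, target):
    """Halve the search range each step: O(log n) time on sorted input."""
    low, high = 0, len(sorted_items) - 1
    while low <= high:
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            return mid
        if sorted_items[mid] < target:
            low = mid + 1
        else:
            high = mid - 1
    return -1
```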


e) Sorting Algorithms

Sorting is another family of widely used algorithms. We frequently need to arrange data according to a particular requirement, for example ordering an array of homogeneous data in ascending or descending order.

An array or list’s elements can be rearranged using a sorting algorithm based on a comparison operator applied to the elements. The new element order in the corresponding data structure is determined by the comparison operator.

Sorting algorithms come in many different varieties. Several popular algorithms are:

- Bubble Sort

- Selection Sort

- Insertion Sort

- Quick Sort

- Merge Sort

There are additional sorting algorithms as well, and they are useful in various circumstances. Our comprehensive article on sorting algorithms contains more information about them.
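As one small example from the list above, here is a sketch of Insertion Sort in Python. The function name is our own, and the sketch returns a sorted copy rather than sorting in place, purely to keep the example easy to test.

```python
def insertion_sort(items):
    """Return a sorted copy; O(n^2) worst case, fast on nearly sorted input."""
    result = list(items)
    for i in range(1, len(result)):
        key = result[i]
        j = i - 1
        # Shift larger elements one slot right to make room for key.
        while j >= 0 and result[j] > key:
            result[j + 1] = result[j]
            j -= 1
        result[j + 1] = key
    return result
```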

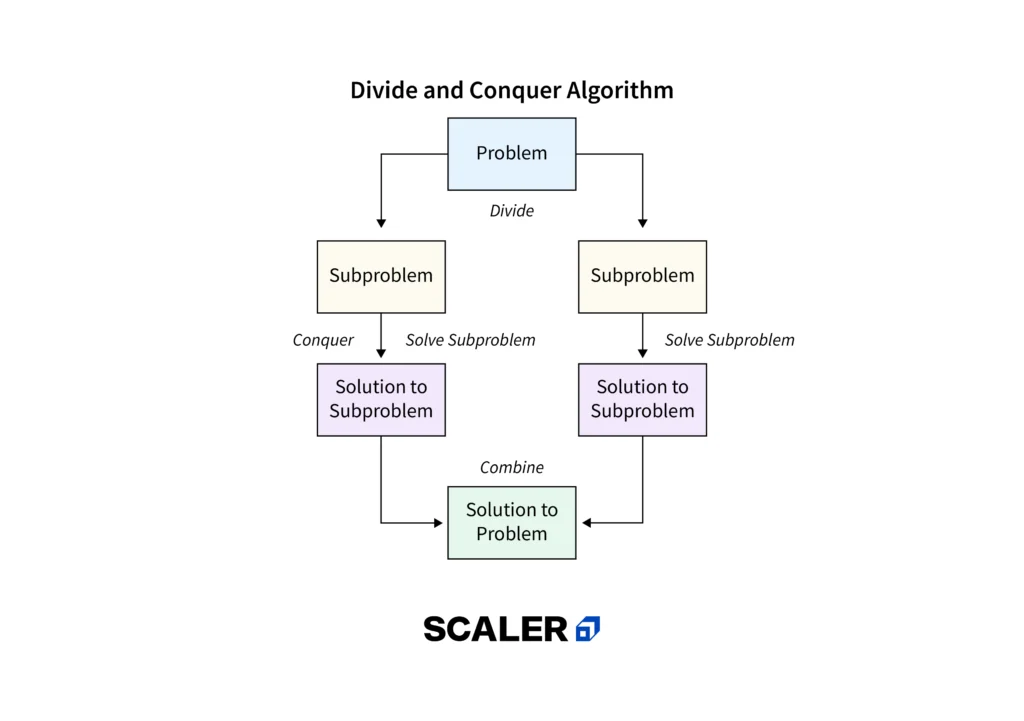

f) Divide and Conquer Algorithm

If you want to pursue a career in programming, you should learn this interesting and important algorithm. As the name implies, it divides the problem into smaller portions, solves each chunk separately, and then combines the solved subtasks once more to solve the main problem.

Divide and Conquer is an algorithmic paradigm. A Divide and Conquer algorithm typically solves a problem in three steps:

- Divide: Split the given problem into smaller subproblems of the same kind.

- Conquer: Solve these subproblems recursively.

- Combine: Merge the subproblem solutions into a solution to the original problem.

This is the technique behind two of the sorting algorithms mentioned earlier, Merge Sort and Quick Sort. For additional information on the method, its applications, and how to solve some thought-provoking problems, please see the dedicated article Divide and Conquer Algorithm.
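Merge Sort shows the three steps clearly. The following is a minimal Python sketch with an illustrative function name, not a tuned implementation: it divides the list in half, sorts each half recursively, and combines the two sorted halves.

```python
def merge_sort(items):
    """Return a sorted copy using divide and conquer: O(n log n) time."""
    if len(items) <= 1:
        return list(items)
    # Divide: split the list in half.
    mid = len(items) // 2
    # Conquer: sort each half recursively.
    left = merge_sort(items[:mid])
    right = merge_sort(items[mid:])
    # Combine: merge the two sorted halves.
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged
```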

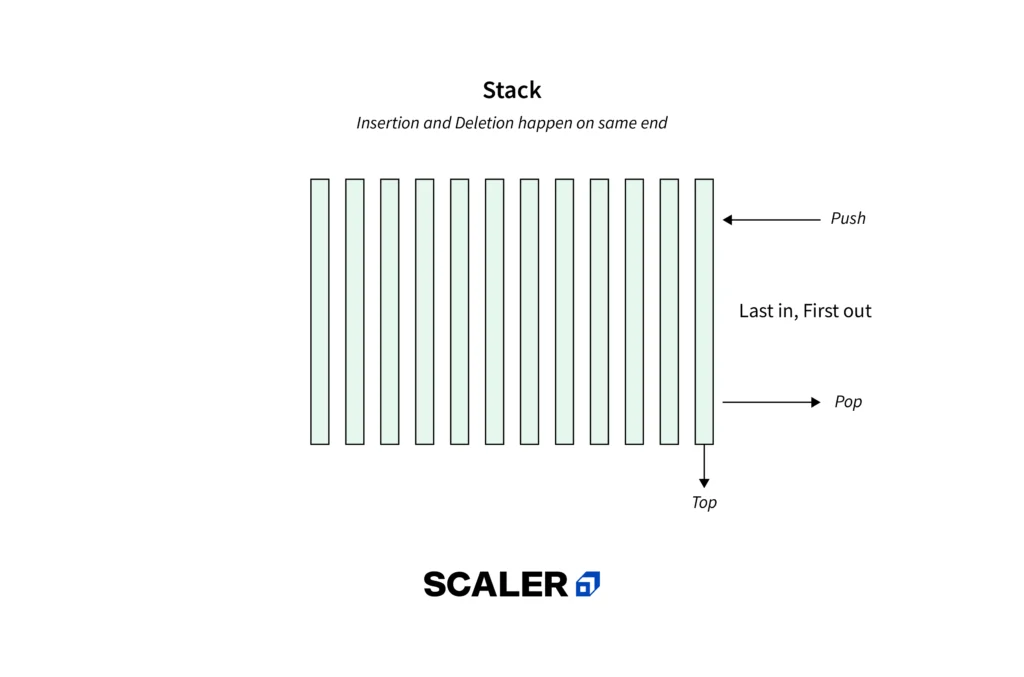

g) Stacks

Now, let’s delve into slightly more advanced data structures, like queues and stacks.

A stack is a linear data structure whose operations follow a fixed order: Last In, First Out (LIFO), which is the same thing as First In, Last Out (FILO). The most recently added element is the first one removed.

The stack is an abstract data type rather than a primitive structure: it is implemented on top of other data structures, such as arrays or linked lists, with insertions and removals restricted to one end.
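In Python, a plain list already behaves like a stack through `append` and `pop`. As a sketch of the LIFO idea in use, the illustrative function below checks whether the brackets in a string are balanced: each closing bracket must match the most recently opened one.

```python
def is_balanced(text):
    """Check bracket matching using a list as a LIFO stack."""
    pairs = {")": "(", "]": "[", "}": "{"}
    stack = []
    for ch in text:
        if ch in pairs.values():
            stack.append(ch)  # push an opening bracket
        elif ch in pairs:
            # A closer must match the most recently pushed opener.
            if not stack or stack.pop() != pairs[ch]:
                return False
    return not stack
```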

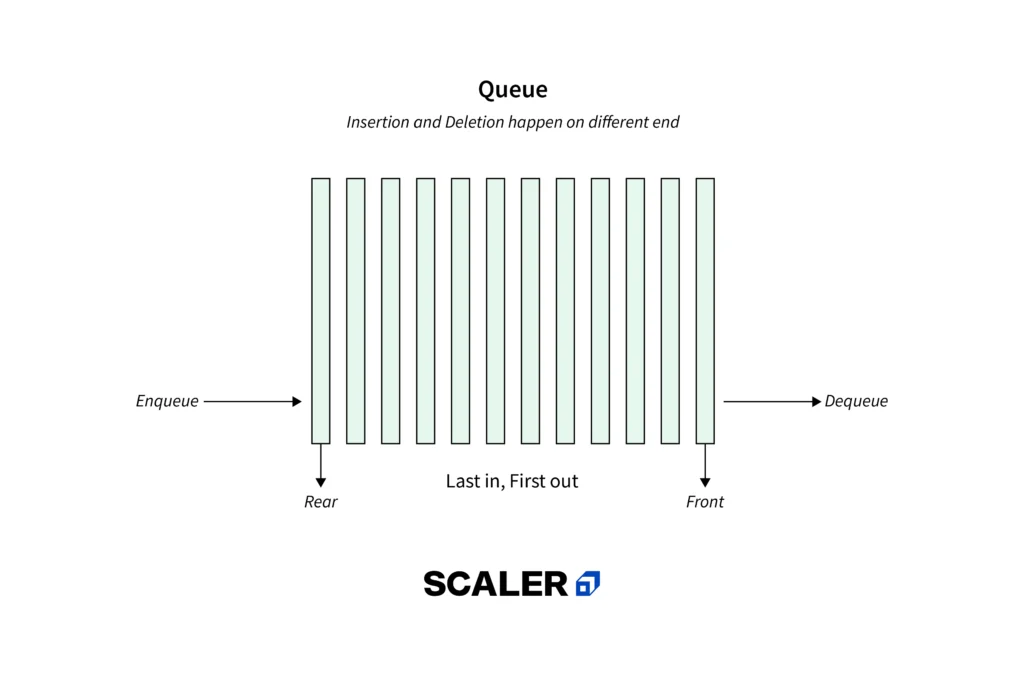

h) Queues

Queue is another data structure that resembles Stack but has different properties.

A queue is a linear structure that conducts each of its operations using the First In First Out (FIFO) principle.

A queue may take several forms, such as

- Circular queue: In a circular queue, the final element is linked to the queue’s initial element.

- Double-ended queue (also referred to as a deque): A unique kind of queue in which actions can be taken from either end is known as a double-ended queue.

- Priority queue: A special kind of queue in which items are ordered by priority. High-priority elements are dequeued before low-priority ones.
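To see how a circular queue reuses its storage, consider this minimal fixed-capacity sketch in Python. The class name and error choices are assumptions made for illustration. For everyday FIFO or double-ended use, the standard library’s `collections.deque` is usually the simpler option.

```python
class CircularQueue:
    """Fixed-capacity FIFO queue whose indices wrap around the array."""

    def __init__(self, capacity):
        self._slots = [None] * capacity
        self._head = 0
        self._size = 0

    def enqueue(self, item):
        if self._size == len(self._slots):
            raise OverflowError("queue is full")
        # The tail position wraps to the front when it passes the end.
        tail = (self._head + self._size) % len(self._slots)
        self._slots[tail] = item
        self._size += 1

    def dequeue(self):
        if self._size == 0:
            raise IndexError("queue is empty")
        item = self._slots[self._head]
        self._slots[self._head] = None
        self._head = (self._head + 1) % len(self._slots)
        self._size -= 1
        return item
```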

When managing large amounts of data, it’s essential to structure it based on its intended use. So far, this DSA roadmap has covered linear data structures; next, we’ll look at non-linear ones, starting with trees.

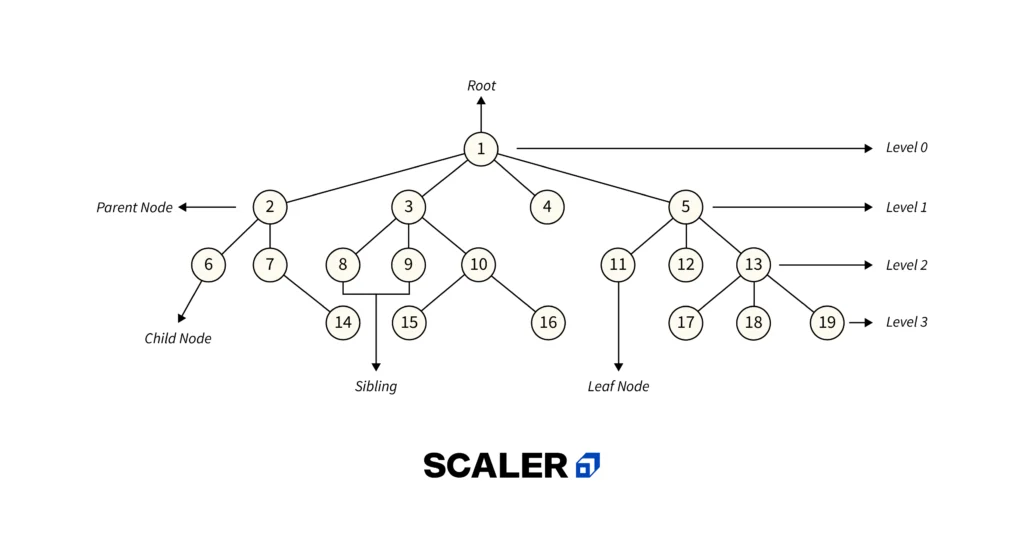

i) Tree

The tree is the first non-linear data structure you should become familiar with.

A tree data structure looks like a real tree turned upside down. It has a root and leaves: the root is the topmost node, and the leaves are the nodes with no children. The defining feature of a tree is that there is exactly one path between any two nodes.

Depending on a tree node’s maximum child count, it can be –

- A binary tree is a unique kind of tree in which there can be no more than two children per node.

- A ternary tree is a unique kind of tree in which a node can have up to three children at a time.

- N-ary tree: A node in this kind of tree can have a maximum of N children.

There are additional classifications based on node configuration. Among them are:

- Complete Binary Tree: Every level is completely filled, except possibly the last, and the nodes in the last level are placed as far left as possible.

- Perfect Binary Tree: Every level is completely filled.

- Binary Search Tree: For every node, all values in its left subtree are smaller than the node’s value, and all values in its right subtree are larger.

- Ternary Search Tree: This structure resembles a binary search tree, except that each node may have up to three children.
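The binary search tree property is easiest to appreciate in code. In this Python sketch (names are illustrative, and duplicate values are simply ignored as a simplifying assumption), an in-order traversal visits the values in sorted order.

```python
class TreeNode:
    def __init__(self, value):
        self.value = value
        self.left = None
        self.right = None


def insert(root, value):
    """Insert value into the BST rooted at root and return the root."""
    if root is None:
        return TreeNode(value)
    if value < root.value:
        root.left = insert(root.left, value)
    elif value > root.value:
        root.right = insert(root.right, value)
    return root  # equal values are ignored


def inorder(root):
    """Left subtree, node, right subtree: yields values in sorted order."""
    if root is None:
        return []
    return inorder(root.left) + [root.value] + inorder(root.right)
```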

j) Priority Queue and Heap

A priority queue is one where each value has a corresponding priority. An element with a higher priority is dequeued before an element with a lower priority when dequeuing occurs.

A heap is a complete binary tree with a special property: each node is greater than or equal to (max-heap) or less than or equal to (min-heap) its children. Consequently, the root node always holds the maximum (max-heap) or minimum (min-heap) value in the entire tree.

Heaps and priority queues are closely related: a priority queue is an abstract data type, and a heap is the data structure most commonly used to implement it.

The data structures roadmap contains three types of problems that are crucial to understanding in order to progress with this subject:

- Implementation-based problems

- Conversion-based problems

- K-based problems
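A typical K-based problem is finding the k largest values in a list. The sketch below uses Python’s standard `heapq` module, which provides a min-heap; the function name is our own. It keeps a heap of at most k elements, so the smallest of the current top k sits at the root and is cheap to replace.

```python
import heapq


def k_largest(nums, k):
    """Return the k largest values in descending order: O(n log k) time."""
    if k <= 0:
        return []
    heap = []  # min-heap holding the k largest values seen so far
    for value in nums:
        if len(heap) < k:
            heapq.heappush(heap, value)
        elif value > heap[0]:
            # Replace the smallest of the current top k.
            heapq.heapreplace(heap, value)
    return sorted(heap, reverse=True)
```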


k) Graph

The graph is a significant non-linear data structure. It is comparable to the Tree data structure, but it can be traversed in any order and lacks a specific root or leaf node.

A graph is a type of non-linear data structure that is made up of a finite number of vertices, also known as nodes, and a set of edges that join two nodes together.

Every edge depicts the relationship between two nodes. Numerous issues in real life are resolved by this data structure. There are different kinds of graphs depending on how the nodes and edges are oriented.

Here are some essential graph concepts to understand:

- Graph types: Graphs can be classified by whether their edges are directed, whether their edges carry weights, and how their nodes are connected.

- BFS and DFS: Breadth-first search and depth-first search are the two basic graph traversal algorithms.

- Cycles in a graph: A cycle is a path that starts and ends at the same node.

- Topological sorting in the graph

- Minimum Spanning tree in graph
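The two traversals can be sketched on a graph stored as an adjacency list. Here the graph is a Python dictionary mapping each node to a list of its neighbors, which is one common representation among several; the function names are illustrative.

```python
from collections import deque


def bfs(graph, start):
    """Breadth-first: visit nodes level by level using a FIFO queue."""
    order, seen, queue = [], {start}, deque([start])
    while queue:
        node = queue.popleft()
        order.append(node)
        for neighbor in graph[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return order


def dfs(graph, start, seen=None):
    """Depth-first: follow one branch as far as possible, then back up."""
    if seen is None:
        seen = set()
    seen.add(start)
    order = [start]
    for neighbor in graph[start]:
        if neighbor not in seen:
            order.extend(dfs(graph, neighbor, seen))
    return order
```

For the graph `{"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": []}`, BFS from "A" visits A, B, C, D, while DFS visits A, B, D, C.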

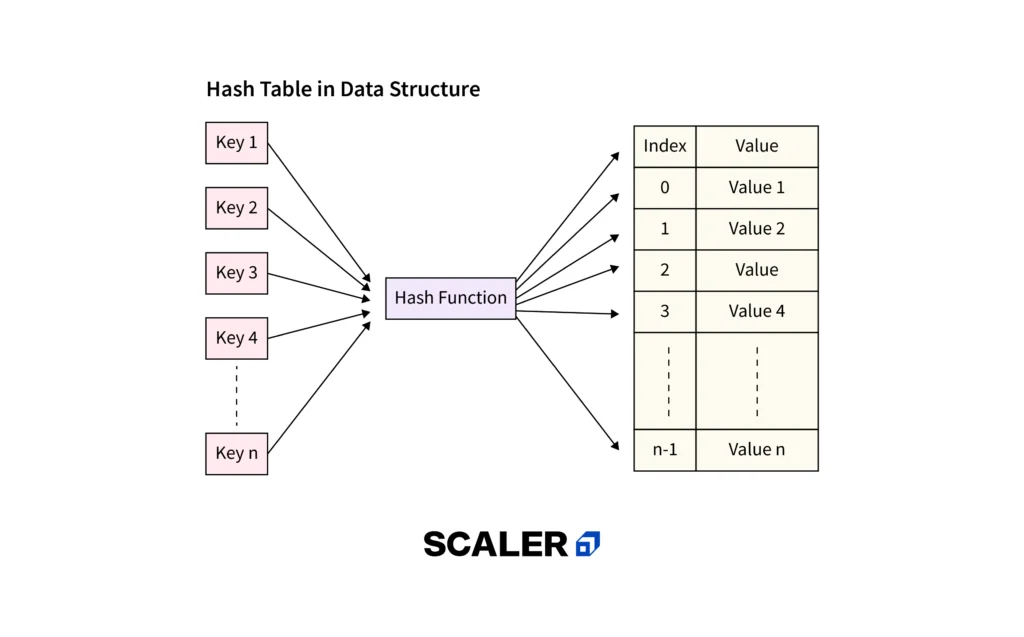

l) Hash Table

Hashing: Hashing is a technique used to map data of arbitrary size to fixed-size values. It is commonly used to index and retrieve items in a database, in computer science, and in cryptography. A hash function is a mathematical function that converts an input value (or ‘key’) into a fixed-size string of bytes, typically a hash code. The output, or hash value, is usually a fixed-size integer that is much smaller than the input data. A good hash function should evenly distribute the keys across the hash table to minimize collisions.

Hash Table: A hash table is a data structure that implements an associative array abstract data type, which can map keys to values. It uses a hash function to compute an index into an array of buckets or slots, from which the desired value can be found. Hash tables offer efficient insertion, deletion, and lookup operations, with an average-case time complexity of O(1) for these operations when collisions are handled properly.

Hash Map: A hash map is an implementation of a hash table, typically used in programming languages. It is a data structure that stores key-value pairs, where each key is unique. Hash maps provide efficient lookup, insertion, and deletion of key-value pairs, making them suitable for a wide range of applications. In many programming languages, hash maps are implemented using arrays and linked lists or other data structures to handle collisions.
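To show how buckets and collision handling fit together, here is a deliberately small hash table sketch that uses separate chaining. The class name and the fixed bucket count are assumptions for illustration; real implementations, such as Python’s built-in `dict`, also resize as they fill up.

```python
class ChainedHashTable:
    """Toy hash table: each bucket is a list of (key, value) pairs."""

    def __init__(self, bucket_count=8):
        self._buckets = [[] for _ in range(bucket_count)]

    def _bucket(self, key):
        # The hash function maps the key to a bucket index.
        return self._buckets[hash(key) % len(self._buckets)]

    def put(self, key, value):
        bucket = self._bucket(key)
        for i, (existing_key, _) in enumerate(bucket):
            if existing_key == key:
                bucket[i] = (key, value)  # overwrite an existing key
                return
        bucket.append((key, value))  # colliding keys share the bucket

    def get(self, key, default=None):
        for existing_key, value in self._bucket(key):
            if existing_key == key:
                return value
        return default
```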

m) Greedy Algorithms

As the name implies, this algorithm assembles the solution piece by piece and selects the subsequent piece that provides the most evident and instant benefit—that is, the most advantageous option at that precise moment. Therefore, Greedy is best suited to solve problems where selecting the locally optimal solution also results in the global solution.

Take the Fractional Knapsack Problem, for instance. Selecting the item with the highest value-to-weight ratio is the most advantageous course of action locally. Because we can take fractions of an item, this strategy also results in a globally optimal solution.
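The greedy strategy for the Fractional Knapsack Problem can be sketched in a few lines of Python. The function name and the `(value, weight)` input format are assumptions made for this example, and every weight is assumed to be positive.

```python
def fractional_knapsack(items, capacity):
    """items: list of (value, weight) pairs. Returns the best total value."""
    total = 0.0
    # Greedy choice: consider the highest value-to-weight ratio first.
    by_ratio = sorted(items, key=lambda item: item[0] / item[1], reverse=True)
    for value, weight in by_ratio:
        if capacity <= 0:
            break
        take = min(weight, capacity)  # take the whole item or a fraction
        total += value * take / weight
        capacity -= take
    return total
```

With items `[(60, 10), (100, 20), (120, 30)]` and a capacity of 50, the sketch takes the first two items whole and two thirds of the third, for a total value of 240.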

Here is how you can get started with the Greedy algorithm with the help of relevant sub-topics:

- Standard greedy algorithms

- Greedy algorithms in graphs

- Greedy Algorithms in Operating Systems

- Greedy algorithms in array

- Approximate greedy algorithms for NP-complete problems

n) Recursion (Very Important)

Recursion is one of the most important techniques in programming: a function solves a problem by calling itself on smaller instances of the same problem until it reaches a base case.

Recursion is so widely used because it forms the basis of many other algorithms, such as:

- Tree traversals

- Graph traversals

- Divide and Conquer Algorithms

- Backtracking algorithms

To get the most out of recursion, study the following topics:

- Recursion

- Recursive Functions

- Tail Recursion

- Towers of Hanoi (TOH)
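Towers of Hanoi is a classic illustration of a base case plus a recursive step. In this Python sketch (the function name and peg labels are illustrative), moving n disks means moving n - 1 disks out of the way, moving the largest disk, and then moving the n - 1 disks back on top.

```python
def hanoi(n, source="A", target="C", spare="B"):
    """Return the list of (from_peg, to_peg) moves for n disks."""
    if n == 0:  # base case: nothing to move
        return []
    return (
        hanoi(n - 1, source, spare, target)    # clear the way
        + [(source, target)]                   # move the largest disk
        + hanoi(n - 1, spare, target, source)  # stack the rest on top
    )
```

Solving for n disks takes 2^n - 1 moves, so `len(hanoi(3))` is 7.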

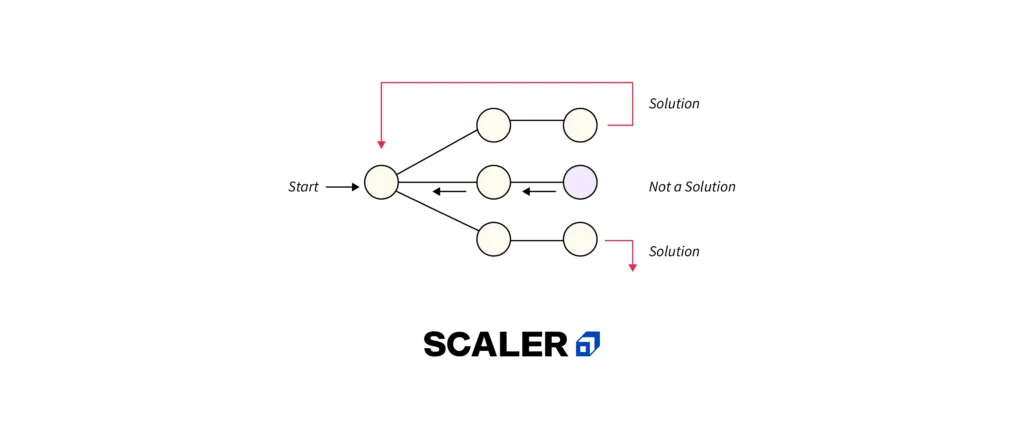

o) Backtracking Algorithm

As mentioned above, backtracking is built on recursion and can undo a choice when it leads to a dead end. If a partial solution fails, the algorithm returns to the last decision point and tries a different option. In effect, it explores the possible options systematically until it finds one that works.

Backtracking is an algorithmic method for recursively solving problems by attempting to construct a solution piece by piece and eliminating any solutions that, at any given time, are unable to satisfy the problem’s constraints.

Some important and most common problems of backtracking algorithms, that you must solve before moving ahead, are

- Knight’s tour problem

- Rat in a maze

- N-Queen problem

- Subset sum problem

- m-coloring problem

- Hamiltonian cycle

- Sudoku
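The N-Queen problem shows the choose, explore, and undo pattern well. The Python sketch below is one common way to write it, with illustrative names: it places one queen per row and backtracks whenever a column or diagonal is already attacked.

```python
def solve_n_queens(n):
    """Return every solution as a list of column indices, one per row."""
    solutions = []
    placement = []
    cols, diag_down, diag_up = set(), set(), set()

    def place(row):
        if row == n:
            solutions.append(list(placement))
            return
        for col in range(n):
            if col in cols or (row - col) in diag_down or (row + col) in diag_up:
                continue  # square is attacked: prune this branch
            # Choose
            placement.append(col)
            cols.add(col)
            diag_down.add(row - col)
            diag_up.add(row + col)
            # Explore
            place(row + 1)
            # Undo (backtrack)
            placement.pop()
            cols.remove(col)
            diag_down.remove(row - col)
            diag_up.remove(row + col)

    place(0)
    return solutions
```

For a 4 x 4 board, this finds the two solutions `[1, 3, 0, 2]` and `[2, 0, 3, 1]`.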

p) Advanced Graph Algorithms

Advanced Graph Algorithms:

- Shortest Path Algorithms: These algorithms find the shortest path between vertices in a weighted graph. Examples include Dijkstra’s algorithm for single-source shortest paths with non-negative edge weights and the Floyd-Warshall algorithm for all-pairs shortest paths.

- Minimum Spanning Tree (MST): MST algorithms find a subset of edges that connect all vertices in a graph with the minimum total edge weight. Prim’s algorithm and Kruskal’s algorithm are commonly used to find MSTs.
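As a sketch of a shortest-path algorithm, here is Dijkstra’s algorithm in Python using the standard `heapq` module as the priority queue. The graph format (a dictionary mapping each node to a list of `(neighbor, weight)` pairs) and the function name are assumptions for this example, and all weights are assumed to be non-negative.

```python
import heapq


def dijkstra(graph, source):
    """Return a dict of shortest distances from source to reachable nodes."""
    dist = {source: 0}
    heap = [(0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue  # stale entry: a shorter path was already found
        for neighbor, weight in graph.get(node, []):
            candidate = d + weight
            if candidate < dist.get(neighbor, float("inf")):
                dist[neighbor] = candidate
                heapq.heappush(heap, (candidate, neighbor))
    return dist
```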

Self-Balancing Trees:

- Definition: Self-balancing trees are search trees that automatically rebalance themselves during insertions and deletions to keep operations efficient.

- Common Types: Some common types include AVL trees, red-black trees, and B-trees.

- Balancing Criteria: These trees keep the height difference between left and right subtrees (or another balancing criterion) small, so that the height of the tree stays O(log n) and search, insert, and delete operations remain efficient.

q) Dynamic Programming

Dynamic programming is another important type of algorithm. The primary use of dynamic programming is as an improvement over simple recursion. Dynamic programming can be used to optimize recursive solutions that involve repeated calls for the same inputs.

The primary idea behind the Dynamic Programming algorithm is to reduce time complexity by avoiding repeating calculations of the same subtask by using the previously calculated result.

To learn more about dynamic programming and practice some interesting problems related to it, study the following topics:

- Tabulation vs Memoization

- Optimal Substructure Property

- Overlapping Subproblems Property

- How to solve a Dynamic Programming Problem?

- Bitmasking and Dynamic Programming

- Digit DP
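The difference between memoization (top-down) and tabulation (bottom-up) is easiest to see on Fibonacci numbers, where plain recursion recomputes the same subproblems over and over. This is a minimal Python sketch with illustrative function names; `functools.lru_cache` is the standard-library decorator used for the cache.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def fib_memo(n):
    """Top-down: recurse, but cache each result so it is computed once."""
    if n < 2:
        return n
    return fib_memo(n - 1) + fib_memo(n - 2)


def fib_tab(n):
    """Bottom-up: build from the smallest subproblems, O(1) extra space."""
    previous, current = 0, 1
    for _ in range(n):
        previous, current = current, previous + current
    return previous
```

Both versions run in O(n) time, compared with exponential time for the uncached recursive version.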

r) Other Basic Algorithms

String Algorithms: String algorithms deal with manipulating, searching, and analyzing strings efficiently. Common string algorithms include substring search (e.g., Knuth-Morris-Pratt algorithm, Rabin-Karp algorithm), string matching (e.g., Boyer-Moore algorithm), and string manipulation (e.g., string reversal, string sorting).

Prime Numbers – Sieve of Eratosthenes: The Sieve of Eratosthenes is an efficient algorithm for finding all prime numbers up to a specified integer n. It works by iteratively marking the multiples of each prime number starting from 2, and the unmarked numbers remaining after the process are prime.

Primality Test: Primality tests are algorithms used to determine whether a given number is prime or composite. There are various primality tests, including trial division, Miller-Rabin test, and AKS primality test.

Euclidean Algorithm:

- The Euclidean algorithm is used to find the greatest common divisor (GCD) of two integers efficiently. It works by repeatedly applying the principle that the GCD of two numbers is the same as the GCD of one of the numbers and the remainder of their division.

- The Euclidean algorithm appears in many mathematical computations, such as reducing fractions to lowest terms and, in its extended form, computing modular inverses.
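Two of these algorithms fit in a handful of lines each. The Python below is a sketch with illustrative function names; in practice, Python’s standard library already offers `math.gcd`.

```python
def sieve(n):
    """Sieve of Eratosthenes: all primes up to and including n."""
    if n < 2:
        return []
    is_prime = [True] * (n + 1)
    is_prime[0] = is_prime[1] = False
    p = 2
    while p * p <= n:
        if is_prime[p]:
            # Smaller multiples of p were marked by smaller primes.
            for multiple in range(p * p, n + 1, p):
                is_prime[multiple] = False
        p += 1
    return [i for i, prime in enumerate(is_prime) if prime]


def gcd(a, b):
    """Euclidean algorithm: gcd(a, b) equals gcd(b, a mod b)."""
    while b:
        a, b = b, a % b
    return a
```

For example, `sieve(30)` returns `[2, 3, 5, 7, 11, 13, 17, 19, 23, 29]` and `gcd(48, 18)` returns 6.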

Step 4. Continuous Learning and Keep Practicing!

Practice a lot, a lot, and more!

Practice is the key to mastering any skill. During your preparation, you should incorporate practicing DSA questions into your daily routine. The problem-of-the-day feature on most DSA websites can assist you in staying consistent.

You can use online platforms like these for continuous learning and practice:

- Scaler / InterviewBit

- LeetCode

- HackerRank

Remember:

- Focus on Consistency: Dedicate a regular amount of time each day or week to practice. Consistency is key to solidifying your skills.

- Challenge Yourself: Don’t shy away from problems that seem difficult. Stepping outside your comfort zone helps you learn and grow.

- Track Your Progress: Monitor your progress by recording your performance or completed challenges. This helps stay motivated and see your improvement over time.

By leveraging these resources and practicing consistently, you’ll transform yourself into a confident and well-rounded DSA expert, ready to tackle any coding challenge that comes your way!

Step 5. Participate and Compete in Coding Contests and Hackathons

Participating in coding competitions is a fantastic way to accelerate your DSA (Data Structures and Algorithms) development. These contests simulate real-world coding scenarios, pushing you to:

- Think Fast: Online tests often have strict time limits, forcing you to develop efficient problem-solving skills and code quickly.

- Practice Under Pressure: The competitive environment helps you learn to stay calm and focused when tackling challenges on a deadline.

- Explore Diverse Problems: Competitions offer a wide range of problems, exposing you to new concepts and techniques you can integrate into your skillset.

a) Participate in Coding Competitions

Scaler Contest: Scaler Academy hosts regular coding contests to help developers improve their skills and compete with their peers. These contests cover a range of topics and difficulty levels, offering participants a chance to showcase their problem-solving abilities. Joining these contests can be a great way to stay sharp and learn from others in the coding community.

HackerRank: HackerRank hosts various coding challenges and competitions across different domains such as algorithms, data structures, artificial intelligence, and more. They also partner with companies for hiring challenges.

Codeforces: Codeforces is a competitive programming platform that hosts regular contests and competitions. It covers a wide range of topics including algorithms, data structures, mathematics, and more.

LeetCode: LeetCode offers a wide range of coding challenges and contests that cover algorithms, data structures, databases, shells, and more. It’s also widely used for technical interview preparation.

TopCoder: TopCoder is one of the oldest competitive programming platforms that hosts single-round matches, marathon matches, and other contests. It covers various algorithms, data structures, and problem-solving techniques.

Google Code Jam: Google Code Jam was an annual coding competition hosted by Google. It featured multiple rounds of algorithmic puzzles and challenges, culminating in a final onsite competition. Google discontinued the competition in 2023.

Meta Hacker Cup: The Meta Hacker Cup (formerly the Facebook Hacker Cup) is an annual competition hosted by Meta. It includes multiple rounds of algorithmic challenges, with the top contestants advancing to a final round.

AtCoder: AtCoder is a Japanese competitive programming platform that hosts regular contests and educational programming challenges. It offers problems of varying difficulty levels.

CodeChef: CodeChef is a competitive programming platform that hosts monthly coding contests, long challenges, and other programming competitions. It covers a wide range of topics including algorithms, data structures, and mathematics.

HackerEarth: HackerEarth hosts coding challenges, hackathons, and hiring challenges for programmers and developers. It offers challenges in various domains including algorithms, data structures, machine learning, and more.

b) Contribute to Open Source Projects

Contributing to open-source projects involves collaborating on software development with a global community of developers. It offers opportunities to gain practical experience, build a portfolio, and contribute to projects that benefit others.

- Skill Enhancement: Contribute to diverse projects, improving coding skills, and gaining exposure to new technologies and coding practices.

- Portfolio Building: Showcase contributions on platforms like GitHub, enhancing credibility and attractiveness to potential employers.

- Community Engagement: Collaborate with developers worldwide, gaining feedback, mentorship, and networking opportunities.

- Social Impact: Contribute to projects that address real-world problems, making a positive impact on society.

- Learning from Peers: Engage with experienced developers, learning from their expertise and gaining insights into industry best practices.

c) Engage with the DSA Community

- Participating in online forums like Stack Overflow or Reddit’s r/learnprogramming to seek help, share knowledge, and discuss DSA topics.

- Contributing to open-source DSA repositories on platforms like GitHub, collaborating with developers globally, and honing problem-solving skills.

- Joining DSA-focused communities on social media platforms such as LinkedIn or Discord to connect with like-minded individuals, share resources, and stay updated on industry trends.

Expert Advice for Improving Your Learning Journey

Here are some cool tips to make learning even better:

- Practice, Practice, Practice! The more problems you solve, the better you’ll understand how things work. Think of it like training for a coding marathon!

- Don’t Just Read, Do! Learning is more than just memorizing. Play around with the concepts, ask questions, and try to understand why things work the way they do.

- Break Down Big Problems: Feeling overwhelmed? Just like a giant pizza, cut big problems into smaller slices that are easier to handle. This makes solving them less scary!

- Find Your Learning Style: Do you learn best by watching videos, reading tutorials, or diving straight into code? Explore different resources and find what works best for you. Maybe you’re a visual learner who thrives with diagrams, or perhaps you’re an auditory learner who benefits from video explanations.

- Explain Concepts to a Friend: Teaching is a fantastic way to solidify your understanding. Explain a concept you learned to a friend (real or imaginary) and see if they can grasp it. If not, it’s time to revisit the material.

- Celebrate Your Wins (Big and Small!): Acknowledge your progress! Completing a tough challenge or finally grasping a complex concept deserves a celebratory dance (or your favorite snack!).

Remember, the DSA journey is yours. Personalize your learning by incorporating activities you enjoy, catering to your learning style, and celebrating your achievements along the way. This will make the process more engaging and help you reach your coding goals!

Conclusion

The significance of data structures was covered in this article, after which we outlined a topic-by-topic data structures roadmap with a summary of the key ideas from each topic. Gaining a thorough understanding of these ideas will improve your readiness for interviews, where one of the most crucial subjects is data structures.

Join Scaler’s DSA course to learn more about Data Structures and Algorithms. With Scaler’s DSA course, you’re not just starting a learning journey—you’re diving into an exciting experience designed to help both beginners and experienced coders. Our easy-to-follow curriculum ensures you understand every concept, guiding you step-by-step.

Join us and become a coding expert! With Scaler, you’ll master algorithms in no time!


FAQs About the DSA Roadmap

What should I learn first: data structures or algorithms?

Algorithms and data structures are interdependent: an algorithm operates on data held in a suitable data structure, and a data structure is only as useful as the algorithms that work on it. If you have to pick a starting point, begin with the basic data structures.

However, you shouldn’t delve too far into data structures without also understanding algorithms. Since both are essential for optimal outcomes, it is best to study them concurrently.

Is DSA hard for beginners?

DSA can be challenging for beginners due to its abstract nature and the need for logical thinking, but consistent practice and patience can lead to mastery.

Can I master DSA in 3 months?

In general, learning Data Structures and Algorithms would take three to four months; however, keep in mind that this is a subject that takes time to grasp. Individual differences exist regarding the amount of time required.

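What mathematics topics must one learn to master algorithms and data structures?

Before the mathematics, it is recommended that you learn at least one programming language, such as C++, Java, or Python, along with the fundamentals of time and space complexity analysis. On the mathematics side, the topics that appear in this roadmap are a good starting point: logarithms and growth rates for complexity analysis, and basic number theory such as prime numbers and the greatest common divisor.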

What should be the proper order of topics to learn Data Structure and Algorithms for beginners having a basic knowledge of C and C++?

For beginners with basic C and C++ knowledge, a structured order to learn Data Structures and Algorithms could be:

- Basics of C/C++

- Introduction to Data Structures

- Fundamental Algorithms

- Advanced Data Structures

- Advanced Algorithms

- Problem-Solving

Should I learn algorithms and data structures with Python, Java, C, or C++?

For beginners, Python is often recommended due to its simplicity and readability, making it easier to focus on learning concepts rather than language intricacies. However, Java, C, and C++ are also commonly used for learning algorithms and data structures, and any of them is a reasonable choice depending on personal preference and intended application.

How long does it take to complete data structures and algorithms?

The time required to learn data structures and algorithms depends on factors like prior programming experience, study pace, and daily practice hours. For most beginners, achieving a solid understanding can take a few months to a year. Consistent practice and dedication are essential for mastery.