The quality of data is crucial in the field of machine learning since it serves as the basis for the models that are created. Data cleaning, also known as data cleansing or data scrubbing, is the process of identifying and rectifying errors, inconsistencies, and inaccuracies within a dataset. It’s a crucial step that often goes underappreciated but plays a pivotal role in determining the success of any machine learning project.

If you’re eager to master the art of data cleaning and unlock the full potential of your machine learning models, Scaler’s Machine Learning Course delves into the intricacies of data preprocessing, equipping you with the skills and knowledge to ensure your data is pristine and ready for analysis.

What is Data Cleaning?

Data cleaning is the process of identifying and rectifying errors, inconsistencies, and inaccuracies in a dataset. This includes a broad range of activities, including dealing with outliers, handling missing values, fixing typos, eliminating duplicates, and standardizing formats. The goal of data cleaning is to improve the quality, reliability, and usability of data for analysis and machine learning tasks.

Although they are frequently used synonymously, data preprocessing and data cleaning are two different but related ideas:

- Data Cleaning: Primarily focuses on fixing or removing incorrect, corrupted, or irrelevant data points. It addresses problems like missing values, duplicate records, and formatting errors that come up during data entry or collection.

- Data Preprocessing: This broader term encompasses both data cleaning and additional transformations that prepare the data for analysis. It involves work such as normalization, feature engineering, encoding categorical variables, and feature scaling.

In essence, data cleaning is a subset of data preprocessing. Data preprocessing includes a broader range of methods to change the data into a format appropriate for machine learning algorithms, whereas data cleaning concentrates on correcting errors and guaranteeing data integrity.

Why is Data Cleaning Important?

The unsung hero of machine learning, data cleaning is vital to any project that uses data but is frequently disregarded. Its importance lies in the profound impact it has on both data quality and the performance of machine learning models.

Impact on Data Quality

Data cleaning improves the quality of your dataset by removing irrelevant information, errors, and inconsistencies. It ensures that your data is accurate, reliable, and representative of the real-world phenomena you’re trying to analyze. This, in turn, lays a solid foundation for robust analysis and modeling.

Impact on Model Performance

The adage “garbage in, garbage out” rings true in machine learning. Feeding dirty data into your model is akin to constructing a house on shaky ground. It causes skewed results, inaccurate forecasts, and ultimately bad decision-making. By meticulously cleaning your data, you remove the noise and distractions that can mislead your algorithms, allowing them to learn the true patterns and relationships within the data. This translates to improved accuracy, better generalization, and ultimately, more valuable insights.

Importance of Data Cleaning in Data Science and Machine Learning

Data cleaning is not just a preliminary step; it’s an ongoing process that permeates the entire data science lifecycle. Data cleaning is essential at every stage, from gathering and analyzing data to creating and implementing models. It ensures that your data is in optimal condition for analysis, leading to more robust and reliable results.

In the realm of machine learning, clean data is the fuel that powers accurate predictions and insightful models. By investing time and effort in data cleaning, you pave the way for models that can generalize well to new data, make accurate predictions, and ultimately, drive better decision-making in your organization or research.

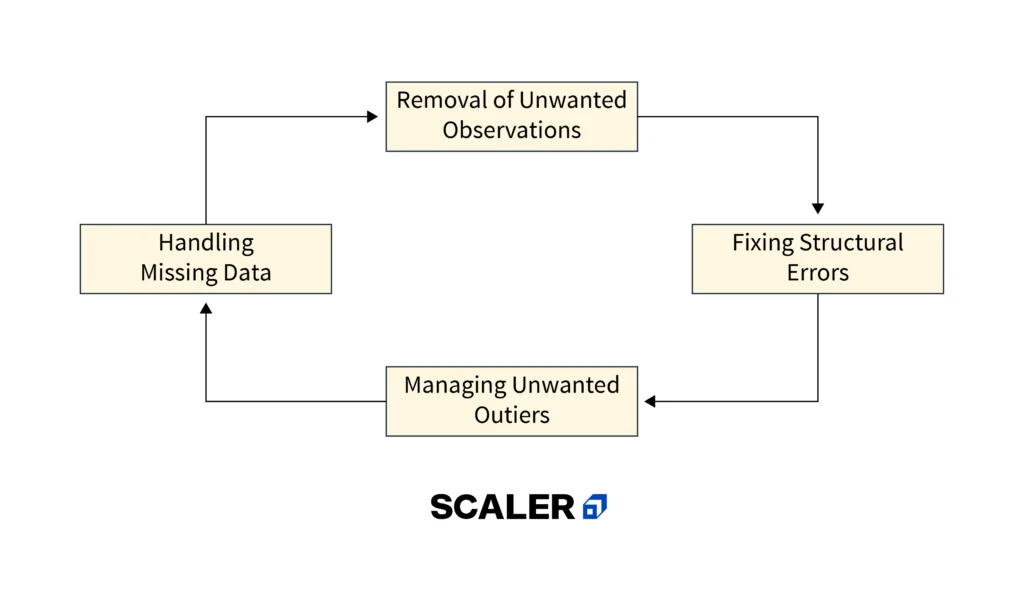

Steps to Perform Data Cleaning in Machine Learning

In order to guarantee data quality and dependability, data cleaning is a multifaceted process that calls for a methodical approach. Let’s break down the essential steps involved:

1. Data Inspection and Exploration

Begin by thoroughly understanding your dataset. Utilize tools like df.info() in Python’s pandas library to get a quick overview of the data types, missing values, and column names. Sort the columns by category and number, then look at how the values are distributed in each. Counting unique values in categorical columns can reveal potential inconsistencies or errors. Visualizing the data using histograms or scatter plots can also provide valuable insights into its structure and potential issues.

2. Removing Unwanted Observations

Identify and remove irrelevant columns or rows that do not contribute to your analysis. Columns like “Name” or “Ticket,” for example, may not be relevant for predicting survival in a Titanic dataset. Similarly, remove duplicate rows to avoid biases and ensure accurate analysis.

3. Handling Missing Data

Missing data is a common challenge in real-world datasets. Based on the type and degree of missingness, you have a few options for techniques:

- Deletion: If missing values are few and random, you can simply delete the corresponding rows or columns.

- Imputation: This involves replacing missing values with estimated values based on other available data. The mean, median, mode, and more complex methods like regression or K-nearest neighbours imputation are examples of common imputation techniques.

4. Handling Outliers

Outliers are data points that deviate significantly from the rest of the distribution. They may distort analysis and produce unreliable findings. Detect outliers using statistical methods like the z-score or interquartile range (IQR). Once identified, you can choose to remove them or replace them with more reasonable values, depending on your analysis goals.

5. Data Transformation

Transforming data into a format that can be used for analysis and modeling is known as data transformation. This can include:

- Standardization: Scaling features to have zero mean and unit variance.

- Normalization: Scaling features to a range between 0 and 1.

- Encoding: Converting categorical variables into numerical representations that machine learning algorithms can understand.

6. Data Validation and Verification

The final step involves verifying the accuracy and consistency of the cleaned data. This entails looking for any remaining errors or outliers, as well as data type inconsistencies and inconsistencies across various data sources. Thorough validation ensures that your cleaned dataset is ready for analysis and modeling.

Python Implementation for Data Cleaning

Python, with its rich ecosystem of libraries like pandas and NumPy, offers a powerful toolkit for data cleaning. Let’s walk through a practical implementation of the data-cleaning process using Python code snippets.

Sample CSV File: Let’s take an example CSV file data for the code implementation:

"ID","Name","Age","Score","City","Country"

1,"John Doe",25,85,"New York","USA"

2,"Jane Smith",30,90,"Los Angeles","USA"

3,"Bob Johnson",28,78,"Chicago","USA"

4,"Alice Brown",22,92,"Houston","USA"

5,"Mike Davis",35,88,"Philadelphia","USA"

6,"Emily Taylor",29,95,"Phoenix","USA"

7,"Sarah Lee",26,80,"San Antonio","USA"

8,"Kevin White",31,89,"San Diego","USA"

9,"Lisa Hall",27,91,"Dallas","USA"

10,"Tom Harris",33,87,"San Jose","USA"1. Data Inspection and Exploration

import pandas as pd

# Load your dataset

df = pd.read_csv("your_dataset.csv")

# Display basic information about the dataset

print(df.info())

# Display descriptive statistics for numerical columns

print(df.describe())

# Display the first few rows of the dataset

print(df.head())

# Visualize the distribution of numerical columns

import matplotlib.pyplot as plt

df.hist(bins=30, figsize=(10, 8))

plt.show()Output:

Kotlin:

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 10 entries, 0 to 9

Data columns (total 6 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 ID 10 non-null int64

1 Name 10 non-null object

2 Age 10 non-null int64

3 Score 10 non-null int64

4 City 10 non-null object

5 Country 10 non-null object

dtypes: int64(3), object(3)

memory usage: 608.0+ bytesShell:

ID Age Score

count 10.00000 10.000000 10.000000

mean 5.50000 28.600000 87.500000

std 3.02765 3.683748 5.724556

min 1.00000 22.000000 78.000000

25% 3.25000 25.750000 85.250000

50% 5.50000 28.000000 88.500000

75% 7.75000 31.000000 91.000000

max 10.00000 35.000000 95.000000Mathematica:

ID Name Age Score City Country

0 1 John Doe 25 85 New York USA

1 2 Jane Smith 30 90 Los Angeles USA

2 3 Bob Johnson 28 78 Chicago USA

3 4 Alice Brown 22 92 Houston USA

4 5 Mike Davis 35 88 Philadelphia USA2. Removing Unwanted Observations

# Drop irrelevant columns (assuming 'ID' is not needed)

df.drop(["ID"], axis=1, inplace=True)

# Remove duplicate rows (if any)

df.drop_duplicates(inplace=True)

# Remove rows based on a condition (example: Age less than 24)

df = df[df['Age'] >= 24]

# Display the cleaned dataframe

print(df.head())Output:

Name Age Score City Country

0 John Doe 25 85 New York USA

1 Jane Smith 30 90 Los Angeles USA

2 Bob Johnson 28 78 Chicago USA

4 Mike Davis 35 88 Philadelphia USA

5 Emily Taylor 29 95 Phoenix USA3. Handling Missing Data

# Drop rows with missing values (if appropriate)

df.dropna(inplace=True)

# Impute missing values with mean (for numerical columns)

df["Age"].fillna(df["Age"].mean(), inplace=True)

# Impute missing values with mode (for categorical columns)

df["City"].fillna(df["City"].mode()[0], inplace=True)

# Display the dataframe after handling missing data

print(df.info())Output: Kotlin

<class 'pandas.core.frame.DataFrame'>

Int64Index: 9 entries, 0 to 9

Data columns (total 5 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 Name 9 non-null object

1 Age 9 non-null int64

2 Score 9 non-null int64

3 City 9 non-null object

4 Country 9 non-null object

dtypes: int64(2), object(3)

memory usage: 432.0+ bytes4. Handling Outliers

import numpy as np

from scipy import stats

# Detect outliers using z-score (for numerical columns)

z_scores = stats.zscore(df["Score"])

abs_z_scores = np.abs(z_scores)

filtered_entries = (abs_z_scores < 3)

df = df[filtered_entries]

# Detect outliers using IQR (Interquartile Range)

Q1 = df['Score'].quantile(0.25)

Q3 = df['Score'].quantile(0.75)

IQR = Q3 - Q1

df = df[~((df['Score'] < (Q1 - 1.5 * IQR)) | (df['Score'] > (Q3 + 1.5 * IQR)))]

# Display the dataframe after handling outliers

print(df.describe())Output: Shell

Age Score

count 9.000000 9.000000

mean 28.555556 88.222222

std 3.709775 5.244044

min 25.000000 78.000000

25% 26.000000 85.000000

50% 29.000000 88.000000

75% 30.000000 91.000000

max 35.000000 95.0000005. Data Transformation

from sklearn.preprocessing import StandardScaler, MinMaxScaler

# Standardize numerical columns (zero mean, unit variance)

scaler = StandardScaler()

df[["Age", "Score"]] = scaler.fit_transform(df[["Age", "Score"]])

# Normalize numerical columns (range between 0 and 1)

scaler = MinMaxScaler()

df[["Score"]] = scaler.fit_transform(df[["Score"]])

# Encode categorical columns

df = pd.get_dummies(df, columns=["City"])

# Display the transformed dataframe

print(df.head())Output:

| Name | Age | Score | Country | City_Chicago | City_Dallas | City_Houston | City_Los Angeles | City_New York | City_Philadelphia | City_Phoenix | City_San Antonio | City_San Diego | City_San Jose |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| John Doe | -1.014185 | 0.388889 | USA | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |

| Jane Smith | 0.389607 | 0.833333 | USA | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |

| Bob Johnson | -0.149877 | 0 | USA | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Mike Davis | 1.918889 | 0.666667 | USA | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |

| Emily Taylor | 0.810293 | 1 | USA | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |

Remember:

- The specific data cleaning steps and techniques you choose will depend on the nature and characteristics of your dataset.

- Always carefully inspect and analyze your data before applying any transformations.

- Data cleaning is an iterative process, and you may need to experiment with different techniques to achieve the best results.

Join the tech revolution with Scaler’s Machine Learning Course. Acquire the skills needed to excel in the rapidly advancing field of machine learning.

Data Cleaning Tools

In the pursuit of accurate and reliable machine learning models, having the right tools for data cleaning is essential. To assist you in selecting the data cleaning tool that best meets your needs, let us examine a few widely used options, each with advantages and disadvantages of their own.

1. OpenRefine

OpenRefine, formerly known as Google Refine, is an effective open-source tool for handling jumbled data. It allows you to explore, clean, transform, and reconcile data in various formats.

- Pros: Free, versatile, handles large datasets, supports various data formats, offers powerful filtering and transformation capabilities.

- Cons: Higher learning curve; this is mainly a desktop program that needs some technical understanding.

2. Trifacta Wrangler

Trifacta Wrangler is a platform for visual data wrangling that makes cleaning and preparing data easier. It offers an interactive interface where you can explore, transform, and enrich data using a variety of visual tools and transformations.

- Pros: Intuitive visual interface, easy to learn and use, powerful data profiling and transformation capabilities, supports various data sources.

- Cons: Can be expensive for enterprise use, limited in handling very large datasets, and may require additional training for advanced features.

3. DataCleaner

An open-source program for preparing and enhancing data, DataCleaner is intended for both technical and non-technical users. It offers a range of features for data profiling, cleaning, validation, and enrichment.

- Pros: Free, user-friendly interface, automated data profiling and cleaning suggestions support various data sources, and offers a visual workflow designer.

- Cons: May need extra plugins for specialized tasks, limited ability to handle very large datasets, and possibly less comprehensive community support when compared to commercial tools.

4. WinPure Clean & Match

A full suite of software tools for data quality, including data cleansing, deduplication, and matching, is offered by WinPure Clean & Match. It’s designed to help businesses improve data accuracy, completeness, and consistency.

- Pros: User-friendly interface, robust data cleansing and deduplication capabilities, fuzzy matching for identifying similar records, customizable workflows.

- Cons: Can be expensive, primarily focused on customer data, and may not be suitable for all types of data cleaning tasks.

5. Drake

Drake is a Python data workflow tool that lets you create intricate pipelines for processing data. While not strictly a data-cleaning tool, it can be used to automate data-cleaning tasks within a broader workflow.

- Pros: Python-based, flexible and customizable, ideal for automating repetitive data cleaning tasks, integrates well with other Python data science tools.

- Cons: Requires programming knowledge, can be complex for non-technical users, the learning curve might be steep for those new to Python.

These are just a few examples of the many data-cleaning tools available. The tool you choose will rely on your needs, financial situation, and level of technical proficiency. Experiment with different tools to find the one that best fits your workflow and empowers you to achieve data-cleaning success.

Advantages and Disadvantages of Data Cleaning in Machine Learning

In the field of machine learning, data cleaning has pros and cons. It has enormous advantages but also presents certain difficulties. Understanding these trade-offs is crucial for making informed decisions about how to approach data cleaning in your projects.

Advantages

- Improved Data Quality: The most obvious benefit of data cleaning is the improvement in data quality. You can produce a dataset that is more accurate, dependable, and representative of the real-world phenomena you are researching by eliminating mistakes, inconsistencies, and unnecessary information.

- Enhanced Model Performance: Clean data leads to better machine-learning models. When your model is trained on high-quality data, it’s more likely to learn the true patterns and relationships within the data, resulting in more accurate predictions and better decision-making.

- Deeper Insights: Clean data allows you to uncover deeper insights and discover hidden patterns that may have been obscured by noise and errors. This can lead to new discoveries, improved understanding of your data, and ultimately, better outcomes.

- More Efficient Analysis: By removing irrelevant and redundant information, data cleaning simplifies your dataset, making it easier to analyze and visualize. By doing this, you can free up important time and resources to concentrate on gaining insightful knowledge.

Disadvantages

- Time-Consuming: Data cleaning can be a time-consuming process, especially when dealing with large and complex datasets. It frequently calls for manual data validation, correction, and inspection, which can be time-consuming and difficult.

- Requires Expertise: Effective data cleaning requires domain knowledge and expertise. A thorough understanding of the topic matter and data analysis principles is necessary to recognize potential errors and inconsistencies, understand the subtleties of your data, and select the appropriate cleaning techniques.

- Potential for Bias: If not done carefully, data cleaning can introduce bias into your dataset. The underlying distribution of the data may be slightly altered by eliminating outliers or imputing missing values using assumptions, which may produce skewed results.

- Not a One-Size-Fits-All Solution: There is no single “best” way to clean data. The best strategy will vary depending on your dataset’s unique properties, your analysis objectives, and the resources at your disposal.

To gain a comprehensive understanding of data cleaning techniques and best practices, consider enrolling in Scaler’s Machine Learning Course. With expert-led instruction, practical projects, and one-on-one mentoring, this course will give you the knowledge and abilities you need to become a machine learning pro at data cleaning.

Conclusion

Data cleaning, often overlooked, is the bedrock upon which successful machine learning models are built. Data cleaning ensures data quality and reliability by carefully addressing errors, inconsistencies, and outliers. This has a direct impact on model accuracy and the validity of insights derived from the data. From data inspection and preprocessing to handling missing values and outliers, each step in the data cleaning process is crucial for unlocking the true potential of your data.

Your machine-learning projects will succeed if you put in the time and effort to clean the data. Clean data leads to better models, more accurate predictions, and ultimately, more informed decision-making.

FAQs

What does it mean to cleanse our data?

The process of finding and fixing mistakes, inconsistencies, and inaccuracies in a dataset is called data cleansing, sometimes referred to as data cleaning. This involves tasks like handling missing values, correcting typos, removing duplicates, and standardizing formats to improve data quality.

What is an example of cleaning data?

An example of data cleaning is correcting inconsistent date formats in a dataset. If some dates are formatted as “dd/mm/yyyy” and others as “mm/dd/yyyy,” you would standardize them to a single format to ensure consistency and accuracy.

What is the meaning of a data wash?

Data wash is not a standard term in data science or machine learning. It may refer to a colloquial way of describing data cleaning or preprocessing, but it’s not widely used in the field.

How is data cleansing done?

Data cleansing involves various steps, including data inspection and exploration, removing unwanted observations, handling missing data and outliers, data transformation, and data validation. This can be done manually or with the help of automated tools and scripts.

What is data cleansing in cybersecurity?

In cybersecurity, data cleansing focuses on removing or sanitizing sensitive data to protect it from unauthorized access or exposure. This can involve techniques like data masking, tokenization, or encryption.

How to clean data using SQL?

SQL (Structured Query Language) provides various functions and commands for data cleaning, such as TRIM for removing leading and trailing spaces, REPLACE for replacing specific characters, and CASE statements for handling inconsistencies. You can also use SQL queries to filter out unwanted data, correct errors, and aggregate data to identify outliers.