Data science is all about finding meaning in data, and statistics is the key to unlocking those insights. Consider statistics as the vocabulary that data scientists employ to comprehend and analyze data. Without it, data is just a jumble of numbers.

A strong background in statistics is essential for anyone hoping to work as a data scientist. It’s the tool that empowers you to turn raw data into actionable intelligence, make informed decisions, and drive real-world impact. In this guide, we’ll break down the key concepts, tools, and applications of statistics in data science, providing you with the knowledge you need to succeed in this exciting field.

Fundamentals of Statistics

Statistics provides the framework for understanding and interpreting data. It enables us to calculate uncertainty, spot trends, and draw conclusions about populations from samples. In data science, a strong grasp of statistical concepts is crucial for making informed decisions, validating findings, and building robust models.

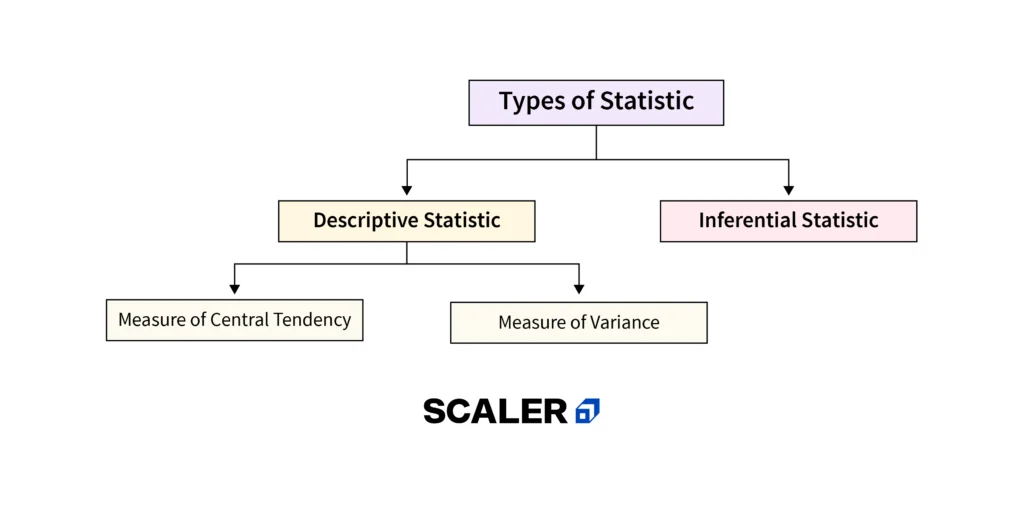

1. Descriptive Statistics

Descriptive statistics help us summarize and describe the key characteristics of a dataset. This includes measures of central tendency like mean (average), median (middle value), and mode (most frequent value), which tell us about the typical or central value of a dataset. We also use measures of variability, such as range (difference between maximum and minimum values), variance, and standard deviation, to understand how spread out the data is. Additionally, data visualization techniques like histograms, bar charts, and scatter plots provide visual representations of data distributions and relationships, making it easier to grasp complex patterns.
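As a rough illustration of these measures, the sketch below uses Python's built-in `statistics` module on a small made-up dataset. The `orders` values are hypothetical and chosen only to show how a single large value affects the summary.

```python
import statistics

# Hypothetical sample: daily order counts for a small shop (illustrative only).
orders = [12, 15, 15, 18, 20, 22, 45]

mean = statistics.mean(orders)            # arithmetic average
median = statistics.median(orders)        # middle value of the sorted data
mode = statistics.mode(orders)            # most frequent value
value_range = max(orders) - min(orders)   # spread between the extremes
variance = statistics.variance(orders)    # sample variance (divides by n - 1)
std_dev = statistics.stdev(orders)        # sample standard deviation

print(f"mean={mean:.2f} median={median} mode={mode}")
print(f"range={value_range} variance={variance:.2f} std_dev={std_dev:.2f}")
```

Here the single large value (45) pulls the mean above the median, which is one reason to look at several measures rather than just one.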

2. Inferential Statistics

Inferential statistics, on the other hand, allow us to make generalizations about a population based on a sample. This involves understanding how to select representative samples and how they relate to the overall population. Hypothesis testing is a key tool in inferential statistics: it evaluates whether sample data provide enough evidence to reject a default assumption (the null hypothesis) about a population. We also use confidence intervals to estimate the range of values within which a population parameter is likely to fall. Finally, the p-value is the probability of seeing results at least as extreme as those observed if the null hypothesis were true; comparing it with a chosen significance level (commonly 0.05) tells us whether a result is statistically significant.
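To make these ideas concrete, here is a minimal sketch of a 95% confidence interval and a two-sided test for a population mean. It assumes a large sample, so it uses the normal approximation rather than a t-distribution. The simulated page-load times and the hypothesized mean of 260 are invented for illustration.

```python
import math
import random
import statistics
from statistics import NormalDist

random.seed(42)
# Hypothetical sample: 200 simulated page-load times in milliseconds.
sample = [random.gauss(250, 40) for _ in range(200)]

n = len(sample)
sample_mean = statistics.mean(sample)
std_error = statistics.stdev(sample) / math.sqrt(n)

# 95% confidence interval using the normal approximation (reasonable for large n).
z_crit = NormalDist().inv_cdf(0.975)
ci_low = sample_mean - z_crit * std_error
ci_high = sample_mean + z_crit * std_error

# Two-sided z-test of the null hypothesis "population mean equals 260".
null_mean = 260
z_stat = (sample_mean - null_mean) / std_error
p_value = 2 * (1 - NormalDist().cdf(abs(z_stat)))

print(f"mean={sample_mean:.1f}, 95% CI=({ci_low:.1f}, {ci_high:.1f})")
print(f"z={z_stat:.2f}, p-value={p_value:.4f}")
```

For small samples, a t-based interval and test (for example from SciPy) would usually be the more appropriate choice.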

Why Does Statistics Matter in Data Science?

Statistics is the foundation of the entire field of data science, not just a theoretical subject found in textbooks. It’s the engine that drives data-driven decision-making, allowing you to extract meaningful insights, test hypotheses, and build reliable models.

Applications of Statistics in Data Science Projects:

Statistics is an integral part of data science projects and finds numerous applications at each stage of such projects, from data exploration to model building and validation. Here’s how:

- Data Collection: Designing surveys or experiments to gather representative samples that accurately reflect the target population.

- Data Cleaning: Identifying and handling outliers, missing values, and anomalies using statistical techniques.

- Exploratory Data Analysis (EDA): Summarizing data, visualizing distributions, and identifying relationships between variables using descriptive statistics and graphs.

- Feature Engineering: Selecting and transforming variables to improve model performance, often based on statistical insights.

- Model Building: Using statistical models like linear regression, logistic regression, or decision trees to make predictions or classify data.

- Model Evaluation: Assessing the accuracy and reliability of models using statistical metrics such as R-squared for regression models and precision, recall, and F1 score for classification models.

- Hypothesis Testing: Formulating and testing hypotheses about relationships between variables to draw valid conclusions.

- A/B Testing: Comparing the performance of different versions of a product or website to determine which one is more effective, using statistical significance tests.
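The A/B testing step in the list above can be sketched with a two-proportion z-test, one common choice of significance test for comparing conversion rates. The function name `two_proportion_z_test` and the conversion counts are hypothetical, and the normal approximation assumes reasonably large groups.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for H0: both variants convert equally."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled conversion rate under the null hypothesis of no difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical experiment: 120 of 2400 visitors convert on A, 156 of 2400 on B.
z, p_value = two_proportion_z_test(120, 2400, 156, 2400)
print(f"z={z:.2f}, p-value={p_value:.4f}")
```

A p-value below the chosen significance level (often 0.05) would suggest that the difference between the variants is unlikely to be explained by chance alone.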

Examples of Statistical Methods in Real-world Data Analysis:

Here are some examples of how statistical methods are applied in real-world data analysis:

- Healthcare: Statistical methods can be used for analyzing clinical trial data to determine the effectiveness of a new drug or treatment.

- Finance: Building risk models to assess the creditworthiness of borrowers.

- Marketing: Identifying customer segments and predicting their buying behaviour.

- E-commerce: Personalizing product recommendations based on customer preferences.

- Manufacturing: Optimizing production processes to reduce defects and improve efficiency.

By applying statistical methods, data scientists can uncover hidden patterns in data, make accurate predictions, and drive data-driven decision-making across various domains. Whether it’s predicting customer churn, optimizing pricing strategies, or detecting fraudulent activity, statistics plays a pivotal role in transforming raw data into actionable insights.

The Fundamental Statistics Concepts for Data Science

Statistics provides the foundation for extracting meaningful insights from data. Understanding these key concepts will empower you to analyze data effectively, build robust models, and make informed decisions in the field of data science.

1. Correlation

Correlation quantifies the relationship between two variables. The correlation coefficient, a value between -1 and 1, indicates the strength and direction of this relationship. A positive correlation means that as one variable increases, so does the other, while a negative correlation means that as one variable increases, the other decreases. Pearson correlation measures linear relationships, while Spearman correlation assesses monotonic relationships.
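The difference between Pearson and Spearman correlation is easiest to see on data that is monotonic but not linear. The sketch below implements both by hand for illustration; the helper names are hypothetical, and the ranking helper assumes there are no tied values. In practice, a library such as SciPy or pandas would normally be used.

```python
import math

def pearson(x, y):
    """Pearson correlation: covariance scaled by both standard deviations."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def ranks(values):
    """Rank values from 1 to n (assumes no ties, to keep the sketch short)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        result[index] = rank
    return result

def spearman(x, y):
    """Spearman correlation: Pearson correlation applied to the ranks."""
    return pearson(ranks(x), ranks(y))

x = [1, 2, 3, 4, 5, 6]
y = [v ** 3 for v in x]  # monotonic but non-linear relationship

print(f"Pearson:  {pearson(x, y):.3f}")
print(f"Spearman: {spearman(x, y):.3f}")
```

Because `y` always increases with `x`, the Spearman coefficient is 1, while the Pearson coefficient is lower because the relationship is not a straight line.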

2. Regression

Regression analysis is a statistical method used to model the relationship between a dependent variable and one or more independent variables. Linear regression models a linear relationship, while multiple regression allows for multiple independent variables. Logistic regression is used when the dependent variable is categorical, such as predicting whether a customer will churn or not.
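As a small worked example, simple linear regression with one independent variable can be fitted with the closed-form least-squares formulas. The function names and the ad-spend data below are hypothetical; a real project would more likely use a library such as statsmodels or scikit-learn.

```python
def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    slope = (sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
             / sum((a - mean_x) ** 2 for a in x))
    intercept = mean_y - slope * mean_x
    return slope, intercept

def r_squared(x, y, slope, intercept):
    """Share of the variance in y explained by the fitted line."""
    mean_y = sum(y) / len(y)
    ss_res = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    ss_tot = sum((b - mean_y) ** 2 for b in y)
    return 1 - ss_res / ss_tot

# Hypothetical data: ad spend (in $1000s) vs. weekly sales (units).
ad_spend = [1, 2, 3, 4, 5]
sales = [12, 14, 17, 19, 23]

slope, intercept = fit_line(ad_spend, sales)
fit_quality = r_squared(ad_spend, sales, slope, intercept)
print(f"sales = {intercept:.2f} + {slope:.2f} * ad_spend (R^2 = {fit_quality:.3f})")
```

The slope estimates how much the dependent variable changes for a one-unit increase in the independent variable.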

3. Bias

Bias refers to systematic errors in data collection, analysis, or interpretation that can lead to inaccurate conclusions. Selection, measurement, and confirmation bias are examples of different types of bias. Mitigating bias requires careful data collection and analysis practices, such as random sampling, blinding, and robust statistical methods.

4. Probability

Probability is the study of random events and their likelihood of occurrence. Expected values, variance, and probability distributions are examples of fundamental probability concepts. Conditional probability and Bayes’ theorem allow us to update our beliefs about an event based on new information.
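Bayes' theorem can be illustrated with a classic screening-test calculation. The numbers below (1% prevalence, 95% sensitivity, 5% false-positive rate) are invented for the example, and `posterior` is a hypothetical helper name.

```python
def posterior(prior, sensitivity, false_positive_rate):
    """P(condition | positive test) via Bayes' theorem."""
    # Total probability of a positive result, with or without the condition.
    p_positive = sensitivity * prior + false_positive_rate * (1 - prior)
    return sensitivity * prior / p_positive

# Hypothetical screening test: 1% prevalence, 95% sensitivity, 5% false positives.
p = posterior(prior=0.01, sensitivity=0.95, false_positive_rate=0.05)
print(f"P(condition | positive) = {p:.3f}")
```

Even with a fairly accurate test, the updated probability stays well below 50% here because the condition is rare to begin with.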

5. Statistical Analysis

Statistical analysis is the process of testing hypotheses and making inferences about data using statistical techniques. Analysis of variance (ANOVA) compares means between multiple groups, while chi-square tests assess the relationship between categorical variables.
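A chi-square test of independence compares observed counts with the counts expected if the two categorical variables were unrelated. The sketch below computes only the test statistic; the contingency table is hypothetical, and the p-value would normally come from a library such as SciPy.

```python
def chi_square_statistic(table):
    """Chi-square statistic for a contingency table given as a list of rows."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed_count in enumerate(row):
            # Expected count if row and column variables were independent.
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed_count - expected) ** 2 / expected
    return stat

# Hypothetical counts: rows = plan type, columns = churned / stayed.
observed = [[30, 70],
            [15, 85]]

stat = chi_square_statistic(observed)
print(f"chi-square = {stat:.3f}")
```

For a 2x2 table (one degree of freedom), a statistic above roughly 3.84 corresponds to significance at the 0.05 level.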

6. Normal Distribution

The normal distribution, commonly referred to as the bell curve, is a symmetric probability distribution that describes numerous natural phenomena. It is fully characterized by its mean and standard deviation. Z-scores express a value as the number of standard deviations it lies from the mean, allowing us to compare values from different normal distributions.
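For instance, z-scores make it possible to compare results from two tests that use different scales. The scores, means, and standard deviations below are hypothetical, and the percentile calculation assumes the scores are normally distributed.

```python
from statistics import NormalDist

def z_score(value, mean, std_dev):
    """Number of standard deviations a value lies from the mean."""
    return (value - mean) / std_dev

# Hypothetical results on two differently scaled tests.
math_z = z_score(82, mean=70, std_dev=8)
verbal_z = z_score(620, mean=500, std_dev=100)

# Share of a standard normal distribution that falls below the math z-score.
math_percentile = NormalDist().cdf(math_z)

print(f"math z={math_z:.2f}, verbal z={verbal_z:.2f}")
print(f"math score is above about {math_percentile:.1%} of scores")
```

Although the raw verbal score is much larger, the math score is further above its own mean in standard-deviation terms.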

By mastering these fundamental statistical concepts, you will be able to analyze data, identify patterns, make predictions, and draw meaningful conclusions that will aid in data science decision-making.

Statistics in Relation To Machine Learning

While machine learning frequently takes center stage in data science, statistics is its unsung hero. Statistical concepts underpin the entire machine learning process, from model development and training to evaluation and validation. Understanding this connection is essential for aspiring data scientists and anyone seeking to harness the power of machine learning.

The Role of Statistics in Machine Learning:

Statistics and machine learning are closely intertwined disciplines. Here’s how they relate:

- Model Development: Machine learning models are created and designed using statistical methods such as regression and probability distributions. These models are essentially mathematical representations of relationships within data.

- Training and Optimization: Optimization techniques such as gradient descent tune the parameters of machine learning models by minimizing a loss function, often one derived from a statistical criterion such as likelihood or squared error, enabling them to learn from data and make accurate predictions.

- Model Evaluation: Statistical metrics like accuracy, precision, recall, and F1 score are used to assess the performance of machine learning models. These metrics help data scientists select the best-performing model and identify areas for improvement.

- Hypothesis Testing: Statistical hypothesis testing helps determine whether an observed result, such as one model outperforming another, is statistically significant or could plausibly be due to random variation.

- Data Preprocessing: Statistical techniques like normalization and standardization are applied to prepare data for machine learning algorithms.
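The evaluation metrics named in the list above can be computed directly from the counts of true and false positives and negatives. This is a minimal sketch for a binary classifier; the labels and predictions are made up, and `classification_metrics` is a hypothetical helper rather than a library function.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)

    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Hypothetical ground-truth labels and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0, 0, 0]

metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Precision measures how many predicted positives were correct, recall measures how many actual positives were found, and F1 is their harmonic mean.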

Examples of Statistical Techniques Used in Machine Learning:

Many statistical techniques form the backbone of machine learning algorithms. Here are a few examples:

- Linear Regression: A statistical model used for predicting a continuous outcome variable based on one or more predictor variables.

- Logistic Regression: A statistical model used for predicting a binary outcome (e.g., yes/no, true/false) based on one or more predictor variables.

- Bayesian Statistics: A probabilistic framework that combines prior knowledge with observed data to make inferences and predictions.

- Hypothesis Testing: A statistical method for evaluating whether a hypothesis about a population is likely to be true based on sample data.

- Cross-Validation: A technique for assessing how well a machine learning model will generalize to new, unseen data.
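Cross-validation, the last technique in the list above, can be sketched as follows. The example splits the data into k contiguous folds and uses a deliberately trivial "model" that predicts the training mean, so that the focus stays on the splitting logic. The helper name `k_fold_indices` and the data are hypothetical, and real datasets are usually shuffled before splitting.

```python
import statistics

def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for k contiguous folds."""
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n_samples) if i < start or i >= start + size]
        yield train, test
        start += size

# Hypothetical target values; the "model" simply predicts the training mean.
y = [3.1, 2.9, 3.4, 3.8, 4.1, 3.6, 3.0, 3.3, 3.9, 4.0]

fold_errors = []
for train_idx, test_idx in k_fold_indices(len(y), k=5):
    prediction = statistics.mean([y[i] for i in train_idx])
    mse = statistics.mean([(y[i] - prediction) ** 2 for i in test_idx])
    fold_errors.append(mse)

cv_score = statistics.mean(fold_errors)
print(f"mean squared error across folds: {cv_score:.3f}")
```

Averaging the error over all folds gives a more stable estimate of performance on unseen data than a single train/test split.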

Statistical Software Used in Data Science

Data scientists have access to a vast collection of statistical software, each with its own set of strengths and capabilities. Whether you’re just starting your data science journey or you’re a seasoned professional, familiarizing yourself with these tools is essential for efficient and effective data analysis.

- Excel: While often overlooked, Excel remains a powerful tool for basic data analysis and visualization. Its user-friendly interface and built-in functions make it accessible for beginners, while its flexibility allows for custom calculations and data manipulation.

- R: A statistical programming language specifically designed for data analysis and visualization. It boasts a vast collection of packages and libraries for various statistical techniques, making it a favorite among statisticians and data analysts.

- Python: Known for its versatility and ease of use, Python has become the go-to language for data science. It offers a rich ecosystem of libraries like NumPy (for numerical operations), pandas (for data manipulation and analysis), SciPy (for scientific computing), and statsmodels (for statistical modeling), making it a powerful tool for data scientists.

- MySQL: A popular open-source relational database management system (RDBMS) that is widely used to store and manage structured data. Its ability to handle large datasets and perform complex queries makes it essential for data scientists working with relational data.

- SAS: A comprehensive statistical analysis software suite used in various industries for tasks like business intelligence, advanced analytics, and predictive modeling. It offers a wide range of statistical procedures, data management tools, and reporting capabilities.

- Jupyter Notebook: A web-based interactive computing environment that allows data scientists to create and share documents that combine code, visualizations, and narrative text. It’s a popular tool for data exploration, prototyping, and collaboration.

The software used is frequently determined by the task at hand, the type of data, and personal preferences. Many data scientists use a combination of these tools to leverage their strengths and tackle diverse challenges.

Practical Applications and Case Studies

Statistics isn’t just theoretical; it’s the engine powering many of the most impactful data science applications across industries. Here are a few examples where statistical methods play a pivotal role:

1. Customer Churn Prediction (Telecommunications):

A telecommunications company was experiencing a high rate of customer churn, losing valuable revenue.

Data scientists tackled this problem by building a logistic regression model using historical customer data. This model analyzed various factors, including call patterns, data usage, customer service interactions, and billing history, to predict the likelihood of each customer churning. Armed with these predictions, the company could proactively reach out to high-risk customers with personalized retention offers and tailored services, ultimately reducing churn and improving customer loyalty.

2. Fraud Detection (Finance):

A financial institution was losing millions of dollars annually due to fraudulent transactions.

To combat this, data scientists implemented anomaly detection algorithms based on statistical distributions and probability theory. These algorithms continuously monitored transaction data, flagging unusual patterns or outliers that could indicate fraudulent activity. This allowed the institution to investigate and block potentially fraudulent transactions in real time, significantly reducing financial losses.
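One very simple form of statistical anomaly detection is a z-score rule: flag a transaction when it lies too many standard deviations from an account's historical mean. The sketch below is an illustration under that assumption, not the method used by any particular institution. The amounts, the threshold of 3, and the `is_anomalous` helper are all hypothetical.

```python
import statistics

# Hypothetical historical transaction amounts for one account (baseline behaviour).
history = [42.0, 38.5, 51.2, 47.9, 40.3, 44.8, 39.6, 49.1, 45.5, 43.0]
baseline_mean = statistics.mean(history)
baseline_std = statistics.stdev(history)

def is_anomalous(amount, threshold=3.0):
    """Flag amounts more than `threshold` standard deviations from the baseline."""
    z = (amount - baseline_mean) / baseline_std
    return abs(z) > threshold

# Hypothetical incoming transactions to screen.
new_transactions = [46.2, 41.7, 310.0, 52.4]
flagged = [amount for amount in new_transactions if is_anomalous(amount)]
print(f"flagged for review: {flagged}")
```

Production systems typically combine many features and more robust models, but the underlying idea of measuring how unusual an observation is remains the same.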

3. Disease Prediction (Healthcare):

In the realm of healthcare, data scientists are using survival analysis and predictive modeling techniques to predict the risk of diseases like diabetes and heart disease. By analyzing patient data, including demographics, medical history, lifestyle factors, and genetic information, these models can identify high-risk individuals. Armed with this knowledge, healthcare providers can offer personalized preventive care and early interventions, potentially saving lives and improving overall health outcomes.

4. Recommender Systems (E-commerce):

Companies like Amazon and Netflix rely heavily on recommender systems to drive customer engagement and sales. These systems use techniques such as collaborative filtering and matrix factorization to analyze vast amounts of user behavior and product/content data. By understanding user preferences and item characteristics, recommender systems can suggest products or movies that are most likely to resonate with each individual, resulting in personalized shopping experiences and increased revenue.

These case studies demonstrate how statistics enables data scientists to tackle complex problems, uncover hidden patterns, and provide actionable insights that drive business value across industries. By leveraging statistical methods, you can create innovative solutions that have a real-world impact, from improving customer satisfaction to saving lives.

Conclusion

Statistics is the foundation on which data science is built. It provides the essential tools for understanding, analyzing, and interpreting data, allowing us to uncover hidden patterns, make informed decisions, and drive innovation.

From the fundamental concepts of descriptive and inferential statistics to the advanced techniques used in machine learning, statistics empowers data scientists to transform raw data into actionable insights. By mastering the concepts discussed in this guide, you’ll be well-equipped to tackle the challenges of data analysis, build robust models, and make data-driven decisions that have a real-world impact. Remember, statistics is not just a subject to be studied; it’s a powerful tool that can unlock the full potential of data and propel your career in data science to new heights.

FAQs

What statistics are needed for data science?

Data science requires a solid foundation in descriptive and inferential statistics, including measures of central tendency and variability, probability distributions, hypothesis testing, regression analysis, and sampling techniques.

What are the branches of statistics?

The two primary branches of statistics are descriptive statistics, which summarize and describe data, and inferential statistics, which draw conclusions about populations from samples. Other branches include Bayesian statistics, non-parametric statistics, and robust statistics.

What is the importance of statistics in data science?

Statistics is important in data science because it provides tools for analyzing and interpreting data, developing reliable models, making informed decisions, and effectively communicating findings. It’s the backbone of the entire data science process, from data collection to model evaluation.

Can I learn statistics for data science online?

Yes, numerous online courses and resources are available to learn statistics for data science. Platforms such as Coursera, edX, and Udemy provide courses ranging from beginner to advanced levels, which are frequently taught by experienced professionals and academics.

How do I apply statistical concepts in data science projects?

Statistical concepts are used throughout the data science workflow. You can use descriptive statistics to summarize data, inferential statistics to test hypotheses, regression analysis to predict outcomes, and various other techniques depending on the specific project and its goals.
