How to Reduce Bias in AI?

Overview

Artificial Intelligence (AI) has facilitated significant advancements in various industries but has also raised concerns about bias in AI algorithms. AI bias refers to the unfair treatment or discrimination against certain groups based on characteristics like race, gender, or age. Biased AI systems can perpetuate inequality and reinforce societal biases. This article explores strategies to address and reduce AI bias through diverse and representative datasets, algorithmic fairness, and continuous monitoring and evaluation. By implementing these measures, organizations can build fairer, less biased AI systems that align with ethical standards.

Artificial Intelligence and Bias

AI systems are designed to process vast amounts of data and make autonomous decisions. However, these systems are not immune to bias. Bias in AI algorithms can arise from multiple sources, including biased training data, flawed algorithm design, or the inherent biases of the human creators. Understanding the complexities of AI bias is essential to effectively address and mitigate its impact.

AI bias often originates from biased training data. Machine learning algorithms learn patterns and make predictions based on historical data. If this data is biased or reflects societal prejudices, the AI system will learn and replicate those biases. For example, if historical criminal justice data shows disproportionate arrests among certain racial groups, an AI system trained on that data may inadvertently perpetuate racial bias when predicting the likelihood of reoffending.

AI bias can also emerge from the design and development of AI models. Biases can inadvertently be introduced through the selection of features, the choice of training algorithms, or the optimization objectives.

What is AI Bias?

AI bias refers to the unfair or discriminatory treatment of individuals or groups by artificial intelligence systems. It occurs when AI algorithms produce outcomes that exhibit favoritism or discrimination based on certain characteristics, such as race, gender, age, or socioeconomic status. AI bias can manifest in various forms, including biased predictions, unequal access to resources or opportunities, and the reinforcement of existing societal biases.

The root cause of AI bias can often be traced back to the data used to train AI models. Machine learning algorithms learn from historical data, which may contain inherent biases or reflect societal prejudices. If the training data is unrepresentative or skewed, the AI system will learn and reproduce those biases in its decision-making processes. For example, if a facial recognition system is trained predominantly on data of lighter-skinned individuals, it may struggle to accurately identify individuals with darker skin tones, leading to biased and discriminatory outcomes.

AI bias can also arise due to the design and implementation of algorithms. Biased assumptions or preferences of human designers can inadvertently influence the decision-making process of AI systems.

The impact of AI bias can be significant and far-reaching. In the realm of criminal justice, biased AI algorithms can lead to discriminatory practices such as profiling or unfair sentencing.

To mitigate AI bias, algorithms can be designed to incorporate principles of fairness, such as equalizing error rates across different groups or ensuring equitable outcomes. Transparency and interpretability of AI systems are also crucial to understanding and identifying potential biases.
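Equalizing error rates across groups starts with measuring them. The following is a minimal sketch, assuming binary labels (0 or 1) and one group label per example; the function name `group_error_rates` and the toy data are invented for illustration and do not come from any particular library.

```python
from collections import defaultdict


def group_error_rates(y_true, y_pred, groups):
    """Return {group: {"fpr": ..., "fnr": ...}} for binary labels (0 or 1)."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1 and pred == 1:
            counts[group]["tp"] += 1
        elif truth == 1 and pred == 0:
            counts[group]["fn"] += 1
        elif truth == 0 and pred == 1:
            counts[group]["fp"] += 1
        else:
            counts[group]["tn"] += 1

    rates = {}
    for group, c in counts.items():
        negatives = c["fp"] + c["tn"]
        positives = c["fn"] + c["tp"]
        rates[group] = {
            # None when a group has no examples of that class.
            "fpr": c["fp"] / negatives if negatives else None,
            "fnr": c["fn"] / positives if positives else None,
        }
    return rates


# Toy data, invented for illustration only.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 0, 0, 1, 1, 1, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = group_error_rates(y_true, y_pred, groups)
for group, r in sorted(rates.items()):
    print(group, r)
```

A large gap between groups in the false positive rate or the false negative rate is a signal worth investigating. Which of the two rates matters more depends on the application.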

What are the Types of AI Bias?

In this section, we will focus on two broad types of AI bias: cognitive biases and lack of complete data.

Cognitive Biases

Cognitive biases refer to the inherent biases and limitations of human thinking that can inadvertently influence AI systems. These biases can be introduced during the design, development, or decision-making processes, and subsequently impact the outcomes of AI algorithms. Some common cognitive biases that can contribute to AI bias include:

- Confirmation Bias:

This bias occurs when AI systems favor information or patterns that confirm preexisting beliefs or assumptions while disregarding contradictory evidence.

- Stereotyping Bias:

AI systems may inadvertently rely on stereotypes and generalizations about certain groups or individuals, leading to biased predictions or discriminatory outcomes.

- Availability Bias:

Availability bias occurs when AI systems prioritize easily accessible or readily available data over more comprehensive or representative information. This bias can result in incomplete or skewed understandings of certain groups or situations, leading to biased outcomes. For example, an AI system trained on data from a specific region may develop a skewed understanding of certain demographics, leading to biased decisions or recommendations for individuals from other regions that are not represented in the training data.

Lack of Complete Data

Another type of AI bias stems from the lack of complete or representative data used to train AI models. Incomplete data can lead to biased outcomes or reinforce existing biases in the AI system. Some examples of biases arising from the lack of complete data include:

- Underrepresentation Bias:

If certain groups are underrepresented or excluded from the training data, the AI system may struggle to accurately understand or make predictions for those groups.

- Sampling Bias:

Sampling bias occurs when the training data is not a representative sample of the population it aims to serve. If the data is collected from a biased or limited sample, the AI system may generate biased results that do not reflect the broader population.

- Data Imbalance Bias:

Data imbalance refers to situations where certain groups or classes are significantly overrepresented or underrepresented in the training data. This can lead to biased predictions, as the AI system may be more accurate for dominant groups.
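The data gaps described above can be checked before any model is trained. The sketch below is one possible approach, not a standard recipe: it compares each group's share of a dataset with a reference share, then computes per-example weights so that every group contributes the same total weight. The function names, the group labels, and the 50/50 reference shares are all hypothetical.

```python
from collections import Counter


def representation_report(groups, reference_shares):
    """Compare each group's observed share of the data with a reference share."""
    if not groups:
        raise ValueError("groups must not be empty")
    counts = Counter(groups)
    total = len(groups)
    report = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        report[group] = {
            "observed": observed,
            "expected": expected,
            "gap": observed - expected,  # negative means underrepresented
        }
    return report


def balancing_weights(groups):
    """Weight each example so that every group has the same total weight."""
    counts = Counter(groups)
    total = len(groups)
    n_groups = len(counts)
    return [total / (n_groups * counts[g]) for g in groups]


# Toy dataset, invented for illustration: 8 examples from one region, 2 from another.
groups = ["north"] * 8 + ["south"] * 2
report = representation_report(groups, {"north": 0.5, "south": 0.5})
weights = balancing_weights(groups)

print(report["south"])          # observed share is 0.2 against an expected 0.5
print(weights[0], weights[-1])  # smaller weight for "north", larger for "south"
```

Reweighting only rebalances the data that is already there. It cannot add information about a group that was never collected, so it complements better data collection rather than replacing it.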

What are Some Examples of AI Bias?

The following examples highlight the pervasive nature of AI bias in various domains. They underscore the importance of proactive measures to identify, address, and mitigate bias in AI systems.

Eliminating Selected Accents in Call Centers

In some instances, AI systems used in call centers have exhibited bias against certain accents. The AI algorithms, designed to transcribe and analyze customer interactions, may struggle to accurately understand and process accents that differ from the dominant accent in the training data. This can result in misinterpretations, misunderstandings, and unequal treatment of customers with non-standard accents, leading to a biased customer experience.

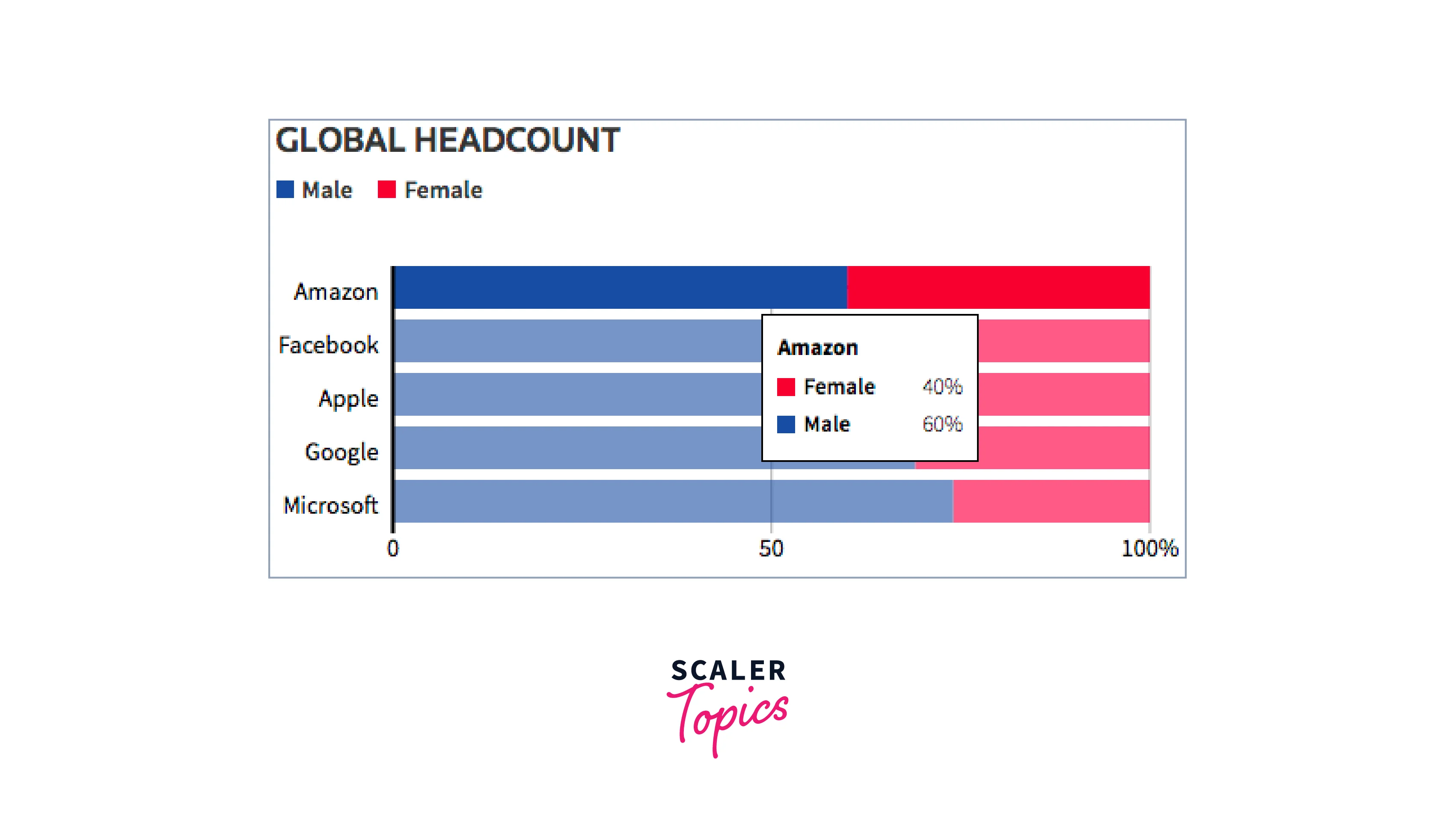

Amazon's Biased Recruiting Tool

Amazon faced scrutiny when it was discovered that its AI recruiting tool exhibited gender bias. The tool was designed to review job applicants' resumes and make recommendations. However, because it learned from the company's historical hiring patterns, the AI system came to favor male candidates over female candidates. This biased outcome reflected the gender imbalances within the existing workforce and perpetuated discrimination against women in the hiring process.

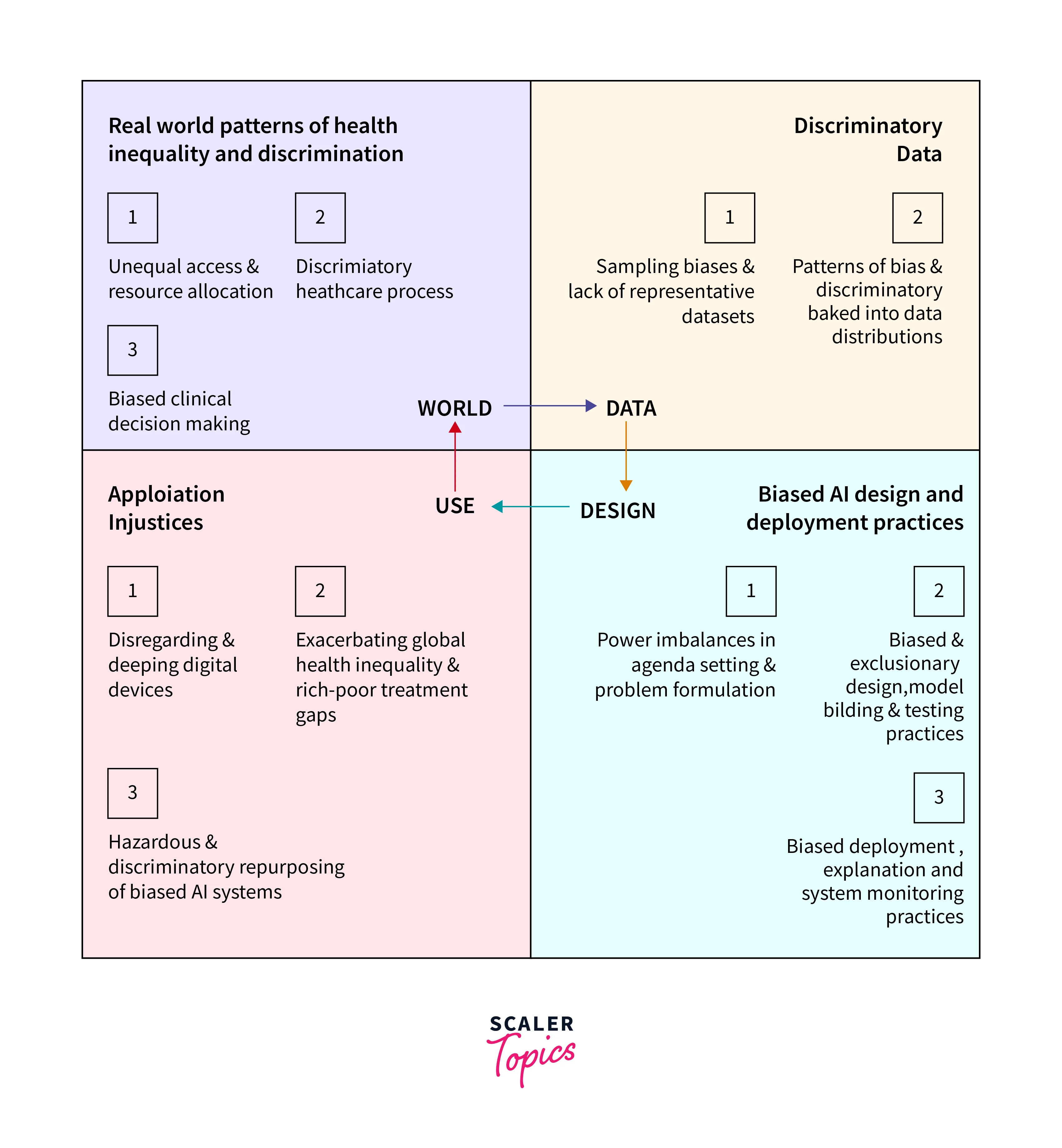

Racial Bias in Healthcare Risk Algorithm

Healthcare risk algorithms are used to predict and assess patient risks, such as predicting readmission rates or identifying high-risk patients. However, studies have shown that these algorithms can exhibit racial bias. For example, a study found that an algorithm used to identify high-risk patients for additional care resources systematically underestimated the needs of Black patients compared to white patients. This bias resulted in unequal distribution of resources and potentially compromised healthcare outcomes for marginalized communities.

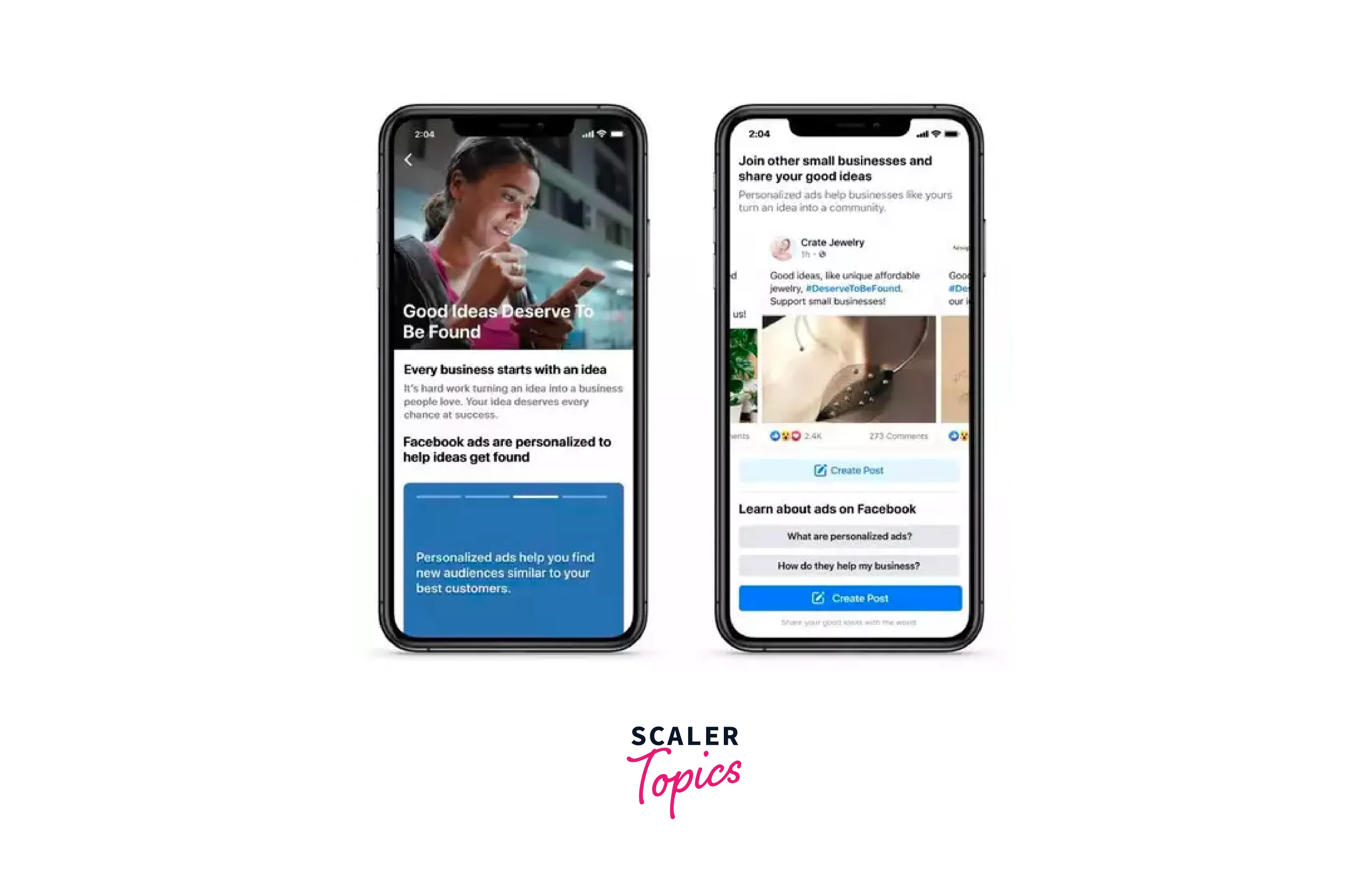

Bias in Facebook Ads

Facebook's ad platform has faced criticism for allowing biased ad targeting. Advertisers can target specific demographics, interests, or behaviors. However, this targeting feature can be misused and result in discriminatory practices. For instance, there have been cases where housing ads on Facebook were targeted to exclude certain racial or ethnic groups, violating fair housing laws and perpetuating housing discrimination.

Human Factor Relating to Bias in AI

The human factor plays a significant role in the presence of bias in AI systems. The biases of AI creators, a lack of diversity in development teams, and choices about ethics, data collection, and monitoring all affect how much bias an AI system carries.

- Biases of AI Creators:

The biases of AI creators, including their unconscious biases and personal beliefs, can inadvertently influence the design choices, data selection, and decision-making processes, resulting in biased outcomes. AI creators need to be aware of their biases and actively address them to minimize bias in AI systems.

- Lack of Diversity in AI Development:

Homogeneous teams may have limited perspectives and experiences, resulting in biased assumptions and inadequate consideration of potential biases. By promoting diversity in AI development teams, organizations can bring in different viewpoints and expertise, enabling a more comprehensive understanding of biases and their mitigation.

- Ethical Considerations:

AI developers and practitioners have an ethical responsibility to prioritize fairness, transparency, and accountability. Establishing ethical guidelines and frameworks can guide the development and deployment of AI technologies, ensuring that bias is minimized and ethical standards are upheld. By incorporating ethical considerations throughout the AI development process, organizations can mitigate the risks of bias and work towards more ethical and unbiased AI systems.

- Bias in Data Collection:

Bias in data used to train AI models can result in biased outcomes. Historical data reflecting societal biases or underrepresentation of certain groups can perpetuate and amplify bias in AI systems. Careful consideration should be given to data collection methods, ensuring diversity, representativeness, and the identification and mitigation of biases in the training data.

- Continuous Monitoring and Evaluation:

Regular monitoring and evaluation of AI systems are necessary to detect and address bias as it evolves. Ongoing assessments and audits can help identify and rectify bias in AI algorithms, ensuring fairness and accountability. By implementing continuous monitoring mechanisms, organizations can actively mitigate the risks of bias and ensure that AI systems remain fair, transparent, and unbiased.
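Continuous monitoring can be as simple as recomputing a fairness measure on each new batch of predictions and flagging batches that drift. The sketch below assumes binary predictions (1 means selected) and uses the gap between the highest and lowest per-group selection rate as the measure. The `max_gap` threshold of 0.2, the batch names, and the data are arbitrary choices made for illustration.

```python
def selection_rates(y_pred, groups):
    """Share of positive predictions (1) per group."""
    totals, positives = {}, {}
    for pred, group in zip(y_pred, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + pred
    return {g: positives[g] / totals[g] for g in totals}


def audit_batches(batches, max_gap=0.2):
    """Yield (batch_name, gap, flagged) for each batch of predictions."""
    for name, y_pred, groups in batches:
        rates = selection_rates(y_pred, groups)
        gap = max(rates.values()) - min(rates.values())
        yield name, gap, gap > max_gap


# Toy batches, invented for illustration only.
batches = [
    ("week1", [1, 0, 1, 0], ["a", "a", "b", "b"]),
    ("week2", [1, 1, 0, 0], ["a", "a", "b", "b"]),
]

results = list(audit_batches(batches))
for name, gap, flagged in results:
    print(name, gap, "REVIEW" if flagged else "ok")
```

In a real system the flagged batches would go to a human reviewer. A widening gap shows that something changed, not why it changed.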

Will AI Ever be Completely Unbiased?

Achieving completely unbiased AI systems is a complex challenge because of limitations in data and human influence. Bias in AI algorithms is often rooted in biased training data and can be influenced by the unconscious biases of AI developers. Detecting and mitigating bias is difficult because it can manifest in various ways and requires continuous monitoring and improvement. Ethical and social challenges add further complexity, and bias itself can evolve over time.

How to Fix Biases in AI and Machine Learning Algorithms?

Fixing biases in AI and machine learning algorithms is essential to ensure fairness, transparency, and ethical use of AI technology. While the complete elimination of biases may be challenging, several strategies and approaches can help mitigate bias in AI systems.

- Diverse and Representative Data:

Using diverse and representative datasets is crucial to minimize bias in AI algorithms. It is important to ensure that the training data encompasses various demographic groups, cultures, and perspectives.

- Bias Identification and Evaluation:

Implementing techniques to identify and evaluate bias in AI algorithms is essential. This includes analyzing the outcomes of the algorithms to determine if they disproportionately favor or discriminate against specific groups. Techniques such as statistical tests, fairness metrics, and bias impact assessments can help in the identification and quantification of biases.

- Regular Auditing and Monitoring:

Regular auditing and monitoring of AI systems are necessary to detect and address biases that may emerge over time. Ongoing evaluation ensures that biases are identified and corrected promptly. This can involve continuous monitoring of the data inputs, decision-making processes, and outcomes of the AI algorithms to ensure fairness.

- Interdisciplinary Collaboration:

Addressing biases in AI algorithms requires interdisciplinary collaboration. Ethicists, social scientists, and domain experts should work together with AI developers to understand the ethical and social implications of AI systems. By combining technical expertise with diverse perspectives, biases can be more effectively identified and mitigated.
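The statistical tests and fairness metrics mentioned in the list above can be illustrated with two small calculations. This is a sketch under stated assumptions rather than a complete bias assessment: it assumes two groups, counts of positive outcomes per group, and samples large enough for a normal approximation. The function names and the counts are invented for illustration.

```python
import math


def disparate_impact_ratio(pos_a, n_a, pos_b, n_b):
    """Ratio of the lower selection rate to the higher one (1.0 means parity).

    Assumes at least one group has a non-zero selection rate.
    """
    rate_a, rate_b = pos_a / n_a, pos_b / n_b
    return min(rate_a, rate_b) / max(rate_a, rate_b)


def two_proportion_z_test(pos_a, n_a, pos_b, n_b):
    """Two-sided z-test for a difference between two selection rates.

    Assumes the pooled rate is strictly between 0 and 1.
    """
    rate_a, rate_b = pos_a / n_a, pos_b / n_b
    pooled = (pos_a + pos_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_a - rate_b) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail area
    return z, p_value


# Toy counts, invented for illustration: 60 of 100 selected in group A,
# 40 of 100 selected in group B.
ratio = disparate_impact_ratio(60, 100, 40, 100)
z, p_value = two_proportion_z_test(60, 100, 40, 100)

print(f"disparate impact ratio: {ratio:.3f}")
print(f"z = {z:.3f}, p = {p_value:.4f}")
```

A ratio below 0.8 is often cited as a rule-of-thumb warning sign (the "four-fifths rule"), and a small p-value suggests the gap is unlikely to be sampling noise. Neither number proves or rules out discrimination on its own.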

Tools to Reduce Bias

To reduce bias in AI, several tools and techniques have been developed to help identify, analyze, and mitigate biases in machine learning algorithms. These tools play a crucial role in promoting fairness, transparency, and accountability in AI systems.

- AI Fairness 360 (AIF360):

AIF360 is an open-source toolkit that provides a comprehensive set of algorithms, metrics, and tutorials to address various aspects of bias in AI systems. It offers techniques for measuring bias, mitigating bias in training data, and evaluating fairness in machine learning models.

- Fairlearn:

Fairlearn is another open-source toolkit that focuses on fairness in AI. It provides metrics for assessing group fairness, such as differences in selection rates and error rates between groups, along with algorithms for mitigating the disparities it measures. Fairlearn enables developers to compare different fairness mitigation techniques and supports the iterative process of improving fairness in AI systems.

- IBM Watson OpenScale:

IBM Watson OpenScale is a platform that helps organizations manage and monitor the fairness and transparency of AI models in production. It provides tools to detect and mitigate biases by continuously monitoring the performance and behavior of AI systems.

-

Microsoft Fairlearn Dashboard:

The Microsoft Fairlearn Dashboard is a user-friendly interface that allows developers to assess and mitigate unfairness in machine learning models. It provides visualizations and metrics to understand the distribution of predictions across different groups and enables users to experiment with various mitigation techniques. -

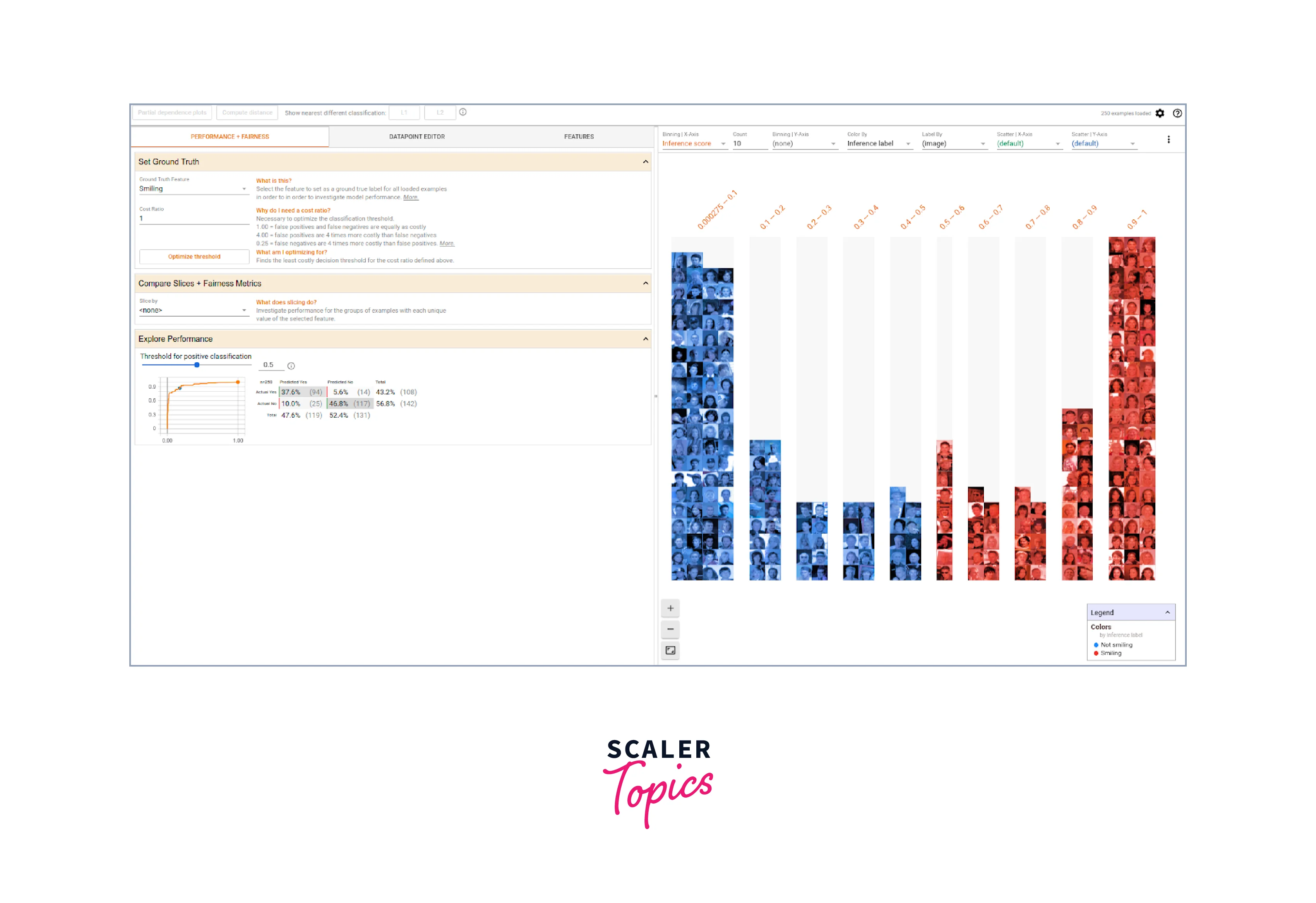

Google What-If Tool:

The Google What-If Tool is a web-based tool that allows developers to explore and understand the behavior of machine learning models. It provides an interactive interface to test different scenarios and visualize the impact of various inputs on model outputs.
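The idea of testing different scenarios against a model can be sketched without any of the toolkits above. The example below is not the API of the What-If Tool or of any other product listed here. It is a hand-rolled probe that changes only one attribute of each record and reports the records whose prediction changes as a result. The function `counterfactual_flips`, the deliberately biased `toy_model`, and the records are all hypothetical.

```python
def counterfactual_flips(model, records, attribute, values):
    """Return records whose prediction changes when only `attribute` changes."""
    flipped = []
    for record in records:
        outputs = {}
        for value in values:
            variant = {**record, attribute: value}  # copy with one field changed
            outputs[value] = model(variant)
        if len(set(outputs.values())) > 1:
            flipped.append((record, outputs))
    return flipped


def toy_model(record):
    # Hypothetical scorer, deliberately biased so the probe has something to find.
    score = record["years_experience"]
    if record["gender"] == "female":
        score -= 2
    return 1 if score >= 5 else 0


# Toy records, invented for illustration only.
records = [
    {"years_experience": 6, "gender": "female"},
    {"years_experience": 9, "gender": "male"},
    {"years_experience": 2, "gender": "female"},
]

flips = counterfactual_flips(toy_model, records, "gender", ["female", "male"])
for record, outputs in flips:
    print(record, "->", outputs)
```

A probe like this only catches direct dependence on the attribute being changed. A model can still discriminate through correlated features, which is why the group-level metrics and audits described earlier remain necessary.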

Conclusion

- AI bias is a significant concern as it can perpetuate discrimination and reinforce societal inequalities, affecting areas such as hiring processes, criminal justice systems, healthcare, and advertising.

- Examples of AI bias include eliminating selected accents in call centers, Amazon's biased recruiting tool, racial bias in healthcare risk algorithms, and bias in Facebook ads.

- The human factor plays a crucial role in AI bias, as biases present in data, algorithms, and decision-making can be influenced by human prejudices and lack of diversity.

- Achieving completely unbiased AI systems is challenging due to limitations in data, human influence, the complexity of bias detection, and the evolving nature of bias.

- Important tools to reduce bias in AI include AI Fairness 360 (AIF360), Fairlearn, IBM Watson OpenScale, Google What-If Tool, and Microsoft Fairlearn Dashboard.