Philosophy of AI

Overview

Artificial intelligence (AI) has advanced rapidly in recent years, raising new philosophical questions about the nature of consciousness, intelligence, and the mind. These questions have sparked growing interest in the philosophy of AI, a field that explores the philosophical implications of artificial intelligence and its impact on society. This article provides an overview of the field, including the debate between strong and weak AI and the Chinese Room and Gödelian arguments against strong AI.

Introduction

The philosophy of AI is a branch of philosophy that investigates the nature of intelligence, consciousness, and the mind in relation to artificial intelligence. It raises important ethical, social, and political questions, including whether machines can be conscious or bear moral responsibility and how they might affect society.

What is the philosophy of AI?

The philosophy of AI asks what artificial intelligence can tell us about intelligence, consciousness, and the mind, and what AI means ethically, socially, and politically. It is an interdisciplinary field that draws on philosophy, computer science, cognitive science, psychology, and neuroscience. It is concerned with both theoretical and practical aspects of AI: how AI can be developed, how it can be used, and what its consequences are for society.

Could a Machine Mind Ever Truly Understand Semantics?

The question of whether a machine can truly understand semantics is a central debate in the philosophy of AI. Semantics refers to the meaning of language, and some argue that machines can only manipulate symbols without actually understanding their meaning.

One approach to addressing this question is through the Turing Test, which was proposed by Alan Turing in 1950. The Turing Test involves a human evaluator communicating with a machine and a human via text-based chat. If the evaluator is unable to distinguish the machine from the human based on their responses, the machine is said to have passed the Turing Test and demonstrated human-like intelligence.

However, passing the Turing Test does not necessarily mean that a machine truly understands semantics. For example, a machine could pass the Turing Test by simply matching responses to pre-existing patterns, without actually understanding the meaning behind the language.
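To make the pattern-matching worry concrete, here is a minimal sketch of a responder that produces plausible replies purely by matching surface patterns. The rule table, the canned replies, and the function name `reply` are hypothetical and chosen only for illustration; no real chatbot is being reproduced.

```python
import re

# Hypothetical rule table: (surface pattern, canned reply template).
RULES = [
    (re.compile(r"\bhow are you\b", re.IGNORECASE), "I'm doing well, thanks. How about you?"),
    (re.compile(r"\bi feel (\w+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bwhat is your name\b", re.IGNORECASE), "People call me Sam."),
]
FALLBACK = "That's interesting. Tell me more."


def reply(message: str) -> str:
    """Return the first canned reply whose pattern matches the message."""
    for pattern, template in RULES:
        match = pattern.search(message)
        if match:
            # Captured text is echoed back into the template; meaning is never modeled.
            return template.format(*match.groups())
    return FALLBACK


print(reply("I feel tired today"))
print(reply("Explain quantum gravity"))
```

The program answers "I feel tired today" with a fitting question, yet nothing in it represents what tiredness is. Whether a far larger system built on the same principle could pass the Turing Test is exactly what the debate is about.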

Another approach to this question is through the concept of intentionality: the capacity of mental states such as beliefs, desires, and intentions to be about, or directed at, something in the world. Proponents of strong AI argue that machines can have intentionality and can therefore truly understand semantics. Opponents argue that intentionality cannot be produced by running a program alone, and some hold that it is a uniquely human or biological capacity.

One famous argument against strong AI is the Chinese Room argument, proposed by philosopher John Searle in 1980. In the thought experiment, a person who does not understand Chinese sits in a room with a rulebook, written in a language they do understand, for manipulating Chinese symbols. Questions written in Chinese are passed in, and by following the rules the person passes back appropriate answers in Chinese without understanding any of them. Searle argues that a machine following a program is in the same position: it manipulates symbols by their form without grasping their meaning.

Strong vs Weak AI

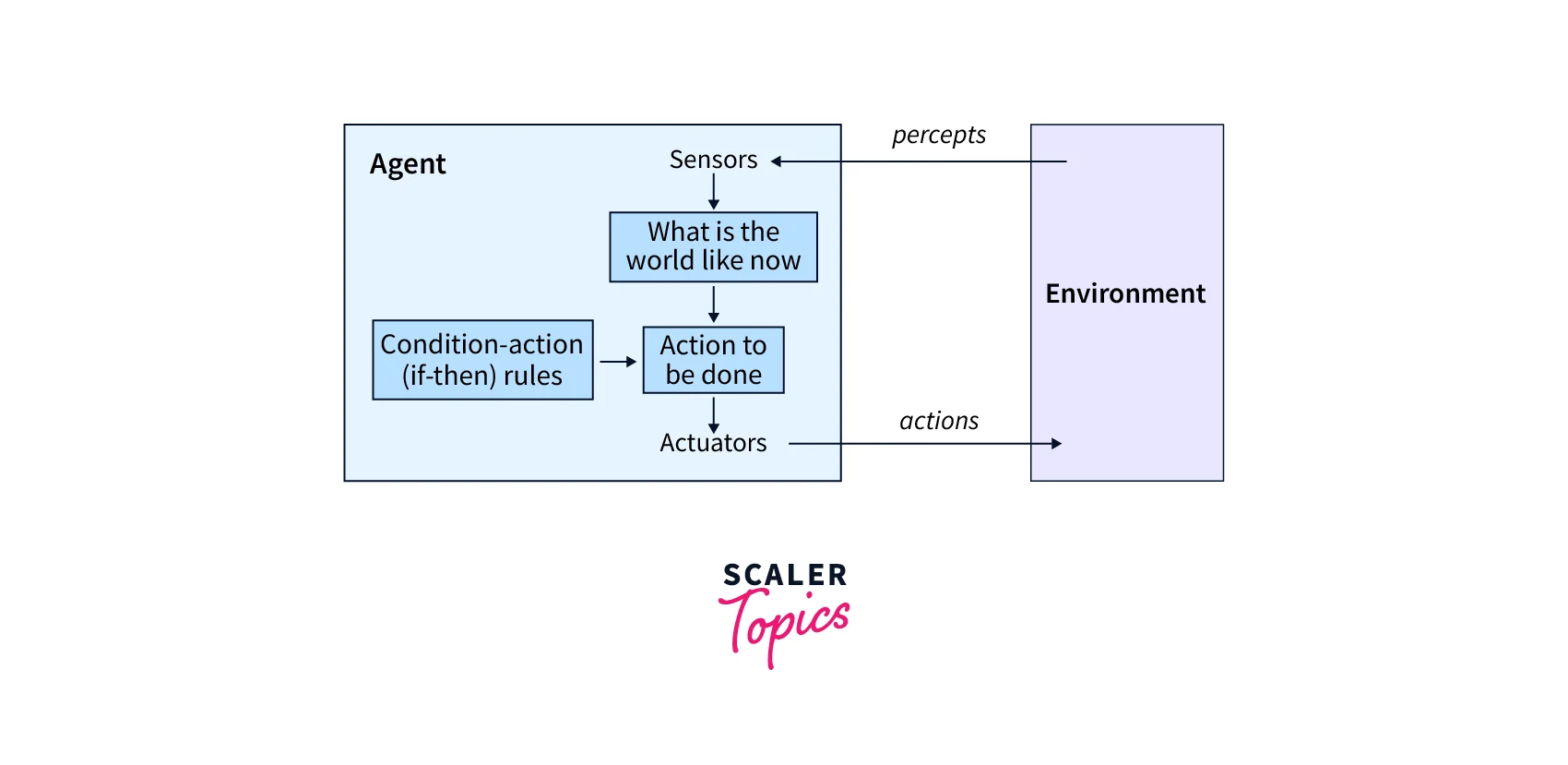

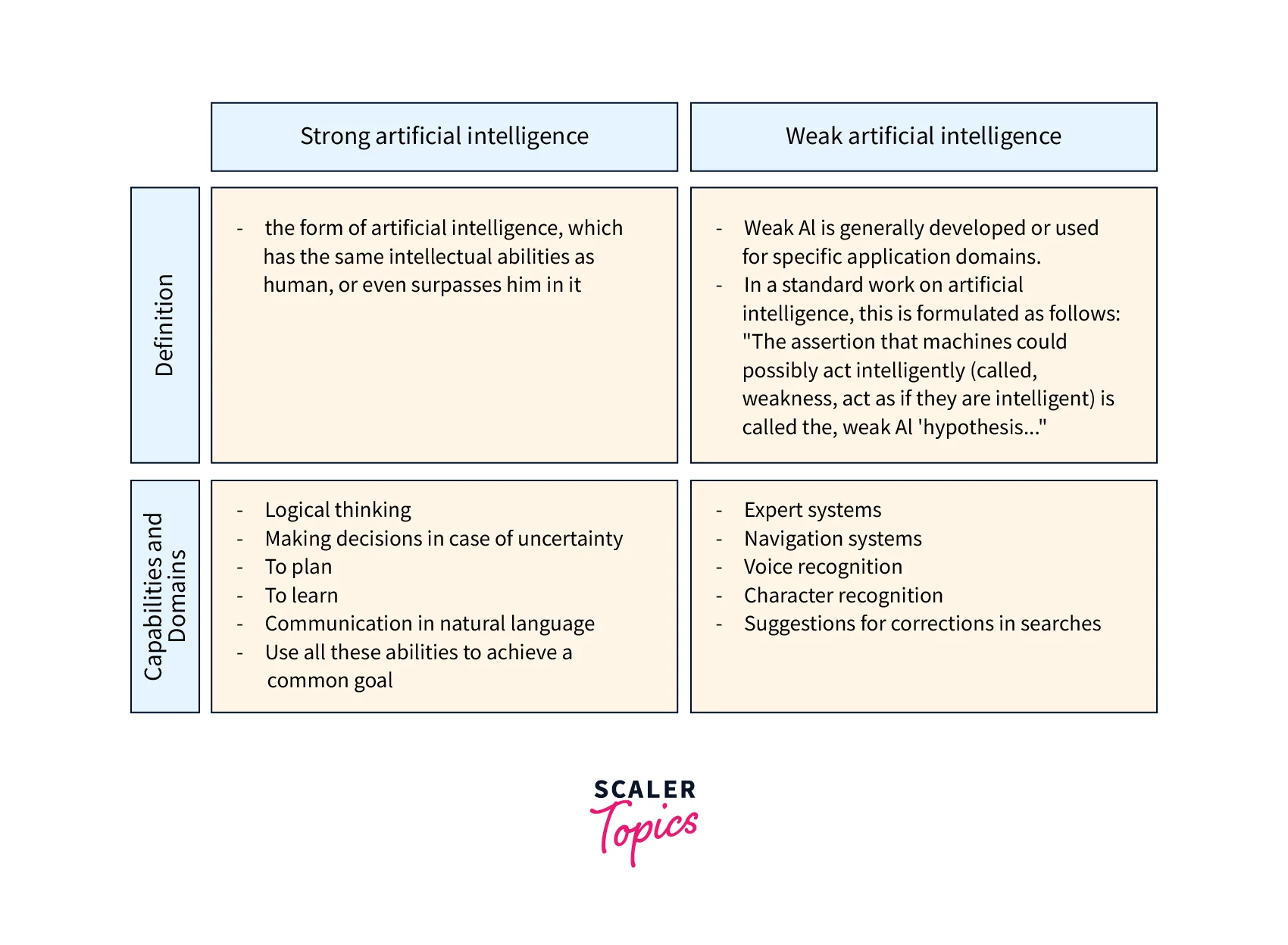

The distinction between strong and weak AI is a key concept in the philosophy of AI and is based on the idea that machines can exhibit different levels of intelligence.

Weak AI: Weak AI, also known as narrow or applied AI, refers to machines that are designed to perform specific tasks, such as recognizing speech or playing chess. These machines can perform their tasks with a high degree of accuracy, but they do not have general intelligence that can be applied to other tasks. For example, a chess-playing machine may be able to play chess at a world-class level, but it would be unable to perform tasks outside of the game.

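Strong AI: In popular usage, strong AI is treated as a synonym for artificial general intelligence (AGI), meaning machines capable of intelligent behavior that rivals that of a human being. Searle, who coined the term, used it more narrowly for the claim that an appropriately programmed computer would literally have a mind and understand, rather than merely simulate understanding. A strong AI system would be able to understand language, make decisions, and learn from experience in the same way that a human being does. Strong AI is often considered the ultimate goal of AI research, but it has yet to be achieved.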

One example of weak AI is the speech recognition software used in virtual assistants such as Siri and Alexa. These programs can accurately recognize spoken words and respond with appropriate actions, but they do not have a general intelligence beyond their specific task.

In contrast, an example of a strong AI would be a machine that can understand and respond to natural language conversation in the same way that a human being would. This level of intelligence would require a machine to have a deep understanding of semantics and context, as well as the ability to learn and adapt to new situations.

The distinction between strong and weak AI is important for understanding the potential capabilities and limitations of AI. While weak AI has already had a significant impact on many industries, such as finance and healthcare, strong AI has the potential to revolutionize many aspects of society. However, achieving strong AI is a difficult and complex task, and there are significant ethical and social implications to consider as well.

Chinese Room Argument Against Strong AI

The Chinese Room argument was proposed by philosopher John Searle in 1980. It is based on a thought experiment in which a person who does not understand Chinese is locked in a room with a rulebook, written in a language they do understand, that specifies how to respond to strings of Chinese symbols with other strings of Chinese symbols. Chinese speakers outside pass in written questions, and the person follows the rules to pass back answers. To those outside, the answers look like the work of a fluent speaker, yet the person inside never understands what any of the symbols mean.

Searle argues that this thought experiment demonstrates that a machine following a set of rules cannot truly understand the meaning of language. In the same way, a machine may be able to manipulate symbols and produce human-like responses, but it does not truly understand the meaning behind those symbols.
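The operator's job in the room can be sketched as a lookup that matches incoming symbols by shape and hands back whatever the rulebook prescribes. This is only an illustrative toy: the rulebook entries, `DEFAULT_REPLY`, and `room_operator` are hypothetical names, and Searle's rulebook is imagined to be vastly larger. The English glosses in the comments are for the reader; the function never uses them.

```python
# Hypothetical rulebook: incoming symbol string -> outgoing symbol string.
RULEBOOK = {
    "你好吗？": "我很好，谢谢。",        # "How are you?" -> "I am fine, thank you."
    "你叫什么名字？": "我叫小明。",      # "What is your name?" -> "My name is Xiaoming."
}
DEFAULT_REPLY = "请再说一遍。"           # "Please say that again."


def room_operator(symbols: str) -> str:
    """Match the incoming symbols exactly and return the prescribed output."""
    # Only the form of the string is compared; nothing here encodes meaning.
    return RULEBOOK.get(symbols, DEFAULT_REPLY)


print(room_operator("你好吗？"))
```

From outside the room the exchange looks like conversation. Searle's claim is that scaling the rulebook up changes the quality of the answers but not the operator's lack of understanding.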

The Gödelian Argument Against Strong AI

The Gödelian argument is another famous argument against strong AI, named after the mathematician Kurt Gödel. It rests on Gödel's first incompleteness theorem, which states that any consistent formal system powerful enough to express basic arithmetic contains statements that are true but cannot be proven within that system.
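Stated a little more formally, in a standard textbook form: the symbols $T$ (the formal system) and $G_T$ (its Gödel sentence) are notation introduced here, not taken from the article, and the statement assumes $T$ is effectively axiomatized and includes basic arithmetic.

```latex
% First incompleteness theorem, informal statement.
% T   : an effectively axiomatized formal system containing basic arithmetic
% G_T : the Gödel sentence constructed for T
\[
  T \text{ is consistent}
  \;\Longrightarrow\;
  T \nvdash G_T
  \quad\text{and}\quad
  \mathbb{N} \models G_T
\]
```

In words: if $T$ is consistent, it cannot prove $G_T$, even though $G_T$ holds in the standard natural numbers.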

Proponents of the argument, most notably the philosopher J. R. Lucas and the physicist Roger Penrose, argue that since human beings can see the truth of statements that cannot be proven within a given formal system, human intelligence must be more powerful than any formal system. Therefore, they conclude, machines cannot be truly intelligent, since they are limited by the formal systems they operate within.

Conclusion

- The philosophy of AI is an interdisciplinary field that explores the philosophical implications of artificial intelligence for understanding the nature of intelligence, consciousness, and the mind.

- The question of whether a machine can truly understand semantics is a central debate in the philosophy of AI.

- Proponents of strong AI argue that machines can have intentionality and truly understand semantics, but opponents argue that intentionality is a uniquely human capacity that cannot be replicated in machines.

- The distinction between strong and weak AI is important for understanding the potential capabilities and limitations of AI.

- Weak AI has already had a significant impact on many industries. Strong AI has the potential to revolutionize many aspects of society, but achieving it is a difficult and complex task with significant ethical and social implications.