Explanation-based Learning in Artificial Intelligence

Overview

Explanation-based learning (EBL) in artificial intelligence is a branch of machine learning in which a system learns from previously solved problems by working out why each solution succeeds. It is especially helpful for complicated, multi-faceted problems that require a thorough grasp of the underlying processes.

Introduction

Machine learning has come a long way since its beginnings. Many machine-learning algorithms depend on statistical analysis of large numbers of examples to spot patterns and forecast outcomes. Explanation-based learning takes a different route: it uses existing domain knowledge to explain individual examples, which lets it handle some complicated problems more efficiently.

What is Explanation-Based Learning?

Explanation-based learning in artificial intelligence is a problem-solving method in which an agent learns by analyzing specific situations and connecting them to previously acquired knowledge. The agent then applies what it has learned to solve similar problems. Rather than relying solely on statistical analysis, EBL algorithms incorporate logical reasoning and domain knowledge to make predictions and identify patterns.
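To make the idea concrete, here is a minimal Python sketch, assuming a toy domain theory about cups. The rule names, the `explain` and `used_facts` helpers, and the example object are all hypothetical and chosen only for illustration. The sketch explains a single example by backward chaining over the rules and then keeps only the facts that the explanation actually used.

```python
# Hypothetical domain theory: each conclusion maps to the premises that prove it.
DOMAIN_RULES = {
    "cup": ["liftable", "stable", "open_vessel"],
    "liftable": ["light", "has_handle"],
    "stable": ["flat_bottom"],
    "open_vessel": ["concave_top"],
}


def explain(goal, facts, rules):
    """Return a proof tree (goal, [subtrees]) if goal follows from facts, else None."""
    if goal in facts:
        return (goal, [])  # an observed fact is a leaf of the explanation
    if goal not in rules:
        return None  # neither observed nor derivable
    subtrees = []
    for premise in rules[goal]:
        sub = explain(premise, facts, rules)
        if sub is None:
            return None
        subtrees.append(sub)
    return (goal, subtrees)


def used_facts(tree):
    """Collect the observed facts at the leaves of a proof tree, left to right."""
    goal, subtrees = tree
    if not subtrees:
        return [goal]
    return [fact for sub in subtrees for fact in used_facts(sub)]


# One training example, including features that turn out to be irrelevant.
example = {"light", "has_handle", "flat_bottom", "concave_top", "red", "ceramic"}

proof = explain("cup", example, DOMAIN_RULES)
relevant = used_facts(proof)
print(relevant)  # ['light', 'has_handle', 'flat_bottom', 'concave_top']
```

Note that `red` and `ceramic` never enter the explanation, so one example is enough to see that they do not matter, without any statistics over many cups.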

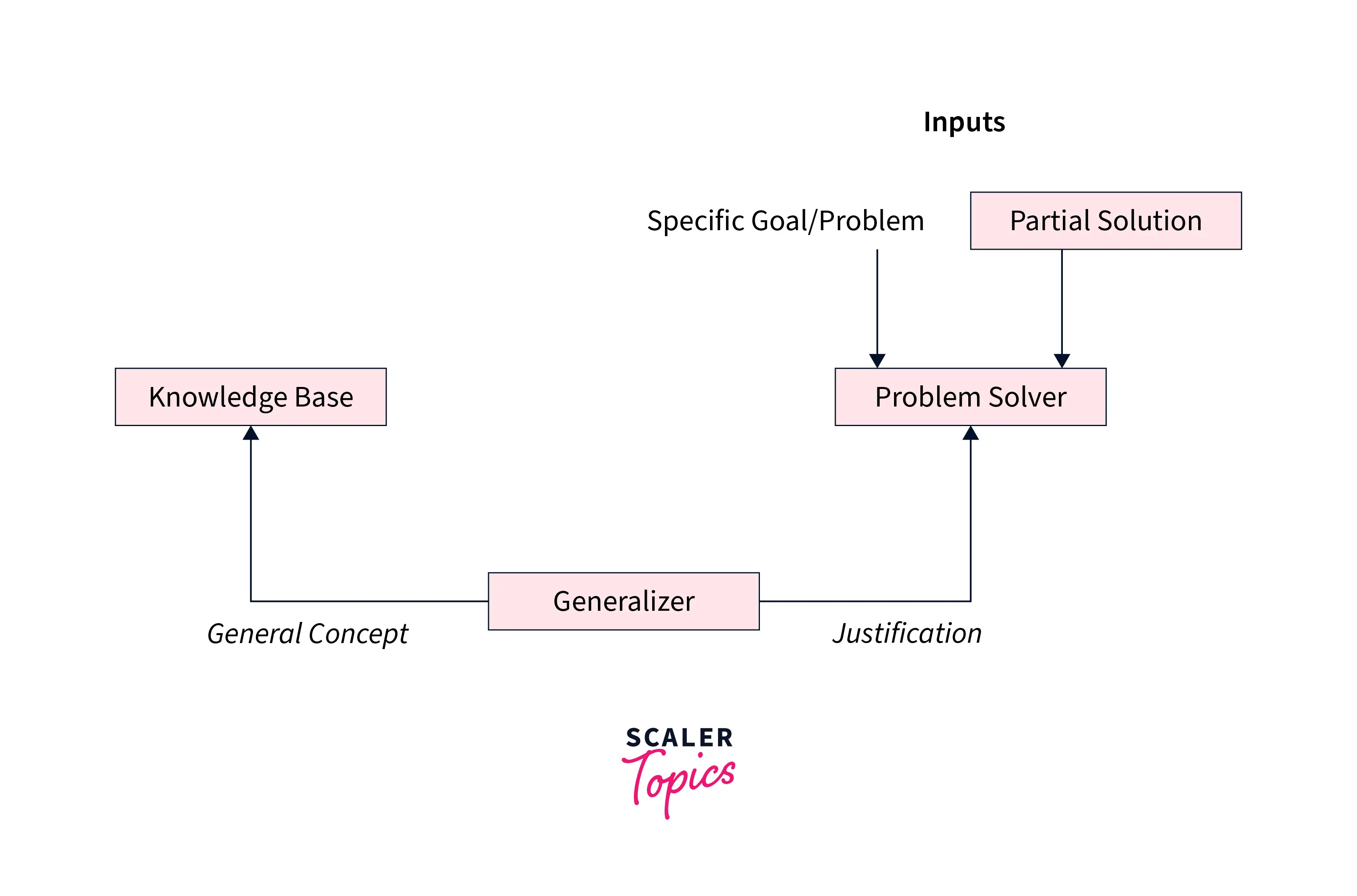

Explanation-based learning architecture:

The environment provides two inputs to the EBL architecture:

- A specific goal, and

- A partial solution.

The problem solver analyzes these inputs and passes its reasoning to the generalizer.

The generalizer takes general concepts from the knowledge base as input and compares them with the problem solver's reasoning to arrive at an answer to the given problem.

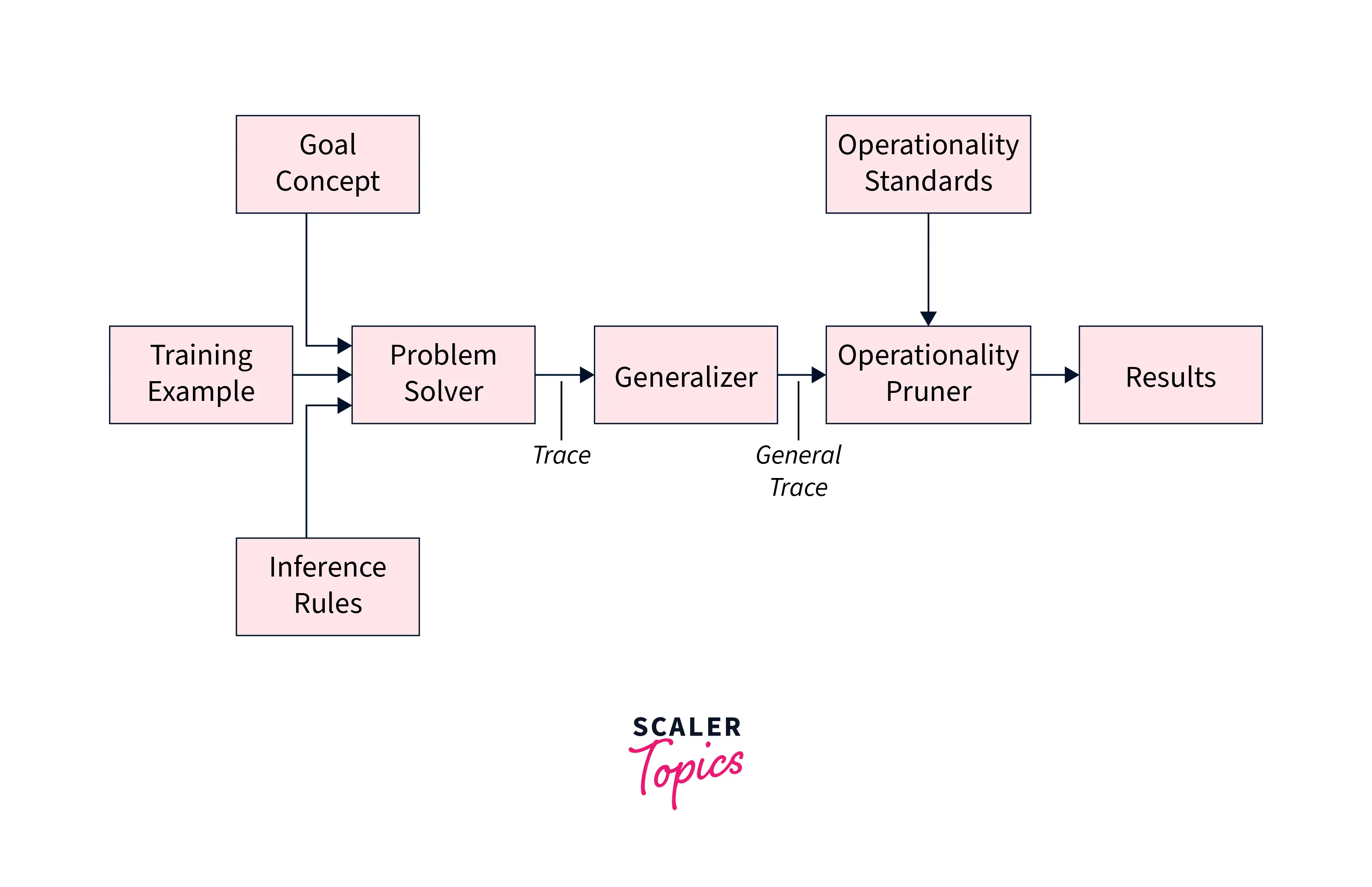

Explanation-based learning System Representation:

- Problem Solver: It takes three kinds of external input. The goal concept is a complex problem statement that the agent must learn. Training instances are facts that illustrate a specific instance of the goal concept. Inference rules represent the facts and procedures that the learner already knows.

- Generalizer: The problem solver's output is fed into the generalizer, which compares the problem solver's explanation with the knowledge base and passes its output to the operational pruner.

- Operational Pruner: It takes two inputs, one from the generalizer and the other from the operational standard (often called the operationality criterion). The operational standard describes the final concept and defines the format in which the learned concept should be expressed.
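The three components can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not a full EBL system: the domain theory about a robot crossing a corridor is invented, every predicate takes a single argument, and the function names `problem_solver`, `generalizer`, `operational_pruner`, and `learn_rule` are hypothetical. Generalization in real systems typically relies on unification over multi-argument predicates, which is left out here.

```python
# Hypothetical inference rules (what the learner already knows).
INFERENCE_RULES = {
    "can_cross": ["passable", "safe"],
    "passable": ["wide_enough", "floor_clear"],
    "safe": ["no_drop_off"],
}

# Training instance: facts about one specific object, as (predicate, object) pairs.
FACTS = {
    ("wide_enough", "corridor_7"),
    ("floor_clear", "corridor_7"),
    ("no_drop_off", "corridor_7"),
    ("painted_blue", "corridor_7"),  # true, but irrelevant to the goal
}


def problem_solver(goal, obj, facts, rules):
    """Explain why obj satisfies goal; return (predicate, obj, children) or None."""
    if (goal, obj) in facts:
        return (goal, obj, [])
    if goal not in rules:
        return None
    children = []
    for premise in rules[goal]:
        child = problem_solver(premise, obj, facts, rules)
        if child is None:
            return None
        children.append(child)
    return (goal, obj, children)


def generalizer(proof, variable="?x"):
    """Replace the specific training object with a variable at every node."""
    predicate, _obj, children = proof
    return (predicate, variable, [generalizer(c, variable) for c in children])


def operational_pruner(proof, operational):
    """Cut each branch at the first predicate allowed by the operational standard."""
    predicate, arg, children = proof
    if predicate in operational or not children:
        return [f"{predicate}({arg})"]
    return [lit for c in children for lit in operational_pruner(c, operational)]


def learn_rule(goal, obj, facts, rules, operational):
    """Run the three components in sequence and format the learned rule."""
    proof = problem_solver(goal, obj, facts, rules)
    if proof is None:
        return None
    body = operational_pruner(generalizer(proof), operational)
    return f"{goal}(?x) :- " + ", ".join(body)


# Two assumed operational standards give two differently shaped rules.
SENSOR_LEVEL = {"wide_enough", "floor_clear", "no_drop_off"}
COARSE_LEVEL = {"passable", "no_drop_off"}

fine_rule = learn_rule("can_cross", "corridor_7", FACTS, INFERENCE_RULES, SENSOR_LEVEL)
coarse_rule = learn_rule("can_cross", "corridor_7", FACTS, INFERENCE_RULES, COARSE_LEVEL)
print(fine_rule)    # can_cross(?x) :- wide_enough(?x), floor_clear(?x), no_drop_off(?x)
print(coarse_rule)  # can_cross(?x) :- passable(?x), no_drop_off(?x)
```

The same explanation yields a different learned rule depending on the operational standard, which is why the pruner needs it as a second input.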

a) The Explanation-Based Learning Hypothesis

According to the explanation-based learning hypothesis, if a system has an explanation of how it solved a comparable problem in the past, it will use that explanation to handle the current problem more efficiently. The hypothesis rests on the idea that learning from explanations is more effective than learning from instances alone.
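One rough way to see the efficiency claim is to count rule applications before and after a rule has been learned. The sketch below assumes a toy parcel-delivery domain theory and a hypothetical `prove` helper; the counts it reports apply only to this toy setup.

```python
# Hypothetical domain theory: each goal maps to a list of alternative rule bodies.
DOMAIN_THEORY = {
    "deliverable": [["addressed", "paid", "packable"]],
    "addressed": [["has_street", "has_postcode"]],
    "paid": [["invoice_settled"]],
    "packable": [["fits_box", "not_fragile"]],
}


def prove(goal, facts, rules, counter):
    """Return the observed facts supporting goal, or None if it cannot be proved.

    counter[0] is incremented once for every rule body that is tried.
    """
    if goal in facts:
        return [goal]
    for premises in rules.get(goal, []):
        counter[0] += 1
        leaves = []
        for premise in premises:
            sub = prove(premise, facts, rules, counter)
            if sub is None:
                break  # this rule body fails, try the next alternative
            leaves.extend(sub)
        else:
            return leaves  # every premise was proved
    return None


# First problem: solved from the full domain theory.
parcel_a = {"has_street", "has_postcode", "invoice_settled", "fits_box", "not_fragile"}
first_count = [0]
leaves = prove("deliverable", parcel_a, DOMAIN_THEORY, first_count)

# Store the explanation as a shortcut rule that is tried before the original one.
with_learned = dict(DOMAIN_THEORY)
with_learned["deliverable"] = [leaves] + DOMAIN_THEORY["deliverable"]

# Second, comparable problem: solved with the learned rule available.
parcel_b = parcel_a | {"blue_label"}
second_count = [0]
prove("deliverable", parcel_b, with_learned, second_count)

print(first_count[0], second_count[0])  # 4 1
```

In this toy run the learned rule replaces four rule applications with one. The saving is not guaranteed: when a learned rule fails to match, the solver pays for the failed attempt before falling back to the original rules.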

b) Standard Approach to Explanation-Based Learning

The typical approach to explanation-based learning in artificial intelligence entails the following steps:

- Determine the problem to be solved.

- Gather samples of previously solved problems that are comparable to the current problem.

- Identify the connections between the previously solved problems and the new problem.

- Extract the underlying principles and rules that were used to solve the previous problems.

- Apply the extracted rules and principles to solve the new problem.
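The steps above can be sketched as follows. This is a minimal illustration, assuming an invented domain theory for card payments; the predicate names and the `explain` and `matches` helpers are hypothetical, and a learned rule is represented simply as the set of facts that an explanation relied on.

```python
# Hypothetical domain theory for a toy card-payment domain (illustrative only).
RULES = {
    "suspicious": ["odd_location", "odd_amount"],
    "odd_location": ["far_from_home"],
    "odd_amount": ["above_usual_spend"],
}


def explain(goal, facts, rules):
    """Return the observed facts that prove goal, or None if it cannot be proved."""
    if goal in facts:
        return [goal]
    if goal not in rules:
        return None
    support = []
    for premise in rules[goal]:
        sub = explain(premise, facts, rules)
        if sub is None:
            return None
        support.extend(sub)
    return support


# Step 1: determine the problem to be solved.
goal = "suspicious"

# Step 2: gather previously solved cases that are comparable.
solved_cases = [
    {"far_from_home", "above_usual_spend", "weekend"},
    {"far_from_home", "above_usual_spend", "online", "new_merchant"},
]

# Steps 3 and 4: explain each case and extract the facts the explanation used.
learned_rules = set()
for case in solved_cases:
    support = explain(goal, case, RULES)
    if support is not None:
        learned_rules.add(frozenset(support))


# Step 5: apply the extracted rules to a new case with a simple subset check.
def matches(case, rules):
    return any(body <= case for body in rules)


new_case = {"far_from_home", "above_usual_spend", "night"}
print(matches(new_case, learned_rules))  # True
```

Both solved cases reduce to the same extracted rule, because incidental facts such as `weekend` or `online` play no part in either explanation.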

c) Examples of Explanation-Based Learning

- Medical Diagnosis: Explanation-based learning can be used in medical diagnosis to determine the underlying causes of a patient's symptoms. By analyzing previously diagnosed cases, EBL algorithms can find trends and produce more accurate diagnoses.

- Robot Navigation: Explanation-based learning can be used to teach robots to navigate complex environments. By analyzing earlier successful navigation attempts, EBL algorithms can discover the rules and principles that were used to navigate those environments and apply them to new scenarios.

- Fraud Detection: Explanation-based learning can be used in fraud detection to discover patterns of fraudulent behavior. By analyzing previous incidents of fraud, EBL algorithms can identify the rules and principles that were used to detect those cases and apply them to new ones.

Conclusion

- Explanation-based learning in artificial intelligence is a powerful tool for solving complex problems efficiently.

- By learning from explanations provided by domain experts, EBL algorithms can identify the underlying principles and rules that govern a particular domain and apply them to new situations.

- Explanation-based learning has a wide range of applications, from medical diagnosis to fraud detection, and is poised to play an increasingly important role in the development of advanced AI systems.