AWS Airflow

Apache Airflow is a leading open-source tool for orchestrating workflows, favored by data engineers for managing intricate data pipelines as Directed Acyclic Graphs (DAGs). Scaling and maintaining Airflow manually is complex, with risks such as VM overloads and scheduler interruptions, so managed services like Amazon Managed Workflows for Apache Airflow (MWAA) have become important. MWAA simplifies operating data pipelines at scale and removes much of the system management burden. Integrated with AWS, it runs Extract-Transform-Load (ETL) tasks and data pipelines with an emphasis on efficiency and scalability. As data becomes increasingly integral across industries, orchestrating tasks and automating workflows becomes imperative. Airflow's automation and workflow management features let developers author, schedule, and monitor workflows programmatically, while MWAA takes over the maintenance and security work for teams that use Apache Airflow.

What is AWS Airflow

Before we look at what AWS Airflow is, let us understand the problem that existed before it was introduced. Apache Airflow has its own set of advantages, but while working with it you might have come across scenarios where the Airflow scheduler (which schedules the tasks that make up the workflows) stopped working. This happens when the number of connections to the metadata database reaches its maximum capacity. It carries considerable risk, because business-critical workflows are not running during that time. Fixing the issue requires manual work, which takes time and is hard for a company to afford in a fast-paced environment.

QuickNote: The metadata database mentioned above is a core component of Airflow. It stores the most central information, such as the configuration of the Airflow environment's roles and permissions, in addition to all the metadata for past and present DAG (Directed Acyclic Graph) and task runs.

To solve this problem, AWS offers AWS Airflow, a secure, reliable, and highly available managed workflow orchestration service where you can programmatically author, schedule, and monitor workflows. You can use Airflow and Python to create workflows without the stress of managing the infrastructure for availability, scalability, and security. With Amazon MWAA, you simply use the familiar Apache Airflow to orchestrate your workflows.

Some key points to note regarding the AWS Airflow are:

- Deploying Apache Airflow at scale is easier, because you do not take on the operational burden of managing the infrastructure.

- You can effortlessly connect to the cloud, AWS, or on-premises resources via the Apache Airflow providers or custom plugins.

- You can monitor your environments through the Amazon CloudWatch integration, which cuts down on engineering overhead and operating costs.

- You can schedule and run the workloads in any of your own isolated and secure cloud environments with Apache Airflow.

Benefits

A few key benefits offered by AWS Airflow are listed below:

Security is built in: Amazon Managed Workflows keeps your data secure inside Amazon Virtual Private Cloud (VPC). Data is encrypted automatically with the AWS Key Management Service (KMS), so the workflow environment is secure by default.

Automatic scaling: Amazon Managed Workflows manages worker scaling for you without requiring any configuration. With built-in worker monitoring, additional workers are provisioned automatically when the existing workers are overburdened, and they are decommissioned when the traffic decreases or the extra workers are no longer required.

Seamless monitoring of workflows, on AWS or on-premises: You can view task executions, delays, and workflow-related errors across more than one environment without the hassle of integrating third-party tools. This is possible because Amazon Managed Workflows automatically sends Apache Airflow system logs and metrics to Amazon CloudWatch.

Easier deployment: With Amazon Managed Workflows, deployment is much easier because it uses the same Apache Airflow product you are already familiar with. You can deploy from the CLI, the AWS Management Console, AWS CloudFormation, or the AWS SDK.

Plug-in integration: Amazon Managed Workflows can connect to any of your on-premises resources. You can also connect to the AWS resources your workflows require, such as CloudWatch, SageMaker, Lambda, Redshift, SQS, Glue, DynamoDB, EKS, Athena, Batch, SNS, Firehose, DataSync, EMR, ECS/Fargate, and S3.

Low operational costs: Managed Workflows reduces operational costs and engineering overhead at scale by removing the operational load of running open-source Apache Airflow yourself. This brings down the costs you would otherwise incur while running data pipeline orchestration at any scale.

Use cases

Below are a few use cases that show how AWS Airflow can be applied:

Promotes and supports complex workflows: With AWS Airflow, you can effortlessly create either on-demand or scheduled workflows that help with the whole preparation and processing of complex data from big data providers.

Systematically coordinate the ETL (extract, transform, and load) jobs: Orchestrating various ETL processes becomes easier with AWS Airflow. The ETL process can also use diverse technologies within a complex ETL workflow.

Easily prepare Machine Learning (ML) data: To train ML models, you can quickly automate the data ingestion and training pipelines.

How it works

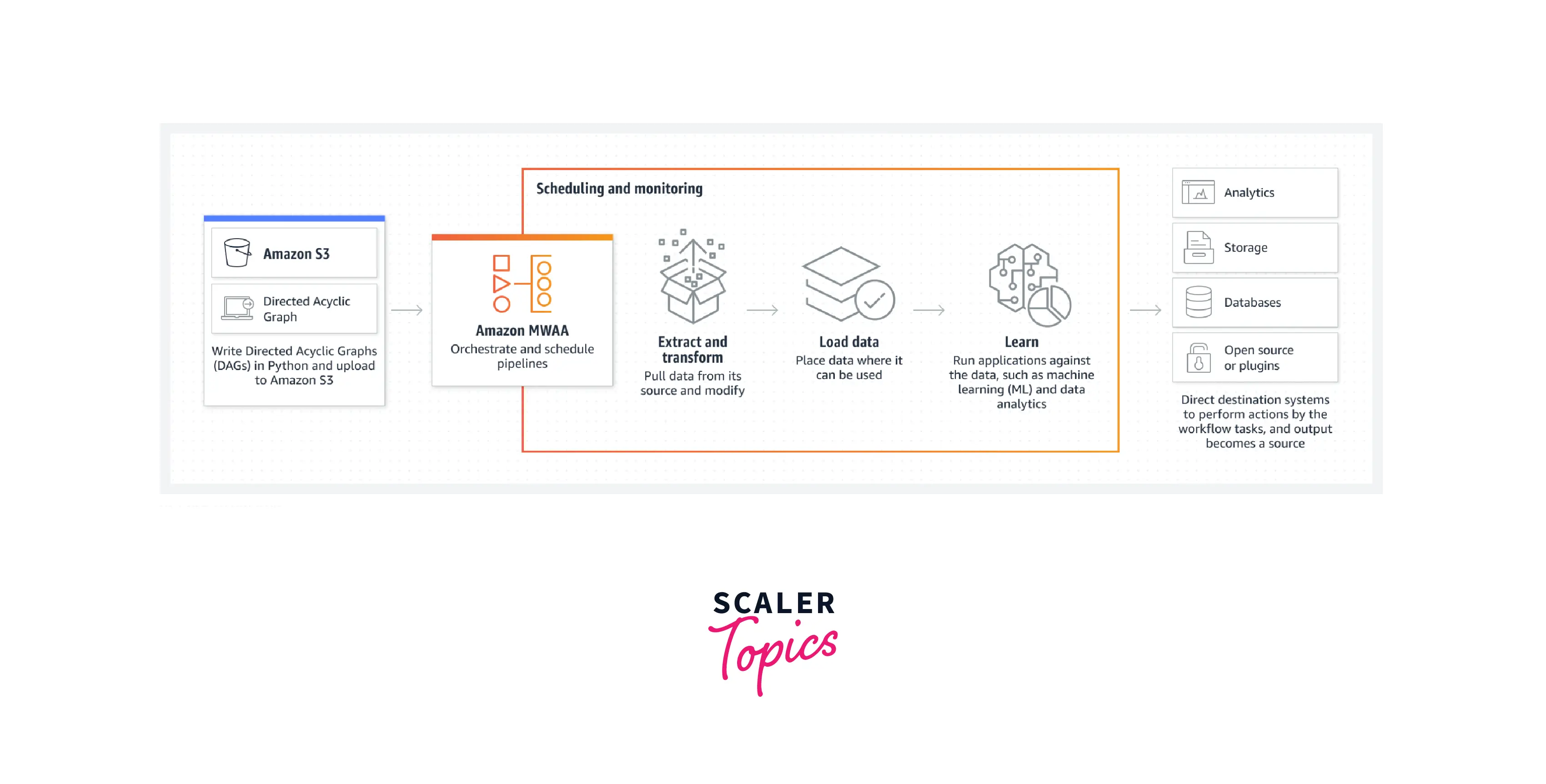

The diagram above shows how AWS Airflow provides secure and highly available managed workflow orchestration for Apache Airflow.

Start by writing the DAGs (Directed Acyclic Graphs) in Python. Amazon Managed Workflows for Apache Airflow (MWAA) uses these DAGs to orchestrate the workflow. Upload the DAGs, plugins, and Python requirements to the Amazon Simple Storage Service (S3) bucket assigned to MWAA. You can then orchestrate, schedule, run, and monitor the DAGs from a command line interface (CLI), the Apache Airflow user interface (UI), the AWS Management Console, or a software development kit (SDK). The scheduled workflows can perform ETL processing: they pull data from data sources, modify it according to the business transformation, and load the refined data where it can be used to generate insights. This transformed data can feed applications such as machine learning (ML) and data analysis. Finally, workflow tasks can direct target systems to perform actions, so that the output becomes a source for further use cases.
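The extract, transform, and load steps described above can be sketched in plain Python. This is only an illustration of the pattern, not MWAA or Airflow code: the sample data, the business rule, and the function names are all assumptions made for the example, and an in-memory SQLite database stands in for the target system. In a real DAG, each function would typically become its own task.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real pipeline would read from S3, a database, or an API.
RAW_CSV = "order_id,amount\n1,10.50\n2,4.25\n3,7.00\n"


def extract(raw):
    """Pull rows out of the source (here, a CSV string)."""
    return list(csv.DictReader(io.StringIO(raw)))


def transform(rows):
    """Apply an assumed business rule: keep orders of at least 5.00, store cents."""
    return [
        (int(row["order_id"]), round(float(row["amount"]) * 100))
        for row in rows
        if float(row["amount"]) >= 5.0
    ]


def load(records, conn):
    """Write the refined records to the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount_cents INTEGER)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for the real target system
load(transform(extract(RAW_CSV)), conn)
total = conn.execute("SELECT SUM(amount_cents) FROM orders").fetchone()[0]
print("Total loaded amount in cents:", total)
```

Keeping each step a separate function mirrors how an orchestrator runs them: a failed load can be retried without repeating the extract.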

What is Apache Airflow on AWS?

To help teams manage their workflows programmatically, Apache Airflow provides a dynamic platform that turns sequences of tasks into Directed Acyclic Graphs (DAGs). Users set up dependencies between tasks, and the Apache Airflow scheduler automatically assigns the tasks to workers for fast and reliable execution. Because workflows are defined as code, collaborating on, maintaining, testing, and versioning them is simpler.
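To make the idea of task dependencies concrete, here is a minimal sketch that uses only Python's standard `graphlib` module. It is not how the Airflow scheduler is implemented. It only shows what "directed acyclic" buys you: a run order in which every task follows the tasks it depends on, and a clear failure when the dependencies form a cycle. The task names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical tasks; each key maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "notify": {"load"},
}


def execution_order(deps):
    """Return a run order in which every task comes after its dependencies."""
    return list(TopologicalSorter(deps).static_order())


def is_acyclic(deps):
    """Return True if the dependency graph has no cycles, i.e. it is a valid DAG."""
    try:
        TopologicalSorter(deps).prepare()
    except CycleError:
        return False
    return True


print(execution_order(dependencies))
print(is_acyclic({"a": {"b"}, "b": {"a"}}))
```

In Airflow itself you declare the same relationships between tasks in the DAG file, and the scheduler decides when each task is ready to run.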

Even complex DAGs can be modified easily through a robust set of command line utilities. The user interface visualizes the end-to-end data pipelines, allowing teams to monitor pipelines in production, track progress, and troubleshoot problems. All these features make Apache Airflow a widely popular choice for workflow orchestration, and MWAA builds on them.

What are Managed Workflows for Apache Airflow?

As we know, Apache Airflow is an open-source tool that is widely used to programmatically author, schedule, and monitor chains of tasks and processes, known as "workflows." AWS offers Amazon Managed Workflows for Apache Airflow (MWAA), a managed orchestration service for Apache Airflow that makes it easy to set up and operate end-to-end data pipelines at scale in the cloud.

With Amazon MWAA, users can use Airflow and Python together to create workflows without having to manage the infrastructure for availability, scalability, or security. When the demand on workflows increases, Amazon MWAA automatically scales capacity to meet it. Amazon MWAA is also integrated with AWS security services, which provide fast and secure access to data.

Region Availability

Below are the regions where Amazon MWAA is available.

| Region | Region code |
|---|---|
| Europe (Stockholm) | eu-north-1 |
| Europe (Frankfurt) | eu-central-1 |
| Europe (Ireland) | eu-west-1 |
| Europe (London) | eu-west-2 |
| Europe (Paris) | eu-west-3 |
| Asia Pacific (Mumbai) | ap-south-1 |
| Asia Pacific (Singapore) | ap-southeast-1 |
| Asia Pacific (Sydney) | ap-southeast-2 |
| Asia Pacific (Tokyo) | ap-northeast-1 |
| Asia Pacific (Seoul) | ap-northeast-2 |
| US East (N. Virginia) | us-east-1 |
| US East (Ohio) | us-east-2 |
| US West (Oregon) | us-west-2 |
| Canada (Central) | ca-central-1 |
| South America (São Paulo) | sa-east-1 |
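
If you script environment creation, it can help to check the target region before calling AWS. The sketch below copies the region codes from the table above into a dictionary. The helper name `is_supported_region` is hypothetical, and the list reflects this article only, so treat it as an assumption and check the current AWS documentation, since availability changes over time.

```python
# Region codes copied from the table above; not guaranteed to be current.
MWAA_REGIONS = {
    "eu-north-1": "Europe (Stockholm)",
    "eu-central-1": "Europe (Frankfurt)",
    "eu-west-1": "Europe (Ireland)",
    "eu-west-2": "Europe (London)",
    "eu-west-3": "Europe (Paris)",
    "ap-south-1": "Asia Pacific (Mumbai)",
    "ap-southeast-1": "Asia Pacific (Singapore)",
    "ap-southeast-2": "Asia Pacific (Sydney)",
    "ap-northeast-1": "Asia Pacific (Tokyo)",
    "ap-northeast-2": "Asia Pacific (Seoul)",
    "us-east-1": "US East (N. Virginia)",
    "us-east-2": "US East (Ohio)",
    "us-west-2": "US West (Oregon)",
    "ca-central-1": "Canada (Central)",
    "sa-east-1": "South America (São Paulo)",
}


def is_supported_region(region_code):
    """Return True if the region code appears in the table above."""
    return region_code.strip().lower() in MWAA_REGIONS


print(is_supported_region("eu-west-1"))
print(is_supported_region("us-west-1"))
```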

Accelerated AWS Airflow Deployment

AWS Airflow deployments are now much faster and easier. Once your account is created, you can immediately start deploying DAGs (Directed Acyclic Graphs) directly to your Airflow environment. You can deploy from AWS CloudFormation, the AWS SDK, the AWS Management Console, or the AWS CLI. You don't need to wait for anyone to provision the infrastructure or gather development resources.

Better Logging

If you are storing logs locally (which can cause the virtual machine to crash) or are still looking for a log storage solution, MWAA has it covered. MWAA automatically sets up the entire logging system and uses Amazon CloudWatch for logging. This saves developers from manually configuring a CloudWatch agent on every instance to stream the logs to CloudWatch. Manual setup is error-prone, since you must make sure every log group is set up properly, and MWAA's automatic setup removes that burden.

Built-in Airflow Security

MWAA gives you confidence that your workloads are secure by default. All data is automatically encrypted with the AWS Key Management Service (KMS). Single Sign-On (SSO) adds to this, since users sign in through SSO when they view a workflow execution or schedule one. The Amazon Virtual Private Cloud (VPC) offers an isolated and secure environment in which the workers run in the cloud. Role-based authentication and authorization for the Apache Airflow user interface are controlled through AWS Identity and Access Management (IAM).

Operational Costs Reduced

Amazon Managed Workflows brings down the engineering overhead and helps reduce overall operational costs through quick and easy deployment, on-demand monitoring, and less manual input for orchestrating data pipelines end to end.

How does it Work?

Let us discuss the working mechanism of Amazon Managed Workflows for Apache Airflow (MWAA).

If you are already working with Apache Airflow, you will find that transitioning to Amazon Managed Workflows for Apache Airflow is straightforward. You write the Directed Acyclic Graphs (DAGs) in Python, and MWAA uses those DAGs to orchestrate and schedule the workflows your team creates.

To get started with Amazon Managed Workflows, assign an S3 bucket to it. This is where the Python dependencies list, DAGs, and plugins are stored. Then upload your files to the bucket, either manually or through a code pipeline, to describe and automate your ETL processes. Finally, you can start to schedule, run, and monitor the DAGs through the Airflow user interface, the SDK, or the CLI.
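The bucket layout can be planned before anything is uploaded. The sketch below maps local files to S3 locations under one commonly used layout: DAG files under `dags/`, plugins in `plugins.zip`, and dependencies in `requirements.txt`. These paths are assumptions (they are configured per environment), the bucket name `example-mwaa-bucket` and the helper `plan_uploads` are hypothetical, and the actual upload (for example with the AWS CLI or an SDK) is deliberately left out.

```python
from pathlib import PurePosixPath


def plan_uploads(local_files, bucket="example-mwaa-bucket"):
    """Map local file paths to the S3 URIs they would be uploaded to.

    Assumed layout: DAGs under dags/, plus plugins.zip and requirements.txt
    at the bucket root. Files of any other kind are skipped.
    """
    plan = {}
    for name in local_files:
        path = PurePosixPath(name)
        if path.name in ("requirements.txt", "plugins.zip"):
            key = path.name
        elif path.suffix == ".py":
            key = f"dags/{path.name}"
        else:
            continue  # not part of the assumed MWAA layout
        plan[name] = f"s3://{bucket}/{key}"
    return plan


files = ["src/etl_dag.py", "requirements.txt", "build/plugins.zip", "README.md"]
for local, remote in plan_uploads(files).items():
    print(f"{local} -> {remote}")
```

Separating the planning step from the upload makes it easy to review in a code pipeline exactly which files would change in the bucket.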

When to use MWAA?

You might be wondering when Amazon Managed Workflows for Apache Airflow should be used, and whether it is really the right fit for your products or brand.

Keep the limitations of MWAA in mind when comparing it with a self-managed Airflow system. At the time of writing, MWAA is limited to Airflow version 2.0.2, whereas open-source Airflow is at version 2.1.4. You will find less flexibility with any managed service, but the trade-off is reduced cost. Consider, for example, what scaling out a larger self-managed system would involve, including hiring more employees just to manage its operations.

Summing up, if you want to adopt Apache Airflow for the first time, or are looking for ways to automate workflows already orchestrated with Airflow, MWAA is the option to go for.

AWS Airflow vs AWS Glue

Let us discuss in detail how AWS Airflow and AWS Glue differ:

AWS Airflow is a platform for programmatically authoring, scheduling, and monitoring data pipelines, where the workflows are defined as directed acyclic graphs (DAGs) of tasks. You can execute tasks on an array of workers, and the rich user interface makes it easy to visualize the data pipelines running in production, monitor task progress, and troubleshoot issues when required.

AWS Glue, by contrast, is a fully managed extract, transform, and load (ETL) service that customers can easily integrate into their architecture to prepare and load data for analytics.

Below are a few features offered by AWS Airflow:

Sophisticated: The data pipelines created with AWS Airflow are crisp and clear-cut. You can parameterize scripts using the powerful Jinja templating engine, which is built into the core of Airflow.

Dynamic: With AWS Airflow, the data pipelines are configured as Python code which offers flexible and dynamic pipeline generation.

Extendible: You can define custom operators and executors, and extend the library to fit your environment and requirements.

Below are a few features offered by AWS Glue:

Easy: AWS Glue offers fully managed ETL, which makes it easy to automate building, maintaining, and running ETL jobs. It finds the data sources, recognizes the data formats, and recommends schemas and transformations. It automatically generates the code required for executing the data transformations and loading processes.

Integrated: AWS Glue integrates with a wide range of AWS services.

Serverless: AWS Glue is serverless, so there is no infrastructure to provision or manage. It handles the configuration, provisioning, and scaling required to run the ETL jobs. You are charged only for the resources used while running the jobs.

Summing up, while AWS Airflow is categorized as a tool for Workflow Managers, AWS Glue is categorized as a Big Data Tool.

Pricing

The pricing structure of Amazon Managed Workflows for Apache Airflow (MWAA) is discussed in detail below. You are charged only for what you use, with no additional or hidden upfront fees. You pay for the time the AWS Airflow environment is running, plus any additional auto-scaling that provides more worker or web server capacity.

AWS Airflow pricing has four components, as discussed below:

Environment Pricing: You are charged for hourly instance usage of the Managed Workflows environment, billed at one-second resolution. The fees vary depending on the size of the environment.

Additional Worker Instance Pricing: You are charged for hourly instance usage, billed at one-second resolution. The fees vary depending on the size of the environment. If you opt for auto-scaling, you are charged for the additional worker instances used, depending on the task load of the Managed Workflows environment.

Additional Scheduler Instance Pricing: You are charged for hourly instance usage. If you opt for more schedulers than are included with your Managed Workflows environment, you are charged for the additional scheduler instances used, depending on the availability of the environment.

Database Storage: You are charged per GB-month of storage consumed by the Managed Workflows database. You do not need to provision anything in advance.
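The four components add up to a simple linear estimate. The sketch below shows the arithmetic only. Every rate in it is a made-up placeholder, not an actual AWS price, and the names `Rates` and `estimate_monthly_cost` are hypothetical, so substitute the figures from the AWS pricing page for your region and environment size.

```python
from dataclasses import dataclass


@dataclass
class Rates:
    """Hourly and storage rates in USD. All values used below are placeholders."""
    environment_per_hour: float
    worker_per_hour: float
    scheduler_per_hour: float
    storage_per_gb_month: float


def estimate_monthly_cost(rates, env_hours, extra_worker_hours,
                          extra_scheduler_hours, storage_gb):
    """Sum the four pricing components for one month of usage."""
    total = (
        rates.environment_per_hour * env_hours
        + rates.worker_per_hour * extra_worker_hours
        + rates.scheduler_per_hour * extra_scheduler_hours
        + rates.storage_per_gb_month * storage_gb
    )
    return round(total, 2)


# Placeholder rates, chosen only to make the arithmetic easy to follow.
example_rates = Rates(
    environment_per_hour=0.50,
    worker_per_hour=0.25,
    scheduler_per_hour=0.25,
    storage_per_gb_month=0.125,
)

# 720 environment hours, 100 extra worker hours, no extra schedulers, 10 GB stored.
cost = estimate_monthly_cost(example_rates, 720, 100, 0, 10)
print(f"Estimated monthly cost: ${cost}")
```

With these placeholder numbers the environment itself dominates the bill, which is why the environment size is usually the first thing to get right.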

FAQs

Below are a few frequently asked questions about AWS Airflow:

Q: What Airflow plugins does the service support?

A: Amazon MWAA supports all of the 100+ Airflow community plugins developed to date, as well as custom plugins, which you add by placing them in the Amazon S3 bucket.

Q: Can Airflow be scheduled to run on AWS?

A: Yes. You can deploy and get started with Airflow in minutes using Amazon Managed Workflows for Apache Airflow.

Q: What does Amazon Managed Workflows for Apache Airflow manage on my behalf?

A: Amazon MWAA manages installing the software, setting up Airflow, and provisioning the infrastructure capacity (server instances and storage). It also provides easier user management and authorization through AWS Identity and Access Management (IAM) and Single Sign-On (SSO).

Conclusion

- AWS Airflow is a secure, reliable, and highly available managed workflow orchestration service where you can not only author workflows but also schedule and monitor them. You can use Airflow and Python to create workflows without the stress of managing the infrastructure for availability, scalability, and security.

- AWS Airflow deployments are now much faster and easier. Once your account is created, you can immediately start deploying DAGs (Directed Acyclic Graphs) directly to your Airflow environment.

- Amazon Managed Workflows brings down the engineering overhead and helps reduce overall operational costs through quick and easy deployment, on-demand monitoring, and less manual input for orchestrating data pipelines end to end.

- If you want to adopt Apache Airflow for the first time, or are looking for ways to automate workflows already orchestrated with Airflow, MWAA is the option to go for.