DynamoDB Streams

Overview

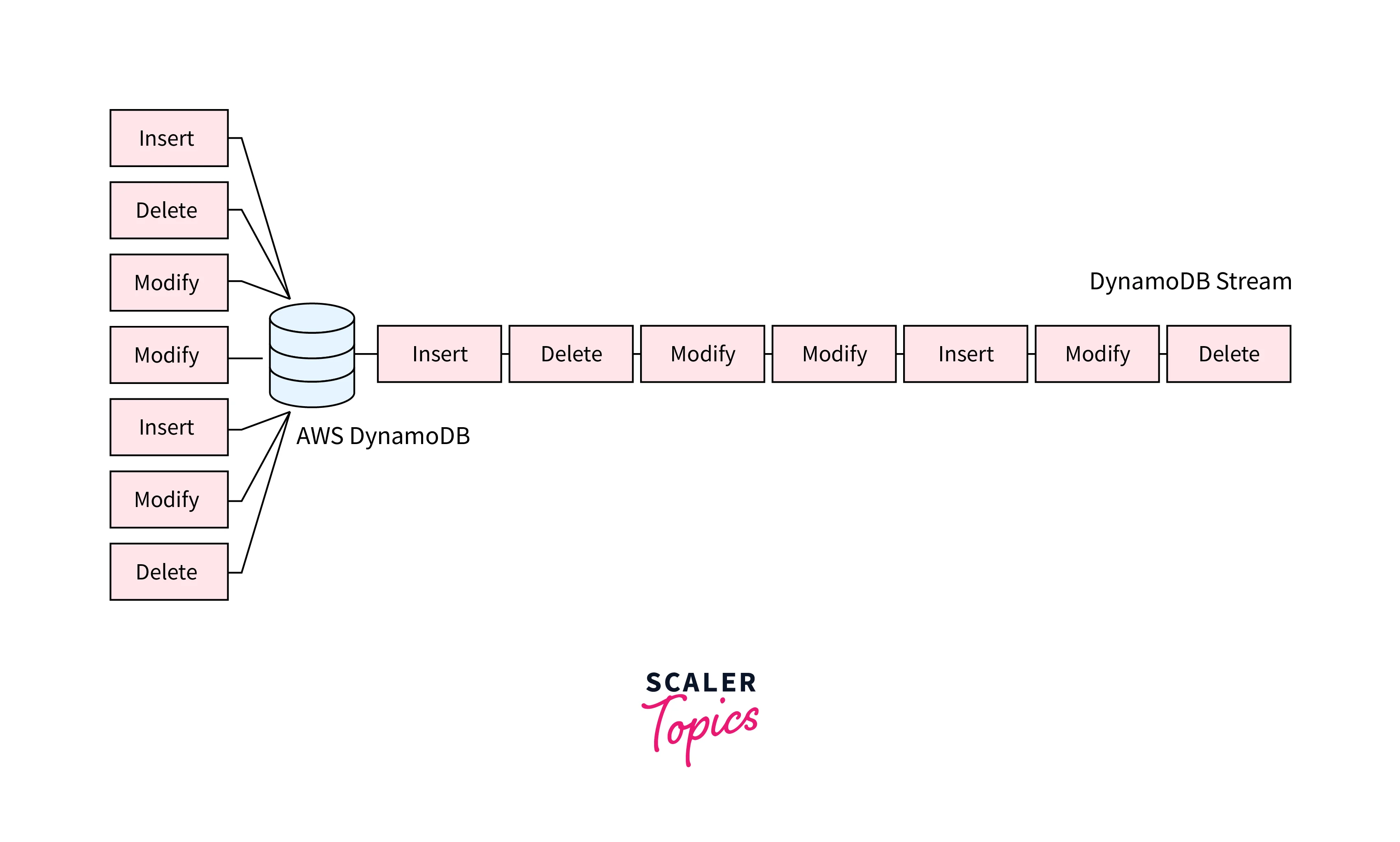

DynamoDB Streams is an AWS DynamoDB feature that captures item modification events (creates, updates, and deletes) in near real time and stores them in a queue. You can process these modification events without querying your table.

AWS DynamoDB

AWS DynamoDB is a managed NoSQL database service offered by AWS. It provides high availability and automatic scaling out of the box, and it also supports backups and encryption at rest.

You can learn more about AWS DynamoDB and its features from Scaler Topic's article, linked here.

AWS DynamoDB Streams

AWS DynamoDB Streams is a feature that lets you capture modifications to items in your DynamoDB tables and store these changes in a queue. Changes to a given item appear in the order in which they were made. The modifications can include insertion of new items, updates to existing items, or deletion of items. These snapshots of the modifications are called "change data capture" events and are stored in the queue for 24 hours. You can read from this queue and act on the events using AWS services such as AWS Lambda.

AWS DynamoDB Streams are useful when you need to process modification events in your DynamoDB table without putting strain on the table itself or consuming its allocated read throughput. Let's understand how DynamoDB Streams work and look at some use cases.

How Do DynamoDB Streams Work?

DynamoDB Streams can be enabled or disabled for a specific table using the AWS Console or the AWS CLI. Let's understand how DynamoDB Streams work once this feature is enabled for a table.

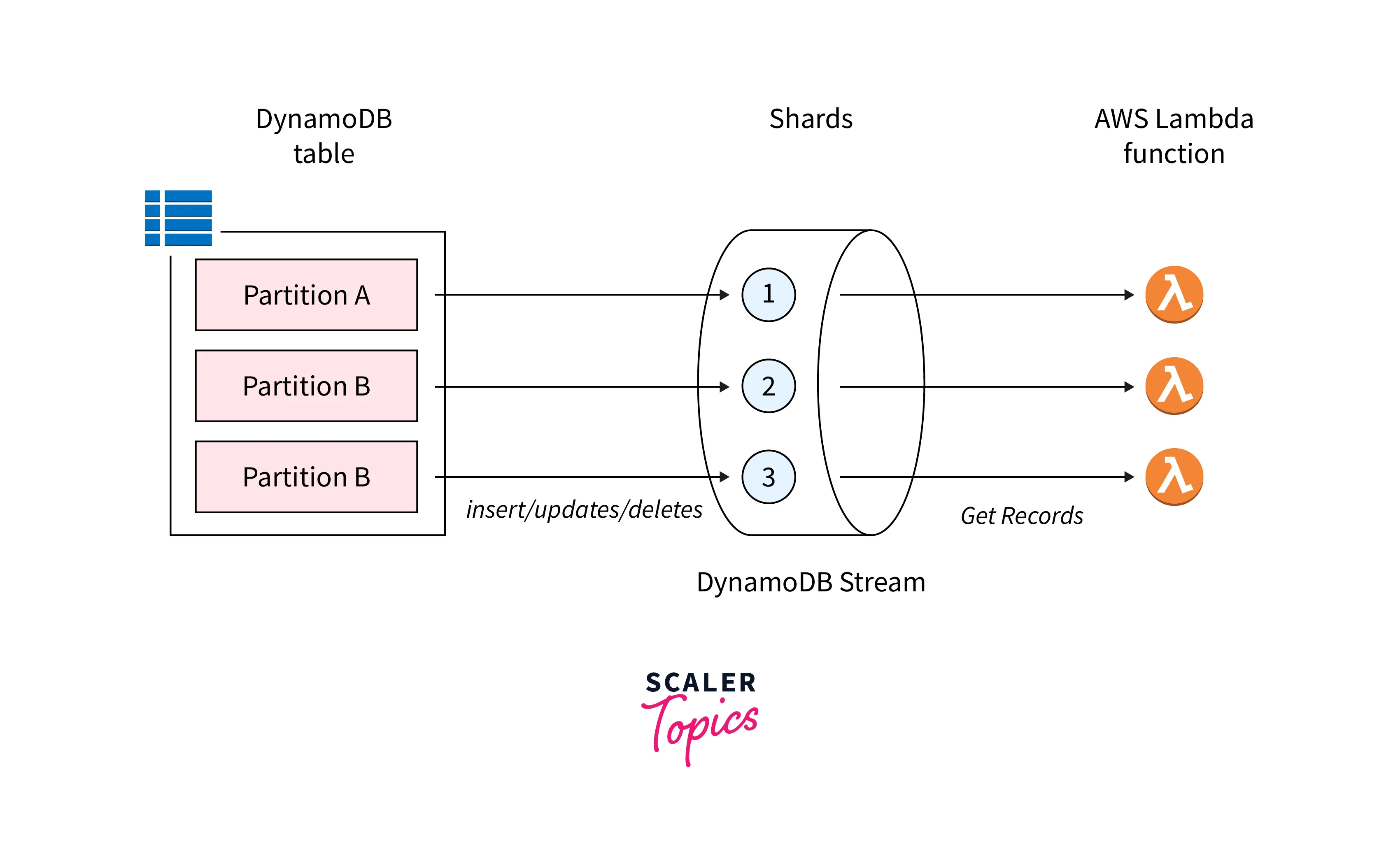

In DynamoDB, data is stored in logical containers called "partitions". Your table can have one or more partitions, depending on the amount of data it contains and the throughput it needs to serve.

When DynamoDB Streams is enabled, each partition is allocated at least one "shard". A shard is essentially a data queue that stores the item modification events along with some metadata about each event. Consumers such as AWS Lambda Functions can read from these shards and process the change data capture events.
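To make the shard model concrete, here is a minimal sketch of a consumer that reads one batch of records from every shard of a stream. It assumes `client` behaves like `boto3.client("dynamodbstreams")`. The function name `read_stream_once` and the `limit` default are illustrative choices, not part of any AWS API.

```python
def read_stream_once(client, stream_arn, limit=100):
    """Read one batch of records from every shard of a stream.

    `client` is expected to behave like boto3.client("dynamodbstreams").
    """
    records = []
    description = client.describe_stream(StreamArn=stream_arn)
    for shard in description["StreamDescription"]["Shards"]:
        # Ask for an iterator positioned at the oldest record still retained.
        iterator = client.get_shard_iterator(
            StreamArn=stream_arn,
            ShardId=shard["ShardId"],
            ShardIteratorType="TRIM_HORIZON",
        )["ShardIterator"]
        batch = client.get_records(ShardIterator=iterator, Limit=limit)
        records.extend(batch["Records"])
    return records
```

A long-running consumer would keep calling `get_records` with the `NextShardIterator` value from each response. When AWS Lambda is the consumer, this polling is handled for you and your function only receives the batches.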

DynamoDB Stream Concepts

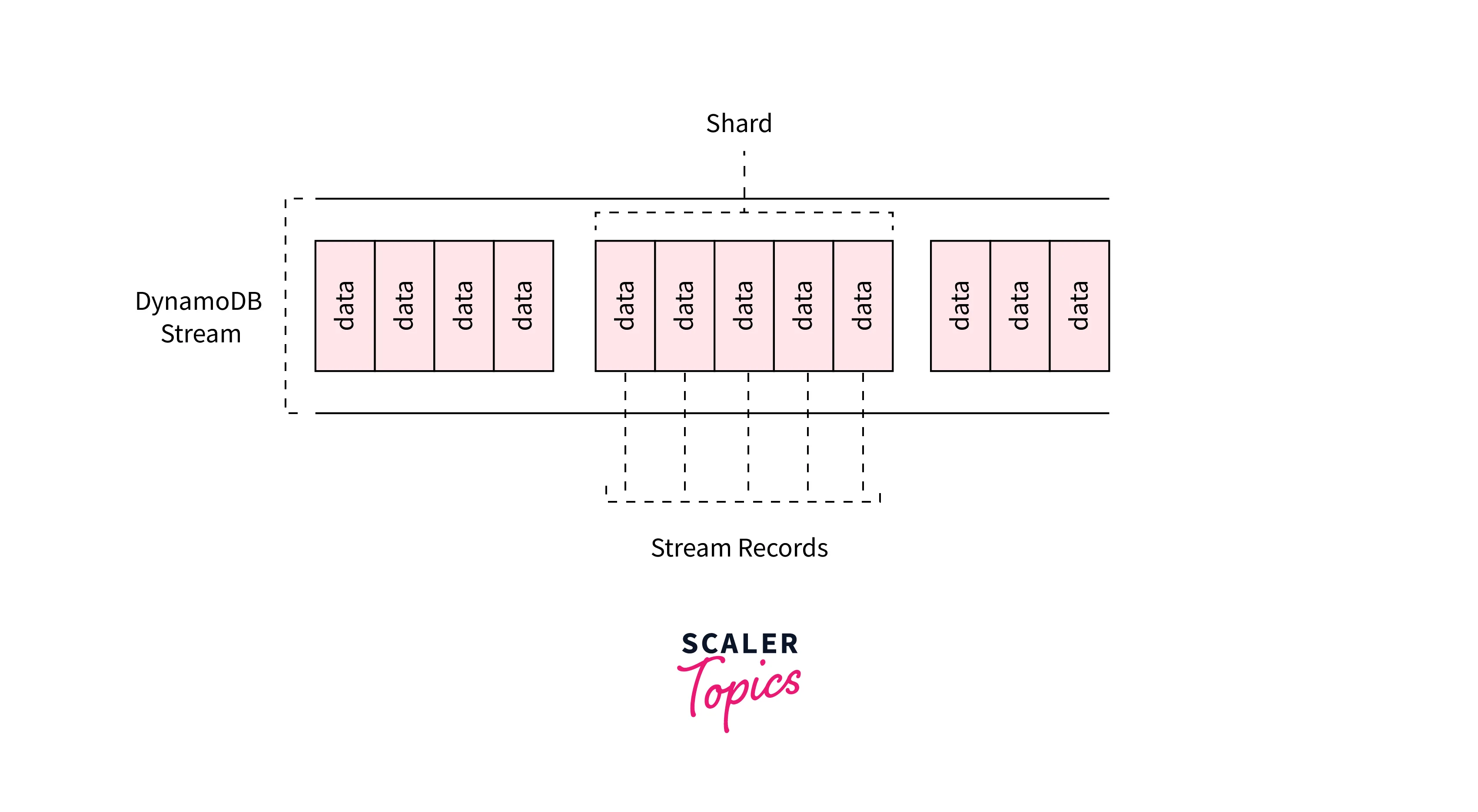

Item modification events are stored in shards. Let's dissect the internals of a shard and understand how an event is stored in DynamoDB Streams.

Each shard is a group of records, and each record represents a single item modification event. What each record contains depends on the view type you choose when you enable the stream. AWS gives you the following options:

| Option Name | Description |
|---|---|
| KEYS_ONLY | Capture only the key attributes of the modified item. |
| NEW_IMAGE | Capture the entire item, as it appears after it was modified. |
| OLD_IMAGE | Capture the entire item, as it appeared before it was modified. |
| NEW_AND_OLD_IMAGES | Capture both the new and the old images of the item. |

Each DynamoDB Stream is assigned a unique identifier (its Amazon Resource Name, or ARN) that you use to refer to the stream when reading from it or changing its settings.

Note: It is not possible to edit the options once a stream has been set up. If you need to make changes to a stream after it has been set up, you must disable the current stream and create a new one.
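As a rough sketch of how a view type might be chosen in code, the Python below builds the `StreamSpecification` argument accepted by boto3's `update_table` call. The helper names `build_stream_spec` and `enable_stream` are hypothetical, and `enable_stream` assumes that boto3 is installed and AWS credentials are configured.

```python
VALID_VIEW_TYPES = {"KEYS_ONLY", "NEW_IMAGE", "OLD_IMAGE", "NEW_AND_OLD_IMAGES"}


def build_stream_spec(view_type):
    """Return the StreamSpecification dict for the chosen view type."""
    if view_type not in VALID_VIEW_TYPES:
        raise ValueError(f"unknown stream view type: {view_type}")
    return {"StreamEnabled": True, "StreamViewType": view_type}


def enable_stream(table_name, view_type):
    """Enable a stream on an existing table (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so build_stream_spec works without boto3

    client = boto3.client("dynamodb")
    response = client.update_table(
        TableName=table_name,
        StreamSpecification=build_stream_spec(view_type),
    )
    # The ARN of the newly created stream is part of the table description.
    return response["TableDescription"]["LatestStreamArn"]
```

For example, `enable_stream("GameActions", "NEW_AND_OLD_IMAGES")` would enable a stream on a table named `GameActions` (a made-up name) and return the stream's ARN.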

Let's take a look at an example record:

- The eventName tells us the type of event. This record is of the type INSERT. The other possible values are MODIFY and REMOVE (the value used for deletions).

- dynamodb.Keys tells us the primary key of the item that was inserted. In this example, the primary key is Username.

- dynamodb.NewImage tells us the attributes of the item that was inserted. This example's item has three attributes: Username, Message, and Timestamp.
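The record below is a hand-written illustration that matches the fields described above. It is not output captured from a real table, and every value in it is made up. The helper `plain` is a hypothetical name for a small converter that only understands the string (`S`) and number (`N`) attribute types.

```python
import json

# Illustrative stream record; all values are invented for this example.
RECORD_JSON = """
{
  "eventID": "1",
  "eventName": "INSERT",
  "eventSource": "aws:dynamodb",
  "dynamodb": {
    "Keys": {"Username": {"S": "alice"}},
    "NewImage": {
      "Username": {"S": "alice"},
      "Message": {"S": "Hello, world"},
      "Timestamp": {"N": "1700000000"}
    },
    "StreamViewType": "NEW_IMAGE"
  }
}
"""


def plain(image):
    """Convert typed attributes such as {"S": "x"} to plain Python values."""
    result = {}
    for name, typed in image.items():
        if "S" in typed:
            result[name] = typed["S"]
        elif "N" in typed:
            number = typed["N"]  # DynamoDB sends numbers as strings
            result[name] = int(number) if number.isdigit() else float(number)
        else:
            raise ValueError(f"unsupported attribute type for {name}")
    return result


record = json.loads(RECORD_JSON)
print(record["eventName"])                      # INSERT
print(plain(record["dynamodb"]["Keys"]))        # {'Username': 'alice'}
print(sorted(record["dynamodb"]["NewImage"]))   # ['Message', 'Timestamp', 'Username']
```

In real code, boto3's `TypeDeserializer` (in `boto3.dynamodb.types`) covers the full set of attribute types, so a hand-rolled converter like this is only useful for illustration.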

DynamoDB Stream Use Cases

DynamoDB Streams have many use cases, such as:

Data Aggregation

- You can use DynamoDB Streams to aggregate data from item modification events and derive analytics out of these aggregations.

- If you use an AWS Lambda Function to perform the aggregation from event data instead of querying the table, you save on read throughput and avoid putting strain on the DynamoDB table.

- For example, suppose you have a mobile game where thousands of user actions happen every second and these actions are stored in a DynamoDB table. If you need to derive metrics for these actions, you can process the modification events in batches and store the aggregated metrics in a separate table.
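The aggregation idea could look roughly like the Lambda handler below. It assumes the stream uses a view type that includes the new image and that each item has `PlayerId` and `Action` string attributes. Both attribute names are hypothetical, since the original example does not specify a schema.

```python
from collections import Counter


def aggregate_actions(event):
    """Count inserted actions per (player, action) pair in one Lambda batch."""
    counts = Counter()
    for record in event.get("Records", []):
        if record["eventName"] != "INSERT":
            continue  # only newly recorded actions are counted in this sketch
        image = record["dynamodb"].get("NewImage", {})
        player = image.get("PlayerId", {}).get("S")
        action = image.get("Action", {}).get("S")
        if player and action:
            counts[(player, action)] += 1
    return counts


def handler(event, context):
    """Lambda entry point: aggregate the batch and return the totals."""
    counts = aggregate_actions(event)
    # Persisting the totals to a separate metrics table is left out here.
    return {f"{player}:{action}": n for (player, action), n in counts.items()}
```

Because the handler works only from the event payload, it never reads from the source table.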

Data Replication

- DynamoDB Streams can also be used to provide near real-time data replication to other tables in the same AWS Region, a different AWS Region, or even a different AWS account.

- You can also replicate data to other destinations like AWS S3 or AWS RDS based on your own criteria and the event type. This again enables you to perform operations on table data without needing to query the table.
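One way to sketch table-to-table replication is to translate each stream record into the write that mirrors it on the replica. The function name `to_replica_operation` is hypothetical, and the sketch assumes the stream's view type includes the new image.

```python
def to_replica_operation(record):
    """Map a stream record to the write that mirrors it on a replica table."""
    change = record["dynamodb"]
    event_name = record["eventName"]
    if event_name in ("INSERT", "MODIFY"):
        # Both cases overwrite the replica item with the latest image.
        return {"PutRequest": {"Item": change["NewImage"]}}
    if event_name == "REMOVE":
        # DynamoDB Streams reports deletions with the event name REMOVE.
        return {"DeleteRequest": {"Key": change["Keys"]}}
    raise ValueError(f"unexpected event name: {event_name}")
```

The returned dictionaries follow the request shape used by the low-level `batch_write_item` call, so a consumer could group them into batches and send them to the replica table.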

Search Index Optimization

- You can use DynamoDB Streams to create optimized search indices and make your data easier to query.

- Whenever new items are inserted into the table, these can be added to the search index using an AWS Lambda Function. Similarly, when items are deleted from the table, these can be removed from the search index.

Archival Of Items With TTL

- Another interesting use case of DynamoDB Streams is archiving items deleted due to Time-To-Live (TTL) expiry.

- TTL is a feature that automatically deletes items based on a timestamp attribute that you configure on the table. For example, you can create an attribute called ExpirationTime and store a timestamp (in Unix epoch seconds) that is one week after the creation time. Once that time has passed, DynamoDB deletes the item automatically in the background; the deletion usually happens some time after expiry rather than at the exact moment.

- You can use DynamoDB Streams to capture these delete events and archive the deleted items to AWS S3 or another data store.
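The sketch below shows both halves of this pattern: computing an `ExpirationTime` value one week ahead, and picking TTL deletions out of a batch of stream records. It relies on the fact that deletions performed by TTL carry a `userIdentity` field naming the DynamoDB service. It also assumes the stream's view type includes the old image. All function names are hypothetical.

```python
import time

ONE_WEEK_SECONDS = 7 * 24 * 60 * 60


def expiration_time(created_at=None):
    """Return a TTL value (Unix epoch seconds) one week after creation."""
    created = int(time.time()) if created_at is None else int(created_at)
    return created + ONE_WEEK_SECONDS


def is_ttl_delete(record):
    """True when a stream record is a deletion performed by the TTL process."""
    identity = record.get("userIdentity") or {}
    return (
        record.get("eventName") == "REMOVE"
        and identity.get("type") == "Service"
        and identity.get("principalId") == "dynamodb.amazonaws.com"
    )


def items_to_archive(event):
    """Collect the old images of TTL-deleted items from one Lambda batch."""
    return [
        record["dynamodb"]["OldImage"]
        for record in event.get("Records", [])
        if is_ttl_delete(record) and "OldImage" in record["dynamodb"]
    ]
```

A Lambda handler could pass the result of `items_to_archive` to whatever writes the archive, for example an upload to S3. Deletions made by your own application are skipped because they carry no service identity.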

Conclusion

- DynamoDB Streams are a way to capture item modification events (like create, update, or delete) for an AWS DynamoDB table.

- DynamoDB Streams can be enabled or disabled for a table, and you can choose what information (only keys, old item, new item, or both old and new items) you want to capture in the DynamoDB Stream queue.

- Each DynamoDB table partition is allocated at least one DynamoDB Stream shard. A shard contains the modification events or records.

- DynamoDB Streams have many use cases like data aggregation and data replication.

- They can also be used to create optimized search indices and archive items that are deleted due to TTL expiry.