Azure Blob Storage Configuration

Overview

Azure Blob Storage is a key service for businesses and individuals that need to store massive amounts of unstructured data, such as text or binary data, in the cloud. Because it handles data ranging from a few kilobytes to exabytes, knowing how to configure it well matters. This article explains what Azure Blob Storage is and how to configure it to manage, replicate, and price stored data effectively.

What is Azure Blob Storage?

Azure Blob Storage is Microsoft Azure's scalable cloud storage service for storing and managing large amounts of unstructured data, such as text, binary data, documents, and media files. Within a storage account, you create containers (roughly analogous to folders) that hold blobs (files), and you can configure access, management, and pricing options to suit each workload.

Features of Azure Blob Storage:

- Scalability: Azure Blob Storage scales up or down with an organization's storage needs, so it pays only for the capacity it actually uses.

- Accessibility: Data can be reached securely from anywhere over HTTP or HTTPS, through the Azure Storage REST API, Azure PowerShell, Azure CLI, or client libraries, so it can be analyzed and modified from any location.

- Security: Role-based access control (RBAC) and authentication through Azure AD (now Microsoft Entra ID) protect data against unauthorized access.

- Lifecycle Management: Lifecycle management policies move data between tiers or delete it according to usage or archival rules.

- Automated Data Movement: Enables automatic transitioning of data across different storage tiers based on policies to ensure cost-effectiveness while meeting data accessibility requirements.

Configure Blob Storage and Blob access tiers

The first step in configuring Azure Blob Storage is creating the storage account and choosing an appropriate access tier. Azure Blob Storage offers several access tiers that trade off storage cost against access cost and latency:

Hot Tier

The Hot tier is designed for data that is accessed frequently and needs low-latency reads and writes, such as database backups, content for web applications, and data used in compute scenarios. It has the highest storage cost per gigabyte of the three tiers but the lowest access and transaction costs, which makes it the most economical choice for data that is read and modified often and gives the best performance for workloads that need quick, frequent retrieval and modification.

Cool Tier

The Cool tier is for data that is accessed infrequently and stored for at least 30 days. It suits short-term backup and recovery, older datasets needed for periodic analysis, and archives for specific projects. Cool-tier data is still returned within milliseconds, but with slightly lower availability than the Hot tier. Storage costs are lower than in the Hot tier while access and transaction costs are higher, so the Cool tier is cost-effective for data that is read less often but must remain readily available for operational workflows.

Archive Tier

The Archive tier is for data that is rarely accessed and kept for long-term retention of at least 180 days. It has the lowest storage cost of the Blob Storage tiers but the highest data retrieval costs. Archived blobs are offline: before they can be read, they must be rehydrated to the Hot or Cool tier, which can take several hours. The tier is typically used for data kept to meet regulatory archival requirements or needed only in rare cases, so that even dormant datasets can be stored securely and cheaply for long periods.

Compare access tiers:

Here's a table that provides a comparison between the Hot, Cool, and Archive tiers in Azure Blob Storage:

| Feature | Hot Tier | Cool Tier | Archive Tier |
|---|---|---|---|
| Availability | 99.9% | 99% | Offline |
| Availability (RA-GRS reads) | 99.99% | 99.9% | Offline |
| Latency (time to first byte) | Milliseconds | Milliseconds | Hours |
| Minimum storage duration | N/A | 30 days | 180 days |
| Usage costs | Higher storage costs, lower access and transaction costs | Lower storage costs, higher access and transaction costs | Lowest storage costs, highest access and transaction costs |

Configure the blob access tier:

In the Azure portal, you can set the default access tier for a storage account and change the tier of individual blobs. The account default can be Hot or Cool; the Archive tier is set on individual blobs. You can change a tier at any time as your needs evolve, but deleting a blob or moving it out of the Cool or Archive tier before its minimum storage duration incurs an early deletion charge. Choosing a tier that matches how the data is used keeps it accessible while keeping storage costs down.
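The minimum storage durations in the comparison table determine the early deletion charge: a blob that leaves the Cool or Archive tier early is billed for the remaining days of that minimum. The sketch below models only that rule plus the account-default restriction. The function and constant names are hypothetical, and it returns billed days rather than money, since the charge in currency depends on the tier's storage price.

```python
# Minimum storage durations (days) from the access tier comparison table.
MIN_STORAGE_DAYS = {"Hot": 0, "Cool": 30, "Archive": 180}

# Tiers a storage account can use as its default; Archive is per-blob only.
ACCOUNT_DEFAULT_TIERS = {"Hot", "Cool"}


def early_deletion_days(tier: str, days_in_tier: int) -> int:
    """Days of storage still billed if a blob is deleted or moved out of
    `tier` after `days_in_tier` days (0 once the minimum has been met)."""
    if tier not in MIN_STORAGE_DAYS:
        raise ValueError(f"unknown tier: {tier!r}")
    if days_in_tier < 0:
        raise ValueError("days_in_tier must be non-negative")
    return max(0, MIN_STORAGE_DAYS[tier] - days_in_tier)


def validate_account_default_tier(tier: str) -> None:
    """Raise ValueError if `tier` cannot be a storage account's default tier."""
    if tier not in ACCOUNT_DEFAULT_TIERS:
        raise ValueError(f"{tier!r} cannot be an account default tier; use Hot or Cool")
```

For example, `early_deletion_days("Archive", 45)` returns 135: a blob rehydrated or deleted after 45 days in Archive is still billed for 135 more days of Archive storage.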

Configure Blob lifecycle management rules

Every dataset has its own lifecycle. Early on, users often access some of the data heavily, but overall access usually drops sharply as the data ages. Some data sits idle in the cloud and is rarely read after it is stored, some expires within days or months of creation, and some is actively read and modified throughout its lifetime.

Azure Blob Storage supports lifecycle management through a rule-based policy for general-purpose v2 (GPv2) and Blob Storage accounts. With lifecycle policy rules, you can move data to the appropriate access tier as it ages and set expiration rules that delete data at the end of its lifecycle.

Key Aspects of Lifecycle Management

Lifecycle management policy rules serve several purposes:

- Data Transitioning: Transition blobs between tiers like Hot to Cool or Cool to Archive, balancing between data accessibility and cost efficiency.

- Data Expiry: Set provisions to automatically delete blobs once they reach the end of their lifecycle.

- Rule Execution: Policy rules are defined at the storage account level and run once a day.

- Scoped Application: These rules can be directed at specific containers or a selected group of blobs.

Establishing Lifecycle Management Policy Rules

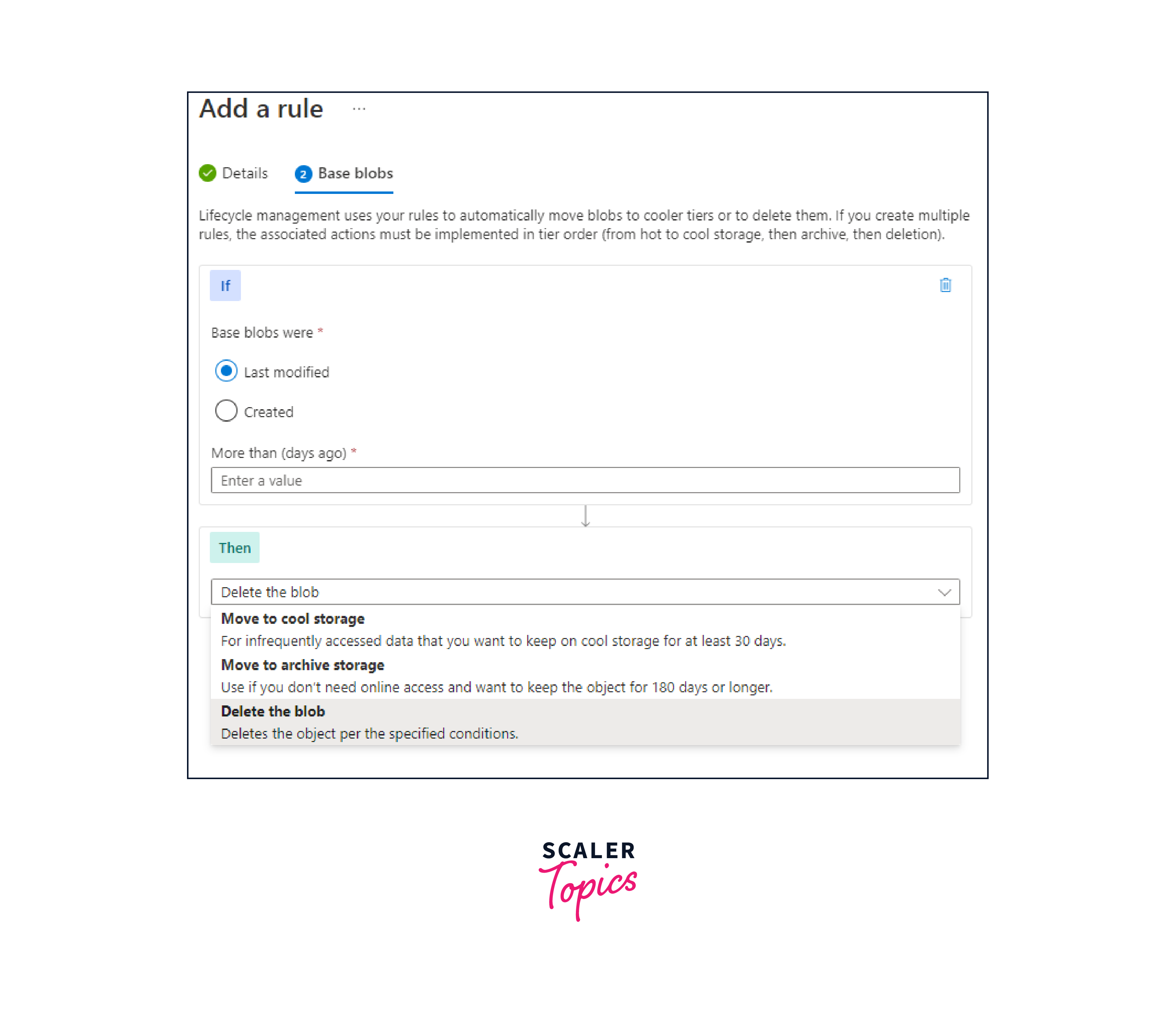

In the Azure portal, you create a lifecycle management rule for your storage account by configuring several settings. Each rule uses If-Then conditions to transition or expire data according to your requirements.

- If: The If clause defines the evaluation criteria for the rule. Use it to set the time span that applies to the blob data; lifecycle management checks whether the data was last accessed or modified more than that many days ago.

  - More than (days ago): The number of days used in the evaluation condition.

- Then: The Then clause defines the action taken when the If clause evaluates to true. Use it to choose how lifecycle management transitions or deletes the blob data:

  - Move to cool storage: The blob data transitions to the Cool tier.

  - Move to archive storage: The blob data transitions to the Archive tier.

  - Delete the blob: The blob data is deleted.
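The If-Then logic can be modeled in a few lines. This is a simplified sketch, not Azure's implementation: it assumes the rule keys on days since last modification, ignores the blob's current tier, and resolves overlaps the way lifecycle management documents it, by applying the least expensive matching action (delete, then archive, then cool). `LifecycleRule` and `evaluate` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional

# When several actions match the same blob, lifecycle management applies the
# least expensive one: delete, then tierToArchive, then tierToCool.
ACTION_PRECEDENCE = ("delete", "tierToArchive", "tierToCool")


@dataclass
class LifecycleRule:
    """Hypothetical model of one rule: each field is a 'More than (days ago)'
    threshold for a Then action, or None if that action is not configured."""
    cool_after_days: Optional[int] = None
    archive_after_days: Optional[int] = None
    delete_after_days: Optional[int] = None


def evaluate(rule: LifecycleRule, days_since_modified: int) -> Optional[str]:
    """Return the action one daily run would take for a blob, or None."""
    thresholds = {
        "tierToCool": rule.cool_after_days,
        "tierToArchive": rule.archive_after_days,
        "delete": rule.delete_after_days,
    }
    for action in ACTION_PRECEDENCE:
        threshold = thresholds[action]
        # If: the blob was last modified more than `threshold` days ago...
        if threshold is not None and days_since_modified > threshold:
            return action  # ...Then: take this action.
    return None
```

With `LifecycleRule(30, 90, 365)`, a blob last modified 45 days ago evaluates to `"tierToCool"`, one modified 100 days ago to `"tierToArchive"`, and one modified 400 days ago to `"delete"`.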

Practical Scenarios:

Imagine a business that generates daily operational logs. The logs are crucial for diagnostics in the first few weeks, but their relevance drops over the following months. With lifecycle management rules, you can move logs older than 30 days to the Cool tier and logs older than 90 days to the Archive tier. Logs older than a year, which have likely lost all operational relevance, can be deleted automatically.

Used this way, lifecycle management keeps data in the tier that matches its usage pattern, so it stays accessible when needed while storage remains cost-effective.
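Behind the portal settings, a lifecycle policy is a JSON document. The sketch below builds one for the log scenario above. It assumes the logs are block blobs in a container named `logs` (in a lifecycle policy, `prefixMatch` values begin with the container name); the rule name `log-retention` and the function name are hypothetical. The thresholds are measured from each blob's last modification time.

```python
import json


def log_retention_policy(prefix: str = "logs/") -> dict:
    """Lifecycle policy for the log scenario: Cool after 30 days, Archive
    after 90 days, delete after 365 days since last modification."""
    return {
        "rules": [
            {
                "enabled": True,
                "name": "log-retention",  # hypothetical rule name
                "type": "Lifecycle",
                "definition": {
                    "filters": {
                        "blobTypes": ["blockBlob"],
                        "prefixMatch": [prefix],  # container name + optional path
                    },
                    "actions": {
                        "baseBlob": {
                            "tierToCool": {"daysAfterModificationGreaterThan": 30},
                            "tierToArchive": {"daysAfterModificationGreaterThan": 90},
                            "delete": {"daysAfterModificationGreaterThan": 365},
                        }
                    },
                },
            }
        ]
    }


if __name__ == "__main__":
    print(json.dumps(log_retention_policy(), indent=2))
```

The printed JSON could be saved as `policy.json` and applied with the Azure CLI, for example `az storage account management-policy create --account-name <account> --resource-group <group> --policy @policy.json`.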

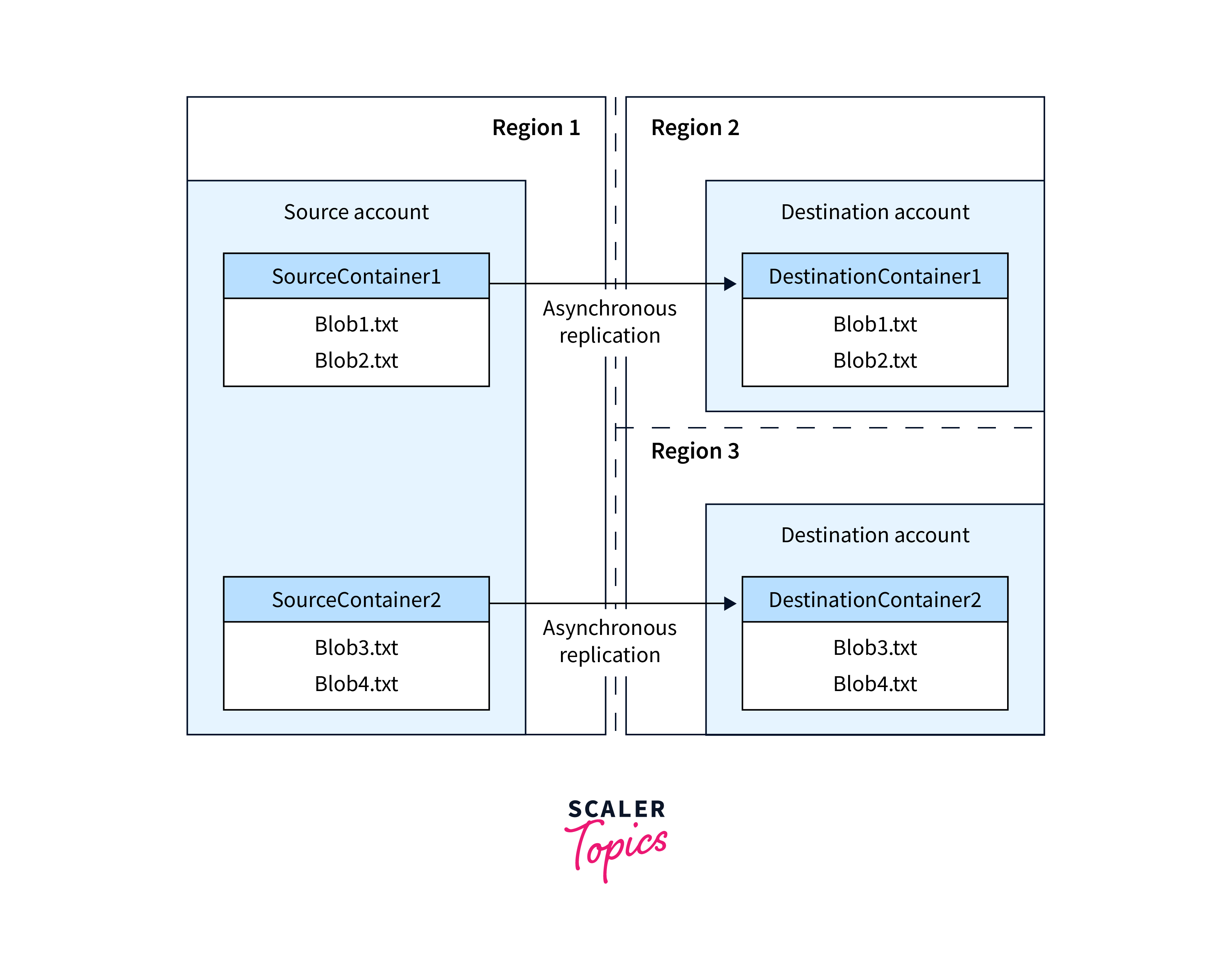

Configure Blob Object Replication

Object replication in Azure Blob Storage asynchronously copies block blobs from a container in a source storage account to a container in a destination account, according to the rules in a replication policy. During replication, the blob contents, the blob's metadata and properties, and any versions associated with the blob are copied from the source to the destination container.

Key Aspects of Blob Object Replication

When configuring blob object replication, keep the following points in mind:

- Blob versioning must be enabled on both the source and destination accounts, and change feed must be enabled on the source account.

- Blob snapshots are not supported in object replication, so snapshots in the source account are not replicated to the destination account.

- Object replication is supported when the source and destination accounts are in the Hot or Cool tier, and the two accounts can be in different tiers.

- You configure object replication by creating a replication policy that specifies the source and destination storage accounts, with one or more rules that pair a source container with a destination container.
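Before creating a replication policy, it helps to check the account-level prerequisites. The sketch below assumes you have already read each account's settings into a simple record; `AccountSettings` and `replication_blockers` are hypothetical names, and the checks cover only versioning on both accounts and change feed on the source account.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AccountSettings:
    """Hypothetical snapshot of the settings object replication depends on."""
    name: str
    versioning_enabled: bool
    change_feed_enabled: bool


def replication_blockers(source: AccountSettings, destination: AccountSettings) -> List[str]:
    """Return the unmet prerequisites; an empty list means these checks pass."""
    problems = []
    # Versioning is required on both sides of the replication policy.
    for role, account in (("source", source), ("destination", destination)):
        if not account.versioning_enabled:
            problems.append(f"{role} account {account.name}: enable blob versioning")
    # Change feed is required only on the source account.
    if not source.change_feed_enabled:
        problems.append(f"source account {source.name}: enable change feed")
    return problems
```

For example, a source account with versioning and change feed enabled and a destination with only versioning enabled yields an empty list.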

Considerations in Configuring Blob Object Replication

Blob object replication is not just a mechanism for data copying but a strategy that can be tailored for various operational benefits:

- Latency Mitigation: Object replication lets clients read data from a region closer to them, which reduces latency for read requests.

- Efficiency in Compute Workloads: Compute workloads in different regions can process the same sets of block blobs.

- Optimized Data Distribution: Process or analyze data in one central location, then replicate only the results to other regions. This reduces bandwidth use and the compute resources needed in those regions.
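To replicate only processed results, a replication rule can filter blobs by name prefix. The sketch below is a simplified model of which blobs one rule would pick up under the constraints listed earlier: snapshots are skipped, blobs outside the Hot and Cool tiers are skipped, and a non-empty prefix list restricts names. `BlobInfo`, `blobs_to_replicate`, and the `results/` prefix are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass
class BlobInfo:
    """Hypothetical, simplified view of a blob in the source container."""
    name: str
    tier: str                       # "Hot", "Cool", or "Archive"
    snapshot: Optional[str] = None  # snapshot timestamp; None for a base blob


REPLICABLE_TIERS = {"Hot", "Cool"}


def blobs_to_replicate(blobs: Iterable[BlobInfo], prefixes: List[str]) -> List[str]:
    """Names of blobs a rule with these prefix filters would copy.
    An empty prefix list means no filter, so every eligible blob qualifies."""
    selected = []
    for blob in blobs:
        if blob.snapshot is not None:  # snapshots are not replicated
            continue
        if blob.tier not in REPLICABLE_TIERS:
            continue
        if prefixes and not any(blob.name.startswith(p) for p in prefixes):
            continue
        selected.append(blob.name)
    return selected
```

With a `results/` prefix, raw input files stay in the central region and only the analysis output is copied.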

Upload Blobs

Blobs can hold data of any type and in very large volumes. When you upload data to Azure Storage, you choose one of three blob types, block blob, append blob, or page blob, each suited to different use cases and scenarios.

Understanding Blob Types

- Block Blobs: Used in most Blob Storage scenarios, block blobs are made up of blocks of data that are assembled into a blob. They are optimal for storing text and binary data, such as files, images, and videos.

- Append Blobs: While append blobs also consist of data blocks like block blobs, they are optimized for append operations, making them a fitting choice for logging scenarios where data gradually accumulates.

- Page Blobs: Optimized for frequent random read and write operations, page blobs can be up to 8 TiB in size and are used by Azure Virtual Machines for operating system and data disks.
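The block blob model can be illustrated without any network calls: data is split into blocks, each block is staged under a Base64-encoded block ID (all IDs within one blob must have the same length), and committing a list of IDs assembles the blob in that order. The sketch below simulates that locally and adds a size check for page blobs, which are written in 512-byte pages. All names are hypothetical; with the Python SDK (`azure-storage-blob`), the corresponding real operations are `BlobClient.stage_block` and `BlobClient.commit_block_list`.

```python
import base64
from typing import List, Tuple

PAGE_SIZE = 512                     # page blobs are written in 512-byte pages
MAX_PAGE_BLOB_BYTES = 8 * 1024**4   # 8 TiB


def make_block_id(index: int, width: int = 6) -> str:
    """Base64 block ID; zero-padding keeps every ID in the blob the same length."""
    return base64.b64encode(f"{index:0{width}d}".encode("ascii")).decode("ascii")


def split_into_blocks(data: bytes, block_size: int) -> List[Tuple[str, bytes]]:
    """Split data into (block_id, chunk) pairs, as if staging each block."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    return [
        (make_block_id(i), data[start:start + block_size])
        for i, start in enumerate(range(0, len(data), block_size))
    ]


def commit(blocks: List[Tuple[str, bytes]], block_list: List[str]) -> bytes:
    """Assemble the blob from staged blocks in the order given by block_list."""
    staged = dict(blocks)
    return b"".join(staged[block_id] for block_id in block_list)


def is_valid_page_blob_size(size: int) -> bool:
    """True if size is a whole number of 512-byte pages and at most 8 TiB."""
    return 0 <= size <= MAX_PAGE_BLOB_BYTES and size % PAGE_SIZE == 0
```

Splitting the 22-byte string `b"hello block blob world"` into 5-byte blocks yields five blocks with equal-length IDs, and committing them in order reproduces the original bytes.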

Considerations for Blob Upload Tools

You can upload blobs with a variety of tools, each suited to different use cases:

- AzCopy: A command-line tool for Windows, Linux, and macOS that copies data to and from Blob Storage, between containers, and between storage accounts.

- Azure Data Box Disk: Suited to moving on-premises data into Blob Storage, especially for large datasets or when network bandwidth is limited. You order SSDs from Microsoft, copy your data onto them, and ship them back; Microsoft then uploads the data into Blob Storage.

- Azure Import/Export: A service for moving large volumes of data between your storage account and hard drives that you provide. It supports import jobs, which load data from your drives into Azure, and export jobs, in which Microsoft copies data from your storage account onto your drives and ships them back to you.

Blob Storage Pricing

Pricing in Azure Blob Storage is influenced by:

- Access Tiers: Each access tier has a different price for stored data and for operations.

- Data Retrieval and Transaction Costs: Charges for read and write operations and, in the Cool and Archive tiers, per-gigabyte data retrieval.

- Data Management Features: Utilizing features like lifecycle management or object replication may incur additional costs.
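These factors combine into a simple monthly estimate: storage per GB, operations (Azure publishes transaction prices per 10,000 operations), and per-GB retrieval where the tier charges it. The sketch below is a rough model under those assumptions; it leaves out data transfer, replication, lifecycle management, and early deletion charges, and every price in it is a placeholder you would replace with current rates for your region. `TierPrices` and `monthly_cost` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class TierPrices:
    """Placeholder prices for one tier; not actual Azure rates."""
    storage_per_gb_month: float
    write_per_10k: float
    read_per_10k: float
    retrieval_per_gb: float  # 0 for Hot; charged for Cool and Archive


def monthly_cost(prices: TierPrices, stored_gb: float, writes: int,
                 reads: int, retrieved_gb: float) -> float:
    """Rough monthly cost: storage + transactions + data retrieval."""
    storage = stored_gb * prices.storage_per_gb_month
    transactions = (writes / 10_000) * prices.write_per_10k \
        + (reads / 10_000) * prices.read_per_10k
    retrieval = retrieved_gb * prices.retrieval_per_gb
    return storage + transactions + retrieval
```

With the made-up prices `TierPrices(0.02, 0.05, 0.004, 0.0)`, storing 1,000 GB with 20,000 writes and 100,000 reads comes to about 20.14 per month; running the same workload through each tier's prices shows where higher access costs outweigh cheaper storage.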

Understanding these factors helps you configure Blob Storage so that it is both efficient and cost-effective.

Conclusion

- Configuring Azure Blob Storage with storage tiers chosen for access frequency and retention needs lets businesses manage large volumes of data efficiently.

- Lifecycle management rules automate transitions between storage tiers and delete data that has expired, reducing cost while keeping data accessible.

- Blob object replication asynchronously copies blobs to another storage account, which can be in a different region, improving regional data access and keeping a secondary copy that can support disaster recovery plans.

- Together, suitable access tiers and well-designed lifecycle management rules let businesses use the most cost-effective storage for their specific use cases and data access patterns.