Azure Machine Learning Compute

Overview

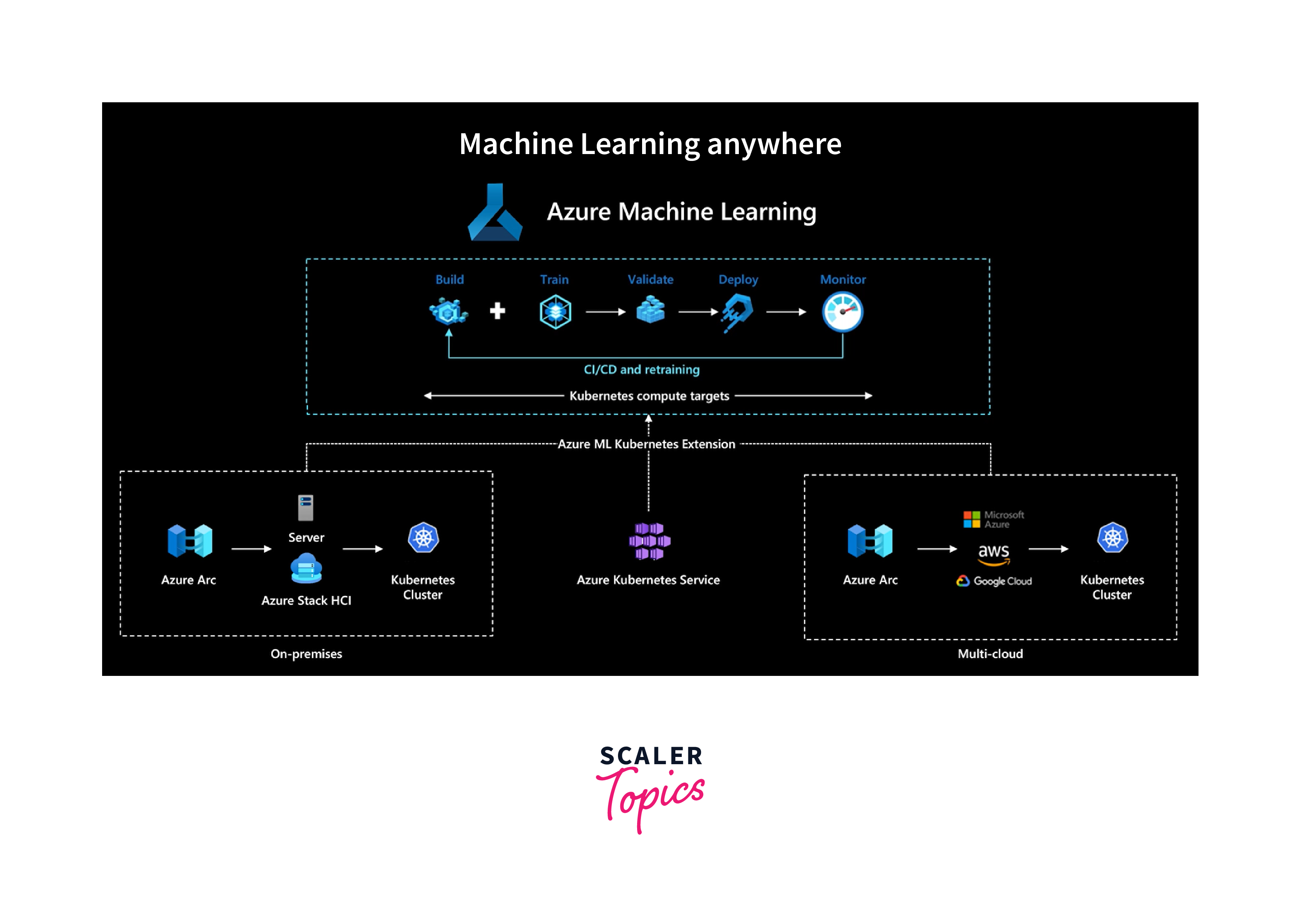

Azure Machine Learning Compute provides managed cloud compute for training and deploying machine learning models. Microsoft Azure offers it as a flexible, scalable environment for running training workloads, with options ranging from CPU-based virtual machines to GPU-accelerated resources. Because compute is provisioned and managed directly from the Azure Machine Learning workspace, you can match resources to each workload and keep costs under control.

What is Azure Machine Learning Compute?

Azure Machine Learning Compute is a cloud-based service provided by Microsoft Azure that facilitates the training and deployment of machine learning models at scale. It offers CPU-based virtual machines, which are well-suited for traditional machine learning tasks, and GPU-based virtual machines that significantly accelerate the training of deep learning models. Azure Machine Learning Compute also supports distributed training across multiple nodes, enabling the handling of large datasets and complex model architectures. Managed directly from the Azure Machine Learning workspace, it provides an intuitive interface for provisioning and managing compute resources, allowing for seamless integration into your machine learning projects.
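
Distributed training across multiple nodes usually means data parallelism. Each node, identified by a rank, processes a disjoint shard of the dataset, and the nodes then combine their gradients. The sketch below shows only the sharding step in plain Python. `shard_indices`, `world_size`, and `rank` are hypothetical names, not an Azure Machine Learning API. Real jobs would normally rely on their framework's own distributed sampler (PyTorch's `DistributedSampler` works in a similar spirit).

```python
from typing import List


def shard_indices(num_examples: int, world_size: int, rank: int) -> List[int]:
    """Return the example indices one node processes in data-parallel training.

    Hypothetical helper: examples are dealt out round-robin, so the node with
    rank r gets indices r, r + world_size, r + 2 * world_size, ...
    """
    if world_size <= 0:
        raise ValueError("world_size must be positive")
    if not 0 <= rank < world_size:
        raise ValueError("rank must be in [0, world_size)")
    return list(range(rank, num_examples, world_size))


if __name__ == "__main__":
    # Ten examples spread over a three-node cluster: every index appears exactly once.
    for node_rank in range(3):
        print(node_rank, shard_indices(10, world_size=3, rank=node_rank))
```

Because the shards are disjoint and together cover the whole dataset, adding nodes reduces the work each node does per epoch. This is where the reduction in training time comes from.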

Types of Compute

Azure Machine Learning Compute offers a diverse range of compute options to cater to different aspects of the machine learning lifecycle:

Local Compute

Local Compute lets you use the computational resources of your local machine or your on-premises infrastructure. This option is valuable for initial experimentation, prototyping, and small-scale development tasks. It enables data scientists and developers to iterate quickly on their models without provisioning cloud resources, which makes it a cost-effective choice for early-stage development.

Compute Clusters

Compute Clusters are a feature of Azure Machine Learning Compute that provisions and manages virtual machines in the cloud on demand. These clusters are designed for distributed training, allowing you to parallelize the training process across multiple nodes. This is invaluable when working with large datasets or complex model architectures, as it can significantly reduce training times. A cluster scales automatically between a minimum and a maximum number of nodes that you configure. With a minimum of zero, it releases all nodes when idle, so you pay only while jobs are running.
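
The scaling behavior of a cluster can be modeled as a small decision function, sketched below under simplifying assumptions. The settings loosely mirror the minimum node count, maximum node count, and idle time before scale-down that you configure on a cluster. In the Python SDK v2 these correspond to the `min_instances`, `max_instances`, and `idle_time_before_scale_down` settings of `AmlCompute`. `ClusterScaleSettings` and `target_node_count` are hypothetical names, and the service's real scheduler is more involved.

```python
from dataclasses import dataclass


@dataclass
class ClusterScaleSettings:
    """Hypothetical mirror of a compute cluster's scale settings."""
    min_nodes: int
    max_nodes: int
    idle_seconds_before_scale_down: int


def target_node_count(settings: ClusterScaleSettings,
                      nodes_requested: int,
                      current_nodes: int,
                      seconds_idle: int) -> int:
    """Decide how many nodes the cluster should run (simplified model).

    - Queued jobs request nodes: grow to meet them, capped at max_nodes.
      Nodes already running are kept rather than released immediately.
    - No queued work and idle past the timeout: shrink back to min_nodes.
    - Otherwise keep the current size, so idle nodes stay warm until the timeout.
    """
    if nodes_requested > 0:
        wanted = max(current_nodes, nodes_requested, settings.min_nodes)
        return min(wanted, settings.max_nodes)
    if seconds_idle >= settings.idle_seconds_before_scale_down:
        return settings.min_nodes
    return max(settings.min_nodes, current_nodes)
```

With `min_nodes=0`, a cluster that has been idle past the timeout drops to zero nodes. That is the setting that keeps an unused cluster from accruing compute charges.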

Attached Compute

Attached Compute lets you use Azure resources or virtual machines that you have already provisioned. This option is useful when you have specialized infrastructure requirements or want to reuse existing resources. By attaching these resources to your Azure Machine Learning workspace, you can integrate them into your machine learning workflows. This protects existing infrastructure investments and keeps your workflows compatible with your current environment.

Inference Clusters

Inference Clusters are tailored for deploying machine learning models into production environments. They are optimized for serving predictions efficiently in real-time or batch-processing scenarios. Inference Clusters prioritize low latency and high throughput, so that deployed models can handle the demands of production traffic. This capability is crucial for applications such as recommendation systems, fraud detection, and image recognition, where timely and accurate predictions are essential.
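
Models deployed through Azure Machine Learning are typically served by an entry (scoring) script with two functions. `init()` runs once when the serving container starts and loads the model. `run()` is called for each request. Loading the model once, rather than on every request, is a large part of keeping latency low. The sketch below follows that shape using only the standard library. `PlaceholderModel`, its weights, and the `{"data": [...]}` request format are hypothetical stand-ins for a real trained model and payload.

```python
import json
from typing import Any, Dict, List, Optional


class PlaceholderModel:
    """Hypothetical stand-in for a trained model loaded from disk."""

    def __init__(self, weights: List[float], bias: float) -> None:
        self.weights = weights
        self.bias = bias

    def predict(self, rows: List[List[float]]) -> List[float]:
        # Linear score per row: bias + sum(w_i * x_i).
        return [self.bias + sum(w * x for w, x in zip(self.weights, row)) for row in rows]


# Populated once by init() and reused by every call to run().
model: Optional[PlaceholderModel] = None


def init() -> None:
    """Called once at container startup: load the model into memory."""
    global model
    model = PlaceholderModel(weights=[0.5, -1.0], bias=2.0)


def run(raw_data: str) -> Dict[str, Any]:
    """Called per request: parse the JSON body and score all rows in one call."""
    if model is None:
        raise RuntimeError("init() must be called before run()")
    rows = json.loads(raw_data)["data"]
    return {"predictions": model.predict(rows)}
```

Scoring every row of a request in a single `predict` call, instead of one call per row, is a simple way to raise throughput for batch-style requests.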

Training Compute Targets

In Azure Machine Learning, training compute targets refer to the specific resources used to execute the training process for machine learning models. These targets play a crucial role in determining the speed, scalability, and cost of the training process. Azure Machine Learning provides several options for training compute targets:

Azure Machine Learning Compute

Azure Machine Learning Compute is a managed service that offers a scalable and flexible environment for training machine learning models. It provides a range of virtual machine sizes and GPU options, allowing you to choose the resources that best match your computational requirements. With the ability to scale up or down based on the complexity of your model and dataset size, Azure Machine Learning Compute ensures that you have the necessary resources to train your models efficiently.

Azure Databricks

Azure Databricks is an Apache Spark-based analytics platform optimized for Azure. It provides a collaborative environment for building, training, and deploying machine learning models. Azure Machine Learning seamlessly integrates with Azure Databricks, allowing you to use Databricks clusters as compute targets for your training workloads. This integration combines the power of distributed computing with the flexibility of Azure Machine Learning, enabling you to tackle large-scale machine learning tasks with ease.

Azure Kubernetes Service (AKS)

For scenarios that require containerized model training or deployment, Azure Kubernetes Service (AKS) can be used as a compute target. AKS provides a managed Kubernetes cluster that simplifies the process of deploying, managing, and scaling containerized applications. By leveraging AKS, you can run your machine learning workloads in containers, ensuring consistency and reproducibility across different environments.

Synergy Between These Services

- Flexibility and Portability:

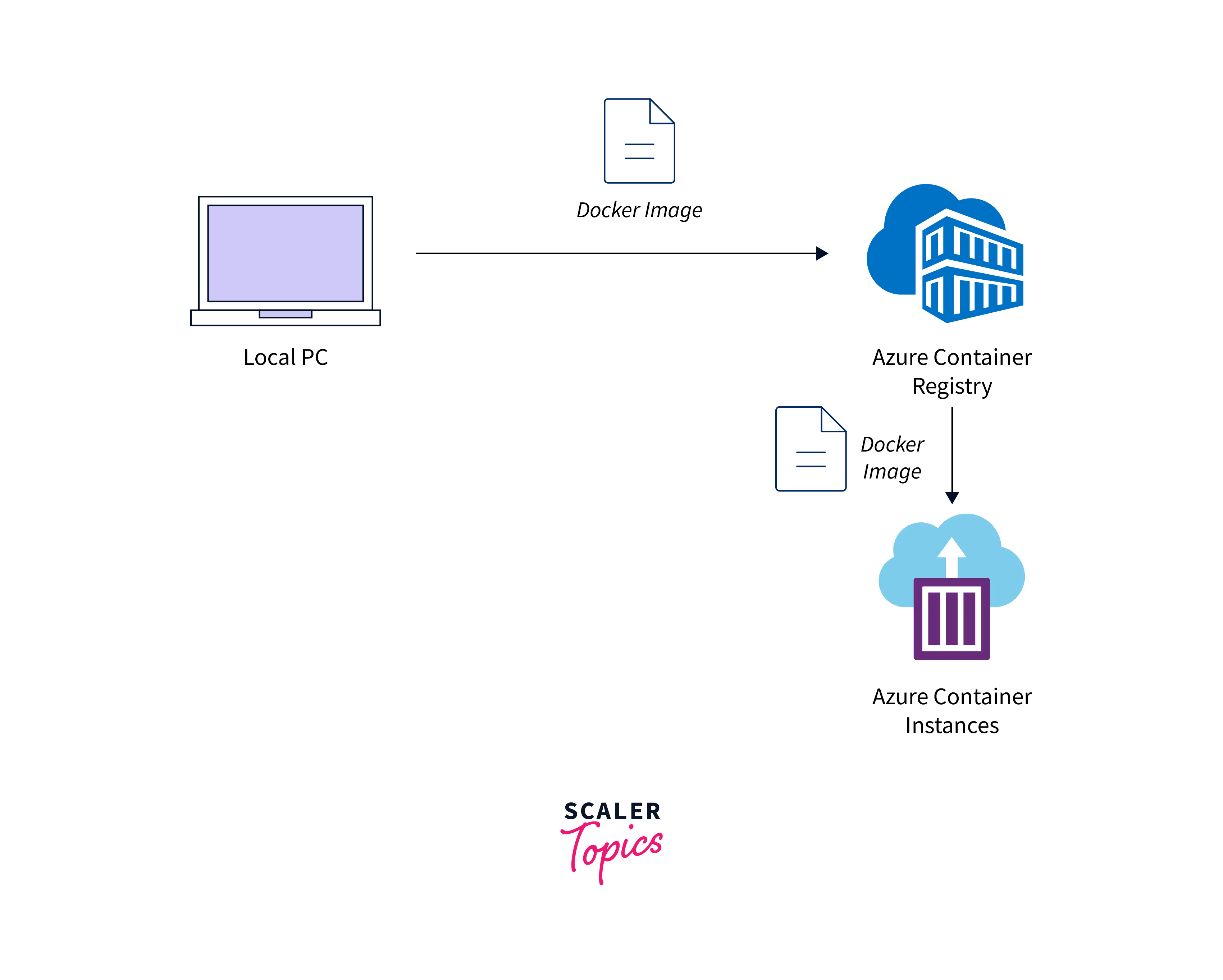

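Azure Machine Learning packages models, together with their software environments, into containers, which makes them flexible and portable. A model developed in one environment can be deployed to other services, such as Azure Kubernetes Service (AKS) or Azure Container Instances (ACI), with little or no change.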

- Scalability:

Integration with services like Azure Databricks, AKS, and ACI allows machine learning workloads to scale dynamically based on demand, ensuring optimal performance during both training and inference.

- Collaboration Across Teams:

Azure Databricks provides a collaborative platform for data scientists and engineers, while Azure Machine Learning Compute enables the deployment and management of machine learning models across various Azure services, improving collaboration across teams.

- End-to-End Machine Learning Pipelines:

The integration of these services supports end-to-end machine learning pipelines, from data preparation and model training in Azure Databricks to containerized deployment and scaling with Azure Kubernetes Service or Azure Container Instances.
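
Scaling dynamically based on demand is commonly implemented as a target-tracking rule. The Kubernetes Horizontal Pod Autoscaler, available on AKS like on any Kubernetes cluster, computes desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies that rule and clamps the result to hypothetical minimum and maximum replica limits. It omits the HPA's tolerance band and stabilization windows, and `desired_replicas` is an illustrative name.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Target-tracking scale rule in the style of the Kubernetes HPA.

    Scales the replica count by the ratio of the observed metric (for example,
    average CPU utilization per replica) to its target, rounds up, then clamps
    the result to [min_replicas, max_replicas].
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(raw, max_replicas))


if __name__ == "__main__":
    # 3 replicas averaging 90% CPU against a 60% target -> scale out to 5.
    print(desired_replicas(3, current_metric=90, target_metric=60))
```

Rounding up errs on the side of extra capacity. The maximum bound caps cost, and the minimum bound keeps at least one replica ready to serve.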

Compute Targets for Inference

When it comes to deploying machine learning models into production environments, choosing the right compute target is essential for ensuring optimal performance and scalability. Azure Machine Learning provides several options for deploying models for inference:

Azure Container Instances (ACI)

Azure Container Instances (ACI) is a serverless container service that allows you to deploy containers without managing the underlying infrastructure. It's a convenient option for quickly deploying and serving machine learning models without the overhead of managing virtual machines or clusters. ACI is well-suited for scenarios where you need to serve predictions on an ad-hoc basis or in low-demand environments.

Azure IoT Edge

Azure IoT Edge extends cloud capabilities to edge devices, enabling you to deploy and run machine learning models directly on IoT devices. This is particularly valuable for scenarios where low latency and real-time processing are critical, such as in industrial IoT applications or in environments with limited or intermittent connectivity to the cloud.

Azure Functions

Azure Functions is a serverless computing service that allows you to run event-triggered code in response to various events. While not typically used for high-throughput or low-latency inference, Azure Functions can be a suitable choice for sporadic or event-driven inference scenarios. It provides a cost-effective way to deploy and run lightweight machine-learning models in response to specific events.

Compute Isolation

In Azure Machine Learning, compute isolation ensures that your machine learning workloads are executed in an isolated environment, separate from other users or projects. Azure Machine Learning provides several mechanisms for achieving compute isolation:

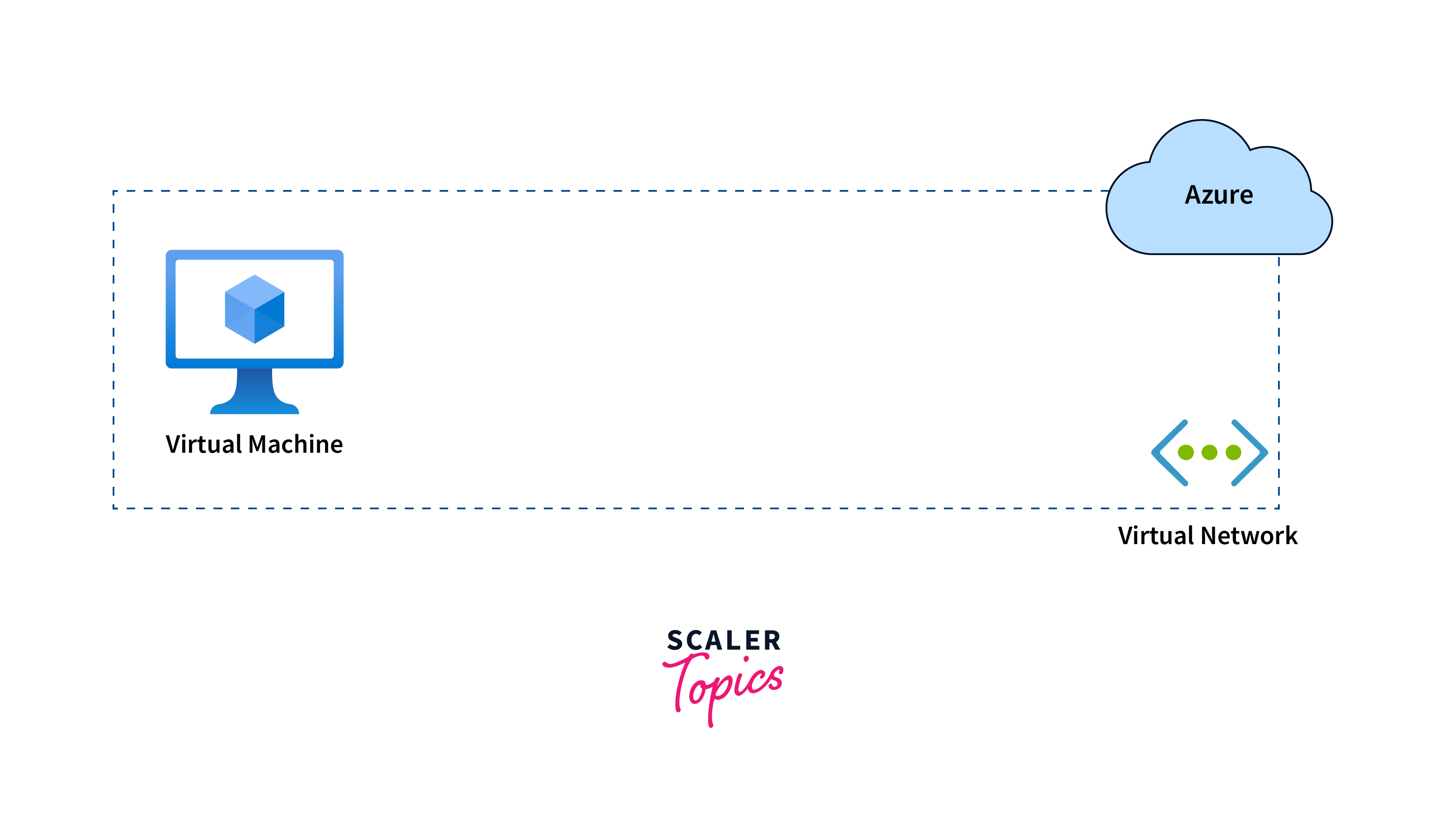

Virtual Networks (VNets)

Azure Virtual Networks allow you to create isolated networks within Azure, providing a secure environment for your compute resources. By integrating your Azure Machine Learning workspace with a VNet, you can ensure that all compute resources, including training clusters and inference deployments, operate within a controlled network environment.
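
When a cluster runs inside a VNet, its nodes draw private IP addresses from a subnet, so the subnet must be large enough for the cluster's maximum node count. Azure reserves five addresses in every subnet. The sketch below uses Python's `ipaddress` module to check capacity. The one-IP-per-node assumption and the helper names `usable_ips` and `cluster_fits` are simplifications for illustration.

```python
import ipaddress

# Azure reserves five IP addresses in every subnet: the network address, the
# default gateway, two addresses for Azure DNS mapping, and the broadcast address.
AZURE_RESERVED_IPS_PER_SUBNET = 5


def usable_ips(subnet_cidr: str) -> int:
    """Addresses left for resources after Azure's per-subnet reservations."""
    network = ipaddress.ip_network(subnet_cidr)
    return max(0, network.num_addresses - AZURE_RESERVED_IPS_PER_SUBNET)


def cluster_fits(subnet_cidr: str, max_nodes: int, ips_already_used: int = 0) -> bool:
    """Check whether a cluster scaled to max_nodes still fits in the subnet.

    Simplifying assumption: each node consumes exactly one private IP.
    """
    return ips_already_used + max_nodes <= usable_ips(subnet_cidr)
```

For example, a /28 subnet has 16 addresses, of which 11 remain usable. A cluster allowed to grow past that cannot fully scale out in that subnet.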

Azure Container Instances (ACI) and Azure Kubernetes Service (AKS) Integration with VNets

Azure Container Instances and Azure Kubernetes Service can be integrated with Azure Virtual Networks, enabling you to deploy machine learning models within a VNet.

Compute Isolation Policies

Azure Machine Learning allows you to define compute isolation policies that govern how resources are allocated and shared within your workspace. These policies give you granular control over compute usage, allowing you to enforce specific isolation requirements based on your organization's needs.

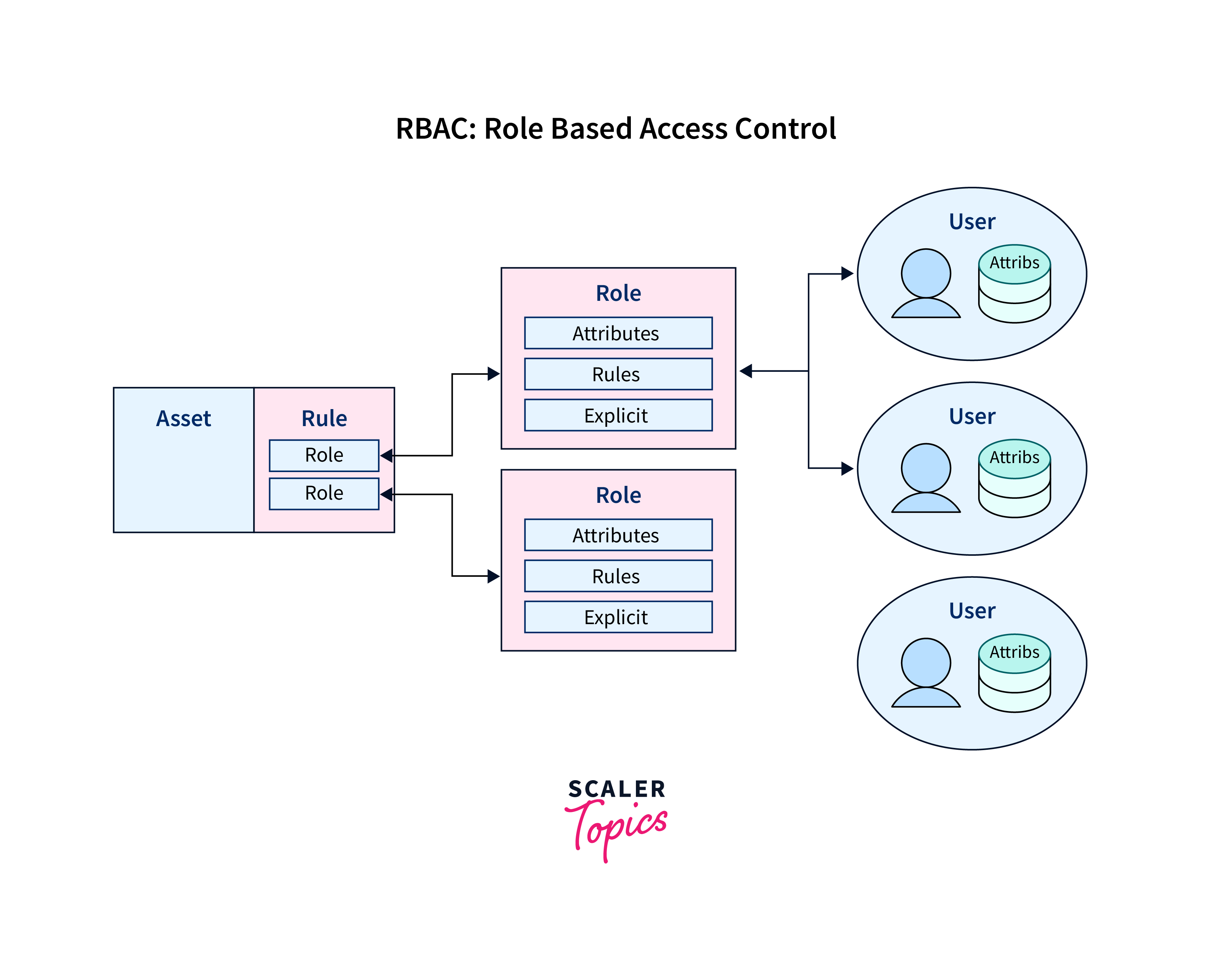

Role-Based Access Control (RBAC)

Azure Machine Learning leverages Azure's Role-Based Access Control (RBAC) to assign specific permissions and access levels to users and groups. This ensures that only authorized individuals have the ability to manage and interact with compute resources within your workspace.
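
Azure role definitions list permitted operations as `Actions` patterns, which may contain wildcards, and subtract `NotActions` patterns from them. The sketch below evaluates a single role that way. The `Reader` entry uses the built-in role's `*/read` pattern. `ComputeOperatorExample` is a hypothetical custom role, and the operation strings are illustrative. Real definitions also carry `DataActions`, `NotDataActions`, and assignable scopes. This sketch compares names case-insensitively.

```python
from fnmatch import fnmatchcase
from typing import Dict, List

# Simplified role definitions. "ComputeOperatorExample" is hypothetical.
ROLES: Dict[str, Dict[str, List[str]]] = {
    "Reader": {"actions": ["*/read"], "not_actions": []},
    "ComputeOperatorExample": {
        "actions": ["Microsoft.MachineLearningServices/workspaces/computes/*"],
        "not_actions": ["Microsoft.MachineLearningServices/workspaces/computes/delete"],
    },
}


def is_allowed(role: str, action: str) -> bool:
    """Allowed if some Actions pattern matches and no NotActions pattern does.

    Both sides are lower-cased before wildcard matching, so the check is
    case-insensitive.
    """
    definition = ROLES[role]
    action = action.lower()
    granted = any(fnmatchcase(action, p.lower()) for p in definition["actions"])
    excluded = any(fnmatchcase(action, p.lower()) for p in definition["not_actions"])
    return granted and not excluded
```

`NotActions` only trims what one role grants; it is not a deny. A user holding a second role that grants the same operation would still be allowed to perform it.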

Unmanaged Compute

Unmanaged compute in Azure Machine Learning refers to independently provisioned and managed resources outside the Azure Machine Learning service's direct control. This option offers flexibility for organizations with specific infrastructure requirements or those integrating existing resources. While it allows customization, it entails responsibility for infrastructure management. This option suits scenarios with pre-existing investments, specialized hardware needs, or specific compliance requirements, enabling a tailored environment for machine learning workloads.

Pricing Models and Cost Optimization Strategies

Pricing Models

- Pay-As-You-Go (PAYG):

This is a flexible model where you pay for the resources you consume on an hourly or per-minute basis. Suitable for short-term projects, development and testing, or workloads with varying usage patterns.

- Reserved Instances (RIs):

Allows users to commit to a one- or three-year term for a significant discount over PAYG rates. Best for predictable workloads with consistent usage.

- Spot Instances:

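Provides significant cost savings by running on unused Azure capacity at a discount. Because Azure can evict these VMs when it needs the capacity back, they are ideal for fault-tolerant workloads that can handle interruptions. Azure Machine Learning compute clusters expose this kind of capacity as low-priority VMs.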

- Azure Hybrid Benefit:

Allows users with Software Assurance to use their on-premises Windows Server or SQL Server licenses on Azure at a reduced cost.
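
The trade-off between these models comes down to simple arithmetic. A reservation bills every hour of the term at a discounted rate, PAYG bills only the hours used, and spot capacity is cheaper per hour but may force some work to be re-run after evictions. The sketch below compares monthly costs. All rates and discounts are placeholders, not Azure prices, and `rework_fraction` is an assumed eviction overhead. 730 hours per month is a common approximation of 8,760 hours divided by 12.

```python
HOURS_PER_MONTH = 730  # Common approximation: 8,760 hours / 12 months.


def payg_monthly_cost(hourly_rate: float, hours_used: float) -> float:
    """Pay-as-you-go: pay only for the hours the VM actually runs."""
    return hourly_rate * hours_used


def reserved_monthly_cost(hourly_rate: float, discount: float) -> float:
    """Reservation: pay the discounted rate for every hour, used or not."""
    return hourly_rate * (1 - discount) * HOURS_PER_MONTH


def spot_monthly_cost(hourly_rate: float, discount: float, hours_used: float,
                      rework_fraction: float = 0.0) -> float:
    """Spot: discounted rate, plus an assumed share of hours re-run after evictions."""
    return hourly_rate * (1 - discount) * hours_used * (1 + rework_fraction)


def break_even_utilization(discount: float) -> float:
    """Fraction of the month a VM must run for a reservation to match PAYG.

    Solves rate * hours = rate * (1 - discount) * HOURS_PER_MONTH for hours / HOURS_PER_MONTH.
    """
    return 1 - discount
```

With a placeholder 40% reservation discount, the break-even point is 60% utilization, roughly 438 hours a month. Below that, PAYG is cheaper; above it, the reservation wins.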

Cost Optimization Strategies

- Rightsizing:

Regularly assess and adjust your resource sizes to match actual demand. Use Azure Advisor to get recommendations on right-sizing your resources.

- Scaling:

Implement autoscaling so that resources track demand. For example, set a compute cluster's minimum node count to zero so that it releases all nodes when no jobs are running.

- Azure Policy and Tags:

Leverage Azure Policy to enforce organizational standards and compliance. Use resource tags to track and categorize resources for better cost allocation.

- Azure Cost Management and Billing:

Use Azure Cost Management and Billing to gain insights into your spending and set up budgets. Create alerts for cost thresholds to be notified when spending exceeds predefined limits.

- Azure Reservations and Spot Instances:

Consider using Reserved Instances for steady-state workloads and Spot Instances for interruptible, fault-tolerant workloads.

- Serverless Computing:

Use serverless technologies like Azure Functions or Azure Logic Apps to pay only for the compute resources you consume during execution.

- Azure Advisor:

Regularly review recommendations provided by Azure Advisor to optimize your resources for performance, security, and cost.
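
Rightsizing boils down to choosing the cheapest size that still covers observed peak demand with some safety margin. The sketch below does that over a hypothetical catalog. The size names and prices are placeholders rather than real Azure SKUs or list prices, and `rightsize` and `headroom` are illustrative names. In practice, the peak figures would come from monitoring data, and Azure Advisor's recommendations would inform the choice.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class VmSize:
    name: str           # Hypothetical size name, not a real Azure SKU.
    vcpus: int
    memory_gib: float
    hourly_rate: float  # Placeholder price, not an Azure list price.


def rightsize(catalog: List[VmSize],
              peak_vcpus_used: float,
              peak_memory_gib_used: float,
              headroom: float = 0.2) -> Optional[VmSize]:
    """Pick the cheapest size covering observed peak usage plus headroom.

    Returns None when no size in the catalog is large enough.
    """
    need_cpu = peak_vcpus_used * (1 + headroom)
    need_mem = peak_memory_gib_used * (1 + headroom)
    candidates = [s for s in catalog
                  if s.vcpus >= need_cpu and s.memory_gib >= need_mem]
    return min(candidates, key=lambda s: s.hourly_rate, default=None)


if __name__ == "__main__":
    catalog = [
        VmSize("small", 2, 8, 0.10),
        VmSize("medium", 4, 16, 0.20),
        VmSize("large", 8, 32, 0.40),
    ]
    # Peak of 2.5 vCPUs and 10 GiB plus 20% headroom needs 3 vCPUs and 12 GiB.
    print(rightsize(catalog, peak_vcpus_used=2.5, peak_memory_gib_used=10))
```

The headroom parameter keeps a resized VM from running at its limit during spikes. A workload that is already larger than every size in the catalog returns `None` instead of a misleading recommendation.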

Conclusion

- Azure Machine Learning Compute offers a range of compute options, including CPU and GPU-based virtual machines, for training and deploying machine learning models at scale.

- Compute Clusters are well-suited for distributed training, handling large datasets, and complex model architectures.

- Choosing the right compute target is crucial for optimizing the efficiency and effectiveness of machine learning projects.

- Azure provides various options for deploying machine learning models into production environments, including AKS, ACI, Azure IoT Edge, and Azure Functions.

- Compute isolation in Azure Machine Learning ensures secure and separate execution of machine learning workloads, with mechanisms like Virtual Networks (VNets), ACI/AKS integration with VNets, Compute Isolation Policies, and Role-Based Access Control (RBAC) enhancing security.