An Introduction To Big Data on Cloud

Overview

Cloud Computing has revolutionized the way businesses manage and store their data. Big Data on Cloud refers to storing and processing large volumes of data on cloud-based platforms. These platforms offer scalable and cost-effective solutions for businesses to store and analyze data. With cloud-based Big Data platforms, businesses can access their data from anywhere, collaborate with others, and use machine learning and artificial intelligence tools to extract valuable insights from the data. Cloud-based Big Data platforms also provide enhanced security features to protect sensitive data.

Introduction

Before learning about Big Data on the cloud, let us get familiar with Big Data. Big Data has become an essential component of modern businesses, but managing and processing such large volumes of data can be challenging. Cloud Computing addresses much of this challenge by allowing businesses to store and process their data on cloud-based platforms rather than on their own hardware. Big Data on Cloud refers to storing and analyzing large amounts of data using Cloud Computing resources.

Cloud-based Big Data platforms offer numerous benefits to businesses, including scalability, flexibility, and cost-effectiveness. These platforms can easily scale to accommodate the growing data needs of a business while also providing flexible pricing models that allow businesses to pay only for the resources they use. Additionally, cloud-based platforms offer enhanced security features to protect sensitive data.

With the help of Big Data on the cloud, businesses can access their data from anywhere, collaborate with others, and use machine learning and artificial intelligence tools to extract valuable insights from the data. This can help businesses make more informed decisions and gain a competitive advantage in their respective industries.

Overall, Big Data on Cloud transforms how businesses approach data management and decision-making. By leveraging the power of Cloud Computing, businesses can store and analyze large amounts of data cost-effectively and securely, leading to improved business outcomes and greater success.

The Difference Between Big Data & Cloud Computing

Big Data and Cloud Computing are two different technologies that have become increasingly popular in recent years. While both are related to managing and processing large volumes of data, there are significant differences between them.

Big Data refers to collecting, storing, and analyzing large data sets to extract valuable insights and knowledge. Big Data technologies such as Hadoop and Spark are designed to handle massive data sets that cannot be processed using traditional data processing tools. Big Data technologies are primarily focused on data storage and analysis.

On the other hand, Cloud Computing is a technology that enables businesses to access and use computing resources over the Internet rather than relying on local hardware and software. Cloud Computing provides on-demand access to compute resources, including servers, storage, databases, and applications.

While Big Data and Cloud Computing are different, they are often used together, and the combination is what we call Big Data on the cloud. Cloud Computing provides the infrastructure needed to store and process large data sets, while Big Data technologies provide the tools needed to analyze and extract insights from the data.

In summary, Big Data and Cloud Computing are two distinct technologies that serve different purposes. Big Data is focused on managing and analyzing large data sets, while Cloud Computing provides on-demand access to compute resources. However, both technologies are important and are often used together to provide businesses with the tools to manage and analyze their data.

The Roles & Relationship Between Big Data & Cloud Computing

Big Data and Cloud Computing are two different technologies, but their roles and relationship are highly interconnected. Cloud Computing offers scalable and flexible computing resources, while Big Data helps manage and analyze large datasets.

The relationship between the two technologies comes from the fact that Big Data requires significant computing power and storage, which Cloud Computing can provide. In addition, Cloud Computing offers on-demand resources that can be easily scaled up or down, depending on the requirements of the Big Data application.

The roles of Big Data and Cloud Computing complement each other. For example, Big Data requires extensive storage capacity, and cloud-based storage can provide cost-effective and flexible options. In addition, Cloud Computing provides access to various analytical tools, platforms, and applications that can help process Big Data.

Moreover, many cloud-based platforms offer services for real-time data processing, which is essential for many Big Data applications. Because these resources are available on demand, Cloud Computing can significantly reduce the time and cost required to implement Big Data projects.

In conclusion, Big Data and Cloud Computing are complementary technologies that can work together to provide an efficient and effective data processing and storage solution. Cloud Computing offers the required computing resources, while Big Data provides the ability to manage, process, and analyze large amounts of data. Their integration offers a flexible, scalable, cost-effective solution for Big Data applications.

Big Data & Cloud Computing: A Perfect Match

Big Data and Cloud Computing are two buzzwords in the technology world. Combined, they offer a perfect match for organizations looking to harness the power of data for their business. Big Data refers to large volumes of structured, semi-structured, and unstructured data that are generated at high velocity and come from various sources. Cloud Computing, on the other hand, is a technology that enables the delivery of computing services such as storage, processing, and analytics over the Internet.

Big Data and Cloud Computing complement each other, providing several benefits to organizations. The Cloud provides the infrastructure to store, manage, and process massive data sets. It offers flexibility, scalability, and cost savings, allowing organizations to easily store and process large volumes of data without investing in expensive hardware.

Furthermore, cloud-based services such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform provide various data processing and analytics tools, including machine learning, artificial intelligence, and predictive analytics. These tools enable organizations to gain valuable insights from their data, which can be used to improve business processes, identify new opportunities, and make data-driven decisions.

In conclusion, Big Data and Cloud Computing are a perfect match for organizations looking to extract insights from large amounts of data. The Cloud provides the infrastructure, scalability, and flexibility needed to store and process Big Data, while Big Data technologies provide the tools to process and analyze large data sets. By harnessing the power of these two technologies, organizations can gain a competitive advantage and drive growth.

Big Data Analytics in Cloud Computing

Big Data Analytics in Cloud Computing is a powerful combination that enables businesses to store, process, and analyze large volumes of data in a flexible and scalable manner. Cloud Computing provides the necessary infrastructure, such as storage and computing resources, while Big Data Analytics tools help to extract meaningful insights from the data. The cloud's elasticity allows organizations to quickly scale their resources up or down based on their data processing needs.

Moreover, cloud-based Big Data Analytics solutions can provide faster processing times and better cost-efficiency than traditional on-premise solutions, because capacity can be added when a workload needs it and released afterwards. With the ability to handle massive amounts of data in real time, Big Data Analytics in Cloud Computing is a game-changer for businesses across all industries, enabling them to make data-driven decisions and gain a competitive edge in the market.

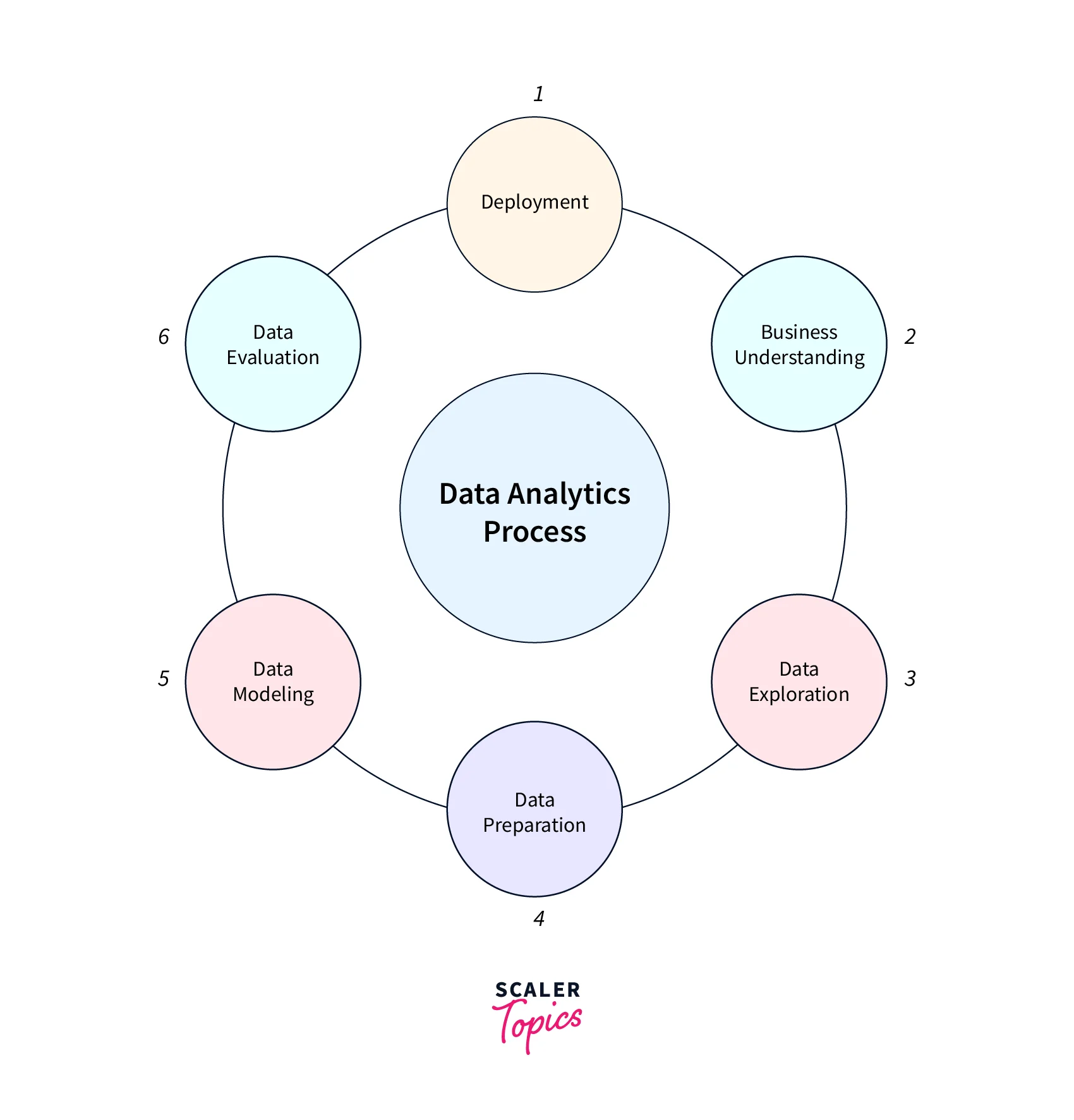

Big Data Analytics Cycle

The Big Data Analytics cycle involves several stages, each crucial for successful Big Data Analytics. The cycle begins with identifying the business problem and defining the objectives. In the second stage, data is collected from various sources and stored in a data lake or warehouse.

The third stage is data processing, where data is cleaned, transformed, and prepared for analysis. The fourth stage is data analysis, where machine learning algorithms and statistical models are used to extract insights and identify patterns in the data.

In the fifth stage, the insights gained from data analysis are visualized through charts, graphs, and dashboards to make them easier for decision-makers to understand and act upon. Finally, the insights are shared with stakeholders, and the process starts again, as the insights gained may lead to identifying new business problems or opportunities.

The Big Data Analytics cycle is iterative and ongoing as businesses collect and analyze data to gain insights and improve their operations. By utilizing this cycle, businesses can harness the power of Big Data and use it to their advantage in decision-making, product development, and improving customer experiences.
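The middle stages of the cycle (collect, process, analyze, present) can be illustrated with a small Python sketch. The order records, field names, and helper functions below are hypothetical and chosen only for illustration; a real pipeline would read from a data lake or warehouse and use far larger data.

```python
from collections import defaultdict
from statistics import mean

# Collection stage: hypothetical raw order records, as they might arrive from a source system.
RAW_ORDERS = [
    {"region": "north", "amount": "120.50"},
    {"region": "south", "amount": "80.00"},
    {"region": "north", "amount": ""},        # missing value
    {"region": "South ", "amount": "45.00"},  # inconsistent formatting
]


def clean(records):
    """Processing stage: drop rows with a missing amount and normalise the fields."""
    cleaned = []
    for rec in records:
        if not rec["amount"]:
            continue
        cleaned.append(
            {"region": rec["region"].strip().lower(), "amount": float(rec["amount"])}
        )
    return cleaned


def analyze(records):
    """Analysis stage: average order amount per region."""
    by_region = defaultdict(list)
    for rec in records:
        by_region[rec["region"]].append(rec["amount"])
    return {region: mean(amounts) for region, amounts in by_region.items()}


def report(summary):
    """Presentation stage: a plain-text stand-in for a dashboard, one line per region."""
    return [f"{region}: {avg:.2f}" for region, avg in sorted(summary.items())]


insights = report(analyze(clean(RAW_ORDERS)))
print("\n".join(insights))
```

Each function maps to one stage, so a stage can be rerun or replaced on its own when the cycle repeats with a new business question.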

Moving from ETL to ELT Paradigm

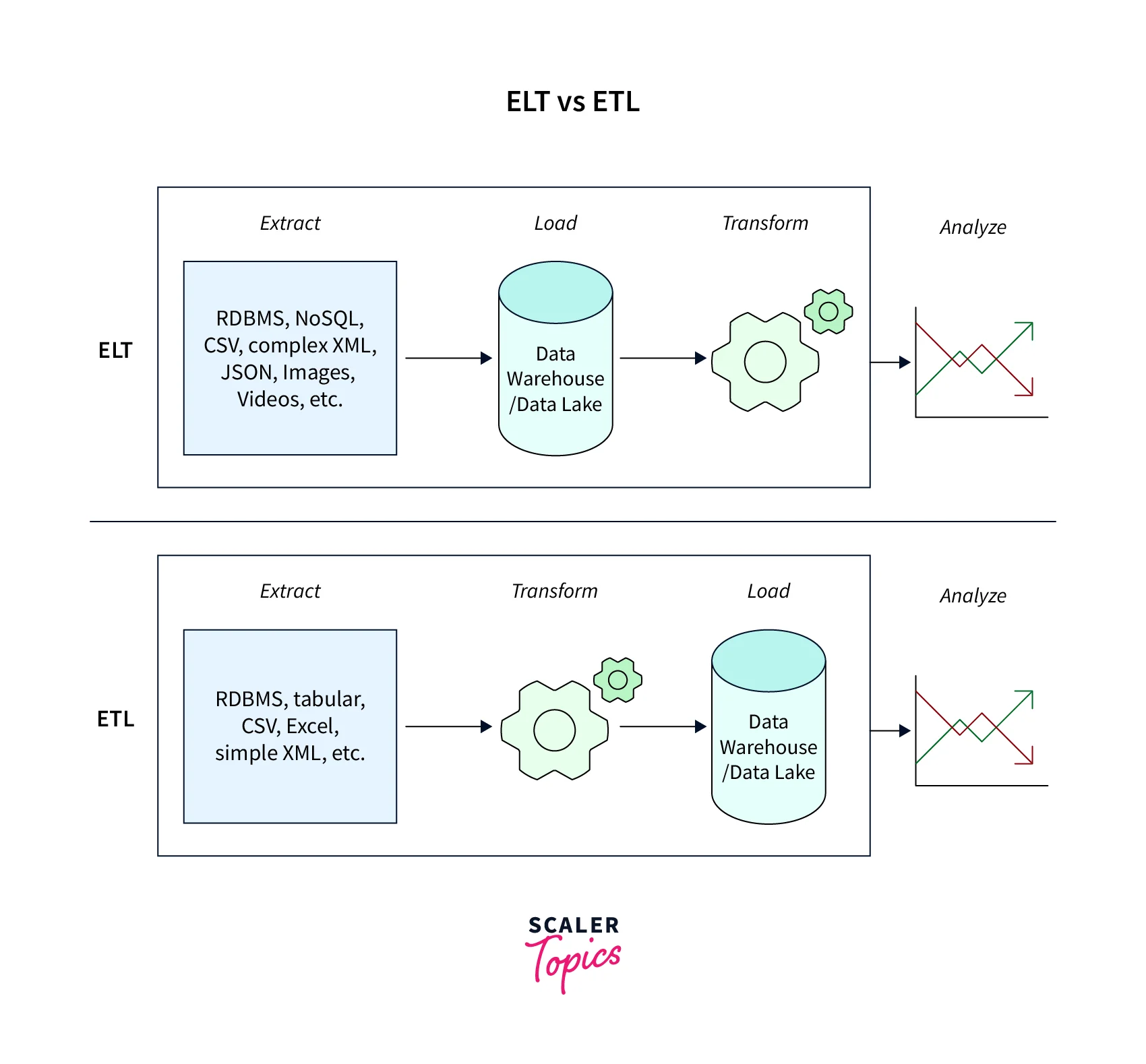

When it comes to processing and analyzing Big Data, the ETL (Extract, Transform, Load) paradigm has been the go-to approach for many years. However, as Big Data has grown in size and complexity, a new approach called ELT (Extract, Load, Transform) has emerged, which leverages the power and scalability of Cloud Computing.

In the ETL approach, data is extracted from its source, transformed into the desired format, and then loaded into a data warehouse for analysis. However, this approach can be time-consuming and resource-intensive, particularly as the volume of data increases.

With the ELT approach, data is first extracted from its source and loaded into a cloud-based data lake or storage platform, where it can then be transformed and analyzed using cloud-based tools and services. This approach allows for faster and more flexible data processing and the ability to leverage the scalability and cost-effectiveness of Cloud Computing.

The ELT paradigm also allows for more agile data exploration and experimentation, as data can be easily ingested and transformed without needing a predefined schema or data model. This makes identifying patterns and insights in large and complex datasets easier.
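The load-first, transform-later ordering can be sketched in a few lines of Python. This is only an illustration under stated assumptions: an in-memory SQLite database stands in for a cloud data lake or warehouse, and the event records, table names, and the `json_field` helper are hypothetical.

```python
import json
import sqlite3

# Extract: hypothetical raw events, as they might be pulled from a source system.
RAW_EVENTS = [
    '{"user": "a", "action": "view", "ms": 120}',
    '{"user": "b", "action": "buy", "ms": 340}',
    '{"user": "a", "action": "buy", "ms": 95}',
]

conn = sqlite3.connect(":memory:")  # stand-in for a cloud warehouse or data lake

# Load: store the raw payloads untouched, with no schema imposed on their contents.
conn.execute("CREATE TABLE raw_events (payload TEXT)")
conn.executemany("INSERT INTO raw_events VALUES (?)", [(e,) for e in RAW_EVENTS])


def json_field(payload, key):
    """Helper exposed to SQL so the transformation can run inside the store."""
    return json.loads(payload)[key]


conn.create_function("json_field", 2, json_field)

# Transform: reshape the data after loading, using SQL inside the store.
conn.execute(
    """
    CREATE TABLE purchases AS
    SELECT json_field(payload, 'user') AS user_id,
           json_field(payload, 'ms')   AS ms
    FROM raw_events
    WHERE json_field(payload, 'action') = 'buy'
    """
)

rows = conn.execute("SELECT user_id, ms FROM purchases ORDER BY user_id").fetchall()
raw_count = conn.execute("SELECT COUNT(*) FROM raw_events").fetchone()[0]
print(rows, raw_count)
```

Because the raw table is kept as loaded, a different transformation can be written later without extracting the data again, which is the flexibility the ELT approach is meant to provide.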

Some Advantages of Big Data Analytics

In recent years, Big Data Analytics has become an integral part of businesses and organizations. Big Data Analytics has several advantages, which can help businesses make informed decisions, increase productivity, and improve customer experience.

One of the significant advantages of Big Data Analytics is that it helps businesses to identify patterns and trends in large data sets that would be difficult to identify using traditional data analysis methods. It also gives businesses insights into customer behavior and preferences, which can help them tailor their products and services to meet customer needs better.

Big Data Analytics can also help businesses reduce costs and increase efficiency by identifying areas where processes can be streamlined or automated. It can also help businesses to identify potential problems before they occur, allowing for proactive measures to be taken to mitigate them.

Another advantage of Big Data Analytics is its ability to enhance security by identifying and mitigating potential security threats before they become significant. It can also help businesses to comply with regulations and identify potential fraud or other types of non-compliance.

Overall, Big Data Analytics is a powerful tool businesses can use to gain insights and make better decisions. As a result, it could revolutionize how businesses operate and drive growth and profitability.

Case Study: Google's BigQuery for Data Processing and Analytics

Google's BigQuery is a cloud-based, fully managed data warehouse that enables businesses to run complex analytics on petabyte-scale data. It is a highly scalable, cost-effective solution that allows users to store and query large datasets quickly and efficiently.

One of the most notable use cases of BigQuery is at Google itself, where it is used to power various products such as Google Analytics, Google Ads (formerly Google AdWords), and Google Play. BigQuery grew out of Dremel, the query system Google built internally to process the massive amounts of data that its various services generate.

Many other organizations, including media companies, financial institutions, and e-commerce businesses, have adopted BigQuery. One example is Home Depot, which uses BigQuery to analyze its sales data and make better business decisions.

One of the key benefits of using BigQuery is its speed and scalability. It can process large datasets quickly and can be easily scaled up or down depending on the needs of the business. BigQuery has a user-friendly interface that allows non-technical users to access and analyze data easily.
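As a rough idea of what querying BigQuery looks like from code, the sketch below wraps a SQL query in a small Python function. It assumes the `google-cloud-bigquery` client library and Google's public Shakespeare sample table; the function name `top_words` is hypothetical, and the client is passed in so the function itself does not depend on credentials being configured.

```python
# SQL over a public sample table; the column names follow that table's schema.
TOP_WORDS_SQL = """
SELECT word, SUM(word_count) AS total
FROM `bigquery-public-data.samples.shakespeare`
GROUP BY word
ORDER BY total DESC
LIMIT 5
"""


def top_words(client, sql=TOP_WORDS_SQL):
    """Run the query through a BigQuery client and return (word, total) tuples."""
    rows = client.query(sql).result()  # waits until the query job finishes
    return [(row["word"], row["total"]) for row in rows]


# Usage, assuming google-cloud-bigquery is installed and credentials are set up:
#   from google.cloud import bigquery
#   print(top_words(bigquery.Client()))
```

Note that the code contains no cluster sizing or server configuration; the service allocates the compute needed to run the query.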

Overall, Google's BigQuery is a powerful tool for data processing and analytics that can help businesses of all sizes make better decisions based on the insights derived from their data.

Experiments with Different Dataset Sizes

Experiments with different dataset sizes are crucial in understanding the behavior of Big Data on the Cloud. With the vast amounts of data produced daily, it is necessary to test how Big Data Analytics tools perform with varying data sizes.

Cloud Computing provides an ideal platform for running experiments with different dataset sizes since it can scale up or down based on demand. These experiments can help determine the ideal size of the dataset for specific applications, such as machine learning, data mining, and predictive analytics. Additionally, they can help identify any bottlenecks or limitations of the tools used for Big Data Analytics.

Understanding how Big Data tools perform with different dataset sizes is crucial for making informed decisions about infrastructure requirements, costs, and performance expectations.
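A size-scaling experiment of this kind can be sketched locally before running it on cloud resources. In the Python sketch below, the workload (sorting and picking the middle element), the dataset sizes, and the function names are all illustrative assumptions; in practice the workload would be the actual analytics job and the sizes would be far larger.

```python
import random
import time


def workload(values):
    """Workload under test: sort the values and return the middle element."""
    ordered = sorted(values)
    return ordered[len(ordered) // 2]


def benchmark(sizes, seed=0):
    """Time the workload on synthetic datasets of each size; returns (size, seconds) pairs."""
    rng = random.Random(seed)  # fixed seed so the generated data is reproducible
    results = []
    for n in sizes:
        data = [rng.random() for _ in range(n)]
        start = time.perf_counter()
        workload(data)
        elapsed = time.perf_counter() - start
        results.append((n, elapsed))
    return results


results = benchmark([1_000, 10_000, 100_000])
for n, seconds in results:
    print(f"{n:>8} rows: {seconds * 1000:.2f} ms")
```

Plotting time against size from such runs shows whether a tool scales roughly linearly or hits a bottleneck, which in turn informs infrastructure and cost estimates.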

Conclusion

- Big Data on Cloud refers to storing and processing large volumes of data on cloud-based platforms.

- Big Data and Cloud Computing are two buzzwords in the technology world that offer a perfect match for organizations looking to harness the power of data for their business.

- Big Data Analytics has several advantages, which can help businesses make informed decisions, increase productivity, and improve customer experience.

- Google's BigQuery is a powerful tool that allows organizations to analyze their massive datasets quickly and efficiently.

- One of the key benefits of using BigQuery is its speed and scalability. It can process large datasets quickly and can be easily scaled up or down depending on the needs of the business.

- Using cloud-based Big Data platforms, businesses can access their data from anywhere, collaborate with others, and use machine learning and artificial intelligence tools to extract valuable insights from the data.