Top Big Data Tools

Overview

Big data tools have become vital in today's data-driven economy for businesses and organizations to leverage the potential of big data. These tools, including Hadoop, Spark, and NoSQL databases, allow for efficient capture, storage, and analysis of huge datasets. Big data tools enable decision-makers to make educated choices, improve consumer experiences, and discover emerging trends by translating raw data into actionable insights. Big data tools are crucial for modern organizations due to their role in improving operational efficiency, personalizing offerings, and attaining competitive advantage.

The Need for Big Data Tools and Analytics

In today's digital era, Big Data plays a pivotal role in informed decision-making for businesses. Big Data tools empower organizations to efficiently manage and derive valuable insights from the vast volume of data generated in a tech-driven world.

Let us look at the various key considerations for Big Data tools:

Data Variety:

Big data tools excel in handling diverse data formats, from text to images, videos, and sensor readings, enabling a comprehensive approach to data analysis.

Speed and Real-Time Analytics:

These tools process data at high speed, supporting real-time analysis and swift decision-making. For instance, financial institutions use them to flag potentially fraudulent transactions as they occur.

Enhanced Customer Experiences:

Big data analytics enables personalized services by studying customer behavior and preferences, leading to tailored marketing efforts and improved customer satisfaction, as seen with Netflix's movie recommendations.

Predictive Analytics:

Organizations harness the power of big data tools for predictive analysis, forecasting future trends based on historical data. This is particularly valuable in optimizing supply chains and reducing operational costs.

In summary, Big Data tools have revolutionized data management, enabling businesses to harness insights, enhance operations, and adapt to the evolving data landscape.

Top Big Data Analytics Tools

Let's delve into some of the top big data analytics tools.

Apache Hadoop

Apache Hadoop is an open-source platform that enables businesses to store and analyze massive amounts of data on clusters of commodity machines. Its distributed file system, HDFS, spreads data across the cluster, and the MapReduce framework processes that data in parallel, allowing for rapid analysis of large data volumes.
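To make the MapReduce idea concrete, here is a minimal single-process sketch of the classic word count. It only imitates the three stages (map, shuffle, reduce) in plain Python; the function names and sample chunks are illustrative assumptions, not Hadoop's API, and a real job would run the map and reduce steps on many machines at once.

```python
from collections import defaultdict
from itertools import chain

def map_phase(chunk):
    """Emit a (word, 1) pair for every word in one chunk of input."""
    return [(word.lower(), 1) for word in chunk.split()]

def shuffle(pairs):
    """Group values by key, as the framework does between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped

def reduce_phase(key, values):
    """Combine all values collected for one key into a single result."""
    return key, sum(values)

def word_count(chunks):
    # Each chunk could be mapped on a different machine; here we just loop.
    mapped = chain.from_iterable(map_phase(c) for c in chunks)
    grouped = shuffle(mapped)
    return dict(reduce_phase(k, v) for k, v in grouped.items())

# Hypothetical input, split into chunks the way a large file would be.
chunks = ["big data tools", "big data analytics", "data streams"]
counts = word_count(chunks)
print(counts)
```

Because each chunk is mapped independently and each key is reduced independently, both stages can be spread over a cluster without changing the logic.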

Apache Spark

Apache Spark's lightning-fast processing speed and adaptability make dealing with big datasets easy. Spark's in-memory computation feature allows for fast data processing and iterative analysis. Its extensive ecosystem meets many requirements, including batch processing, machine learning, graph computation, and streaming analytics.

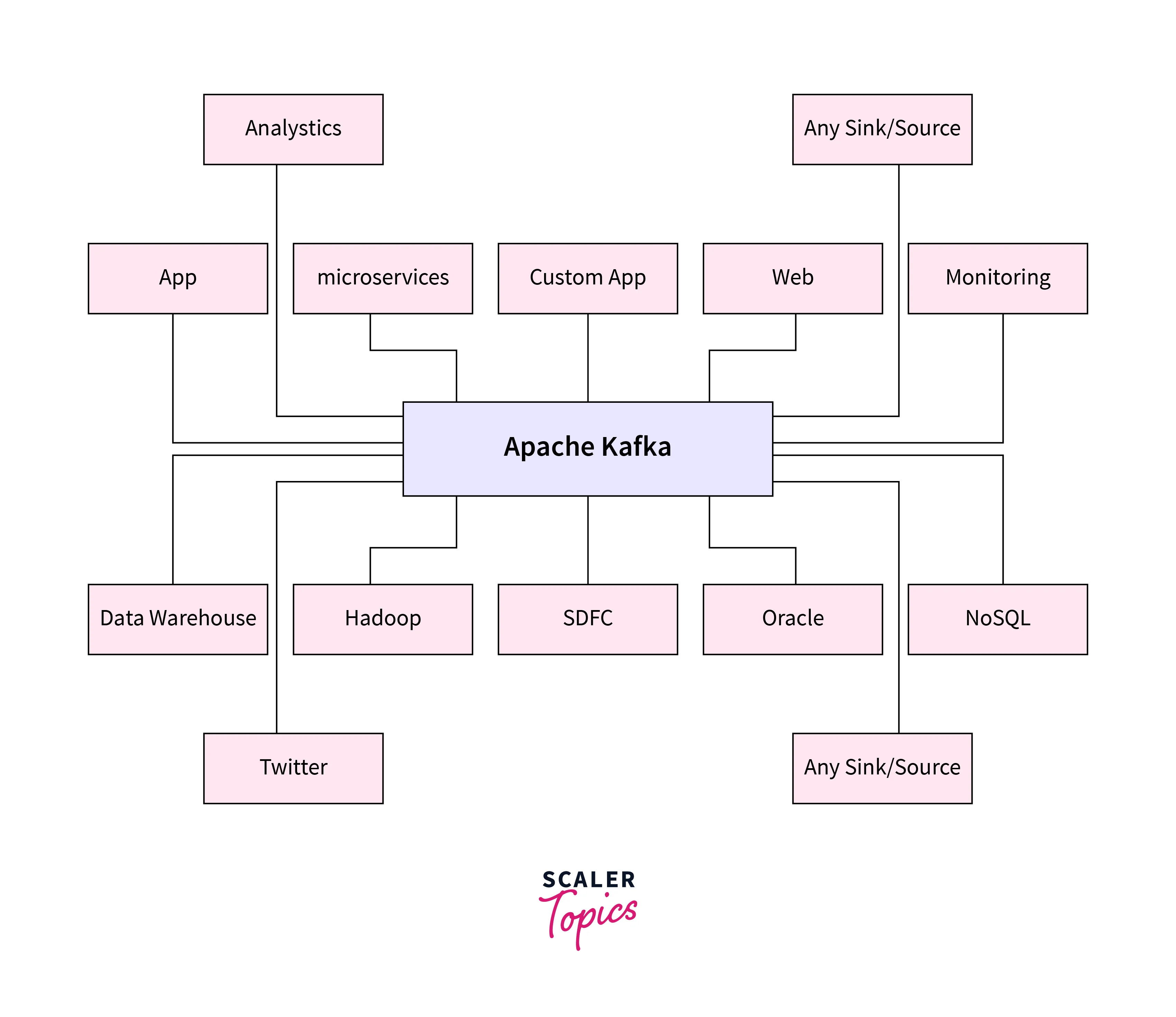

Apache Kafka

Apache Kafka is a distributed event streaming platform well-known for its ability to handle enormous quantities of data. It excels at moving data streams between applications and systems with low latency. Kafka replicates data across brokers, so its fault-tolerant architecture protects data integrity when individual servers fail.
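One way to picture how Kafka moves data between applications is as an append-only log that each consumer group reads at its own pace. The sketch below is a toy, in-memory, single-partition model written for illustration; the class `TopicLog` and its methods are hypothetical and are not the Kafka client API, and it has none of Kafka's partitioning, replication, or durability.

```python
class TopicLog:
    """Toy append-only log with one read position per consumer group."""

    def __init__(self):
        self._records = []   # records are only ever appended
        self._offsets = {}   # consumer group -> next offset to read

    def produce(self, record):
        """Append a record and return the offset it was stored at."""
        self._records.append(record)
        return len(self._records) - 1

    def consume(self, group, max_records=10):
        """Return the next batch for `group` and advance its offset."""
        start = self._offsets.get(group, 0)
        batch = self._records[start:start + max_records]
        self._offsets[group] = start + len(batch)
        return batch

log = TopicLog()
for order in ("order-1", "order-2", "order-3"):
    log.produce(order)

billing_first = log.consume("billing", max_records=2)
analytics_all = log.consume("analytics")
billing_rest = log.consume("billing")
print(billing_first, analytics_all, billing_rest)
```

Since reading does not remove records, the hypothetical billing and analytics groups each see every order, which is what lets one stream feed several independent systems.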

Apache Storm

Apache Storm is an open-source platform for real-time stream processing. A Storm application is a topology built from spouts, which emit streams of data, and bolts, which consume and transform those streams. This spout-bolt design manages data streaming effectively, making Storm a common choice for real-time data analysis.
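The spout-bolt design can be sketched with Python generators: a spout is a source that emits tuples, and each bolt consumes a stream and emits a new one. This is only an analogy under stated assumptions. The function names and sensor readings are made up, and real Storm topologies are defined through Storm's own APIs and run distributed across a cluster.

```python
def sensor_spout(readings):
    """Spout: the source of the stream; emits one tuple at a time."""
    for sensor_id, value in readings:
        yield {"sensor": sensor_id, "value": value}

def threshold_bolt(stream, limit):
    """Bolt: filters the stream, passing on only readings above `limit`."""
    for tup in stream:
        if tup["value"] > limit:
            yield tup

def alert_bolt(stream):
    """Bolt: turns each remaining tuple into an alert message."""
    for tup in stream:
        yield f"ALERT {tup['sensor']}: {tup['value']}"

# Hypothetical readings; in practice a spout would read from a live source.
readings = [("s1", 20.5), ("s2", 98.1), ("s1", 101.3), ("s3", 45.0)]

# Wire the spout and bolts together into a small "topology".
topology = alert_bolt(threshold_bolt(sensor_spout(readings), limit=90))
alerts = list(topology)
print(alerts)
```

Each tuple flows through the whole chain as soon as it is emitted, rather than waiting for a batch to complete, which is the property that makes this style suitable for real-time analysis.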

Apache Cassandra

Apache Cassandra is a NoSQL database well-known for its scalability and fault-tolerance. It excels at processing massive amounts of data across several servers while maintaining consistent performance. Cassandra's adaptable data model supports structured and semi-structured data and is suited for real-time applications as well as dynamic data.

Apache Hive

Apache Hive is a data warehouse solution for organizing and querying huge datasets using an SQL-like language, HiveQL. Because the syntax is familiar, anyone comfortable with SQL can extract insights from enormous datasets without writing low-level processing code. Hive translates queries into MapReduce or Tez jobs that run across the cluster, which is what lets the same query scale to large data volumes.
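The kind of query Hive is designed for looks like ordinary SQL. As a rough illustration, the sketch below runs a typical aggregate query against an in-memory SQLite database from Python's standard library. SQLite stands in for Hive here purely so the example is runnable; the table `page_views` and its rows are invented, and Hive would execute a query like this as distributed jobs rather than in a single process.

```python
import sqlite3

# In-memory database used only as a stand-in for a Hive table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE page_views (country TEXT, views INTEGER)")
conn.executemany(
    "INSERT INTO page_views VALUES (?, ?)",
    [("IN", 120), ("US", 300), ("IN", 80), ("DE", 50)],
)

# A familiar GROUP BY aggregate: total views per country, largest first.
query = """
    SELECT country, SUM(views) AS total_views
    FROM page_views
    GROUP BY country
    ORDER BY total_views DESC
"""
rows = conn.execute(query).fetchall()
print(rows)
conn.close()
```

The point is that the analyst writes only the declarative query; how the grouping and summing are parallelized is left to the engine.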

Qubole

Qubole simplifies complicated data operations with its user-friendly interface and tremendous features. It easily handles processes like data preparation, processing, and visualization, allowing organizations to get valuable insights.

Xplenty

Xplenty streamlines the data extraction, transformation, and loading process. Even non-techies can modify data flows using its user-friendly interface and drag-and-drop capability. The capacity of Xplenty to connect with numerous data sources and destinations demonstrates its adaptability.

MongoDB

MongoDB stores data as flexible, JSON-like documents, which makes it a favorite among data professionals dealing with vast amounts of semi-structured data. Because documents in a collection need not share a fixed schema, and the query language is expressive, users can quickly extract relevant insights. Its distributed design, which shards data across servers, makes scaling to meet increased data demands straightforward.
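To illustrate what a flexible structure means in practice, the sketch below filters a list of differently shaped documents using a small query dictionary. It borrows the spelling of two MongoDB query operators, `$gt` and `$in`, but the `matches` and `find` functions are a simplified, hypothetical re-implementation in plain Python, not the MongoDB driver, and real MongoDB matching rules are considerably richer.

```python
def matches(doc, query):
    """Return True if `doc` satisfies every condition in `query`."""
    for field, cond in query.items():
        value = doc.get(field)  # a missing field is treated as None
        if isinstance(cond, dict):
            if "$gt" in cond and not (value is not None and value > cond["$gt"]):
                return False
            if "$in" in cond and value not in cond["$in"]:
                return False
        elif value != cond:
            return False
    return True

def find(collection, query):
    """Return all documents in `collection` that match `query`."""
    return [doc for doc in collection if matches(doc, query)]

# Hypothetical documents: note that they do not share the same fields.
products = [
    {"name": "laptop", "price": 900, "tags": ["electronics"]},
    {"name": "novel", "price": 12, "author": "A. Writer"},
    {"name": "phone", "price": 600, "category": "electronics"},
    {"name": "sticker"},
]

expensive = [d["name"] for d in find(products, {"price": {"$gt": 500}})]
small_items = [d["name"] for d in find(products, {"name": {"$in": ["novel", "sticker"]}})]
print(expensive, small_items)
```

The document without a `price` field is simply skipped by the price query, so no schema migration is needed when records differ in shape.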

SAS

SAS has an easy-to-use interface that streamlines difficult processes and makes data management and analysis accessible to users of all skill levels. SAS has many advanced statistical tools that help discover trends, patterns, and correlations that drive informed decision-making. SAS transforms complicated information using its sophisticated data visualization capabilities.

Data Pine

Data Pine's user-friendly interface and cutting-edge technologies enable organizations to browse large datasets and extract useful insights easily. Its array of tools provides a full solution, from smooth data integration to real-time visualization. It supports predictive modeling, trend analysis, etc. while maintaining data security and privacy.

Hevo Data

Hevo Data simplifies the challenging work of data integration by seamlessly combining and processing enormous datasets from multiple sources. It has a simple UI, and automatic procedures make data conversion quick and easy. Hevo supports real-time data transmission, transformation, and interoperability with multiple data warehouses.

Zoho Analytics

With Zoho Analytics, gaining insights from massive datasets is simple. You can gather, analyze, and visualize data in real time to make educated decisions. Its easy-to-use interface allows users to build custom reports and dashboards and do ad hoc analysis without complicated code.

Cloudera

Cloudera is regarded as a top-tier tool in big data analytics. Its powerful platform enables enterprises to capitalize on the power of huge datasets. With Cloudera, you can easily collect, analyze, and generate insights from data. It also supports powerful data management, real-time processing, and machine learning capabilities.

RapidMiner

RapidMiner has a user-friendly interface that makes data preparation, modeling, and deployment easier. It comprises diverse algorithms and advanced analytics tools that enable users to identify hidden patterns. RapidMiner's adaptability serves various businesses, from predictive analysis to machine learning.

OpenRefine

OpenRefine is an open-source tool used to clean, alter, and enhance chaotic data, assuring its correctness and usefulness. Its user-friendly interface makes data reconciliation, duplication removal, and standardization of formats easier. It also allows you to customize functionality using various extensions.

Kylin

Apache Kylin is an open-source OLAP engine that lets organizations quickly uncover insights from big datasets. It precomputes aggregates into multidimensional cubes, so many analytical queries are answered from stored results rather than by scanning raw data, and parallel processing shortens query response times further. Kylin integrates with existing data ecosystems, facilitating data democratization.
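The multidimensional cube idea can be sketched as precomputing a total for every combination of dimensions, so that a later query becomes a dictionary lookup. The sketch below is a simplified illustration under stated assumptions: `build_cube`, the dimension names, and the sales rows are hypothetical, and a real cube engine stores and prunes these aggregates far more carefully.

```python
from collections import defaultdict
from itertools import combinations

def build_cube(rows, dimensions, measure):
    """Pre-aggregate `measure` for every subset of `dimensions`."""
    cube = defaultdict(float)
    for row in rows:
        for size in range(len(dimensions) + 1):
            for dims in combinations(dimensions, size):
                # Key = which dimensions are grouped on, and their values.
                key = (dims, tuple(row[d] for d in dims))
                cube[key] += row[measure]
    return cube

# Hypothetical fact rows.
sales = [
    {"region": "EU", "year": 2023, "amount": 100.0},
    {"region": "EU", "year": 2024, "amount": 150.0},
    {"region": "US", "year": 2024, "amount": 200.0},
]
cube = build_cube(sales, ("region", "year"), "amount")

grand_total = cube[((), ())]                    # no grouping at all
eu_total = cube[(("region",), ("EU",))]         # grouped by region
total_2024 = cube[(("year",), (2024,))]         # grouped by year
print(grand_total, eu_total, total_2024)
```

The trade-off is storage and build time in exchange for fast reads: the number of precomputed groupings grows quickly with the number of dimensions.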

Samza

Apache Samza excels in real-time processing, handling large amounts of data while remaining fault tolerant. Its user-friendly architecture enables developers to build efficient data transformation and analysis pipelines. The interoperability of Samza with Apache Kafka improves its data ingestion capabilities, guaranteeing a continuous data flow.

Lumify

Lumify, with its simple interface and tremendous features, provides a complete visualization and analysis platform by seamlessly combining data from diverse sources. Lumify's interactive graph visualizations simplify complicated interactions, allowing users to discover hidden patterns easily. Its collaborative features also promote cooperation among users.

Trino

Trino (formerly PrestoSQL) is a distributed SQL query engine built for fast, interactive analysis. It runs queries in parallel across a cluster, returning results over massive datasets quickly, and this distributed design scales out as data grows. Trino is also adaptable: through connectors it can query a wide range of data sources, from classic relational databases to data lakes.

Factors to Consider While Selecting the Big Data Tools

Choosing the appropriate Big Data tools is critical for effective data management. Let us look at the various factors to consider when choosing Big Data tools:

Project Requirements:

Evaluate the specific needs of your project to ensure tool compatibility.

Scalability:

Opt for technologies capable of managing data growth as your project evolves.

Usability:

Choose tools with user-friendly interfaces to maximize productivity.

Integration:

Consider the tool's ability to seamlessly integrate with your existing systems.

Learning Curve:

Check for available training materials and documentation to gauge the tool's ease of adoption.

Cost:

Weigh the flexibility and cost-effectiveness of open-source technologies against paid options that offer comprehensive support.

Security:

Prioritize tools with robust security features to safeguard sensitive data.
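One simple way to apply the factors above is a weighted scoring matrix: rate each candidate on every factor, weight the factors by importance, and compare the totals. The sketch below assumes a 1 to 5 rating scale; the weights, ratings, and the names "Tool A" and "Tool B" are entirely hypothetical and should be replaced with your own assessment.

```python
def weighted_score(ratings, weights):
    """Weighted average of a tool's ratings across the listed factors."""
    total_weight = sum(weights.values())
    return sum(ratings[factor] * w for factor, w in weights.items()) / total_weight

# Hypothetical importance of each factor for one particular project.
weights = {"scalability": 3, "usability": 2, "integration": 2, "cost": 1, "security": 2}

# Hypothetical 1-5 ratings for two placeholder candidates.
candidates = {
    "Tool A": {"scalability": 5, "usability": 2, "integration": 4, "cost": 5, "security": 3},
    "Tool B": {"scalability": 3, "usability": 5, "integration": 3, "cost": 2, "security": 4},
}

scores = {name: weighted_score(r, weights) for name, r in candidates.items()}
ranking = sorted(scores, key=scores.get, reverse=True)
print(scores, ranking)
```

The numbers matter less than the exercise of making the weights explicit, since changing a single weight can reverse the ranking.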

Key Advantages and Use Cases of Big Data Tools

Big Data tools let organizations harness and analyze huge amounts of data, resulting in better decision-making and operations. Here's an overview of their main benefits and applications:

Advantages:

- Data Insights: Big Data tools uncover hidden patterns and insights in vast datasets, enabling businesses to make informed strategic choices.

- Scalability: These tools provide scalable infrastructure to meet expanding data demands without sacrificing speed.

- Real-time Analysis: Big Data tools enable real-time data processing, which is essential for quick reactions and preemptive measures.

- Competitive Advantage: Using Big Data tools helps businesses remain ahead by anticipating trends and customer preferences.

Use Cases:

- Customer Analytics: Understanding customer behavior and preferences assists in the development of personalized marketing tactics.

- Risk Management: Big Data tools evaluate risks by analyzing historical and real-time data, which is critical in the financial and insurance industries.

- Healthcare Insights: Big Data tools aid in illness diagnosis, epidemic prediction, and patient care.

- Supply Chain Optimisation: Big Data tools improve inventory management, logistics, and demand forecasting.

- Fraud Detection: Financial businesses use Big Data analytics to detect fraudulent operations using pattern recognition.
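As a small illustration of the fraud detection use case, the sketch below flags transactions whose amount lies far from the average of an account's history, using a z-score. This is one basic statistical technique chosen for illustration, not a description of how any particular institution works; the function `flag_outliers`, the threshold of 2.0, and the transaction amounts are all assumptions.

```python
from statistics import mean, stdev

def flag_outliers(amounts, threshold=2.0):
    """Return amounts more than `threshold` standard deviations from the mean."""
    mu = mean(amounts)
    sigma = stdev(amounts)
    if sigma == 0:
        return []  # all amounts identical, nothing stands out
    return [a for a in amounts if abs(a - mu) / sigma > threshold]

# Hypothetical transaction history with one unusually large payment.
history = [42.0, 38.5, 51.0, 45.2, 40.1, 47.8, 39.9, 44.3, 43.0, 980.0]
flagged = flag_outliers(history)
print(flagged)
```

Production systems typically combine many signals, such as location, merchant, and timing, and apply them to streaming data, but the underlying idea of comparing new behavior against a learned baseline is the same.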

Conclusion

- Big data tools have transformed how we handle, analyze, and interpret large datasets.

- Apache Hadoop's distributed processing capabilities have paved the way for efficiently processing big data volumes.

- Spark's in-memory computing has greatly accelerated data processing.

- Apache Cassandra and MongoDB provide scalable NoSQL database solutions.

- A crucial factor when choosing the right big data tool is its scalability and performance; the tool should be able to handle large datasets and processing demands efficiently.

- High performance of big data tools ensures that data processing and analysis can be completed within reasonable time frames, enabling timely and informed decision-making.