Blob Detection with OpenCV

Overview

Blob detection is a computer vision technique for identifying regions of an image whose pixels share common properties, such as color, intensity, or texture, and that differ from their surroundings. Blob detection with OpenCV is used in a wide range of applications, including image segmentation, object detection, and tracking.

Pre-requisites

To understand blob detection in OpenCV, it is helpful to have some familiarity with the following:

- Linear algebra: Understanding the basics of linear algebra is important for understanding some of the mathematical concepts behind image processing and computer vision, such as filtering an image with a kernel.

- Probability and statistics: Understanding the basics of probability and statistics is important for understanding the statistical models used alongside blob detection, such as the mixture of Gaussians used for background subtraction.

- OpenCV library: OpenCV is a popular library for computer vision and image processing. You should be familiar with the basics of the OpenCV library and how to use it to implement blob detection algorithms.

What is a Blob?

In computer vision, a blob is a connected region of pixels in an image or video frame that share some common characteristic, such as color, texture, or intensity. (The database term BLOB, short for "binary large object", is unrelated.)

In image processing terms, a blob is a region of an image that appears different from its surroundings in intensity or color. Blobs come in various shapes and sizes and can correspond to a variety of things in the scene, such as bright spots, dark spots, or whole objects.

Blobs can have different properties such as area, perimeter, centroid, bounding box, orientation, circularity, convexity, and eccentricity. These properties can be used to describe and characterize the blobs and to distinguish them from other image features.

What is Blob Detection?

Blob detection is the process of identifying and localizing blobs in an image. The goal of blob detection is to find regions of an image that are significantly different from their surroundings and represent a meaningful feature or object.

There are several techniques for blob detection, including thresholding, Laplacian of Gaussian (LoG) filtering, and the Difference of Gaussian (DoG) method. Thresholding separates pixels by intensity, while LoG and DoG convolve the image with filters that respond strongly to regions whose intensity contrasts with their surroundings. Once blobs have been detected, they can be used for various tasks, such as object detection, tracking, and recognition.

For example, in object recognition, blobs can serve as local features of an object, which can then be matched with features in other images to identify the object.

Blob detection using OpenCV has various applications in computer vision, robotics, and image processing. Some examples include object recognition, facial recognition, gesture recognition, tracking moving objects, identifying regions of interest, and detecting anomalies or events. Blob detection is also used in biomedical imaging, satellite imaging, and surveillance systems, among other fields.

Need for Blob Detection

Blob detection in OpenCV is needed for various reasons, such as:

- Object Detection: Blob detection helps to identify objects in an image. By detecting and localizing blobs, we can separate objects from the background and determine their size, shape, and position in the image.

- Feature Extraction: Blob detection is used to extract features from an image. These features can be used to classify objects or to match them with objects in other images.

- Tracking: Blob detection helps to track the movement of objects over time. By detecting and tracking blobs, we can determine the direction and speed of objects, which is useful in applications such as autonomous driving or robotics.

- Segmentation: Blob detection is used to segment an image into different regions based on their texture or color. This segmentation is useful for identifying regions of interest in an image and for separating them from the background.

Overall, Blob Detection in OpenCV is an essential technique in computer vision that helps us to understand the structure and composition of an image. It has numerous applications in various fields such as robotics, medical imaging, and autonomous driving.

Blob Extraction

Blob extraction is the process of isolating and extracting blobs from an image. This involves identifying regions in the image that are significantly different from their surroundings and represent a meaningful feature or object. This can be done using various techniques such as thresholding, filtering, or segmentation.

Once the image has been reduced to a binary mask (for example, by thresholding), the important steps involved in blob extraction are:

- Morphological operations: Morphological operations such as erosion, dilation, opening, and closing are often used to refine the binary mask and remove noise or small blobs. Erosion removes pixels from the boundary of a blob and dilation adds them. Opening (erosion followed by dilation) removes small specks, while closing (dilation followed by erosion) fills small gaps and can connect nearby blobs.

- Connected component labeling: Connected component labeling is a technique for identifying and labeling contiguous regions of pixels in the binary mask. Each labeled region represents a blob or region of interest. This technique can be implemented using various algorithms, such as the two-pass algorithm (which typically uses a union-find structure to merge equivalent labels) or a flood-fill traversal.

- Blob filtering: Once the blobs are extracted, they can be filtered or selected based on their size, shape, texture, or other characteristics. For example, small blobs or noise can be removed using a minimum size threshold, or non-circular blobs can be removed using a circularity threshold. Blob filtering can be performed using various techniques such as feature extraction, machine learning, or heuristic rules.

Representation of a Blob

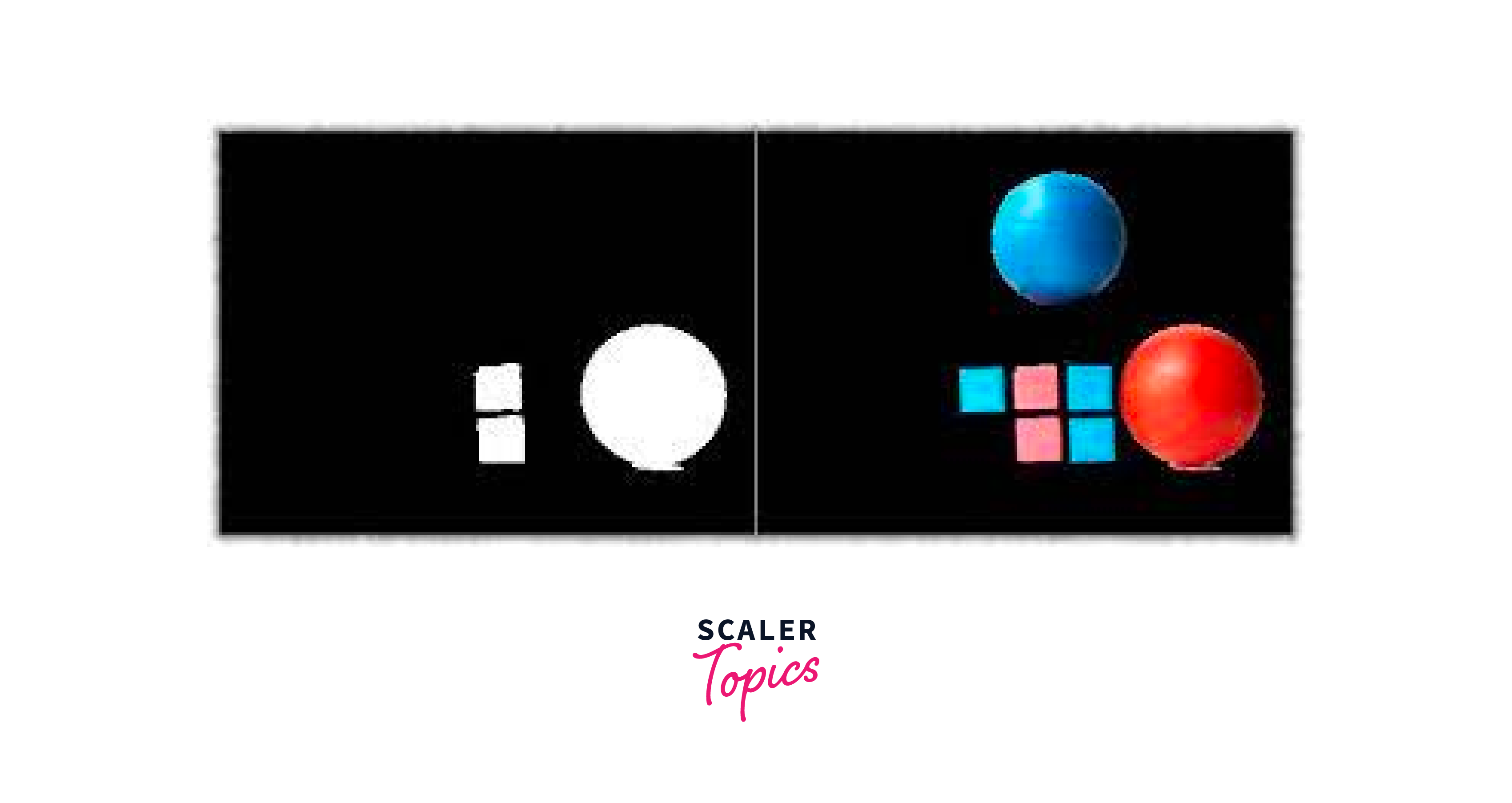

Once blobs have been extracted from an image, they need to be represented in a way that is suitable for further processing and analysis. One common representation is to use a binary image, where the blobs are represented as white pixels and the background as black pixels. Other representations can include using a set of features or descriptors that describe the size, shape, and texture of the blobs.

Below are the various ways to represent the blobs that have been extracted from an image.

- Bounding box: One of the simplest ways to represent a blob is to use a bounding box that encloses the region of interest. The bounding box is defined by its top-left corner coordinates and its width and height. This representation is easy to compute and is useful for tasks such as object detection, tracking, and segmentation.

-

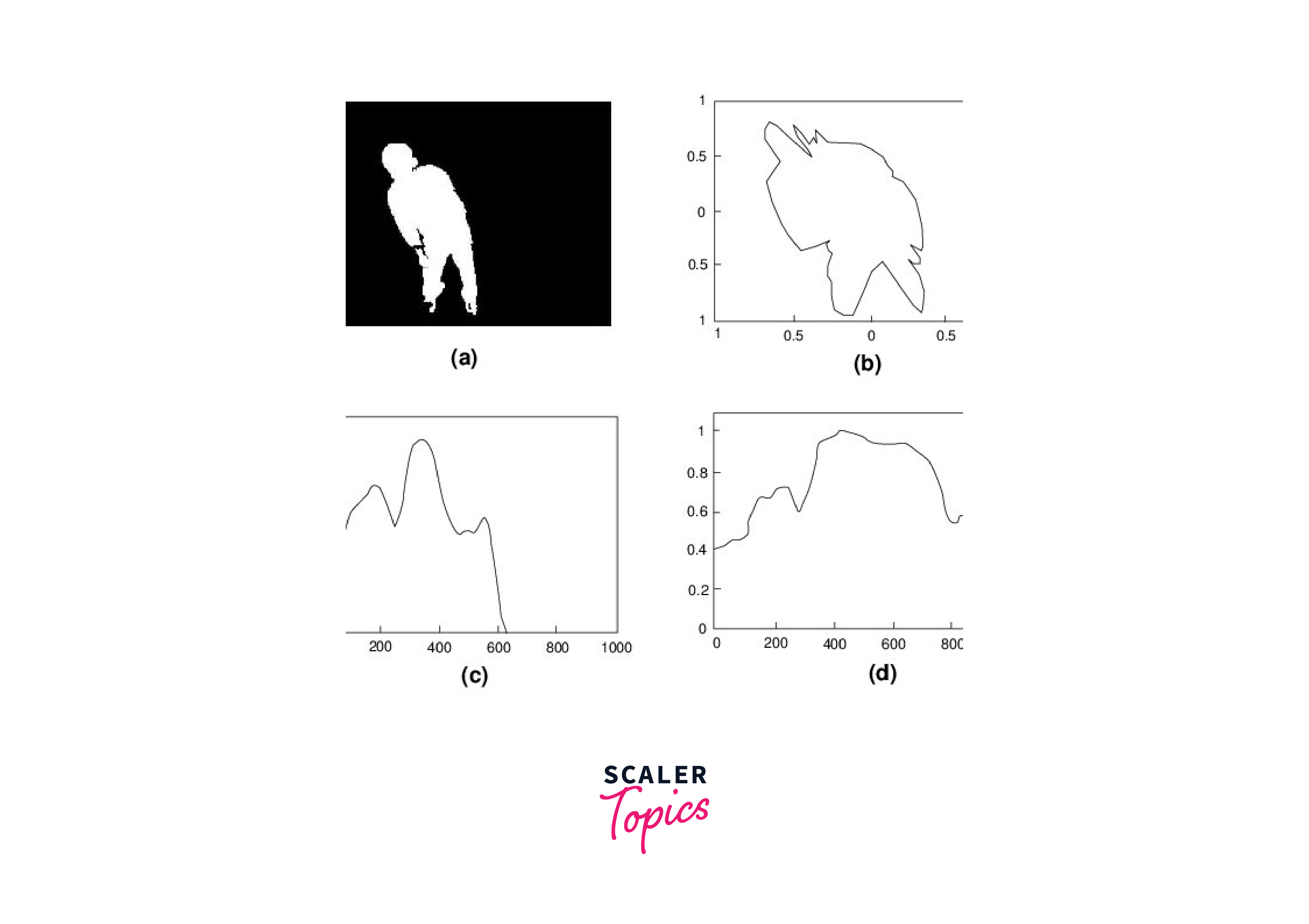

Contour: A contour is a set of points that forms the boundary of the blob. Contours can be represented using various data structures such as polygons, Bezier curves, or spline curves. Contour representation is useful for tasks such as shape analysis, texture analysis, and feature extraction.

-

Histogram: A histogram is a graphical representation of the distribution of pixel values or other features within the blob. Histograms can be used to capture the color, texture, or other characteristics of the blob. Histogram representation is useful for tasks such as image retrieval, classification, and segmentation.

-

Feature vectors: A feature vector is a numerical representation of the blob that captures its relevant characteristics such as size, shape, texture, and color. Feature vectors can be computed using various techniques such as moment invariants, Fourier descriptors, or Gabor filters. Feature vector representation is useful for tasks such as machine learning, pattern recognition, and object tracking.

-

Graphical models: Graphical models such as Markov random fields, conditional random fields, or graphical lasso can be used to represent the spatial relationships and dependencies among the blobs or other image elements. Graphical model representation is useful for tasks such as image segmentation, scene understanding, and object recognition.

Blob Classification

Blob classification is the process of assigning a label or category to a blob based on its properties. This can be useful in various applications such as object recognition, where blobs can be classified based on the object they represent. Classification can be done using various techniques such as machine learning or pattern recognition algorithms. The choice of technique will depend on the specific application and the properties of the blobs being classified.

Here is a detailed overview of blob classification with tabulated data:

- Size-based classification: This method categorizes blobs based on their size or area. It is a simple and effective approach for separating large objects from small objects or noise.

- Shape-based classification: This method categorizes blobs based on their shape or geometric features such as circularity, rectangularity, and aspect ratio. Table 2 shows an example of shape-based classification for three blobs with different shapes.

- Texture-based classification: This method categorizes blobs based on their texture or surface characteristics such as smoothness, roughness, and homogeneity. Texture-based classification can be performed using texture analysis techniques such as gray-level co-occurrence matrix (GLCM) and local binary patterns (LBP). Table 3 shows an example of texture-based classification for three blobs with different textures.

- Color-based classification: This method categorizes blobs based on their color or chromaticity features such as hue, saturation, and value. Color-based classification can be performed using color histograms, color moments, or other color descriptors. Table 4 shows an example of color-based classification for three blobs with different colors.

How to Perform Background Subtraction?

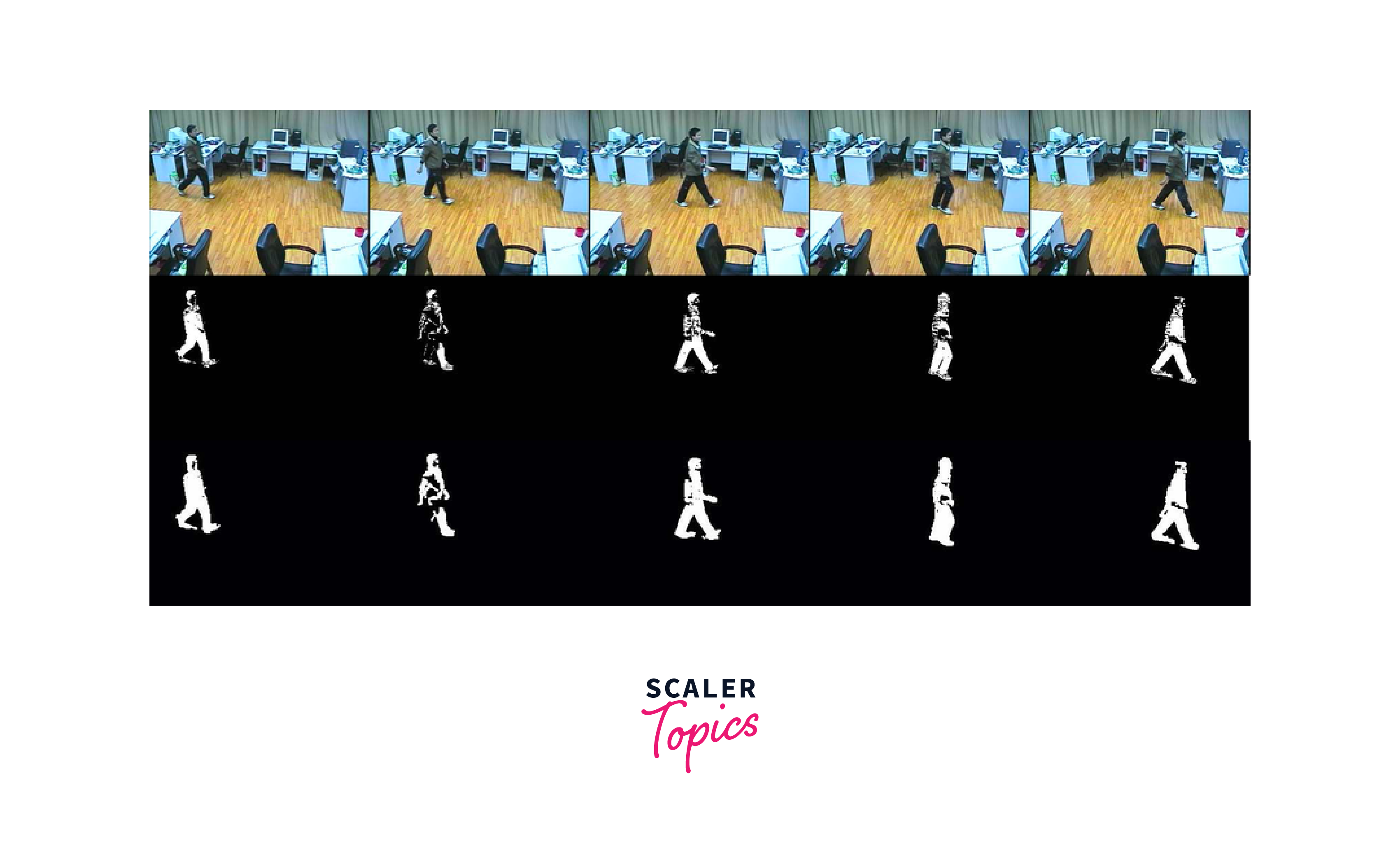

Background subtraction is a common technique used with blob detection in OpenCV to extract foreground objects from a video or image sequence. The main approaches and the supporting steps are outlined below.

- Manual subtraction from the first frame: In this method, the first frame of the video sequence is used as a reference or static background. The subsequent frames are then compared against the reference background to identify the moving or foreground objects. This method is simple and effective when the background is relatively static, but it is sensitive to lighting changes and noise.

- Subtraction using Subtractor MOG2: OpenCV's built-in MOG2 background subtractor, created with cv2.createBackgroundSubtractorMOG2(), uses a mixture of Gaussians to model the background. The algorithm adapts the background model over time to handle changes in lighting and scene dynamics. The foreground objects are identified by comparing the current frame to the background model and applying a threshold. This method is more robust than manual subtraction and can handle complex scenes with multiple moving objects.

- Adaptive thresholding: This method involves dynamically adjusting the threshold value based on the pixel values of the current frame and the background model. This approach helps to minimize false positives and false negatives in blob detection and produces better segmentation results. However, adaptive thresholding can be computationally intensive, especially when dealing with high-resolution videos or complex scenes.

- Morphological operations: Morphological operations, such as erosion and dilation, are commonly used in conjunction with background subtraction to improve the segmentation accuracy. These operations help to remove small blobs or noise in the foreground mask and fill gaps between the foreground objects. Morphological operations are particularly useful when dealing with uneven lighting or shadows in the scene.

- Blob detection: Once the foreground mask is obtained, blob detection algorithms can be used to extract meaningful features or objects from the scene. These algorithms typically identify regions of interest or blobs based on their size, shape, and intensity. Common blob detection algorithms include contour detection, connected component labeling, and watershed segmentation.

Manual Subtraction from the First Frame

This method involves manually subtracting the first frame from all subsequent frames to extract the moving foreground objects. The first frame is considered as the background, and any pixel values that differ from the background by a certain threshold are considered as foreground objects. This method is simple but is prone to errors due to changes in lighting conditions or sudden movements in the background.

Here is a code snippet to perform manual subtraction from the first frame:

Subtraction using Subtractor MOG2

MOG2 (Mixture of Gaussians) is a popular background subtraction algorithm that models each background pixel as a mixture of Gaussian distributions. The algorithm maintains a history of pixel values and updates the Gaussian model of each pixel over time. It then checks whether the current pixel value fits the background model, and pixels that do not fit are marked as foreground. This method is more robust to changes in lighting conditions and can adapt to dynamic backgrounds.

Here is a code snippet to perform background subtraction using subtractor MOG2:

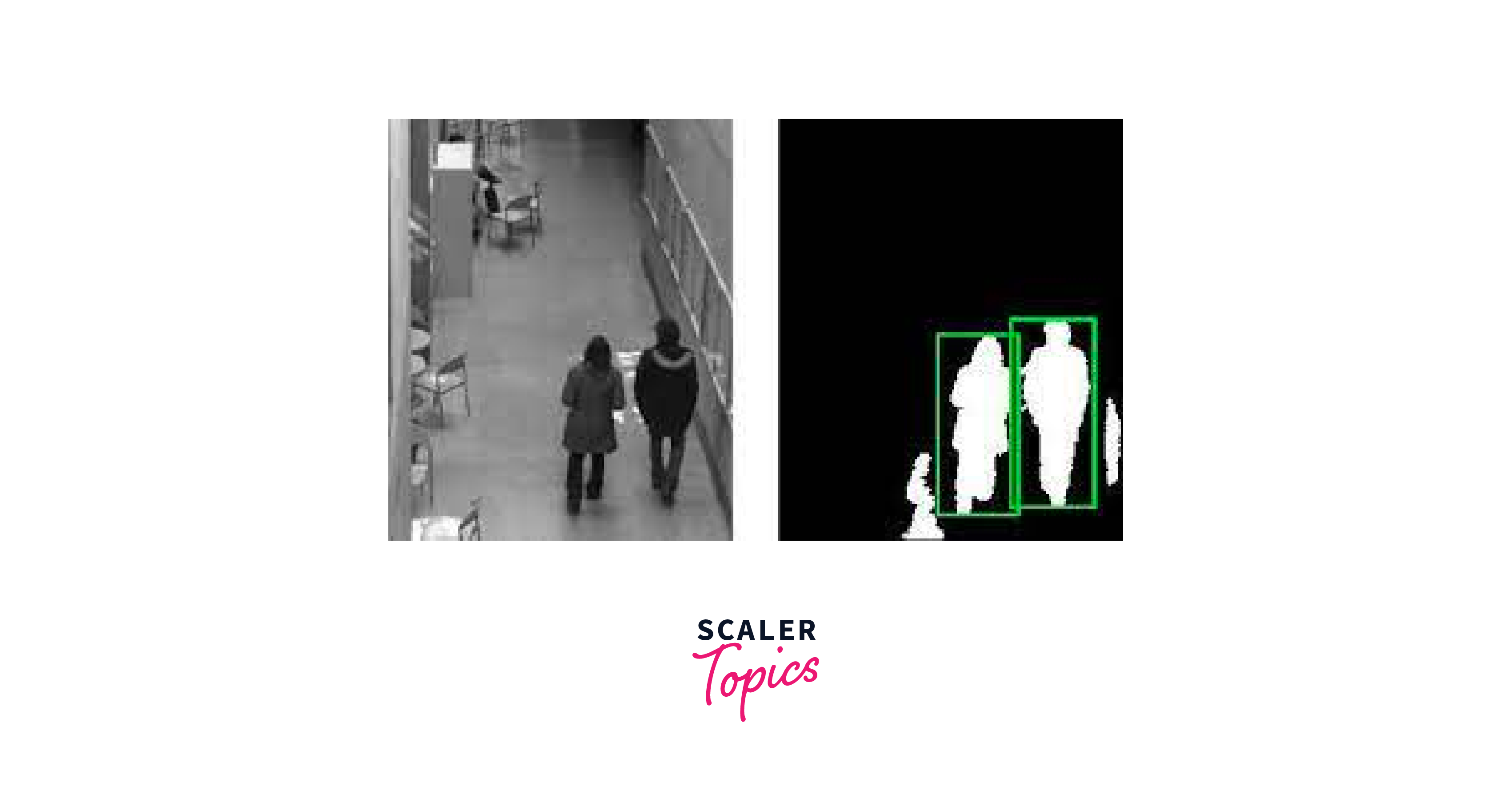


Blob Detection using LoG, DoG, and DoH

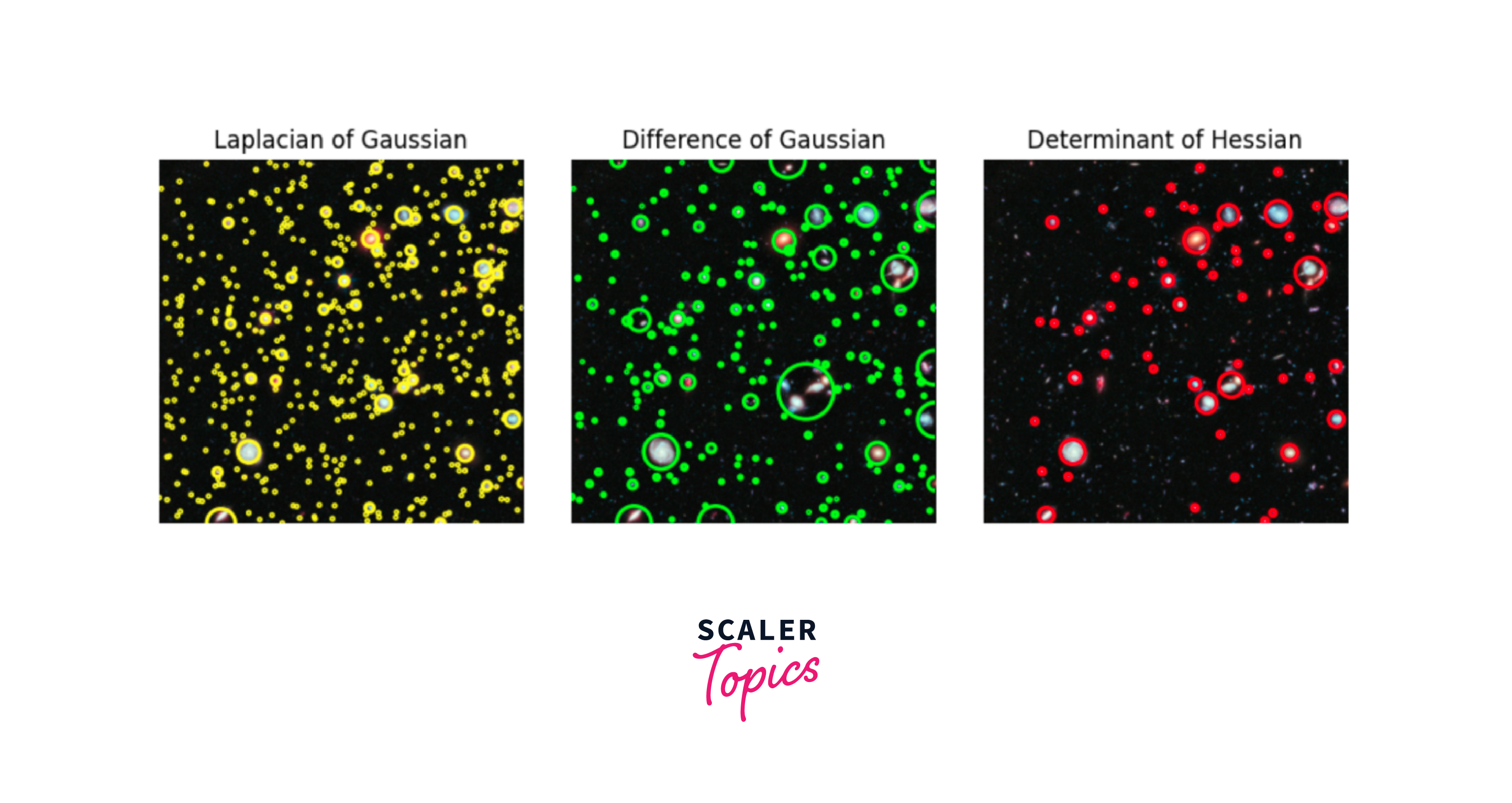

Blob detection identifies regions of an image that differ from their surroundings in properties such as brightness, color, or texture. LoG (Laplacian of Gaussian), DoG (Difference of Gaussian), and DoH (Determinant of Hessian) are commonly used methods for blob detection. Each method filters the image at one or more scales so that blob-like regions produce a strong response, and then extracts the blobs by locating the extrema of that response, typically after thresholding. These methods are useful when the foreground objects differ in texture or color from the background.

Here's some code to perform blob detection using LoG (Laplacian of Gaussian), DoG (Difference of Gaussian), and DoH (Determinant of Hessian) filters:


With these strategies covered, the next section walks through an implementation that uses OpenCV's SimpleBlobDetector.

Implementing Blob Detection with OpenCV

Now, let us implement blob detection with OpenCV step by step.

Step 1: Import necessary libraries:

The cv2 module provides access to various OpenCV functions, while the numpy module is used for array manipulation.

Read an Image using OpenCV imread() Function

The imread() function reads the input image file and returns it as a NumPy array. The second argument, cv2.IMREAD_GRAYSCALE, specifies that the image should be read in grayscale mode. If the file cannot be read, imread() returns None instead of raising an error.

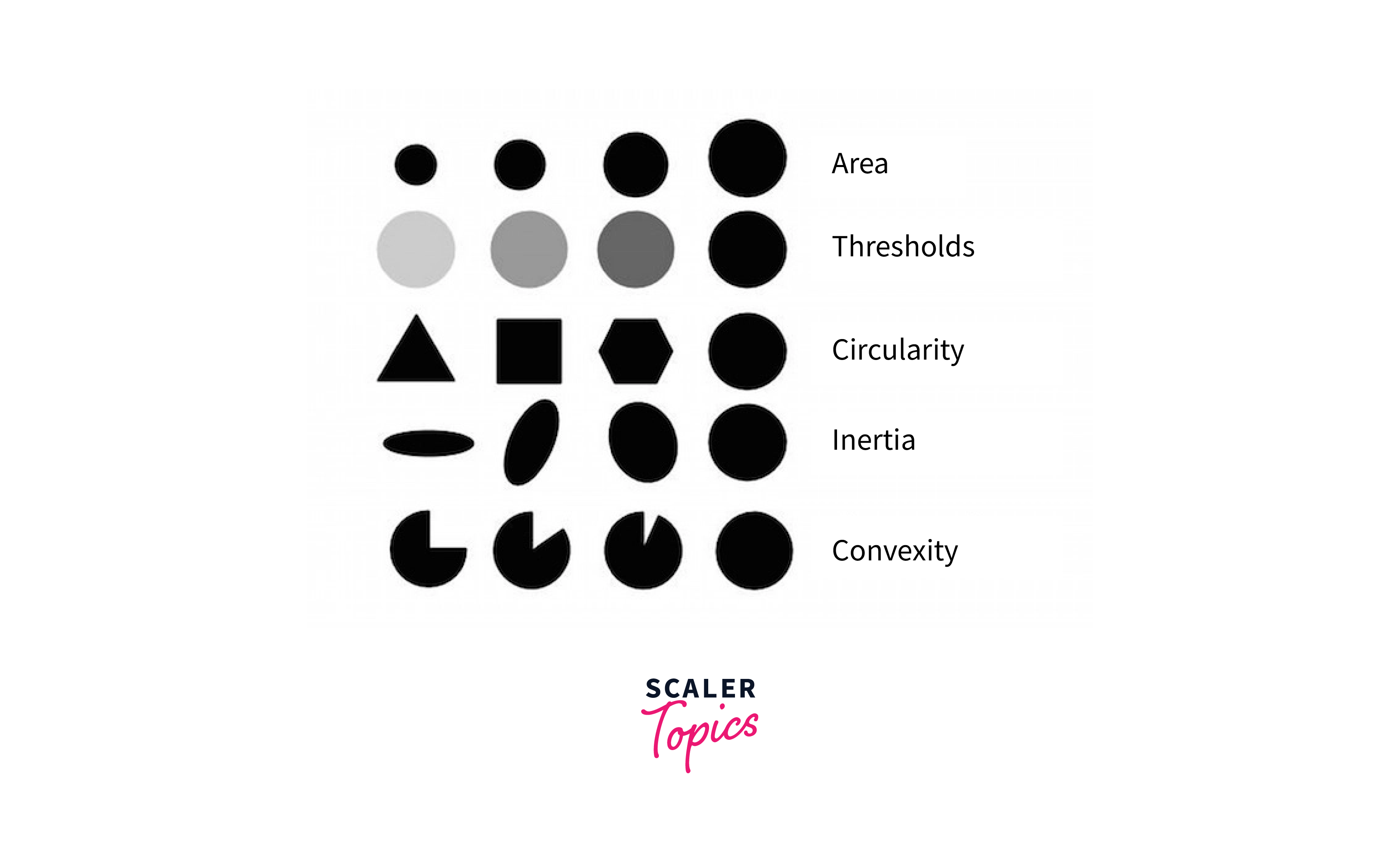

Create or Set Up the Simple Blob Detector

We first create a SimpleBlobDetector_Params object to set up the detector parameters. We then enable the filter for area, and set the minimum area to detect blobs as 100. We disable the filters for circularity, convexity, and inertia. Finally, we create a detector object using the specified parameters.

Input Image in the Created Detector

The detect() function takes the input grayscale image as its argument and detects blobs using the detector object created in the previous step. It returns a sequence of KeyPoint objects (a list or a tuple, depending on the OpenCV version), where each KeyPoint stores the position (pt) and diameter (size) of a detected blob.

Obtain Key Points on the Image

The drawKeypoints() function is used to draw circles around the detected blobs on the input image.

We pass the input image, the detected keypoints, an empty array, a red color tuple (0, 0, 255), and the flag cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS. The flag ensures that the size of the circle corresponds to the size of the detected blob.

Draw Shapes on the Key Points Found on the Image

Finally, we display the output image using the cv2.imshow() function, with the window title 'Blob Detection'. We wait for a key press using cv2.waitKey(0) and destroy all open windows using cv2.destroyAllWindows().

Putting all the steps together, here's the complete code for performing Blob detection in OpenCV:

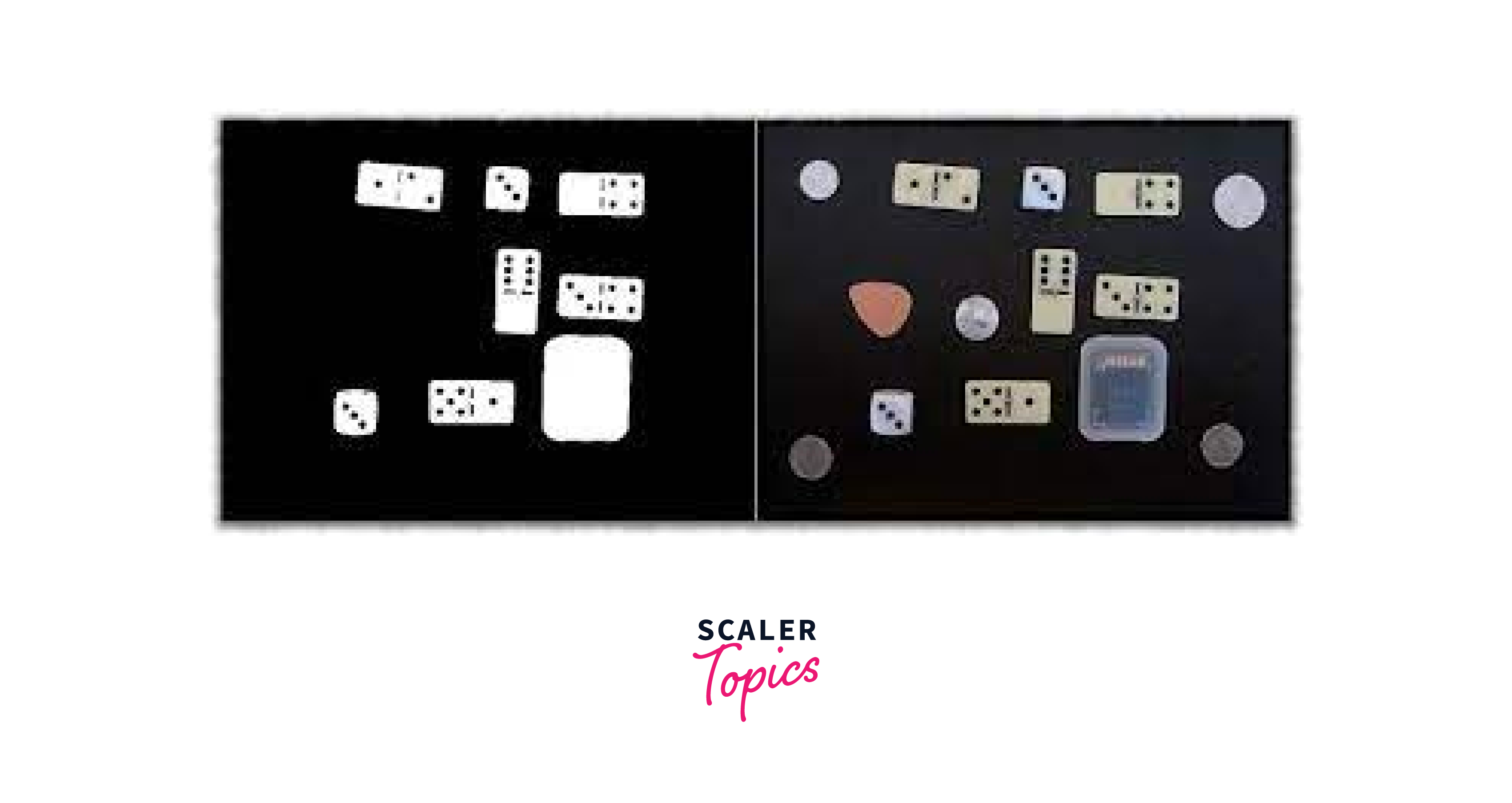


Conclusion

- In conclusion, blob detection in OpenCV is a fundamental technique in computer vision that identifies regions of an image that differ from their surroundings in properties such as intensity, color, or texture.

- It is used in various applications such as object detection, tracking, and segmentation.

- OpenCV provides a ready-made SimpleBlobDetector, along with the filtering, background subtraction, and contour functions needed to implement other techniques such as LoG, DoG, and DoH.

- By detecting and visualizing blobs, we can gain insights into the structure and composition of an image.

- Overall, blob detection is a powerful tool in computer vision and has numerous applications in various fields such as robotics, medical imaging, and autonomous driving.