Building a Neural Network

Overview

To build a neural network, you must identify the task you want the model to perform and collect and preprocess the data used for training. You will then need to design the architecture of the network, including the number of layers and neurons in each layer, and choose an activation function for each layer. Once the network is set up, you must initialize the weights and biases and train the model using an optimization algorithm such as stochastic gradient descent. After training, you can evaluate the network's performance on a test set and adjust as needed. Once the model performs to your satisfaction, you can use it to make predictions on new, unseen data.

Introduction

To build a neural network, you must determine the task you want the network to perform and collect and preprocess the data used for training. You then design the architecture of the network, including the number of layers and neurons in each layer, and choose an activation function for each layer. Next, you initialize the weights and biases and train the network using an optimization algorithm. After training, you evaluate the network's performance on a test set and adjust as needed. Once the model performs well, you can use it to make predictions on new data.

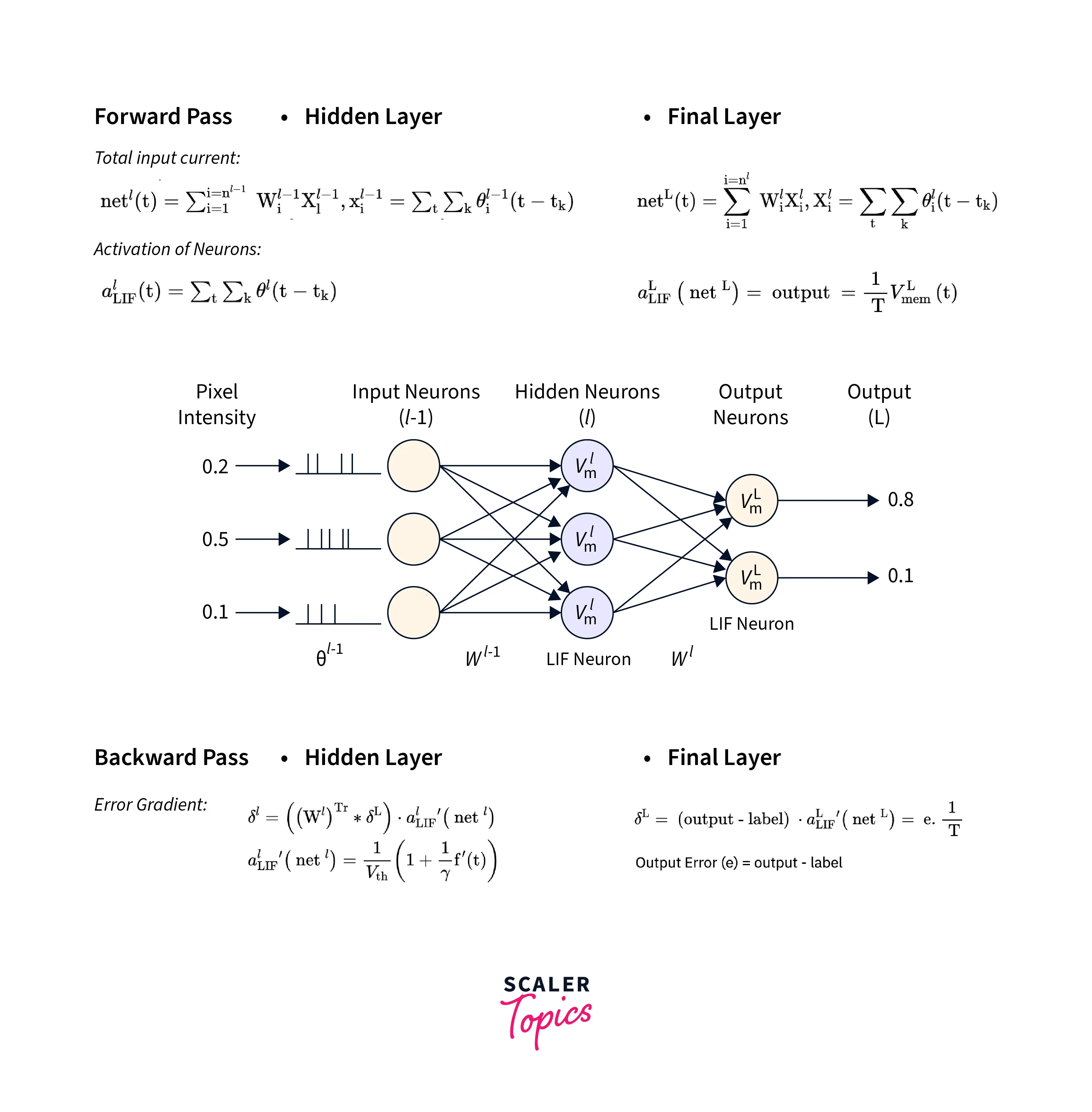

Neural Networks: Main Concepts

- Neurons: A neuron is the fundamental unit of a neural network. It receives inputs, performs a computation, and produces an output. Mathematically, it computes a weighted sum of its inputs, adds a bias, and passes the result through an activation function.

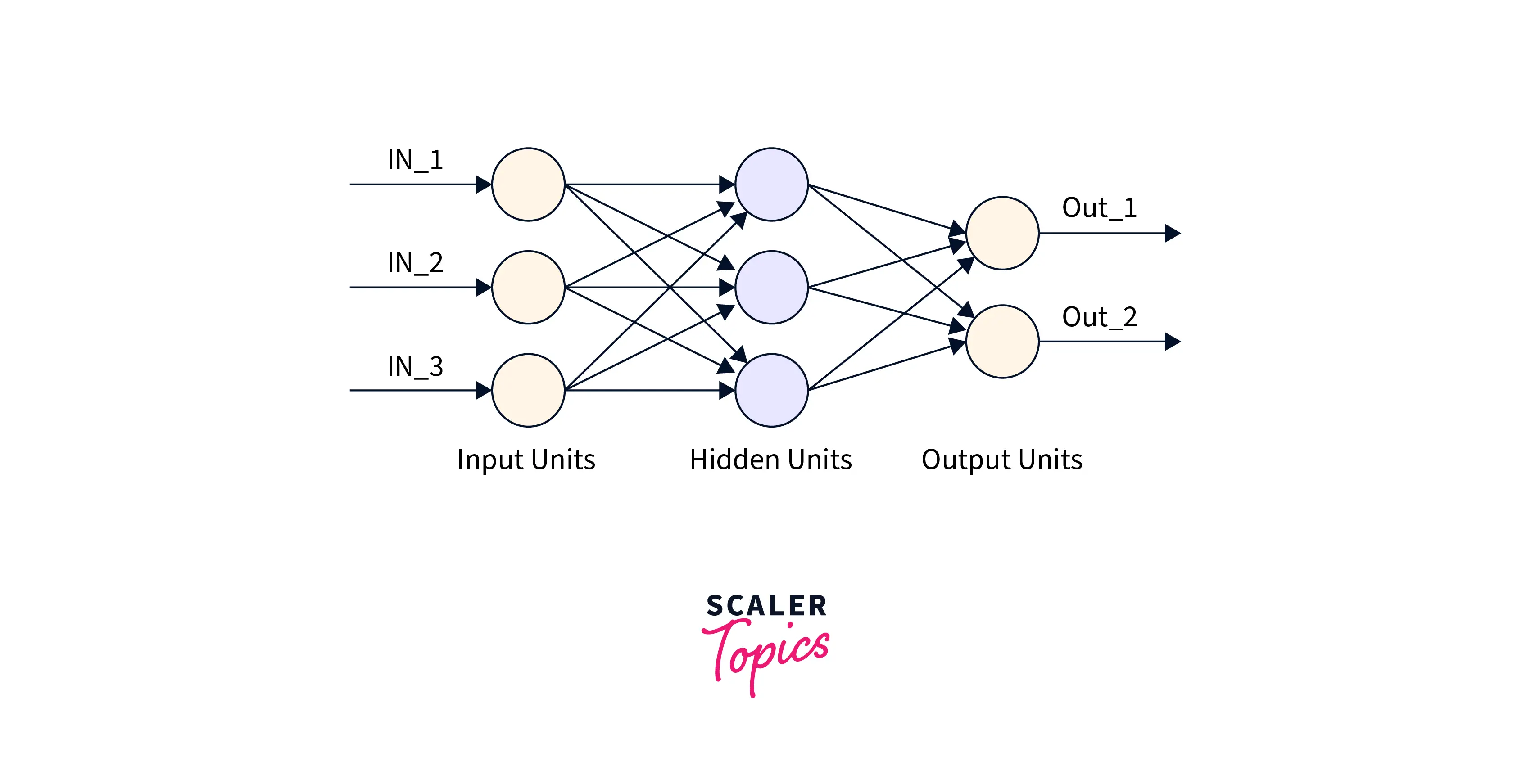

- Layers: Layers are the ordered groups of neurons in a network: the input layer comes first, hidden layers sit in the middle, and the output layer comes last. Each layer performs a computation on its input and passes the result to the next layer. The number of layers and the number of neurons in each layer are adjustable to optimize the network's performance.

- Weights and biases: Weights and biases are the parameters that control the computations performed by the neurons in a neural network. Weights scale the influence of each input on a neuron, and the bias shifts the neuron's weighted sum before the activation function is applied. Both are learned during training to optimize the network's performance.

- Activation function: Activation functions are mathematical functions used in artificial neural networks to determine the output of a neuron given its inputs, allowing the network to learn and model complex relationships between inputs and outputs. Examples include sigmoid, ReLU, and tanh.

- Training: Training a neural network involves adjusting the weights and biases to minimize the error between the predicted and actual outputs on a training set. Backpropagation computes how the error changes with each parameter, and an optimization algorithm such as gradient descent uses those gradients to update the parameters. The process is repeated until the error stops improving or falls below a chosen threshold. The trained network is then evaluated on a held-out test set and can be used to make predictions on new data.

- Overfitting: Overfitting occurs when a neural network model becomes too complex and fits noise in the training data, leading to poor performance on unseen data. Ways to prevent overfitting include regularization techniques, early stopping, dropout, using a simpler architecture, or using more data for training.
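
The three activation functions named above can be written in a few lines. This is a minimal sketch using NumPy; the function names and the sample values in `z` are chosen here for illustration only.

```python
import numpy as np

def sigmoid(z):
    """Squash any real value into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    """Pass positive values through unchanged and clip negatives to 0."""
    return np.maximum(0.0, z)

def tanh(z):
    """Squash any real value into the range (-1, 1)."""
    return np.tanh(z)

# Arbitrary example values standing in for a neuron's weighted sums.
z = np.array([-2.0, 0.0, 2.0])

print("sigmoid:", sigmoid(z))
print("relu:   ", relu(z))
print("tanh:   ", tanh(z))
```

All three are non-linear, which is what lets a stack of layers model relationships that a purely linear model cannot.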

The Process to Train a Neural Network

- Collect and preprocess the training data: This may involve cleaning and formatting the data, as well as splitting it into training, validation, and test sets.

- Define the neural network's architecture: This includes deciding on the number of layers and the number of neurons in each layer and choosing the activation function for each layer.

- Initialize the weights and biases of the neural network: These parameters will be adjusted during training.

- Feed the training data through the network: This involves presenting the training data to the network and using an optimization algorithm such as stochastic gradient descent to adjust the weights and biases to minimize the error between the predicted output and the true output.

- Evaluate the performance of the trained network on the validation set: This helps ensure that the network is not overfitting the training data.

- Fine-tune the hyperparameters of the network: This may include adjusting the learning rate, the number of epochs, and the batch size.

- Test the performance of the trained network on the test set: This provides an estimate of the generalization error of the network.

- Use the trained network to make predictions on new, unseen data.
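
The steps above can be sketched end to end on a toy problem. The example below is an illustration under stated assumptions, not a recommended setup: the data is synthetic (two made-up Gaussian clusters), the "network" is a single sigmoid neuron, and the helper names (`predict_proba`, `accuracy`, `train`) and hyperparameter values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Step 1: collect and preprocess. Synthetic two-class data, standardized,
# shuffled, and split into training, validation, and test sets.
n = 300
X = np.vstack([rng.normal(-2.0, 1.0, (n // 2, 2)),
               rng.normal(2.0, 1.0, (n // 2, 2))])
y = np.concatenate([np.zeros(n // 2), np.ones(n // 2)])
X = (X - X.mean(axis=0)) / X.std(axis=0)
order = rng.permutation(n)
X, y = X[order], y[order]
X_train, y_train = X[:180], y[:180]
X_val, y_val = X[180:240], y[180:240]
X_test, y_test = X[240:], y[240:]

def predict_proba(X, w, b):
    """Step 2: the architecture is one neuron with a sigmoid activation."""
    return 1.0 / (1.0 + np.exp(-(X @ w + b)))

def accuracy(X, y, w, b):
    return float(np.mean((predict_proba(X, w, b) >= 0.5) == y))

def train(X, y, lr, epochs=20, batch_size=16):
    # Step 3: initialize the weights and bias (zeros are fine for one neuron).
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        idx = rng.permutation(len(X))
        for start in range(0, len(X), batch_size):
            batch = idx[start:start + batch_size]
            # Step 4: one mini-batch SGD update on the cross-entropy loss.
            error = predict_proba(X[batch], w, b) - y[batch]
            w -= lr * X[batch].T @ error / len(batch)
            b -= lr * error.mean()
    return w, b

# Steps 5 and 6: evaluate on the validation set and tune the learning rate.
best_lr, best_val, w, b = None, -1.0, None, None
for lr in (0.01, 0.1):
    cand_w, cand_b = train(X_train, y_train, lr)
    val_acc = accuracy(X_val, y_val, cand_w, cand_b)
    if val_acc > best_val:
        best_lr, best_val, w, b = lr, val_acc, cand_w, cand_b

# Step 7: estimate generalization on the untouched test set.
test_acc = accuracy(X_test, y_test, w, b)
print(f"best lr={best_lr}, validation={best_val:.2f}, test={test_acc:.2f}")

# Step 8: predict for a new point (already in standardized units).
new_point = np.array([[1.5, 1.5]])
print("P(class 1):", predict_proba(new_point, w, b))
```

The test set is touched only once, after the learning rate has been chosen on the validation set, so the reported test accuracy is not biased by the tuning step.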

Vectors and Weights

A neural network represents the input and output of each layer as vectors. At the input layer, the vector holds the features of a single example from the training set. The weights are adjustable parameters that determine the strength of the connections between neurons; during training they are adjusted to reduce the error between the predicted and actual output. Each layer multiplies its input vector by its weights and adds a bias, then passes the result through an activation function, and the activated output becomes the input to the next layer.
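
As a rough sketch of that computation, the snippet below pushes one input vector through one layer of two neurons. The numbers, the bias vector `b`, and the choice of ReLU as the activation are assumptions made for illustration.

```python
import numpy as np

x = np.array([0.5, -1.0, 2.0])        # one example with 3 features

W = np.array([[0.2, -0.4, 0.1],       # connection weights of neuron 1
              [0.7, 0.3, -0.5]])      # connection weights of neuron 2
b = np.array([0.1, -0.2])             # one bias per neuron (assumed)

z = W @ x + b                         # weighted sums, one per neuron
a = np.maximum(0.0, z)                # ReLU activation

print("weighted sums:", z)
print("layer output: ", a)
```

Each row of `W` holds the connection strengths for one neuron, so a whole layer reduces to a single matrix-vector product.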

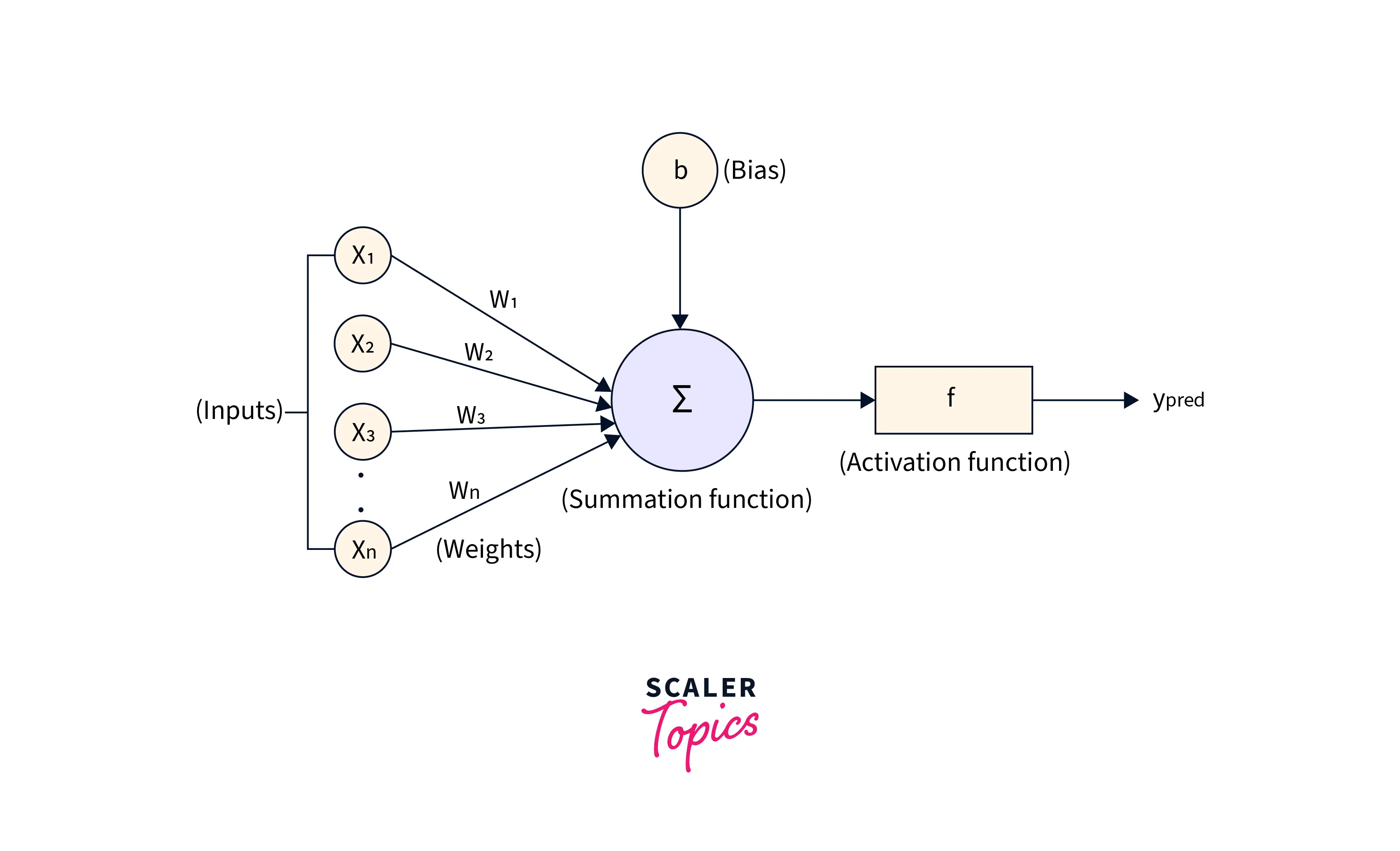

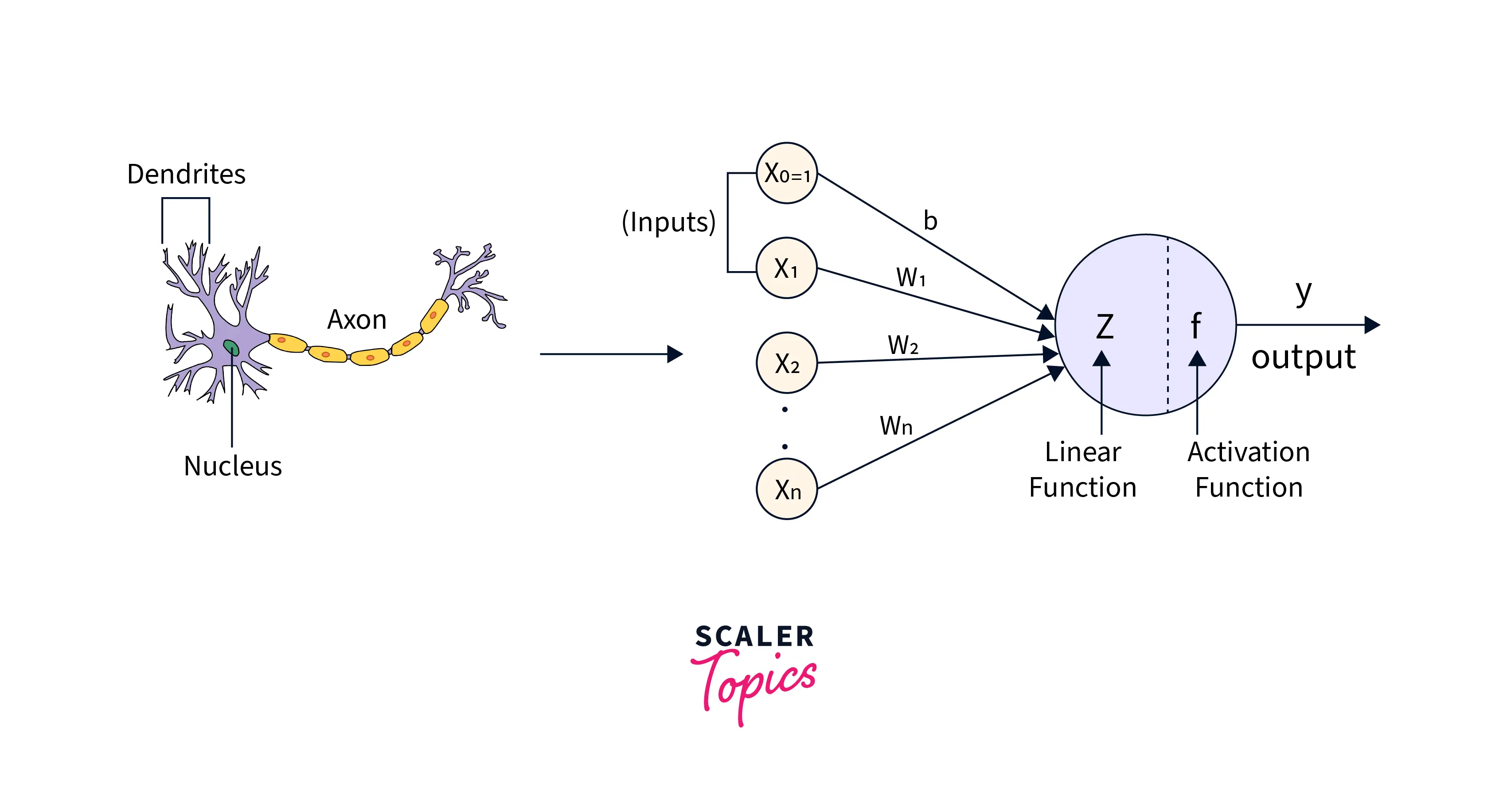

Schematic of a Neuron

A neuron in a deep neural network can be represented schematically as follows:

Inputs: The neuron receives input data, typically a feature vector or the outputs of the previous layer.

Weights: Each input is multiplied by a learnable weight. The weights are typically initialized randomly and are adjusted during training to optimize the network's performance.

Activation function: The activation function is applied to the weighted sum of the inputs plus the bias to introduce non-linearity into the network. Common activation functions include ReLU (Rectified Linear Unit) and sigmoid.

Output: The result of the activation function is the neuron's output, which is passed on to the next layer in the network.

Bias: The bias is a learnable parameter added to the weighted sum. It shifts the point at which the neuron activates, giving the model more freedom and making it more flexible.

This is a simplified representation; in practice, a neural network can have multiple hidden layers with multiple neurons in each layer. Training a deep neural network adjusts the weights and biases of its neurons to minimize the difference between the predicted and actual output.
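
A single neuron with these parts can be sketched as a short function. The name `neuron`, the sigmoid activation, and the example values are assumptions for illustration.

```python
import numpy as np

def neuron(x, w, b):
    """Weighted sum of the inputs plus the bias, passed through a sigmoid."""
    z = np.dot(w, x) + b
    return 1.0 / (1.0 + np.exp(-z))

x = np.array([1.0, 2.0])       # inputs
w = np.array([0.5, -0.25])     # one weight per input

output = neuron(x, w, b=0.0)
print(output)                  # 0.5, because the weighted sum is exactly 0

# Raising the bias shifts the weighted sum and pushes the output above 0.5.
shifted = neuron(x, w, b=1.0)
print(shifted)
```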

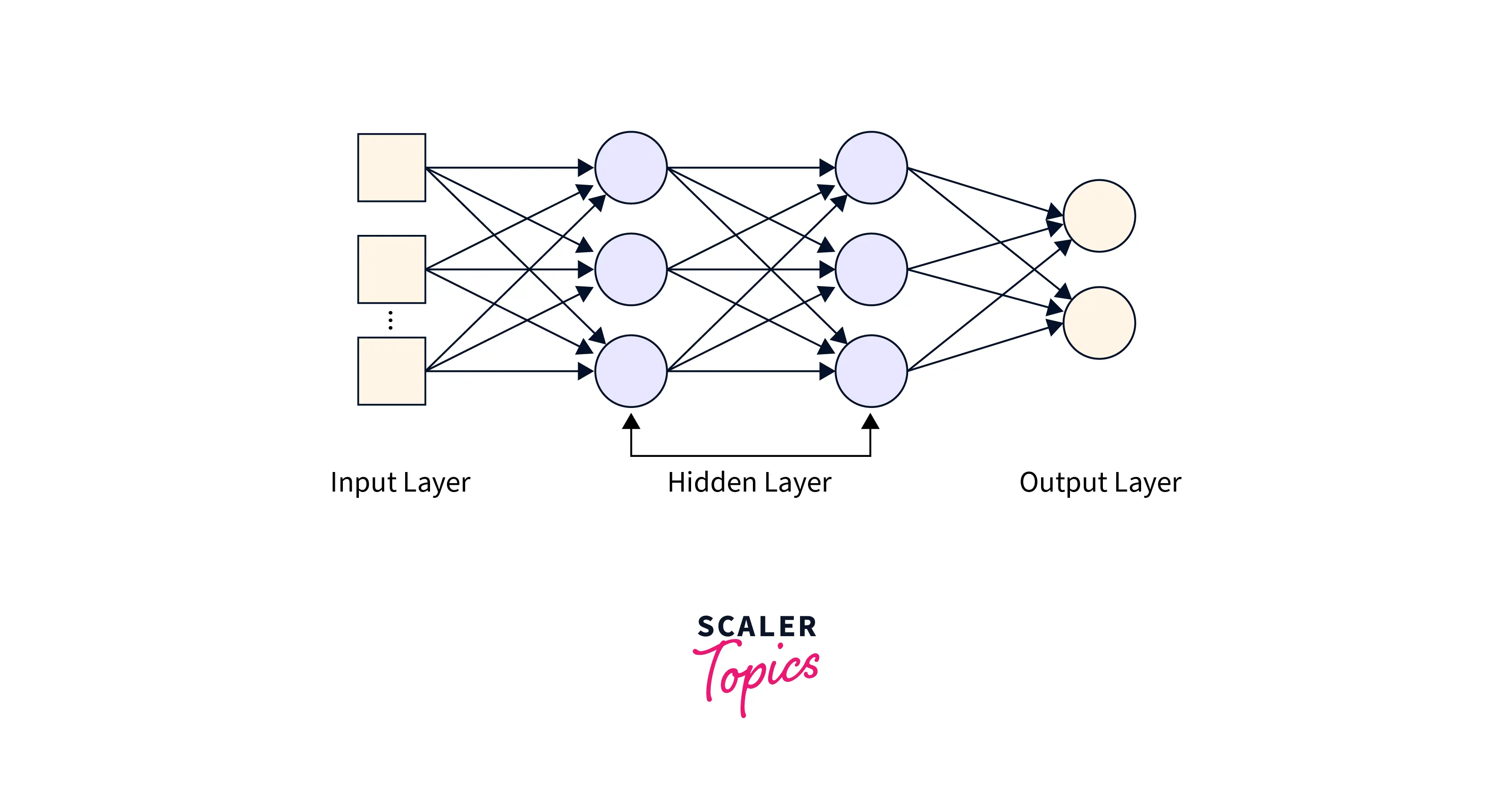

Schematic of a Neural Network

In this schematic, the input layer receives the raw input data, which is then processed by the hidden layer. Finally, the output of the hidden layer is passed through an activation function and then transmitted to the output layer, which produces the network's final output.

The weights and biases of the connections between the layers are adjusted during training to minimize the error between the predicted output and the true output.

This is a very simplified schematic of a neural network, and in practice, neural networks can have many more layers and a much larger number of neurons. However, this basic structure applies to many neural networks.
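
The flow described in this schematic can be sketched as a forward pass through one hidden layer and one output layer. The layer sizes, the random initialization, and the choice of tanh and sigmoid are assumptions for illustration; the weights here are untrained.

```python
import numpy as np

rng = np.random.default_rng(42)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Assumed sizes: 4 input features, 5 hidden neurons, 1 output neuron.
W1 = rng.normal(0.0, 0.1, size=(4, 5))   # input -> hidden weights
b1 = np.zeros(5)                         # hidden biases
W2 = rng.normal(0.0, 0.1, size=(5, 1))   # hidden -> output weights
b2 = np.zeros(1)                         # output bias

def forward(X):
    """Input layer -> hidden layer (tanh) -> output layer (sigmoid)."""
    hidden = np.tanh(X @ W1 + b1)
    return sigmoid(hidden @ W2 + b2)

X = rng.normal(size=(3, 4))              # a batch of 3 examples
out = forward(X)
print(out.shape)                         # (3, 1): one output per example
print(out)
```

Adding more hidden layers means repeating the same pattern: multiply by a weight matrix, add a bias, apply an activation.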

Types of Perceptron Models

The perceptron is a neural network model first proposed in the late 1950s. In its original form it consists of a single layer of artificial neurons and is used for binary classification tasks. Perceptrons are considered to be the building blocks of more complex neural network models. On its own, however, a single-layer perceptron is rarely used in practice because it can only learn linear decision boundaries, which limits its ability to learn more complex relationships. Perceptron models, and the architectures that build on the same kind of artificial neuron, include:

- Single-layer perceptron

- Multi-layer perceptron (MLP)

- Convolutional neural network (CNN)

- Recurrent neural network (RNN)

Single-layer Perceptron Model

A single-layer perceptron can be implemented in Python for binary classification tasks where the output is either 0 or 1. It consists of a single layer of neurons with inputs, weights, and an activation function that produces the final output. The weights and biases of the connections between the input and output layers are adjusted during training to minimize the error between the predicted and true output. However, single-layer perceptrons are limited to linearly separable problems and cannot learn more complex relationships between input and output.
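
A minimal sketch of a single-layer perceptron, trained with the classic perceptron learning rule on the logical AND function, is shown below. The function names, the learning rate, and the epoch limit are assumptions chosen for illustration; AND is used because it is linearly separable.

```python
import numpy as np

# Truth table for logical AND: the output is 1 only when both inputs are 1.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 0, 0, 1])

def predict(X, w, b):
    """Step activation: output 1 when the weighted sum is positive."""
    return (X @ w + b > 0).astype(int)

def train_perceptron(X, y, lr=1.0, max_epochs=20):
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for xi, target in zip(X, y):
            error = target - predict(xi, w, b)
            if error != 0:
                # Move the decision boundary toward the misclassified point.
                w += lr * error * xi
                b += lr * error
                mistakes += 1
        if mistakes == 0:      # a full pass with no errors: training is done
            break
    return w, b

w, b = train_perceptron(X, y)
print("weights:", w, "bias:", b)
print("predictions:", predict(X, w, b))   # [0 0 0 1]
```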

Advantages and Disadvantages of Single Layer Perceptron Model

Advantages:

- Simple and easy to implement

- Can be used as a starting point for understanding neural networks

Disadvantages:

- Limited to linear classification tasks

- Unable to learn more complex relationships between the input and output

- May not perform as well as more sophisticated neural network architectures on more complex tasks

Multi-Layered Perceptron Model

Python can be used to create and train a multi-layered perceptron (MLP) model. These models are more powerful and versatile than single-layer perceptrons, making them well-suited for complex data sets and tasks. However, because of their added complexity, they may require more computational resources to train. Python libraries such as TensorFlow, Keras, and PyTorch provide tools for building and training MLPs in a user-friendly way. With these libraries, it is possible to create and train MLPs with various architectures and hyperparameters and to tune them for good performance on a given task.

Advantages and Disadvantages of the Multi-Layered Perceptron Model

Advantages:

- Able to learn complex relationships between the input and output

- Versatile and can be used for a wide range of tasks

- Can achieve good performance on many tasks

Disadvantages:

- More complex than single-layer perceptrons

- May require more computational resources to train

- Can be prone to overfitting if not properly regularized
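
To illustrate the first advantage, the sketch below shows a two-layer perceptron computing XOR, a function that is not linearly separable. The weights are hand-picked for illustration rather than learned; in practice a library such as Keras or PyTorch would learn them from data.

```python
import numpy as np

def step(z):
    """Step activation: 1 where the weighted sum is positive, else 0."""
    return (z > 0).astype(int)

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])

# Hidden layer with two neurons (hand-picked weights, not trained):
# column 0 behaves like OR, column 1 behaves like AND.
W_hidden = np.array([[1.0, 1.0],
                     [1.0, 1.0]])
b_hidden = np.array([-0.5, -1.5])

# Output neuron fires when OR is on and AND is off, which is XOR.
W_out = np.array([1.0, -1.0])
b_out = -0.5

hidden = step(X @ W_hidden + b_hidden)
output = step(hidden @ W_out + b_out)
print(output)   # [0 1 1 0]
```

The hidden layer re-describes the inputs in a form that a single output neuron can separate with one linear boundary.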

Characteristics of the Perceptron Model

- The perceptron model can be implemented in Python for binary classification tasks, where the output is 0 or 1.

- It is composed of a single layer of neurons, and the model's output is determined by the weighted sum of the inputs passed through an activation function.

- The weights and biases of the connections between the input and output layers are adjusted during training to minimize the error between the predicted output and the true output.

- The perceptron model is limited to linear classification tasks and cannot learn more complex relationships between the input and output.

- The training process for the perceptron model is known as the perceptron learning algorithm, which can be implemented in Python.

Limitations of the Perceptron Model

- The perceptron model is limited to linear classification tasks. Therefore, it cannot learn more complex relationships between the input and output and may not perform as well as more sophisticated neural network architectures on more complex tasks.

- The perceptron learning algorithm is guaranteed to converge only when the data is linearly separable. If the data is not linearly separable, the weights keep changing and training never settles on a solution that classifies every example correctly.

- The perceptron model does not have a built-in mechanism for handling missing or corrupted input data. This can lead to poor performance if the training data is incomplete or contains errors.

- The perceptron model is sensitive to the scale of the input data. Therefore, the model may perform poorly if the input data is not properly normalized.
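
The linear limitation can be seen on XOR, which is not linearly separable. The sketch below applies the perceptron learning rule with a step activation; the epoch count and variable names are assumptions for illustration. No matter how long it runs, every pass over the data contains at least one misclassification.

```python
import numpy as np

# Truth table for XOR: the output is 1 when exactly one input is 1.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y_xor = np.array([0, 1, 1, 0])

w, b = np.zeros(2), 0.0
mistakes_per_epoch = []

for epoch in range(50):
    mistakes = 0
    for xi, target in zip(X, y_xor):
        pred = int(xi @ w + b > 0)          # step activation
        if pred != target:
            w += (target - pred) * xi       # perceptron update rule
            b += (target - pred)
            mistakes += 1
    mistakes_per_epoch.append(mistakes)

# An epoch with zero mistakes would mean a straight line separates XOR,
# which is impossible, so the minimum is always at least 1.
print("fewest mistakes in any epoch:", min(mistakes_per_epoch))
```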

Conclusion

- When working with neural networks in Python, it is important to follow the general process: collect and preprocess the training data, define the architecture, initialize the weights and biases, train the network using an optimization algorithm, and evaluate its performance on the validation set.

- Then fine-tune the hyperparameters, test the performance on the test set, and use the trained network to make predictions on new data.

- There are many architectures to choose from when working with neural networks in Python, such as single-layer perceptrons, multi-layered perceptrons, convolutional neural networks, and recurrent neural networks.

- It is important to choose the appropriate architecture and hyperparameters for the task at hand and to ensure the network is trained and validated properly to avoid overfitting.