Canary Deployment in Kubernetes

Overview

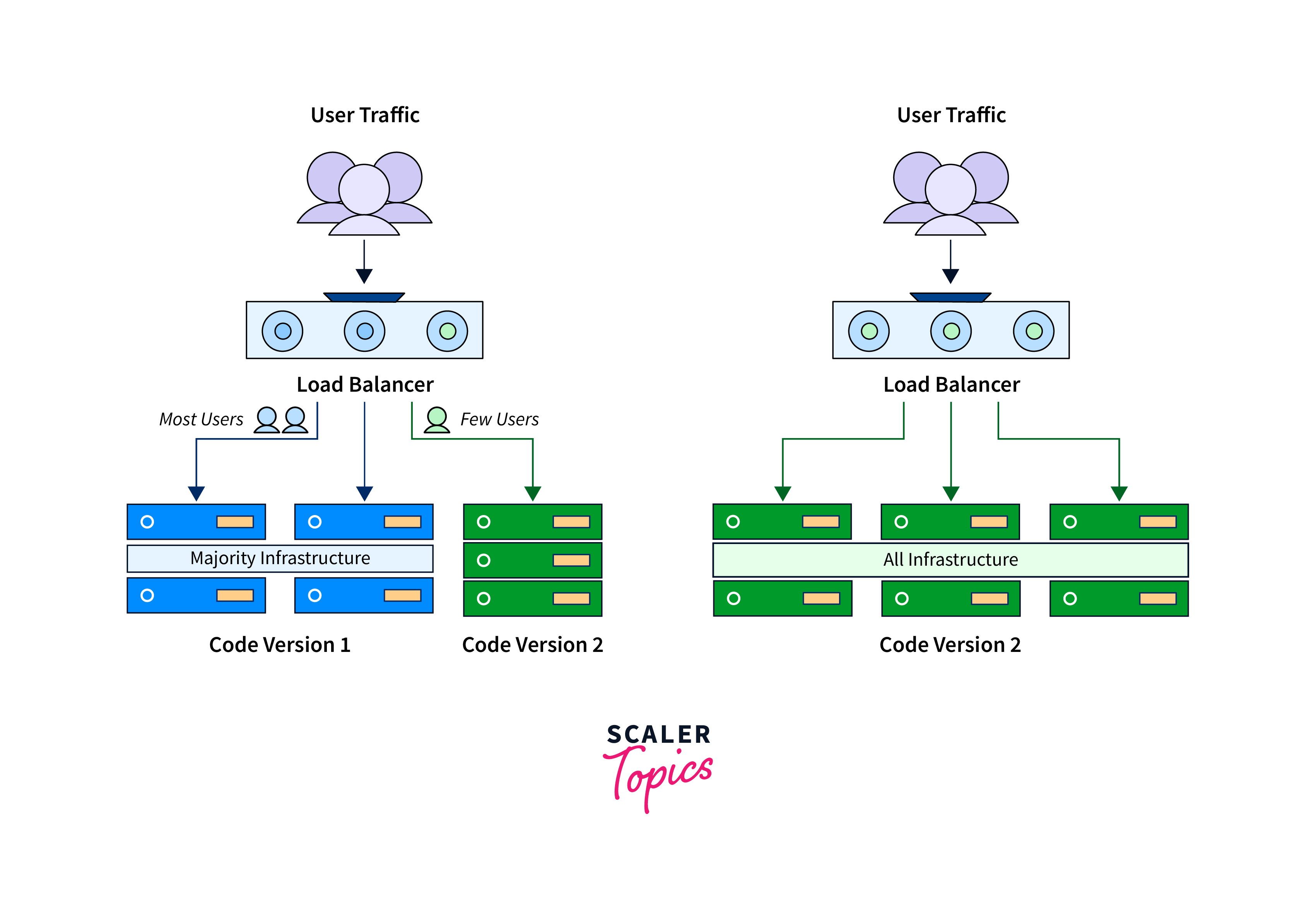

Canary deployment in Kubernetes is a strategy that allows you to roll out new software versions or updates gradually, minimizing the impact of potential issues. It involves routing a small percentage of user traffic to the new version while keeping the majority on the stable version. This approach helps to test the new release in a real production environment before fully deploying it.

Prerequisites

To implement canary deployments in Kubernetes, you need the following:

- Kubernetes cluster setup.

- Application containerization.

- CI/CD pipeline for automation.

- Application monitoring and logging.

- Service mesh or ingress controller for traffic management.

- Metrics and alerting for monitoring deployment health.

- Authentication and RBAC.

What is a Canary Deployment?

A Canary Deployment is a strategy used to release new software versions or updates gradually and safely by rolling them out to a small subset of users or servers before deploying them to the entire infrastructure. It allows for testing the new release in a real production environment, monitoring its behavior and performance, and minimizing the impact of potential issues by easily rolling back if necessary.

Let's consider a real-world scenario of a popular e-commerce website that wants to deploy a new version of its application using a Canary Deployment strategy.

In this scenario, the e-commerce website wants to introduce a new feature that allows users to submit product reviews. However, the development team wants to ensure that the new feature doesn't negatively impact the overall user experience or introduce any critical bugs.

To implement a Canary Deployment, the team follows these steps:

- They deploy the new version of the application to a small subset of servers or a specific region, representing only a fraction of the overall user traffic.

- This subset of servers now receives a small percentage of real user traffic while the majority of users continue to be directed to the stable version of the application.

- The team closely monitors the behavior of the new version, including performance, stability, and user feedback, to ensure it functions as intended and doesn't cause any significant issues.

- If the new version performs well and receives positive feedback, the team gradually increases the percentage of user traffic directed to the new version, expanding its reach.

- If any issues or negative feedback arise, the team can quickly detect them and roll back the deployment, ensuring minimal impact on the overall user experience.

- Once the team is confident in the new version's stability and performance, they complete the deployment by routing all user traffic to the new version.

How to Use Canary Deployment in Kubernetes?

To use Canary Deployment in Kubernetes, we need to follow these steps:

- Define Deployment Strategy: Create a Kubernetes Deployment manifest for your application, specifying the desired number of replicas and other deployment settings. You need one manifest for the stable deployment and one for the canary deployment.

- Implement Traffic Splitting: Use a service mesh like Istio or an Ingress controller to split the incoming traffic between the existing stable deployment and the new canary deployment. This can be done using weighted routing, where a percentage of traffic is directed to the canary deployment while the remaining traffic goes to the stable deployment.

- Monitor Metrics: Set up monitoring and metrics collection to track the performance, resource usage, and behavior of both the canary and stable deployments. This will help you assess the impact of the new version and make informed decisions.

- Gradually Increase Traffic: Start with a small percentage of traffic being routed to the canary deployment (e.g., 5% or 10%) and monitor its performance closely. Observe user feedback, logs, and metrics to ensure the new version is functioning correctly.

- Evaluate and Iterate: Analyze the metrics and user feedback to evaluate the performance of the canary deployment. If it meets the desired criteria, gradually increase the traffic allocation to the canary deployment (e.g., 25%, 50%) over time.

- Rollback or Promotion: Continuously monitor the canary deployment and compare its performance against the stable deployment. If any issues or negative impacts are detected, you can quickly roll back the canary deployment to the stable version. Conversely, if the canary deployment performs well, you can promote it to become the new stable version.

- Cleanup and Repeat: Once the canary deployment is successfully promoted or rolled back, you can clean up any unused resources related to the canary deployment and repeat the process for future releases or updates.

- Metrics Collection: Gather data on response time, error rates, resource usage, throughput, success rates, user experience, application-specific metrics, infrastructure health, and logs. These metrics provide feedback on both stable and canary deployments.

- Process Improvement: Utilize collected metrics to optimize the canary deployment. Adjust traffic splitting percentages, implement feature flags, and integrate user feedback. Develop automated rollback/promotion procedures and continuously learn from insights to enhance future deployments.
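The traffic-splitting step above can be sketched with an Istio VirtualService. This is a minimal illustration, not a definitive setup: it assumes Istio is installed, that clients call the stable Service from inside the mesh, and that the stable Service is named my-app-service (a hypothetical name) alongside the canary Service my-app-canary-service.

```yaml
# Weighted routing sketch: 90% of requests go to stable, 10% to canary.
# Service names and the resource name are assumptions for illustration.
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: my-app-canary-split        # hypothetical name
spec:
  hosts:
    - my-app-service               # requests addressed to the stable Service
  http:
    - route:
        - destination:
            host: my-app-service           # stable version
            port:
              number: 80
          weight: 90
        - destination:
            host: my-app-canary-service    # canary version
            port:
              number: 80
          weight: 10
```

Raising the canary share is then a matter of editing the two weight values (they must add up to 100) and re-applying the manifest.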

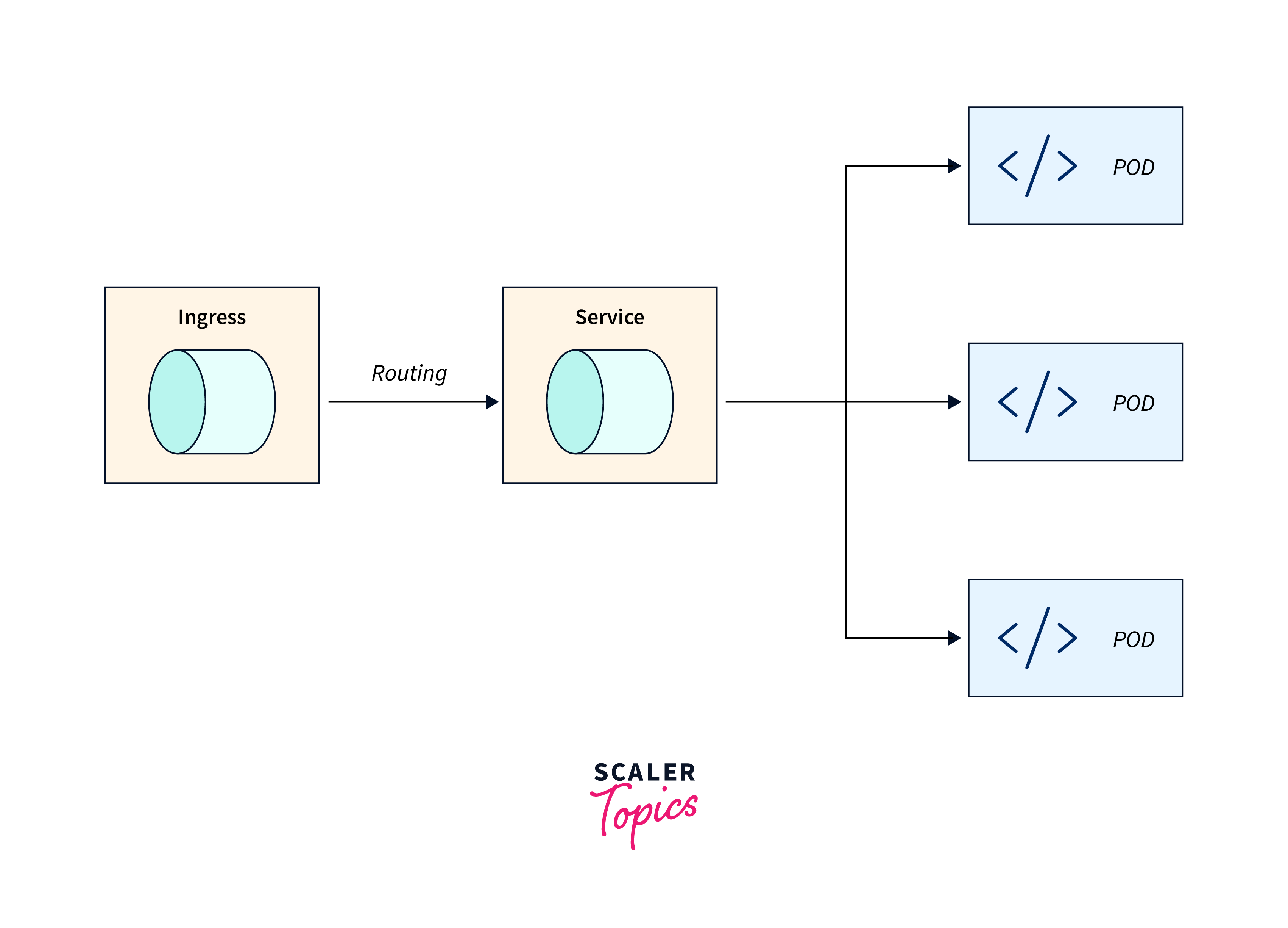

Kubernetes Request Flow

In a canary deployment, the selectors and labels used in the configuration or YAML file may differ from those used in regular deployments. Let's break down the Kubernetes Request flow in relation to canary deployments:

- Deployment: A Deployment configuration defines the desired state of the Pods and their ReplicaSet. The canary and stable versions use different labels and selectors so that they can be told apart.

- Service: Create Services that give the stable and canary Pods stable endpoints. A Service abstracts Pod addresses behind a single IP or DNS name and selects its Pods by label.

- Ingress: Configure rules in the Ingress to direct traffic to the canary or stable deployment. An Ingress lets inbound connections reach cluster Services. Together, the Service and Ingress configurations enable traffic routing and access control.

Defining Deployment

In a canary deployment, you define a Deployment object that represents the desired state of your application. This includes specifying labels and selectors to differentiate between the canary and stable versions. The Deployment configuration also includes the number of replicas for each version.

In this example, we have three replicas for the stable version of the app, with the app and version labels set to my-app and stable respectively.

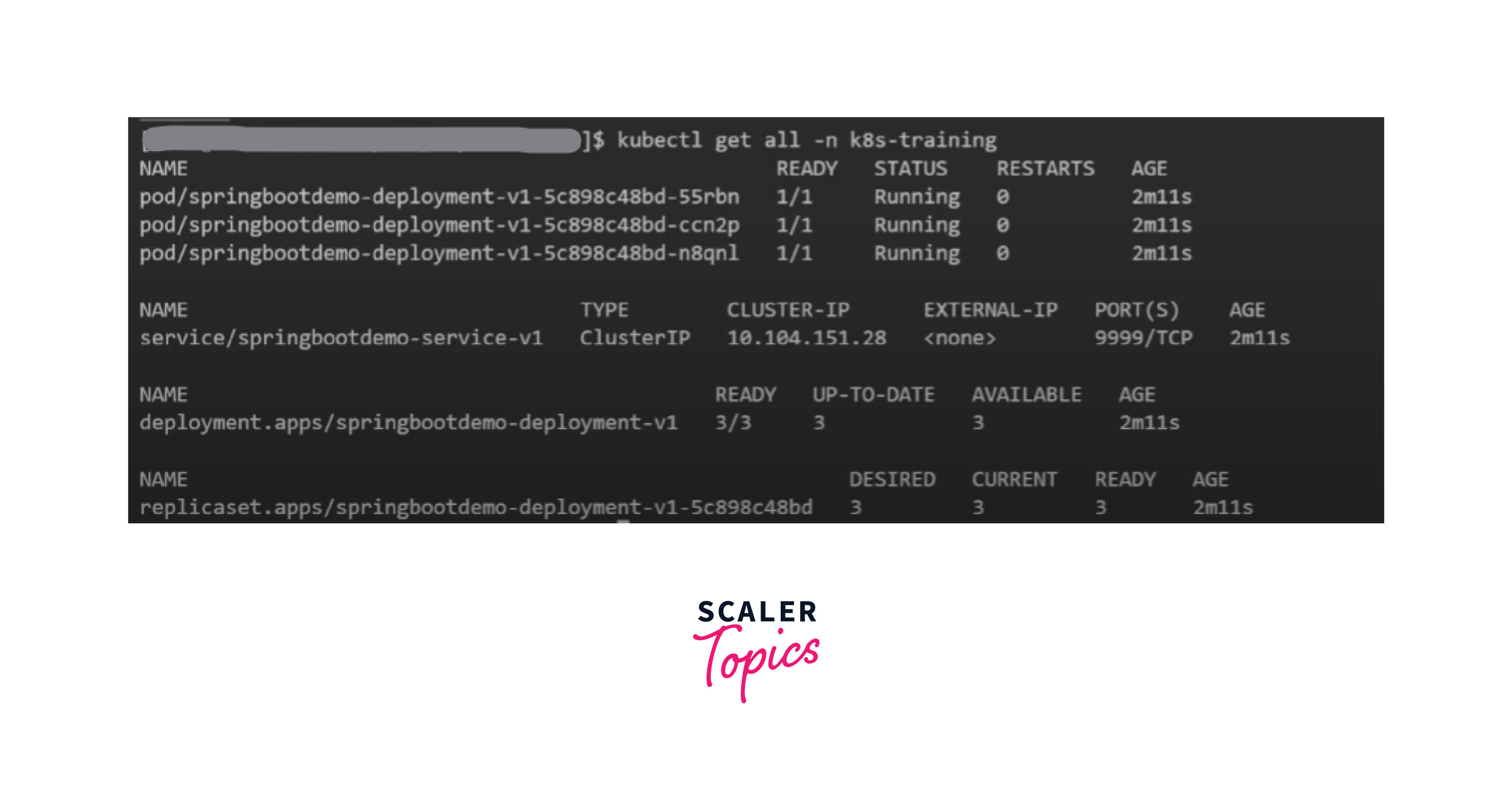

For example, here a deployment file named springboot-demo-service-v1.yaml is used. Besides the Deployment itself, the file holds related configuration such as the namespace, Secret, and ConfigMap. The Deployment is created with the kubectl apply command.
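A stable Deployment along these lines might look like the sketch below. The three replicas and the app and version labels come from the description above; the Deployment name, container name, image reference, and container port are assumptions made for illustration, and the namespace, Secret, and ConfigMap parts are left out.

```yaml
# springboot-demo-service-v1.yaml (stable version, Deployment part only)
# Apply with: kubectl apply -f springboot-demo-service-v1.yaml -n <namespace>
apiVersion: apps/v1
kind: Deployment
metadata:
  name: stable-deployment            # hypothetical name
  labels:
    app: my-app
    version: stable
spec:
  replicas: 3                        # three stable Pods
  selector:
    matchLabels:
      app: my-app
      version: stable
  template:
    metadata:
      labels:                        # must match the selector above
        app: my-app
        version: stable
    spec:
      containers:
        - name: springboot-demo-service
          image: registry.example.com/springboot-demo-service:v1   # hypothetical image
          ports:
            - containerPort: 8080    # assumed application port
```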

Defining Service

To provide access to your application, you define a Service object. The Service abstracts away the individual pod addresses and provides a single stable endpoint. A plain Service load-balances across every Pod that matches its selector, so it cannot enforce a traffic percentage by itself. In a canary deployment, the weighted split between the stable and canary Services is configured in the ingress controller or service mesh.
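A minimal sketch of the stable Service follows, assuming the stable Pods carry the labels app: my-app and version: stable. The Service name my-app-service and the target port 8080 are assumptions for illustration.

```yaml
# Stable Service sketch: one endpoint in front of the stable Pods.
apiVersion: v1
kind: Service
metadata:
  name: my-app-service       # hypothetical name
spec:
  selector:                  # only Pods with both labels receive traffic
    app: my-app
    version: stable
  ports:
    - port: 80               # port exposed by the Service
      targetPort: 8080       # assumed container port of the Pods
```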

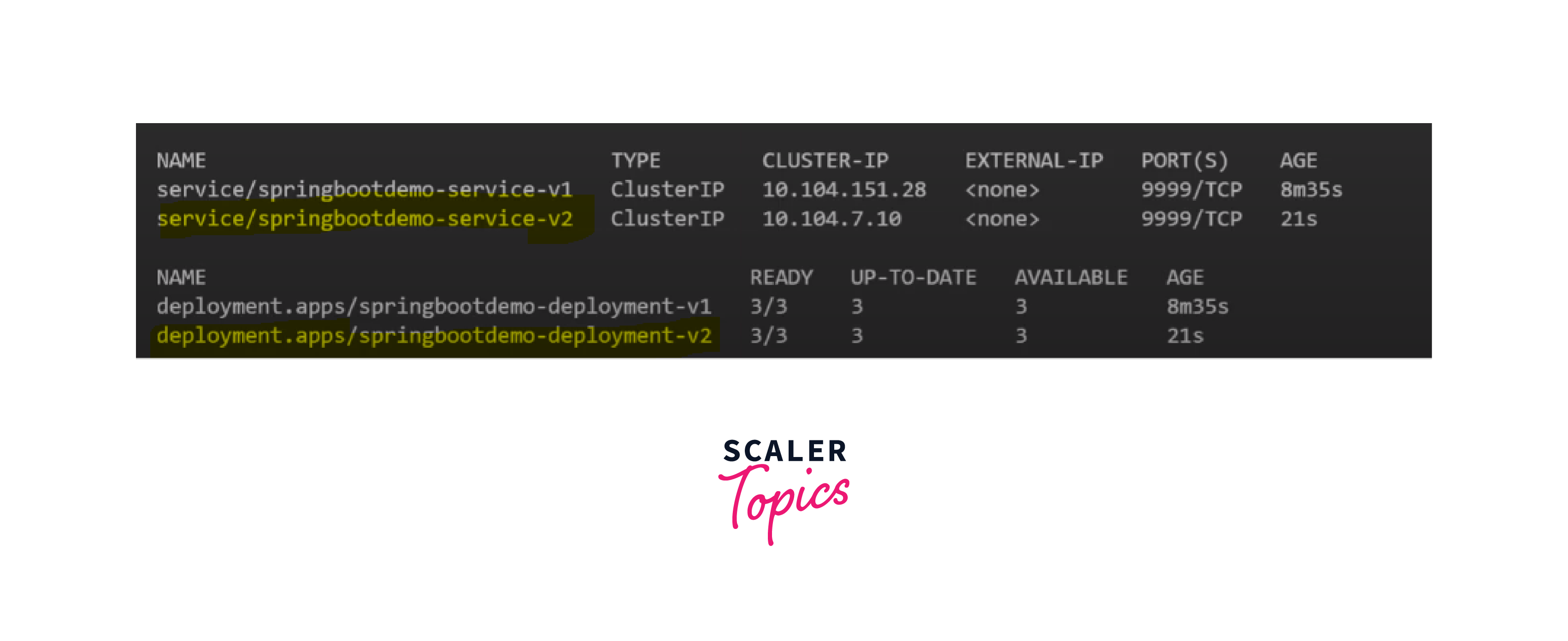

After applying the Deployment and Service manifests, run the kubectl get all command to list the resources that were created, including the Pods and Services. The -n flag specifies the namespace.

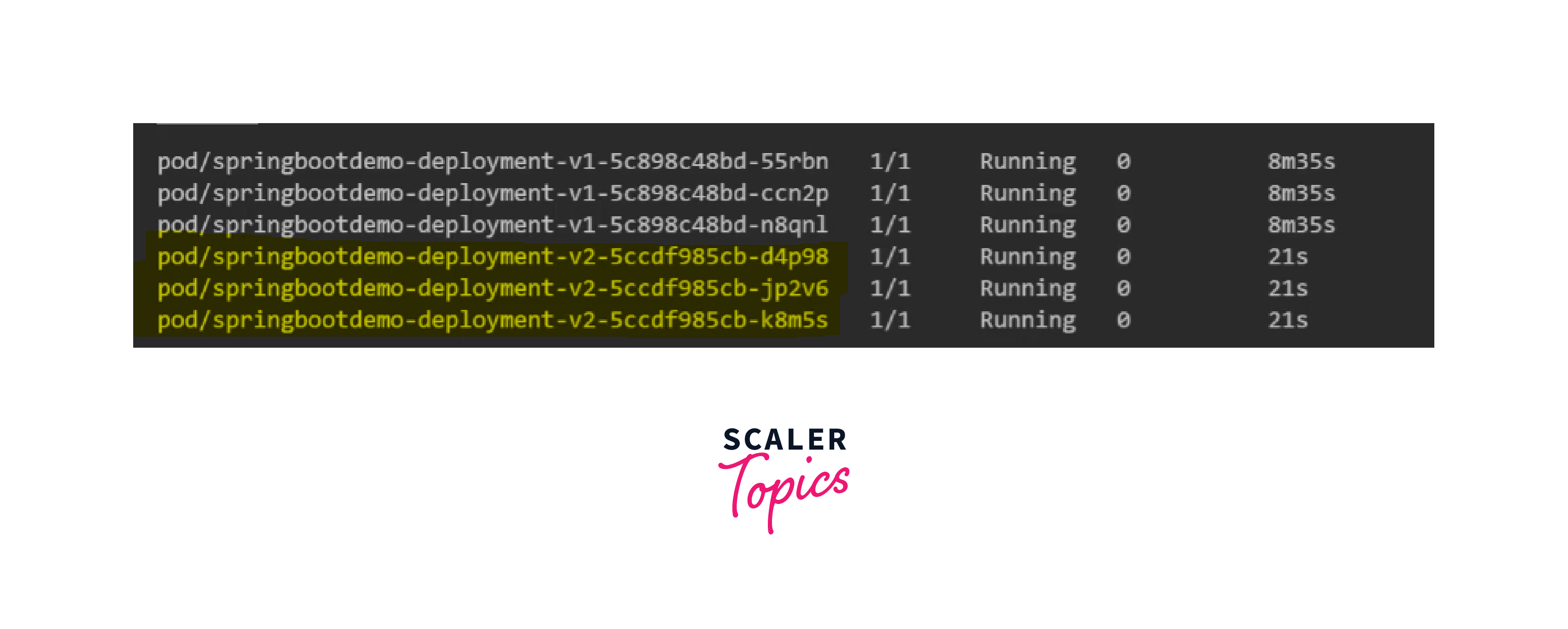

Based on the specification provided in the YAML file, 3 pods are created with one service.

Defining Ingress

You configure an Ingress object to handle inbound connections and route them to your cluster services. In the context of canary deployment, the Ingress configuration includes rules that direct traffic to the canary or stable deployments based on specific criteria, such as hostnames or paths.

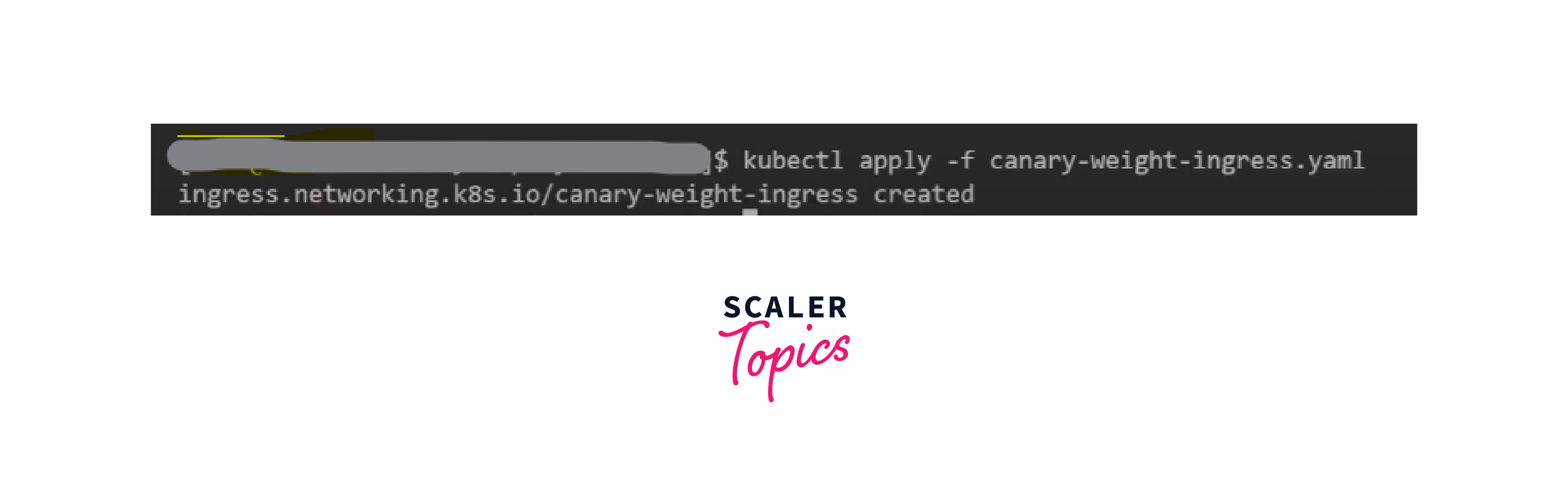

To route traffic, create the Ingress with the kubectl apply command.
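An Ingress for the stable version could be sketched as follows. It assumes the NGINX ingress controller is installed under the ingress class nginx. The Ingress name, host, file name, and backend Service name are hypothetical.

```yaml
# Stable Ingress sketch.
# Apply with: kubectl apply -f my-app-ingress.yaml -n <namespace>   (hypothetical file name)
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-app-ingress               # hypothetical name
spec:
  ingressClassName: nginx            # assumes the NGINX ingress controller
  rules:
    - host: my-app.example.com       # hypothetical host
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-app-service # hypothetical stable Service
                port:
                  number: 80
```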

Canary Deployment

To set up a canary deployment, you can create a replica Deployment and a Service object with an Ingress configuration that reroutes traffic based on set rules between the stable and canary deployments.

- Replica Deployment: Create a Deployment named canary-deployment with the desired number of replicas. Specify the labels for the canary version. Use an updated image for the canary version.

For example, here v2 of the Spring Boot image is deployed with a similar configuration and 3 replicas.
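A canary Deployment of this kind might look like the sketch below. The name canary-deployment and the three replicas follow the text. The label values, container name, image reference, and port are assumptions for illustration.

```yaml
# Canary Deployment sketch: same shape as the stable one, but with canary labels and the v2 image.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: canary-deployment
  labels:
    app: my-app-canary               # assumed label spelling
    version: canary
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app-canary
      version: canary
  template:
    metadata:
      labels:                        # must match the selector above
        app: my-app-canary
        version: canary
    spec:
      containers:
        - name: springboot-demo-service
          image: registry.example.com/springboot-demo-service:v2   # hypothetical image
          ports:
            - containerPort: 8080    # assumed application port
```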

- Service Object: Create a Service named my-app-canary-service that selects Pods with the label my-app-canary. Configure the service to expose port 80, which will target port 8080 of the Pods.

A separate Service is created for v2 using the kubectl apply command.
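The canary Service described above could be written roughly like this. The Service name and ports come from the text. Spelling the label as app: my-app-canary is an assumption, as is the file name in the comment.

```yaml
# Canary Service sketch.
# Apply with: kubectl apply -f my-app-canary-service.yaml -n <namespace>   (hypothetical file name)
apiVersion: v1
kind: Service
metadata:
  name: my-app-canary-service
spec:
  selector:
    app: my-app-canary       # assumed key for the my-app-canary label
  ports:
    - port: 80               # port exposed by the Service
      targetPort: 8080       # port of the canary Pods
```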

- Ingress Configuration: Create an Ingress named my-app-canary-ingress. Add the annotation nginx.ingress.kubernetes.io/canary: "true" to mark it as a canary Ingress, and set the nginx.ingress.kubernetes.io/canary-weight annotation to the percentage of traffic (0 to 100) to route to the canary service. Configure the Ingress rules to match the same host and path as the stable Ingress, and route the traffic to my-app-canary-service on port 80.

With this setup, the canary deployment will receive a portion of the traffic based on the defined canary-weight percentage, while the stable deployment continues to handle the remaining traffic. This allows you to observe and evaluate the canary version's behavior before making further decisions.

Here, we are creating a separate ingress for routing the traffic for canary deployment.
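A canary Ingress of this kind can be sketched as follows. It assumes the NGINX ingress controller and an existing non-canary Ingress for the same host and path. The host and the weight of 20 are hypothetical values chosen for illustration.

```yaml
# Canary Ingress sketch: sends roughly 20% of matching requests to the canary Service.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-app-canary-ingress
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"         # annotation values must be strings
    nginx.ingress.kubernetes.io/canary-weight: "20"    # assumed starting percentage
spec:
  ingressClassName: nginx            # assumes the NGINX ingress controller
  rules:
    - host: my-app.example.com       # hypothetical host, must match the stable Ingress
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-app-canary-service
                port:
                  number: 80
```

Increasing the canary-weight value and re-applying the manifest shifts more traffic to the canary. Setting it to "0" stops sending new requests to it.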

Traffic is then split between the stable and canary deployments according to the weight specified in the canary Ingress.

Performing Canary Deployment in CI/CD Framework in Kubernetes

Performing a Canary Deployment in a CI/CD framework in Kubernetes typically involves the following steps:

- Build and Test: Set up a CI/CD pipeline to build and test your application code.

- Containerization: Package your application into a container image using tools like Docker.

- Canary Deployment Configuration: Create configuration files or manifests for the canary deployment in Kubernetes.

- Infrastructure as Code (IaC): Define and manage the infrastructure resources needed for the canary deployment using tools like Kubernetes manifests or Helm charts.

- Deployment Strategy: Specify the percentage of traffic to be routed to the canary deployment initially and any subsequent adjustments.

- Deploy Canary: Configure the CI/CD pipeline to deploy the canary version of the application to the Kubernetes cluster.

- Monitor and Evaluate: Monitor the canary deployment for metrics, performance, and user feedback.

- Service Discovery and Load Balancing: Rely on Kubernetes Services for service discovery, and on the ingress controller or service mesh for load balancing, to route traffic between the canary and stable deployments.

- Gradual Traffic Adjustment: Gradually adjust the traffic allocation between the canary and stable deployments based on evaluation results.

- Promotion or Rollback: Decide whether to promote the canary version to production or roll back to the stable version based on the evaluation.

- Cleanup: Properly clean up any unused resources after the canary deployment has been promoted or rolled back.
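As one possible shape for such a pipeline, the sketch below uses a GitHub Actions workflow. The article does not prescribe a CI/CD system, so this is only an illustration: the workflow path, registry, manifest directory, Maven wrapper, and container name are all assumptions, and registry login and cluster credentials are assumed to be configured on the runner.

```yaml
# .github/workflows/canary.yaml (hypothetical workflow)
name: canary-deploy
on:
  push:
    branches: [main]
jobs:
  canary:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - name: Build and test
        run: ./mvnw verify             # assumes a Maven wrapper in the repository
      - name: Build and push image
        run: |
          docker build -t registry.example.com/springboot-demo-service:${{ github.sha }} .
          docker push registry.example.com/springboot-demo-service:${{ github.sha }}
      - name: Deploy canary
        run: |
          # k8s/canary/ is a hypothetical directory holding the canary manifests
          kubectl apply -f k8s/canary/
          kubectl set image deployment/canary-deployment \
            springboot-demo-service=registry.example.com/springboot-demo-service:${{ github.sha }}
          kubectl rollout status deployment/canary-deployment --timeout=120s
```

Monitoring, gradual traffic adjustment, and the promote-or-rollback decision would follow as later stages, either as manual approvals or as automated checks against the collected metrics.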

FAQs

Q. What is canary deployment vs blue-green deployment in Kubernetes?

A. Canary Deployment and Blue-Green Deployment are both strategies used for releasing new versions or updates of an application, but they differ in their approach and the way they handle the deployment process. Here's a breakdown of the key differences between Canary Deployment and Blue-Green Deployment:

Canary Deployment:

- Gradual rollout of the new version to a subset of users/servers.

- Monitors behavior, performance, and stability in the production environment.

- Small portion of traffic is directed to the new version initially.

- Enables real-time observation and easy rollback if issues arise.

Blue-Green Deployment:

- Maintains two identical production environments (stable and new version).

- Traffic switches immediately between environments.

- Provides quick and reversible transitions between versions.

- Easy rollback to stable version if issues occur in the new version.
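For contrast with the weighted split used in a canary deployment, a blue-green switch can be sketched with a single Service whose selector points at one environment at a time. The Service name and the blue and green label values below are hypothetical.

```yaml
# Blue-green sketch: all traffic goes to whichever version the selector names.
apiVersion: v1
kind: Service
metadata:
  name: my-app-service       # hypothetical name
spec:
  selector:
    app: my-app
    version: blue            # change to "green" to switch, back to "blue" to roll back
  ports:
    - port: 80
      targetPort: 8080       # assumed container port
```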

Q. What are the different types of canary deployment?

A. Canary deployments run two versions of the application at the same time: the stable version and the canary version. There are two ways to roll out the update:

- Rolling Deployments: Gradually replace the stable version with the canary version by sequentially deploying and shifting traffic to the canary instances until the stable version is fully replaced.

- Side-by-Side Deployments: Run both the stable and canary versions simultaneously, splitting incoming traffic between them based on predefined rules or percentages for direct comparison and evaluation.

Q. What are some examples of canary releases?

A. Two well-known examples:

- Netflix has used canary releases to introduce new features and improvements to their streaming platform gradually. They deploy new versions to a subset of users to gather feedback and monitor performance before rolling out to a wider audience.

- Google has employed canary releases in various products, including Google Chrome and Google Cloud Platform. They use canary deployments to test new features, bug fixes, and performance enhancements on a small scale before a full release.

Conclusion

- Canary Deployment:

- Gradually release new software versions or updates to a subset of users or servers.

- Test and monitor the new release in a real production environment.

- Roll back easily if issues arise, minimizing the impact on users.

- Using Canary Deployment in Kubernetes:

- Define deployment strategy and configuration.

- Implement traffic splitting between stable and canary deployments.

- Monitor metrics to evaluate performance and make informed decisions.

- Gradually increase traffic to the canary deployment.

- Roll back or promote based on evaluation results.

- Clean up unused resources and repeat for future releases.

- Kubernetes Request Flow for Canary Deployment:

- Use different labels or selectors to distinguish canary and stable versions in the deployment.

- Create a service to route traffic to both canary and stable deployments.

- Configure ingress rules to direct traffic accordingly.

- Deployment, service, and ingress enable traffic routing and access control.