Concurrent Programming and Low-Level Programming

Overview

Modern machines have multiple cores that can process several tasks simultaneously. Concurrency is the main way to take advantage of this hardware: concurrent programming lets a program keep the CPU's cores busy instead of leaving them idle.

What is Concurrency?

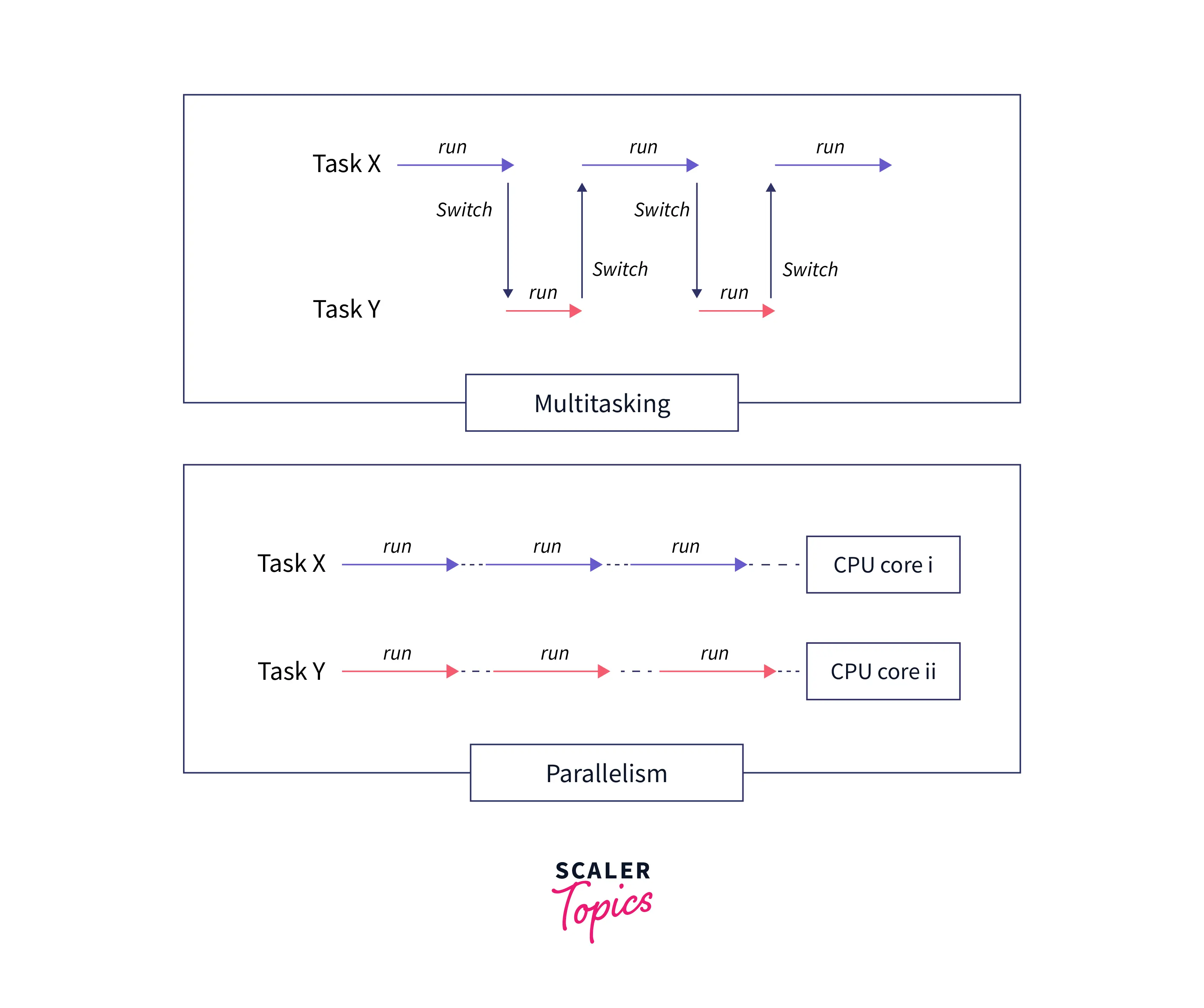

Concurrency refers to the concept of carrying out multiple tasks simultaneously. Concurrency can be applied in a time-shared fashion on a single CPU core, known as multitasking, or in parallel if multiple CPU cores are used, known as parallel processing.

In multitasking, the CPU switches between tasks after a short slice of time, so each task makes progress in turn. In parallel processing, by contrast, multiple cores each process their own task at the same time.

A thread describes an execution path through the code. Each thread belongs to a process (the instance of an executing program that owns resources such as memory, file handles, and so on), since it executes the process's code and uses its resources. Numerous threads can run at the same time inside a process, and each process has at least one thread, which represents its main execution path.

Concurrency vs. parallelism

Concurrency:

Concurrency describes an application that makes progress on multiple tasks during the same period of time. It is a technique for reducing the system's response time, and it can be achieved with a single processing unit. Concurrency gives the appearance of parallelism, but the chunks of a job aren't executed in parallel; instead, the application completes part of one task and then moves on to the next, so that all tasks keep advancing.

Concurrency is done via interleaving activities on the central processing unit (CPU) or, to put it another way, by context switching. Like parallel processing, it increases the amount of work completed in a given length of time.

As the diagram above shows, multiple tasks make progress during the same period. That is what concurrency means: dealing with many things at once.
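The interleaving described above can be sketched on a single thread. This is only an illustration, not how an operating system scheduler is implemented: the Task struct and the run_interleaved function are hypothetical names, and each "context switch" is simply the loop moving on to the next task.

```cpp
#include <string>
#include <vector>

// Hypothetical task: a name plus the number of steps still to run.
struct Task {
    std::string name;
    int steps_left;
};

// Runs one step of each unfinished task in turn (round-robin) on a single
// thread and returns the order in which the steps were executed.
std::vector<std::string> run_interleaved(std::vector<Task> tasks) {
    std::vector<std::string> trace;
    bool work_remaining = true;
    while (work_remaining) {
        work_remaining = false;
        for (Task& task : tasks) {
            if (task.steps_left > 0) {
                trace.push_back(task.name);  // "execute" one slice of this task
                --task.steps_left;
                work_remaining = true;
            }
        }
    }
    return trace;
}
```

Calling run_interleaved with a two-step task A and a three-step task B yields the order A, B, A, B, B: neither task runs in parallel with the other, yet both make progress over the same period.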

Parallelism:

Parallelism refers to an application in which tasks are broken down into smaller sub-tasks that are processed at the same time on multiple processors. It is used to boost the system's throughput and computing performance. Where concurrency lets a single sequential CPU do a lot of things "apparently" at the same time, parallelism does them literally at the same time: the CPU and input-output work of one process overlaps with the CPU and input-output work of another. Concurrency, by contrast, boosts speed by overlapping the input-output activity of one process with the CPU activity of another.

As the diagram above shows, the tasks are separated into smaller sub-tasks that run in parallel. Parallelism is a mechanism for running multiple threads simultaneously.
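Breaking a task into sub-tasks that run at the same time can be sketched as follows. This is a minimal illustration built around a hypothetical helper named parallel_sum. It uses std::async with std::launch::async so that each chunk is summed on its own thread; whether those threads actually run on separate cores depends on the hardware and the operating system.

```cpp
#include <algorithm>
#include <cstddef>
#include <future>
#include <numeric>
#include <vector>

// Sums `values` by splitting it into at most `parts` contiguous chunks and
// summing each chunk in its own asynchronous task.
long long parallel_sum(const std::vector<int>& values, std::size_t parts) {
    if (parts == 0) parts = 1;
    const std::size_t chunk = (values.size() + parts - 1) / parts;  // ceiling division
    std::vector<std::future<long long>> partials;

    for (std::size_t begin = 0; begin < values.size(); begin += chunk) {
        const std::size_t end = std::min(begin + chunk, values.size());
        partials.push_back(std::async(std::launch::async, [&values, begin, end] {
            return std::accumulate(values.begin() + begin, values.begin() + end, 0LL);
        }));
    }

    long long total = 0;
    for (auto& partial : partials) {
        total += partial.get();  // wait for each sub-task and combine the results
    }
    return total;
}
```

The sub-tasks never write to shared data, so the only coordination needed is collecting each partial result through its future.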

| S.No. | Concurrency | Parallelism |
|---|---|---|
| 1. | Concurrency is the task of conducting and controlling numerous computations over the same period of time. | Parallelism is the process of running many computations at the same time. |
| 2. | Concurrency is done via interleaving activities on the central processing unit (CPU) or, to put it another way, by context switching. | Parallelism is done by using multiple central processing units (CPUs). |
| 3. | A single processing unit can achieve concurrency. | Parallelism cannot be accomplished with a single processing unit. It requires multiple processing units. |
| 4. | Concurrency deals with several things at the same time. | Parallelism does several things at the same time. |
| 5. | Concurrency increases the quantity of work that can be completed in a given time. | Parallelism increases the system's throughput and processing performance. |
| 6. | Debugging concurrent code is difficult. | Debugging parallel code is also difficult, but easier than debugging concurrent code. |
| 7. | Concurrency is a non-deterministic control flow approach. | Parallelism is a deterministic control flow approach. |

Methods of Implementing Concurrency

Multithreading and parallelism are the two most common approaches to implementing concurrency in C++. While both can be used in other programming languages, C++ stands out because of its concurrency support, low overhead, and ability to handle complicated instructions.

In the sections below, we'll look at multithreading and parallelism in C++.

C++ Multithreading

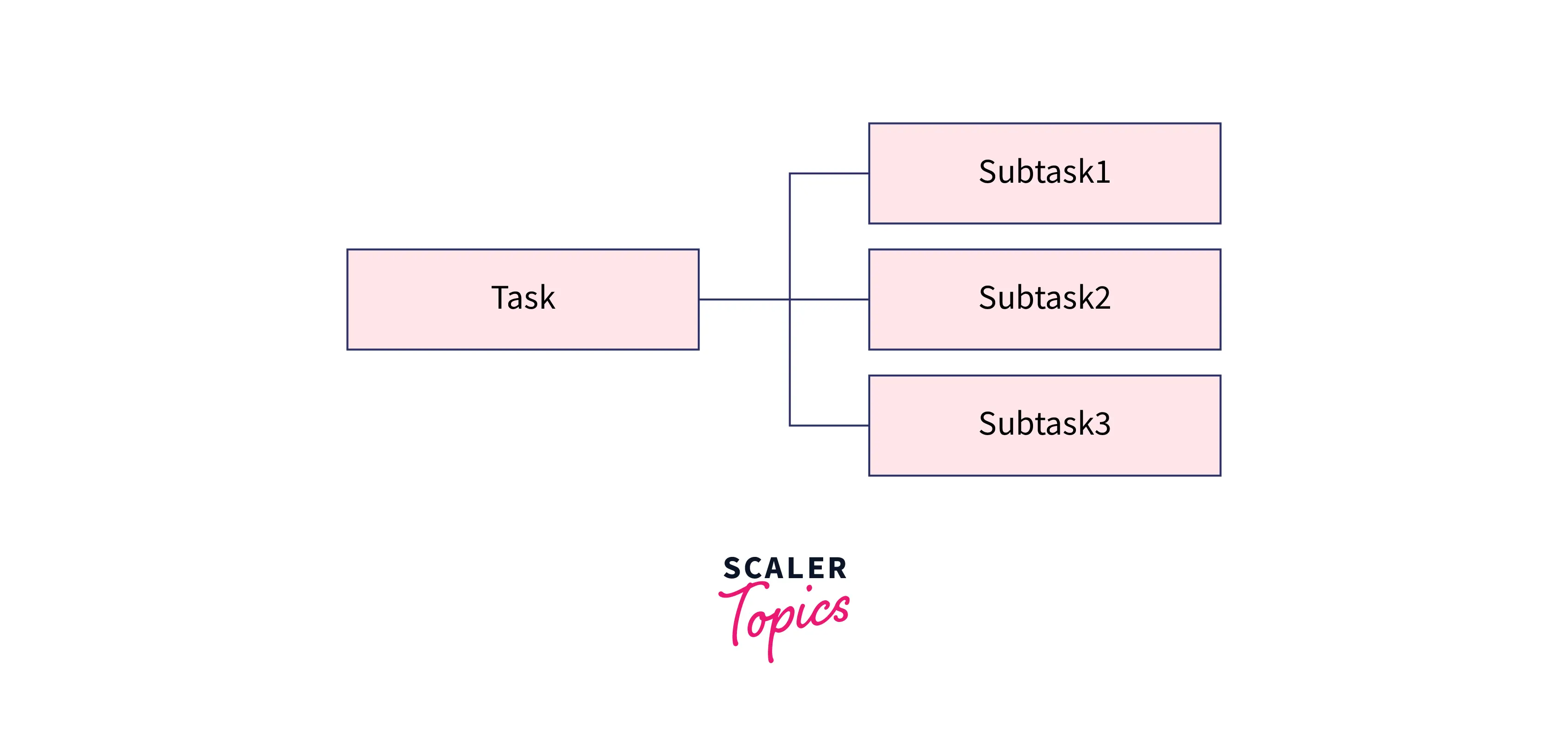

C++ multithreading involves creating and using thread objects, seen as std::thread in code, to carry out delegated sub-tasks independently.

Each new thread is given a function to run, along with optional arguments for that function.
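Passing a function and its arguments to a new thread might look like the sketch below. The names fill_squares and squares_on_worker_thread are hypothetical and exist only for this illustration.

```cpp
#include <functional>
#include <thread>
#include <vector>

// Hypothetical worker function: appends the squares of 1..n to `out`.
void fill_squares(int n, std::vector<int>& out) {
    for (int i = 1; i <= n; ++i) {
        out.push_back(i * i);
    }
}

// Starts one thread, hands it the function and its arguments, and waits for it.
std::vector<int> squares_on_worker_thread(int n) {
    std::vector<int> result;
    // std::thread copies its arguments, so a reference must be wrapped in std::ref.
    std::thread worker(fill_squares, n, std::ref(result));
    worker.join();  // block until the worker has finished
    return result;
}
```

A std::thread must be joined or detached before it is destroyed; join() is also what makes it safe to read result afterwards.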

While each thread can only do one function at a time, thread pools allow us to recycle and reuse thread objects, giving programs the appearance of endless multitasking. This takes advantage of numerous CPU cores and allows the developer to manipulate the thread pool size to regulate the number of tasks taken on. The program may then efficiently use the computer's resources without being overburdened.

Parallelism

Creating different threads is typically expensive in terms of the program's time and memory overhead. When dealing with short, simpler functions, multithreading can be wasteful.

Instead, developers can utilize parallel execution policy annotations, which allow them to indicate particular functions as candidates for concurrency without explicitly creating threads.

At the most basic level, there are just two policies that can be attached to an operation. The first is parallel, which tells the library that the operation may run concurrently with other work. The other is sequential, meaning the operation must be carried out step by step on a single thread.

Because parallel functions use more of the computer's CPU resources, they can greatly speed up operations.
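In C++17, the parallel and sequential policies are spelled std::execution::par and std::execution::seq, and they are passed as the first argument to standard algorithms. The sketch below uses two hypothetical helper functions to show the same operation under each policy. It assumes a standard library that implements the parallel algorithms; some toolchains need an extra backend for this (GCC's libstdc++, for instance, relies on Intel TBB).

```cpp
#include <algorithm>
#include <execution>
#include <vector>

// Doubles every element. std::execution::par permits (but does not force)
// the library to spread the work across several threads.
void double_all_parallel(std::vector<int>& values) {
    std::for_each(std::execution::par, values.begin(), values.end(),
                  [](int& value) { value *= 2; });
}

// Same operation with the sequential policy: the work is done on the
// calling thread, without parallelism.
void double_all_sequential(std::vector<int>& values) {
    std::for_each(std::execution::seq, values.begin(), values.end(),
                  [](int& value) { value *= 2; });
}
```

Each element is modified independently of the others, which is what makes this operation a safe candidate for the parallel policy.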

Parallel execution is best saved for functions that interact little with other functions, whether through dependencies or by modifying shared data. While functions run concurrently, there is no way to know which will complete first, so the outcome is uncertain unless synchronization such as a mutex or a condition variable is used.
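The role of a mutex can be sketched with a shared counter. The function name count_with_mutex is hypothetical. Several threads increment the same variable, and the std::mutex ensures that only one of them does so at any moment.

```cpp
#include <mutex>
#include <thread>
#include <vector>

// Increments a shared counter from several threads. The mutex serializes
// the increments so that no update is lost.
int count_with_mutex(int thread_count, int increments_per_thread) {
    int counter = 0;
    std::mutex counter_mutex;
    std::vector<std::thread> threads;

    for (int t = 0; t < thread_count; ++t) {
        threads.emplace_back([&counter, &counter_mutex, increments_per_thread] {
            for (int i = 0; i < increments_per_thread; ++i) {
                std::lock_guard<std::mutex> lock(counter_mutex);  // one thread at a time
                ++counter;
            }
        });
    }
    for (std::thread& thread : threads) {
        thread.join();
    }
    return counter;
}
```

Without the lock_guard, the unsynchronized increments would be a data race and the final count would no longer be predictable.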

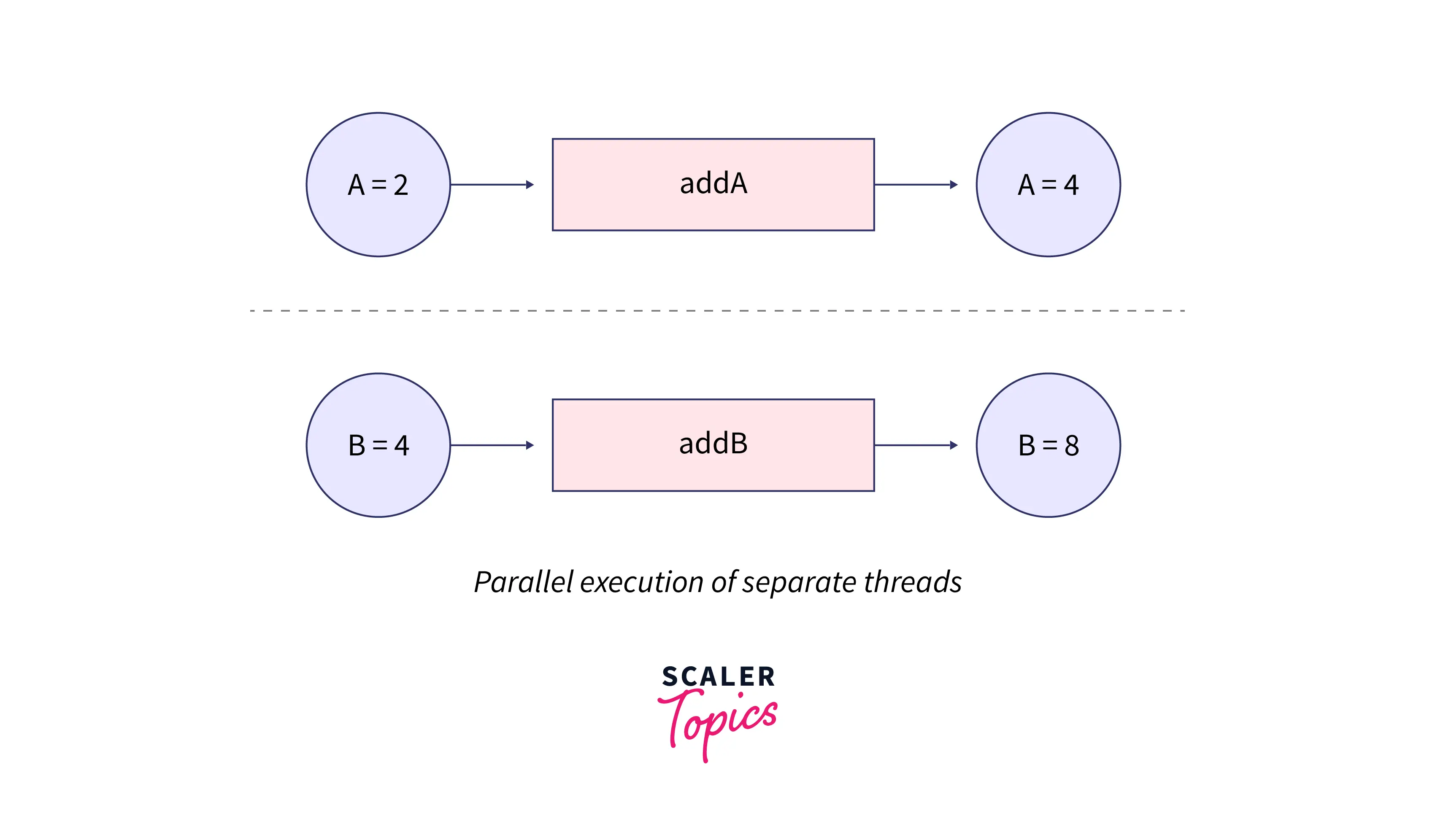

Consider the following scenario: we have two variables, A and B, and we want to create functions addA and addB that add 2 to their values.

We could do this using parallelism: addA's behavior is unaffected by the behavior of the second function, addB, so the two can run concurrently.
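A minimal sketch of this scenario follows. To keep it short, it runs addA and addB on two std::thread objects rather than through an execution policy. The Values struct and the add_two_to_both wrapper are hypothetical additions for the example.

```cpp
#include <functional>
#include <thread>

// Hypothetical holder for the two results.
struct Values {
    int a;
    int b;
};

void addA(int& a) { a += 2; }
void addB(int& b) { b += 2; }

// Runs addA and addB on separate threads. They touch different variables,
// so no synchronization beyond join() is needed.
Values add_two_to_both(int a, int b) {
    std::thread first(addA, std::ref(a));
    std::thread second(addB, std::ref(b));
    first.join();
    second.join();
    return Values{a, b};
}
```

Whichever thread finishes first, the result is the same, because neither function reads or writes the other's variable.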

If the functions both affect the same variable, however, sequential execution would be preferable.

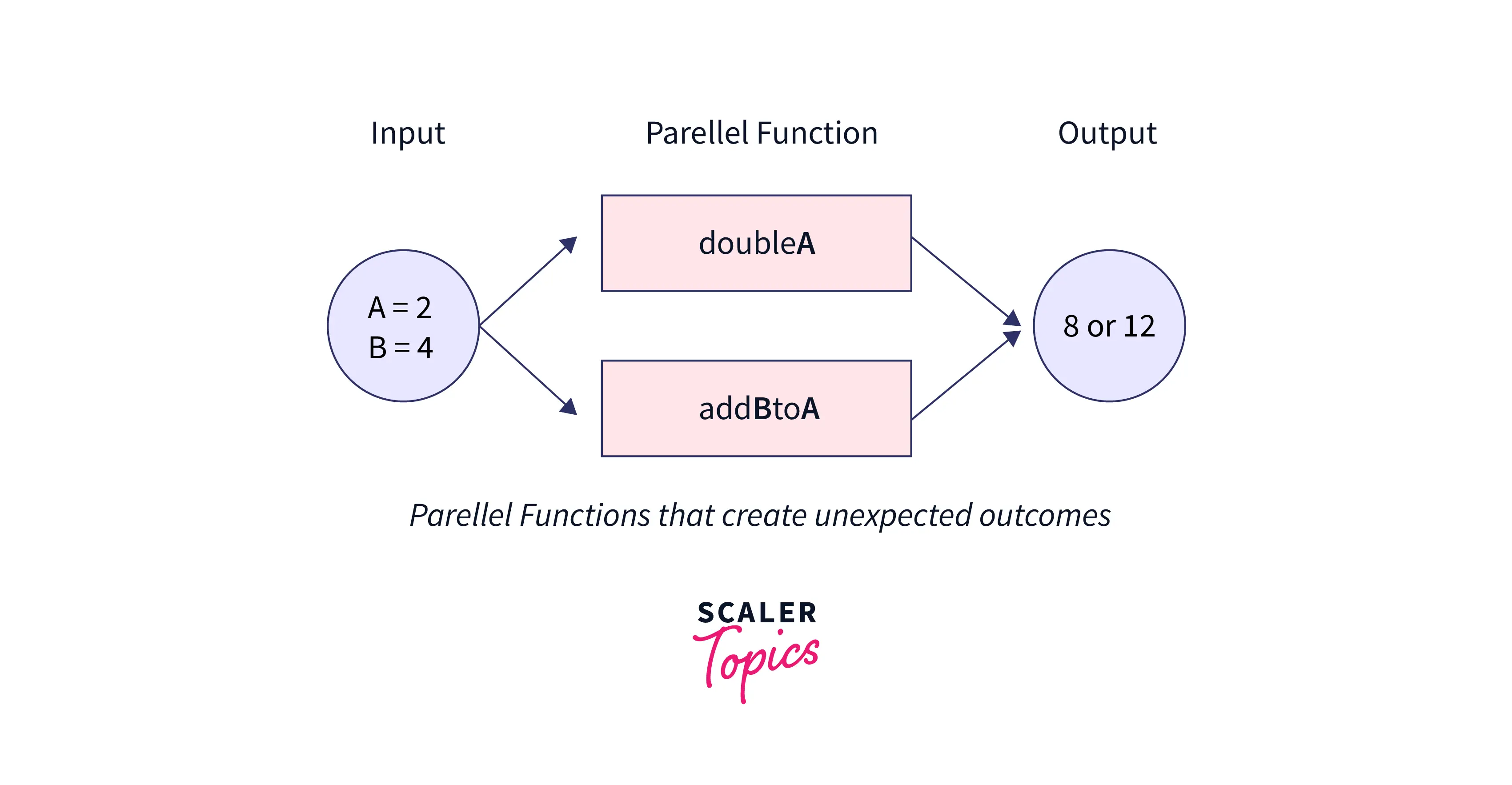

Consider instead two functions that both modify A: doubleA, which doubles the value of A, and addBtoA, which adds B to A.

We would want to avoid using parallel execution in this scenario since the outcome of this set of functions depends on which is completed first, resulting in a race condition.
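Why the order matters can be shown by working through both possibilities. The sketch below deliberately runs the two orders sequentially instead of in parallel, so each result is reproducible. The wrapper functions double_then_add and add_then_double are hypothetical names.

```cpp
// The two operations from the scenario above, written as plain functions.
void doubleA(int& a) { a *= 2; }
void addBtoA(int& a, int b) { a += b; }

// Result if doubleA completes first: (a * 2) + b.
int double_then_add(int a, int b) {
    doubleA(a);
    addBtoA(a, b);
    return a;
}

// Result if addBtoA completes first: (a + b) * 2.
int add_then_double(int a, int b) {
    addBtoA(a, b);
    doubleA(a);
    return a;
}
```

With A = 3 and B = 4, the first order gives 10 and the second gives 14. Run in parallel without synchronization, the program could produce either value.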

While both multithreading and parallelism are helpful concepts for implementing concurrency in a C++ application, multithreading is more generally used due to its ability to perform complicated operations.

C++ Concurrency in Action: Real-world Applications

Multithreading programs and applications are common in today's commercial systems.

Example 1: Web Crawler

A web crawler downloads pages from all over the internet. With multithreading, the crawler can use as much of the hardware's power as possible to download and index multiple pages simultaneously.

Example 2: Email Server

Another example is an email server that returns mailbox contents when a user requests them. We cannot know how many people will request mail through this service at any moment.

By using a thread pool, the application can process as many user requests as feasible without risking an overload.

Conclusion

- Concurrent programming is the best way to use multicore processors.

- Multithreading programs and applications are common in today's commercial systems.

- Parallelism is the process of running many computations at the same time.

- With the basics and real-world examples, we got familiar with concurrent programming and multithreading in this lesson.