Master’s Theorem is the best method to quickly find the algorithm’s time complexity from its recurrence relation. This theorem can be applied to decreasing and dividing functions, each of which we’ll look into in detail.

We can apply Master’s Theorem for:

- Dividing Functions

- Decreasing Functions

Master Theorem for Dividing Functions

Highlights:

- Used to directly calculate the time complexity function of ‘dividing’ recurrence relations of the form:

- where

- Compare

Master's Theorem is the most useful and easy method to compute the time complexity function of recurrence relations.Master's Algorithm for dividing functions can only be applied to the recurrence relations of the form:

where

for example:

where,

n = input size (or the size of the problem)a = count of subproblems in the dividing recursive functionn/b = size of each subproblem (Assuming size of each subproblem is same)

Where the constants a, b, and k are constants and follow the following conditions:

a>=1b>1k>=0

Master's Theorem states that:

Case 1) IfCase 2) If- a) If p>-1, then

- b) If p=-1, then

- c) p<-1, then

Case 3) If- If p>=0, then

- If p<0, then

The same can also be written as:

Case 1– IfCase 2– If- a) If p>-1, then

- b) If p=-1, then

- c) If p<-1, then

Case 3– If- a) If p>=0, then

- b) If p<0, then

Hence, using the values of a, b, k and p, we can easily find the time complexity function of any dividing recurrence relation of the above-stated form. We’ll solve some examples in the upcoming section.

Examples of Master Theorem for Dividing Function

We’ll now solve a few examples to understand Master’s Theorem better. Before solving these examples, remember:

Master's Theorem can solve ‘dividing’ recurrence relations of the form

where

Where the constants a, b, and k follow the following conditions:

- a>=1

- b>1

- k>=0

Example 1:

After comparison,a = 1, b = 2, k = 2, p = 0,

- Step 1: Calculate

k - k = 2,

- Step 2: Compare with

k - Step 3: Calculate time complexity

- If

Final Time complexity:

Example 2:

This recurrence relation function is not of the form

Let

On replacing

Suppose

=>

Finally ,we have this equation of the form T(n) = aT(n/b) + f(n), where a = 2, b = 2, k = 1, p = 0, and f(m) = m

Step 1: Calculate- k = 1,

Step 2: Compare with kStep 3: Calculate time complexity*- If p>-1, then

Step 4: Replacing m with

Final Time complexity:

Master Theorem for Decreasing Functions

Highlights:

- Used to directly calculate the time complexity function of ‘decreasing’ recurrence relations of the form:

- The value of ‘a’ will decide the time complexity function for the ‘decreasing’ recurrence relation.

For decreasing functions of the form

where

for example:

Where:n = input size (or the size of the problem)a = count of subproblems in the decreasing recursive functionn-b = size of each subproblem (Assuming size of each subproblem is same)

Here, a, b, and k are constants that satisfy the following conditions:

- a>0, b>0

- k>=0

Case 1) If a<1, then

Case 2) If a=1, then

Case 3) If a>1, then

Hence, given a decreasing function of the above-mentioned form, we can easily compare it to the given form to get the values of a, b, and k. We can find the time complexity function by looking at the three cases mentioned above. We’ll solve some examples in the upcoming section to understand better.

Examples of Master Theorem for Decreasing Function

We can solve ‘decreasing’ functions of the following form using ‘Master’s Theorem for Decreasing Functions’.

Form: T(n) = aT(n-b) + f(n),

where f(n) = θ(n^k)

Here, a, b, and k are constants that satisfy the following conditions:

- a>0, b>0

- k>=0

Example 1:

After comparison,

Step 1: Compare ‘a’ and 1- Since a>1,

Step 2: Calculate time complexity- Putting values of a = 2, b = 2, f(n) = n.

Final Time complexity:

Example 2:

After comparison,a = 1/2, b = 1, k = 2,

Step 1: Compare ‘a’ and 1- Since

Step 2: Calculate time complexity- Putting values of

a = 2,and k = 2.

Final Time complexity: θ(n^2^)

Idea Behind Master Theorem for Dividing Functions

Highlights:

- To calculate

The idea behind Master's algorithm is based on the computation of

For Example:

- In

Case I, - In

Case II, - In

case III, when

Now, Why do We Compare

Given

To achieve this, let’s first understand the first term, i.e.,

Understanding the Term – n, we divide the whole problem into 'a' subproblems of size 'n/b' each. Suppose

Hence,

=>

Hence,

=>

Similarly,

=>

to

=>

Let’s assume 1/2 each time, we can say that b each time,, hence,

=>

=>

At the ith level, the size of the sub-problem will reduce to 1, i.e. at the ith level,

=>

=>

=>

Therefore, i = k at the last level, where the size of the sub-problem reduces to 1.

Using this deduction, we can re-write

=>

=>

=>

Hence,

f(n) (i.e. the second term) to decide which one dominates, which decides the final time complexity function for the recurrence relation.

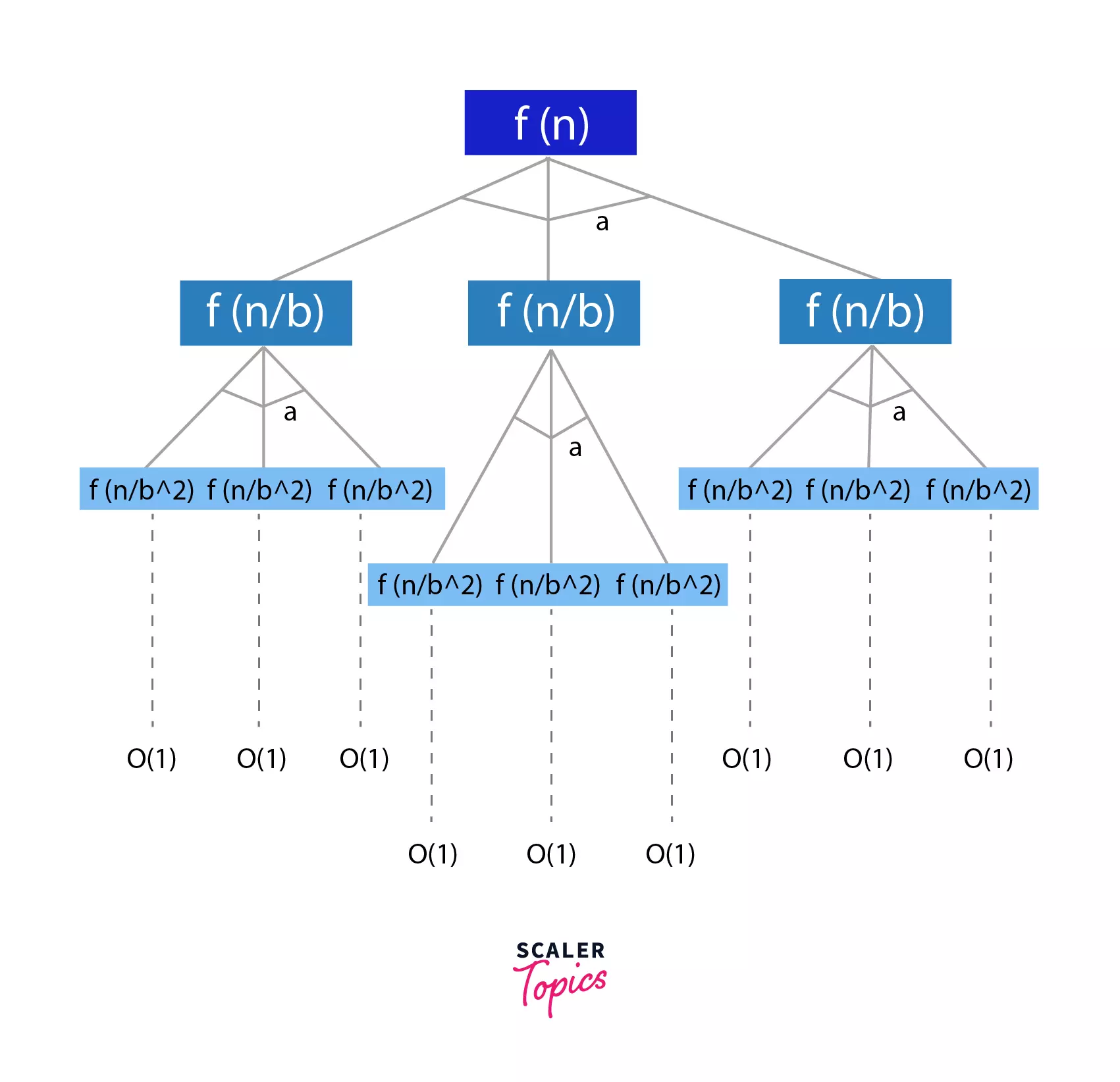

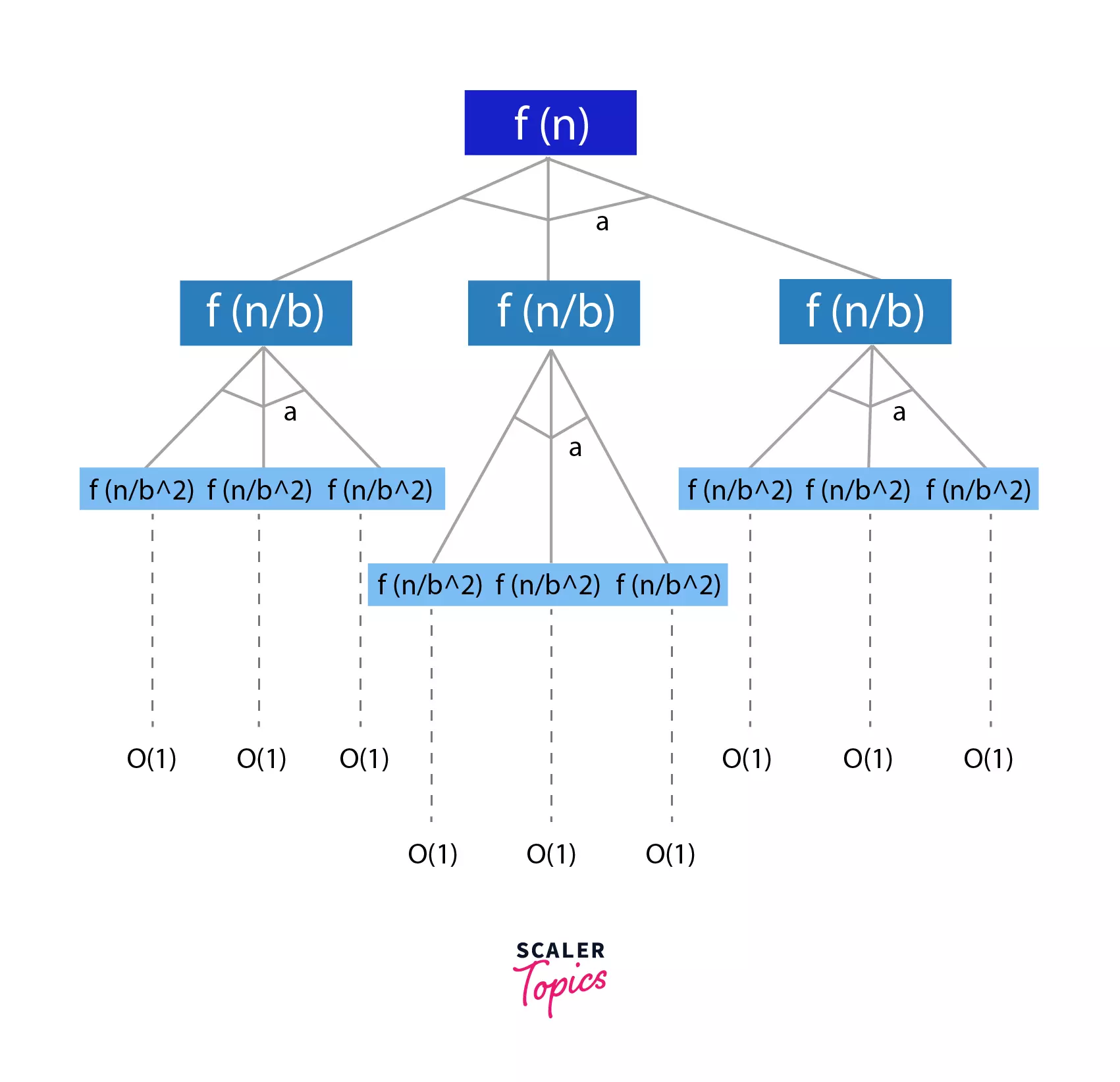

Proof of Master Algorithm

This form of recurrence relation is in the form of a tree, as shown below, where every iteration divides the problem into ‘a’ sub-problems of size (n/b).

At the last level of the tree, we will be left with problems of size 1. This is the level where we have the shortest possible sub-problems.

The level =

Size of sub-problem = 1

Let us now calculate the work done at each level and that will help us compute the total work done, i.e. the sum of work done at log~b~n levels.

- Work done at

level 1=>

n^d^ comes from the recurrence relation - Work done at

level 2=>

At the second level, the problems are divided into size n/d, and there are ‘a’ sub-problems. - Work done at

level k=> - Hence, Total Work Done =>

Note: This Summation forms a Geometric Series.

Now, let us look at every single case and prove it.

Proof of Case I: When

- In this case, the work done increases as we move down the tree, i.e. towards the lower levels of the tree, with more sub-problems.

- Also, the total work done is an expression of the Geometric Series, and in this case,

r<1. Hence, the last term of the geometric series will have the maximum effect. - Hence, the total work done will only include the last term since all the other terms of the geometric series can be ignored compared to the last one.

- Hence, we can substitute k = log~b~n in the time complexity expression.

=>

=>

=>

=>

Proof of Case II: When

- This means that the work done by each term in the geometric series is equal.

- Hence, the total work is

work done at each level*Number of levels.

=>

=>

=>

Proof of Case III: When

- This is exactly the opposite of the first case discussed.

- This is the case of decreasing Geometric Series. Hence, the first term is the maximum and major term to be considered.

- Hence, the total work done = first term, i.e. O(n^d^)

Hence, Proved!

Limitations of Master Theorem in DAA

Highlights:

The relation function cannot be solved using Master's Theorem if:

T(n)is a monotone functionais not a constantf(n)is not a polynomial

Master's Theorem can only be used for recurrence relations of the form:

Or,

This marks the limitations of Master's Theorem, which cannot be used if:

T(n)is monotone function, For example:ais not a constant. Consider this:

If a is not constant, this changes our Master Theorem's conditions altogether.

- If

Master's Theoremcan only be applied to the above-stated form under the given constraints.

Conclusion

- We learn the master theorem for dividing and decreasing functions.

- For the recurrence relations of the type

Or,

Furthermore, our online DSA course delves deep into the Master Theorem, providing comprehensive coverage to ensure a thorough understanding.