Deep Learning in R Programming

Overview

Our world has become highly data-driven, and Artificial Intelligence (AI) is a powerful tool that many industries and organizations use to drive innovation and transformation. Deep learning, a subset of machine learning, is inspired by the functioning of the human brain. It uses artificial neural networks that enable a model to uncover patterns by processing vast datasets. With deep learning, machines can classify sounds, images, or text much as humans do. Deep learning is reshaping how complex tasks are handled in industries such as automotive, healthcare, and finance.

In this article, we'll look at how to perform deep learning in R using different packages.

Introduction to Deep Learning

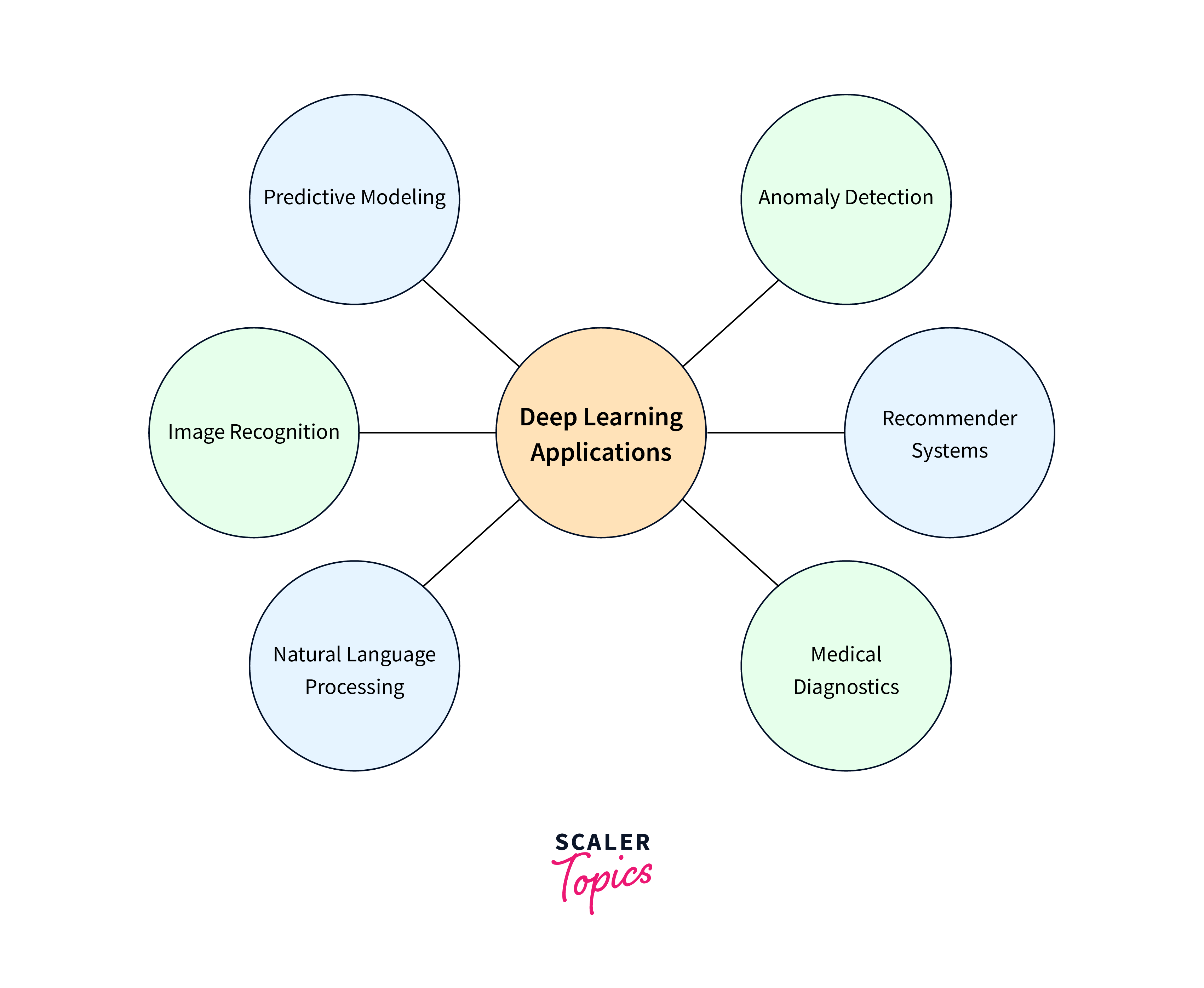

Inspired by the cognitive abilities of the human brain, deep learning models make use of neural networks. These networks consist of multiple layers with interconnected neurons to solve challenging tasks. Such neural networks can automatically learn and extract specific patterns and representations from vast amounts of data. This feature makes them particularly effective for different tasks like image classification, speech recognition, natural language processing, etc. A few common examples of deep learning models are:

- Convolutional Neural Networks (CNNs) for image analysis tasks

- Recurrent Neural Networks (RNNs) for sequential data

- Transformer models for natural language processing (NLP) tasks

All of these models have significantly advanced the capabilities of AI systems in recent years, and a flexible programming language like R can be used to develop them.

In this article, we will explore how to set up a deep learning environment in R, implement a deep learning model, and fine-tune it. We will also discuss several packages that help data scientists and analysts build, train, and deploy neural networks in R. The article assumes some understanding of basic machine learning concepts such as classification, regression, and clustering, and basic familiarity with R syntax. Let us begin by setting up the R environment for deep learning.

Setting up the Deep Learning Environment in R

In this section, we will discuss the essential steps to set up a deep learning environment in R. First, R must be installed on the system, ideally along with an IDE such as RStudio for writing and running code.

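The next step is to install the required deep learning packages. Powerful options in R include tensorflow and keras, which are R interfaces to the Python libraries of the same names. They are installed through R's package management system like any other CRAN package: for example, `install.packages("tensorflow")` installs the tensorflow package, and its `install_tensorflow()` function then installs the Python TensorFlow backend that the R package calls into.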

An important point to consider is that deep learning models are computationally expensive. A GPU is not required for small examples like the one in this article, but a machine with GPU support can greatly accelerate training, especially for large datasets or image classification tasks.
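As a quick check, the sketch below lists the GPUs that TensorFlow can see. It assumes the tensorflow R package and its Python backend are already installed; an empty result simply means training will run on the CPU, which is sufficient for the iris example.

```r
library(tensorflow)

# List the GPUs visible to the TensorFlow backend (an empty list means CPU only)
gpus <- tf$config$list_physical_devices("GPU")
cat("GPUs available:", length(gpus), "\n")
```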

Building Neural Networks in R

Let us now see how to build a neural network in R. Neural networks can be built with libraries and frameworks such as keras and TensorFlow, which provide tools and functions for designing, training, and evaluating them. We'll first discuss the essential data preparation steps, followed by model building with several deep learning packages in R.

Data Preparation

a. Dataset

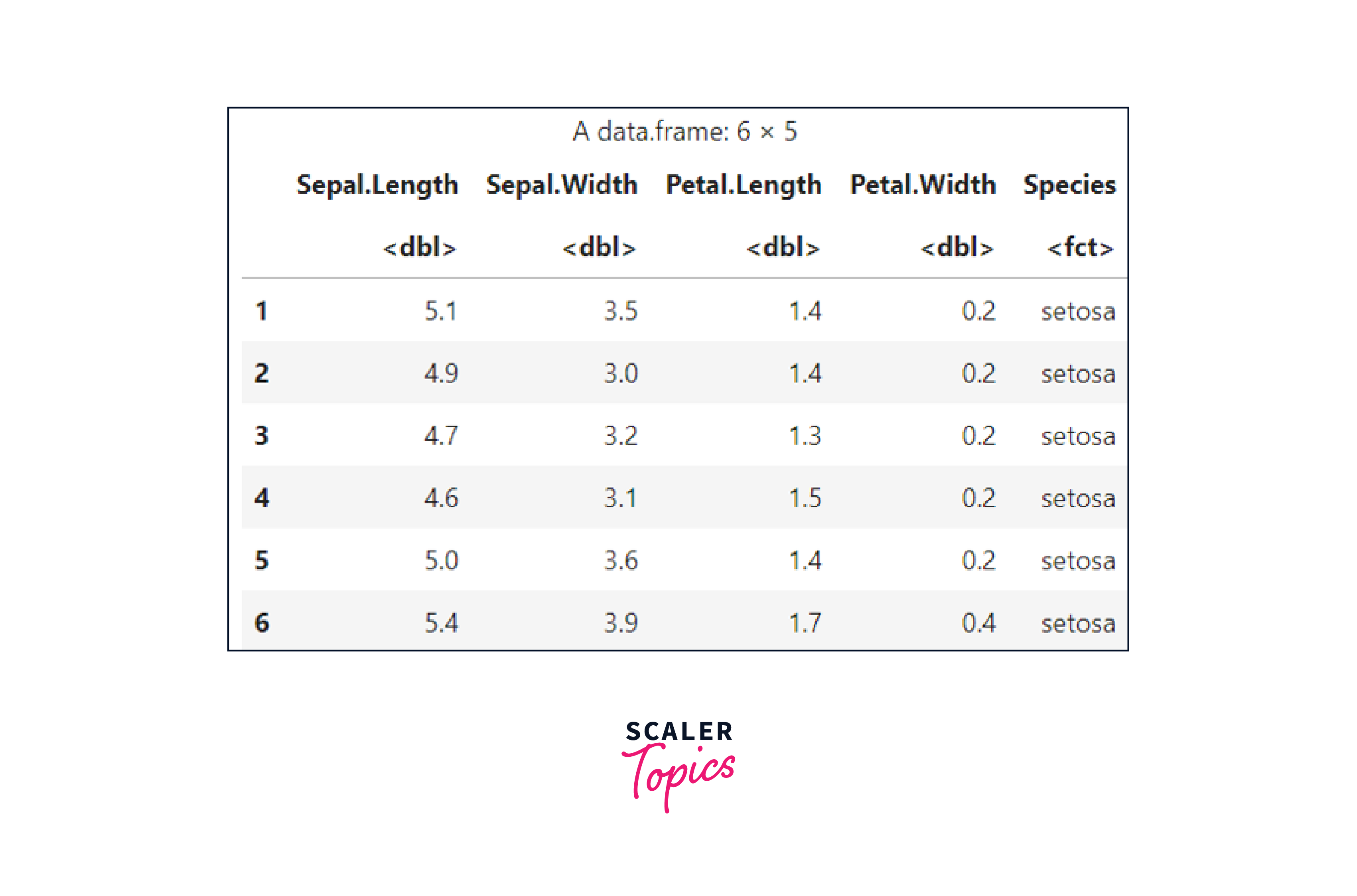

For our example, we'll use the popular iris dataset, which is built into base R. For exploring the dataset, we can also use the tidyverse package, which is loaded with `library(tidyverse)`.

Since the iris dataset is preloaded, we can use it directly. Viewing its first six rows gives a general idea of the information it contains.
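A minimal sketch using only base R: `head()` returns the first six rows by default, and `dim()` reports the number of rows and columns.

```r
# iris ships with base R (datasets package), so no loading step is needed
head(iris)   # first six rows: four numeric measurements plus the Species factor
dim(iris)    # 150 rows, 5 columns
```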


Next, we can summarize the dataset with the `summary()` function.
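`summary()` gives quartiles for the numeric columns and counts for the Species factor; `table()` shows the class counts on their own.

```r
# Per-column summary: quartiles for numeric columns, counts for the Species factor
summary(iris)

# Class counts on their own: 50 flowers of each species
table(iris$Species)
```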


b. Data Cleaning

Once we have reviewed the dataset, the next preprocessing step is data cleaning. This step is important for deep learning models and can involve several sub-steps, such as handling missing values, normalizing or standardizing features, and removing outliers that could distort training. Addressing these issues helps make the model robust and improves its performance. For the iris dataset, we can check for missing values.
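One way to check for missing values in base R is to count `NA` entries per column with `is.na()` and `colSums()`, or to use `anyNA()` for a single overall check:

```r
# Count missing values per column; all zeros means nothing needs imputing
colSums(is.na(iris))

# A single overall check: FALSE means the data frame has no missing values
anyNA(iris)
```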


Since there are no missing values and, according to the summary, the three species are equally represented (50 observations each), we can proceed to the next step.

Data Preprocessing

a. Feature Engineering

This step calls for selecting the relevant dataset features, transforming variables, and encoding categorical data to feed the neural network.

Since the target column Species is categorical (a factor), we need to encode it numerically before training the model.
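A minimal sketch of the two common encodings, using only base R: `as.integer()` on the factor gives integer labels, and `model.matrix()` builds one-hot indicator columns. The names `df`, `label`, and `onehot` are illustrative choices, not taken from the original code.

```r
df <- iris

# Integer (label) encoding: factor levels setosa, versicolor, virginica -> 1, 2, 3
df$label <- as.integer(df$Species)

# One-hot encoding: one 0/1 indicator column per species ("- 1" drops the intercept)
onehot <- model.matrix(~ Species - 1, data = df)
head(onehot, 3)
```

Keras-style `to_categorical()` expects 0-based labels, so for that route one would pass `as.integer(df$Species) - 1` instead.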

We can then check the converted values against the original labels.
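A cross-tabulation of the original species against the integer labels confirms the mapping. This sketch recreates the integer labels so that it runs on its own; the name `label` is illustrative.

```r
# Recreate the integer labels and cross-tabulate them against Species;
# each species should fall entirely in one label column
label <- as.integer(iris$Species)
table(Species = iris$Species, label = label)
```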


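This confirms that setosa has been encoded as 1 and the other two species, versicolor and virginica, as 2 and 3, respectively. Note that this is integer (label) encoding; one-hot encoding instead represents each species as its own 0/1 indicator column, which is the target format expected by a softmax output layer trained with categorical cross-entropy.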

b. Splitting the Data

The next step is to split the dataset into training and validation datasets.
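A minimal base-R sketch of an 80/20 random split follows, with the four measurements then standardized using training-set means and standard deviations so that no information from the validation rows leaks into preprocessing. The seed, split ratio, and object names are illustrative assumptions; the split is not stratified, so class proportions can differ slightly between the two sets.

```r
set.seed(42)   # make the random split reproducible

# 80/20 split: sample 120 row indices for training, keep the rest for validation
n <- nrow(iris)
train_idx <- sample(n, size = round(0.8 * n))
train <- iris[train_idx, ]
valid <- iris[-train_idx, ]

# Standardize the measurements with statistics computed on the training rows only
features <- c("Sepal.Length", "Sepal.Width", "Petal.Length", "Petal.Width")
mu  <- colMeans(train[, features])
sds <- apply(train[, features], 2, sd)
train[, features] <- scale(train[, features], center = mu, scale = sds)
valid[, features] <- scale(valid[, features], center = mu, scale = sds)

c(train = nrow(train), valid = nrow(valid))
```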


Now, we can proceed to set up the model using different packages for deep learning in R.

Building a Deep Learning Model in R

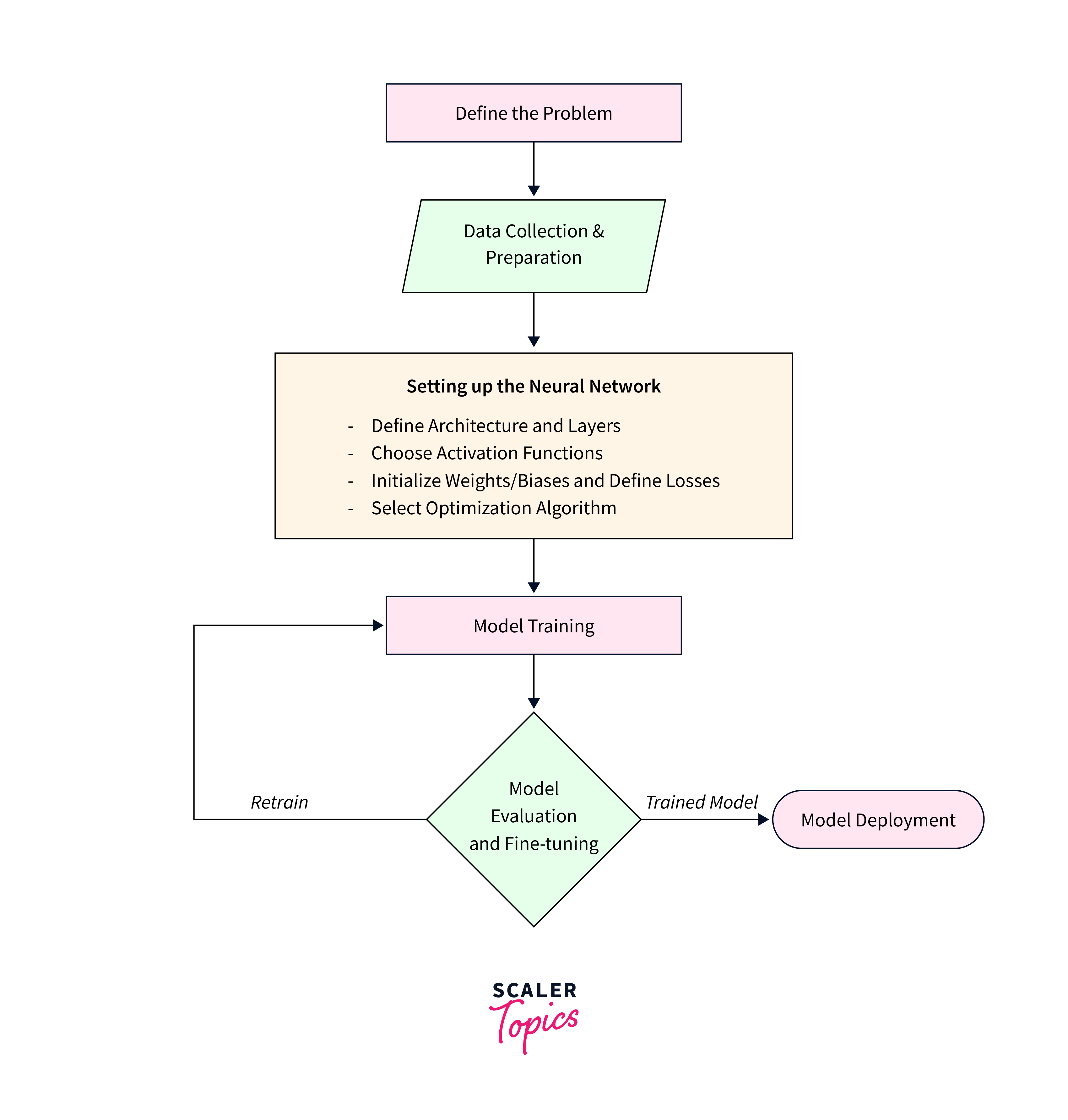

In this step, we define the neural network's architecture, including its layers and activation functions. We then compile the model with an optimizer, a loss function, and evaluation metrics, and train it on the training data; during training, the optimizer iteratively adjusts the weights and biases to minimize the loss. Finally, we evaluate the model's performance on validation or test data.
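To make these terms concrete, here is a base-R sketch of a single forward pass through a network with one hidden layer (ReLU activation) and a softmax output, followed by the categorical cross-entropy loss. The weights are random, as they would be before training, and the layer sizes are illustrative assumptions; an optimizer would repeatedly adjust `W1`, `b1`, `W2`, and `b2` to reduce this loss.

```r
set.seed(1)

relu    <- function(z) pmax(z, 0)
softmax <- function(z) {                # row-wise softmax
  e <- exp(z - apply(z, 1, max))        # subtract each row's max for numerical stability
  e / rowSums(e)
}

x <- scale(as.matrix(iris[, 1:4]))      # 150 x 4 standardized inputs
y <- model.matrix(~ Species - 1, iris)  # 150 x 3 one-hot targets

# Randomly initialized parameters: 4 inputs -> 8 hidden units -> 3 classes
W1 <- matrix(rnorm(4 * 8, sd = 0.5), 4, 8); b1 <- rep(0, 8)
W2 <- matrix(rnorm(8 * 3, sd = 0.5), 8, 3); b2 <- rep(0, 3)

# Forward pass: hidden layer, then output layer with softmax
h     <- relu(x %*% W1 + matrix(b1, nrow(x), 8, byrow = TRUE))
probs <- softmax(h %*% W2 + matrix(b2, nrow(x), 3, byrow = TRUE))

# Mean categorical cross-entropy of the untrained network
loss <- -mean(rowSums(y * log(probs)))
loss
```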

Deep Learning in R Using Different Packages

To build a deep learning model in R, we start by preparing the data and dividing it into training and testing sets. We then specify the network structure (for example, the number of hidden layers and neurons) and train the model. After training, we use the model to make predictions on the testing data and compare them with the actual values using metrics such as accuracy or a confusion matrix. The model can then be fine-tuned by adjusting parameters such as the learning rate or the number of hidden layers and neurons.
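Whichever package produces the predictions, the comparison step can be done in base R. The helper below is a hypothetical illustration (`evaluate_predictions` is not a function from any package): it builds a confusion matrix with `table()` and computes overall accuracy, assuming predicted labels come from the same set of classes as the actual ones.

```r
# Hypothetical helper: compare predicted and actual class labels
evaluate_predictions <- function(predicted, actual) {
  lv <- levels(factor(actual))
  predicted <- factor(predicted, levels = lv)
  actual    <- factor(actual, levels = lv)
  cm <- table(Predicted = predicted, Actual = actual)
  list(confusion = cm, accuracy = sum(diag(cm)) / sum(cm))
}

# Usage with made-up labels, only to show the output format
actual    <- c("setosa", "setosa", "versicolor", "virginica", "virginica")
predicted <- c("setosa", "setosa", "virginica",  "virginica", "virginica")
res <- evaluate_predictions(predicted, actual)
res$confusion
res$accuracy   # 4 of 5 correct -> 0.8
```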

Several packages in R facilitate deep learning, including Keras, neuralnet, deepnet, and h2o. These packages provide tools for designing the neural network architecture, training with optimization algorithms, monitoring performance, and performing hyperparameter tuning, validation, and testing.

For simplicity, we will continue with the Iris dataset to demonstrate how to construct our deep learning model using different packages in R.

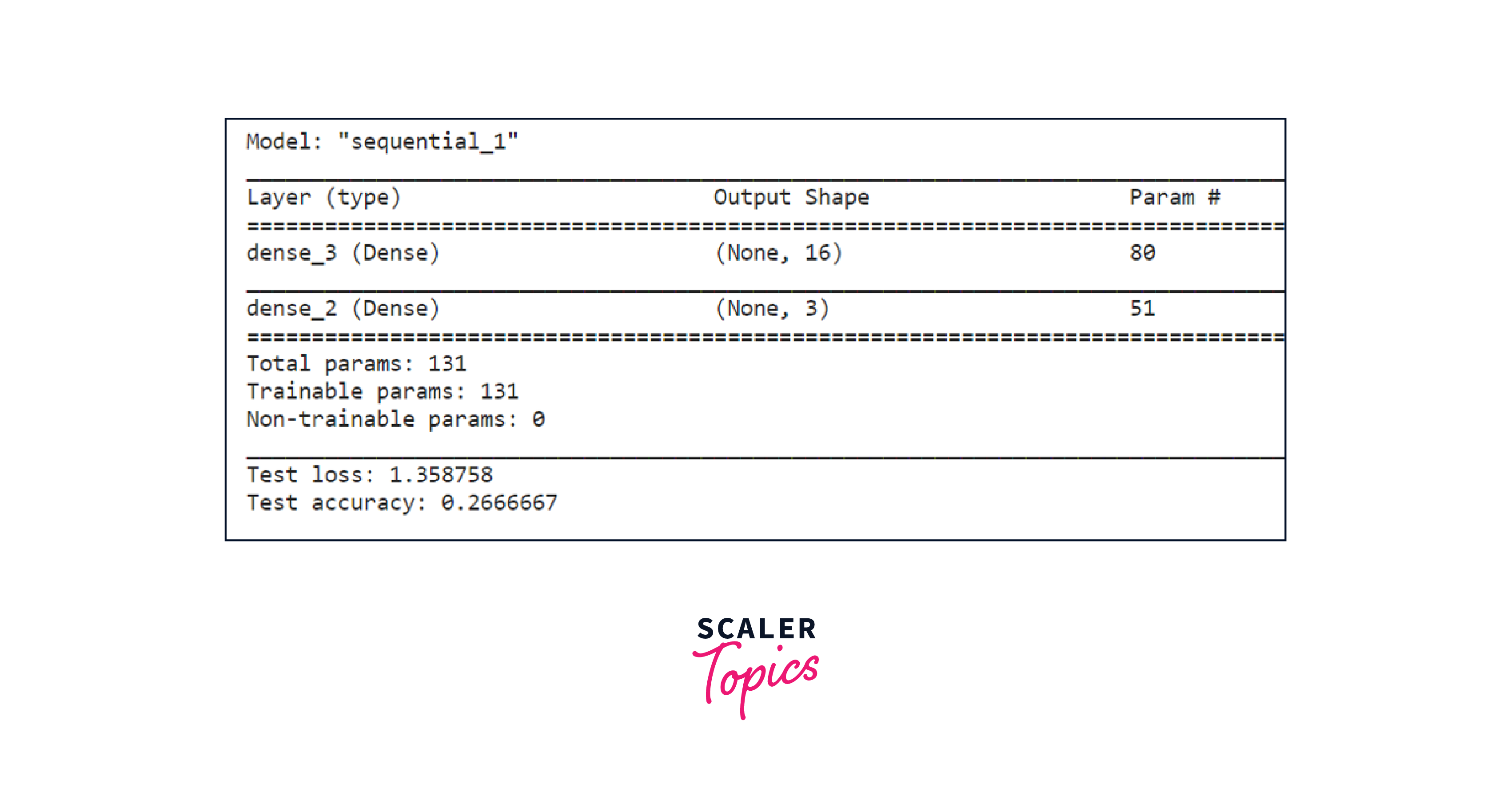

1. Keras

Keras, a popular Python library, is also available in R through the open-source keras package, which provides an interface to it. Keras is a high-level neural network API written in Python that runs on top of TensorFlow.

Here's how we can set up the deep learning model in keras for the iris dataset.
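A minimal sketch of the Keras workflow for iris is shown below. It assumes the keras R package (2.x API) with a working TensorFlow backend; the newer keras3 package differs slightly (for example, the input shape is passed to `keras_model_sequential()`). The layer sizes, learning rate, and epoch count are illustrative, untuned choices.

```r
library(keras)

set.seed(42)
x <- scale(as.matrix(iris[, 1:4]))                                  # standardized features
y <- to_categorical(as.integer(iris$Species) - 1, num_classes = 3)  # 0-based labels -> one-hot

# Random 80/20 train/test split (rows of x_train end up shuffled)
train_idx <- sample(nrow(x), 120)
x_train <- x[train_idx, ];  y_train <- y[train_idx, ]
x_test  <- x[-train_idx, ]; y_test  <- y[-train_idx, ]

# Architecture: 4 inputs -> 16 ReLU units -> 3-way softmax
model <- keras_model_sequential() %>%
  layer_dense(units = 16, activation = "relu", input_shape = 4) %>%
  layer_dense(units = 3, activation = "softmax")

model %>% compile(
  loss = "categorical_crossentropy",
  optimizer = optimizer_adam(learning_rate = 0.01),
  metrics = "accuracy"
)

history <- model %>% fit(
  x_train, y_train,
  epochs = 100, batch_size = 16,
  validation_split = 0.2, verbose = 0
)

# Loss and accuracy on the held-out rows
scores <- model %>% evaluate(x_test, y_test, verbose = 0)
scores

# Predicted species for the held-out rows
probs <- model %>% predict(x_test)
pred  <- levels(iris$Species)[max.col(probs)]
```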


2. deepnet

Another deep learning package in R is deepnet, which implements feedforward neural networks trained with backpropagation, along with architectures such as restricted Boltzmann machines, deep belief networks, and stacked autoencoders. Here's how we can set up a deep learning model in deepnet for the iris dataset.
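A minimal sketch with deepnet follows, assuming the package is installed from CRAN. `nn.train()` expects a numeric input matrix and, for a multi-class problem, a one-hot target matrix; `nn.predict()` returns one column of scores per class. The hidden-layer size, learning rate, and epoch count are illustrative, untuned choices.

```r
library(deepnet)

set.seed(42)
x <- scale(as.matrix(iris[, 1:4]))          # standardized features
y <- model.matrix(~ Species - 1, iris)      # one-hot targets (150 x 3)

train_idx <- sample(nrow(x), 120)

# One hidden layer of 8 tanh units, softmax output, mini-batches of 10 rows
nn <- nn.train(x[train_idx, ], y[train_idx, ],
               hidden = c(8), activationfun = "tanh", output = "softmax",
               learningrate = 0.1, numepochs = 200, batchsize = 10)

# Class scores for the held-out rows, converted to species names
probs <- nn.predict(nn, x[-train_idx, ])
pred  <- levels(iris$Species)[max.col(probs)]

# Predicted classes against the actual species
table(Predicted = pred, Actual = iris$Species[-train_idx])
```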


3. neuralnet

The neuralnet package trains feedforward neural networks with a focus on simplicity and ease of use in R. It adjusts the network's weights based on the error of the output using backpropagation; by default, it uses the resilient backpropagation variant (rprop+).

Here’s how we can set up the deep learning model in neuralnet package for the iris dataset.
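A minimal sketch with neuralnet, assuming a recent CRAN version that provides `predict()` for fitted networks. Each species is written as its own logical output on the left-hand side of the formula, a pattern neuralnet supports for multi-class problems. The hidden-layer size is illustrative, and `stepmax` is raised above its default to give the optimizer more room to converge.

```r
library(neuralnet)

set.seed(42)
df <- iris
df[, 1:4] <- scale(df[, 1:4])    # standardize the four measurements
train_idx <- sample(nrow(df), 120)
train <- df[train_idx, ]
test  <- df[-train_idx, ]

# One logical output per species; linear.output = FALSE applies the logistic
# activation to the outputs, as is usual for classification
nn <- neuralnet(
  (Species == "setosa") + (Species == "versicolor") + (Species == "virginica") ~
    Sepal.Length + Sepal.Width + Petal.Length + Petal.Width,
  data = train, hidden = c(4), linear.output = FALSE, stepmax = 1e6
)

# predict() returns one column per output; pick the largest per row
probs <- predict(nn, test)
pred  <- levels(iris$Species)[max.col(probs)]
table(Predicted = pred, Actual = test$Species)
```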


4. H2O

H2O is an open-source machine learning platform with an R interface, the h2o package. It allows us to build and train various machine learning models, including deep learning models. One of the main advantages of using h2o for deep learning is its ability to handle large datasets with distributed, in-memory computing, which offers scalability and performance optimizations.

Here’s how we can set up the deep learning model in H2O for the iris dataset.

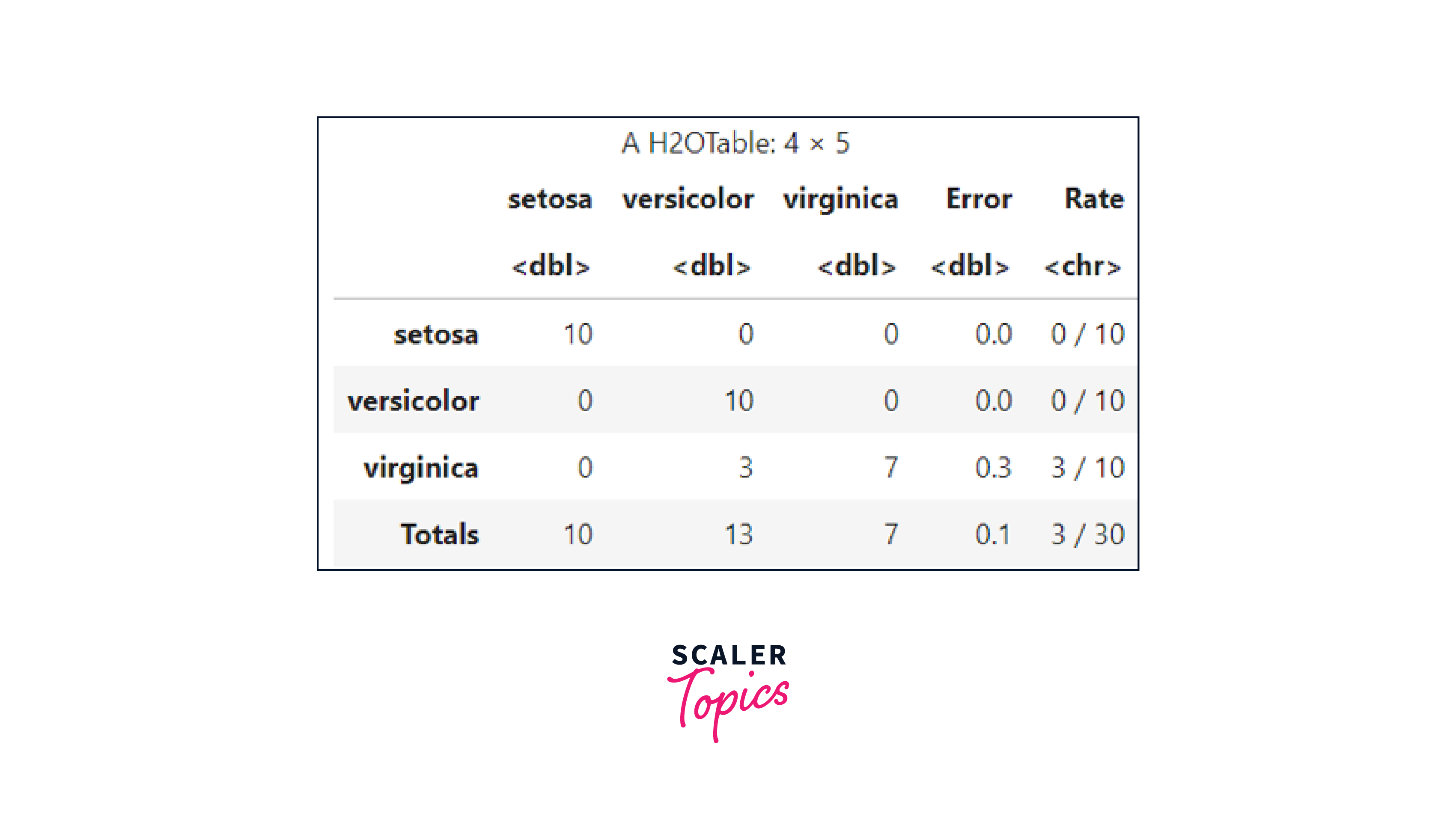

We can print a confusion matrix to summarize the model's performance on held-out data.
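A minimal sketch of the H2O workflow, assuming the h2o package and a Java runtime are installed; `h2o.init()` starts (or connects to) a local H2O instance. It trains a network with `h2o.deeplearning()` and prints the confusion matrix on the held-out rows. The layer sizes, epochs, and seed are illustrative values.

```r
library(h2o)

h2o.init(nthreads = -1)          # start or connect to a local H2O cluster
iris_h2o <- as.h2o(iris)         # copy the data frame into the cluster

# Approximately 80/20 random split inside H2O
splits <- h2o.splitFrame(iris_h2o, ratios = 0.8, seed = 42)
train <- splits[[1]]
test  <- splits[[2]]

# Two hidden layers of 16 rectifier units; H2O standardizes inputs by default
model <- h2o.deeplearning(
  x = c("Sepal.Length", "Sepal.Width", "Petal.Length", "Petal.Width"),
  y = "Species",
  training_frame = train,
  hidden = c(16, 16),
  activation = "Rectifier",
  epochs = 100,
  seed = 42,
  reproducible = TRUE            # single-threaded: slower but repeatable
)

# Confusion matrix on the held-out rows
perf <- h2o.performance(model, newdata = test)
h2o.confusionMatrix(perf)
```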


Fine-Tuning Deep Learning Models in R

Fine-tuning deep learning models in R is a crucial part of building robust, high-performing neural networks. It involves optimizing various components of the model and the training process: adjusting hyperparameters, refining the architecture, revisiting data preprocessing, and applying regularization techniques. Through iterative experimentation and validation, fine-tuning helps a model achieve both good accuracy on its task and good generalization to unseen data.
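Several of these techniques map directly onto Keras options. The sketch below, assuming the keras R package (2.x API) and a TensorFlow backend, adds L2 weight regularization, a dropout layer, and early stopping on the validation loss to an iris model (`regularizer_l1()` would give an L1 penalty instead). The rows are shuffled first because Keras takes `validation_split` from the last rows, and iris is sorted by species. The penalty of 0.001, dropout rate of 0.2, and patience of 10 epochs are illustrative values to be tuned.

```r
library(keras)

set.seed(42)
x <- scale(as.matrix(iris[, 1:4]))
y <- to_categorical(as.integer(iris$Species) - 1, num_classes = 3)

# Shuffle rows so the validation split is not all one species
perm <- sample(nrow(x))
x <- x[perm, ]
y <- y[perm, ]

model <- keras_model_sequential() %>%
  layer_dense(units = 32, activation = "relu", input_shape = 4,
              kernel_regularizer = regularizer_l2(0.001)) %>%   # L2 penalty on weights
  layer_dropout(rate = 0.2) %>%                                 # randomly zero 20% of units
  layer_dense(units = 3, activation = "softmax")

model %>% compile(
  loss = "categorical_crossentropy",
  optimizer = optimizer_adam(learning_rate = 0.005),
  metrics = "accuracy"
)

# Stop when validation loss has not improved for 10 epochs; keep the best weights
early_stop <- callback_early_stopping(monitor = "val_loss", patience = 10,
                                      restore_best_weights = TRUE)

history <- model %>% fit(
  x, y, epochs = 300, batch_size = 16,
  validation_split = 0.2, callbacks = list(early_stop), verbose = 0
)
length(history$metrics$loss)   # number of epochs actually run
```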

The following steps can be carried out individually or in combination to fine-tune these models:

- Ensure that missing data has been handled appropriately, features have been scaled, and categorical variables have been encoded properly before retraining the model.

- Experiment with the model architecture by trying different, but appropriate, numbers of layers and neurons and different activation functions.

- Tune hyperparameters such as the learning rate, batch size, and dropout rate to improve overall model performance.

- Apply L1 and L2 regularization to reduce overfitting.

- Assess model performance more reliably with cross-validation techniques.

- Monitor and track the training and validation metrics.

- Consider combining multiple models (ensemble techniques) for improved results.
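Cross-validation and architecture search can be combined in a few lines. The sketch below, assuming the neuralnet package, estimates 5-fold cross-validated accuracy for several single-hidden-layer sizes. `cv_accuracy` is a hypothetical helper, and scaling the features once on all rows is a simplification; strictly, the scaling statistics should be computed within each training fold.

```r
library(neuralnet)

set.seed(42)
df <- iris
df[, 1:4] <- scale(df[, 1:4])                      # simplification: scaled on all rows
k <- 5
folds <- sample(rep(1:k, length.out = nrow(df)))   # random fold id for each row

f <- (Species == "setosa") + (Species == "versicolor") + (Species == "virginica") ~
  Sepal.Length + Sepal.Width + Petal.Length + Petal.Width

# Hypothetical helper: mean k-fold validation accuracy for one hidden-layer setting
cv_accuracy <- function(hidden) {
  acc <- numeric(k)
  for (i in seq_len(k)) {
    train <- df[folds != i, ]
    test  <- df[folds == i, ]
    nn <- neuralnet(f, data = train, hidden = hidden,
                    linear.output = FALSE, stepmax = 1e6)
    pred <- levels(df$Species)[max.col(predict(nn, test))]
    acc[i] <- mean(pred == test$Species)
  }
  mean(acc)
}

# Compare a few candidate hidden-layer sizes
candidates <- list(2, 4, 8)
cv_results <- sapply(candidates, cv_accuracy)
names(cv_results) <- sapply(candidates, paste, collapse = "-")
cv_results
```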

Conclusion

In this article on deep learning in R, we discussed deep learning and the power of neural networks for real-world applications. We also covered the steps from setting up the deep learning environment to building models with multiple R packages.

Here are some key takeaways:

- Deep learning is a subfield of machine learning that excels at complex tasks by using artificial neural networks loosely inspired by the human brain.

- R proves to be a versatile tool for deep learning, offering packages like deepnet, neuralnet, H2O, and Keras.

- Deep learning finds its use in image recognition, natural language processing, finance, healthcare, and more.

- Professionals from various domains, including data scientists, researchers, and engineers, can utilize R for deep learning tasks to solve complex problems.

In conclusion, R serves as a valuable programming tool for setting up complex deep learning models.