DevOps Roadmap 2024 | How to Become a DevOps Engineer

DevOps, a blend of software development (Dev) and IT operations (Ops), emphasizes efficient, high-quality software delivery through collaborative practices. It is a cultural mindset that advocates communication, integration, and automation between development and operations teams. This roadmap guides beginners through the steps and concepts needed to become a proficient DevOps engineer, with a focus on collaboration and continuous improvement across the software lifecycle, and highlights the key skills to master along the way.

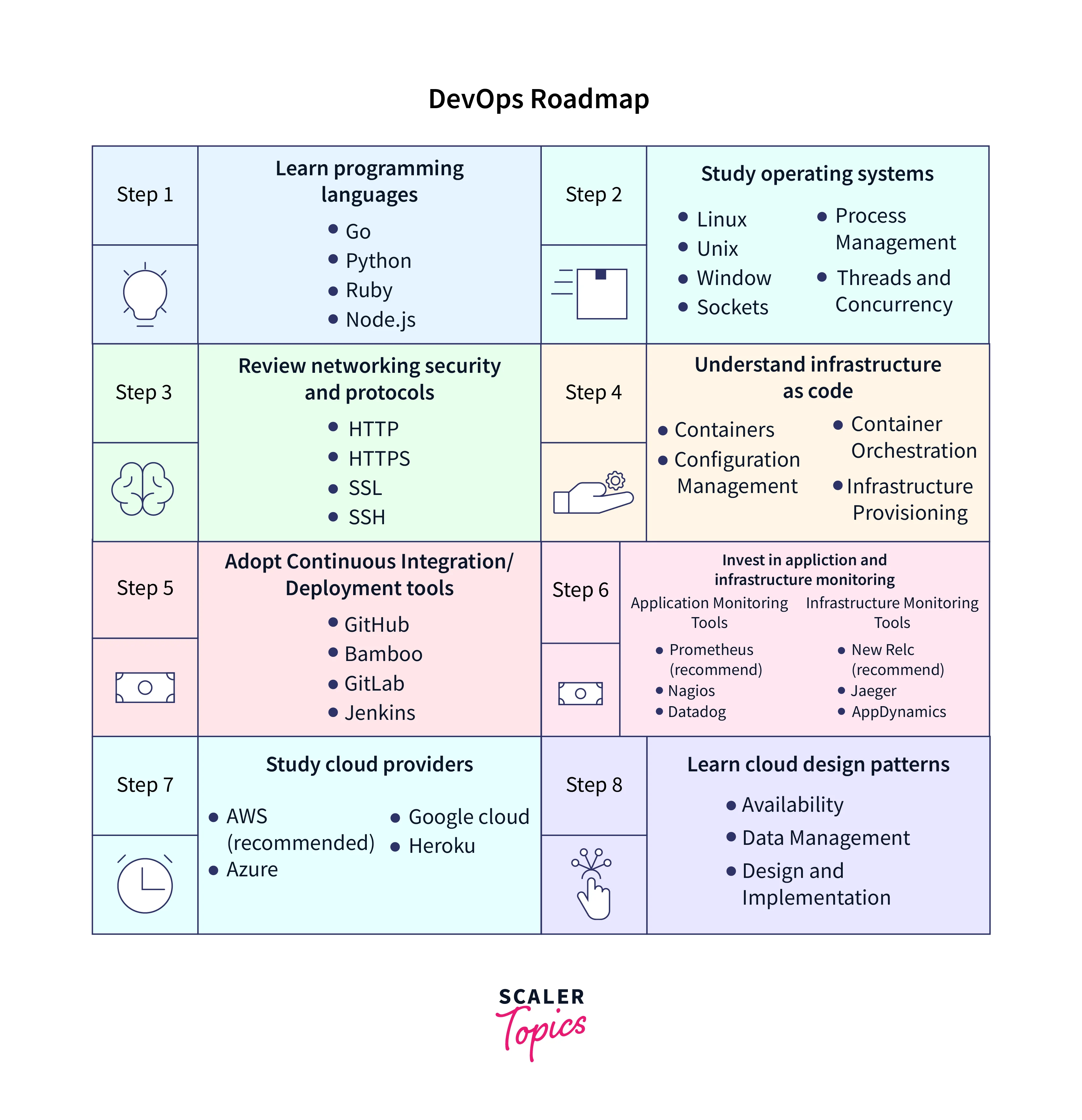

The DevOps Roadmap

As the roadmap shows, DevOps touches on a wide range of software development ideas. That is because DevOps covers the complete software development and maintenance process and spans the entire technology stack, from development through operations. Rather than covering every branch, this guide follows the main route and walks through each stage in greater detail.

Learn Programming Languages

DevOps is a set of practices and methodologies for optimizing the software delivery pipeline, and it is not tied to any particular programming language. Even so, proficiency in certain languages is a real asset for DevOps professionals. The following languages are frequently used in DevOps:

Go (recommended)

Go is a programming language created at Google that has become increasingly popular in DevOps because of its fast compilation, efficient memory management, and built-in concurrency support (goroutines and channels). It is frequently used to build network applications and microservices, and many widely used DevOps tools, including Docker, Kubernetes, Terraform, and Prometheus, are written in Go.

Ruby

Ruby is another popular language for scripting, automation, and configuration management in DevOps. The configuration management tools Chef and Puppet are written largely in Ruby, and Chef recipes are themselves written in a Ruby-based DSL.

Python

Python is a versatile programming language frequently used in DevOps for scripting, automation, and task orchestration. Its large standard library and extensive selection of third-party packages make it suitable for many other tasks as well, including data analysis and machine learning. Some of the most common Python libraries in DevOps are boto3 (for interacting with AWS services), the official Kubernetes Python client (the kubernetes package on PyPI), paramiko (SSH), Fabric (remote command execution), PyYAML (YAML parsing), and Jinja2 (templating).
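As a small taste of Python scripting for DevOps, the sketch below renders a configuration file from a template. It uses the standard library's string.Template rather than Jinja2 so that it runs without third-party packages; the Nginx upstream block, the function name render_upstream, and the addresses are hypothetical examples.

```python
from string import Template

# Hypothetical template for an Nginx "upstream" block; $name and $servers are placeholders.
UPSTREAM_TEMPLATE = Template(
    "upstream $name {\n"
    "$servers"
    "}\n"
)


def render_upstream(name: str, hosts: list[str], port: int) -> str:
    """Render one 'server host:port;' line per backend host into the template."""
    servers = "".join(f"    server {host}:{port};\n" for host in hosts)
    # substitute() raises KeyError if a placeholder is left unfilled, which catches template typos early.
    return UPSTREAM_TEMPLATE.substitute(name=name, servers=servers)


if __name__ == "__main__":
    print(render_upstream("app_backend", ["10.0.0.11", "10.0.0.12"], 8080), end="")
```

A script like this is typically run from a deployment job so that the list of backends comes from an inventory rather than being edited by hand.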

Node.js

Node.js is an open-source, cross-platform JavaScript runtime that lets developers execute JavaScript outside a web browser. In DevOps it is frequently used for building web applications, server-side scripting, and task automation with task runners such as Grunt and Gulp.

Study Operating Systems

The DevOps roadmap emphasizes the importance of studying operating systems. Operating systems provide the fundamental infrastructure on which the software delivery pipeline is built, so successful DevOps work requires a thorough understanding of them. Important operating system topics for a DevOps engineer include memory management, processes, threads, file systems, and networking.

DevOps professionals should also be familiar with the operating systems and distributions most frequently used in the software industry, such as Linux, Unix, and Windows.

Linux (recommended)

Linux is a free and open-source operating system that is widely used in software development and is especially popular on web servers. Many DevOps tools and technologies, including Docker and Kubernetes, are built to run on Linux. DevOps engineers should know the Linux command line and the distributions frequently used in industry, such as Ubuntu, CentOS, and Red Hat Enterprise Linux.

Unix

Unix is a family of multitasking, multiuser operating systems that originated at Bell Labs in the late 1960s and early 1970s. Although Linux has largely replaced it in software development, Unix is still used in legacy systems and in specialized industries such as finance and aerospace. DevOps practitioners working in these areas may need to know Unix principles and commands.

Windows

Windows is Microsoft's widely used operating system. Although it is less common than Linux on servers and in cloud infrastructure, it remains an important platform for many organizations. DevOps engineers working in Windows environments should know PowerShell, Microsoft's scripting language and automation framework for managing Windows systems, and should be familiar with Windows Server, the edition of Windows designed for business and data-center workloads.

OS Concepts to Learn

Several operating system (OS) concepts are important for DevOps professionals to understand:

Process Management

Process management covers how the OS creates, schedules, prioritizes, and terminates running programs (processes). DevOps engineers should know how operating systems allocate CPU time and other resources, control memory usage, and handle interprocess communication (IPC) through mechanisms such as pipes, signals, and sockets.
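The sketch below shows process creation and pipe-based interprocess communication from Python: the parent starts a child process, writes to the child's standard input through a pipe, reads its standard output, and collects its exit status. The function name run_child and the child script are illustrative.

```python
import subprocess
import sys


def run_child(script: str, stdin_data: str = "") -> tuple[int, str]:
    """Start a child Python process, send it data over a pipe, and return (exit code, stdout)."""
    # The OS creates a new process; its stdin and stdout are connected to this parent via pipes.
    result = subprocess.run(
        [sys.executable, "-c", script],
        input=stdin_data,
        capture_output=True,
        text=True,
        timeout=10,  # avoid hanging forever if the child never exits
    )
    return result.returncode, result.stdout


if __name__ == "__main__":
    # The child upper-cases whatever it reads from stdin, then exits with status 3.
    child = "import sys; sys.stdout.write(sys.stdin.read().upper()); sys.exit(3)"
    print(run_child(child, "ping"))  # (3, 'PING')
```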

Sockets

Sockets are endpoints for network communication between applications. In DevOps they underpin communication between the components of an application and between services in a distributed system. DevOps engineers should understand socket programming, including creating and managing sockets, sending and receiving data over them, and troubleshooting common socket-related problems such as refused connections, timeouts, and ports already in use. For example, Jenkins, a popular DevOps tool, relies on network connections between its controller and its agents (formerly called the master and slave nodes) to distribute build work.
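Here is a minimal TCP echo server and client using Python's socket module, sketching the socket lifecycle described above: bind, listen, accept, send, receive, and close. Binding to port 0 lets the OS choose a free port; the function names start_echo_server and send_message are illustrative.

```python
import socket
import threading


def start_echo_server(host: str = "127.0.0.1") -> tuple[socket.socket, int]:
    """Listen on an OS-chosen port and echo each connection's data back in a background thread."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    server.bind((host, 0))  # port 0: the OS assigns a free ephemeral port
    server.listen()
    port = server.getsockname()[1]

    def serve() -> None:
        while True:
            try:
                conn, _addr = server.accept()
            except OSError:
                return  # the listening socket was closed
            with conn:
                chunks = []
                # Read until the client shuts down its sending side (recv returns b"").
                while chunk := conn.recv(4096):
                    chunks.append(chunk)
                conn.sendall(b"".join(chunks))

    threading.Thread(target=serve, daemon=True).start()
    return server, port


def send_message(port: int, message: bytes, host: str = "127.0.0.1") -> bytes:
    """Connect, send a message, signal end-of-request, and read the full reply."""
    with socket.create_connection((host, port), timeout=5) as client:
        client.sendall(message)
        client.shutdown(socket.SHUT_WR)  # tell the server we are done sending
        chunks = []
        while chunk := client.recv(4096):
            chunks.append(chunk)
        return b"".join(chunks)


if __name__ == "__main__":
    server, port = start_echo_server()
    print(send_message(port, b"hello, socket"))  # b'hello, socket'
    server.close()
```

Reading until the peer closes its side, rather than assuming one recv call returns the whole message, avoids a common bug: TCP is a byte stream and may deliver data in arbitrary chunks.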

Threads and Concurrency

Threads let a single program execute several tasks concurrently. This matters in DevOps because many applications are built to serve many requests or carry out multiple operations at the same time. To avoid race conditions and other concurrency bugs, DevOps engineers should be familiar with thread synchronization mechanisms such as mutexes, semaphores, and monitors, and should be able to tune multi-threaded programs for performance and resource efficiency.
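The following Python sketch shows two of the synchronization primitives mentioned above: a mutex (threading.Lock) that prevents a race condition on a shared counter, and a semaphore (threading.Semaphore) that caps how many threads use a resource at once. The class and function names are illustrative.

```python
import threading
import time


class SafeCounter:
    """A counter whose increment is protected by a mutex, so concurrent updates are not lost."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        # "self.value += 1" is a read-modify-write; without the lock, two threads could interleave it.
        with self._lock:
            self.value += 1


def count_with_threads(num_threads: int = 8, increments: int = 10_000) -> int:
    counter = SafeCounter()

    def worker() -> None:
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


def peak_concurrency(limit: int = 2, tasks: int = 6) -> int:
    """Run tasks that share a semaphore and report the largest number that ever ran at once."""
    semaphore = threading.Semaphore(limit)
    state_lock = threading.Lock()
    active = 0
    peak = 0

    def task() -> None:
        nonlocal active, peak
        with semaphore:  # at most `limit` tasks can be inside this block
            with state_lock:
                active += 1
                peak = max(peak, active)
            time.sleep(0.01)  # simulate work, e.g. holding a database connection
            with state_lock:
                active -= 1

    threads = [threading.Thread(target=task) for _ in range(tasks)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return peak


if __name__ == "__main__":
    print(count_with_threads())  # 80000
    print(peak_concurrency())    # never more than 2
```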

Virtualization

Virtualization lets one physical machine run several isolated virtual machines (VMs), each with its own operating system, through a hypervisor such as KVM, VMware ESXi, or Hyper-V. Because environments can be created, cloned, and discarded on demand, virtualization supports the main goal of DevOps, delivering software faster and with higher quality, and it affects every stage of the process: development, testing, deployment, operations, delivery, and maintenance.

Review Networking, Security, and Protocols

Network security and protocols are critical components of any DevOps roadmap. Here is a brief overview of some key concepts:

HTTP

The Hypertext Transfer Protocol (HTTP) transfers data over the internet. It is the backbone of the World Wide Web and governs communication between web servers and clients such as browsers. Understanding HTTP is essential in DevOps for tasks such as setting up web servers, delivering web applications, and troubleshooting web traffic.

When a client sends an HTTP request to a server, the server returns an HTTP response containing a status code, headers, and usually a body with data or instructions for the client. HTTP/1.1 and HTTP/2 run on top of TCP/IP (Transmission Control Protocol and Internet Protocol), which provides reliable delivery, while HTTP/3 runs over QUIC on top of UDP. HTTP/1.1 messages are text-based (HTTP/2 and HTTP/3 use binary framing), and message bodies can carry binary data such as images or videos.
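To make the request/response cycle concrete, this sketch uses only Python's standard library: http.server answers GET requests and http.client sends them, returning the status code, one header, and the body of each response. The /healthz path and the HealthHandler class are hypothetical examples.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class HealthHandler(BaseHTTPRequestHandler):
    """Answers GET /healthz with 200 and any other path with 404."""

    def do_GET(self) -> None:
        if self.path == "/healthz":
            status, body = 200, b"ok"
        else:
            status, body = 404, b"not found"
        self.send_response(status)  # writes the status line, plus Server and Date headers
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()  # blank line separating headers from the body
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        pass  # silence the default per-request logging to stderr


def fetch(port: int, path: str) -> tuple[int, str, bytes]:
    """Send GET <path> and return (status code, Content-Type header, body)."""
    conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return response.status, response.getheader("Content-Type"), response.read()
    finally:
        conn.close()


if __name__ == "__main__":
    server = HTTPServer(("127.0.0.1", 0), HealthHandler)  # port 0: pick any free port
    port = server.server_address[1]
    threading.Thread(target=server.serve_forever, daemon=True).start()
    print(fetch(port, "/healthz"))  # (200, 'text/plain', b'ok')
    print(fetch(port, "/missing"))  # (404, 'text/plain', b'not found')
    server.shutdown()
```

Health-check endpoints like this are what load balancers and orchestrators typically poll to decide whether an instance should receive traffic.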

HTTPS

Hypertext Transfer Protocol Secure (HTTPS) is HTTP sent over an encrypted TLS connection. HTTPS is crucial for protecting online traffic and preventing data breaches, particularly in applications that handle sensitive user data. DevOps engineers need to know how to obtain, install, and renew SSL/TLS certificates and how to configure HTTPS on web servers.

HTTPS protects the authenticity, confidentiality, and integrity of data sent over the internet. When a client opens an HTTPS connection, the server presents a digital certificate that contains its public key and is signed by a certificate authority (CA) the client trusts. The client verifies the certificate, and the two sides then perform a TLS handshake to agree on shared session keys; in modern TLS, the certificate's key proves the server's identity, while the session keys come from an ephemeral key exchange. All subsequent messages are encrypted and integrity-protected with those session keys, so unauthorized parties can neither read the data nor alter it without detection.

SSL/TLS

Secure Sockets Layer (SSL) and its successor, Transport Layer Security (TLS), are protocols that protect online communication by authenticating servers (and optionally clients) and encrypting data in transit. All versions of SSL are deprecated and considered insecure, so modern systems use TLS 1.2 or TLS 1.3, even though certificates are still commonly called "SSL certificates". DevOps engineers should know how to set up TLS on web servers, including how to create, manage, and troubleshoot certificates.
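When debugging certificate problems, it helps to look at what a TLS client actually negotiates. The sketch below uses Python's ssl module to build a verifying client context (trusted CA certificates, hostname checking, TLS 1.2 or newer) and to report the negotiated protocol version, cipher, and certificate details for a host. inspect_tls needs network access, and the function names are illustrative.

```python
import socket
import ssl


def make_client_context() -> ssl.SSLContext:
    """Create a client context that verifies certificates and refuses protocols older than TLS 1.2."""
    ctx = ssl.create_default_context()  # loads the system's trusted CA certificates, enables hostname checks
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def inspect_tls(host: str, port: int = 443) -> dict:
    """Connect to host:port over TLS and summarize the negotiated session and server certificate."""
    ctx = make_client_context()
    with socket.create_connection((host, port), timeout=5) as raw_sock:
        # server_hostname enables SNI and lets the context check the certificate's hostname.
        with ctx.wrap_socket(raw_sock, server_hostname=host) as tls_sock:
            cert = tls_sock.getpeercert()
            return {
                "tls_version": tls_sock.version(),  # e.g. "TLSv1.3"
                "cipher": tls_sock.cipher()[0],
                "subject": dict(rdn[0] for rdn in cert["subject"]),
                "issuer": dict(rdn[0] for rdn in cert["issuer"]),
                "expires": cert["notAfter"],
            }


if __name__ == "__main__":
    # Raises ssl.SSLCertVerificationError if the chain or hostname does not verify.
    print(inspect_tls("example.com"))
```

A verification error from this script (expired certificate, missing intermediate, hostname mismatch) usually points directly at the server-side configuration that needs fixing.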

SSH

SSH (Secure Shell) is a protocol for securely connecting to remote servers over a network. It provides an encrypted, authenticated channel that can be used for remote command execution, file transfers (for example, with scp or SFTP), and tunneling. DevOps engineers rely on SSH, usually with key-based rather than password authentication, to manage and access remote servers securely.
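In automation scripts, SSH is often driven by calling the OpenSSH client. This sketch builds an ssh command line with key-based, non-interactive options and runs a single remote command; the function names are illustrative, and running it requires the ssh client and a reachable host.

```python
import shlex
import subprocess
from typing import Optional


def build_ssh_command(
    host: str,
    remote_command: str,
    user: Optional[str] = None,
    port: int = 22,
    identity_file: Optional[str] = None,
) -> list[str]:
    """Build an OpenSSH client invocation that authenticates with a key and never prompts."""
    # BatchMode=yes makes ssh fail instead of asking for a password, which suits unattended jobs.
    cmd = ["ssh", "-p", str(port), "-o", "BatchMode=yes"]
    if identity_file:
        cmd += ["-i", identity_file]  # private key to authenticate with
    cmd.append(f"{user}@{host}" if user else host)
    cmd.append(remote_command)  # executed by the remote user's shell
    return cmd


def run_remote(host: str, remote_command: str, **kwargs) -> subprocess.CompletedProcess:
    """Run one command on a remote host and capture its output and exit status."""
    cmd = build_ssh_command(host, remote_command, **kwargs)
    print("running:", shlex.join(cmd))
    return subprocess.run(cmd, capture_output=True, text=True, timeout=60)
```

For example, run_remote("web01.example.com", "uptime", user="deploy", identity_file="/home/deploy/.ssh/id_ed25519") would run uptime on a hypothetical host web01.example.com as the hypothetical user deploy; a non-zero returncode signals an authentication or command failure.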

Understand Infrastructure as Code

Infrastructure as code (IaC) is the practice of managing and provisioning infrastructure resources through code that can be versioned, reviewed, and tested just like application code. With IaC, DevOps engineers can automate the setup and configuration of infrastructure, making it more dependable, consistent, and scalable. IaC also improves collaboration between development and operations teams, reduces manual errors, and makes it easier to enforce organizational and industry standards.
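Declarative IaC tools compare the desired state written in code with the actual state of the infrastructure and compute the changes needed to reconcile them (Terraform calls this a plan). The sketch below illustrates only that idea with plain dictionaries; it is not how any particular tool is implemented, and the function name, resource names, and attributes are hypothetical.

```python
def plan(desired: dict[str, dict], actual: dict[str, dict]) -> dict[str, list[str]]:
    """Compute which resources must be created, updated, or deleted to reach the desired state."""
    create = sorted(desired.keys() - actual.keys())  # declared in code but missing
    delete = sorted(actual.keys() - desired.keys())  # running but no longer declared
    update = sorted(
        name
        for name in desired.keys() & actual.keys()
        if desired[name] != actual[name]  # exists, but its attributes have drifted
    )
    return {"create": create, "update": update, "delete": delete}


if __name__ == "__main__":
    desired = {
        "web-1": {"size": "small", "region": "eu-west"},
        "web-2": {"size": "small", "region": "eu-west"},
        "db-1": {"size": "large", "region": "eu-west"},
    }
    actual = {
        "web-1": {"size": "small", "region": "eu-west"},
        "db-1": {"size": "medium", "region": "eu-west"},
        "old-worker": {"size": "small", "region": "eu-west"},
    }
    print(plan(desired, actual))
    # {'create': ['web-2'], 'update': ['db-1'], 'delete': ['old-worker']}
```

When the infrastructure already matches the code, the plan is empty, which is what makes re-applying declarative IaC safe.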

Containers

Containerization packages a software component together with its dependencies and configuration into an isolated unit called a container. Containers provide a consistent runtime environment for applications across different machines and infrastructure, making it possible to package and distribute software in a portable, lightweight way. Unlike virtual machines, containers share the host's operating system kernel, which is why they start quickly and use fewer resources. By adopting containers, DevOps engineers can streamline application deployment, testing, and scaling, shortening time-to-market and increasing agility. Containers can also improve resource utilization, strengthen isolation between applications, and reduce infrastructure costs.

Docker

Docker is a well-known containerization platform that makes building and running containers simple. By packaging an application and its dependencies into a single container image with Docker, DevOps engineers can deploy and manage applications across many environments more easily. Docker also provides a consistent runtime environment, which makes it simpler to reproduce problems and debug programs, and its broad platform support makes it easier to deploy applications on different infrastructure and operating systems.
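Scripts and CI jobs often start containers through the Docker CLI. This sketch assembles a docker run invocation that publishes a port, sets environment variables, and applies memory and CPU limits; the function names, image, container name, and values are hypothetical, and actually starting the container requires a local Docker installation.

```python
import subprocess
from typing import Optional


def docker_run_args(
    image: str,
    name: str,
    host_port: int,
    container_port: int,
    env: Optional[dict[str, str]] = None,
    memory: Optional[str] = None,
    cpus: Optional[float] = None,
) -> list[str]:
    """Build a detached `docker run` command with port publishing, env vars, and resource limits."""
    args = [
        "docker", "run",
        "--detach",  # run in the background
        "--rm",      # remove the container when it stops
        "--name", name,
        "--publish", f"{host_port}:{container_port}",  # host port -> container port
    ]
    for key, value in (env or {}).items():
        args += ["--env", f"{key}={value}"]
    if memory:
        args += ["--memory", memory]  # e.g. "256m"
    if cpus is not None:
        args += ["--cpus", str(cpus)]  # e.g. 0.5 means half a CPU
    args.append(image)
    return args


def start_container(args: list[str]) -> str:
    """Run the command and return the new container's ID, which docker prints on stdout."""
    result = subprocess.run(args, capture_output=True, text=True, check=True)
    return result.stdout.strip()
```

For example, start_container(docker_run_args("nginx:1.25", "web", 8080, 80)) would serve the container's port 80 on the host's port 8080.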

Kubernetes

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. With Kubernetes, DevOps engineers can quickly deploy and manage containerized apps across many hosts and clusters. Its extensive feature set, including load balancing, automatic scaling, rolling updates, and self-healing, makes large-scale application management simpler. Kubernetes also runs on a wide variety of platforms, and managed offerings such as Amazon EKS, Azure Kubernetes Service (AKS), and Google Kubernetes Engine (GKE) make it easier to deploy apps across cloud providers.
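Kubernetes resources are declared as manifests. This sketch generates a Deployment manifest in Python and serializes it as JSON, which kubectl apply -f accepts as well as YAML. The function name, app name, image, port, and /healthz readiness path are hypothetical; the rolling-update settings ask Kubernetes to add one new pod at a time while keeping all existing replicas available.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 3, container_port: int = 8080) -> dict:
    """Build an apps/v1 Deployment manifest as a plain dictionary."""
    labels = {"app": name}  # the selector must match the pod template's labels
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "strategy": {
                "type": "RollingUpdate",
                # Never drop below the desired replica count; add at most one extra pod during a rollout.
                "rollingUpdate": {"maxUnavailable": 0, "maxSurge": 1},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": container_port}],
                            # Pods receive traffic only after this HTTP check succeeds (hypothetical path).
                            "readinessProbe": {"httpGet": {"path": "/healthz", "port": container_port}},
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(deployment_manifest("web", "registry.example.com/web:1.4.2"), indent=2))
```

Saving the printed output as deployment.json and running kubectl apply -f deployment.json would ask the cluster to converge on three replicas of the hypothetical image.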

Configuration Management

Configuration management is the practice of automating how infrastructure resources and software are configured and maintained. With configuration management tools such as Ansible, Chef, and Puppet, DevOps engineers can ensure that systems are configured correctly, consistently, and securely. These tools are typically idempotent: they describe the desired state and change a system only when it differs from that state, so running them repeatedly is safe. Configuration management also improves productivity, reduces human error, and gives teams better visibility into and control over their infrastructure.
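Idempotency is what lets configuration management tools run repeatedly without side effects. This sketch shows the idea for a single file, in the spirit of Ansible's lineinfile module but not its implementation: the line is appended only if it is missing, and the return value reports whether anything changed. The function name ensure_line and the file contents are hypothetical.

```python
import tempfile
from pathlib import Path


def ensure_line(path: Path, line: str) -> bool:
    """Ensure `line` appears in the file at `path`; return True only if the file was modified."""
    existing = path.read_text().splitlines() if path.exists() else []
    if line in existing:
        return False  # already in the desired state: make no change
    existing.append(line)
    path.write_text("\n".join(existing) + "\n")
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        config = Path(tmp) / "sshd_config"
        print(ensure_line(config, "PasswordAuthentication no"))  # True (changed)
        print(ensure_line(config, "PasswordAuthentication no"))  # False (already present)
```

Reporting "changed" versus "unchanged" is also what lets such tools trigger follow-up actions, such as restarting a service, only when something actually changed.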

Container Orchestration

Container orchestration is the automated management of many containers and their interactions in a distributed environment. With orchestration solutions such as Kubernetes, Docker Swarm, and Apache Mesos, DevOps engineers can automate the deployment, scaling, and management of containerized applications across multiple hosts and clusters. Features such as load balancing, automatic scaling, rolling updates, and self-healing make large-scale application management simpler.

Infrastructure Provisioning

Infrastructure provisioning is the automated, programmatic creation and management of infrastructure resources such as servers, networks, and storage. With provisioning tools such as Terraform and AWS CloudFormation, DevOps engineers can provision and manage resources across their cloud providers (CloudFormation is specific to AWS, while Terraform supports many providers). Provisioning tools also improve efficiency and give teams greater visibility into and control over their infrastructure resources.

Adopt Continuous Integration/Continuous Deployment Tools

A good DevOps strategy must include continuous integration (CI) and continuous deployment (CD). By automating how software is built, tested, and deployed, CI/CD systems enable timely and reliable releases of new features and bug fixes. Here is a brief description of some of the most popular platforms and tools for CI/CD:
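At their core, CI/CD systems run an ordered series of stages and stop as soon as one fails, so broken code never reaches deployment. The sketch below captures only that fail-fast behavior; real tools add triggers, parallelism, artifacts, and secrets. The function name run_pipeline, the stage names, and the commands are hypothetical.

```python
import subprocess
import sys
from dataclasses import dataclass


@dataclass
class StageResult:
    name: str
    returncode: int
    output: str


def run_pipeline(stages: list[tuple[str, list[str]]]) -> list[StageResult]:
    """Run stages in order and stop at the first one that exits with a non-zero status."""
    results = []
    for name, command in stages:
        proc = subprocess.run(command, capture_output=True, text=True)
        results.append(StageResult(name, proc.returncode, proc.stdout + proc.stderr))
        if proc.returncode != 0:
            break  # fail fast: later stages such as deploy never run
    return results


if __name__ == "__main__":
    # Hypothetical stages; a real pipeline would call a build tool, a test runner, and a deploy script.
    py = sys.executable
    pipeline = [
        ("build", [py, "-c", "print('building')"]),
        ("test", [py, "-c", "import sys; print('1 test failed'); sys.exit(1)"]),
        ("deploy", [py, "-c", "print('deploying')"]),
    ]
    for result in run_pipeline(pipeline):
        print(result.name, result.returncode)
    # build 0
    # test 1
```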

GitHub

GitHub is a web-based platform built on Git version control that teams use to collaborate on software projects. It offers services such as version control, code review through pull requests, and issue tracking to help teams manage the software development lifecycle. GitHub also includes a built-in CI/CD service, GitHub Actions, and integrates with many third-party CI/CD solutions, simplifying the automation of builds and deployments.

GitLab

GitLab is a web-based, open-source Git repository manager with built-in continuous integration, continuous deployment, and a container registry. With GitLab CI/CD pipelines, teams can automate building, testing, and deploying code, making it easier to ship new features on time and reliably. GitLab also offers project management tools that help teams collaborate and oversee the development lifecycle.

Bamboo

Bamboo is Atlassian's continuous integration and delivery server, and it integrates closely with other Atlassian products such as Jira, Bitbucket, and Confluence. Features such as automated builds, test execution, and deployment help teams release new features more efficiently and reliably, and its Jira integration links builds and deployments to the issues they address.

Jenkins

Jenkins is an open-source automation server widely used for CI/CD, with capabilities that include automated builds, test execution, and deployment. It works with projects in many programming languages, integrates with a wide range of other tools, and lets teams define pipelines as code in a Jenkinsfile. Jenkins also has an extensive plugin ecosystem, which makes it easy to customize and extend for specific use cases.

FluxCD and Argo CD

FluxCD and Argo CD are two popular continuous delivery (CD) tools in the Kubernetes ecosystem. Both follow GitOps principles: a Git repository holds the desired state of the cluster, and the tool continuously reconciles the cluster to match it.

FluxCD focuses on a lightweight, declarative, version-controlled approach, automatically synchronizing and deploying changes to the cluster when commits land in the Git repository.

Argo CD adds a graphical user interface (GUI) and a broad set of features, making it a more complete CD solution. It visualizes the desired and live states of the cluster's resources, supports deploying complex applications, and can manage multiple clusters at once, which makes it well suited to larger, more complex environments.

Invest in Application and Infrastructure Monitoring

Application and infrastructure monitoring are critical components of modern DevOps practices. Here's a brief explanation of some of the most popular tools for application and infrastructure monitoring:

Application Monitoring

Some of the popular tools under application monitoring are:

Prometheus (recommended)

Prometheus is an open-source monitoring system and time-series database that collects metrics by periodically scraping HTTP endpoints exposed by services and applications. Its query language, PromQL, supports analysis and alerting, and its metrics are commonly visualized with Grafana dashboards to track the performance of applications and services.

Nagios

Nagios is a well-known open-source monitoring system that offers a full range of capabilities for monitoring hosts, services, and applications. It can track a variety of metrics and send warnings and alerts when problems are detected.

Datadog

Datadog is a cloud-based monitoring and analytics platform that offers many tools for monitoring and analyzing services and applications. It has strong visualization and alerting capabilities and integrates with a wide range of technologies.

Infrastructure Monitoring

Common infrastructure monitoring tools are listed below; several of them also cover application performance:

New Relic (recommended)

New Relic is a cloud-based monitoring and analytics platform that provides a comprehensive set of tools for monitoring infrastructure and applications. It can track a wide range of metrics and send alerts and notifications when problems are detected.

Jaeger

Jaeger is an open-source distributed tracing system, originally developed at Uber and now a Cloud Native Computing Foundation (CNCF) project, for monitoring and troubleshooting the performance of distributed systems. It tracks each request as it travels across multiple services and provides detailed timing data for every step.
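Distributed tracing works by propagating a trace ID from service to service with each request. Modern Jaeger setups usually instrument services with OpenTelemetry, which by default propagates context in the W3C Trace Context traceparent header, formatted as version-traceid-parentid-flags. The sketch below shows only that propagation step in plain Python, keeping the trace ID and minting a new span ID for each hop; it does not talk to Jaeger, and the function names are illustrative.

```python
import re
import secrets

# W3C Trace Context: version(2 hex)-trace-id(32 hex)-parent-id(16 hex)-flags(2 hex); only version 00 is handled here.
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent(sampled: bool = True) -> str:
    """Start a new trace with a random 16-byte trace ID and 8-byte span ID."""
    flags = "01" if sampled else "00"  # 01 = sampled flag set
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-{flags}"


def child_traceparent(incoming: str) -> str:
    """Continue the caller's trace with a new span ID, or start a new trace if the header is invalid."""
    match = TRACEPARENT_RE.match(incoming or "")
    if match is None:
        return new_traceparent()
    trace_id, parent_span_id, flags = match.groups()
    if trace_id == "0" * 32 or parent_span_id == "0" * 16:  # all-zero IDs are invalid per the spec
        return new_traceparent()
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"


if __name__ == "__main__":
    incoming = new_traceparent()
    print("received:", incoming)
    print("forwarded:", child_traceparent(incoming))  # same trace ID, new span ID
```

Because every hop keeps the same trace ID, a tracing backend can stitch the spans from different services into one end-to-end view of the request.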

AppDynamics

AppDynamics is an application performance monitoring (APM) platform that offers a full suite of tools for tracking and analyzing software and services. It can monitor a wide range of metrics and send alerts and notifications when problems are detected.

Study Cloud Providers

As more and more businesses migrate to the cloud, DevOps engineers must be familiar with the major cloud providers and their offerings. Here is a quick rundown of some of the best-known cloud service providers:

AWS (recommended)

Amazon Web Services (AWS) is the most widely used cloud service provider and offers services for computing, storage, databases, networking, and security. For DevOps engineers, AWS provides a full range of tools, including AWS CodePipeline, AWS CodeCommit, AWS CodeBuild, AWS CodeDeploy, and AWS CloudFormation, with which teams can automate every step of the software delivery lifecycle from code commit to deployment.

Azure

Microsoft Azure, another major cloud service provider, offers services for computing, storage, databases, analytics, and artificial intelligence. For DevOps engineers, Azure provides tools such as Azure DevOps, Azure DevTest Labs, and Azure Resource Manager, which help teams automate every phase of the lifecycle from planning through development, testing, and deployment.

Google Cloud

Google Cloud Platform (GCP) provides a comprehensive range of services, including computing, storage, databases, networking, and security. For DevOps engineers, GCP offers tools such as Cloud Build, Cloud Deployment Manager, and Cloud Functions, which teams can use to automate the path from code commit to deployment.

Heroku

Heroku is a platform-as-a-service (PaaS) cloud on which teams can quickly deploy, manage, and scale applications without managing servers. For DevOps engineers, Heroku offers features such as Heroku CI, Heroku Pipelines, Review Apps, and Heroku Add-ons, which automate the workflow from code commit to deployment.

Learn Cloud Design Patterns

Cloud design patterns are architectural patterns for designing and implementing scalable, highly available, and resilient cloud-based solutions. Here is a brief description of some of the most common ones:

Availability

Availability patterns keep applications and services available even when components fail or go down. Common availability patterns include the following:

- Load Balancing: Distributes incoming network traffic across several servers so that no single server is overwhelmed and a failed server can be taken out of rotation, improving availability and scalability.

- Auto Scaling: Automatically adds or removes compute resources based on demand, so capacity keeps up with load without permanent overprovisioning.

- Redundancy: Provides backup systems or components that take over in the event of failures or downtime.

- Disaster Recovery: Replicates data and applications across several sites (for example, different regions) so that service can be restored after a disaster or major disruption.
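The load-balancing pattern can be sketched as a round-robin balancer that skips backends marked unhealthy, so a failed server drops out of rotation until it recovers. The class name and backend addresses are hypothetical; production load balancers also run active health checks and can weight backends.

```python
import itertools


class RoundRobinBalancer:
    """Hands out backends in rotation, skipping any that are currently marked down."""

    def __init__(self, backends: list[str]) -> None:
        self._backends = list(backends)
        self._healthy = set(self._backends)
        self._cycle = itertools.cycle(self._backends)

    def mark_down(self, backend: str) -> None:
        self._healthy.discard(backend)  # e.g. after failed health checks

    def mark_up(self, backend: str) -> None:
        if backend in self._backends:
            self._healthy.add(backend)

    def next_backend(self) -> str:
        # Try each backend at most once per call so an all-down pool fails fast instead of looping forever.
        for _ in range(len(self._backends)):
            candidate = next(self._cycle)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy backends available")


if __name__ == "__main__":
    lb = RoundRobinBalancer(["10.0.0.1:80", "10.0.0.2:80", "10.0.0.3:80"])
    lb.mark_down("10.0.0.2:80")
    print([lb.next_backend() for _ in range(4)])
    # ['10.0.0.1:80', '10.0.0.3:80', '10.0.0.1:80', '10.0.0.3:80']
```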

Data Management

Data management patterns help manage data in a scalable, highly available way. Common data management patterns include the following:

- Sharding: Data is partitioned into smaller pieces (shards) that are distributed across several servers to improve scalability and performance.

- Caching: Frequently accessed data is kept in fast storage, typically memory, to improve performance and reduce latency.

- Replication: Data is copied across multiple servers to improve availability and durability.
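A common way to implement sharding is to hash each record's key and map the hash to one of N shards. This sketch uses SHA-256 from hashlib rather than Python's built-in hash(), because hash() of strings is randomized per process and would send the same key to different shards on different runs. The function name shard_for and the keys are illustrative.

```python
import hashlib


def shard_for(key: str, num_shards: int) -> int:
    """Map a key to a shard index in [0, num_shards) using a stable hash."""
    if num_shards < 1:
        raise ValueError("num_shards must be at least 1")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Interpret the first 8 bytes of the digest as an unsigned integer, then reduce modulo the shard count.
    return int.from_bytes(digest[:8], "big") % num_shards


if __name__ == "__main__":
    for user_id in ["user-1001", "user-1002", "user-1003"]:
        print(user_id, "-> shard", shard_for(user_id, 4))
```

One limitation of plain modulo placement is that changing num_shards remaps most keys; consistent hashing is the usual technique for reducing that data movement.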

Design and Implementation

Design and implementation patterns help in building scalable, highly available, and resilient cloud-based solutions. Typical design and implementation patterns include the following:

- Microservices: An application is divided into smaller, independent services that can be developed, deployed, and scaled independently.

- Serverless: Code runs in response to events, and the cloud provider manages the underlying servers and infrastructure.

- Event-driven: Components communicate by producing and reacting to events, which decouples producers from consumers and lets each part scale independently.
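The event-driven pattern can be illustrated with a tiny in-process publish/subscribe bus: producers publish events by type without knowing who consumes them, and each subscriber reacts independently. In cloud systems this role is usually played by a managed message broker or event service; the EventBus class and the event names here are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], None]


class EventBus:
    """Minimal synchronous publish/subscribe dispatcher."""

    def __init__(self) -> None:
        self._subscribers = defaultdict(list)  # event type -> list of handlers

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict[str, Any]) -> int:
        """Deliver the event to every subscriber of its type; return how many handlers ran."""
        handlers = self._subscribers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


if __name__ == "__main__":
    bus = EventBus()
    # The producer of "order.created" knows nothing about billing or shipping.
    bus.subscribe("order.created", lambda e: print("billing: charge order", e["order_id"]))
    bus.subscribe("order.created", lambda e: print("shipping: schedule order", e["order_id"]))
    bus.publish("order.created", {"order_id": 42})
```

New consumers can be added by subscribing to an existing event type, without changing the code that publishes it.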

Chaos Engineering

Chaos engineering deliberately introduces errors and disruptions into systems to test and improve their resilience. By simulating real-world failures, DevOps teams can identify and fix potential weaknesses before they cause outages, improving the overall reliability and availability of their systems. Tools such as Chaos Monkey, Gremlin, and ChaosIQ can be used to put chaos engineering into practice.
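Chaos tools usually inject failures at the infrastructure level, but the same idea can be sketched in code: a decorator that makes a call fail with a configurable probability (and optionally adds latency), paired with a retry helper that the experiment is meant to exercise. The names inject_faults, call_with_retries, and fetch_inventory are hypothetical, and the random-number generator is injectable so experiments can be reproduced.

```python
import random
import time
from functools import wraps
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def inject_faults(failure_rate: float = 0.0, extra_latency_s: float = 0.0, rng: Optional[random.Random] = None):
    """Decorator that adds latency and raises ConnectionError with probability `failure_rate`."""
    rng = rng or random.Random()

    def decorator(func: Callable[..., T]) -> Callable[..., T]:
        @wraps(func)
        def wrapper(*args, **kwargs) -> T:
            if extra_latency_s > 0:
                time.sleep(extra_latency_s)  # simulate a slow network or dependency
            if rng.random() < failure_rate:
                raise ConnectionError(f"injected fault in {func.__name__}")
            return func(*args, **kwargs)

        return wrapper

    return decorator


def call_with_retries(func: Callable[[], T], attempts: int = 3, backoff_s: float = 0.0) -> T:
    """Retry on ConnectionError with exponential backoff; re-raise after the last attempt."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return func()
        except ConnectionError:
            if attempt == attempts - 1:
                raise
            time.sleep(backoff_s * (2 ** attempt))
    raise AssertionError("unreachable")


if __name__ == "__main__":
    @inject_faults(failure_rate=0.3, rng=random.Random(7))
    def fetch_inventory() -> dict:
        return {"widgets": 12}

    try:
        print(call_with_retries(fetch_inventory, attempts=5))
    except ConnectionError as exc:
        print("still failing after retries:", exc)
```

The experiment succeeds if the caller degrades gracefully under injected faults; if it does not, the weakness has been found in a controlled setting rather than in production.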

Service Mesh and Traffic Management

A service mesh is a dedicated infrastructure layer for microservices-based systems that provides features such as service discovery, load balancing, and traffic management. Because it handles the intricate connections between microservices, a service mesh helps DevOps teams monitor and control service-to-service traffic. Well-known service mesh tools include Istio, Linkerd, and Consul.

Modern microservices-based applications are complex, and service meshes and traffic management are key to managing that complexity. By providing a dedicated infrastructure layer, a service mesh gives teams dependable, scalable connectivity between microservices.

With traffic management capabilities such as load balancing, routing, retries, and gradual (canary) rollouts, DevOps teams can control how traffic flows between services, improving performance and reducing latency. Integrating a service mesh with other DevOps practices such as CI/CD, containers, and configuration management helps teams build a more automated pipeline, which can lead to a shorter time to market, greater agility, and higher quality.
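Canary releases are a common traffic-management use of a service mesh: a small, configurable share of requests goes to a new version while the rest stays on the stable one (in Istio, for example, this is expressed as route weights in a VirtualService). This Python sketch shows only the weighted routing decision; the function names, version names, and the 90/10 split are hypothetical.

```python
import random
from collections import Counter


def choose_version(weights: dict[str, int], rng: random.Random) -> str:
    """Pick a destination version with probability proportional to its weight."""
    if not weights or sum(weights.values()) <= 0:
        raise ValueError("weights must contain at least one positive value")
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


def simulate(weights: dict[str, int], requests: int, seed: int = 0) -> Counter:
    """Route `requests` simulated requests and count how many reach each version."""
    rng = random.Random(seed)  # seeded so the simulation is reproducible
    return Counter(choose_version(weights, rng) for _ in range(requests))


if __name__ == "__main__":
    print(simulate({"v1-stable": 90, "v2-canary": 10}, 10_000))
    # roughly 9,000 requests to v1-stable and 1,000 to v2-canary
```

Promoting the canary is then a matter of shifting the weights step by step (for example 90/10, then 50/50, then 0/100) while watching error rates and latency.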

Conclusion

- DevOps professionals should have knowledge and proficiency in programming languages frequently used in DevOps, such as Go, Ruby, Python, and Node.js.

- DevOps professionals should be familiar with specific operating systems and distributions, such as Linux, Unix, and Windows, and be knowledgeable about important OS concepts, such as process management, sockets, and concurrency.

- Organizations can fulfill their DevOps objectives by investing in infrastructure as code, containerization, configuration management, CI/CD tools, and monitoring solutions.

- DevOps automation tools like CI/CD, containerization, and configuration management help teams to automate their software delivery pipelines, reducing errors and improving quality.

- DevOps teams can enhance their system's resilience by implementing chaos engineering techniques to identify and fix potential system flaws through simulations of real-world events.

- Service Mesh and traffic management tools like Istio, Linkerd, and Consul can help DevOps teams to manage the network of interactions between microservices, improving the performance and scalability of their applications.