Epochs in Machine Learning

Epochs play a central role in the iterative training of machine learning models: each pass over the training data gives the model another opportunity to refine its parameters, which typically improves its predictions over time.

Epoch in Machine Learning

In machine learning, an epoch is one complete pass of the training algorithm through the entire training dataset. Training usually runs for many epochs, so understanding what an epoch does, and how many of them a model needs, helps make training both more effective and more efficient.

Features of Epochs

- Comprehensive Dataset Exposure:

Each epoch exposes the model to every example in the training set, so it learns from the full range of the data rather than from a fixed subset. This complete exposure helps the model generalize to new, unseen data.

- Iterative Learning and Adjustment:

During each epoch, the model adjusts its internal parameters in response to its prediction errors, typically once per batch. Repeating this over many epochs gradually improves accuracy and reduces error.

- Performance Monitoring and Evaluation:

Epochs provide natural checkpoints for monitoring model performance, allowing developers to track progress, detect overfitting, and make data-driven decisions about how long to train.

- Flexibility in Training Duration:

Because the number of epochs is adjustable, training length can be tailored to the specific model and dataset, helping to avoid both underfitting (too few epochs) and overfitting (too many).

Example

To illustrate the concept of epochs, consider training a convolutional neural network (CNN) to recognize handwritten digits from the MNIST dataset, a common benchmark in machine learning. MNIST contains 60,000 training images and 10,000 test images of handwritten digits (0 through 9), each a 28×28 grayscale image.

Training Process:

- Initial Exposure:

In the first epoch, the CNN processes every image in the training set once, learning to recognize patterns that distinguish the different digits. Its initial performance may be modest, as it is just beginning to learn from the data.

- Iterative Improvement:

As training continues through multiple epochs, the CNN sees the entire training set again and again. During each epoch, it adjusts its parameters to reduce the discrepancy between its predictions and the actual labels of the images.

- Performance Evaluation:

After each epoch, the model's performance is evaluated on a separate validation dataset. This evaluation tracks improvements in accuracy and reveals signs of overfitting, informing the decision whether to continue training.
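The training process above can be sketched in a few lines of dependency-free Python. To keep the example self-contained, it swaps the CNN and MNIST for a hypothetical one-feature linear model fitted to synthetic data (y = 3x - 1 plus noise); the helper names `mse` and `train`, the learning rate, and the batch size are illustrative choices, not part of any library. The point is the epoch structure: each epoch shuffles the training set, makes one parameter update per batch, and then evaluates on a held-out validation split.

```python
import random

def mse(w, b, data):
    """Mean squared error of the line y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(train_set, val_set, epochs=30, batch_size=16, lr=0.1, seed=0):
    """Fit y ~ w*x + b with mini-batch gradient descent (illustrative helper).

    Each epoch visits every training example exactly once (in a freshly
    shuffled order), updates (w, b) once per batch, and then records the
    training and validation loss for monitoring.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    history = []  # (epoch, train_loss, val_loss), one entry per epoch
    for epoch in range(1, epochs + 1):
        order = list(train_set)
        rng.shuffle(order)
        for start in range(0, len(order), batch_size):
            batch = order[start:start + batch_size]
            # Gradients of the batch-mean squared error with respect to w and b
            grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
        # Evaluate once per epoch, after all batches have been processed
        history.append((epoch, mse(w, b, train_set), mse(w, b, val_set)))
    return w, b, history

# Hypothetical synthetic data: y = 3x - 1 plus small Gaussian noise
data_rng = random.Random(1)
xs = [data_rng.uniform(-1, 1) for _ in range(200)]
points = [(x, 3 * x - 1 + data_rng.gauss(0, 0.1)) for x in xs]
train_set, val_set = points[:160], points[160:]  # 80/20 train/validation split

w, b, history = train(train_set, val_set)
for epoch, train_loss, val_loss in history[::10]:
    print(f"epoch {epoch:2d}: train loss {train_loss:.4f}, val loss {val_loss:.4f}")
```

Deep learning frameworks hide much of this bookkeeping, but the same shape applies: an outer loop over epochs, an inner loop over batches, and an evaluation step after each epoch.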

Outcome:

Training ends after a predetermined number of epochs or once the model's performance on the validation set stops improving significantly. By then, the CNN has typically learned to recognize handwritten digits accurately, having refined its parameters over many passes through the training data.
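The stopping rule described above is commonly known as early stopping. A minimal sketch, assuming the validation loss is recorded once per epoch: the function name `early_stopping` and its `patience` (how many epochs without improvement to tolerate) and `min_delta` (the smallest decrease that counts as an improvement) parameters are illustrative, and the loss values are made up for the demonstration.

```python
from typing import Optional, Sequence, Tuple

def early_stopping(val_losses: Sequence[float], patience: int = 3,
                   min_delta: float = 1e-3) -> Tuple[int, Optional[int]]:
    """Replay per-epoch validation losses and decide when to stop (illustrative).

    An epoch counts as an improvement only if it beats the best loss so far
    by more than `min_delta`. Training stops once `patience` consecutive
    epochs pass without an improvement.

    Returns (best_epoch, stop_epoch) with 1-based epochs; stop_epoch is None
    if the patience budget was never exhausted.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    return best_epoch, None

# Hypothetical validation losses: improvement stalls after epoch 4
val_losses = [0.90, 0.55, 0.40, 0.35, 0.351, 0.36, 0.38, 0.41]
print(early_stopping(val_losses))  # (4, 7)
```

Keras's `EarlyStopping` callback exposes comparable `patience` and `min_delta` parameters. It is also common to keep the weights from the best epoch rather than the last one (Keras's option for this is `restore_best_weights`).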

What is an Iteration?

An iteration refers to a single update of the model's parameters, which occurs once for every batch of data during the training process. Unlike an epoch, which encompasses an entire pass through the training dataset, an iteration deals with processing only a subset of the dataset at a time. This method allows for more frequent updates to the model's weights and biases, potentially leading to faster convergence on an optimal set of parameters.
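In symbols, one iteration of mini-batch gradient descent and the resulting iteration counts can be written as follows. The notation is introduced here for illustration and is not from the original text: θ are the model parameters, η the learning rate, 𝓑_t the batch used at iteration t, ℓ the per-example loss, N the number of training examples, B the batch size, and E the number of epochs.

```latex
\theta_{t+1} = \theta_t - \eta \, \frac{1}{|\mathcal{B}_t|} \sum_{i \in \mathcal{B}_t} \nabla_{\theta} \, \ell(\theta_t;\, x_i, y_i)

\text{iterations per epoch} = \left\lceil \frac{N}{B} \right\rceil,
\qquad
\text{total iterations} = E \cdot \left\lceil \frac{N}{B} \right\rceil
```

For instance, N = 2,000 and B = 200 give ⌈2000/200⌉ = 10 iterations per epoch. The ceiling assumes a smaller final batch is kept rather than dropped.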

Example

Let's consider a practical example of training a machine learning model on a dataset containing 2,000 images used for facial recognition. If you decide to use a batch size of 200 images, the training process would involve:

- Division into Batches:

The dataset is divided into 10 batches, each containing 200 images.

- Processing Batches:

In each iteration, the model processes one batch of 200 images, calculates the loss (a measure of the difference between the predicted outputs and the actual labels), and updates its parameters (e.g., weights and biases) to reduce this loss.

- Iterations within an Epoch:

Since there are 10 batches, completing one epoch requires 10 iterations. Each iteration adjusts the model slightly based on the batch it is currently processing.
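The batch arithmetic above can be sketched as follows, assuming the 2,000 images are addressed by index 0 to 1999 and kept in a fixed order; `batch_ranges` is a hypothetical helper, not a library function. Each (start, end) pair is the slice of the dataset processed in one iteration.

```python
from typing import List, Tuple

def batch_ranges(n_samples: int, batch_size: int) -> List[Tuple[int, int]]:
    """Return half-open [start, end) index ranges, one per iteration of an epoch.

    The final range is shorter when n_samples is not a multiple of batch_size.
    """
    return [(start, min(start + batch_size, n_samples))
            for start in range(0, n_samples, batch_size)]

ranges = batch_ranges(2000, 200)
print(len(ranges))            # 10 iterations per epoch
print(ranges[0], ranges[-1])  # (0, 200) (1800, 2000)
```

With 2,050 images the same call would return 11 ranges, the last being (2000, 2050), so an epoch would take 11 iterations.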

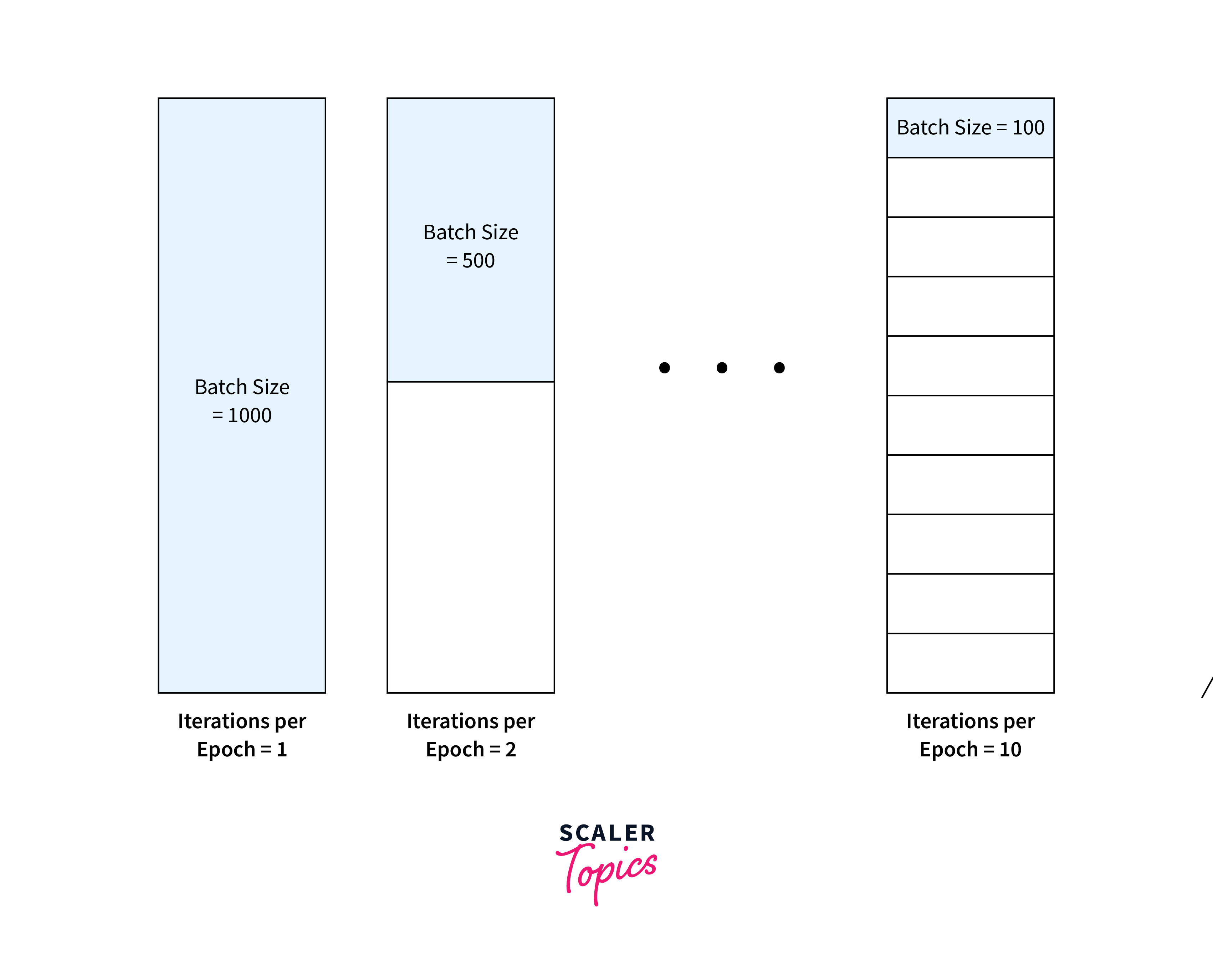

Batch in Machine Learning

A batch is the subset of the training dataset used in one iteration. Batching is central to gradient-based training: full-batch gradient descent uses the entire dataset for every update, stochastic gradient descent (SGD) uses a single data point, and mini-batch gradient descent sits between the two. Mini-batches balance the cheap, frequent updates of SGD against the stable but expensive updates of full-batch gradient descent.
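To make the trade-off concrete, the sketch below counts how many parameter updates each regime performs in one epoch, assuming a hypothetical dataset of 50,000 examples and a mini-batch size of 100; `updates_per_epoch` is an illustrative helper.

```python
def updates_per_epoch(n_samples: int, batch_size: int) -> int:
    """Parameter updates in one epoch; a smaller final batch still counts as one."""
    # Integer ceiling division, avoiding floating-point rounding
    return (n_samples + batch_size - 1) // batch_size

n = 50_000  # hypothetical dataset size
for name, batch_size in [("SGD", 1), ("mini-batch", 100), ("full-batch", n)]:
    updates = updates_per_epoch(n, batch_size)
    print(f"{name:>10}: batch size {batch_size:>6} -> {updates:>6} updates per epoch")
```

Larger batches mean fewer updates per epoch, each based on a more accurate gradient estimate; smaller batches mean more updates, each noisier.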

Key Aspects of Batching:

- Efficient Data Processing:

By dividing the dataset into smaller, more manageable groups, batching makes efficient use of computational resources. This is particularly beneficial for large datasets that might not fit into memory if processed all at once.

- Parallel Computation:

The data points within a batch can be processed in parallel, which can significantly speed up training on hardware that supports parallelization, such as GPUs.

- Noise Reduction:

Stochastic gradient descent (SGD) updates the parameters after every data point, producing frequent but noisy updates. Batching reduces this noise by averaging the gradients over all examples in the batch, which results in smoother convergence toward a minimum of the loss function.
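The noise-reduction effect can be checked numerically. The sketch below is a simulation under simplifying assumptions: each training example contributes a hypothetical scalar gradient drawn from a normal distribution (mean 1, standard deviation 2), and a mini-batch gradient is the mean over a randomly sampled batch. Because averaging B roughly independent values divides the standard deviation by about √B, the spread of the estimate should shrink as the batch grows, to roughly 2, 0.63, and 0.2 for batch sizes 1, 10, and 100.

```python
import random
import statistics

# Hypothetical per-example gradients: one scalar per training example
data_rng = random.Random(42)
per_example_grads = [data_rng.gauss(1.0, 2.0) for _ in range(10_000)]

def minibatch_gradient(grads, batch_size, rng):
    """Average gradient over one mini-batch sampled without replacement."""
    return statistics.fmean(rng.sample(grads, batch_size))

def gradient_spread(grads, batch_size, trials=500, seed=0):
    """Standard deviation of the mini-batch gradient across many random batches."""
    rng = random.Random(seed)
    estimates = [minibatch_gradient(grads, batch_size, rng) for _ in range(trials)]
    return statistics.stdev(estimates)

spreads = {b: gradient_spread(per_example_grads, b) for b in (1, 10, 100)}
for b, spread in spreads.items():
    print(f"batch size {b:>3}: std of gradient estimate = {spread:.3f}")
```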

Example of Batching in Training:

Imagine training a deep learning model on a dataset consisting of 50,000 images for image classification. To manage memory resources and speed up the training process, you might choose a batch size of 100 images. This approach means that the dataset will be divided into 500 batches (50,000 divided by 100), with each batch containing 100 images. During each iteration of the training process, the model will process one batch, adjust its parameters based on the calculated loss, and then move on to the next batch. This cycle repeats until the model has processed all batches, marking the completion of one epoch.
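The batching loop described above can be sketched with a small generator. It assumes the 50,000 images are identified by integer indices and reshuffles them at the start of every epoch, a common practice that the example does not specify; `minibatches` is a hypothetical helper, and the parameter update itself is left as a comment.

```python
import random
from collections import Counter
from typing import Iterator, List, Sequence

def minibatches(indices: Sequence[int], batch_size: int,
                rng: random.Random) -> Iterator[List[int]]:
    """Yield shuffled mini-batches that together cover every index exactly once."""
    order = list(indices)
    rng.shuffle(order)  # a new order on each call, i.e. each epoch
    for start in range(0, len(order), batch_size):
        yield order[start:start + batch_size]

rng = random.Random(0)
image_ids = range(50_000)  # stand-ins for the 50,000 training images
num_epochs = 2
iterations = 0
times_seen = Counter()

for epoch in range(num_epochs):
    for batch in minibatches(image_ids, 100, rng):
        # A real training loop would compute the loss on `batch` and update the parameters here.
        iterations += 1
        times_seen.update(batch)

print(iterations)  # 1000: 500 batches per epoch x 2 epochs
```

Every image ends up in `times_seen` exactly `num_epochs` times, which is the defining property of an epoch regardless of the order in which the batches arrive.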

Epoch Vs Batch in Machine Learning

This table highlights the fundamental differences between epochs and batches in machine learning, underlining their distinct roles and impacts on the training process of models.

| Feature | Epoch | Batch |
|---|---|---|
| Definition | One complete pass through the entire training dataset, allowing the model to learn from every sample. | A subset of the training dataset used in one iteration of model updates. |
| Scope | Covers the entire dataset. | Covers a portion of the dataset at any given time. |
| Purpose | Allows the model to learn from the entire dataset, improving generalization and accuracy over time. | Enables efficient use of memory and computational resources during training. |
| Update Frequency | Parameters are usually updated many times per epoch (once per batch); only in full-batch gradient descent does an epoch correspond to a single update. | Parameters are updated once after each batch is processed. |
| Impact on Training | Determines how many times the model sees the entire dataset during training. | Influences the granularity of learning and can affect the speed and stability of convergence. |
| Typical Use | Used to track overall training progress and to prevent underfitting or overfitting by adjusting the number of epochs based on performance. | Used to control training speed and to manage the computational load during training. |

Advantages of Epochs in Machine Learning

- Enhanced Learning from Comprehensive Data Exposure:

Each epoch exposes the model to every data point in the training set, so it learns from the entire dataset rather than a subset. This complete exposure supports the model's ability to generalize to new, unseen data.

- Iterative Optimization for Improved Accuracy:

With every epoch, the model further refines its internal parameters (such as the weights of a neural network), gradually reducing prediction errors and improving accuracy.

- Effective Monitoring and Performance Evaluation:

Epochs provide a structured checkpoint for evaluating the model after each complete pass through the dataset. This makes it possible to monitor progress, identify when the model starts to overfit, and decide when to stop training.

- Flexibility in Training Process:

The number of epochs can be adjusted to the model's needs and the dataset's characteristics, so that training runs long enough to avoid underfitting (too few epochs) but not so long that it overfits (too many epochs).

- Facilitates SGD Convergence:

Models optimized with SGD or its variants usually need many passes over the data to converge. Running multiple epochs gives the optimizer enough updates to approach a minimum of the loss, improving the model's parameters.

Limitations

- Risk of Overfitting:

Training for too many epochs can cause the model to learn not only the underlying patterns but also the noise in the training dataset. This overfitting reduces its ability to generalize to new, unseen data.

- Increased Computational Cost:

Each epoch processes the entire training dataset, so every additional epoch adds computation and time, which is especially costly with large datasets and complex models.

- Diminishing Returns:

Beyond a certain number of epochs, improvements in model performance become marginal, and additional epochs no longer contribute meaningfully to higher accuracy or lower loss.

- Requirement for Proper Tuning:

Choosing the right number of epochs requires careful tuning and monitoring to avoid underfitting or overfitting. This can be challenging, especially for beginners or when validation performance fluctuates instead of improving clearly.

FAQs

Q. How do I determine the optimal number of epochs for my model?

A. The optimal number of epochs varies by model and dataset; it's usually found through experimentation, using validation data to monitor performance.

Q. Can too many epochs harm my model's performance?

A. Yes, too many epochs can lead to overfitting, where the model performs well on training data but poorly on unseen data.

Q. Is there a way to automatically adjust the number of epochs during training?

A. Yes, techniques like early stopping monitor validation loss and automatically halt training when improvement stops, effectively adjusting the number of epochs.

Q. Do epochs affect the training time of a model?

A. Yes. Training time grows roughly in proportion to the number of epochs, since each epoch processes the entire training dataset. More epochs can improve accuracy, but only up to the point where the model starts to overfit.

Conclusion

- Epochs in machine learning are fundamental to the iterative learning process, allowing models to learn comprehensively from the entire dataset.

- Properly managed epochs enhance model accuracy while mitigating the risk of overfitting, striking a balance between learning effectively and generalizing well to new data.

- They provide a structured framework for performance evaluation and optimization, facilitating informed decisions about training duration and model adjustments.

- The flexibility in setting the number of epochs allows for tailored training strategies, accommodating different models and datasets to optimize learning outcomes.