Best Hadoop Certifications

Overview

Hadoop has become a widely used framework for storing and processing Big Data, and professionals with Hadoop expertise are in high demand. Big Data Hadoop certifications give individuals a structured way to build and validate the skills and knowledge needed to excel in this field.

What is the Difference between Hadoop and Big Data?

| Aspect | Hadoop | Big Data |
|---|---|---|
| Definition | An open-source framework for storing and processing data across distributed clusters. | The vast volumes of data generated from diverse sources that require specialized tools to manage and analyze. |
| Function | Manages, stores, and processes data efficiently using its ecosystem of tools. | Encompasses the large quantity of data to be handled. |
| Purpose | Designed to handle and derive insights from large-scale data through parallel processing. | Refers to the sheer magnitude of data, which demands new solutions for storage and analysis. |
| Components | Comprises HDFS, MapReduce, YARN, and ecosystem tools such as Hive, Pig, and HBase. | Includes structured, semi-structured, and unstructured data from sources such as social media and IoT devices. |
| Role | Acts as a framework for processing and analyzing Big Data. | Describes the data itself, regardless of the tools used to manage it. |
| Importance | Crucial for efficiently managing and processing vast datasets. | Captures the challenge posed by the volume, variety, and velocity of data. |
| Focus | Focuses on the technical infrastructure for data handling. | Focuses on the magnitude and complexity of data. |
| Example | Hadoop runs MapReduce jobs to analyze customer behavior. | Big Data includes terabytes of social media posts and sensor readings. |

What will I Learn from Big Data Hadoop Training and Certification?

- Hadoop Ecosystem: Understand the components of the Hadoop ecosystem, such as Pig, Hive, HBase, and Oozie, and their roles in managing and processing data.
- Hadoop Distributed File System (HDFS): Learn how HDFS splits files into blocks and stores replicated copies across multiple nodes for scalability and fault tolerance.
- MapReduce: Master the MapReduce programming model for processing and analyzing large datasets in parallel.
- Data Processing: Learn to query and manipulate Big Data with tools such as Hive and Pig, and to work with data serialization formats such as Avro.
- Cluster Management: Understand how to manage Hadoop clusters on Amazon EC2 and other platforms.
- Fault Tolerance: Learn about Hadoop's fault tolerance mechanisms and how to test MapReduce jobs with MRUnit and other tools.
- Data Analytics: Learn to extract valuable insights from vast datasets to support informed decision-making.
- Optimization Techniques: Learn techniques for tuning Hadoop jobs and clusters for better performance.
- Data Integration: Explore methods for integrating Hadoop with other tools and platforms so that data flows smoothly between them.
- Machine Learning Integration: Discover how Hadoop can be integrated with machine learning frameworks to build predictive models.
- Case Studies: Analyze real-world case studies of successful Hadoop implementations for Big Data challenges.
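To make the MapReduce model more concrete, here is a minimal single-process sketch of the classic word-count job in plain Python. It only simulates the three phases (map, shuffle and sort, reduce) in memory; it does not use Hadoop's APIs, and the function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Mapper: emit (word, 1) for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle/sort: group every value emitted for the same key."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    # Hadoop hands keys to each reducer in sorted order; mimic that here.
    return dict(sorted(grouped.items()))


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reducer: sum the counts for one word."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle, and reduce in sequence over the input lines."""
    mapped = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    docs = ["Hadoop stores big data", "Hadoop processes big data"]
    print(word_count(docs))
    # {'big': 2, 'data': 2, 'hadoop': 2, 'processes': 1, 'stores': 1}
```

On a real cluster, the framework splits the input, runs map tasks in parallel near the data, and performs the shuffle over the network; the per-record map and reduce logic stays essentially the same.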

Prerequisites for Big Data Hadoop Certifications

Prerequisites vary by provider, but most Big Data Hadoop certifications expect the following basics:

- Programming Knowledge: A solid foundation in a programming language such as Java or Python.
- Basic Data Understanding: Familiarity with fundamental data management concepts.
- Linux Familiarity: An understanding of basic Linux commands and navigation, since Hadoop clusters typically run on Linux.
- Database Concepts: Basic knowledge of databases and SQL, which helps when using tools like Hive to query data stored in Hadoop clusters.
- Analytical Skills: Strong analytical abilities for recognizing data patterns and deriving meaningful insights from vast datasets.

Online Big Data Hadoop Certifications

Cloudera Hadoop Certifications

Cloudera offers a comprehensive range of certifications for Hadoop professionals, including Hadoop administrators, developers, and data analysts.

- Widely recognized in the industry and valued by employers.

- Designed to validate practical skills in real-world Hadoop environments.

Hortonworks Hadoop Certifications

Hortonworks, now part of Cloudera, provides certifications for Hadoop professionals across various domains, including administration, development, and data analysis.

- Known for a hands-on approach that requires practical skills similar to those used in real projects.

- Focus on the practical application of Hadoop skills in scenarios relevant to industry needs.

MapR Hadoop Certifications

MapR (acquired by HPE in 2019) offers certification programs covering different aspects of Hadoop, including essentials, administration, and development.

- Emphasize hands-on expertise.

- Prepare candidates for diverse roles within the Hadoop ecosystem.

IBM Hadoop Certifications

IBM provides certifications covering various aspects of Big Data and Hadoop, including Hadoop fundamentals, data analysis, and machine learning integration.

- Offer a comprehensive understanding of Hadoop while also delving into advanced topics like machine learning and AI integration within the Hadoop environment.

- Backed by IBM, adding credibility to the certified professional's skill set.

SAS Hadoop Certifications

SAS offers certifications for data professionals seeking to enhance their Hadoop skills, covering topics like data manipulation, transformation, and analysis.

- SAS certifications focus on data analytics using Hadoop.

- Emphasize SAS-specific tools and techniques for data manipulation and analysis.

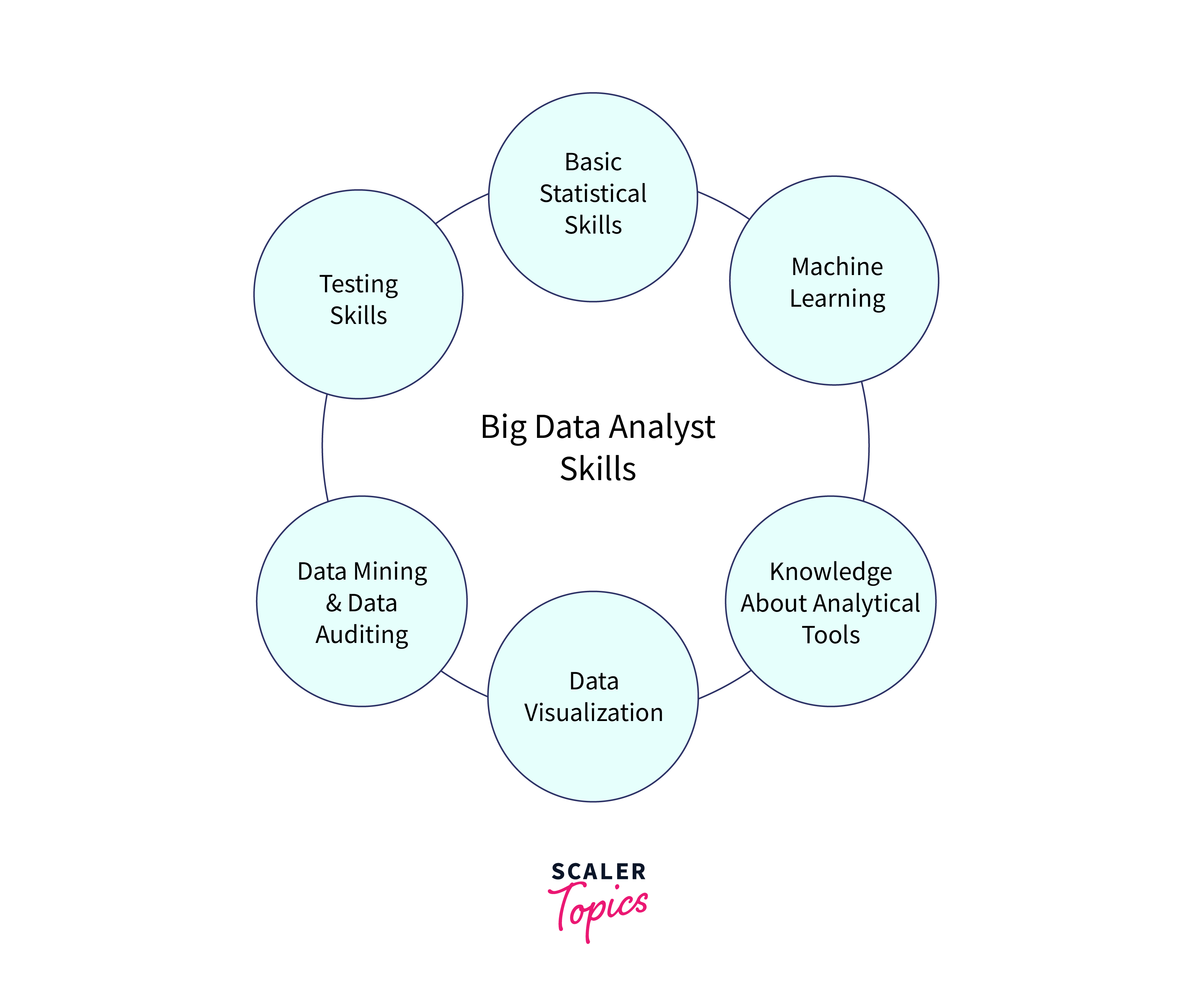

Data Jobs for Professionals with Big Data Hadoop Certifications

Professionals who hold Big Data Hadoop certifications are well-equipped to pursue a wide range of data-focused roles across various industries.

- Hadoop Developer: Designs, develops, and implements Hadoop applications.
- Data Engineer: Builds and maintains the data pipelines that process and store large datasets.
- Data Analyst: Extracts valuable insights from complex datasets, often presenting findings to non-technical stakeholders.
- Big Data Architect: Designs end-to-end data solutions that incorporate Hadoop and other relevant technologies.
- Data Scientist: Applies advanced analytical techniques to extract insights and build predictive models from vast datasets.
- Hadoop Administrator: Manages and maintains Hadoop clusters, ensuring their performance, availability, and security.
- Business Intelligence Analyst: Draws insights from diverse data sources and presents them in interactive dashboards, visualizations, and reports built with BI tools.
- Machine Learning Engineer: Applies machine learning algorithms to analyze large datasets and predict outcomes.

Course Contents for Expertise in Big Data Hadoop Certifications

Course contents for expertise in Big Data Hadoop certifications typically cover:

- Hadoop ecosystem components: Understand the roles of HDFS, MapReduce, YARN, and related tools.
- Data storage and retrieval: Learn efficient techniques for storing and retrieving data within Hadoop clusters.
- MapReduce programming: Master the MapReduce model for parallel processing and data analysis.
- Data processing tools: Explore tools like Hive and Pig for querying and processing large datasets.
- Cluster management: Learn to manage Hadoop clusters for optimal performance and scalability.
- Fault tolerance mechanisms: Understand how Hadoop preserves data integrity and keeps the system resilient when nodes fail.
- Data analytics techniques: Learn analytics techniques for deriving meaningful patterns from Big Data.
- Machine learning integration: Explore how machine learning frameworks integrate with Hadoop for predictive modeling.
- Real-world projects: Apply skills to practical projects that solve data challenges with Hadoop tools.
- Data security and privacy: Learn to secure Hadoop clusters and implement data privacy measures.
- Performance optimization: Discover techniques for optimizing Hadoop jobs and cluster performance.
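Data storage and fault tolerance in HDFS both rest on the same idea: each file is stored as a sequence of fixed-size blocks, and every block is kept as several replicas on different nodes. The sketch below estimates that layout for a single file, assuming the stock defaults of a 128 MB block size and a replication factor of 3 (configurable through `dfs.blocksize` and `dfs.replication`). The function and class names are hypothetical, and the estimate ignores checksum and metadata overhead.

```python
from dataclasses import dataclass

MB = 1024 * 1024


@dataclass(frozen=True)
class HdfsLayout:
    num_blocks: int        # blocks the file is split into
    last_block_bytes: int  # size of the final (possibly partial) block
    raw_bytes_stored: int  # total disk usage across all replicas


def hdfs_layout(file_bytes: int, block_size: int = 128 * MB,
                replication: int = 3) -> HdfsLayout:
    """Estimate how HDFS would split and replicate a single file.

    The defaults mirror the stock dfs.blocksize (128 MB) and dfs.replication (3).
    """
    if file_bytes < 0 or block_size <= 0 or replication <= 0:
        raise ValueError("file size must be >= 0; block size and replication > 0")
    if file_bytes == 0:
        return HdfsLayout(0, 0, 0)
    # Integer ceiling division: a partial final block still counts as a block.
    num_blocks = -(-file_bytes // block_size)
    # The final block holds only the leftover bytes; HDFS does not pad it.
    last_block_bytes = file_bytes - (num_blocks - 1) * block_size
    # Each block is stored `replication` times, on different DataNodes when possible.
    raw_bytes_stored = file_bytes * replication
    return HdfsLayout(num_blocks, last_block_bytes, raw_bytes_stored)


if __name__ == "__main__":
    layout = hdfs_layout(300 * MB)
    print(layout.num_blocks, layout.last_block_bytes // MB, layout.raw_bytes_stored // MB)
    # 3 44 900
```

With three replicas, the cluster can lose the nodes holding any two copies of a block and still serve the file, at the cost of roughly three times the raw storage.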

Popular Types of Big Data Hadoop Certifications

Big Data Hadoop Developer Certificate

This certification is aimed at individuals who want to become proficient Hadoop developers. It focuses on building applications, implementing MapReduce jobs, and working with Hadoop's ecosystem of tools.

Aspects:

- MapReduce Mastery: Developers learn to design, code, and optimize MapReduce applications, which are essential for parallel processing and analysis of large datasets.
- Ecosystem Proficiency: This certification covers a range of tools within the Hadoop ecosystem, such as Hive, Pig, and HBase, enabling developers to handle a variety of data processing scenarios.
- Real-world Projects: Candidates complete hands-on projects that simulate real-world challenges, solidifying their skills in developing Hadoop applications.

| Field | Value |
|---|---|
| Exam Pattern | Multiple-choice questions and coding tasks |
| Duration | Around 2-3 hours |
| Passing Score | Typically 70% or higher |
| Test Language | English |
| Cost of Certification | Varies by provider; approximately USD 300 |
| Prerequisites | Basic programming knowledge, understanding of data concepts, familiarity with Hadoop basics |
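For the MapReduce part of a developer track, it can help to see how a job looks when written as scripts rather than Java classes. Hadoop Streaming runs any executable as the mapper or reducer: each reads lines from standard input and writes tab-separated key/value lines to standard output, and the framework sorts the mapper output by key before the reducer sees it. The sketch below follows that contract under those assumptions; the script name `wordcount.py` and the `map`/`reduce` arguments are hypothetical conventions, not part of Hadoop.

```python
import sys
from itertools import groupby
from typing import Iterable, Iterator


def mapper(lines: Iterable[str]) -> Iterator[str]:
    """Emit one 'word<TAB>1' line per word, as a streaming mapper would."""
    for line in lines:
        for word in line.strip().lower().split():
            yield f"{word}\t1"


def reducer(sorted_lines: Iterable[str]) -> Iterator[str]:
    """Sum counts per key. Assumes input is sorted by key, which the
    framework guarantees between the map and reduce phases."""
    pairs = (line.rstrip("\n").split("\t", 1) for line in sorted_lines)
    for word, group in groupby(pairs, key=lambda kv: kv[0]):
        total = sum(int(count) for _, count in group)
        yield f"{word}\t{total}"


if __name__ == "__main__":
    mode = sys.argv[1] if len(sys.argv) == 2 else None
    if mode in ("map", "reduce"):
        # Streaming mode: read stdin, write stdout.
        stage = mapper if mode == "map" else reducer
        for out in stage(sys.stdin):
            print(out)
    else:
        # Local demo: sorting the mapper output stands in for the shuffle/sort.
        demo = ["big data", "Hadoop big data"]
        for out in reducer(sorted(mapper(demo))):
            print(out)
```

The same two functions would typically be passed to the hadoop-streaming JAR through its `-mapper` and `-reducer` options; locally, piping the `map` output through `sort` into `reduce` mimics the framework.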

Data Analyst

This certification is for individuals interested in deriving insights from data. It focuses on querying, cleaning, and visualizing data stored within Hadoop clusters.

Aspects:

- Querying and Analysis: Data analysts learn to write efficient queries with tools like Hive and Pig to retrieve and analyze data stored in Hadoop.
- Data Transformation: Candidates learn to clean and transform raw data into formats suitable for analysis, ensuring data accuracy.
- Visualization Skills: This certification often includes data visualization with Tableau or similar platforms, enabling analysts to present findings effectively.

| Field | Value |
|---|---|
| Exam Pattern | Multiple-choice questions and practical tasks |
| Duration | Around 2-3 hours |
| Passing Score | Usually 60% or higher |
| Test Language | English |
| Cost of Certification | Varies by provider; approximately USD 250 |
| Prerequisites | Familiarity with data concepts, basic querying skills, understanding of the Hadoop environment |
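The Data Transformation skill above usually comes down to rejecting malformed records, normalizing fields, and casting types before analysis. The plain-Python sketch below shows those steps on a small CSV sample; the `Sale` schema, field names, and validation rules are hypothetical. At Hadoop scale, the per-row checks map naturally onto a mapper or a Hive/Pig transformation, while de-duplication across rows needs a grouping (reduce) step.

```python
import csv
import io
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Sale:
    """Hypothetical cleaned record: one sales order."""
    order_id: str
    region: str
    amount: float


def clean_row(row: List[str]) -> Optional[Sale]:
    """Validate and normalize one raw row; return None if it is malformed."""
    if len(row) != 3:
        return None
    order_id, region, amount = (field.strip() for field in row)
    if not order_id or not region:
        return None
    try:
        value = float(amount)
    except ValueError:
        return None
    # Reject NaN, infinities, and negative amounts.
    if not math.isfinite(value) or value < 0:
        return None
    # Normalize region names such as " north " or "NORTH" to "North".
    return Sale(order_id, region.title(), value)


def clean(raw_csv: str) -> List[Sale]:
    """Clean a CSV string, dropping malformed rows and repeated order IDs."""
    seen = set()
    cleaned: List[Sale] = []
    for row in csv.reader(io.StringIO(raw_csv)):
        sale = clean_row(row)
        if sale is not None and sale.order_id not in seen:
            seen.add(sale.order_id)
            cleaned.append(sale)
    return cleaned


if __name__ == "__main__":
    raw = "A1, north ,12.50\nA2,SOUTH,abc\nA1,North,12.50\nA3,,5\nA4,east,7\n"
    for sale in clean(raw):
        print(sale)
```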

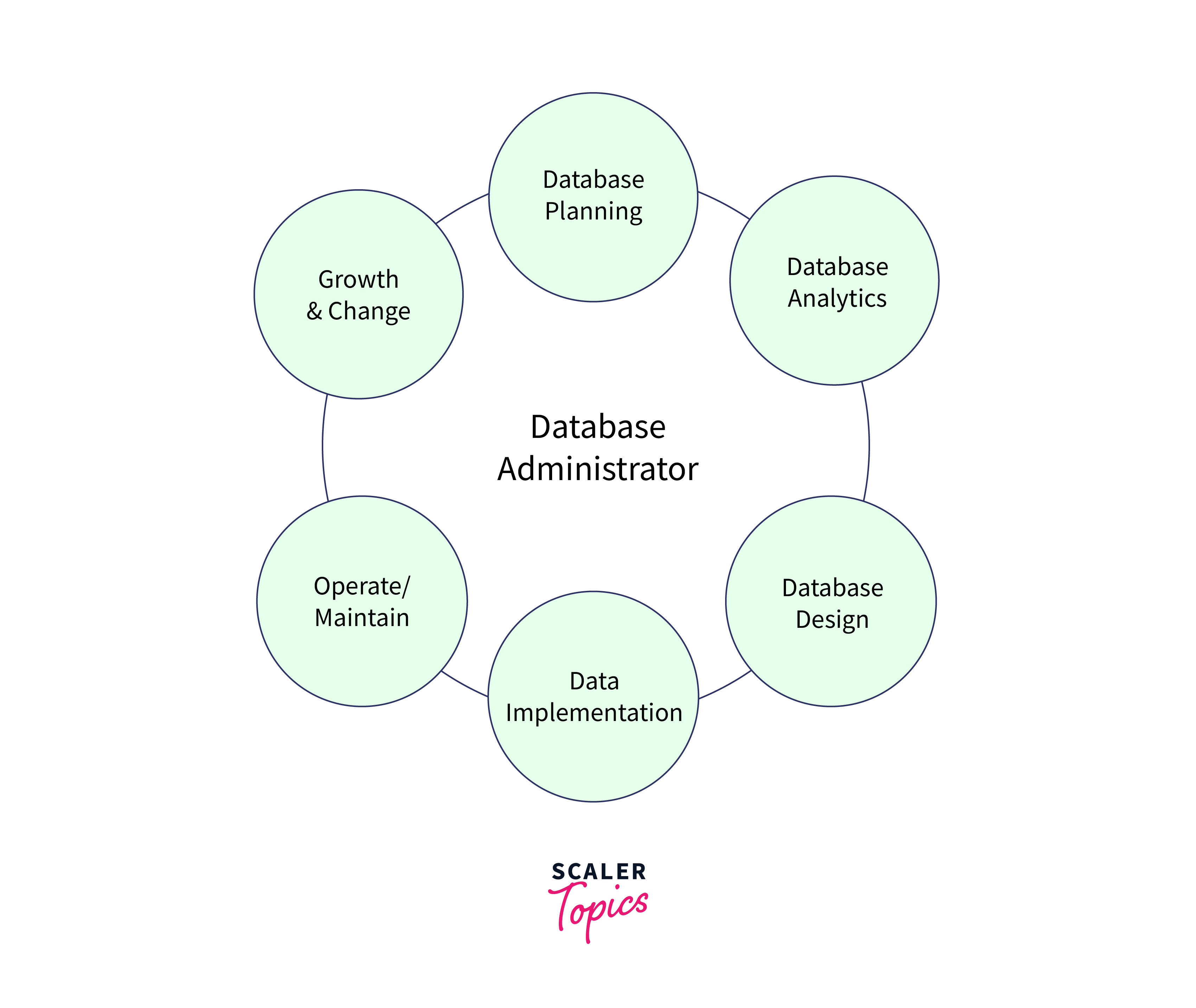

Data Administrator

This certification is designed for individuals interested in managing Hadoop clusters. It covers tasks related to cluster installation, maintenance, and security.

Aspects:

- Cluster Management: Data administrators learn to deploy, configure, and manage Hadoop clusters, ensuring their stability and optimal performance.
- Security Implementation: This certification covers security topics, including user authentication, data encryption, and access control, that safeguard data within Hadoop environments.
- Troubleshooting and Optimization: Candidates learn to identify and resolve performance issues, ensuring smooth data operations and minimal downtime.

| Field | Value |
|---|---|
| Exam Pattern | Multiple-choice questions and scenario-based tasks |
| Duration | Approximately 2-3 hours |
| Passing Score | Generally 65% or higher |
| Test Language | English |
| Cost of Certification | Varies by provider; approximately USD 400 |
| Prerequisites | Familiarity with Hadoop architecture, Linux basics, knowledge of cluster management |

Course Projects Associated with Hadoop

- Data Processing Pipeline: Design and implement a pipeline that uses Hadoop tools to ingest, clean, transform, and load data from various sources.
- Sentiment Analysis: Analyze social media data stored in Hadoop clusters to identify sentiment trends and patterns for brand analysis and customer insights.
- Recommendation System: Build a recommendation engine that applies collaborative filtering to data processed with Hadoop to provide personalized product or content recommendations.
- Log Analysis: Analyze server logs stored in Hadoop clusters to identify anomalies, track user behavior, and improve system performance.
- E-commerce Sales Analysis: Analyze large e-commerce datasets stored in Hadoop to extract sales trends, customer preferences, and peak buying times.
- Clickstream Analysis: Analyze user clickstream data to understand website navigation patterns, optimize the user experience, and identify popular content.
- Predictive Maintenance: Apply machine learning algorithms to data in Hadoop to predict equipment failures, enabling proactive maintenance in industries such as manufacturing.
- Healthcare Analytics: Analyze patient data stored in Hadoop to identify patterns, track disease outbreaks, and support clinical decision-making.
- Financial Fraud Detection: Implement fraud detection algorithms that use Hadoop's parallel processing to identify suspicious financial transactions.
- Image Processing: Process and analyze large volumes of image data with Hadoop for tasks such as image recognition, object detection, and pattern identification.
- Natural Language Processing: Perform text analysis on large volumes of text stored in Hadoop to extract insights, sentiment, and trends.
- Weather Forecasting: Use historical weather data stored in Hadoop clusters to build predictive models for weather forecasting and climate analysis.
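One common starting point for the Recommendation System project is item co-occurrence counting, a simple form of item-based collaborative filtering that fits MapReduce well: the map step emits every pair of items a user has interacted with, and the reduce step sums the counts per pair. The single-process sketch below assumes that approach; the sample data, function names, and scoring rule (summing co-occurrence counts with the items the user already has) are hypothetical rather than any specific library's algorithm.

```python
from collections import Counter, defaultdict
from itertools import combinations
from typing import Dict, Iterable, Iterator, List, Set, Tuple

Pair = Tuple[str, str]


def map_user(items: Set[str]) -> Iterator[Tuple[Pair, int]]:
    """Mapper: emit ((a, b), 1) for every ordered pair of items one user has."""
    for a, b in combinations(sorted(items), 2):
        yield (a, b), 1
        yield (b, a), 1


def reduce_pairs(pairs: Iterable[Tuple[Pair, int]]) -> Dict[Pair, int]:
    """Reducer: total co-occurrence count per item pair."""
    totals: Counter = Counter()
    for pair, count in pairs:
        totals[pair] += count
    return dict(totals)


def recommend(histories: Dict[str, Set[str]], user: str, k: int = 2) -> List[str]:
    """Score items the user lacks by how often they co-occur with the user's items."""
    counts = reduce_pairs(
        pair for items in histories.values() for pair in map_user(items)
    )
    owned = histories[user]
    scores: Dict[str, int] = defaultdict(int)
    for (a, b), count in counts.items():
        if a in owned and b not in owned:
            scores[b] += count
    # Highest score first; ties broken alphabetically so output is deterministic.
    return sorted(scores, key=lambda item: (-scores[item], item))[:k]


if __name__ == "__main__":
    histories = {
        "u1": {"laptop", "mouse", "bag"},
        "u2": {"laptop", "mouse"},
        "u3": {"laptop", "bag", "stand"},
        "u4": {"mouse"},
    }
    print(recommend(histories, "u4"))  # ['laptop', 'bag']
```

Emitting both orderings of each pair keeps the reducer simple at the cost of doubling the intermediate data; production systems often normalize raw counts (for example, by item popularity) before ranking.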

Conclusion

- Big Data Hadoop certifications equip professionals with skills to manage and analyze large datasets using the Hadoop framework.

- Hadoop is an open-source framework for storing and processing data across distributed clusters, while Big Data refers to the massive volumes of data generated every day.

- Big Data Hadoop training covers Hadoop ecosystem components, MapReduce, data processing tools, cluster management, and more.

- Prerequisites for certifications include programming knowledge (Java/Python), data understanding, Linux familiarity, and analytical skills.

- Online certifications from Cloudera, Hortonworks, MapR, IBM, and SAS offer comprehensive training and practical skills validation.

- Certified professionals can pursue roles such as Hadoop developer, data engineer, data analyst, Hadoop administrator, and more.

- Course contents include Hadoop architecture, MapReduce, data processing tools, cluster management, and data security.

- Certifications cater to roles like Hadoop Developer, Data Analyst, and Data Administrator.

- Course projects allow hands-on application of skills in data processing, analysis, visualization, and machine learning.