Hadoop Installation

Hadoop is an open-source platform for storing and processing large datasets across clusters of machines. This guide walks through installing Hadoop on UNIX-like systems and Windows, and closes with the key Hadoop Distributed File System (HDFS) commands used for data management and processing.

Prerequisites

A smooth Hadoop installation depends on having the right pieces in place first. Review the following prerequisites before you begin.

- Hardware Requirements: Check that your machine fulfills the hardware requirements for Hadoop. It should have enough RAM, disk space, and a multi-core processor. Furthermore, a 64-bit operating system is recommended to handle the massive volume of data adequately.

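- Java Development Kit (JDK): Hadoop runs on Java, so a JDK must be installed on every machine. Install a version that your Hadoop release supports rather than simply the newest one. For example, Hadoop 3.0 to 3.2 support only Java 8, and Hadoop 3.3 added runtime support for Java 11.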

- SSH Configuration: Hadoop operates in a distributed environment, and its cluster start and stop scripts use SSH to reach every node. Set up SSH keys to enable secure, password-free communication between nodes.

- Network Configuration: To maintain stability and accessibility, assign a static IP address to each node in the Hadoop cluster. Proper network configuration avoids address conflicts during the installation process.

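- Software Dependencies: Check for and install any software your Hadoop setup depends on. HDFS (Hadoop Distributed File System) ships with Hadoop itself and needs no separate installation. Apache ZooKeeper is a separate project that is required only for certain setups, such as high-availability clusters.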

- Firewall and Security Settings: Configure firewalls and security settings to allow communication between nodes while still protecting your cluster.
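Some of the checks in the list above can be scripted. The following is a minimal sketch, assuming a Linux or other UNIX-like host. The helper names `is_64bit` and `have` are made up for this example, and no RAM or disk thresholds are enforced because none are stated here.

```shell
# Preflight sketch for the prerequisites above (helper names are hypothetical).

# Print "yes" if the given machine string names a common 64-bit architecture.
is_64bit() {
  case "$1" in
    x86_64|amd64|aarch64|arm64|ppc64le|s390x) echo yes ;;
    *) echo no ;;
  esac
}

# Print "found" if the command is on the PATH, otherwise "missing".
have() {
  if command -v "$1" >/dev/null 2>&1; then echo found; else echo missing; fi
}

arch="$(uname -m)"
echo "architecture: $arch (64-bit: $(is_64bit "$arch"))"
echo "cpu cores:    $(getconf _NPROCESSORS_ONLN 2>/dev/null || echo unknown)"
echo "java:         $(have java)"
echo "ssh:          $(have ssh)"
if [ -r /proc/meminfo ]; then grep MemTotal /proc/meminfo; fi   # Linux only
df -h . | tail -n 1   # free disk space on the current file system
```

The script only reports what it finds; deciding whether the numbers are sufficient depends on the size of your data and cluster.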

Environment Required for Hadoop

Hadoop needs a properly prepared environment before it can run. This section walks through installing Java, SSH, and Hadoop itself, in that order.

Java Installation

Java is required for Hadoop to run. To install Java on your PC, follow these steps:

Step 1: Check whether Java is already installed

Open a terminal and run `java -version` to see whether Java is already installed on your machine.

If Java is installed, the version information is displayed. Otherwise, go to the next step.
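The check can also be written so that it does not fail with a "command not found" error when Java is absent. This is a small sketch; the variable name `JAVA_FOUND` is chosen only for the example.

```shell
# Print the Java version if a java binary is on the PATH.
if command -v java >/dev/null 2>&1; then
  java -version 2>&1   # java writes its version banner to stderr
  JAVA_FOUND=yes
else
  echo "java not found; continue with the next step"
  JAVA_FOUND=no
fi
```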

Step 2: Download the Java Development Kit (JDK)

Download a JDK version that your Hadoop release supports, for your operating system, from the official Oracle website or an OpenJDK distribution. Choose the build that matches your system architecture (32-bit or 64-bit).

Step 3: Install the JDK

Run the downloaded installer and follow the on-screen directions to install the JDK. Note the installation directory, because you will need it later during the Hadoop installation.

Step 4: Set the JAVA_HOME environment variable

After the installation, set the JAVA_HOME environment variable to the JDK installation directory, following your operating system's procedure for setting environment variables.
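On a Linux shell, setting JAVA_HOME might look like the sketch below. It assumes GNU `readlink -f` is available, and the helper `java_home_from_bin` is a made-up name. The derived value is only a guess and should be checked against the installation directory you noted in the previous step.

```shell
# Derive a JDK home from the path of its java binary (strips the trailing /bin/java).
java_home_from_bin() {
  dirname "$(dirname "$1")"
}

# Keep an existing JAVA_HOME; otherwise derive one from the java on the PATH.
if [ -z "${JAVA_HOME:-}" ] && command -v java >/dev/null 2>&1; then
  # readlink -f resolves symlinks such as /usr/bin/java (GNU coreutils).
  JAVA_HOME="$(java_home_from_bin "$(readlink -f "$(command -v java)")")"
fi
export JAVA_HOME
echo "JAVA_HOME=${JAVA_HOME:-<not set>}"

# To persist the setting, add a line like the following to ~/.bashrc and to
# etc/hadoop/hadoop-env.sh inside the Hadoop directory:
#   export JAVA_HOME=/path/to/your/jdk
```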

SSH Installation

SSH (Secure Shell) is used for secure communication between Hadoop cluster nodes. To install SSH, follow these steps:

-

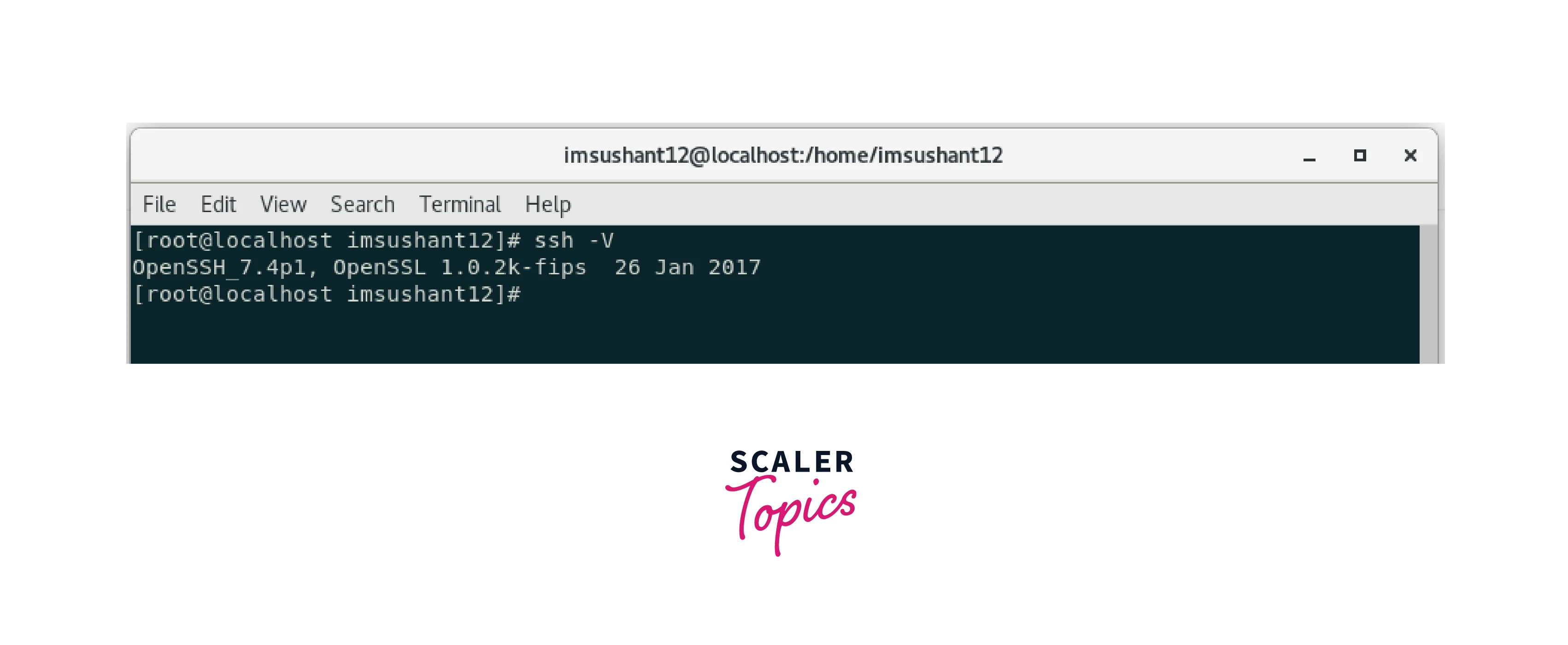

Step 1:

Check SSH installation

Open a terminal and type the following command to see if SSH is already installed:

If SSH is installed, you will see the version information. If not, proceed to the next step.
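As with Java, the check can be guarded so that a missing client produces a clear message. This is a sketch; `SSH_FOUND` is a name chosen for the example.

```shell
# Print the SSH client version if ssh is on the PATH.
if command -v ssh >/dev/null 2>&1; then
  ssh -V 2>&1   # the OpenSSH client prints its version to stderr
  SSH_FOUND=yes
else
  echo "ssh not found; continue with the next step"
  SSH_FOUND=no
fi
```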

-

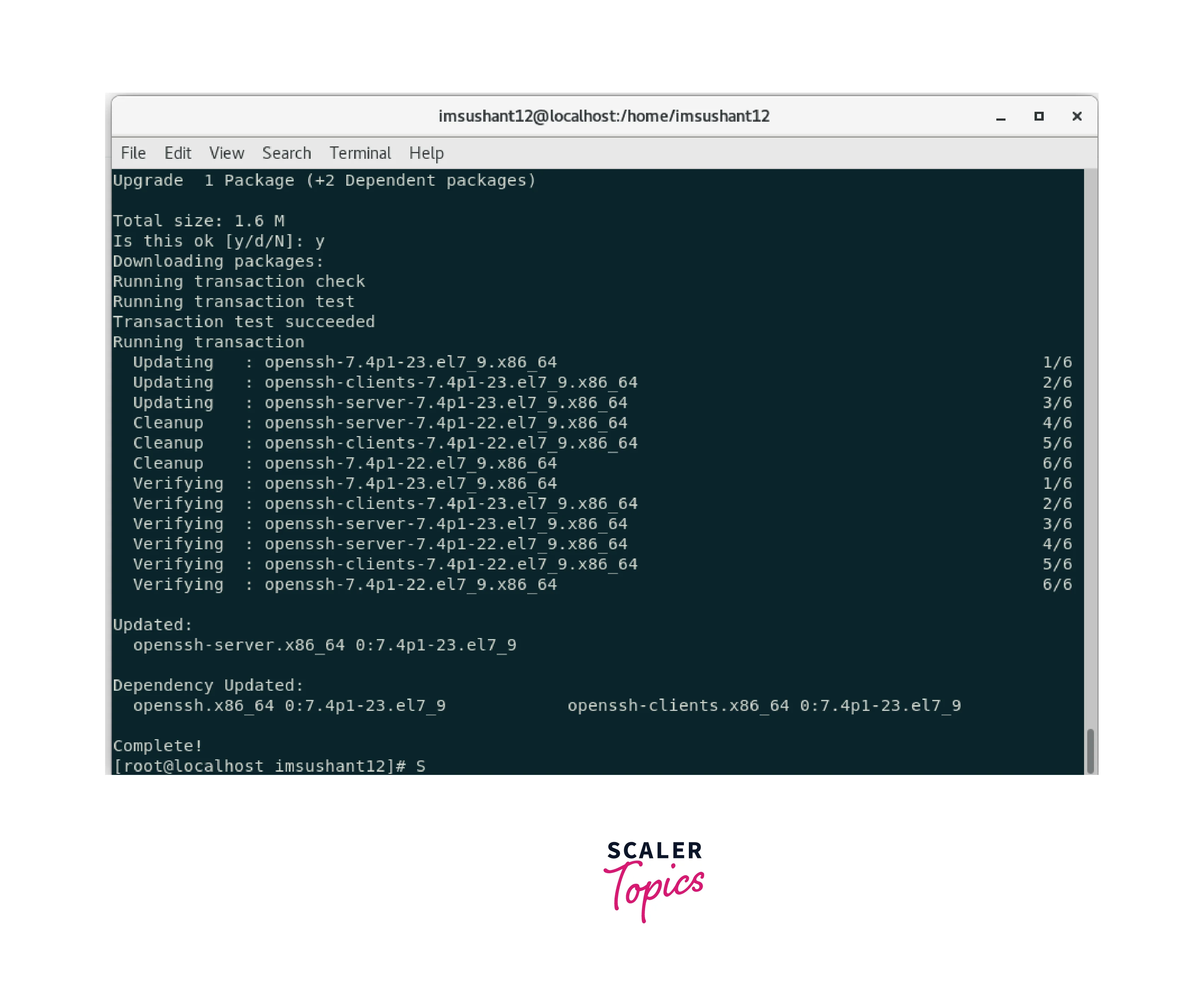

Step 2:

Install SSH

To install SSH on Linux-based systems, open a terminal and use your distribution package manager. On Ubuntu, for example, you can run the following command:

For Windows, you can download and install an SSH client like PuTTY.
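The Ubuntu installation can be previewed before anything is changed. The sketch below assumes a Debian-based system with `apt-get`. The `run` helper and the `APPLY` variable are made up for this example: by default the commands are only printed, and they are executed when `APPLY=1` is set.

```shell
# Dry run by default: print each command. Set APPLY=1 to execute it instead.
run() {
  if [ "${APPLY:-0}" = "1" ]; then "$@"; else echo "+ $*"; fi
}

run sudo apt-get update
run sudo apt-get install -y openssh-server   # SSH server (pulls in the client as well)
```

Running the script once without `APPLY=1` shows exactly which commands would need administrator rights.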

-

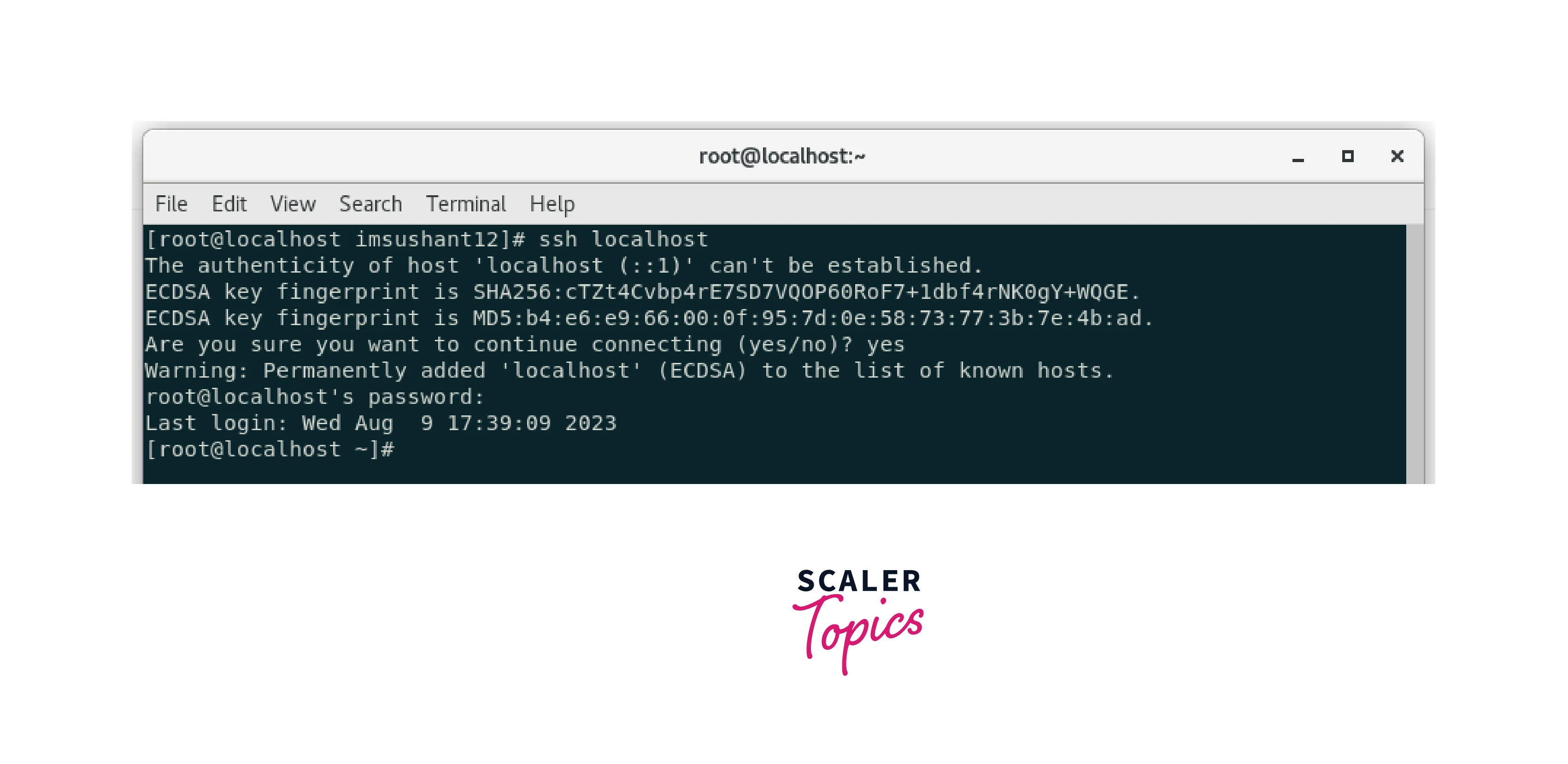

Step 3:

Verify SSH installation

After the installation, verify the SSH service by running the following command:

If you can establish a connection, SSH is successfully installed and configured.
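Hadoop expects this connection to work without a password prompt. One way to arrange that is a key pair with an empty passphrase, sketched below under the assumption that OpenSSH is installed. The function names are invented for the example, and the functions are only defined here, not called.

```shell
# Create a key pair with an empty passphrase and authorize it for logins.
# $1 is the SSH directory (normally ~/.ssh); an existing key is reused.
setup_passwordless_ssh() {
  dir="$1"
  mkdir -p "$dir" && chmod 700 "$dir"
  [ -f "$dir/id_rsa" ] || ssh-keygen -q -t rsa -P '' -f "$dir/id_rsa"
  pub="$(cat "$dir/id_rsa.pub")"
  # Append the public key only if it is not already authorized.
  grep -qxF "$pub" "$dir/authorized_keys" 2>/dev/null || echo "$pub" >> "$dir/authorized_keys"
  chmod 600 "$dir/authorized_keys"
}

# Succeed only if a login to host $1 works without any interactive prompt.
can_ssh_without_password() {
  ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" true
}

# Typical use:
#   setup_passwordless_ssh "$HOME/.ssh"
#   can_ssh_without_password localhost && echo "password-free SSH works"
```

For a multi-node cluster, the public key would also have to be added to `authorized_keys` on every other node.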

Hadoop Installation

Let us now see how to install Hadoop.

Step 1: Get Hadoop

Download the latest stable release of Hadoop from the official Apache Hadoop website, selecting the package that suits your operating system.

-

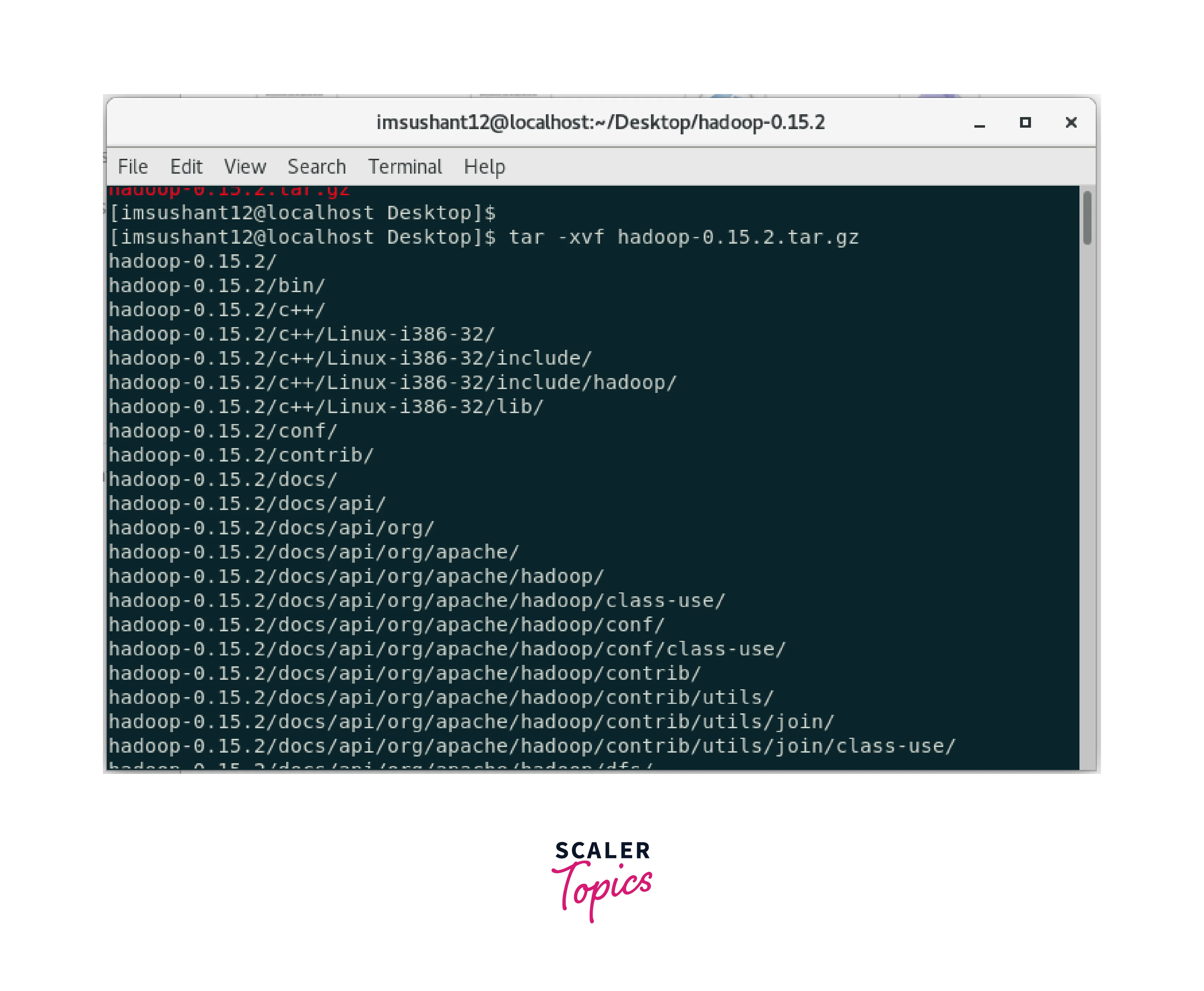

Step 2:

Unzip the Hadoop package

Extract the downloaded Hadoop package to a convenient location. This will result in creating a new directory containing the Hadoop files.
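On a UNIX-like system the extraction can be done with `tar`. The sketch below assumes the download is a gzip-compressed tarball. The function name is made up, and the file names in the comments are placeholders for whichever release you downloaded.

```shell
# Unpack a Hadoop release tarball into a target directory.
# $1: path to the downloaded .tar.gz, $2: directory to extract into.
extract_hadoop() {
  mkdir -p "$2" && tar -xzf "$1" -C "$2"
}

# Example (replace <version> with the release you downloaded):
#   extract_hadoop "$HOME/Downloads/hadoop-<version>.tar.gz" "$HOME/opt"
#   export HADOOP_HOME="$HOME/opt/hadoop-<version>"
#   export PATH="$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH"
```

Exporting `HADOOP_HOME` and extending `PATH` is optional, but it lets later commands be run from any directory.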

-

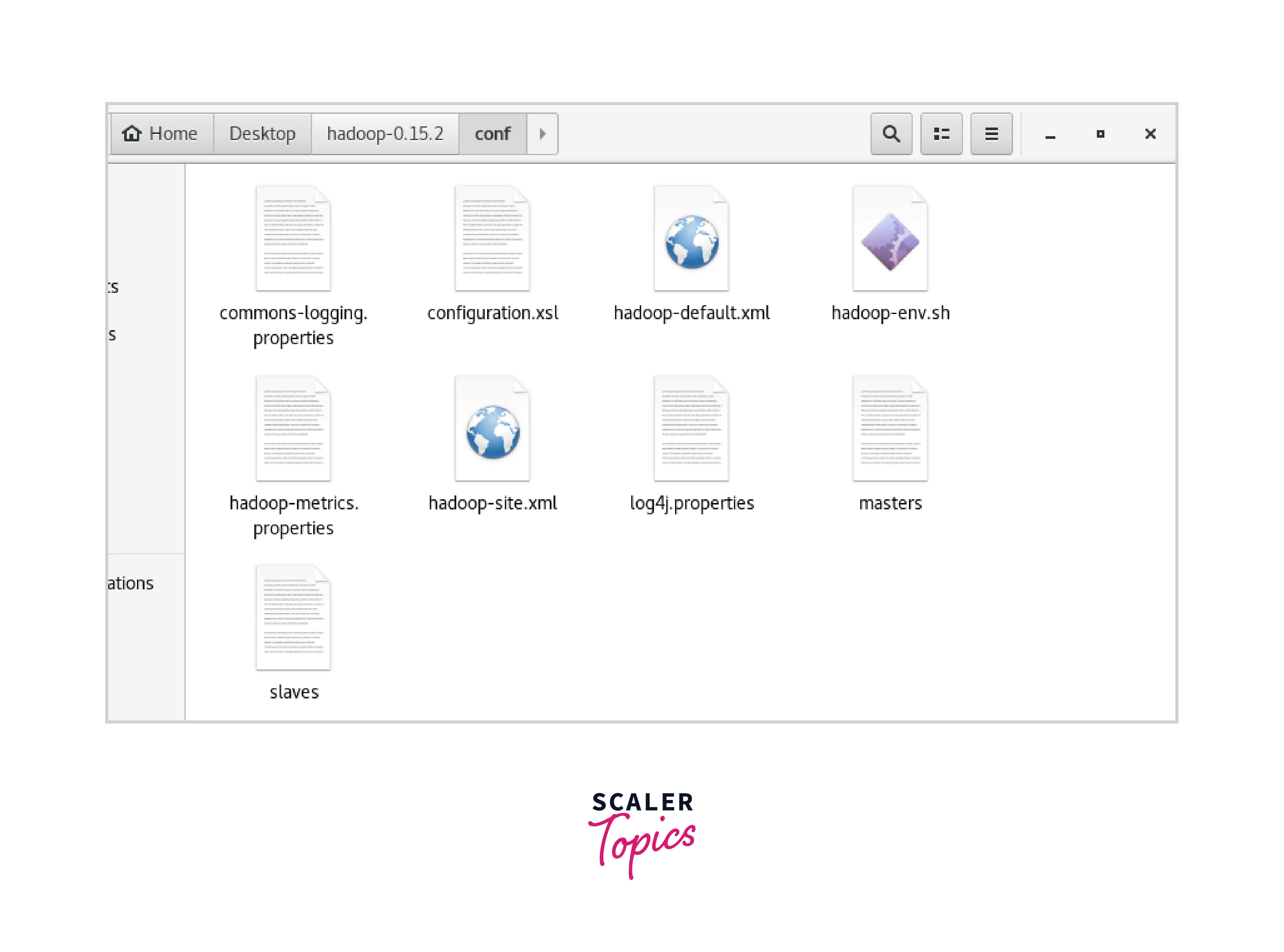

Step 3:

Setup Hadoop

Locate the conf folder in the Hadoop installation directory. Edit the configuration files to meet your needs, including modifying the file paths and cluster settings.
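As an illustration of what such edits look like, the sketch below writes two minimal configuration files for a single-node setup. The values `hdfs://localhost:9000` and a replication factor of 1 are assumptions suitable only for a one-machine trial, and the function name is invented. The target is the configuration directory (`etc/hadoop` in current releases).

```shell
# Write minimal single-node versions of core-site.xml and hdfs-site.xml.
# $1: the Hadoop configuration directory. Existing files are overwritten.
write_single_node_config() {
  cat > "$1/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
EOF
  cat > "$1/hdfs-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
EOF
}

# Example: write_single_node_config "$HADOOP_HOME/etc/hadoop"
```

A real cluster would replace `localhost` with the NameNode's host name and use a higher replication factor.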

-

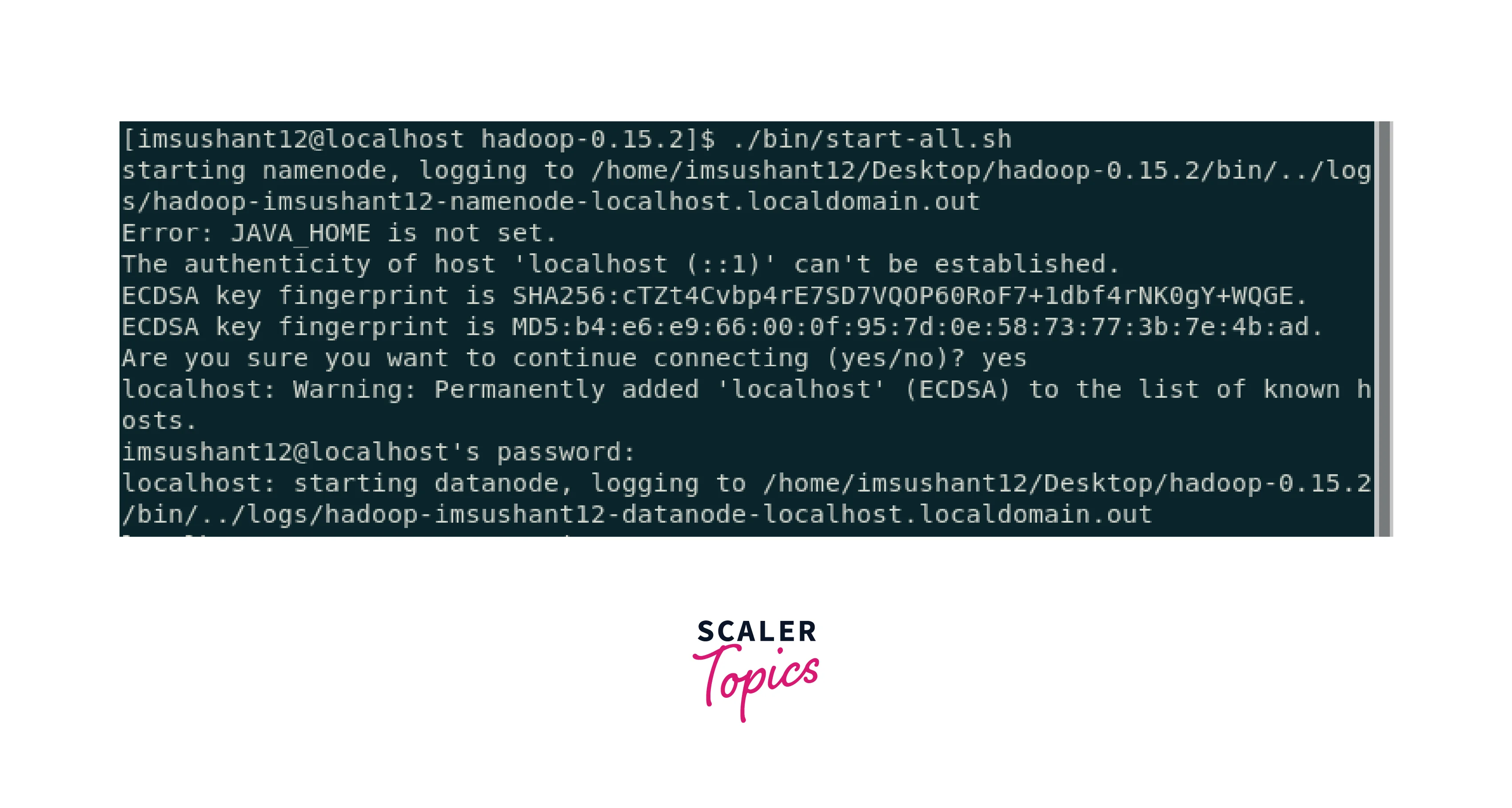

Step 4:

Launch Hadoop

Open a terminal, navigate to the Hadoop installation directory, then type the following command to start the Hadoop cluster:

Hadoop should now be up and running, ready to process big data efficiently.
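The launch can be wrapped in a small function that fails early when the start scripts are missing. This is a sketch assuming the Hadoop 2.x or later layout, where the scripts live under `sbin`; the function name is made up.

```shell
# Start HDFS and then YARN from the given Hadoop installation directory.
start_hadoop() {
  home="$1"
  if [ ! -x "$home/sbin/start-dfs.sh" ]; then
    echo "start-dfs.sh not found under $home/sbin" >&2
    return 1
  fi
  "$home/sbin/start-dfs.sh" && "$home/sbin/start-yarn.sh"
}

# First run only: format the NameNode (this erases any existing HDFS metadata):
#   "$HADOOP_HOME/bin/hdfs" namenode -format
# Then:
#   start_hadoop "$HADOOP_HOME"
#   jps    # lists running Java processes such as NameNode and DataNode
```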

Conclusion

- HDFS commands make interacting with Hadoop's distributed file system simple and efficient.

- HDFS commands such as ls, mkdir, and rm allow users to list directories and files, create directories, and delete files.

- The put and get commands allow you to transfer data between your local file system and HDFS.

- HDFS commands are run from the Hadoop command-line interface (CLI); the same operations are also available programmatically, for example through the Java API.

- Installing Hadoop requires several components to be set up correctly: a JDK, because Hadoop is built on Java; SSH, for distributed cluster management; and the configuration files that define Hadoop's behavior.
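The HDFS commands named above can be tried on a running installation with a short round trip. The sketch below assumes the `hdfs` command is on the PATH. The function name and the `/user/demo` path are made up for the example.

```shell
# Copy a local file into HDFS, list it, copy it back, then delete the HDFS copy.
# $1: local file, $2: HDFS directory (both supplied by the caller).
hdfs_round_trip() {
  name="$(basename "$1")"
  hdfs dfs -mkdir -p "$2" &&
  hdfs dfs -put "$1" "$2/" &&
  hdfs dfs -ls "$2" &&
  hdfs dfs -get "$2/$name" "$1.copy" &&
  hdfs dfs -rm "$2/$name"
}

if command -v hdfs >/dev/null 2>&1; then
  echo "hello hdfs" > hello.txt
  hdfs_round_trip hello.txt /user/demo   # /user/demo is a made-up example path
else
  echo "hdfs is not on the PATH; start Hadoop and add its bin directory first"
fi
```

Each step runs only if the previous one succeeded, so a failure leaves the earlier results in place for inspection.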