Apache Pig In Hadoop

Overview

Apache Pig in Hadoop is a versatile data processing tool that streamlines complicated Hadoop processes. Even non-programmers can process large amounts of data with its user-friendly, high-level language, Pig Latin. Pig accomplishes data transformations efficiently, making it a significant asset in large data analytics. It increases productivity by abstracting low-level Java coding, allowing developers to focus on data analysis and exploration. Pig's smooth interaction with MapReduce in Hadoop enables distributed processing, which results in faster data processing.

What is Apache Pig in Hadoop?

Apache Pig in Hadoop is a high-level platform, and scripting language developed on top of Hadoop that aims to make huge dataset processing easier. The necessity for tools that can properly manage enormous amounts of data has become critical in the world of big data.

Users of Apache Pig can express data processing tasks using Pig Latin. Pig Latin is also used to specify data transformations, aggregations, and analyses without delving into the complexities of Hadoop's native Java-based MapReduce architecture. Pig optimizes and transforms these Pig Latin scripts into low-level MapReduce jobs automatically, relieving users of manual optimization.

The intrinsic complexity of Hadoop's native MapReduce calls for using Apache Pig. For even simple data processing jobs, traditional MapReduce requires developers to write considerable code, which can be time-consuming and error-prone. Apache Pig simplifies this procedure, allowing users to concentrate on data processing logic rather than implementation specifics.

Features of Apache Pig

This section will look at the fundamental characteristics distinguishing Apache Pig in Hadoop from other data processing frameworks.

Ease of Programming

One of Apache Pig's most notable features is its user-friendly programming model. Pig employs Pig Latin, a high-level scripting language that abstracts sophisticated MapReduce operations into simple, straightforward instructions. Users are shielded from the underlying difficulties of distributed data processing by using this abstraction.

Optimization Opportunities

Apache Pig in Hadoop optimizes data processing processes automatically, ensuring optimal performance even for large-scale datasets. Pig optimizes the logical and physical plans, reorganizing activities to reduce data transport and processing overhead.

Extensibility

Apache Pig in Hadoop was created with flexibility in mind. It lets select a choice of programming language to construct custom functions known as User Defined Functions (UDFs).

Flexible

Apache Pig's versatility is crucial as it supports multi-language implementations, meaning developers can add Python, Java, and JavaScript components to their data processing processes.

In-built Operators

Apache Pig in Hadoop includes many built-in operators that allow users to conduct a wide range of data manipulations. These operators simplify typical data transformations such as filtering, grouping, sorting, joining, and aggregating.

Differences between Apache MapReduce and PIG

| Aspect | Apache MapReduce | PIG |

|---|---|---|

| Programming Style | Complicated, low-level code | High-level scripting language |

| Developer Expertise | Requires parallelism and distributed systems knowledge | More accessible for a wider range of users |

| Control | Granular control over data flow | Abstracts complexity of MapReduce |

| Data Transformations | Manual coding of data transformations | Built-in operators for expressing transformations |

| Optimization | Requires manual optimization | Automatically optimizes underlying MapReduce code |

| Use Case | Strict performance and control needs | Rapid development and experimentation |

Advantages of Apache Pig

Let us now examine the numerous benefits provided by Apache Pig.

- Simplified Data Processing: Apache Pig in Hadoop encapsulates Hadoop MapReduce complexities, allowing users to define high-level data manipulation algorithms in Pig Latin. This abstraction streamlines the data processing workflow, making big data more accessible to non-experts.

- Extensibility: Pig's extensible design allows users to write custom functions (UDFs) to execute specialized data processing tasks. This extensibility encourages code reuse and integration with existing libraries.

- Scalability: Because Pig in Hadoop is built on top of Hadoop, it inherits Hadoop's scalability advantages. It can effectively handle enormous datasets dispersed across a Hadoop cluster.

- Optimization Opportunities: Apache Pig in Hadoop optimizes data processing tasks, automatically reordering operations to maximize performance. This optimization reduces data transportation, hence reducing processing time and resource consumption.ploratory data analysis, allowing for ad hoc queries and iterative development.

- Community Support: Apache Pig in Hadoop has a thriving and active community that ensures ongoing development and support.

Applications of Apache Pig

Let us now look at the many Apache Pig applications.

- Data Transformation: Apache Pig in Hadoop enables smooth data transformation by accelerating the process and improving data quality for downstream analytics by cleaning and filtering data and translating unstructured data into organized representations.

- ETL (Extract, Transform, Load): Pig in Hadoop is essential in the ETL process because it allows users to extract data from a variety of sources, transform it into the desired format, and load it into Hadoop Distributed File System (HDFS) or other storage systems.

- Data Analysis: By leveraging Pig's expressive language, analysts and data scientists may undertake exploratory data analysis more efficiently. They can extract insights, find patterns, and acquire a deeper knowledge of the data.

- Iterative Processing: Apache Pig in Hadoop supports iterative data processing, making it suitable for tasks like machine learning and graph processing, allowing users to perform multiple runs through data automatically, and optimizing processing procedures.

- Text Processing: Pig in Hadoop provides powerful text parsing, tokenization, and analytics features for processing massive amounts of text data. This is useful for sentiment analysis, natural language processing, and text mining.

Types of Data Models in Apache Pig

Below we have dicussed various data models used to handle data within Pig successfully.

Relational Data Model:

Apache Pig's relational data model is similar to typical relational databases. It displays data as tables with rows and columns, with each cell containing a single piece of data. This architecture works well with structured data and integrates well with relational databases.

Semi-Structured Data Model:

The semi-structured data model supports irregular data, such as nested data structures and varying attributes. Apache Pig handles semi-structured data using the JSON and XML formats, making it appropriate for dealing with complicated datasets where schemas may vary over time.

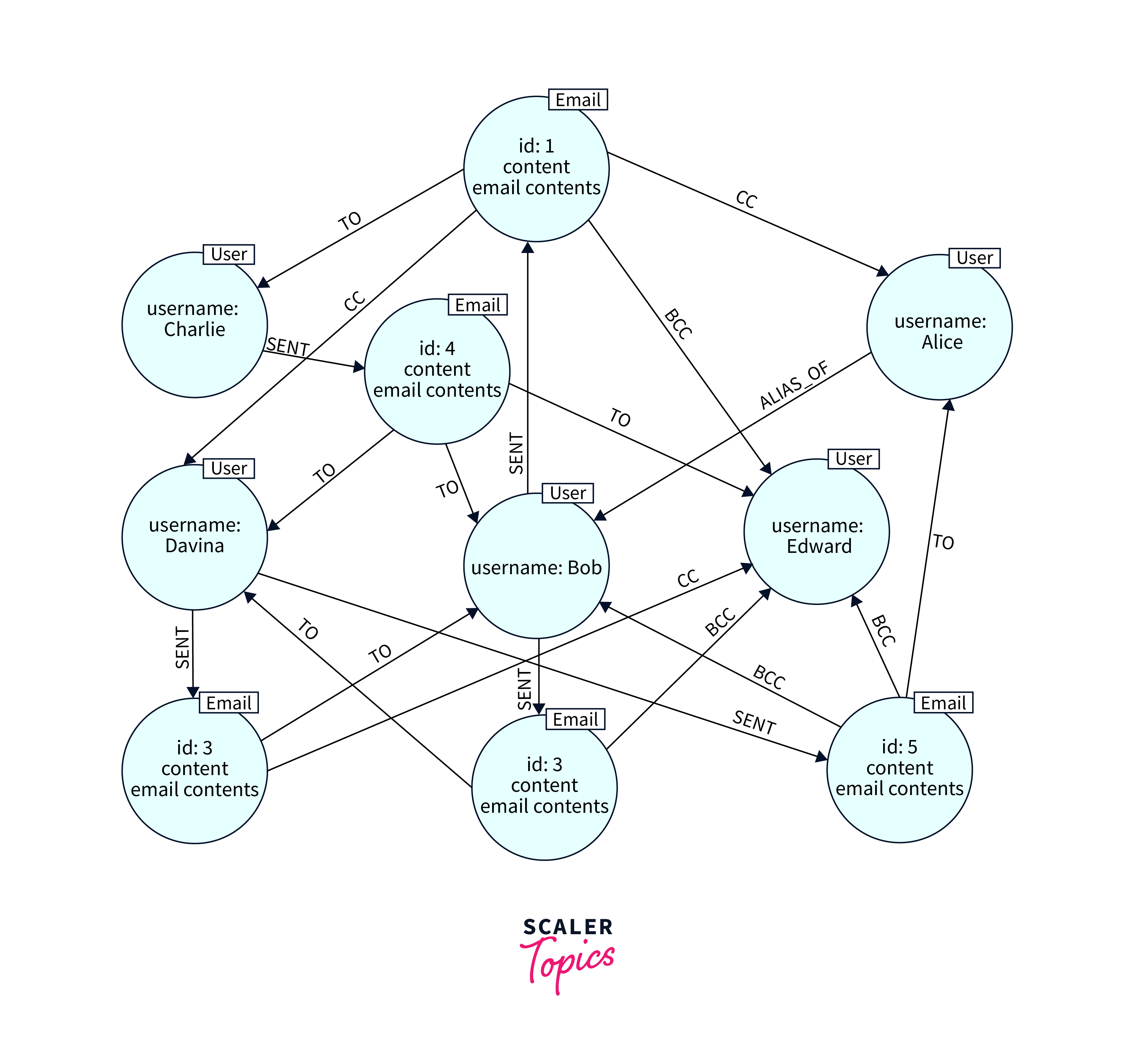

Graph Data Model:

Apache Pig incorporates the graph data model for analyzing and processing graph-based data. This paradigm efficiently displays data as vertices and edges, allowing graph algorithms to be executed to detect patterns and relationships within the data.

Multi-Dimensional Data Model:

The multidimensional data model is commonly used in OLAP (Online Analytical Processing) applications to manage data with numerous dimensions. It is especially helpful for slicing and dicing data across multiple dimensions to generate complete analytical insights.

Conclusion

- Pig Latin, Apache Pig's abstraction over MapReduce, allows users to describe complicated data transformations in a compact and simple scripting language.

- Pig easily handles large-scale data processing operations, making it an excellent candidate for big data projects with large datasets.

- Apache Pig works smoothly with other Hadoop ecosystem components such as HDFS and Hive, enabling a unified and comprehensive big data solution.

- Apache Pig has a vibrant open-source community that ensures regular updates, bug patches, and continual enhancements, ensuring its relevance and dependability.