Sqoop integration with Hadoop

Overview

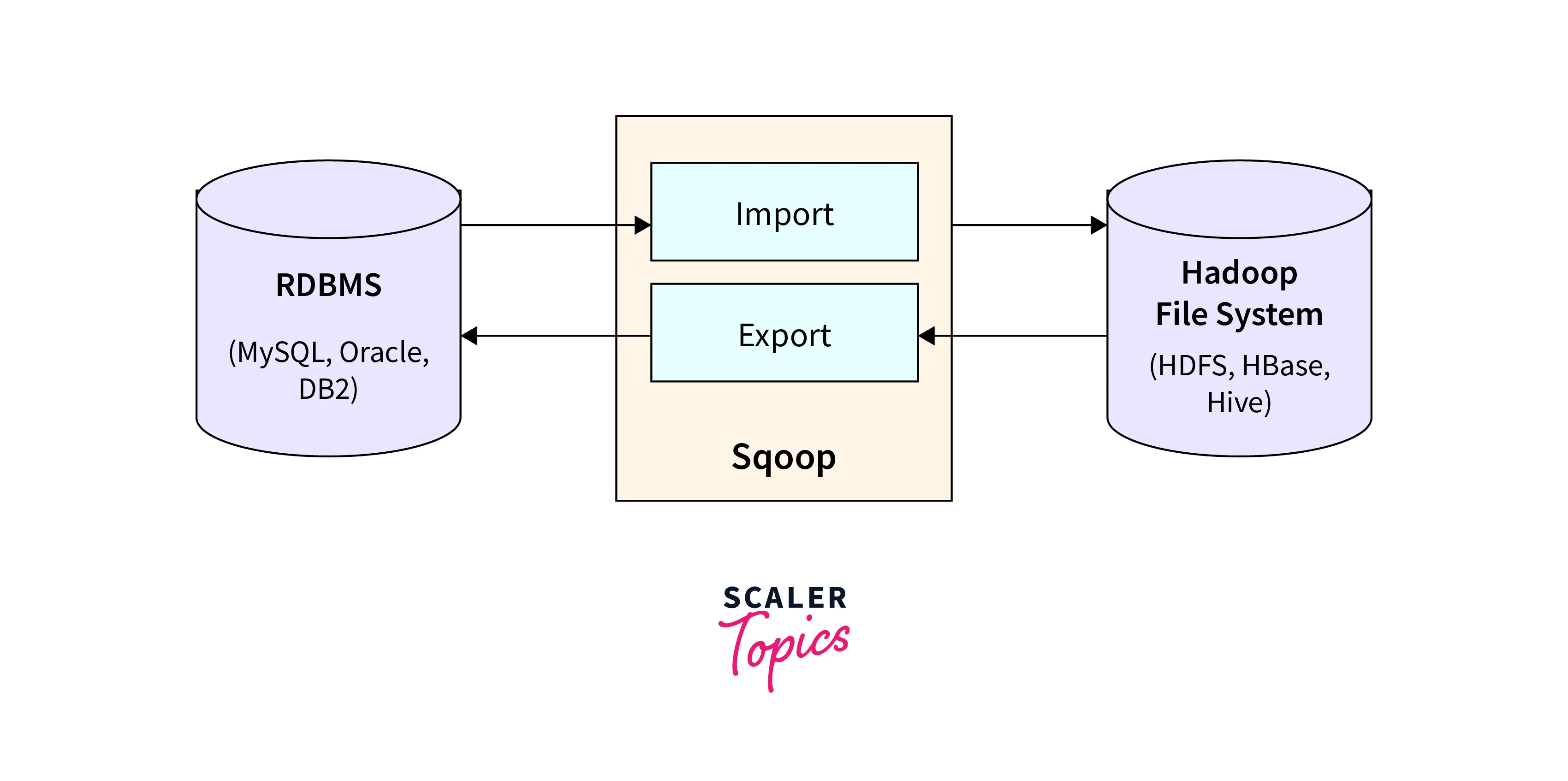

This topic covers how Sqoop integrates with Hadoop to move data efficiently between relational databases and the Hadoop ecosystem. Sqoop can load SQL query results into HDFS, import directly into Hive or HBase, authenticate through Kerberos, and compress the data it transfers. The sections below walk through installing Sqoop, importing a MySQL table into HDFS, and exporting data back to MySQL: preparing the data in HDFS, running the Sqoop export, and validating the result. Following these steps makes relational data available to Hadoop tools for analysis and decision-making.

Sqoop's core capabilities are as follows:

- Sqoop can load the results of SQL queries into the Hadoop Distributed File System (HDFS).

- Sqoop can import data directly into Hive or HBase.

- Sqoop supports secure access through Kerberos authentication.

- Sqoop can compress the data it transfers.

- Sqoop is robust and performs well on large transfers.
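
As a rough illustration of the query-import and compression capabilities listed above, a free-form query import might look like the sketch below. The database `sampledb`, the table `employees`, its columns, the target directory, and the credentials are all hypothetical placeholders; `--query`, `--target-dir`, `--compress`, and `-m` are standard Sqoop import options. The guard at the end only keeps the snippet harmless on a machine where Sqoop is not installed yet.

```shell
# Hypothetical connection details; replace with your own.
DB_URL="jdbc:mysql://localhost/sampledb"
QUERY_TARGET_DIR="/user/hadoop/employee_names"

import_query_results() {
  # $CONDITIONS must stay literal (hence the single quotes); Sqoop
  # substitutes its own split predicate there at run time.
  sqoop import \
    --connect "$DB_URL" \
    --username your_username \
    --password your_password \
    --query 'SELECT id, name FROM employees WHERE $CONDITIONS' \
    --target-dir "$QUERY_TARGET_DIR" \
    --compress \
    -m 1
}

if command -v sqoop >/dev/null 2>&1; then
  import_query_results
else
  echo "sqoop not found on PATH; skipping query import"
fi
```

With a single mapper (`-m 1`) no `--split-by` column is needed; with more mappers, a free-form query import requires one.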

Installing Sqoop in Hadoop

- Prerequisites

- Before integrating Sqoop with Hadoop, verify that Hadoop is installed and working, for example by running `hadoop version`.

- Download Sqoop

- Download the latest release from the Apache Sqoop website: https://sqoop.apache.org/.

- Select the distribution that corresponds to your Hadoop version.

- Sqoop extraction

- When the download finishes, change to the directory that contains the downloaded archive and extract it with tar.
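
The extraction step can be sketched as follows. The archive name is an assumption (it matches the Sqoop 1.4.7 binary release built for Hadoop 2.6.0), so substitute the file you actually downloaded. The install location `/usr/lib/sqoop` is taken from the connector step later in this section.

```shell
# Assumed archive name; replace with the file you downloaded.
SQOOP_ARCHIVE="sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz"
SQOOP_INSTALL_DIR="/usr/lib/sqoop"

if [ -f "$SQOOP_ARCHIVE" ]; then
  # Unpack the archive, then move the extracted directory into place.
  tar -xzf "$SQOOP_ARCHIVE"
  sudo mv "${SQOOP_ARCHIVE%.tar.gz}" "$SQOOP_INSTALL_DIR"
else
  echo "Archive $SQOOP_ARCHIVE not found in $(pwd)"
fi
```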

- Bashrc Configuration

- Configure the Sqoop environment by setting SQOOP_HOME and adding its bin directory to PATH in your ~/.bashrc file.

- Reload the file in the current shell with `source ~/.bashrc`.
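
The lines added to ~/.bashrc would look roughly like this, assuming Sqoop was installed under `/usr/lib/sqoop` (adjust the path if you extracted it elsewhere):

```shell
# Lines to append to ~/.bashrc (install path is an assumption).
export SQOOP_HOME=/usr/lib/sqoop
export PATH="$PATH:$SQOOP_HOME/bin"
```

New terminals pick these up automatically; an already open shell needs the file reloaded.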

- Sqoop Configuration

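- In the $SQOOP_HOME/conf directory, edit sqoop-env.sh (copy it from sqoop-env-template.sh if it does not exist yet) and set HADOOP_COMMON_HOME and HADOOP_MAPRED_HOME to your Hadoop installation path.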
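
A minimal sqoop-env.sh might contain the two lines below. The path `/usr/local/hadoop` is only an assumed Hadoop location; point both variables at your own installation.

```shell
# $SQOOP_HOME/conf/sqoop-env.sh
# Assumed Hadoop install path; change it to match your system.
export HADOOP_COMMON_HOME=/usr/local/hadoop
export HADOOP_MAPRED_HOME=/usr/local/hadoop
```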

- Install and configure mysql-connector-java

- Download the mysql-connector-java tarball from: http://ftp.ntu.edu.tw/MySQL/Downloads/Connector-J/

- Extract the mysql-connector-java tarball and copy the mysql-connector-java-bin.jar file to the /usr/lib/sqoop/lib directory.
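
These two steps can be sketched as below. The connector version (5.1.30) is an assumption chosen for illustration; use the version of the tarball you downloaded, and note that the JAR name inside newer releases may differ.

```shell
# Assumed connector version; replace with the one you downloaded.
CONNECTOR_NAME="mysql-connector-java-5.1.30"
SQOOP_LIB_DIR="/usr/lib/sqoop/lib"

if [ -f "${CONNECTOR_NAME}.tar.gz" ]; then
  tar -xzf "${CONNECTOR_NAME}.tar.gz"
  # The driver JAR sits at the top level of the extracted directory.
  sudo cp "${CONNECTOR_NAME}/${CONNECTOR_NAME}-bin.jar" "$SQOOP_LIB_DIR/"
else
  echo "${CONNECTOR_NAME}.tar.gz not found in $(pwd)"
fi
```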

- Sqoop verification

- Run `sqoop version` to check the installed Sqoop version and confirm that the installation works.

Connecting to Hadoop

- Installation and configuration

- Install and set up Sqoop as outlined above.

- Install and set up Sqoop

- Download Sqoop from the Apache Sqoop website https://sqoop.apache.org/ and extract it to a directory of your choosing.

- Set the environment variables in sqoop-env.sh as described in the installation steps above.

- Install and configure mysql-connector-java

- Download the MySQL Connector/J JDBC driver from the official MySQL website https://dev.mysql.com/downloads/connector/j/.

- Select the driver version that corresponds to your MySQL database and Java version.

- Sqoop verification

- Run `sqoop version` to confirm that Sqoop starts and reports its version.

Importing Data to Hadoop

- Build the source database and the table.

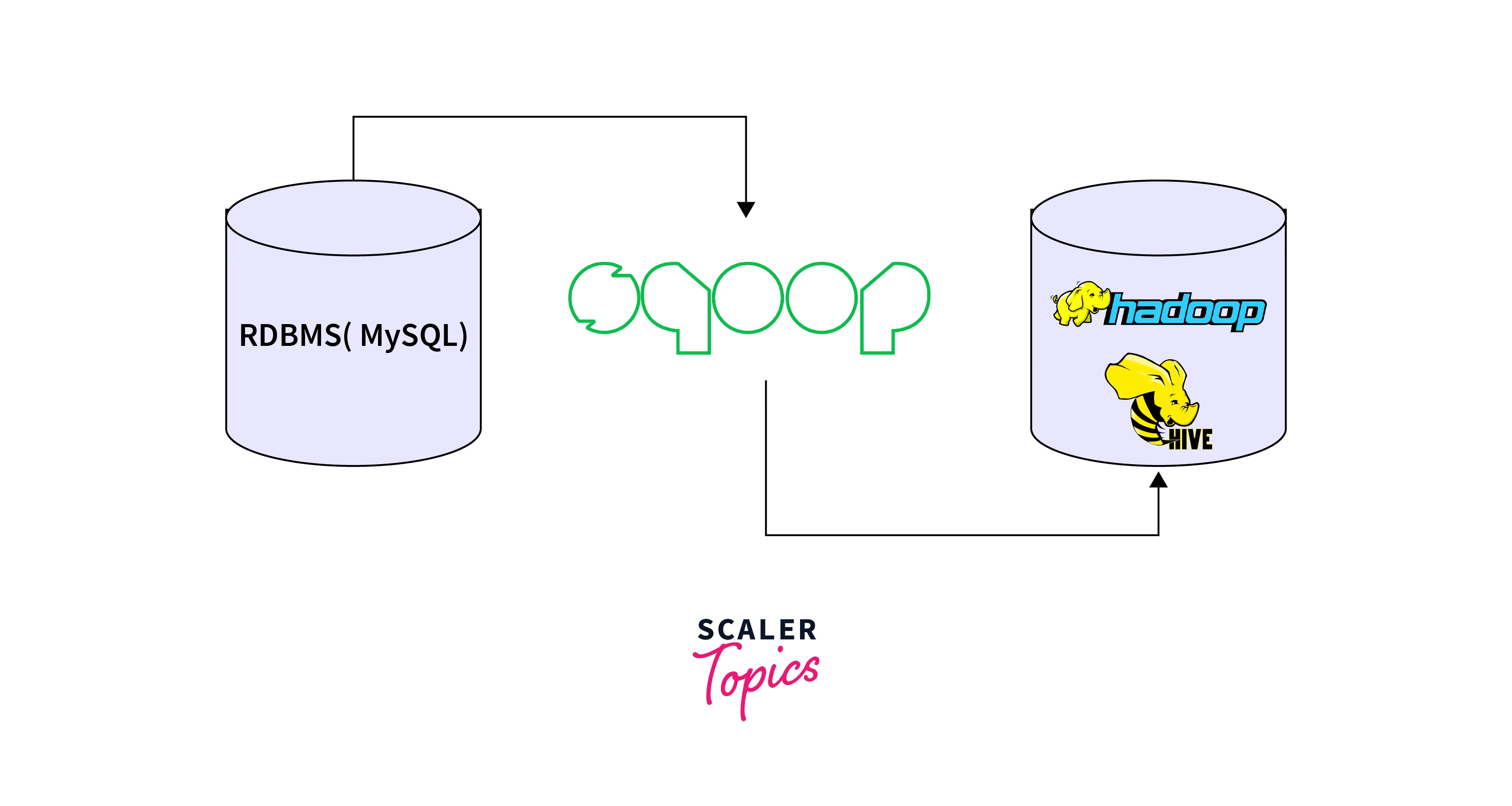

- Start with a simple MySQL database and table to import data from.
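
One way to set this up is to write the schema to a SQL script and load it with the mysql client. The database name `sampledb`, the table `employees`, its columns, and the two sample rows are all hypothetical and exist only to give the import something to read.

```shell
# Write a hypothetical schema and two sample rows to a SQL script.
cat > create_employees.sql <<'SQL'
CREATE DATABASE IF NOT EXISTS sampledb;
USE sampledb;
CREATE TABLE IF NOT EXISTS employees (
  id INT PRIMARY KEY,
  name VARCHAR(50),
  salary INT
);
INSERT INTO employees (id, name, salary) VALUES
  (1, 'Asha', 50000),
  (2, 'Ravi', 42000);
SQL

# Load it into MySQL (prompts for the password):
#   mysql -u your_username -p < create_employees.sql
```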

- Import data to Hadoop HDFS

- Use the sqoop import command to copy the MySQL table into HDFS.

- Pass your MySQL login through the --username and --password options, replacing the placeholders your_username and your_password with your own credentials.
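
A basic table import could look like the sketch below. The host (`localhost`), database (`sampledb`), table (`employees`), and target directory are assumptions for illustration; the options themselves are standard Sqoop import options. The guard only prevents an error on a machine where Sqoop is not on PATH.

```shell
# Hypothetical names; the table is assumed to exist in MySQL already.
DB_URL="jdbc:mysql://localhost/sampledb"
SOURCE_TABLE="employees"
IMPORT_DIR="/user/hadoop/employees"

import_table() {
  sqoop import \
    --connect "$DB_URL" \
    --username your_username \
    --password your_password \
    --table "$SOURCE_TABLE" \
    --target-dir "$IMPORT_DIR" \
    -m 1
}

if command -v sqoop >/dev/null 2>&1; then
  import_table
else
  echo "sqoop not found on PATH; skipping import"
fi
```

Here `-m 1` runs a single map task, so the output lands in one part file. Replacing `--password your_password` with `-P` makes Sqoop prompt for the password instead of exposing it on the command line.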

- Import Verification

- Check that the data was loaded into HDFS by listing the target directory and viewing the part files that Sqoop generated.
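
A quick check with the HDFS shell might look like this, assuming the import wrote to the hypothetical directory `/user/hadoop/employees`:

```shell
# Assumed target directory of the import; adjust to your own.
IMPORT_DIR="/user/hadoop/employees"

verify_import() {
  # List the files Sqoop wrote, then print the first few imported rows.
  # The glob is quoted so that HDFS, not the local shell, expands it.
  hdfs dfs -ls "$IMPORT_DIR" &&
    hdfs dfs -cat "$IMPORT_DIR/part-m-*" | head -n 5
}

if command -v hdfs >/dev/null 2>&1; then
  verify_import
else
  echo "hdfs not found on PATH; skipping verification"
fi
```

Map-only imports name their output files part-m-00000, part-m-00001, and so on, one per mapper.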

- Making Use of Imported Data

- The imported data can now be used with Hadoop ecosystem tools. For example, Hive can define an external table on top of the imported files without copying them.
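
As a sketch, the external table definition can be written to a script and run with the Hive CLI. The table name, columns, and HDFS location are hypothetical and must match what was actually imported; the comma delimiter is Sqoop's default field separator for text imports.

```shell
# Write a hypothetical external-table definition to a Hive script.
cat > employees_external.hql <<'HQL'
CREATE EXTERNAL TABLE IF NOT EXISTS employees (
  id INT,
  name STRING,
  salary INT
)
ROW FORMAT DELIMITED
FIELDS TERMINATED BY ','
STORED AS TEXTFILE
LOCATION '/user/hadoop/employees';
HQL

# Run it with the Hive CLI:
#   hive -f employees_external.hql
```

Because the table is external, dropping it in Hive removes only the metadata and leaves the files in HDFS.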

Exporting Data from Hadoop

- Prerequisites

- Before exporting, check that Hadoop and Sqoop are installed and set up correctly on your system. The JDBC driver for your database (in this case, MySQL) must also be available in Sqoop's lib directory.

- Prepare the source data in the Hadoop HDFS.

- Make sure the data you want to export is already in HDFS, is readable by the user running Sqoop, and is stored as delimited records whose fields match the columns of the target table.
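
If the data is not in HDFS yet, staging it could look like the sketch below. The file `workers.csv` and its two rows are hypothetical sample data with an assumed (id, name, salary) layout; the directory `/user/hadoop/export_data` is the export path used in this section's example.

```shell
EXPORT_DIR="/user/hadoop/export_data"

# Two hypothetical comma-separated rows for an (id, name, salary) table.
printf '%s\n' '1,Asha,50000' '2,Ravi,42000' > workers.csv

stage_export_data() {
  # Create the HDFS directory if needed, then upload (overwriting) the file.
  hdfs dfs -mkdir -p "$EXPORT_DIR" &&
    hdfs dfs -put -f workers.csv "$EXPORT_DIR/"
}

if command -v hdfs >/dev/null 2>&1; then
  stage_export_data
else
  echo "hdfs not found on PATH; skipping upload"
fi
```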

- Export Data from Hadoop HDFS to MySQL

- Run the sqoop export command to export the data from HDFS to a MySQL table. The target table must already exist in the database.

- For example, assume you have data in HDFS at the path /user/hadoop/export_data and want to export it to a MySQL table named workers in the sampledb database.
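
Under those assumptions, the export could be sketched as follows. The host `localhost`, the credentials, and the comma field delimiter are assumptions; the path, table, and database names come from the example above.

```shell
DB_URL="jdbc:mysql://localhost/sampledb"
TARGET_TABLE="workers"
EXPORT_DIR="/user/hadoop/export_data"

export_to_mysql() {
  sqoop export \
    --connect "$DB_URL" \
    --username your_username \
    --password your_password \
    --table "$TARGET_TABLE" \
    --export-dir "$EXPORT_DIR" \
    --input-fields-terminated-by ','
}

if command -v sqoop >/dev/null 2>&1; then
  export_to_mysql
else
  echo "sqoop not found on PATH; skipping export"
fi
```

To validate the result, compare the row count in MySQL (for example with `SELECT COUNT(*) FROM workers;`) against the number of records in the HDFS files.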

Conclusion

- Integrating Sqoop with Hadoop involves installing Sqoop, configuring its environment, and adding the JDBC driver for your database.

- Sqoop transfers data between relational databases and Hadoop.

- Sqoop loads SQL query results into HDFS, imports data into Hive or HBase, supports Kerberos authentication, and offers data compression.

- Use the sqoop import command to bring a database table into HDFS, and the sqoop export command to move data from HDFS to a target database.

- Sqoop makes relational data available to Hadoop tools, which supports data management and analysis.

- Organizations can use Sqoop to bring their relational data into Hadoop for processing and insights.