Twitter Data Analysis Using Hadoop

Overview

In the digital age, companies constantly seek insights to make informed decisions, and social media platforms like Twitter generate vast amounts of data waiting to be explored. Twitter Data Analysis Using Hadoop is an exploration of big data analytics with Hadoop, a powerful big data processing framework. We will walk you through extracting, processing, and analyzing Twitter data to gain valuable insights.

What are We Building?

The primary goal of this project is to demonstrate how to use Hadoop and Hive to analyze large volumes of Twitter data.

Pre-requisites

Here's a list of key topics that you should be familiar with, along with links to articles that can help you brush up on these concepts:

- Hadoop: Learn more about Hadoop and how to set up a Hadoop cluster.

- Twitter Data: Get the Twitter data.

- Hive: Get familiar with Hive basics and explore the commands in Hive.

How are we going to build this?

- Data Collection: Get the data from the Twitter API or from the dataset linked in the section above.

- Data Preparation: Clean and preprocess the collected data to remove any missing values or irrelevant information.

- Hadoop Setup: Store the preprocessed data in the Hadoop Distributed File System (HDFS).

- Hive Setup: Create Hive databases and tables to structure the data with an appropriate schema.

- Analysis: Utilize Hive to perform analysis on the Twitter data.

- Interpretation: Interpret the insights derived from the analysis.

- Final Output: Insights on the Twitter data.

Final Output

The following images show the execution of Hive commands to analyze Twitter data in a Hadoop environment.

Requirements

Below is a list of the key requirements for this project:

- Software: Hadoop (HDFS, Hive), Java Development Kit (JDK), Integrated Development Environment (IDE)

- Libraries: Tweepy, NLTK (Natural Language Toolkit)

- Programming Languages: Python and Java

- Data: Twitter Developer Account (for API access), Access to Twitter API (Consumer Key, Consumer Secret, Access Token, Access Token Secret)

- Knowledge: Data preprocessing tools, SQL, Testing frameworks (JUnit), Terminal or Command Prompt

Twitter Data Analysis Using Hadoop

Are you ready to get started with the implementation of the project?

1. Data Extraction and Collection

Data extraction and collection are the first steps in any Twitter data analysis project that uses the Twitter API. In this section, we will walk you through obtaining Twitter API credentials, collecting data with code examples, and then cleaning, preprocessing, and storing that data as a CSV file.

Step 1: Obtaining Twitter API Credentials

To obtain API keys and access tokens, follow these steps:

- Create a Twitter Developer Account: If you don't already have one, go to the Twitter Developer portal and sign in with your Twitter account.

- Create a New App: Click on the Create App button and fill in the required information about your application. This includes a name, description, and a website (you can use a placeholder URL if you don't have a website).

- Generate API Keys and Tokens: Once your app is created, go to the Keys and tokens tab. Here, you will find your API Key (Consumer Key), API Secret Key (Consumer Secret), Access Token, and Access Token Secret. These are the credentials you will need to authenticate your application with the Twitter API.

a. Collecting Data Using Python

Now that you have your credentials, you can use Python to collect Twitter data. We'll use the Tweepy library to fetch tweets based on specific criteria, such as keywords or hashtags.

Install the Tweepy library using pip (pip install tweepy).

Code:

Explanation:

- Set up authentication with the Twitter API using your provided consumer key, consumer secret, access token, and access token secret.

- You specify a search query, which can be a keyword or hashtag of your choice.

- The code initializes an empty list called tweets_data to store the collected Twitter data. It then uses Tweepy to search for tweets based on your query and language preferences ('en' for English).

- The preprocess_data() function is called to clean and preprocess the tweet's text. The rating is also returned.
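The steps described above can be sketched as follows. This is a minimal sketch rather than the original listing: it assumes Tweepy 4.x (where the v1.1 search method is named search_tweets), an access level that permits search, and hypothetical names tweet_to_row, collect_tweets, and credentials. The preprocess argument stands in for preprocess_data() and is expected to return the cleaned text and a rating.

```python
def tweet_to_row(tweet, preprocess):
    """Flatten one tweet object into a dict, cleaning its text on the way."""
    cleaned_text, rating = preprocess(tweet.text)
    return {
        "id": tweet.id,
        "text": cleaned_text,
        "rating": rating,
        "retweets": tweet.retweet_count,
        # Assumption: reply counts may be missing from the search payload,
        # so fall back to 0 when the attribute is absent.
        "comments": getattr(tweet, "reply_count", 0),
        "likes": tweet.favorite_count,
    }


def collect_tweets(query, preprocess, credentials, limit=100):
    """Search English tweets matching `query` and return cleaned rows."""
    import tweepy  # imported lazily so tweet_to_row works without Tweepy

    auth = tweepy.OAuthHandler(
        credentials["consumer_key"], credentials["consumer_secret"]
    )
    auth.set_access_token(
        credentials["access_token"], credentials["access_token_secret"]
    )
    api = tweepy.API(auth)

    tweets_data = []
    cursor = tweepy.Cursor(api.search_tweets, q=query, lang="en")
    for tweet in cursor.items(limit):
        tweets_data.append(tweet_to_row(tweet, preprocess))
    return tweets_data
```

Keeping the row-building logic in its own function makes it possible to try it on stand-in objects before spending any API quota.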

b. Clean and Preprocess Data

After collecting the raw data, we will need to clean and preprocess it.

Code:

Explanation:

The code manages the NLTK resources used for tokenization and removes stopwords and punctuation marks from the text. It also calculates a simple rating for each tweet.
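A minimal sketch of such a preprocessing function is shown below. Several parts are assumptions: the source does not say how the rating is computed, so the positive and negative word lists here are hypothetical, and a tiny built-in stopword set is used as a fallback so the sketch runs without NLTK. The load_nltk_resources() helper shows how NLTK's tokenizer and stopword list could be plugged in instead.

```python
import string

# Hypothetical fallback so the sketch runs without NLTK installed.
FALLBACK_STOPWORDS = {"a", "an", "and", "i", "is", "it", "of", "the", "to"}
# Hypothetical word lists; the rating rule itself is an assumption.
POSITIVE_WORDS = {"good", "great", "love"}
NEGATIVE_WORDS = {"bad", "hate", "poor"}


def load_nltk_resources():
    """Return (tokenizer, stopword set) backed by NLTK (pip install nltk)."""
    import nltk
    from nltk.corpus import stopwords
    from nltk.tokenize import word_tokenize

    # Newer NLTK releases may also require the "punkt_tab" resource.
    nltk.download("punkt", quiet=True)
    nltk.download("stopwords", quiet=True)
    return word_tokenize, set(stopwords.words("english"))


def preprocess_data(text, tokenize=str.split, stop_words=FALLBACK_STOPWORDS):
    """Return (cleaned_text, rating) for one tweet."""
    # Drop punctuation first, then lowercase and split into tokens.
    no_punct = text.translate(str.maketrans("", "", string.punctuation))
    tokens = [t for t in tokenize(no_punct.lower()) if t not in stop_words]
    # Rating: positive-word hits minus negative-word hits (assumed rule).
    positives = sum(t in POSITIVE_WORDS for t in tokens)
    negatives = sum(t in NEGATIVE_WORDS for t in tokens)
    return " ".join(tokens), positives - negatives
```

To use NLTK, call tokenize, stop_words = load_nltk_resources() once and pass both to preprocess_data(). Because punctuation is stripped, the cleaned text contains no commas, which keeps it safe for a comma-delimited file.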

c. Store Data as CSV

To store the cleaned and preprocessed data, you can save it as a CSV file using Python's csv module.

Code:

Explanation:

- fieldnames is the list of column names used in the CSV file.

- We open a new CSV file named twitter_data.csv for writing (w) and create a DictWriter object called writer that writes data to it.

- We then write the header row containing the field names, followed by the actual data.
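The steps above can be sketched with the standard csv module. The column names in FIELDNAMES are assumptions inferred from the fields the analysis section refers to (tweet ID, rating, retweets, comments, likes), and save_tweets_csv is a hypothetical helper name.

```python
import csv

# Assumed column names; adjust them to the fields you actually collected.
FIELDNAMES = ["id", "text", "rating", "retweets", "comments", "likes"]


def save_tweets_csv(rows, path="twitter_data.csv"):
    """Write a list of dicts to `path`, header row first."""
    # newline="" stops the csv module from adding blank lines on Windows.
    with open(path, "w", newline="", encoding="utf-8") as csv_file:
        writer = csv.DictWriter(csv_file, fieldnames=FIELDNAMES)
        writer.writeheader()
        writer.writerows(rows)
    return path
```

Each dict passed in must use exactly these keys; DictWriter raises a ValueError if a row contains a key that is not listed in fieldnames.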

You can also download the data directly from the link in the Pre-requisites section.

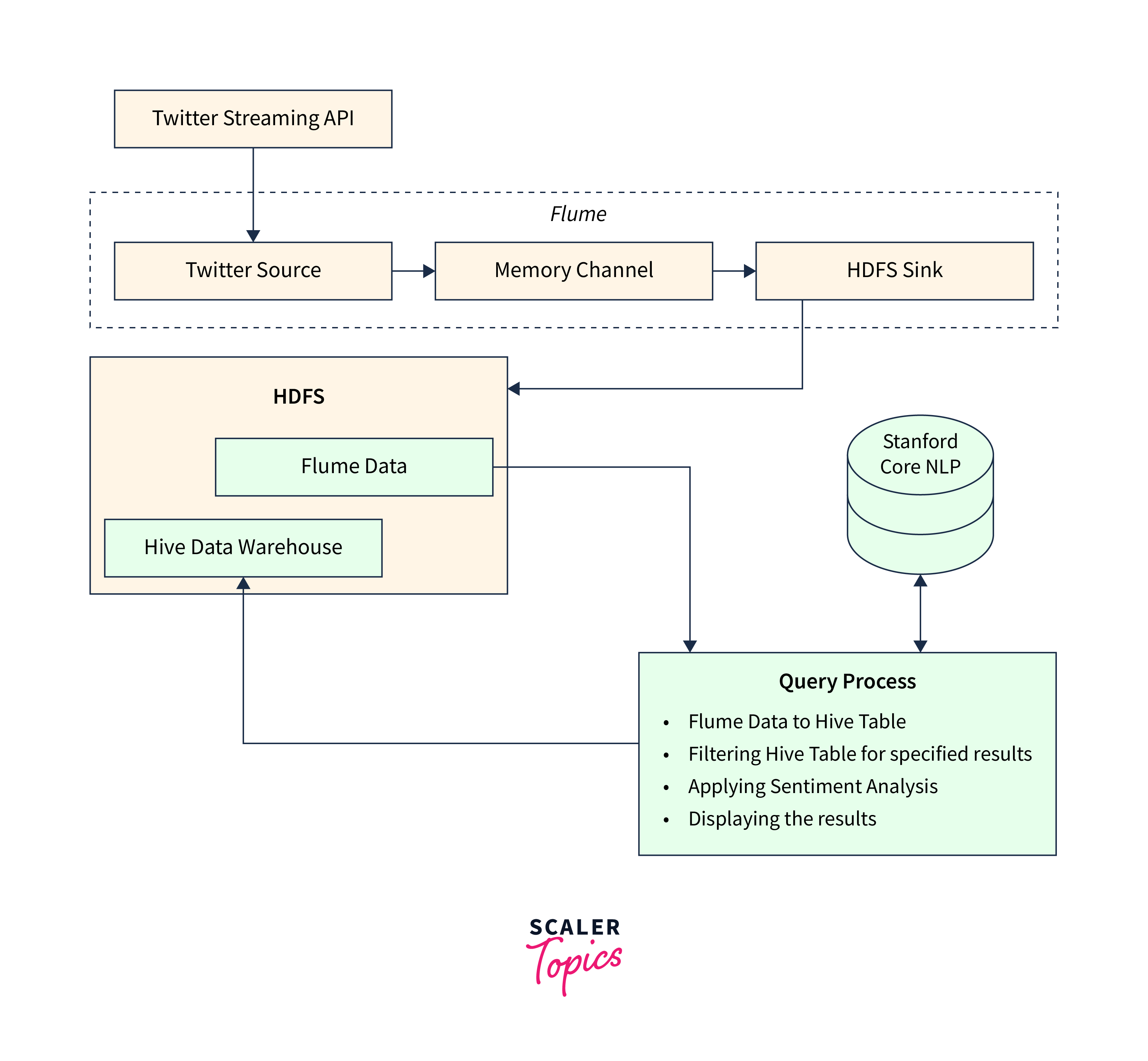

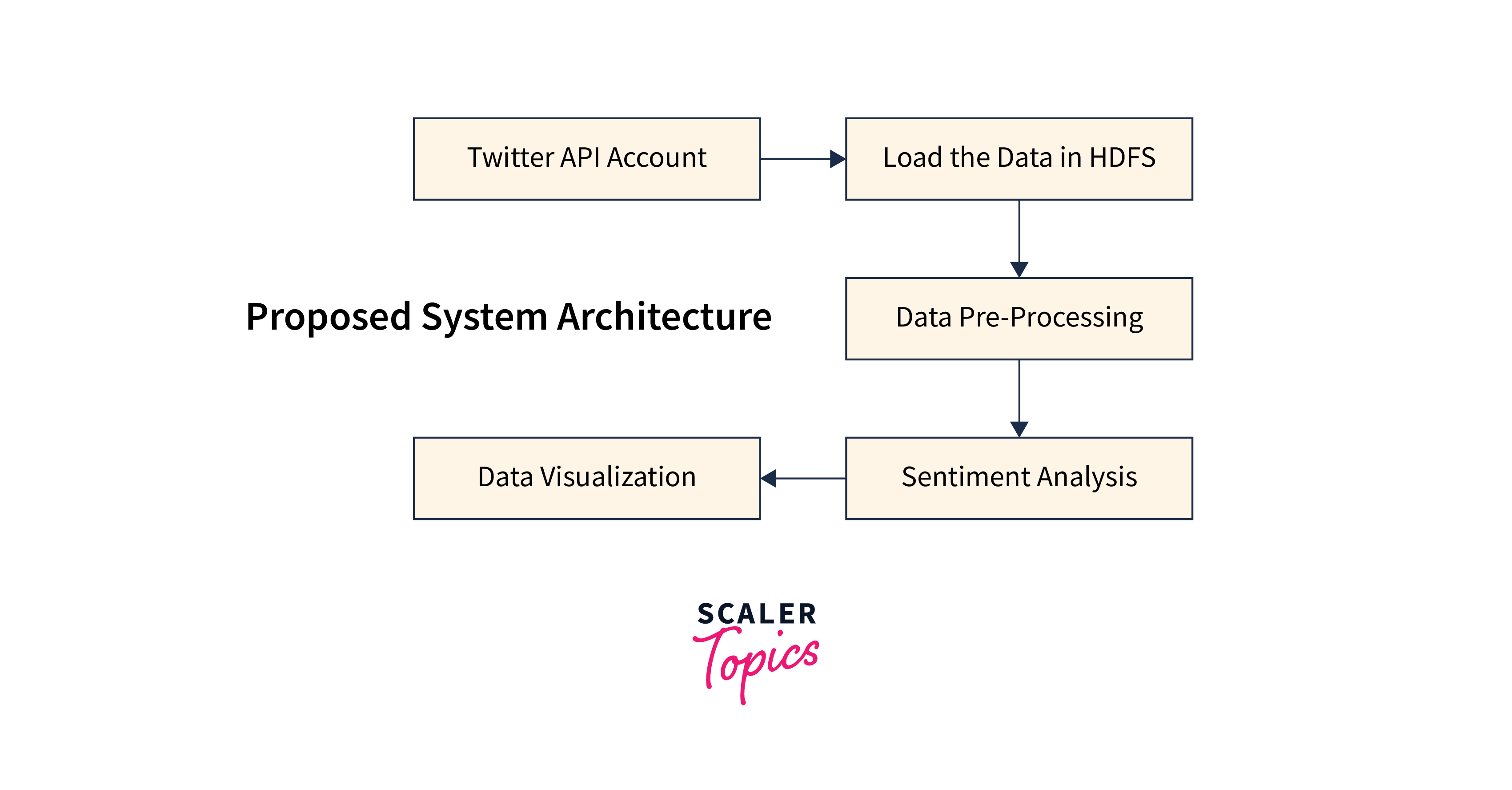

2. Architecture

The data flow within this architecture typically follows these steps:

- Data is collected from Twitter through the Twitter API or from a dataset.

- The collected data is ingested into the Hadoop cluster and stored in HDFS.

- Data preprocessing is performed to clean, transform, and structure the data.

- Hive databases and tables are created to represent the data schema.

- Hive queries are written to analyze the data and derive insights.

- Testing and validation ensure the accuracy of the analysis.

3. Load data to HDFS

To load data into the Hadoop Distributed File System (HDFS), follow these steps:

- Access the Hadoop cluster where you want to load the data. Ensure you have the necessary permissions and access rights.

- Use the hdfs dfs -put command to transfer data from your local file system to HDFS, for example hdfs dfs -put twitter_data.csv /test/twitter_data.csv. Here, twitter_data.csv is the local source path and /test/twitter_data.csv is the HDFS destination path.

- Confirm that the data has been successfully transferred by listing the contents of the target HDFS directory, for example with hdfs dfs -ls /test/.

The listing command should display the files and directories within the specified HDFS directory (/test/).
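If you prefer to drive the upload from Python, the same steps can be sketched with subprocess. This assumes the hdfs command-line client is on your PATH; the helper names are hypothetical, and the extra -mkdir -p step is an addition that creates the target directory if it does not exist.

```python
import posixpath
import subprocess


def hdfs_put_command(local_path, hdfs_path):
    """Build the command that copies a local file into HDFS."""
    return ["hdfs", "dfs", "-put", local_path, hdfs_path]


def hdfs_ls_command(hdfs_dir):
    """Build the command that lists an HDFS directory."""
    return ["hdfs", "dfs", "-ls", hdfs_dir]


def load_to_hdfs(local_path="twitter_data.csv", hdfs_dir="/test"):
    """Upload the file, then return the directory listing as text."""
    # -mkdir -p creates the target directory if it does not exist yet.
    subprocess.run(["hdfs", "dfs", "-mkdir", "-p", hdfs_dir], check=True)
    target = posixpath.join(hdfs_dir, posixpath.basename(local_path))
    subprocess.run(hdfs_put_command(local_path, target), check=True)
    listing = subprocess.run(
        hdfs_ls_command(hdfs_dir), check=True, capture_output=True, text=True
    )
    return listing.stdout
```

With check=True, a failed upload (for example, when the destination file already exists) raises CalledProcessError instead of passing silently.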

4. Hive Databases

Step 1: Access Hive

Open the Hive shell on your Hadoop cluster by running the hive command in a terminal.

Step 2: Create a Hive Database

Use the CREATE DATABASE command to create a new Hive database, for example CREATE DATABASE twitter_data_db; where twitter_data_db is the name of the database.

Step 3: Use the Database

Switch to the newly created database using the USE command, for example USE twitter_data_db;

Step 4: Create Hive Tables

Create Hive tables to represent the data schema. Define the table name, column names, and their data types using the CREATE TABLE command.

Here, twitter_data is the name of the table, and the column names and data types follow the processed data. We specify that the fields are delimited by commas (,), as is typical for CSV files, and that the table is stored as a text file in Hive.
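The database and table setup described in Steps 2 to 4 can be sketched as a HiveQL script held in a Python string and passed to the Hive command line with hive -e. The schema is an assumption inferred from the columns the analysis section mentions (tweet ID, rating, retweets, comments, likes) plus a text column; hive_command and run_hive are hypothetical helpers.

```python
import subprocess

# Assumed schema; adjust column names and types to your processed data.
CREATE_STATEMENTS = """
CREATE DATABASE IF NOT EXISTS twitter_data_db;
USE twitter_data_db;
CREATE TABLE IF NOT EXISTS twitter_data (
    id BIGINT,
    text STRING,
    rating INT,
    retweets INT,
    comments INT,
    likes INT
)
ROW FORMAT DELIMITED
FIELDS TERMINATED BY ','
STORED AS TEXTFILE
TBLPROPERTIES ('skip.header.line.count'='1');
"""


def hive_command(hiveql):
    """Build a `hive -e` invocation that runs the given statements."""
    return ["hive", "-e", hiveql]


def run_hive(hiveql):
    """Run HiveQL through the Hive CLI and return its standard output."""
    result = subprocess.run(
        hive_command(hiveql), check=True, capture_output=True, text=True
    )
    return result.stdout
```

The skip.header.line.count property tells Hive to ignore the CSV header row. Note that a plain delimited text table does not interpret CSV quoting, so any commas left inside the tweet text would split a row into extra fields.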

Step 5: Load Data into Hive Table

After creating the table, you can load data into it. Because our data file is already in HDFS, we can load it into the table with the LOAD DATA command, where /test/twitter_data.csv is the path to the file on HDFS.

We can also insert data into the table from another table or from a query result, using the INSERT INTO command.
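Both loading options can be sketched as small Python helpers that build the HiveQL text, which you can then paste into the Hive shell. The helper names and the staging table twitter_data_staging are hypothetical; the statement forms themselves (LOAD DATA INPATH and INSERT INTO TABLE ... SELECT) are standard HiveQL.

```python
def load_statement(hdfs_path, table="twitter_data"):
    """HiveQL that loads a file already stored in HDFS into `table`."""
    return f"LOAD DATA INPATH '{hdfs_path}' INTO TABLE {table};"


def insert_from_statement(source_table, table="twitter_data"):
    """HiveQL that appends every row of `source_table` to `table`."""
    return f"INSERT INTO TABLE {table} SELECT * FROM {source_table};"


if __name__ == "__main__":
    print(load_statement("/test/twitter_data.csv"))
    # twitter_data_staging is a hypothetical table with the same columns.
    print(insert_from_statement("twitter_data_staging"))
```

Keep in mind that LOAD DATA INPATH moves the file from its HDFS location into the table's storage directory, so /test/twitter_data.csv will no longer appear under /test/ afterwards.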

Step 6: Verify Table Creation

Verify that the table has been created and the data has been loaded by running a SELECT query, for example SELECT * FROM twitter_data LIMIT 10;

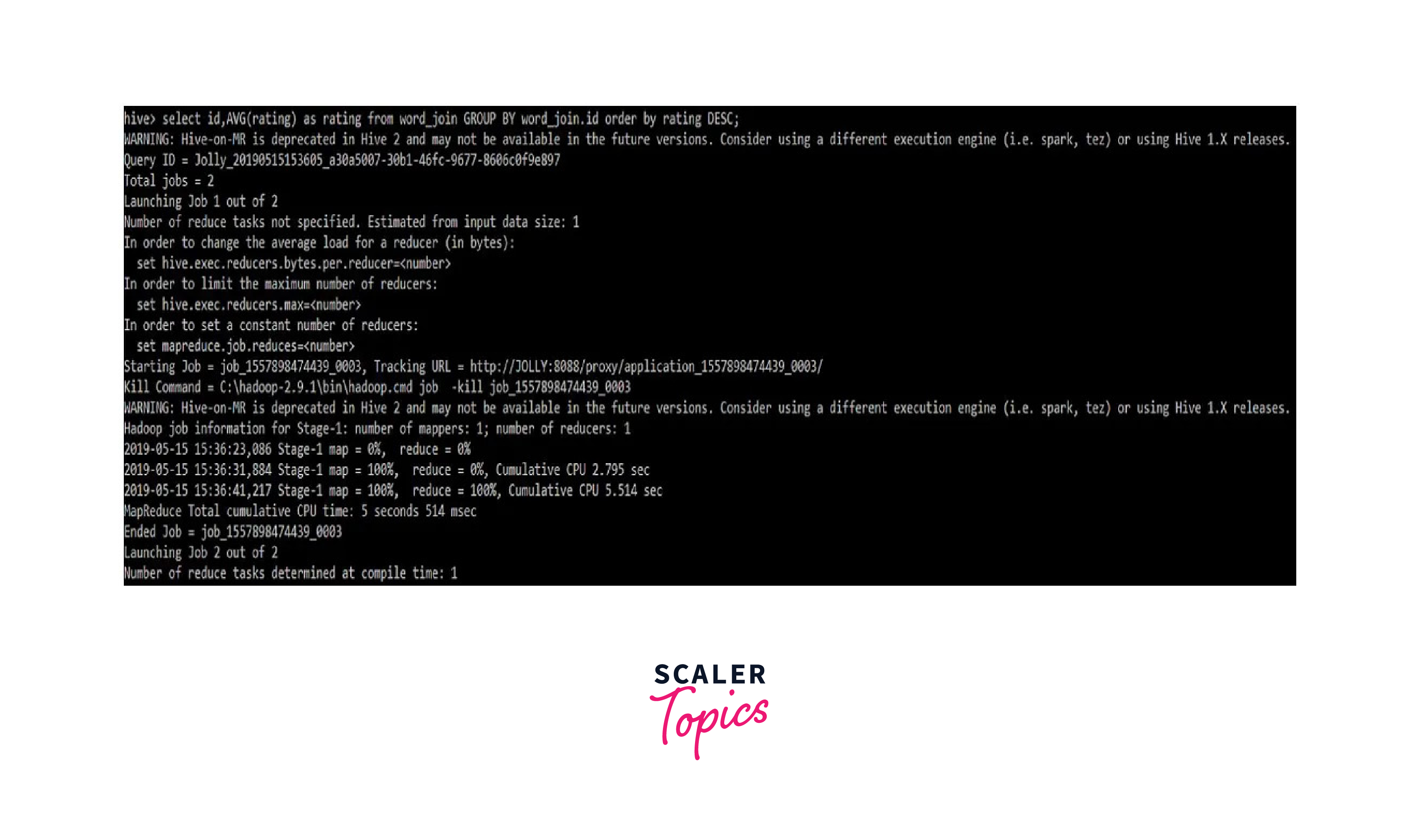

5. Analysis with Hive Command

As we have already created a Hive table named twitter_data, let us see some example Hive commands for analysis:

1. Count the Total Number of Tweets:

2. Average Ratings and Engagement:

3. Hive Command to Get ID and Average Ratings:
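The three analyses listed above can be sketched as the queries below. The column names (id, rating, retweets, comments, likes) are assumptions about the table schema. Because the queries use only standard SQL, the sketch also includes a dry run against an in-memory SQLite table so they can be checked locally; SQLite is swapped in purely for that check and is not part of the Hadoop setup.

```python
import sqlite3

# Paste these into the Hive shell (with a trailing semicolon) to run them.
ANALYSIS_QUERIES = {
    "total_tweets": "SELECT COUNT(*) AS total_tweets FROM twitter_data",
    "averages": (
        "SELECT AVG(rating) AS avg_rating, AVG(retweets) AS avg_retweets, "
        "AVG(comments) AS avg_comments, AVG(likes) AS avg_likes "
        "FROM twitter_data"
    ),
    "rating_by_id": (
        "SELECT id, AVG(rating) AS avg_rating FROM twitter_data "
        "GROUP BY id ORDER BY avg_rating DESC"
    ),
}


def dry_run(rows):
    """Run every query against an in-memory SQLite copy of `rows`."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE twitter_data (id INTEGER, text TEXT, rating INTEGER, "
        "retweets INTEGER, comments INTEGER, likes INTEGER)"
    )
    conn.executemany("INSERT INTO twitter_data VALUES (?, ?, ?, ?, ?, ?)", rows)
    results = {
        name: conn.execute(sql).fetchall()
        for name, sql in ANALYSIS_QUERIES.items()
    }
    conn.close()
    return results
```

Calling dry_run() with a handful of made-up rows is a quick way to confirm that the queries parse and return the expected shape before running them on the cluster.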

6. Results

1. Count the Total Number of Tweets:

This command calculates the total number of tweets in the dataset.

2. Average Ratings and Engagement:

This command calculates the average ratings, retweets, comments, and likes across all tweets in the dataset.

3. Hive Command to Get ID and Average Ratings:

This Hive command calculates the average rating for each tweet (Tweet ID) in the dataset and arranges the results in descending order based on the average rating.

Testing

Testing is a crucial phase in any data analysis project to ensure the accuracy, reliability, and validity of your results.

- Define Testing Objectives and Criteria

- Prepare Test Data

- Perform testing of individual components or queries within your analysis

- Verify the Consistency of Analysis Results

- Test Performance and Scalability
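As one example of a data consistency check, the sketch below scans the CSV file for rows whose numeric fields would not parse as integers, which Hive would otherwise load as NULL values. The column names and the find_bad_rows helper are assumptions for illustration.

```python
import csv

# Assumed names of the columns that should hold integers.
NUMERIC_COLUMNS = ("id", "rating", "retweets", "comments", "likes")


def find_bad_rows(csv_file):
    """Return (line_number, reason) pairs for rows with non-integer fields."""
    reader = csv.DictReader(csv_file)
    problems = []
    # Data starts on line 2 because line 1 holds the header.
    for line_number, row in enumerate(reader, start=2):
        for column in NUMERIC_COLUMNS:
            value = row.get(column)
            try:
                int(value)
            except (TypeError, ValueError):
                problems.append(
                    (line_number, f"{column} is not an integer: {value!r}")
                )
    return problems
```

Pass it an open file, for example find_bad_rows(open("twitter_data.csv", encoding="utf-8", newline="")), and fix or drop any reported rows before loading the file into HDFS.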

What’s Next

Now that you've completed the Twitter Data Analysis project using Hadoop and Hive, here are a few ideas for what you can do next to further enhance your skills:

- Machine Learning Integration: Extend your analysis by incorporating machine learning models and techniques using tools like Apache Spark MLlib or scikit-learn in Python.

- Real-time Data Processing: Explore technologies like Apache Kafka and Apache Flink to process and analyze data in real time for immediate insights.

- Automate Data Pipelines: Build data pipelines to automate the collection, preprocessing, and analysis of data. Tools like Apache NiFi and Apache Airflow can help you create robust and scalable data workflows.

Conclusion

- Twitter Data Analysis using Hadoop is a powerful approach to derive insights from large-scale social media data.

- The project involves data extraction, preprocessing, and analysis within a Hadoop ecosystem.

- The project requires a Hadoop cluster, Hadoop ecosystem tools, and an understanding of Hive for data warehousing.

- Data extraction involves using the Twitter API or data sets.

- Data preprocessing includes cleaning, handling missing values, and structuring data for analysis.

- Hive databases and tables are created to define the data schema and analysis is performed using Hive queries.