Locking Down Apache Kafka: A Comprehensive Guide to Kafka Security

Overview

Apache Kafka is an open-source distributed event streaming platform designed for high-performance data pipelines, analytics, and mission-critical applications. Securing it involves SSL/TLS encryption, authentication (including certificate-based authentication), authorization, monitoring, and hardened cluster configuration, which together support compliance and help prevent cyber threats.

Introduction

Brief Overview of Apache Kafka

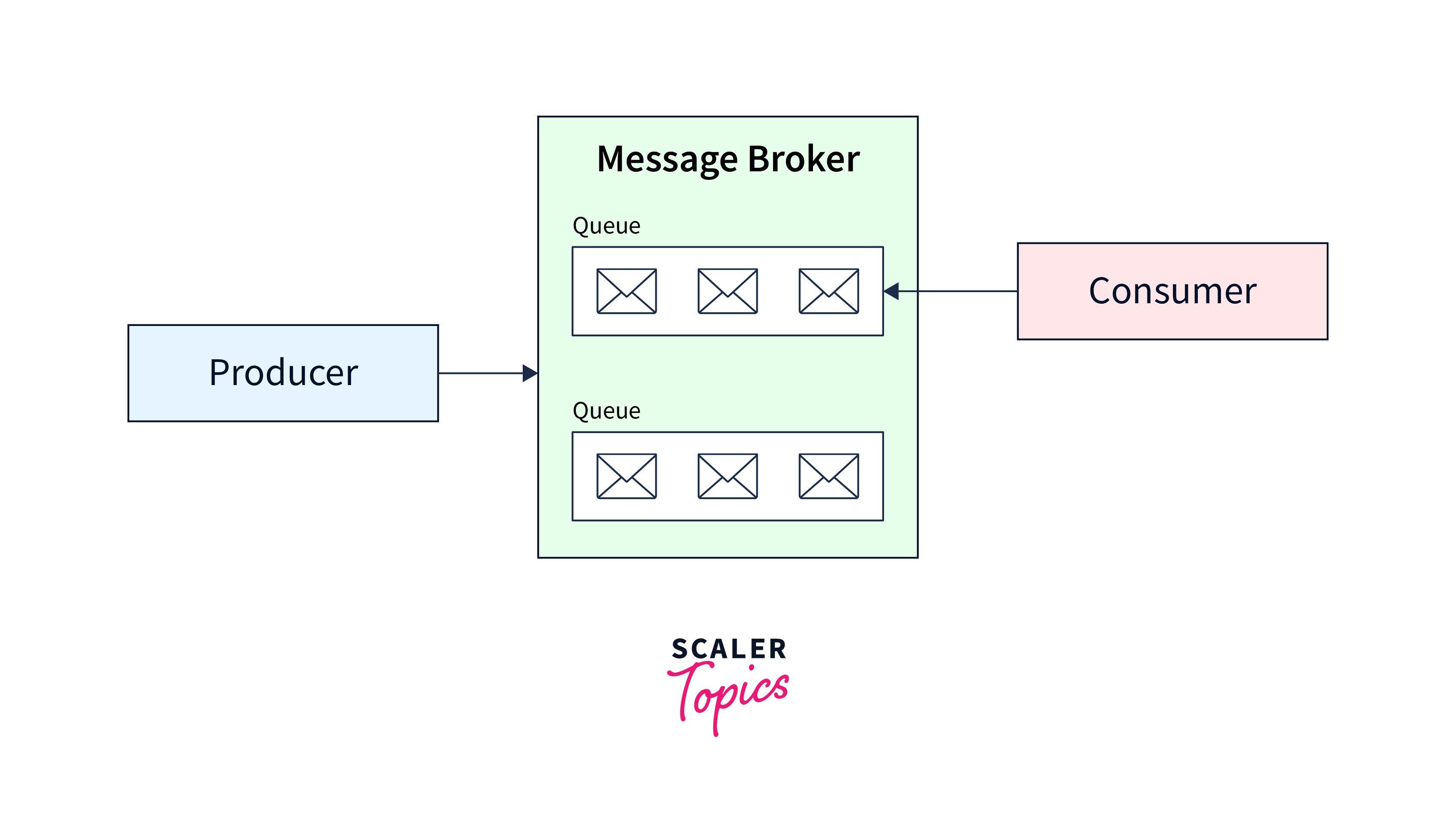

Apache Kafka is an open-source distributed event streaming platform for high-performance data pipelines, analytics, data integration, and mission-critical applications. It is built on a publish/subscribe messaging model.

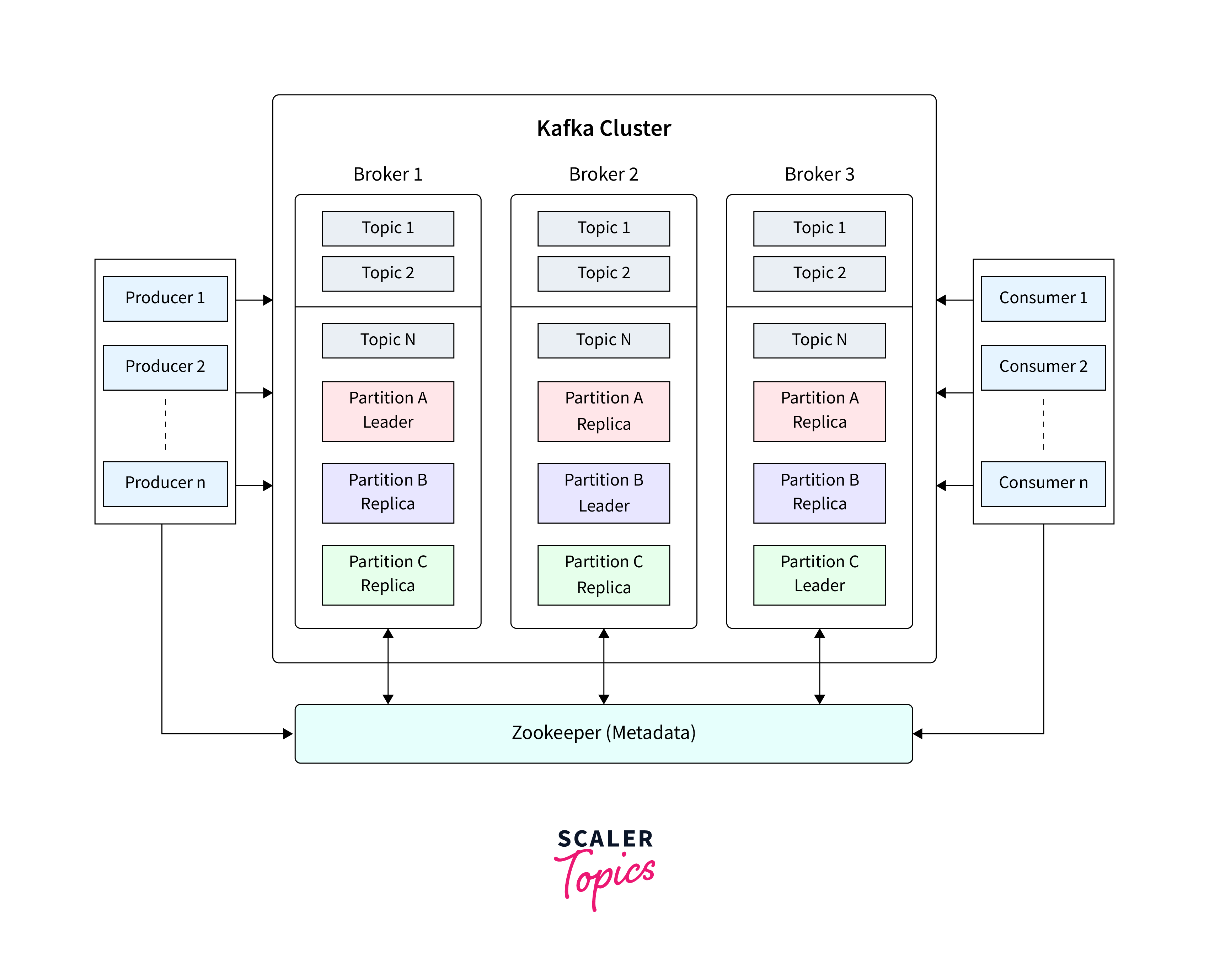

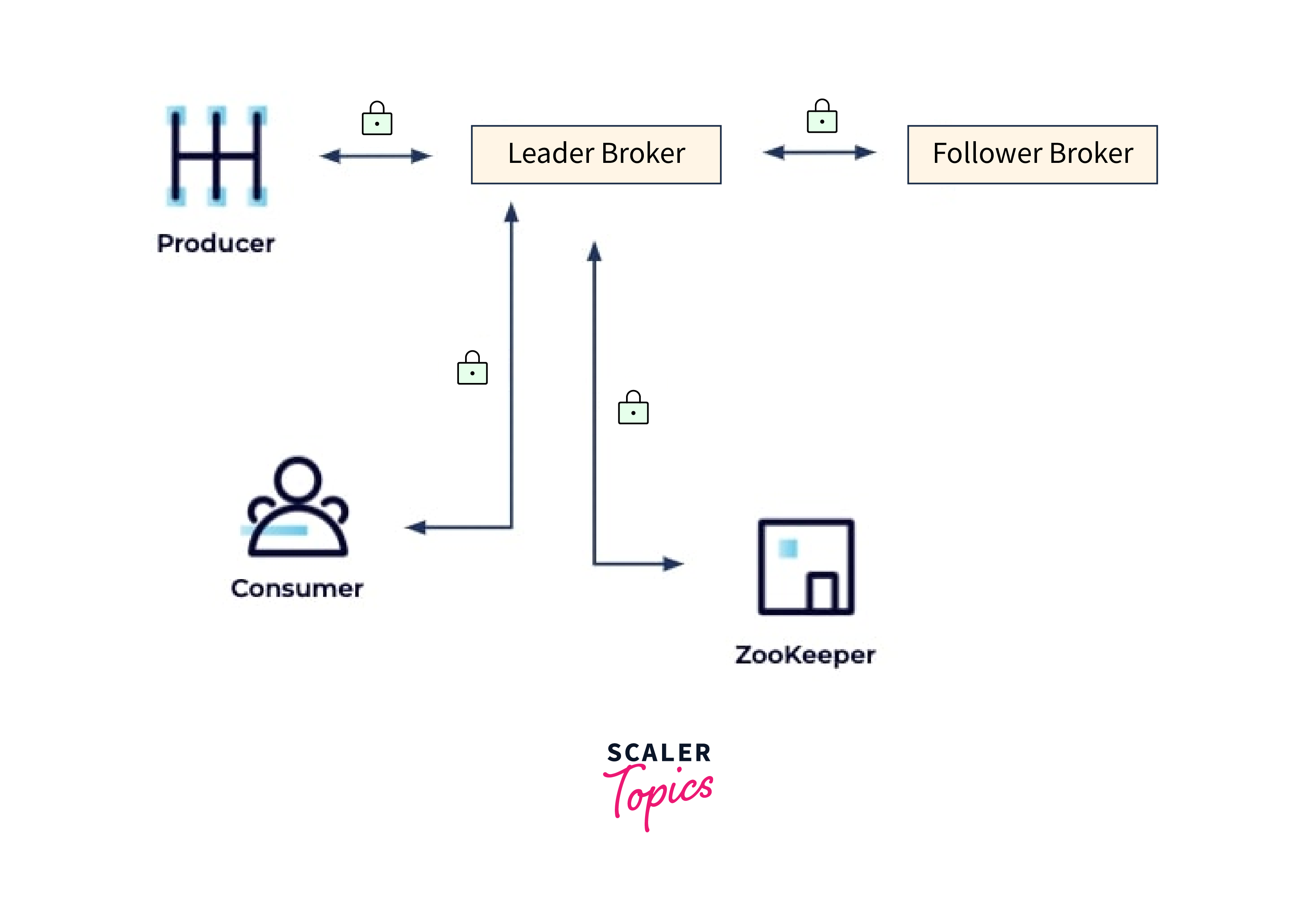

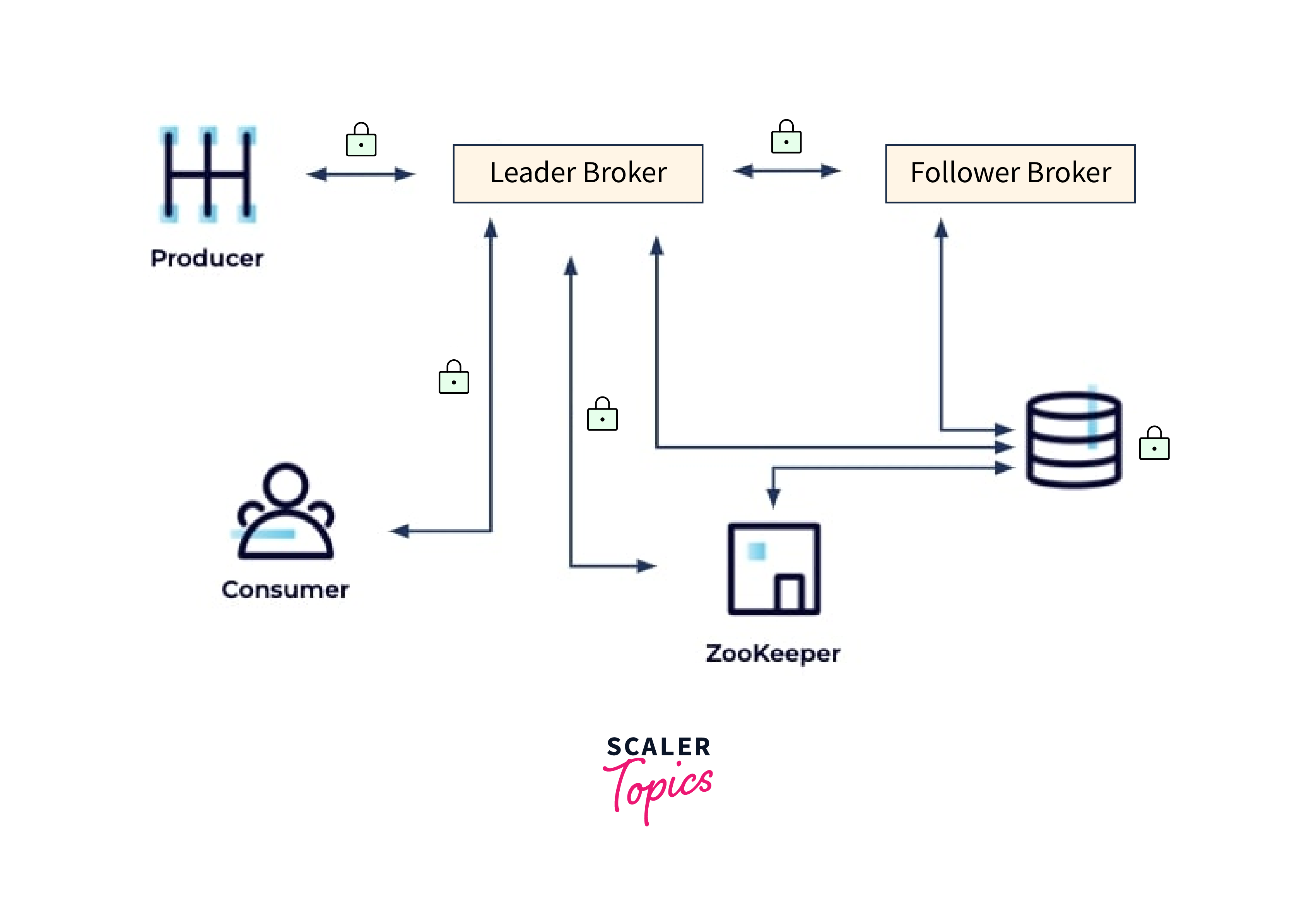

Applications structure their Kafka deployments in different ways, but every robust Kafka architecture is built from the same fundamental components: the Kafka cluster, producers, consumers, brokers, topics, partitions, and ZooKeeper.

Importance of Securing Kafka

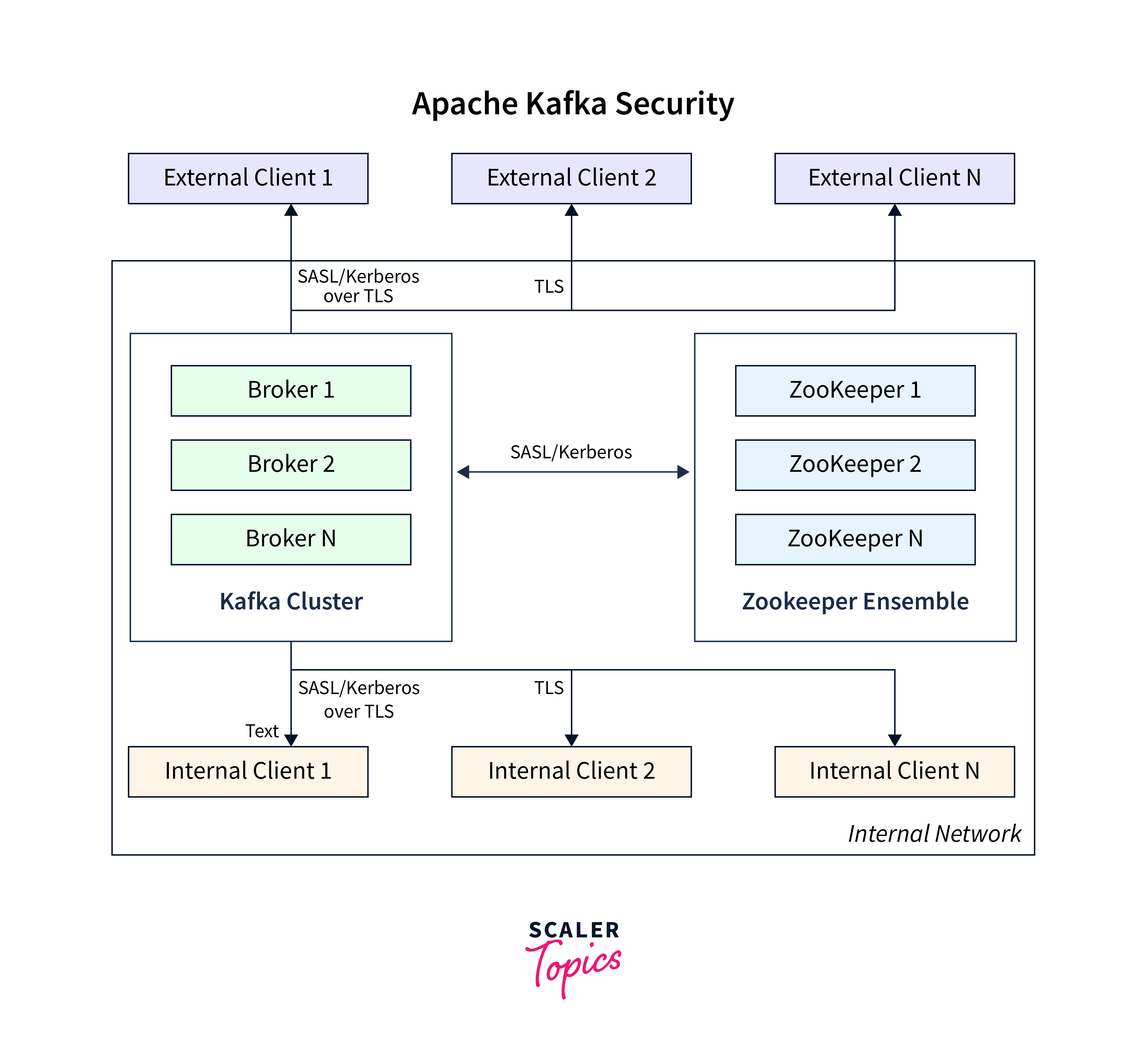

Apache Kafka acts as an internal intermediate layer that lets your back-end systems share real-time data flows through Kafka topics. In a typical Kafka configuration, any user or application can publish messages to any topic and consume data from any topic. You need to add security once your business moves to a shared tenancy model in which several teams and applications use the same Kafka cluster, or once the cluster begins ingesting sensitive information.

Currently, the following security mechanisms are supported:

- Authentication of connections to brokers from clients (producers and consumers), other brokers, and tools, using either SSL or SASL. Kafka supports the following SASL mechanisms:

- SASL/GSSAPI (Kerberos) - beginning with version 0.9.0.0

- SASL/PLAIN - beginning with version 0.10.0.0

- SASL/SCRAM-SHA-256 and SASL/SCRAM-SHA-512 - beginning with version 0.10.2.0

- SASL/OAUTHBEARER - beginning with version 2.0

- Encryption of data transferred between brokers and clients, between brokers, or between brokers and tools, using SSL. (Note that enabling SSL degrades performance; the extent depends on the CPU type and the JVM implementation.)

- Authentication of broker connections to ZooKeeper

- Client read/write authorization

- Pluggable authorization, with support for integrating external authorization services.

Understanding Kafka and Kafka Security

What is Apache Kafka?

Apache Kafka is a distributed streaming platform that was originally created at LinkedIn and later contributed to the Apache Software Foundation. The project, written in Scala and Java, aims to provide a unified, high-throughput, low-latency platform for handling real-time data feeds. A streaming platform, by definition, has three main capabilities:

- Publish and subscribe to streams of records, much like a message queue or enterprise messaging system.

- Store streams of records in a fault-tolerant, durable manner.

- Process record streams as they come in.

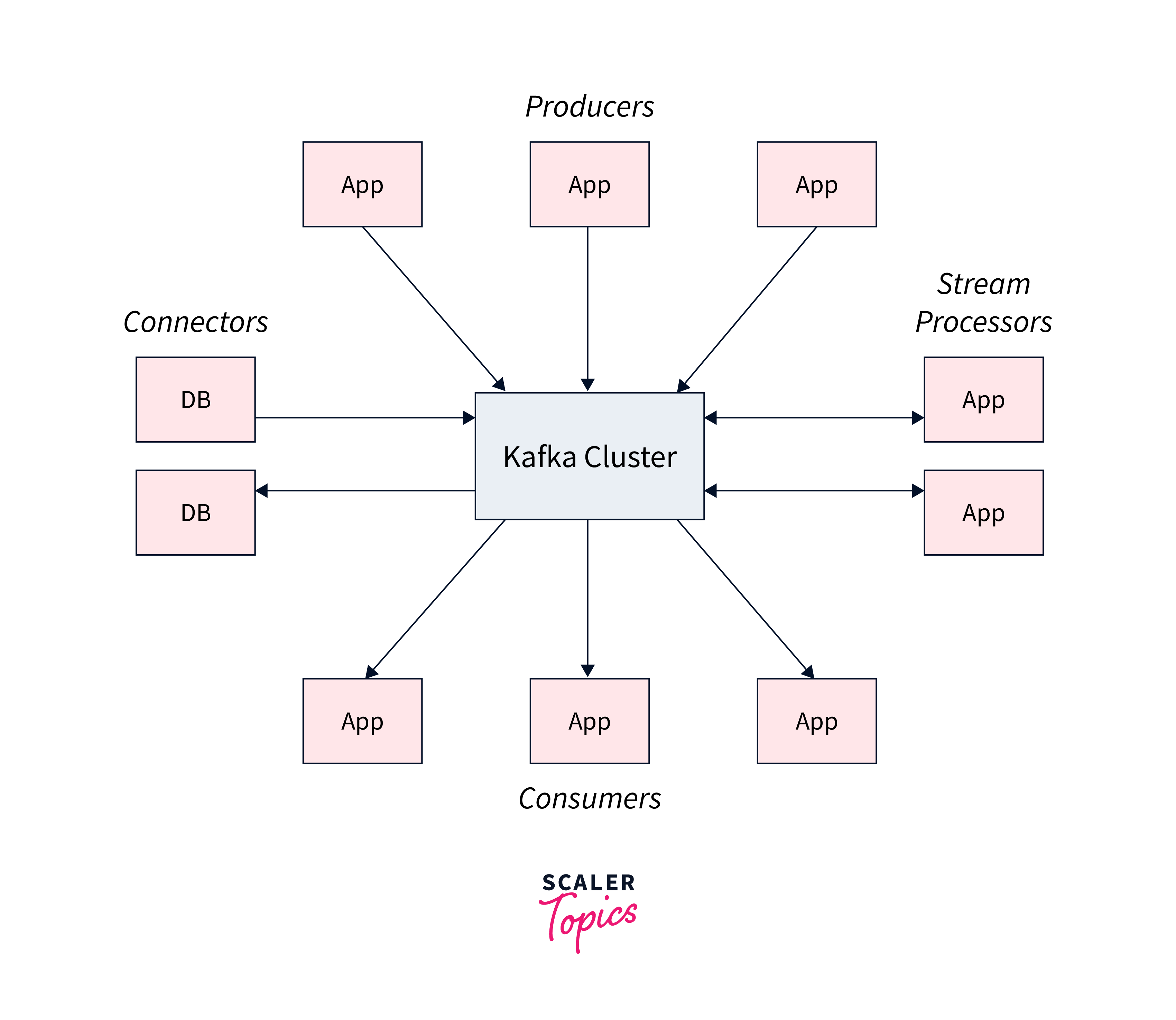

Kafka provides five key APIs for communication with topics:

- Producer API: enables the publication of a stream of records to one or more topics.

- Consumer API: allows you to subscribe to one or more topics and handle the stream of records that are sent to you.

- Streams API: allows an application to act as a stream processor, consuming an input stream from one or more topics and producing an output stream to one or more output topics, effectively transforming input streams into output streams.

- Connector API: allows you to build and run reusable producers or consumers that connect Kafka topics to existing applications or data systems.

- Admin API: lets you manage and inspect Kafka topics, brokers, and other objects.

Basics of Kafka Security and Its Significance

Regularly reviewing and updating Kafka security measures helps organizations protect their Kafka infrastructure, maintain data integrity, comply with industry regulations and best practices, and address new vulnerabilities and threats. Kafka security rests on three building blocks:

- Authentication: Kafka provides several mechanisms for verifying the identities of clients and servers. These include SSL/TLS-based authentication and SASL (Simple Authentication and Security Layer), which can integrate with external authentication providers such as Kerberos.

- Authorization: After authenticating clients, Kafka uses authorization to control access to topics, consumer groups, and operations. Access control lists (ACLs) define what each user or group may do; some Kafka distributions additionally offer role-based access control (RBAC).

- Encryption: Kafka supports encryption to keep data confidential while in transit. SSL/TLS can be used to encrypt client-broker communication, preventing unauthorized data interception.

Need for Kafka Security

Apache Kafka essentially serves as an internal intermediate layer, allowing back-end systems to exchange real-time data streams via Kafka topics. In a typical Kafka deployment, any user or application can publish messages to and consume data from any topic.

Kafka security must be put in place when the organization adopts a shared tenancy model in which several teams and applications use the same Kafka cluster, or when the cluster begins to carry critical and confidential information.

Kafka Authentication

- Kafka supports SSL/TLS and SASL for secure communication between clients and brokers.

- SSL/TLS encrypts data in transit, ensuring confidentiality and authenticating Kafka brokers and clients.

- Best practices for Kafka authentication include using secure SASL mechanisms like SCRAM, certificate-based authentication, and rotating credentials.

- Strong password policies and encryption of sensitive information in configuration files enhance security.

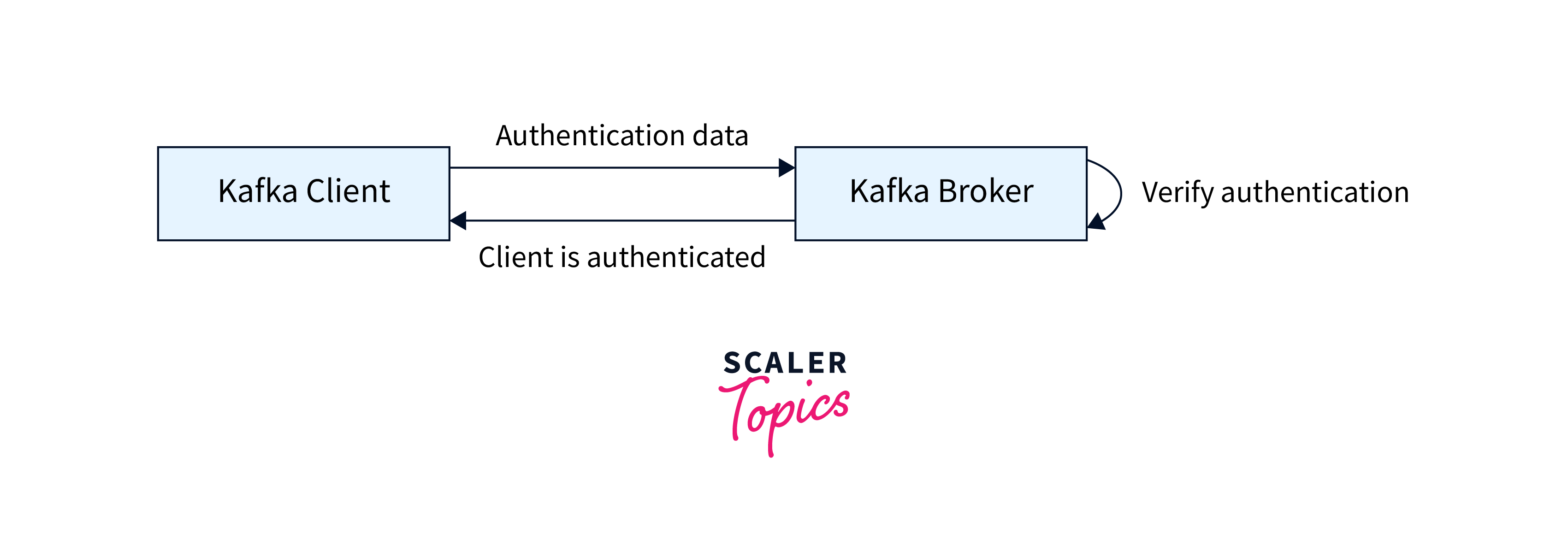

Understanding Authentication in Kafka

Authentication is an important part of safeguarding communication between clients and brokers in a Kafka cluster. Kafka offers multiple authentication options that let you control who may access topics and other resources and perform operations on them.

SSL and SASL are the two methods for authenticating your Kafka clients to your brokers. Let's go through both of them.

- SSL authentication: Kafka supports SSL/TLS for secure communication between clients and brokers, and certificates can be used to verify each side's identity.

- Simple Authentication and Security Layer (SASL): a framework for adding authentication to network protocols in a flexible and extensible manner.

Implementing SSL/TLS and SASL Authentication

Transport Layer Security (SSL/TLS):

Kafka supports SSL/TLS for secure communication between clients and brokers. SSL/TLS encrypts data in transit to keep it confidential, and it can also authenticate Kafka brokers and clients through their certificates.

Simple Authentication and Security Layer (SASL):

SASL is a framework for adding authentication to network protocols in a flexible and extensible manner. Kafka supports SASL as a pluggable authentication layer, which means that different SASL mechanisms can be used for authentication. The following are the most common SASL mechanisms used with Kafka:

- SASL/PLAIN: This is a standard username/password pair. With the default implementation, the usernames and passwords must be stored on the Kafka brokers in advance, and any change requires a rolling restart. This is inconvenient, and it is not a recommended form of security. If you use SASL/PLAIN, be sure to enable SSL encryption so that credentials are not sent over the network in plaintext.

- SASL/SCRAM: This is a username/password combination used with a salted challenge-response exchange to increase security. Furthermore, the username and password hashes are stored in ZooKeeper, which lets you add or change users without restarting brokers. If you use SASL/SCRAM, be sure to enable SSL encryption so that the authentication exchange is not exposed on the network.

- SASL/GSSAPI (Kerberos): This is based on the Kerberos ticket mechanism, a very secure method of authentication. The most common Kerberos implementation is Microsoft Active Directory. SASL/GSSAPI is an excellent choice for large enterprises because it lets them manage security from within their Kerberos server. SASL/GSSAPI connections can also optionally be encrypted with SSL. Setting up Kafka with Kerberos is the most complicated of these approaches, but it is well worth it in the end.
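To make the idea of salted password hashes concrete, the sketch below derives the values a SCRAM server keeps for a user, following the derivation defined in RFC 5802 (SaltedPassword, ClientKey, StoredKey, ServerKey). It uses only Python's standard library and is not Kafka's own code; the function name, the sample password, and the iteration count of 4096 are assumptions chosen for illustration, and the password normalization step (SASLprep) from the RFC is left out.

```python
import hashlib
import hmac
import os


def derive_scram_credential(password, salt, iterations=4096, digest="sha256"):
    """Derive the values a SCRAM server stores instead of the password (RFC 5802)."""
    # SaltedPassword := Hi(password, salt, i); Hi is PBKDF2 with HMAC as the PRF
    salted = hashlib.pbkdf2_hmac(digest, password.encode("utf-8"), salt, iterations)
    # ClientKey := HMAC(SaltedPassword, "Client Key")
    client_key = hmac.new(salted, b"Client Key", digest).digest()
    # StoredKey := H(ClientKey); the server keeps this, not ClientKey itself
    stored_key = hashlib.new(digest, client_key).digest()
    # ServerKey := HMAC(SaltedPassword, "Server Key")
    server_key = hmac.new(salted, b"Server Key", digest).digest()
    return {
        "salt": salt,
        "iterations": iterations,
        "stored_key": stored_key,
        "server_key": server_key,
    }


if __name__ == "__main__":
    # Hypothetical user password and a random 16-byte salt
    credential = derive_scram_credential("alice-secret", os.urandom(16))
    for name, value in credential.items():
        print(name, value.hex() if isinstance(value, bytes) else value)
```

Because each user gets a random salt, two users with the same password end up with different stored keys, and the stored values alone are not enough to log in as the user without further work by an attacker.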

Best Practices for Kafka Authentication

It is critical to follow best practices when deploying Kafka authentication to maintain the security and integrity of your Kafka cluster. Here are several Kafka authentication best practices:

Use Secure SASL Mechanisms: When using SASL for authentication, avoid the PLAIN mechanism wherever possible. PLAIN sends credentials in plaintext, which attackers can intercept if SSL/TLS is not used. Instead, use stronger mechanisms such as SCRAM-SHA-256 or SCRAM-SHA-512, which improve security by exchanging and storing salted password hashes rather than bare passwords.

Enable Configuration File Encryption: Ensure that sensitive information, including passwords and keys, is encrypted and securely stored in the Kafka broker and client configuration files. Avoid hardcoding passwords in plain text and consider utilizing external configuration management solutions to securely store and retrieve sensitive data.
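One possible way to combine the two recommendations above is to generate the client settings at start-up and pull the password from the environment instead of committing it to a file. The sketch below renders Kafka client properties for SASL/SCRAM over TLS. The property names (security.protocol, sasl.mechanism, sasl.jaas.config) and the ScramLoginModule class are standard Kafka client settings, while the helper name and the KAFKA_CLIENT_PASSWORD variable are assumptions made for this example.

```python
import os


def scram_client_properties(username, password_env="KAFKA_CLIENT_PASSWORD",
                            mechanism="SCRAM-SHA-512"):
    """Render client properties for SASL/SCRAM over TLS.

    The password is read from an environment variable (hypothetical name)
    so that it never has to be hardcoded in a configuration file.
    """
    if mechanism not in ("SCRAM-SHA-256", "SCRAM-SHA-512"):
        raise ValueError(f"unsupported mechanism: {mechanism}")
    password = os.environ.get(password_env)
    if not password:
        raise RuntimeError(f"environment variable {password_env} is not set")
    # Values containing double quotes would need escaping; not handled here.
    jaas = (
        "org.apache.kafka.common.security.scram.ScramLoginModule required "
        f'username="{username}" password="{password}";'
    )
    props = {
        "security.protocol": "SASL_SSL",  # SASL authentication inside a TLS channel
        "sasl.mechanism": mechanism,
        "sasl.jaas.config": jaas,
    }
    return "\n".join(f"{key}={value}" for key, value in props.items())


if __name__ == "__main__":
    os.environ.setdefault("KAFKA_CLIENT_PASSWORD", "example-only")
    print(scram_client_properties("alice"))
```

In practice the environment variable would be populated by a secrets manager or the deployment platform, which keeps the secret out of version control.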

Implement Certificate-Based Authentication: Rather than depending just on passwords, utilise certificate-based authentication for SSL/TLS. Certificate-based authentication provides a higher level of security and is especially beneficial in large-scale deployments and business scenarios.

Regularly Rotate Credentials: Rotate the credentials used for authentication, such as passwords and certificates, on a regular schedule. Doing so limits the consequences of potential credential leaks or unauthorized access.

Use Strong Password Policies: If you use password-based authentication (e.g., SCRAM-SHA-256), make sure you have strong password policies in place. To reduce the possibility of brute-force attacks, require passwords with suitable complexity, length, and expiration restrictions.

Kafka Authorization

- Authentication and authorization are vital in Kafka to secure communication and restrict access to resources.

- Kafka uses an authorizer plugin, such as AclAuthorizer, to enforce ACLs and grant permissions.

- Proper implementation of ACLs ensures that only authenticated and authorized users have appropriate access to Kafka resources, enhancing overall security and data protection.

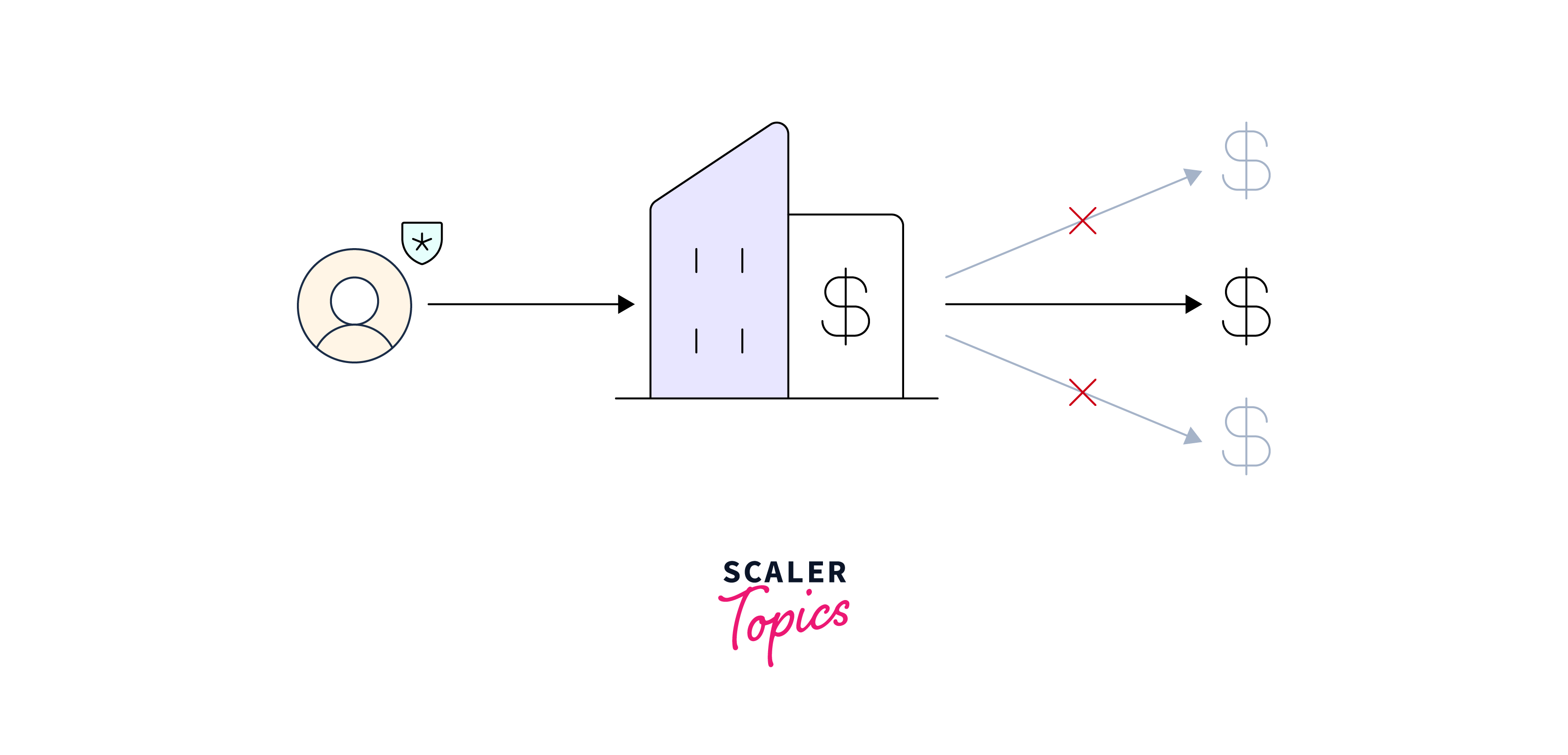

Understanding Authorization in Kafka

After an entity has been authenticated, authorization governs what it can do on a system. Consider an ATM: after properly authenticating with your card and PIN, you can only access your own accounts, not all of the accounts on the machine.

Authorization is the process of restricting access to the various resources inside a Kafka cluster. It ensures that only authorized users or clients with the necessary rights can perform particular actions on Kafka topics, consumer groups, and other resources. Kafka implements authorization through Access Control Lists (ACLs).

ACL: An Access Control List (ACL) is a rule that describes the actions that a particular principal is permitted to do on a resource. It specifies the permissions that a principal has been given or refused for a certain resource.

Implementing Access Control Lists (ACLs)

Kafka uses ACLs to enforce authorization. The authorizer that evaluates them is enabled in the Kafka broker configuration, while the ACLs themselves are maintained with Kafka tools such as the kafka-acls script (the kafka-configs script manages related settings such as SCRAM credentials).

A typical ACL rule has the following elements:

- Resource Type: The type of Kafka resource (such as TOPIC, GROUP, or CLUSTER) to which the ACL rule applies.

- Operation: The operation that is permitted or prohibited (for example, READ, WRITE, ALL).

- Resource Name: The name of the particular Kafka resource (such as the topic name) to which the ACL rule applies.

- Principal: The identity to which permission is granted or denied, written as a principal type and name, such as User:alice.

- Permission Type: The kind of permission given or refused, which might be Allow or Deny.

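For instance, an ACL rule that gives user alice read and write access to a topic called my-topic combines the resource type TOPIC, the resource name my-topic, the principal User:alice, the operations READ and WRITE, and the permission type Allow.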

Creating ACLs

To create ACLs, use the kafka-acls command-line tool. For example, to let users named Alice and Fred read from and write to the finance topic, you add an ACL on that topic that allows the principals User:Alice and User:Fred to perform the Read and Write operations.
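As a rough sketch of what that call can look like, the helper below assembles the kafka-acls arguments for the Alice and Fred example. The flags used (--bootstrap-server, --add, --allow-principal, --operation, --topic) are standard kafka-acls options; the helper name and the localhost:9092 address are assumptions, and in an Apache Kafka download the script is typically invoked as bin/kafka-acls.sh.

```python
import shlex


def kafka_acls_add_command(principals, operations, topic,
                           bootstrap_server="localhost:9092"):
    """Build the argument list for a `kafka-acls --add` call on one topic."""
    args = ["kafka-acls", "--bootstrap-server", bootstrap_server, "--add"]
    for principal in principals:
        args += ["--allow-principal", principal]  # one flag per principal
    for operation in operations:
        args += ["--operation", operation]        # one flag per operation
    args += ["--topic", topic]
    return args


if __name__ == "__main__":
    command = kafka_acls_add_command(["User:Alice", "User:Fred"],
                                     ["Read", "Write"], "finance")
    # Prints: kafka-acls --bootstrap-server localhost:9092 --add
    #         --allow-principal User:Alice --allow-principal User:Fred
    #         --operation Read --operation Write --topic finance  (on one line)
    print(shlex.join(command))
```

The returned list can be passed to subprocess.run once the tool is on the PATH. On a cluster that already requires authentication, the admin client settings would also have to be supplied through the --command-config option.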

However, in a real-world application, your principal names are likely to be more complicated. If you use SSL authentication for clients, for example, the principal name will be the subject (distinguished name) of the SSL certificate. When using SASL_SSL with Kerberos, the Kerberos principal format is used. By specifying ssl.principal.mapping.rules for SSL and sasl.kerberos.principal.to.local.rules for Kerberos, you can also customize how the principal is derived from the identity.
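The effect of such mapping rules can be pictured with a small simulation. The sketch below is a simplified model, not Kafka's implementation: it assumes that rules are tried in order, that the first matching rule produces the principal name, and that an unmatched name is kept unchanged, as with Kafka's DEFAULT rule. The two example rules and the certificate subjects are illustrative; in a broker they would be written in Kafka's own rule syntax, roughly RULE:pattern/replacement/ with $1 instead of Python's \1.

```python
import re

# Each rule is (pattern, replacement, case); case is "L", "U", or None.
# Python's re module writes group references as \1 where Kafka's rules use $1.
EXAMPLE_RULES = [
    (r"^CN=(.*?),OU=ServiceUsers.*$", r"\1", None),
    (r"^CN=(.*?),OU=(.*?),O=(.*?),L=(.*?),ST=(.*?),C=(.*?)$", r"\1@\2", "L"),
]


def map_ssl_principal(distinguished_name, rules=EXAMPLE_RULES):
    """Return the name produced by the first matching rule, else the full DN."""
    for pattern, replacement, case in rules:
        match = re.match(pattern, distinguished_name)
        if match is None:
            continue  # this rule does not apply; try the next one
        name = match.expand(replacement)
        if case == "L":
            return name.lower()
        if case == "U":
            return name.upper()
        return name
    # No rule matched: keep the distinguished name as the principal.
    return distinguished_name


if __name__ == "__main__":
    print(map_ssl_principal(
        "CN=serviceuser,OU=ServiceUsers,O=Unknown,L=Unknown,ST=Unknown,C=Unknown"))
    print(map_ssl_principal(
        "CN=adminUser,OU=Admin,O=Unknown,L=Unknown,ST=Unknown,C=Unknown"))
```

Shorter principal names like these are easier to use in ACLs than full certificate subjects, which is the main reason to configure mapping rules.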

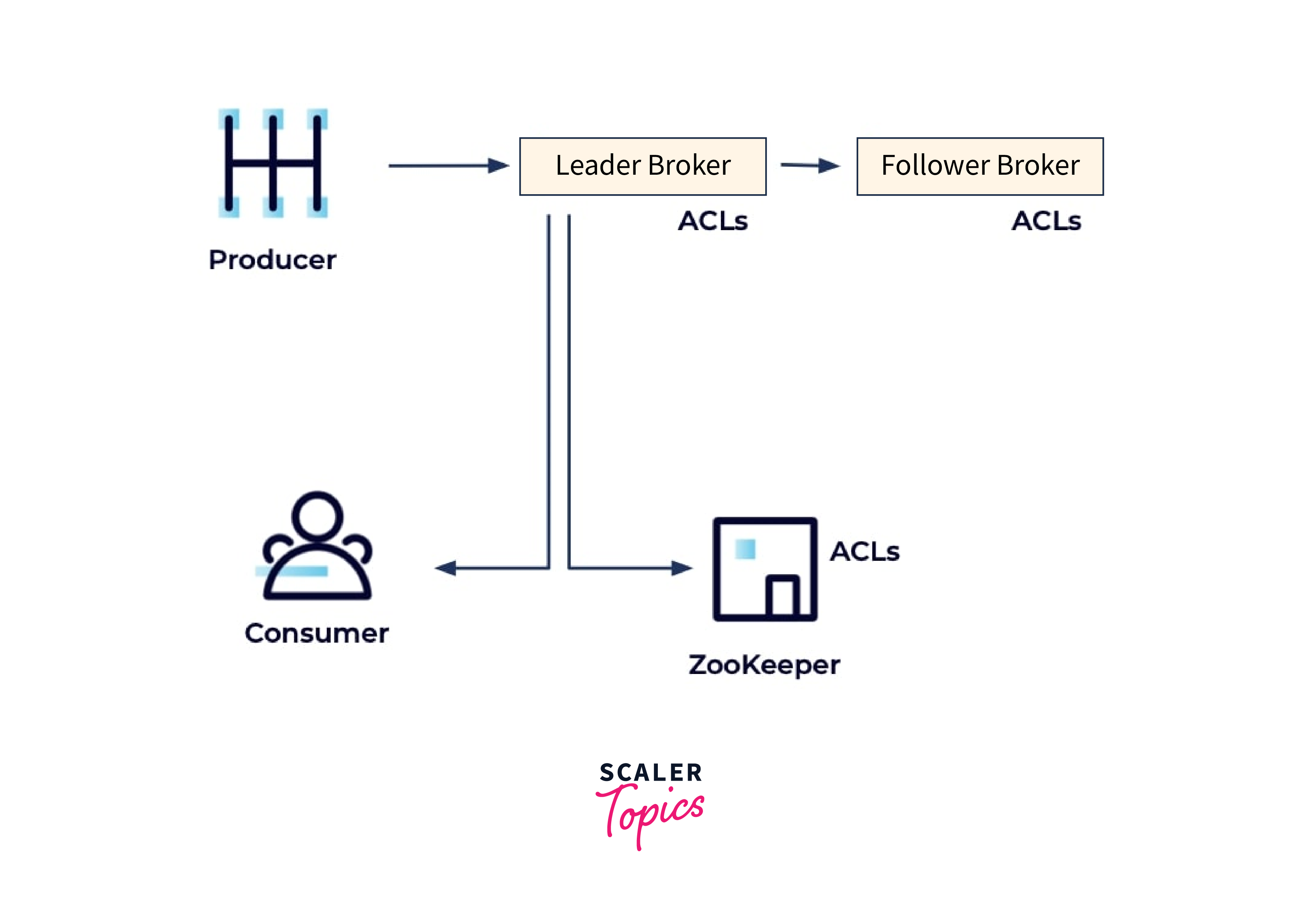

How Kafka Applies ACLs

ACLs created with kafka-acls are stored in ZooKeeper and cached in memory by each broker to allow for quick lookups when authorizing requests.

- To apply ACLs to requests, Kafka uses a server plugin known as an authorizer, which can take a variety of forms, including custom ones.

- An authorizer allows a requested action if there is at least one "Allow ACL" that fits the operation and no "Deny ACL" that prohibits it ("Deny" always overrides "Allow").

- AclAuthorizer is the out-of-the-box authorizer for ZooKeeper-based Kafka, which you enable in the broker configuration: authorizer.class.name=kafka.security.authorizer.AclAuthorizer.

- However, if you utilize Kafka's native consensus implementation based on KRaft, you will use a new built-in StandardAuthorizer that does not rely on ZooKeeper. StandardAuthorizer does all of the same tasks as AclAuthorizer for ZooKeeper-dependent clusters, and it saves its ACLs in the __cluster_metadata metadata topic.
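The allow/deny rule described above can be sketched in a few lines. This is a deliberately simplified model of an authorizer, not the logic of AclAuthorizer or StandardAuthorizer: it matches only exact principals and resource names and ignores wildcards, prefixed resource patterns, host restrictions, and super users. The Acl tuple and the is_authorized function are names invented for the example.

```python
from collections import namedtuple

# One ACL entry; permission is "ALLOW" or "DENY", operation may be "ALL".
Acl = namedtuple("Acl", "principal resource_type resource_name operation permission")


def is_authorized(acls, principal, resource_type, resource_name, operation):
    """Allow only if some ALLOW entry matches and no DENY entry matches."""
    matching = [
        acl for acl in acls
        if acl.principal == principal
        and acl.resource_type == resource_type
        and acl.resource_name == resource_name
        and acl.operation in (operation, "ALL")
    ]
    if any(acl.permission == "DENY" for acl in matching):
        return False  # Deny always overrides Allow
    return any(acl.permission == "ALLOW" for acl in matching)


if __name__ == "__main__":
    acls = [
        Acl("User:alice", "TOPIC", "finance", "ALL", "ALLOW"),
        Acl("User:alice", "TOPIC", "finance", "WRITE", "DENY"),
    ]
    print(is_authorized(acls, "User:alice", "TOPIC", "finance", "READ"))   # True
    print(is_authorized(acls, "User:alice", "TOPIC", "finance", "WRITE"))  # False
    print(is_authorized(acls, "User:bob", "TOPIC", "finance", "READ"))     # False
```

Note that a principal with no matching ACL at all is refused in this model, which mirrors the usual behavior once an authorizer is enabled.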

Best Practices for Kafka Authorization

- Limit Cluster-Level Permissions: Avoid granting too much access at the cluster level. Normally, only administrators and highly trusted entities should have cluster-level permissions.

- Principle of Least Privilege: When defining ACLs, apply the principle of least privilege. Grant clients or users only the rights they need for their specific roles or responsibilities.

- Automate ACL Management: To maintain consistency and reduce the risk of human error, consider automating the management of ACLs using scripts or tools.

- Regularly Review and Audit: Review ACLs on a regular schedule to make sure they still reflect the access needs of users and applications. Conduct routine security audits to find configuration errors or security weaknesses.
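Automation and regular review can share one building block: comparing the ACLs you intend to have with the ACLs that actually exist. The sketch below assumes, purely for illustration, that each ACL is represented as a (principal, operation, resource) tuple and that the desired set lives in version control; how the current ACLs are fetched from the cluster is left out.

```python
def plan_acl_changes(desired, actual):
    """Return which ACLs to add and which to remove to reach the desired state."""
    desired, actual = set(desired), set(actual)
    return {
        "add": sorted(desired - actual),     # missing grants
        "remove": sorted(actual - desired),  # grants nobody asked for (least privilege)
    }


if __name__ == "__main__":
    desired = [
        ("User:Alice", "Read", "Topic:finance"),
        ("User:Alice", "Write", "Topic:finance"),
    ]
    actual = [
        ("User:Alice", "Read", "Topic:finance"),
        ("User:Fred", "Write", "Topic:finance"),  # stale entry found during review
    ]
    print(plan_acl_changes(desired, actual))
```

Running such a comparison on a schedule turns the periodic audit into a report of drift, and the "remove" list highlights permissions that exceed what any role currently needs.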

Kafka Encryption

Encryption scrambles data so that it is unreadable by those who do not have the correct key, and it also safeguards the data's integrity so that you can tell if it was tampered with along the way.

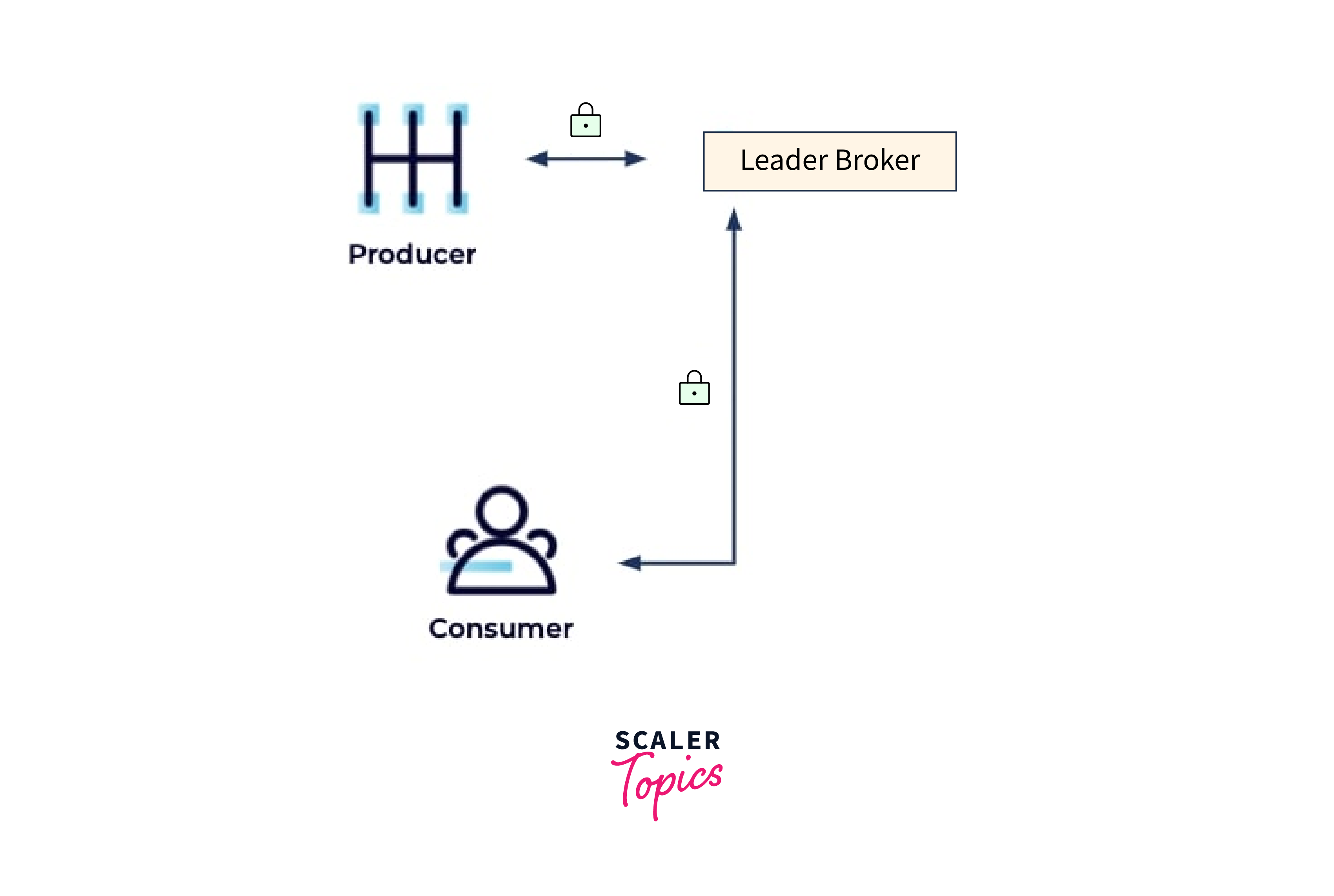

Client/Cluster

The most basic encryption arrangement consists of encrypted traffic between clients and the cluster, which is critical if clients connect to the cluster over an unsecured network such as the public internet.

Client/Cluster – Inter-Broker – Broker/ZooKeeper

The next step is to encrypt traffic between brokers and between brokers and ZooKeeper. Even private networks can be penetrated, so make sure your private network communication is protected from eavesdroppers and from anyone wishing to tamper with it while it is in transit.

Client/Cluster – Inter-Broker – Broker/ZooKeeper – Data at Rest

Another item to consider is your data at rest, of which there can be a great deal because Kafka persists data by writing it to disk. As a result, you should consider encrypting your stored data to protect it from anyone who gains unauthorized access to your filesystem.

Encryption Strategies

Encrypting Data in Transit with SSL

- Fortunately, as discussed in the authentication sections above, it's relatively simple to enable SSL or SASL_SSL so that data in transit is encrypted with TLS.

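- Encryption is enabled by selecting the SSL security protocol on the broker. Additionally setting ssl.client.auth=required in the broker config makes clients authenticate with their own certificates, which is referred to as mutual TLS or mTLS.

- Each broker needs its own key and certificate. Each client does too if client authentication is enabled.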

- Note that if you want to enable TLS for inter-broker communication, add security.inter.broker.protocol=SSL to your broker properties file.

- Keep in mind that enabling TLS can have a performance impact on your system because of the CPU overhead needed to encrypt and decrypt data.
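The broker settings mentioned in this list can be gathered into one fragment. The sketch below renders a minimal set of broker properties for TLS with optional mutual authentication. The property names are standard Kafka broker settings; the helper name, the port 9093, and the keystore and truststore paths are assumptions, and the password properties (ssl.keystore.password, ssl.key.password, ssl.truststore.password) are deliberately left out because they should come from a secret store rather than from code.

```python
def broker_tls_properties(keystore_path, truststore_path,
                          require_client_auth=True, port=9093):
    """Render broker properties for a TLS listener, optionally with mTLS."""
    props = {
        "listeners": f"SSL://:{port}",               # clients connect over TLS
        "security.inter.broker.protocol": "SSL",     # brokers talk to each other over TLS
        "ssl.keystore.location": keystore_path,      # broker key and certificate
        "ssl.truststore.location": truststore_path,  # CAs the broker trusts
        # "required" turns on mutual TLS; "none" leaves clients unauthenticated
        "ssl.client.auth": "required" if require_client_auth else "none",
    }
    return "\n".join(f"{key}={value}" for key, value in props.items())


if __name__ == "__main__":
    # Hypothetical file locations
    print(broker_tls_properties("/etc/kafka/secrets/broker.keystore.jks",
                                "/etc/kafka/secrets/broker.truststore.jks"))
```

Generating the fragment from one function keeps the settings consistent across brokers, which matters because every broker in the cluster needs a matching listener and inter-broker protocol.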

Encrypting Data at Rest

- Because Apache Kafka does not natively support encrypting data at rest, you must rely on the full-disk or volume encryption provided by your infrastructure.

- In general, public cloud providers support this; for example, AWS EBS volumes may be encrypted using AWS Key Management Service keys.

Kafka Security Best Practices

- Monitor and Audit: Enable logging and monitoring of security events, including authentication and authorization actions. Review logs and conduct security audits on a regular basis to uncover security concerns or unusual activity.

- Use Certificate-Based Authentication: Use certificate-based authentication rather than passwords alone for SSL/TLS. This provides an additional level of protection by ensuring that only authorized clients and brokers with valid certificates can connect.

- Enable Authentication: Use authentication to confirm the identity of clients and brokers. To provide secure communication between them, use techniques such as SASL (Simple Authentication and Security Layer) with SCRAM or Kerberos.

- Secure Cluster Setup: Secure the Kafka cluster setup by using strong passwords, disabling unneeded features and protocols, and staying up to date with software updates and patches that resolve security issues.

- Effects on Performance: Enabling security mechanisms like encryption and authentication might add overhead and potentially influence Kafka performance. To strike a balance between security and performance, it is critical to examine performance consequences and optimize settings accordingly.

Summary

- Kafka is an open-source distributed event streaming service for high-performance data pipelines.

- It requires proper security measures like authentication, authorization, and encryption to protect sensitive information, prevent unauthorized access, comply with regulations, and stay ahead of evolving cyber threats.

- Authentication and authorization are vital in Kafka to secure communication and restrict access to resources.

- Kafka Encryption safeguards data integrity and privacy between brokers, producers, and consumers.

- Encryption can be applied at several levels: to data in transit with SSL/TLS, and to data at rest with disk or volume encryption provided by the infrastructure.

Emphasizing the Importance of Kafka Security

- Maintaining Data Integrity: Data integrity is critical in every communications system. SSL/TLS encryption provides secure communication channels that prevent data tampering and unauthorized modification while in transit.

- Preventing Unauthorized Access: By providing authentication and authorization procedures, Kafka security guarantees that only authorized users and applications may access and interact with the Kafka cluster. Unauthorized access can result in data modification, system interruption, or resource exploitation.

- Firewalls: Brokers should be deployed in private networks. They should be protected by port-based and web-access firewalls, which are essential for isolating Kafka and ZooKeeper. Port-based firewalls limit access to particular port numbers, whereas web-access firewalls limit access to specific, limited categories of requests.

- Insider Threat Protection: Insider threats represent major hazards to data security. Strong authentication, authorisation, and monitoring procedures aid in the detection and prevention of harmful activity by authorised users.

- Securing Real-time Data: Because Kafka is frequently used in real-time data processing, it is critical to secure the data flowing through the cluster in order to maintain data integrity and ensure that only valid users produce and consume data.

Encouragement to Further Explore and Apply Kafka Security Practices

- Data Security: Kafka security measures, together with infrastructure-level encryption, keep your data protected both in transit and at rest, so that sensitive and personal information is shielded from unauthorized access and modification.

- Compliance and Legal Requirements: Depending on the industry, strict data privacy and security regulations may apply. Implementing Kafka security practices helps you stay compliant and avoid potential legal ramifications.

- Data Breach Prevention: Data breaches may result in major financial losses, reputational harm, and loss of consumer confidence. Securing Kafka aids in the prevention of data breaches, assuring the protection of your critical information.

- Staying Ahead of Risks: Cyber threats are ever-changing, and attackers are growing more sophisticated. You can better defend against the newest attacks and vulnerabilities if you keep up with Kafka security practices.