Human Pose Estimation in Deep Learning

Overview

Pose estimation is a computer vision task that detects and tracks human body keypoints, such as joints, in images or video. Deep learning approaches train neural networks to locate these keypoints, which improves accuracy and helps handle complex pose variations. The estimated poses can be used to recognize human actions in videos or images, which is useful for surveillance, sports analysis, and human-robot interaction, and to animate characters or capture motion data for special effects.

Introduction

Pose estimation has numerous applications, including sports analysis, virtual reality, robotics, and healthcare, where it can track and analyze human movements during workouts, rehabilitation, or medical diagnosis. Building an accurate pose estimator requires a large annotated dataset and often involves convolutional neural networks and multi-stage architectures.

Prerequisites

- To understand the content of this article, the reader should have a basic understanding of computer vision, machine learning, and deep learning concepts.

- Familiarity with neural networks, specifically convolutional neural networks (CNNs) and recurrent neural networks (RNNs), would be beneficial.

- Additionally, knowledge of keypoint detection and tracking techniques would be useful.

- However, the article will cover the necessary background information to provide a comprehensive overview of pose estimation in deep learning.

What is Pose Estimation?

Pose estimation is the process of estimating the pose of an object or human body from an image or video. It involves detecting and tracking keypoints such as joints, limbs, and other body parts. It can be performed with traditional computer vision techniques or with deep learning-based approaches.

Deep learning techniques for human pose estimation have become popular in recent years, mainly because they handle complex and diverse pose variations well. They use neural networks, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), to learn a mapping from the input image to the corresponding keypoint locations.

What is Human Pose Estimation?

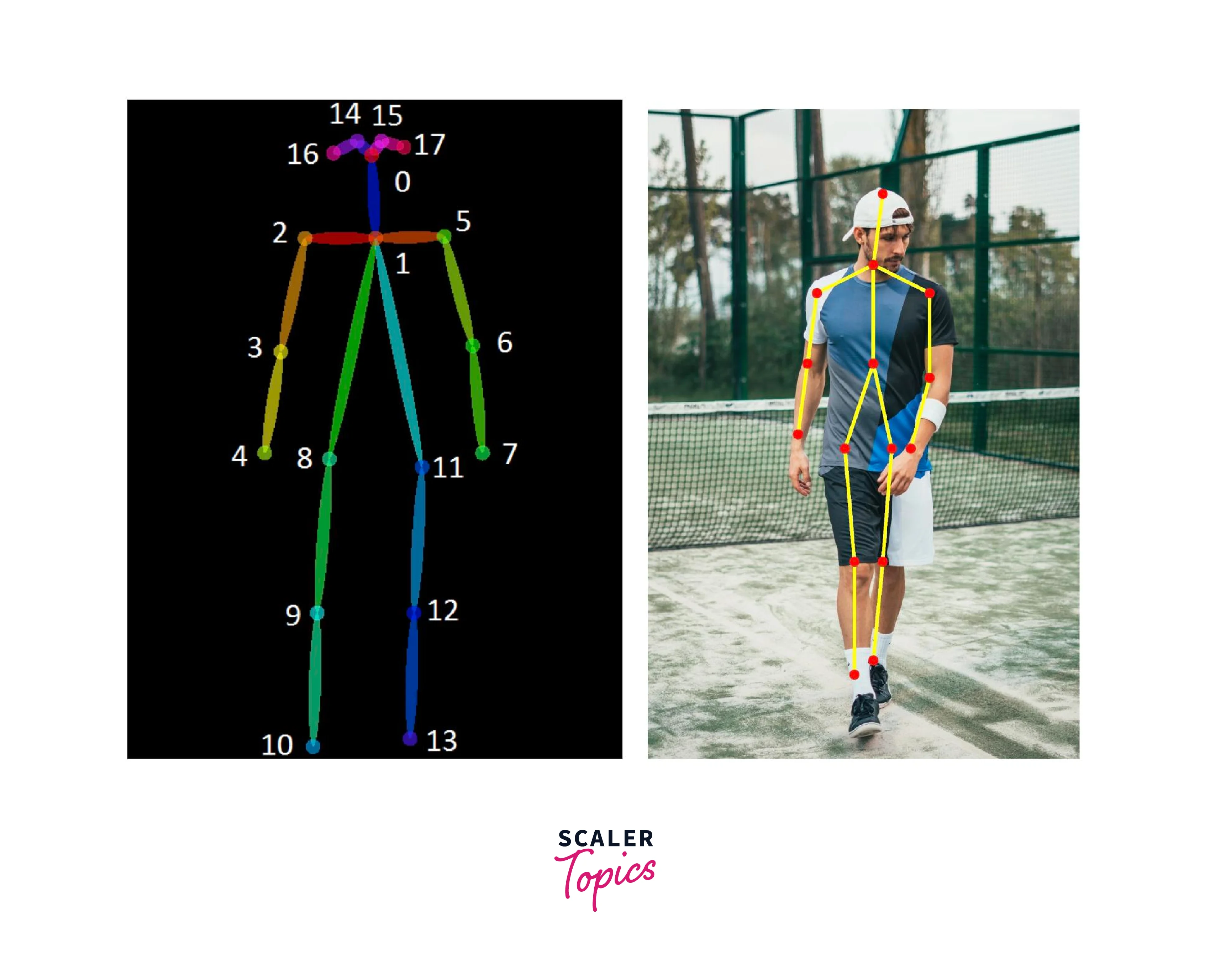

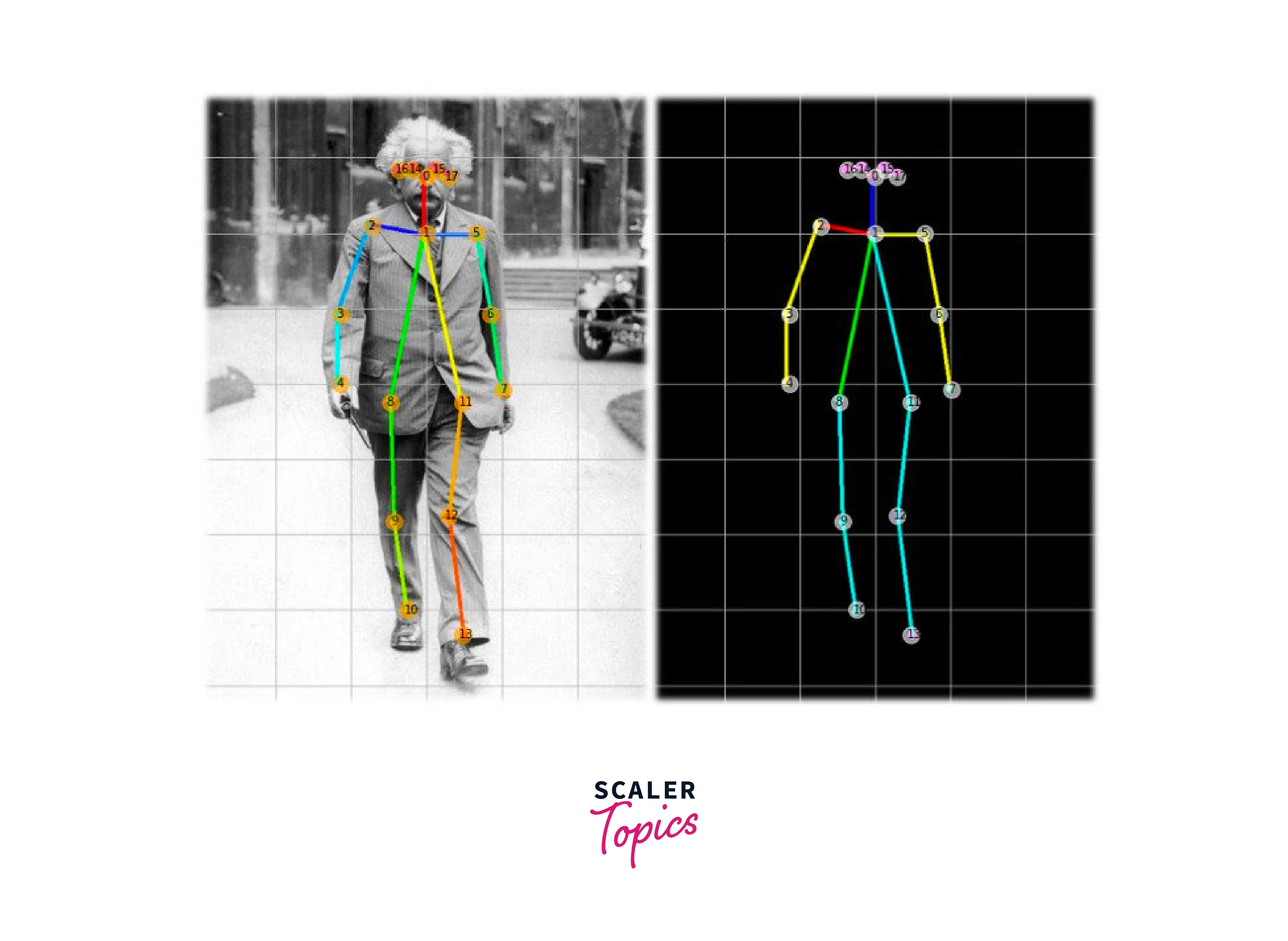

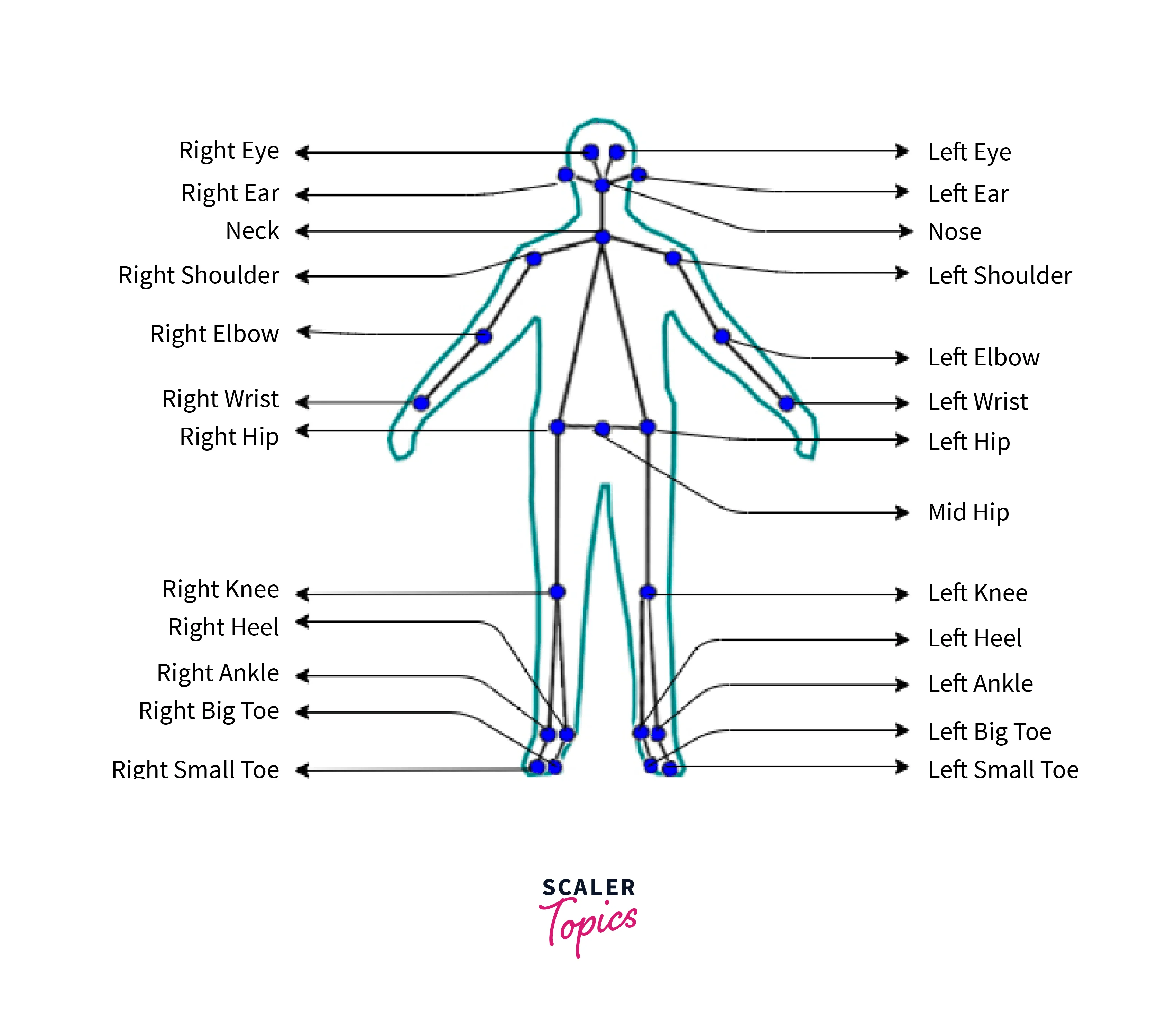

Human pose estimation using deep learning is a computer vision task that detects and tracks the human body's key points in an image or video. These points, also called keypoints or landmarks, mark the locations of body parts such as the shoulders, elbows, wrists, hips, knees, and ankles.

The goal of human pose estimation using deep learning is to accurately detect the position and orientation of the human body in an image or video. The detected keypoints can be used to estimate the body's pose, which can be represented in various ways, such as joint angles, body segments, or skeletal representations.
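As an illustration of the joint-angle representation, the sketch below computes an elbow angle from three keypoints. It assumes the 17-keypoint layout used by the COCO dataset (`COCO_KEYPOINTS` lists the names in COCO's order); `joint_angle` and `elbow_angle` are illustrative helper names, not part of any library. Because it only uses vector arithmetic, the same function works for 2D (x, y) and 3D (x, y, z) keypoints.

```python
import numpy as np

# COCO's 17 body keypoints, in the dataset's standard order.
COCO_KEYPOINTS = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]


def joint_angle(a, b, c):
    """Angle in degrees at point b, between the segments b->a and b->c."""
    ba = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    bc = np.asarray(c, dtype=float) - np.asarray(b, dtype=float)
    cos = np.dot(ba, bc) / (np.linalg.norm(ba) * np.linalg.norm(bc))
    # Clip guards against values like 1.0000000002 caused by rounding.
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))


def elbow_angle(keypoints, side="left"):
    """Elbow angle from a (17, 2) or (17, 3) array ordered as COCO_KEYPOINTS."""
    idx = {name: i for i, name in enumerate(COCO_KEYPOINTS)}
    return joint_angle(keypoints[idx[f"{side}_shoulder"]],
                       keypoints[idx[f"{side}_elbow"]],
                       keypoints[idx[f"{side}_wrist"]])
```

A fully extended arm gives roughly 180 degrees and a right-angle bend gives 90, which is the kind of quantity fitness or rehabilitation applications typically monitor.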

Human pose estimation has numerous applications, including sports analysis, virtual reality, robotics, and healthcare. For example, in sports analysis, pose estimation can be used to track the movements of athletes and analyze their performance. In healthcare, pose estimation can be used to monitor the movements of patients and detect abnormalities or injuries.

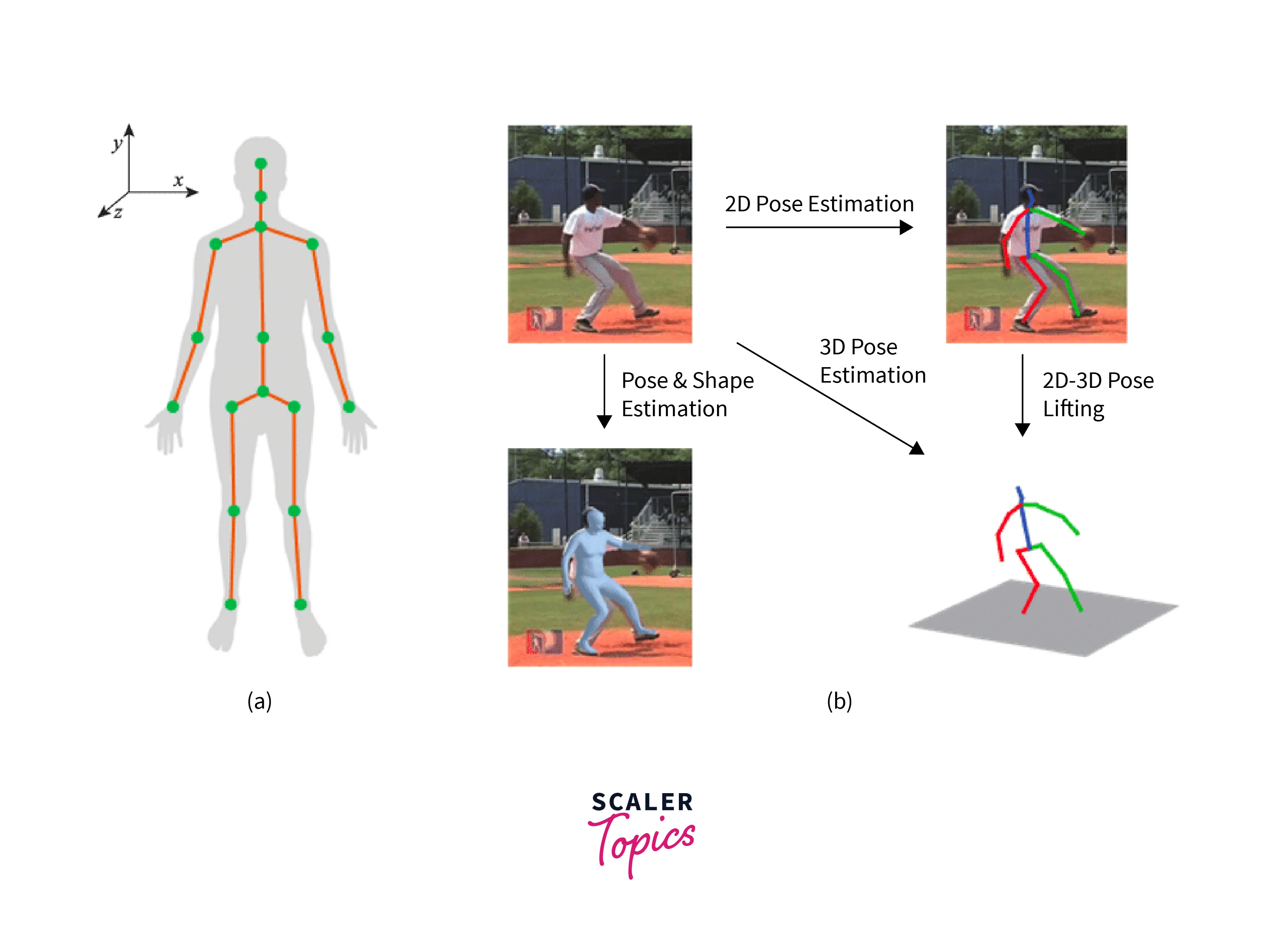

What is 2D Human Pose Estimation?

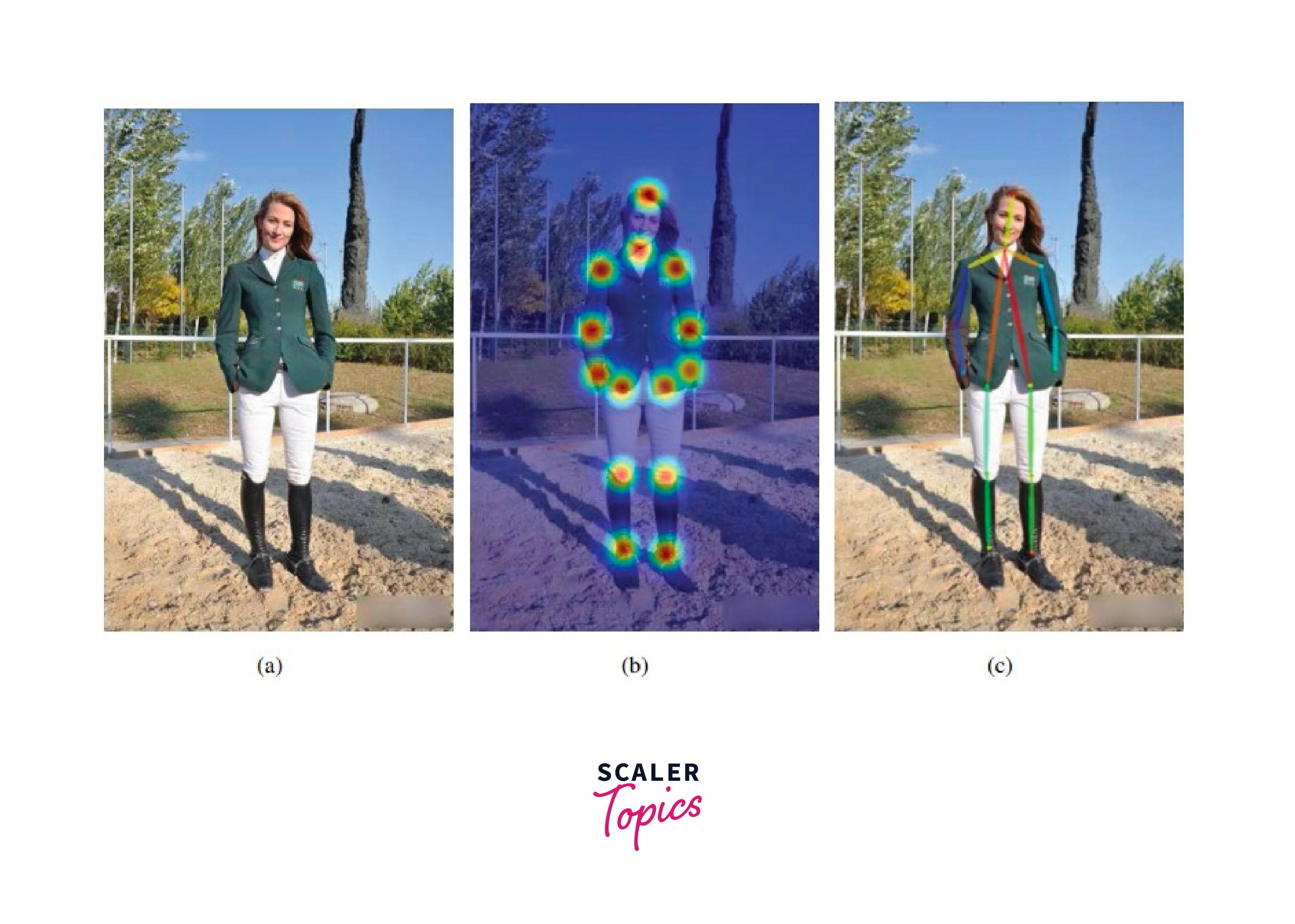

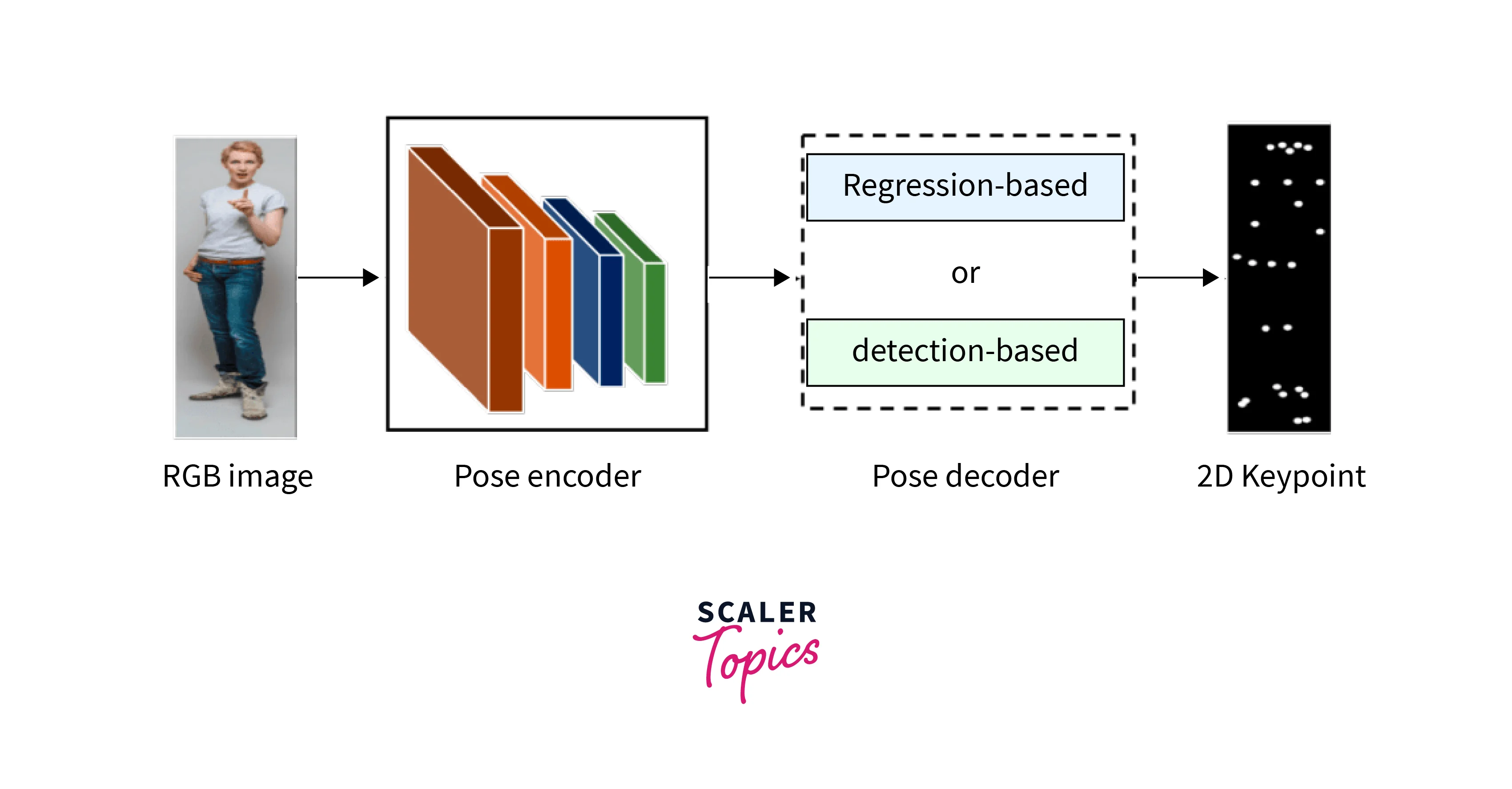

2D Human Pose Estimation using deep learning is a computer vision task that involves detecting and localizing the human body's key points or joints in a 2D image.

The key points are typically represented as 2D coordinates, such as (x, y), and are detected using various techniques such as part affinity fields, heatmap regression, and multi-stage detectors. Once the key points are detected, they can be used to estimate the pose of the human body, which includes the position and orientation of the body parts.
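For the heatmap-regression approach, a network outputs one heatmap per keypoint, and the keypoint's location is read off as the heatmap's peak. The sketch below shows that decoding step for a single person with NumPy. The `stride` (how much smaller the heatmap is than the input image) and the confidence `threshold` are assumptions that depend on the model, and `decode_heatmaps` is a hypothetical helper name.

```python
import numpy as np


def decode_heatmaps(heatmaps, stride=1.0, threshold=0.1):
    """Convert per-keypoint heatmaps into rows of (x, y, score).

    heatmaps: array of shape (K, H, W), one map per keypoint (single person).
    stride: input-image size divided by heatmap size, used to map each peak
            back to image pixels (model-dependent assumption).
    Keypoints whose peak score is below `threshold` get NaN coordinates.
    """
    K, H, W = heatmaps.shape
    flat = heatmaps.reshape(K, -1)
    idx = flat.argmax(axis=1)                 # position of the peak in each map
    scores = flat[np.arange(K), idx]
    ys, xs = np.unravel_index(idx, (H, W))    # row index -> y, column index -> x
    coords = np.stack([xs, ys], axis=1).astype(float) * stride
    coords[scores < threshold] = np.nan       # mark low-confidence keypoints
    return np.column_stack([coords, scores])
```

Taking only the single highest peak assumes one person per image; multi-person methods such as part affinity fields add a grouping step on top of this.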

What is 3D Human Pose Estimation?

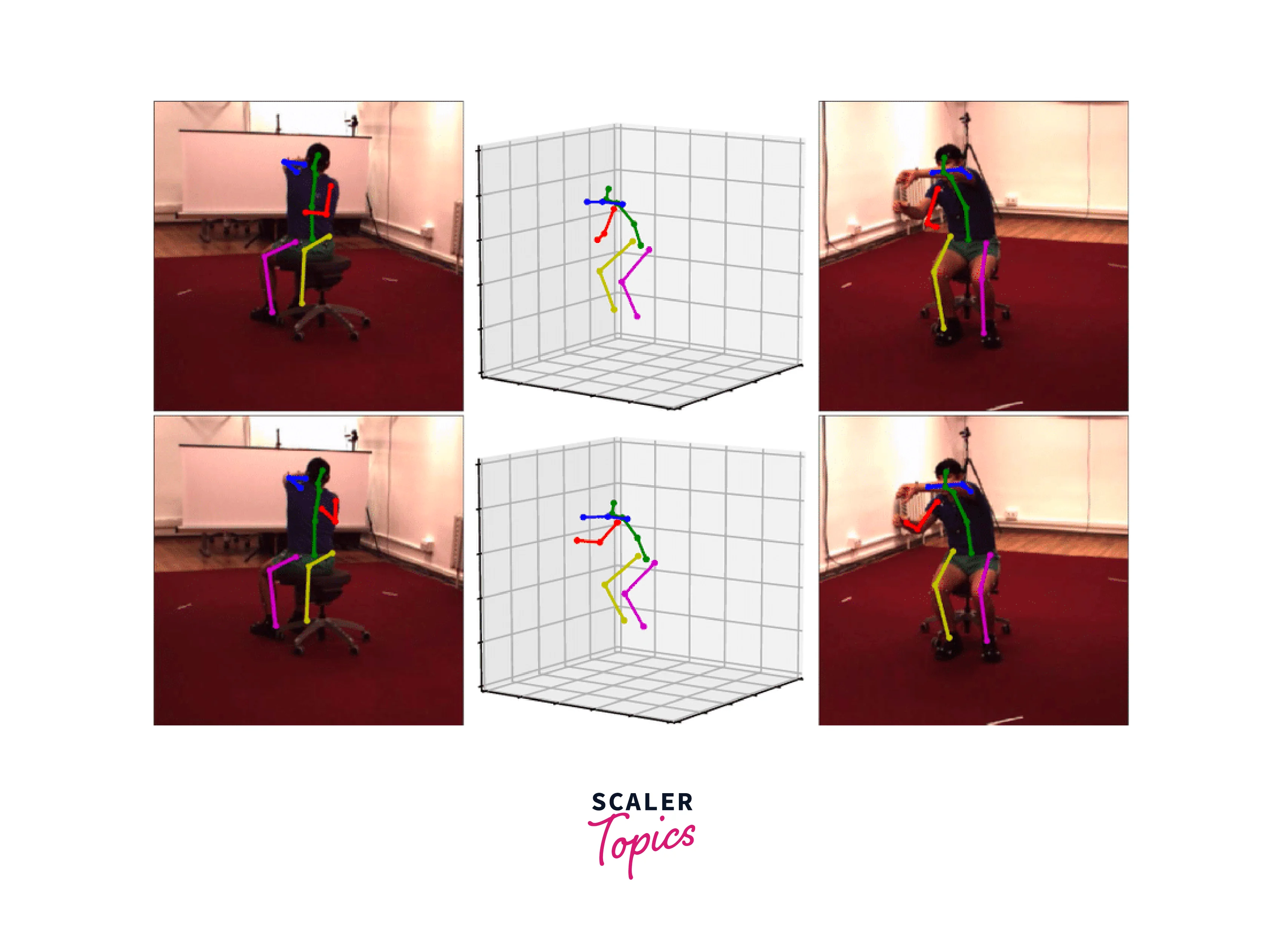

3D Human Pose Estimation using deep learning estimates the position and orientation of the human body in 3D space.

Unlike 2D pose estimation, which only provides the position of the joints in the image plane, 3D pose estimation provides the depth information of the joints as well. This can be done using various techniques, such as multi-view stereo, depth sensors, or monocular depth estimation.
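For the multi-view case, one classic way to recover a joint's depth is linear triangulation (the direct linear transform, DLT): when two calibrated cameras see the same keypoint, each 2D detection gives two linear constraints on the 3D point. The sketch below assumes the 3x4 projection matrices `P1` and `P2` are already known from calibration; `triangulate_point` is an illustrative name, and practical systems often add lens undistortion and a nonlinear refinement step.

```python
import numpy as np


def triangulate_point(P1, P2, uv1, uv2):
    """Linear (DLT) triangulation of one keypoint seen by two calibrated cameras.

    P1, P2: 3x4 camera projection matrices.
    uv1, uv2: (u, v) pixel coordinates of the same joint in each view.
    Returns the 3D point (x, y, z).
    """
    u1, v1 = uv1
    u2, v2 = uv2
    # From u = (P[0] . X) / (P[2] . X) we get u * P[2] . X - P[0] . X = 0,
    # and likewise for v, giving two rows per view.
    A = np.array([
        u1 * P1[2] - P1[0],
        v1 * P1[2] - P1[1],
        u2 * P2[2] - P2[0],
        v2 * P2[2] - P2[1],
    ])
    # Least-squares solution: right singular vector of the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]   # convert from homogeneous coordinates
```

Applying it to every keypoint turns a pair of 2D skeletons from two cameras into one 3D skeleton.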

2D vs 3D Pose estimation

2D pose estimation is simpler and faster than 3D pose estimation, but it is less complete because it does not recover depth. 3D pose estimation provides more information about the position and orientation of the human body, but it typically requires more computational resources and, in many setups, additional cameras or depth sensors. Both have their applications and use cases, and the choice depends on the requirements of the specific task.

The table below compares 2D and 3D pose estimation:

| | 2D Pose Estimation | 3D Pose Estimation |
|---|---|---|
| Definition | Detecting and localizing the human body's keypoints in a 2D image | Estimating the position and orientation of the human body in 3D space |
| Output | 2D coordinates of keypoints (x, y) | 3D coordinates of keypoints (x, y, z) |
| Data Input | Single 2D image | Single image (monocular estimation), multiple 2D images, stereo images, or depth sensor data |
| Accuracy | Limited by occlusions, lighting conditions, and camera viewpoint | More complete than 2D pose estimation because it includes depth, but may still be affected by occlusions and depth ambiguity |
| Computational Requirements | Lower | Higher |
| Applications | Human activity recognition, action recognition, human-computer interaction | Robotics, Augmented Reality/Virtual Reality, Medical imaging, Sports analysis |

Main challenges of Pose Detection

Human Pose detection using deep learning is a challenging task due to several factors. Some of the main challenges of pose detection are:

- Ambiguity in pose representation: There are different ways to represent the human pose, such as joint angles, body segments, or skeletal representations. The choice of pose representation can affect the accuracy of the pose detection algorithm.

- Variability in human appearance and motion: The human body can have different shapes and sizes, and people can move in various ways. The pose detection algorithm needs to be able to handle this variability and generalize to different people and motions.

- Occlusions: Occlusions occur when body parts are hidden from view, such as when someone is partially behind an object or another person. Occlusions can make it difficult for the algorithm to detect all the key points accurately.

- Limited datasets: Deep learning pose detectors rely on large annotated datasets to learn the variety of human poses. Creating such datasets is challenging and time-consuming, which limits the size and diversity of the datasets available.

- Real-time performance: Many applications of human pose detection using deep learning, such as robotics or virtual reality, require real-time performance. However, pose detection algorithms can be computationally expensive, making real-time performance a challenge.

- Camera viewpoint and lighting conditions: Pose detection algorithms can be sensitive to camera viewpoint and lighting conditions, which can affect the accuracy of the algorithm. It is important to train the algorithm with data that covers different viewpoints and lighting conditions.

Addressing these challenges requires a combination of better algorithms, more and better training data, and faster hardware. New techniques and deep learning architectures have improved the accuracy and robustness of pose detection algorithms, and larger datasets have made it possible to train them more effectively.
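One common way to address the viewpoint and lighting challenge listed above is data augmentation. The sketch below, assuming COCO-style 17-keypoint annotations in (x, y) pixel coordinates, mirrors an image horizontally and scales its brightness. The important detail is that after a flip, left and right keypoints must swap labels, because the person's left wrist now appears where a right wrist would. `COCO_FLIP_PAIRS`, `horizontal_flip`, and `adjust_brightness` are illustrative names, not a library API.

```python
import numpy as np

# COCO keypoint index pairs that swap under a horizontal flip:
# eyes, ears, shoulders, elbows, wrists, hips, knees, ankles.
COCO_FLIP_PAIRS = [(1, 2), (3, 4), (5, 6), (7, 8),
                   (9, 10), (11, 12), (13, 14), (15, 16)]


def horizontal_flip(image, keypoints, flip_pairs=COCO_FLIP_PAIRS):
    """Mirror an (H, W, C) image and its (K, 2) keypoints left to right."""
    width = image.shape[1]
    flipped_image = image[:, ::-1].copy()
    kp = np.asarray(keypoints, dtype=float).copy()
    kp[:, 0] = (width - 1) - kp[:, 0]          # mirror x across the image
    for left, right in flip_pairs:
        kp[[left, right]] = kp[[right, left]]  # swap left/right labels
    return flipped_image, kp


def adjust_brightness(image, factor):
    """Scale uint8 pixel intensities to simulate different lighting."""
    return np.clip(image.astype(np.float32) * factor, 0, 255).astype(np.uint8)
```

Forgetting the label swap is a frequent source of silent errors: the model would learn that "left" and "right" are interchangeable.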

How does Pose Estimation work?

Pose estimation uses computer vision techniques to detect and locate keypoints on a person's body. These can include joints such as the elbows, wrists, and knees, as well as facial points such as the nose, eyes, and ears. Once the keypoints are identified, they can be used to estimate the position, orientation, and movement of the person's body.

The process of human pose estimation using deep learning typically involves the following steps:

- Image acquisition: An image or a video frame of a person is captured using a camera.

- Keypoint detection: The image is processed using a pose estimation algorithm to detect and locate key points on the person's body.

- Pose estimation: The detected keypoints are used to estimate the person's pose, including their position, orientation, and movement.

- Post-processing: The estimated pose can be further refined or smoothed using post-processing techniques.

- Output: The final output is the estimated pose, which can be used for various applications such as human-computer interaction, action recognition, and sports analysis.

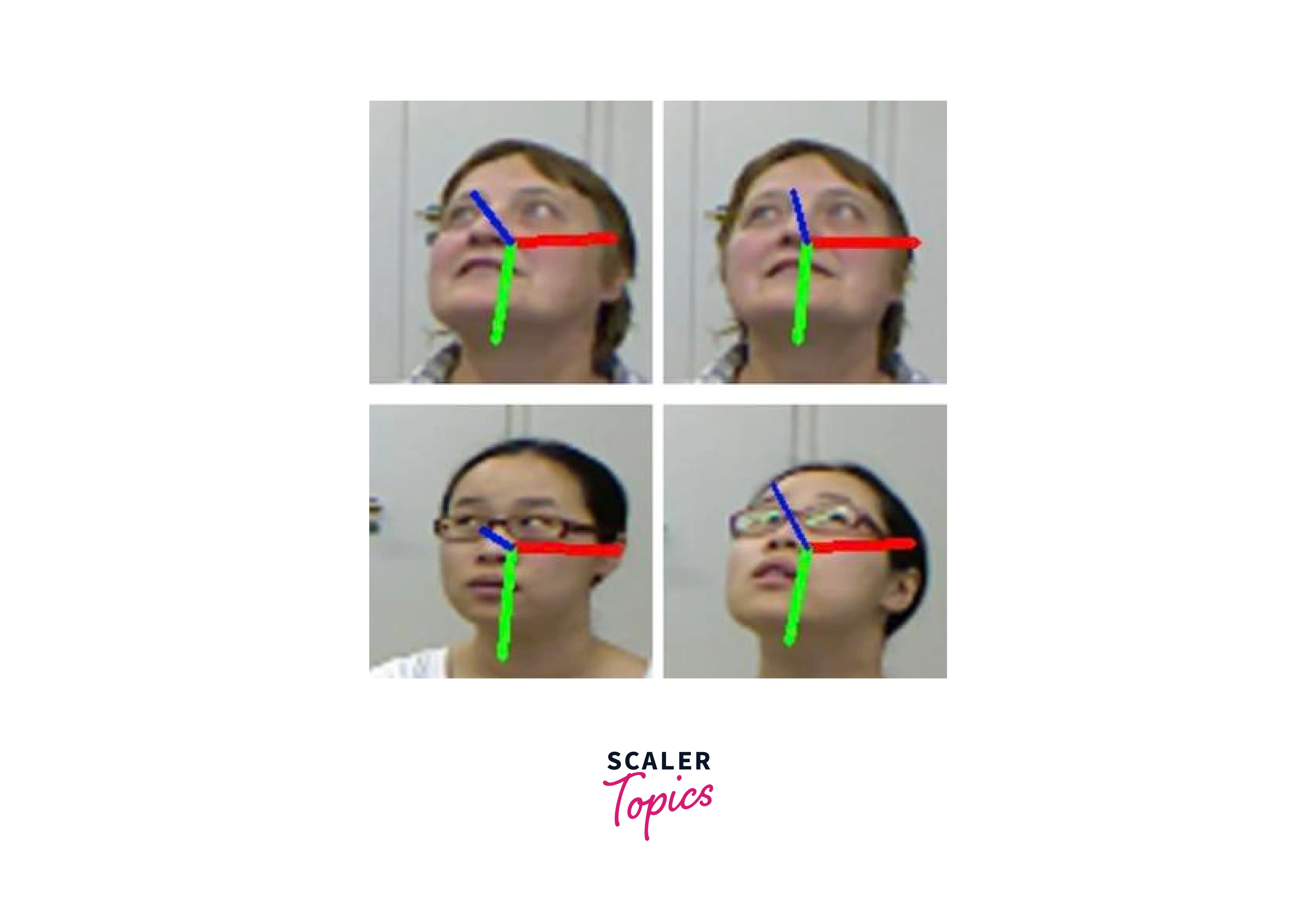

Head Pose Estimation

Head pose estimation is a specific type of human pose estimation using deep learning that focuses on estimating the orientation and position of a person's head. This can be useful in applications such as driver monitoring, gaze tracking, and virtual reality.

Head pose estimation typically involves the following steps:

- Face detection: The first step is to detect and locate the person's face in an image or video frame.

- Facial landmark detection: Key facial landmarks, such as the eyes, nose, and mouth, are detected and localized.

- Head pose estimation: Using the detected facial landmarks, the orientation and position of the person's head are estimated.

- Output: The final output is the estimated head pose, which can be used for various applications such as gaze tracking or virtual reality.
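One common way to carry out the head pose step is to fit a generic 3D face model to the detected landmarks, for example with OpenCV's `cv2.solvePnP`, and convert the resulting rotation vector into a 3x3 rotation matrix with `cv2.Rodrigues`. That matrix is usually reported as three angles. The NumPy sketch below covers only that final conversion, assuming the decomposition R = Rz(gamma) · Ry(beta) · Rx(alpha). In a camera frame with x pointing right, y down, and z forward, the rotation about x roughly corresponds to pitch, about y to yaw, and about z to roll; this axis convention is an assumption, so match it to your own solver. The function names are illustrative.

```python
import numpy as np


def euler_to_rotation_matrix(alpha, beta, gamma):
    """Build R = Rz(gamma) @ Ry(beta) @ Rx(alpha) from angles in degrees."""
    a, b, g = np.radians([alpha, beta, gamma])
    Rx = np.array([[1, 0, 0], [0, np.cos(a), -np.sin(a)], [0, np.sin(a), np.cos(a)]])
    Ry = np.array([[np.cos(b), 0, np.sin(b)], [0, 1, 0], [-np.sin(b), 0, np.cos(b)]])
    Rz = np.array([[np.cos(g), -np.sin(g), 0], [np.sin(g), np.cos(g), 0], [0, 0, 1]])
    return Rz @ Ry @ Rx


def rotation_matrix_to_euler(R):
    """Recover (alpha, beta, gamma) in degrees, the rotations about x, y and z,
    assuming R = Rz(gamma) @ Ry(beta) @ Rx(alpha)."""
    sy = np.hypot(R[0, 0], R[1, 0])            # equals |cos(beta)|
    if sy > 1e-6:
        alpha = np.arctan2(R[2, 1], R[2, 2])
        beta = np.arctan2(-R[2, 0], sy)
        gamma = np.arctan2(R[1, 0], R[0, 0])
    else:
        # Gimbal lock (beta near +/-90 degrees): alpha and gamma are coupled,
        # so gamma is fixed at 0 and the combined rotation goes into alpha.
        alpha = np.arctan2(-R[1, 2], R[1, 1])
        beta = np.arctan2(-R[2, 0], sy)
        gamma = 0.0
    return tuple(float(v) for v in np.degrees([alpha, beta, gamma]))
```

For example, `rotation_matrix_to_euler(euler_to_rotation_matrix(10, -20, 5))` returns approximately `(10.0, -20.0, 5.0)`.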

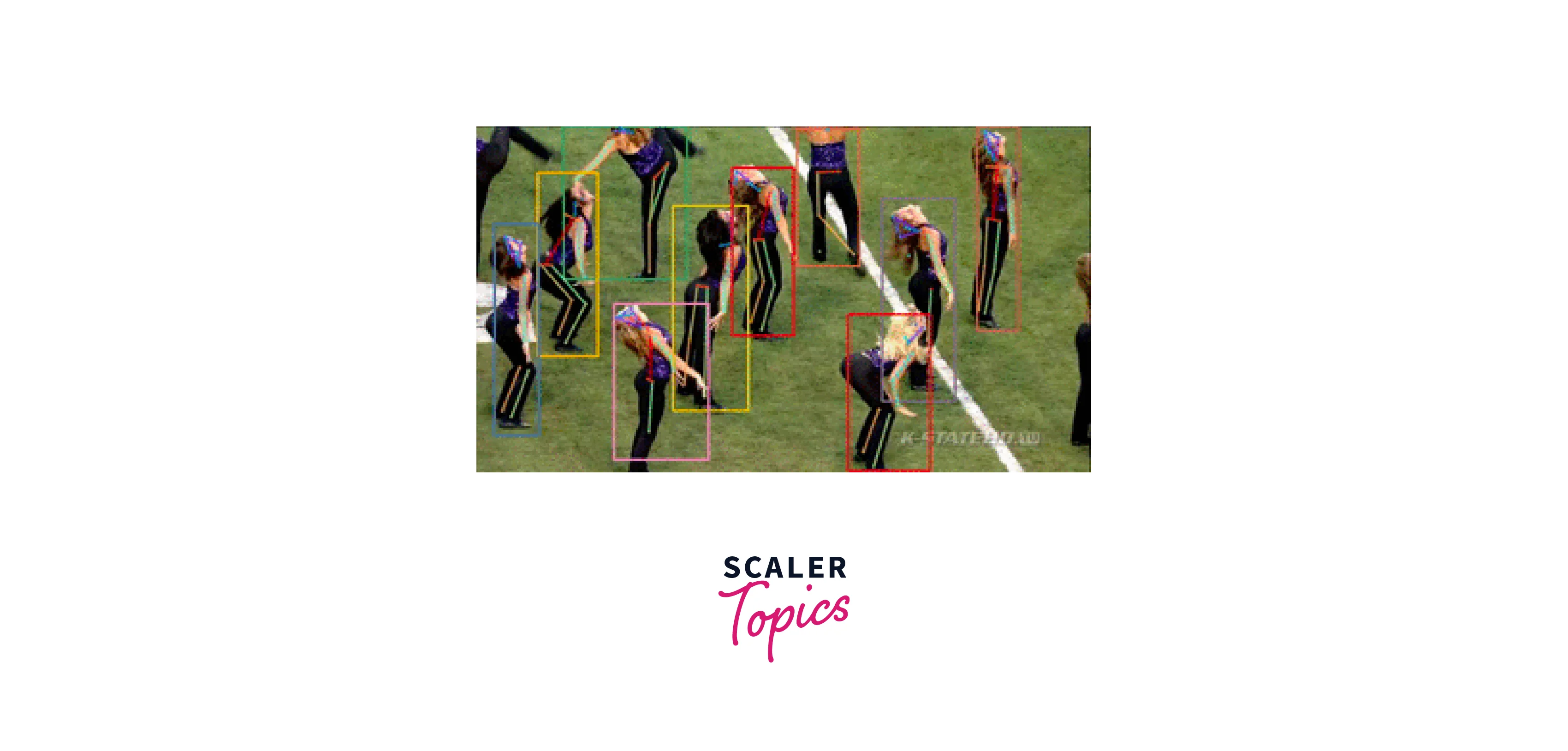

Video Person Pose Tracking

Video person pose tracking involves estimating the pose of a person in a sequence of video frames. This can be useful in applications such as surveillance, sports analysis, and human-computer interaction.

Video person pose tracking typically involves the following steps:

- Pose estimation: The first frame of the video is processed using a pose estimation algorithm to detect and locate keypoints on the person's body.

- Pose tracking: The detected key points are then tracked across subsequent frames of the video using techniques such as optical flow or Kalman filtering.

- Post-processing: The estimated pose can be further refined or smoothed using post-processing techniques.

- Output: The final output is the estimated pose for each frame of the video, which can be used for various applications such as action recognition or sports analysis.
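The Kalman-filtering option in the tracking step can be sketched as a constant-velocity filter per keypoint: it predicts where the joint should be in the next frame and blends that prediction with the new detection, which also smooths frame-to-frame jitter (the post-processing step). The class name and the noise parameters `process_var` and `measurement_var` are illustrative assumptions; in practice they are tuned to the detector's noise. Using a scaled identity for the process noise is a simplification of the usual constant-velocity noise model.

```python
import numpy as np


class KeypointKalmanFilter:
    """Constant-velocity Kalman filter for a single 2D keypoint.

    State: [x, y, vx, vy]; measurement: [x, y] from the pose detector.
    """

    def __init__(self, process_var=1e-2, measurement_var=4.0, dt=1.0):
        # Transition model: position advances by velocity * dt each frame.
        self.F = np.array([[1, 0, dt, 0],
                           [0, 1, 0, dt],
                           [0, 0, 1, 0],
                           [0, 0, 0, 1]], dtype=float)
        # Measurement model: the detector observes position only.
        self.H = np.array([[1, 0, 0, 0],
                           [0, 1, 0, 0]], dtype=float)
        self.Q = process_var * np.eye(4)       # simplified process noise
        self.R = measurement_var * np.eye(2)   # detector noise (pixels^2)
        self.x = None                          # state, set on first detection
        self.P = np.eye(4) * 1e3               # large initial uncertainty

    def update(self, measurement):
        """Feed one (x, y) detection and return the filtered (x, y)."""
        z = np.asarray(measurement, dtype=float)
        if self.x is None:
            # Start at the first detection with zero velocity.
            self.x = np.array([z[0], z[1], 0.0, 0.0])
            return self.x[:2].copy()
        # Predict: propagate the state and its covariance one frame forward.
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        # Update: correct the prediction with the new detection.
        innovation = z - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)
        self.x = self.x + K @ innovation
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.x[:2].copy()
```

In a tracker, one filter instance would be kept per person and per keypoint, and `update` called once per frame with that keypoint's detection.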

Types of Human Pose Estimation Models

There are several types of models in human pose estimation using deep learning, including:

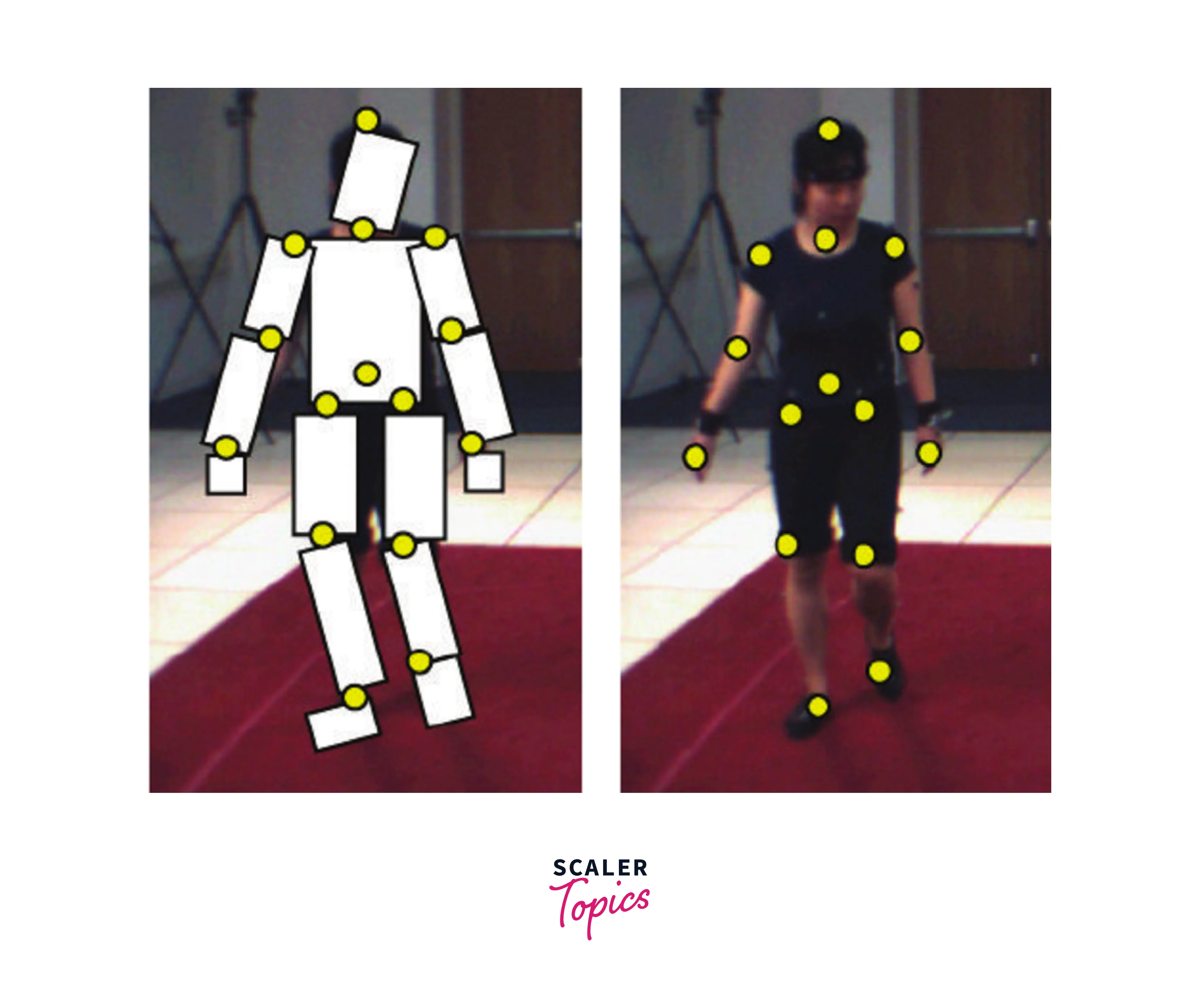

- 2D joint-based models: These models estimate the 2D coordinates of key points, such as joints or body landmarks, on the person's body. They can be trained using supervised learning algorithms and can be used for applications such as action recognition, gesture recognition, and sports analysis.

- 3D joint-based models: These models estimate the 3D coordinates of key points on the person's body. They can be trained using supervised learning algorithms and can be used for applications such as motion capture and virtual reality.

- Part-based models: These models divide the person's body into parts, such as limbs or torso, and estimate the pose of each part separately. They can be trained using both supervised and unsupervised learning algorithms and can be used for applications such as human-computer interaction and robotics.

- Skeletal models: These models represent the person's body as a set of connected bones or links and estimate the joint angles and position of each bone. They can be trained using supervised learning algorithms and can be used for applications such as motion capture and virtual reality.

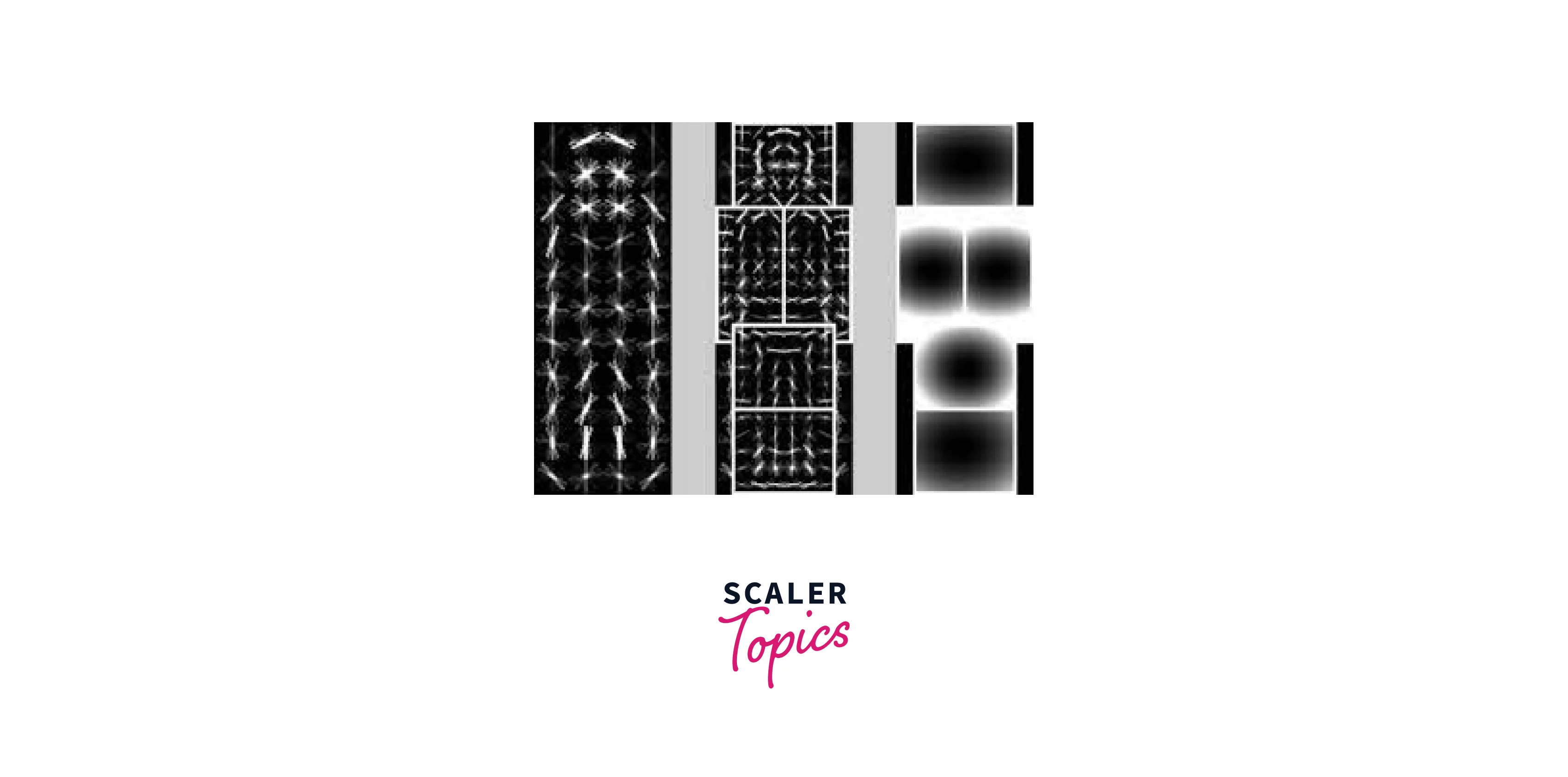

- Heatmap-based models: These models use a heatmap representation to estimate the position of key points on the person's body. They can be trained using supervised learning algorithms and can be used for applications such as action recognition and gesture recognition.

- Regression-based models: These models use a regression approach to estimate the position of key points on the person's body. They can be trained using supervised learning algorithms and can be used for applications such as sports analysis and motion capture.

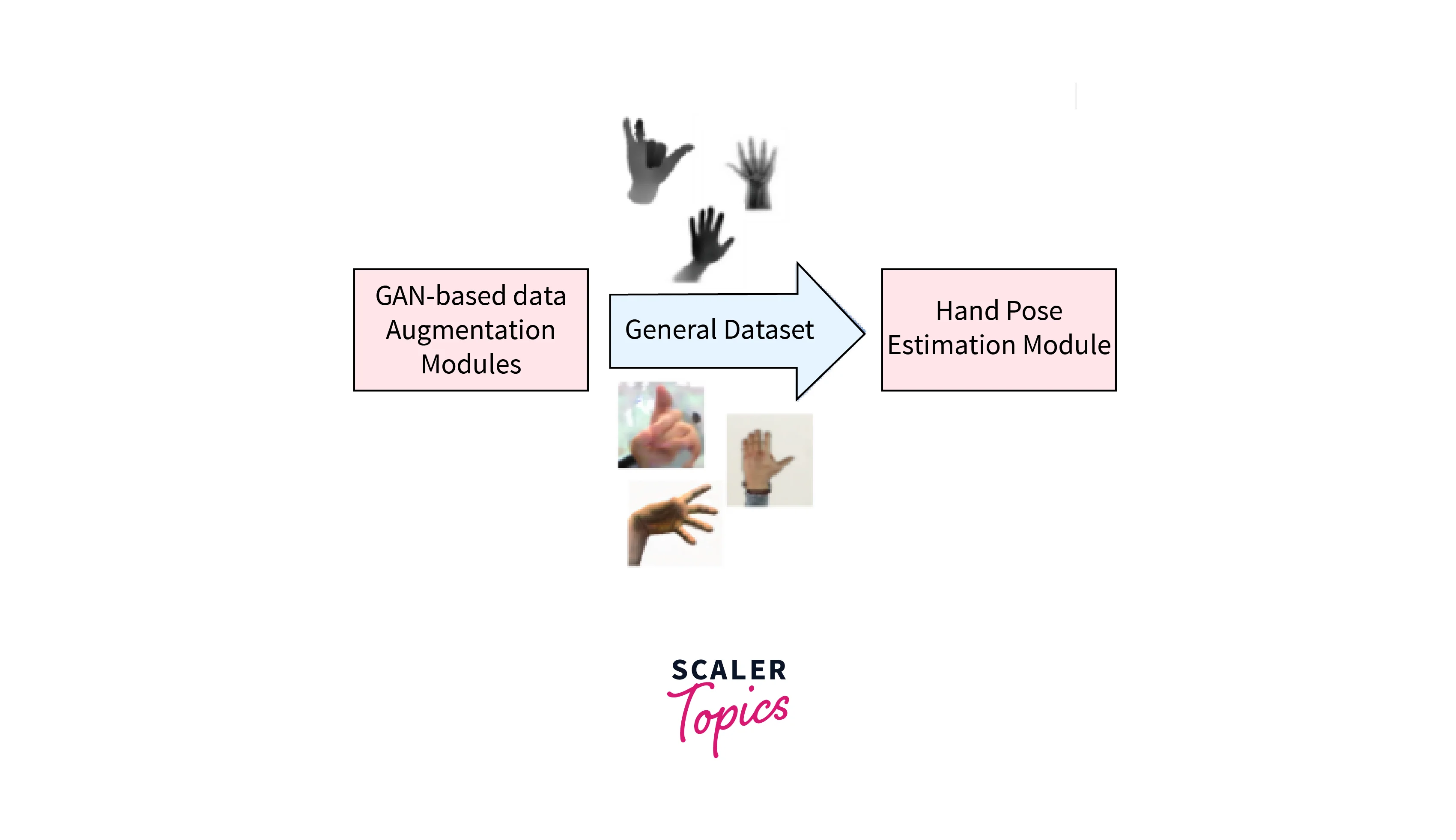

- Generative models: These models use generative approaches, such as generative adversarial networks (GANs) or variational autoencoders (VAEs), to synthesize human poses or generate new poses. They can be trained using unsupervised or semi-supervised learning algorithms and can be used for applications such as animation and virtual reality.

The choice of models for human pose estimation using deep learning depends on the specific application and requirements, such as the level of accuracy needed, the type of data available, and the available computational resources.
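To make the difference between heatmap-based and regression-based models concrete: a regression model is trained to output the (x, y) numbers directly, while a heatmap model is trained against target images that peak at each keypoint. A common choice for those targets is a 2D Gaussian centred on the annotated location, sketched below with NumPy. `gaussian_heatmaps` and the default `sigma` are assumptions, not the settings of any particular paper.

```python
import numpy as np


def gaussian_heatmaps(keypoints, height, width, sigma=2.0):
    """Render one Gaussian target heatmap per keypoint.

    keypoints: (K, 2) array of (x, y) coordinates in heatmap pixels; rows
               containing NaN are treated as unlabelled and give all-zero maps.
    Returns a float32 array of shape (K, height, width) with peak value 1.0.
    """
    ys, xs = np.mgrid[0:height, 0:width]   # ys indexes rows, xs indexes columns
    heatmaps = np.zeros((len(keypoints), height, width), dtype=np.float32)
    for k, (x, y) in enumerate(np.asarray(keypoints, dtype=float)):
        if np.isnan(x) or np.isnan(y):
            continue                        # missing annotation: leave zeros
        d2 = (xs - x) ** 2 + (ys - y) ** 2  # squared distance to the keypoint
        heatmaps[k] = np.exp(-d2 / (2.0 * sigma ** 2))
    return heatmaps
```

During training, the network's predicted heatmaps are typically compared with these targets using a pixel-wise loss such as mean squared error.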

Different Libraries For Human Pose Estimation Using Deep Learning

- OpenPose: An open-source library that provides real-time 2D multi-person pose estimation. It uses deep learning techniques and can detect 25 keypoints on the human body.

- PoseNet: A lightweight and efficient deep learning model for 2D human pose estimation. It detects 17 keypoints on the human body and can run in real time in a web browser.

- DeepPose: A deep learning approach to 2D human pose estimation that uses a convolutional neural network (CNN) to regress joint coordinates directly. It can detect 14 keypoints on the human body.

- Convolutional Pose Machines (CPMs): A deep learning model for 2D human pose estimation that uses a cascaded, multi-stage network of CNNs to estimate the location of body joints.

- AlphaPose: An accurate and efficient open-source library for multi-person 2D and 3D pose estimation. It uses deep learning techniques and can detect 18 keypoints on the human body.

- Simple Pose: A simple baseline model for 2D human pose estimation (Microsoft's "Simple Baselines" work) that adds a few deconvolution layers on top of a ResNet backbone to predict keypoint heatmaps.

- Detectron2: A modular object detection library that supports human pose estimation through Keypoint R-CNN, a variant of Mask R-CNN with a keypoint prediction head.

Here is a table with links to the GitHub repositories for each of the libraries mentioned:

| Library | GitHub Repository |
|---|---|
| OpenPose | https://github.com/CMU-Perceptual-Computing-Lab/openpose |
| PoseNet | https://github.com/tensorflow/tfjs-models/tree/master/posenet |
| DeepPose | https://github.com/eldar/deep_pose |
| Convolutional Pose Machines (CPMs) | https://github.com/shihenw/convolutional-pose-machines-release |
| AlphaPose | https://github.com/MVIG-SJTU/AlphaPose |
| Simple Pose | https://github.com/microsoft/human-pose-estimation.pytorch |
| Detectron2 | https://github.com/facebookresearch/detectron2 |

Human Pose Estimation using Deep Learning

Here is a step-by-step tutorial for building a human pose estimation model using deep learning:

1. Imports: First, we need to import the required libraries and modules.

2. Dataset: Next, we need a dataset for training and testing the model. There are several publicly available datasets for human pose estimation, such as COCO and MPII. For this tutorial, we will use the MPII Human Pose dataset, which contains over 25,000 images with labeled keypoints.

3. Data preprocessing: Before we can train the model, we need to preprocess the dataset. This includes loading the images, resizing them to a fixed size, and extracting the labeled keypoints.

4. Brief description of the features: The features in this dataset are the input images and the labeled keypoints. After resizing, the images are represented as RGB arrays of shape (224, 224, 3), and the keypoints as arrays of shape (16, 2), where each row is one of MPII's 16 joints (such as the head top, left elbow, or right knee) and the two columns hold its x and y coordinates.
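Because the images are resized to 224 x 224, the labeled keypoints must be rescaled by the same factors, or they will no longer line up with the image. A minimal sketch, assuming (x, y) pixel coordinates and a hypothetical helper named `rescale_keypoints`. The image itself would typically be resized with OpenCV, for example `cv2.resize(image, (224, 224))`; note that OpenCV takes the target size as (width, height).

```python
import numpy as np

TARGET_SIZE = 224  # matches the (224, 224, 3) input shape described above


def rescale_keypoints(keypoints, original_hw, target_hw=(TARGET_SIZE, TARGET_SIZE)):
    """Map (x, y) keypoints from the original image size to the resized size.

    keypoints: (16, 2) array for MPII, x in column 0 and y in column 1.
    original_hw, target_hw: (height, width) tuples.
    """
    kp = np.asarray(keypoints, dtype=float)
    scale_x = target_hw[1] / original_hw[1]   # width ratio scales x
    scale_y = target_hw[0] / original_hw[0]   # height ratio scales y
    return kp * np.array([scale_x, scale_y])
```

Stacking the resized images and rescaled keypoints for all N samples gives arrays of shape (N, 224, 224, 3) and (N, 16, 2).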

5. Standardization of the features: To improve the performance of the model, we can standardize the input features by subtracting the mean and dividing by the standard deviation.

6. Splitting the dataset: Next, we can split the dataset into training and testing sets using the train_test_split() function from scikit-learn.
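Steps 5 and 6 can be combined as below. One design choice differs slightly from the order listed: the split happens first, and the per-channel mean and standard deviation are computed on the training set only, so statistics from the test images do not leak into preprocessing. `train_test_split` is scikit-learn's function; `split_and_standardize` is a hypothetical wrapper, and the 80/20 split and random seed are arbitrary choices.

```python
import numpy as np
from sklearn.model_selection import train_test_split


def split_and_standardize(images, keypoints, test_size=0.2, seed=42):
    """Split into train/test sets, then standardize with training statistics.

    images: (N, 224, 224, 3) array; keypoints: (N, 16, 2) array.
    Returns the standardized splits plus the mean/std, which must be reused
    on any new image at inference time.
    """
    X_train, X_test, y_train, y_test = train_test_split(
        images, keypoints, test_size=test_size, random_state=seed)
    X_train = X_train.astype(np.float32)
    X_test = X_test.astype(np.float32)
    mean = X_train.mean(axis=(0, 1, 2))        # one value per RGB channel
    std = X_train.std(axis=(0, 1, 2)) + 1e-7   # epsilon avoids division by zero
    X_train = (X_train - mean) / std
    X_test = (X_test - mean) / std             # test set uses training statistics
    return X_train, X_test, y_train, y_test, mean, std
```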

7. Building a human pose detection model

Building a human pose estimation model using deep learning involves designing and training a deep neural network. In this tutorial, we will use a pretrained OpenPose model, one of the most popular and accurate models for human pose estimation, for inference rather than training a network from scratch.

Here are the steps to build the human pose estimation model:

8. Install the required libraries: To start, install the following libraries:

- TensorFlow

- Keras

- NumPy

- Matplotlib

- OpenCV

9. Download the OpenPose model: You can download the OpenPose model weights from its official repository on GitHub, which distributes them in Caffe format (.caffemodel with a .prototxt); community conversions to other formats, such as TensorFlow's .pb, are also available. In this tutorial, we will use a .pb file.

10. Load the model: Once you have downloaded the model, you can load it into your code using the TensorFlow library.

11. Define the input and output nodes: After loading the model, you need to identify its input and output nodes. The input node receives the image that you want to perform pose estimation on, and the output node produces the estimated poses (for OpenPose, keypoint heatmaps and part affinity fields).
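A minimal sketch of steps 10 and 11, assuming the model is a frozen TensorFlow graph (.pb) loaded through TensorFlow's v1 compatibility API. The file name `graph_opt.pb` and the tensor names `image:0` and `Openpose/concat_stage7:0` in the commented usage are assumptions that depend on which converted model you downloaded; inspect the graph's node names before relying on them. `load_frozen_graph` and `get_io_tensors` are hypothetical helper names.

```python
import tensorflow as tf


def load_frozen_graph(pb_path):
    """Load a frozen TensorFlow graph (.pb file) into a new tf.Graph."""
    graph_def = tf.compat.v1.GraphDef()
    with tf.io.gfile.GFile(pb_path, "rb") as f:
        graph_def.ParseFromString(f.read())
    graph = tf.Graph()
    with graph.as_default():
        # name="" keeps the original node names (no import prefix).
        tf.compat.v1.import_graph_def(graph_def, name="")
    return graph


def get_io_tensors(graph, input_name, output_name):
    """Look up the input and output tensors by name, e.g. "image:0"."""
    return graph.get_tensor_by_name(input_name), graph.get_tensor_by_name(output_name)


# Hypothetical usage; list candidate names with
# [n.name for n in graph.as_graph_def().node]
# graph = load_frozen_graph("graph_opt.pb")
# input_tensor, output_tensor = get_io_tensors(graph, "image:0", "Openpose/concat_stage7:0")
# sess = tf.compat.v1.Session(graph=graph)
```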

12. Prepare the input image: Before feeding the input image to the model, you need to preprocess it, for example by resizing it to a fixed size and normalizing its pixel values.

13. Feed the input image to the model: After preprocessing the input image, you can feed it to the model and obtain the estimated poses.
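The sketch below feeds a batch through the model and reads one location per keypoint from the output heatmaps. To stay independent of the inference backend, it takes a callable `run_model`; with a TensorFlow session this could be `lambda b: sess.run(output_tensor, feed_dict={input_tensor: b})`. It assumes an OpenPose COCO-style output of shape (1, h, w, 57) whose first 18 channels are keypoint heatmaps, followed by a background channel and part affinity fields. Both that channel layout and the single-person decoding are simplifying assumptions: full OpenPose uses the part affinity fields to group keypoints into multiple people. `estimate_keypoints` is a hypothetical helper name.

```python
import numpy as np


def estimate_keypoints(run_model, batch, image_hw, num_keypoints=18, threshold=0.1):
    """Run the network and return one (x, y, score) per keypoint, or None.

    run_model: callable mapping a (1, H, W, 3) batch to an output of shape
               (1, h, w, C) whose first `num_keypoints` channels are heatmaps.
    image_hw: (height, width) of the original image, used to rescale peaks.
    """
    output = np.asarray(run_model(batch))[0]       # drop the batch dimension
    heatmaps = output[..., :num_keypoints]         # (h, w, K)
    h, w = heatmaps.shape[:2]
    results = []
    for k in range(num_keypoints):
        hm = heatmaps[..., k]
        y, x = np.unravel_index(np.argmax(hm), hm.shape)
        score = float(hm[y, x])
        if score < threshold:
            results.append(None)                   # keypoint not detected
            continue
        # Scale the peak from heatmap resolution back to original image pixels.
        results.append((float(x * image_hw[1] / w), float(y * image_hw[0] / h), score))
    return results
```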

14. Visualize the output: Finally, you can visualize the estimated poses on the input image using OpenCV or Matplotlib.

Applications of Human Pose Estimation

- Gaming and entertainment: Human pose estimation using deep learning is widely used in the gaming and entertainment industries to create interactive games and animations. It helps in detecting and tracking the movement of players to control the actions of the game characters.

- Health and fitness: Human pose estimation is used in various health and fitness applications to monitor the posture and movements of individuals during exercise or physical therapy. It can help users correct their posture and reduce the risk of injury.

- Sports analysis: Human pose estimation is used in sports analysis to track the movement and gestures of athletes. It helps in analyzing the techniques and identifying the strengths and weaknesses of the athletes.

- Surveillance and security: Human pose estimation using deep learning is used in surveillance and security systems to detect and track the movements of individuals in restricted areas. It helps in identifying suspicious activities and preventing crimes.

- Robotics and automation: Human pose estimation is used in robotics and automation to enable robots to interact with humans and perform tasks in a human-like manner. It helps in improving the efficiency and accuracy of the robotic systems.

- Virtual and augmented reality: Human pose estimation using deep learning is used in virtual and augmented reality applications to create immersive experiences. It helps in tracking the movements of users and creating realistic virtual environments.

- Fashion and retail: Human pose estimation is used in the fashion and retail industries to create virtual try-on solutions. It can provide customers with personalized shopping experiences and help reduce return rates.

Conclusion

- Human pose estimation using deep learning is a rapidly growing field in computer vision with a wide range of applications across industries.

- With advances in deep learning techniques and the availability of large-scale datasets, the accuracy and efficiency of human pose estimation models have improved significantly over the years.

- The availability of open-source libraries and pre-trained models has made it easier for developers to integrate human pose estimation into their applications.

- With the increasing demand for human-like interactions between machines and humans, the importance of human pose estimation is expected to grow even more in the coming years.