Jenkins Version Control

Overview

Jenkins is an open-source automation server that provides continuous integration and continuous delivery (CI/CD). When used in conjunction with version control systems like Git, Jenkins is a powerful tool for managing and coordinating the software development lifecycle. Integrating the two strengthens these capabilities and brings a range of benefits that promote a more collaborative, organized, and effective workflow.

Setting Up Jenkins and Version Control Integration

Integrating Jenkins with a version control system typically involves linking your Jenkins server to your repository, hosted on a platform such as GitHub or GitLab. This enables Jenkins to monitor the repository for changes and automatically trigger predefined workflows (usually defined in Jenkinsfiles or other pipeline scripts) when new commits or pull requests arrive. Setting up this integration usually involves configuring Jenkins plugins designed to communicate with the chosen version control system.

Once configured, Jenkins can pull in code changes and build, test, and deploy applications according to the defined workflows. Before diving into the technical aspects of integration, however, it's crucial to select the right version control system for your project. Git, a distributed version control system, is the most popular choice due to its flexibility, speed, and branching capabilities. GitHub and GitLab are widely used platforms that host Git repositories and add collaboration features on top of them.

Configuration and Integration:

- Installing Jenkins Plugins:

Jenkins boasts an extensive plugin ecosystem that allows users to extend its functionality. To integrate Jenkins with a version control system, you'll need to install plugins that enable communication between Jenkins and your chosen system. For Git, the Git plugin provides the core checkout support, while plugins like the "GitHub Plugin" or "GitLab Plugin" add integration with those hosting platforms, such as receiving webhook events.

- Creating a Jenkins Job or Pipeline:

Once the plugins are installed, the next step is to set up a Jenkins job or pipeline. A job or pipeline defines the steps Jenkins needs to take in response to code changes, such as building, testing, packaging, and deploying the application.

- Configuring Job Triggers:

One of the primary advantages of version control integration is the ability to trigger automated workflows on specific events, such as pushing code changes, creating pull requests, or merging branches. Jenkins can be configured to listen for these events and respond accordingly.

- Defining the Jenkinsfile:

Central to Jenkins' version control integration is the concept of the Jenkinsfile. This file is typically stored in your version control repository and defines the complete pipeline as code. It outlines the stages, steps, and conditions for building, testing, and deploying your application.

- Automated Workflow Execution:

Once the Jenkinsfile is in place and your job or pipeline is configured, Jenkins can autonomously execute the defined workflow whenever a relevant event occurs in the version control repository. This streamlines the process and ensures consistency and reproducibility in the development lifecycle.
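As a minimal sketch of the trigger step, a Pipeline job that loads its Jenkinsfile from Git can declare how it reacts to repository events. This assumes a standard (non-multibranch) Pipeline job, the GitHub plugin (which provides the `githubPush()` trigger), and a webhook on the repository pointing at your Jenkins server. Multibranch pipelines receive events through their branch source instead and typically omit the `triggers` block.

```groovy
// Jenkinsfile: trigger configuration for a Pipeline job that loads this file from Git.
// Assumes the GitHub plugin is installed and the repository has a webhook pointing at Jenkins.
pipeline {
    agent any

    triggers {
        // Start a build when GitHub delivers a push event through the webhook.
        githubPush()
        // Alternative when webhooks are not possible: poll the repository about every 5 minutes.
        // pollSCM('H/5 * * * *')
    }

    stages {
        stage('Build') {
            steps {
                // Declarative pipelines check out the triggering revision automatically;
                // print it to confirm which commit is being built.
                sh 'git log -1 --oneline'
            }
        }
    }
}
```

Triggers declared in a Jenkinsfile take effect only after the job has run once, because Jenkins reads them from the file during a build.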

Benefits of Version Control for Jenkins

The integration of Jenkins and version control systems introduces a multitude of benefits that significantly impact the development process:

- Real-time Automation:

By linking Jenkins with version control, you minimize manual intervention. Jenkins monitors the repository for changes and triggers automated workflows as soon as they are detected, saving developers valuable time.

- Consistency and Reproducibility:

Defining the pipeline in a Jenkinsfile ensures that each build and deployment follows the same steps, making results consistent and reproducible. This consistency minimizes discrepancies between development, testing, and production environments.

- Collaboration Enhancement:

Multiple developers can work on different areas of a project at the same time. Integration ensures that every code change is automatically built and tested before it is merged and deployed, which makes collaboration easier and reduces integration problems.

- Rapid Feedback Loop:

Automated testing and deployment provide rapid feedback to developers. If a commit introduces issues, developers are notified immediately, enabling quicker bug resolution.

- Reduced Risk:

Automated testing and deployment help catch issues early in the development process, reducing the risk of defects reaching production environments.

- Traceability:

By associating builds and deployments with specific commits, it becomes easier to trace issues back to their origin, simplifying debugging and troubleshooting.
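To make traceability concrete, a pipeline can stamp each build with the commit it was built from and fingerprint the artifacts it produces. The sketch below assumes an npm project whose build writes its output to a `dist/` directory; the `COMMIT` file name is a hypothetical convention, not a Jenkins feature.

```groovy
// Jenkinsfile fragment: record which commit each build and artifact came from.
pipeline {
    agent any
    stages {
        stage('Build') {
            steps {
                sh 'npm ci && npm run build'
                script {
                    // Short SHA of the commit that was checked out for this build.
                    def shortSha = sh(script: 'git rev-parse --short HEAD', returnStdout: true).trim()
                    // Shown next to the build number in the Jenkins UI.
                    currentBuild.description = "commit ${shortSha}"
                    // Hypothetical marker file shipped alongside the build output.
                    writeFile file: 'COMMIT', text: shortSha
                }
                // Fingerprinting lets Jenkins track where these exact files are used later.
                archiveArtifacts artifacts: 'dist/**,COMMIT', fingerprint: true
            }
        }
    }
}
```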

Jenkinsfile and Pipeline as Code

Jenkins, a versatile automation server, takes automation to the next level by introducing the concept of "Pipeline as Code" through the use of Jenkinsfile.

A Jenkinsfile is a key component of Jenkins that defines the entire Continuous Integration/Continuous Deployment (CI/CD) pipeline as code. It encapsulates the complete workflow, from source code integration to deployment, allowing teams to automate and manage their software delivery process efficiently. This approach, central to the DevOps philosophy, lets developers define, version, and manage their CI/CD process as code, giving them fine-grained control and consistency.

A Jenkinsfile is a text file that resides in your version control repository alongside your application code. It serves as the blueprint for your entire software delivery pipeline. In essence, a Jenkinsfile defines the various stages, steps, triggers, and conditions for building, testing, and deploying your application.

Pipeline as Code

At its core, Pipeline as Code is a methodology in which the entire CI/CD process is defined, managed, and executed using code. This code-based approach reduces manual intervention, minimizes errors, and enhances the reliability of software delivery. The "pipeline" in this context refers to the sequence of stages and steps that code traverses from development to production.

Some Key Components:

-

Declarative Pipelines:

Declarative pipelines provide a structured and intuitive way to define your pipeline. By using a series of predefined steps and directives, teams can create a pipeline with minimal effort. -

Scripted Pipelines:

For more advanced customization, scripted pipelines offer the flexibility of Groovy scripting. This allows teams to create complex logic, conditionals, and dynamic behaviors within the pipeline. -

Version Control Integration:

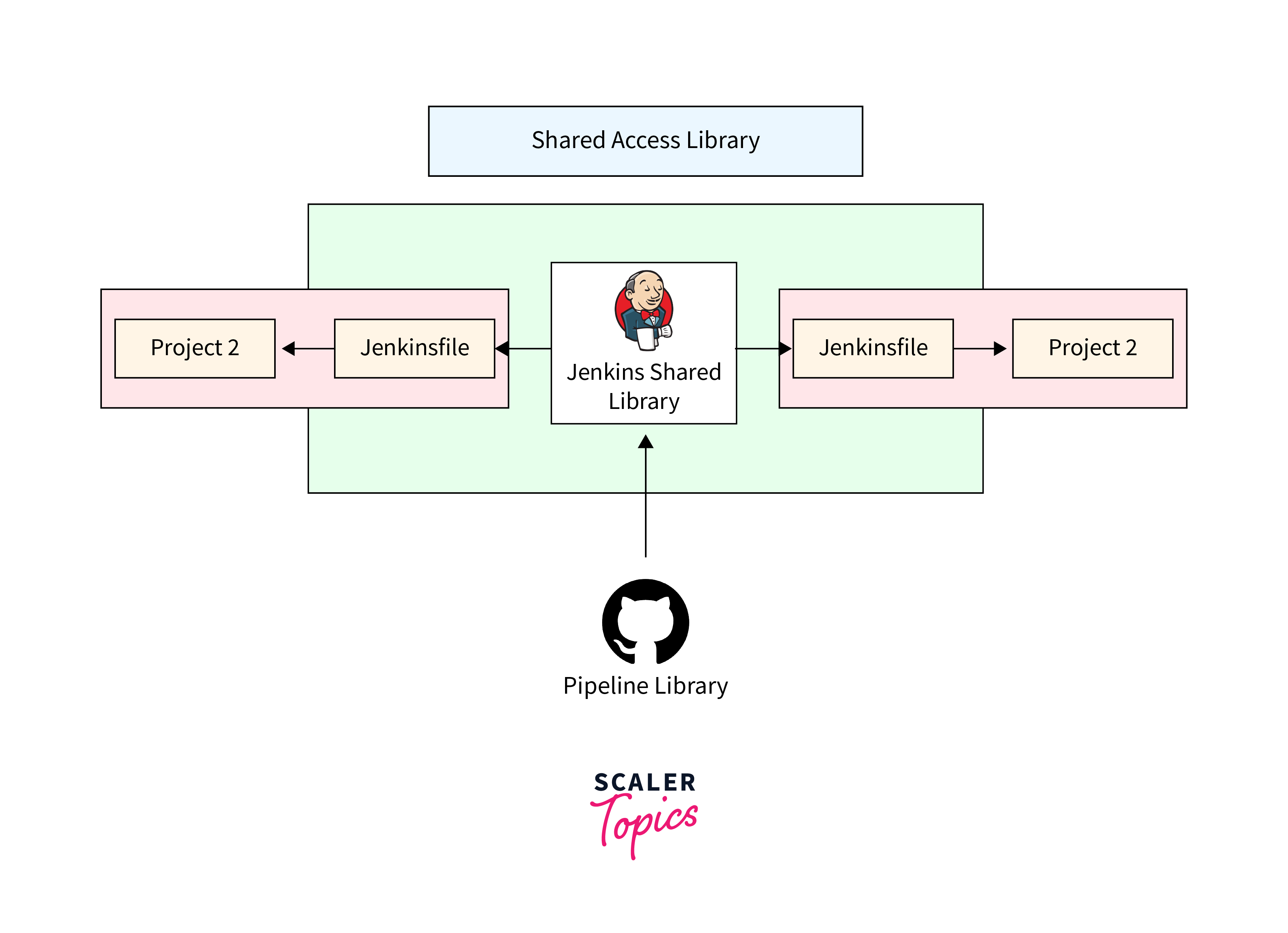

The essence of Pipeline as Code lies in version control integration. Pipelines are stored alongside application code in repositories, making them subject to the same versioning, collaboration, and traceability practices. -

Reusable Components:

Shared libraries and reusable components can be leveraged to standardize certain stages or steps across different pipelines. This promotes consistency and reduces duplication.
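To make the contrast between the first two components concrete, here is a comparable pipeline written both ways. A Jenkinsfile contains one style or the other; the `make` targets and suite names are placeholders for your own build and test commands.

```groovy
// Declarative syntax: a fixed structure of pipeline/agent/stages/steps blocks.
// The source is checked out automatically before the first stage.
pipeline {
    agent any
    stages {
        stage('Build') {
            steps { sh 'make build' }
        }
        stage('Test') {
            steps { sh 'make test' }
        }
    }
}
```

```groovy
// Scripted syntax: plain Groovy inside node {}, so loops and conditionals can appear anywhere.
node {
    stage('Checkout') {
        // Scripted pipelines must check out the source explicitly.
        checkout scm
    }
    stage('Build') {
        sh 'make build'
    }
    // Dynamic behavior: generate one test stage per (hypothetical) test suite.
    for (suite in ['unit', 'integration']) {
        stage("Test ${suite}") {
            sh "make test-${suite}"
        }
    }
}
```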

Creating and Managing Jenkinsfile

A Jenkinsfile is more than just lines of code; it's the blueprint that governs the deployment journey of your application. Written in a Groovy-based Domain-Specific Language (DSL), the Jenkinsfile outlines the stages, steps, triggers, and conditions that your code passes through from commit to deployment. Because the process is scripted, it can be automated and repeated reliably.

Creating a Jenkinsfile involves defining a sequence of stages that reflect the milestones your code passes through. Common stages include "Build," "Test," "Deploy," and "Notify." Within each stage, individual steps perform specific actions, such as checking out code, running tests, packaging artifacts, and deploying to target environments. The result is a structured, granular representation of your CI/CD process that Jenkins can execute automatically.

Demo Jenkinsfile
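The pipeline described in this section might look like the following sketch. It assumes a Node.js project whose `package.json` defines `build` and `test` scripts, an agent with `npm` and an authenticated `kubectl` client, and Kubernetes manifests in a `k8s/` directory applied to a `staging` namespace; all of these names are illustrative.

```groovy
// Jenkinsfile: basic five-stage pipeline (Checkout, Build, Test, Deploy, Notify).
pipeline {
    agent any

    options {
        // Disable the implicit checkout; the Checkout stage does it explicitly.
        skipDefaultCheckout()
    }

    stages {
        stage('Checkout') {
            steps {
                // Checks out the same Git revision this Jenkinsfile was loaded from.
                checkout scm
            }
        }
        stage('Build') {
            steps {
                sh 'npm ci'
                sh 'npm run build'
            }
        }
        stage('Test') {
            steps {
                sh 'npm test'
            }
        }
        stage('Deploy') {
            steps {
                // Assumes kubectl is installed and authenticated on the agent.
                sh 'kubectl apply -f k8s/ --namespace staging'
            }
        }
        stage('Notify') {
            steps {
                echo "Pipeline for ${env.JOB_NAME} #${env.BUILD_NUMBER} completed."
            }
        }
    }
}
```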

In this example, the Jenkinsfile outlines a basic pipeline with five stages:

- Checkout:

This stage checks out the code from the version control repository (assuming you're using Git).

- Build:

The code is built using npm commands. This could be replaced with any build tool relevant to your project.

- Test:

Automated tests are run to ensure the code's quality and functionality.

- Deploy:

The application is deployed to a staging environment using Kubernetes commands. Adjust this part based on your deployment strategy.

- Notify:

A simple notification is printed to indicate the completion of the pipeline.

Managing Jenkinsfile:

- Version Control Integration:

Jenkinsfiles are code, and just like your application code, they should reside in version control repositories. This practice enables versioning, collaboration, and the ability to roll back changes if needed.

- Single Source of Truth:

Maintain a single Jenkinsfile per application or project. This simplifies management and ensures that your pipeline is focused and tailored to the specific requirements of that project.

- Pipeline Templating:

If your organization manages multiple projects with similar pipeline structures, consider creating reusable pipeline templates. This promotes consistency and reduces duplication of effort.

- Code Review:

Treat your Jenkinsfile like any other code. Implement a code review process to ensure quality, adherence to standards, and alignment with your team's goals.

- Documentation:

Include comments in your Jenkinsfile to provide context and explanations for stages, steps, and decisions. This documentation aids in understanding and troubleshooting.

- Pipeline Visualization:

Use visualization tools such as the Blue Ocean plugin or the Pipeline Graph View plugin to analyze your pipeline's flow. A visual representation helps identify bottlenecks and areas for optimization.

- Testing:

Jenkinsfiles can be tested just like any other code. Write unit tests or integration tests to ensure that your pipeline behaves as expected, catching issues early in the development process.

- Security Considerations:

Be mindful of sensitive information such as passwords or API tokens. Instead of writing them into the Jenkinsfile, store them in the Jenkins credentials store and bind them to the build at runtime, or manage environment variables securely.

- Backup and Recovery:

Back up your Jenkins configuration and Jenkinsfiles regularly. This ensures that you can quickly restore your CI/CD setup in case of server failures.
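One way to act on the Testing point is the open-source JenkinsPipelineUnit library, which runs a Jenkinsfile against mocked pipeline steps inside an ordinary unit test. The sketch below assumes that library and JUnit 4 are on the test classpath and that the Jenkinsfile is declarative and sits at the repository root; setup details differ between library versions, and steps the library does not mock by default may need extra `helper.registerAllowedMethod` calls, so treat it as a starting point rather than a drop-in test.

```groovy
// src/test/groovy/JenkinsfileTest.groovy (hypothetical location)
import com.lesfurets.jenkins.unit.declarative.DeclarativePipelineTest
import org.junit.Before
import org.junit.Test

class JenkinsfileTest extends DeclarativePipelineTest {

    @Override
    @Before
    void setUp() throws Exception {
        super.setUp()
        // Replace real shell execution with a stub that only prints the command.
        helper.registerAllowedMethod('sh', [String], { String cmd -> println "sh: ${cmd}" })
        // Provide a dummy 'scm' object so 'checkout scm' can be called.
        binding.setVariable('scm', [:])
    }

    @Test
    void pipelineCompletesSuccessfully() {
        runScript('Jenkinsfile')
        // Fails the test if the pipeline marked the build as failed.
        assertJobStatusSuccess()
        // Prints every pipeline step that was invoked, useful when debugging the test.
        printCallStack()
    }
}
```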

In a real-world scenario, you would likely include additional steps, error handling, environment-specific configurations, and integrations with tools such as version control systems, Docker, and testing frameworks. This basic template and these guidelines give you a starting point that you can extend and customize to your project's requirements.

Review and Approval Process

The Jenkinsfile, which controls how your code moves through phases such as building, testing, and deployment, is the foundation of your CI/CD automation. To maintain the integrity and dependability of your software delivery process, make sure this code is carefully reviewed and approved before changes to it take effect in the pipeline. A thorough review and approval procedure offers the following advantages:

- Quality Assurance:

Reviews identify potential errors, logic flaws, or inconsistencies in your Jenkinsfile, ensuring that the automation behaves as expected.

- Security and Compliance:

Reviewing Jenkinsfiles helps identify security vulnerabilities and ensures adherence to compliance requirements.

- Alignment with Standards:

The review process ensures that your Jenkinsfile follows coding and organizational standards, maintaining consistency across the pipeline.

- Documentation:

Reviews provide an opportunity to document the intent of your pipeline stages and steps, making it easier for team members to understand and contribute.

An effective review and approval process for Jenkinsfiles is essential to ensure the reliability, security, and effectiveness of your CI/CD pipeline. By adhering to best practices, involving diverse reviewers, and fostering collaborative discussions, you can streamline this crucial aspect of your development process. A thorough review process reduces errors, improves documentation, and creates a more effective CI/CD ecosystem, which ultimately results in the successful delivery of high-quality software.
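Besides reviewing Jenkinsfile changes through pull requests, Jenkins can enforce an approval checkpoint inside the pipeline itself: the declarative `input` directive pauses a stage until an authorized user confirms it. In this sketch, `release-managers` is a hypothetical user or group name, `deploy.sh` is a placeholder script, and the `branch` condition assumes a multibranch pipeline.

```groovy
pipeline {
    agent any
    stages {
        stage('Test') {
            steps {
                sh 'npm test'
            }
        }
        stage('Deploy to Production') {
            when {
                // Evaluate the branch condition first, so non-main branches are never asked for approval.
                beforeInput true
                branch 'main'
            }
            // Pause until a member of 'release-managers' approves (or aborts) the deployment.
            input {
                message 'Deploy this build to production?'
                ok 'Deploy'
                submitter 'release-managers'
            }
            steps {
                sh './deploy.sh production'   // hypothetical deployment script
            }
        }
    }
}
```

With a pipeline-level `agent`, the executor stays allocated while the build waits for approval; declaring `agent none` at the top and agents per stage avoids holding it.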

Jenkinsfile Best Practices

- Keep Jenkinsfiles Concise and Focused:

Each Jenkinsfile should focus on one application or project, promoting clarity and maintainability.

- Store Jenkinsfiles in Version Control:

Keep Jenkinsfiles alongside your application code in version control. This ensures versioning, traceability, and collaboration.

- Use Declarative Syntax:

If possible, opt for Declarative Syntax. It simplifies pipeline definition and promotes readability.

- Parameterization for Flexibility:

Use parameters to make your pipeline adaptable to different scenarios or environments. This reduces the need for duplicate pipelines.

- Implement Tests for Jenkinsfiles:

Just like application code, write tests for your Jenkinsfiles. Automated tests ensure that your pipeline behaves as expected.

- Avoid Hardcoding Credentials:

Use Jenkins' built-in credential management system to handle sensitive information securely.

- Avoid Inline Scripts:

Whenever possible, avoid using inline scripts directly in the Jenkinsfile. Instead, use script files or shared libraries.

- Regular Review and Refactoring:

Regularly review and refactor your Jenkinsfiles to incorporate new practices, optimize performance, and adapt to changing needs.

- Approval Workflow:

Implement an approval process for changes to Jenkinsfiles. This ensures that changes are thoroughly reviewed before being applied.
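Two of these practices, parameterization and avoiding hardcoded credentials, can be sketched in a single Jenkinsfile. It assumes the Credentials Binding plugin and a Secret Text credential stored under the hypothetical ID `deploy-api-token`; `deploy.sh` and the `test:integration` npm script are placeholders.

```groovy
pipeline {
    agent any

    parameters {
        // One pipeline serves several environments instead of one copy per environment.
        choice(name: 'TARGET_ENV', choices: ['dev', 'staging', 'production'], description: 'Where to deploy')
        booleanParam(name: 'RUN_INTEGRATION_TESTS', defaultValue: true, description: 'Run the slower integration suite')
    }

    stages {
        stage('Integration Tests') {
            when { expression { params.RUN_INTEGRATION_TESTS } }
            steps {
                sh 'npm run test:integration'   // hypothetical npm script
            }
        }
        stage('Deploy') {
            steps {
                // The token is read from the Jenkins credentials store, not written in this file.
                withCredentials([string(credentialsId: 'deploy-api-token', variable: 'API_TOKEN')]) {
                    // Single quotes: the shell expands $TARGET_ENV, and the hypothetical deploy.sh
                    // reads API_TOKEN from its environment. Jenkins masks the token in the log.
                    sh './deploy.sh "$TARGET_ENV"'
                }
            }
        }
    }
}
```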

Jenkinsfile best practices play a pivotal role in shaping the success of your CI/CD pipelines. By adhering to these guidelines, you can build pipelines that are not only reliable and efficient but also adaptable to the evolving landscape of software development.

Job Configuration as Code

Job Configuration as Code (JCAC) involves representing Jenkins job configurations as code snippets, scripts, or files, often using domain-specific languages or configuration formats. This code is then stored in version control repositories alongside application code, enabling the same benefits of versioning, collaboration, and traceability. By treating job configurations as code, you leverage the strengths of version control systems: changes are tracked, documented, and reversible, ensuring consistency and visibility.

- Collaboration:

Job configurations become collaborative artifacts. Multiple team members can review, suggest changes, and contribute, fostering a sense of shared ownership.

- Reproducibility:

JCAC ensures that job configurations are repeatable across environments. This minimizes discrepancies between development, testing, and production setups.

- Consistency:

With JCAC, job configurations are maintained in a centralized location. This promotes standardization and prevents configuration drift.

- Automation:

JCAC seamlessly integrates with the principles of DevOps by enabling the automation of job creation, updates, and deletion.
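One common way to put job definitions under version control is the Job DSL plugin, which creates and updates jobs from Groovy scripts kept in a repository and processed by a "seed" job. A sketch, assuming that plugin is installed; the job name and repository URL are placeholders.

```groovy
// jobs/example_app.groovy: processed by a Job DSL seed job.
pipelineJob('example-app') {
    description('Builds example-app from the Jenkinsfile in its repository. Managed by Job DSL.')
    definition {
        cpsScm {
            scm {
                git {
                    remote {
                        url('https://github.com/example-org/example-app.git')   // placeholder URL
                    }
                    branch('*/main')
                }
            }
            // Location of the pipeline definition inside the repository.
            scriptPath('Jenkinsfile')
        }
    }
}
```

Because the seed job regenerates these jobs from the scripts, edits made directly in the UI are overwritten on the next seed run, which keeps the repository the single source of truth for job configuration.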

Version Control for Shared Libraries

Shared libraries in Jenkins allow you to encapsulate common logic, functions, and steps into reusable components. These libraries can be invoked across multiple pipelines, promoting consistency and reducing duplication of effort.

At the heart of shared libraries lies the principle of consistency. By encapsulating common logic, functions, and steps in one place, you ensure that every pipeline that uses these libraries adheres to the same standards. This consistency extends beyond individual pipelines to the entire CI/CD ecosystem, creating a uniform approach to building, testing, and deploying applications.

Efficiency is the natural byproduct of shared libraries. Rather than reinventing the wheel with every pipeline, developers can reuse existing, well-tested components. This not only saves time but also reduces the errors that duplicated code tends to introduce.

Shared libraries also pay off over time. As your organization evolves and new technologies emerge, these libraries can be updated, refined, and expanded to accommodate changing needs, keeping your CI/CD automation adaptable.
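Because a shared library lives in its own repository, each pipeline can pin the library version it uses: the `@Library('name@version')` annotation accepts a branch, tag, or commit, provided the library's "Allow default version to be overridden" setting is enabled. The sketch assumes a library registered in Jenkins under the hypothetical name `ci-shared-lib`, a tag `v1.2.0`, and a custom step `buildNodeApp` defined in the library's `vars/` directory.

```groovy
// vars/buildNodeApp.groovy in the shared library repository.
// Callable from any pipeline as buildNodeApp(...) once the library is loaded.
def call(Map config = [:]) {
    // Defaults apply when the caller does not override them.
    String buildScript = config.get('buildScript', 'build')
    boolean runTests = config.get('runTests', true)

    sh 'npm ci'
    sh "npm run ${buildScript}"
    if (runTests) {
        sh 'npm test'
    }
}
```

```groovy
// Jenkinsfile in an application repository.
// Pinned to tag v1.2.0: library changes reach this pipeline only when this line is updated.
@Library('ci-shared-lib@v1.2.0') _

pipeline {
    agent any
    stages {
        stage('Build') {
            steps {
                buildNodeApp(buildScript: 'build', runTests: true)
            }
        }
    }
}
```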

Jenkins Environment Management

Environment management in Jenkins covers the activities that shape the conditions in which your applications are built, tested, and run. These activities range from provisioning fresh instances to maintaining existing environments and decommissioning them when they are no longer needed. Each environment, whether for development, testing, staging, or production, is tailored to its specific purpose, configuration, and dependencies. Managing environments precisely brings several key benefits:

- Consistency:

Tailored environments provide a consistent backdrop for your CI/CD pipelines. This consistency reduces the chances of environment-related errors and discrepancies.

- Isolation:

Well-managed environments isolate the effects of changes, ensuring that developments in one environment do not inadvertently impact others.

- Optimal Resource Utilization:

Environment management optimizes the allocation of resources. Resources are provisioned and consumed as needed, reducing waste.

- Reproducibility:

Managed environments let you recreate and analyze issues accurately, speeding up troubleshooting and resolution.

- Scalability:

The orchestration of environments allows your CI/CD infrastructure to scale dynamically as your projects grow.

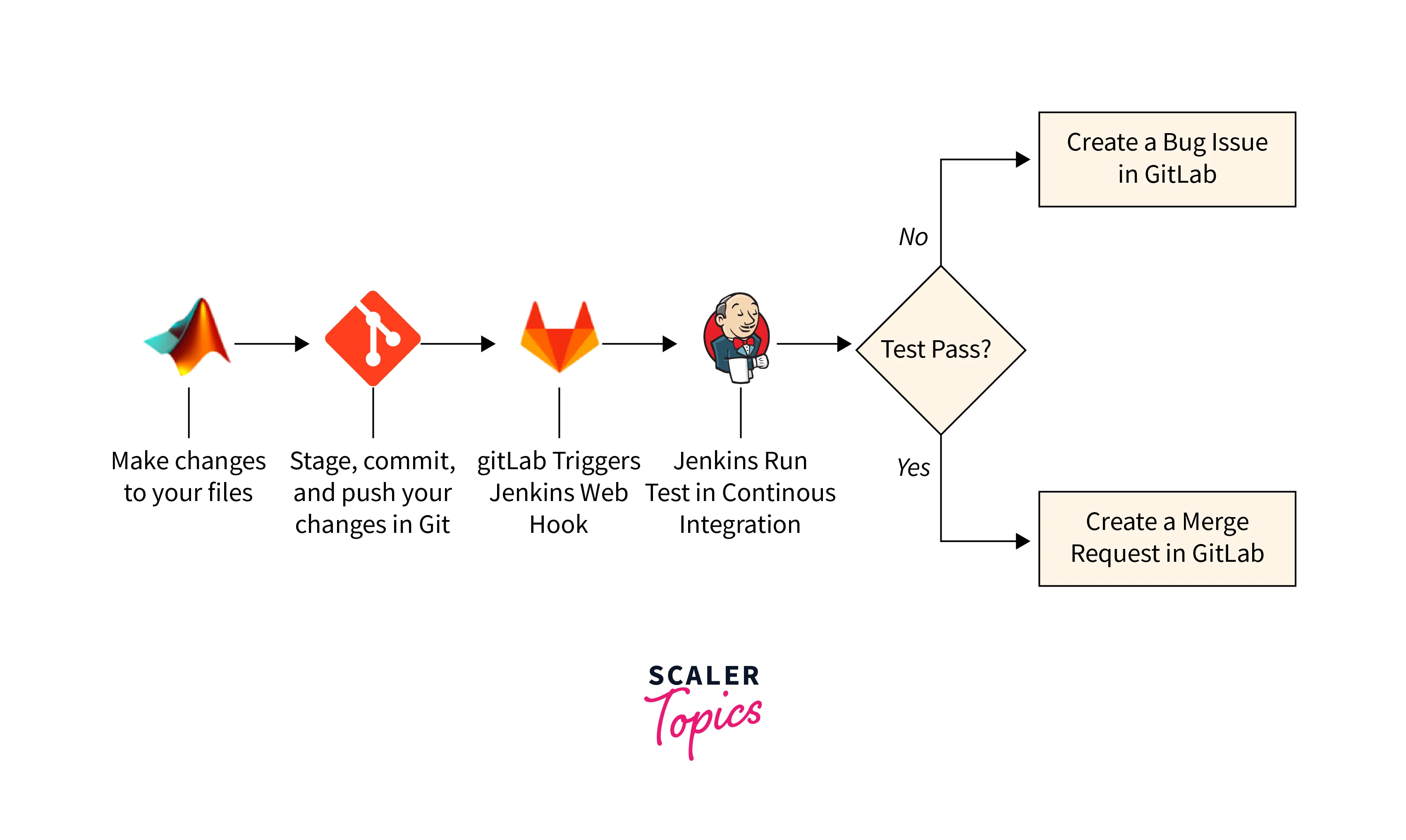
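Within a pipeline, two mechanisms support consistent, isolated environments: running steps inside a container image pinned to a specific version, and scoping environment variables to the stage that needs them. A sketch, assuming the Docker Pipeline plugin and a Docker-capable agent; the image tag, URL, and `deploy.sh` script are illustrative.

```groovy
pipeline {
    // No global agent: each stage declares the environment it runs in.
    agent none

    stages {
        stage('Build and Test') {
            // Every run uses the same pinned toolchain image, regardless of what is installed on the agent.
            agent { docker { image 'node:20-alpine' } }
            steps {
                sh 'npm ci'
                sh 'npm test'
            }
        }
        stage('Deploy to Staging') {
            agent any
            // Variables defined here exist only in this stage, so staging settings do not leak elsewhere.
            environment {
                APP_ENV = 'staging'
                API_URL = 'https://staging.example.com'   // placeholder URL
            }
            steps {
                sh './deploy.sh "$APP_ENV"'   // hypothetical deployment script
            }
        }
    }
}
```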

Continuous Integration and Version Control

Continuous integration relies on version control systems to manage code changes and coordinate work among developers. Version control systems such as Git, SVN, and Mercurial offer a formal framework for maintaining codebase history, branching, and merging. They enable developers to work concurrently on different features or fixes without their work immediately affecting the main codebase.

- Central Code Repository:

A common code repository acts as the hub to which all developers commit their changes. This centralization ensures that all team members work from the most recent version of the codebase.

- Branching Strategy:

A branching strategy lets developers work independently on a variety of features or fixes. Their changes stay separate from the main branch until they are ready for integration.

- Frequent Commits:

Developers regularly commit their code changes to their branches. This ensures that work is saved incrementally and is not lost if problems occur.

- Automated Testing:

CI is not complete without automated testing. Automated tests run whenever code changes are committed, to make sure no errors or regressions have been introduced.

- Build Automation:

Every time new code is pushed to the repository, the CI system automatically builds the application. This stage verifies that the code can be compiled and packaged successfully.

- Integration Testing:

After successful builds, integration tests are performed to verify that the different components of the application work together cohesively.

This practice forms the foundation of modern software development, enabling teams to deliver high-quality software at a rapid pace.
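In a Jenkins multibranch pipeline, which discovers branches and pull requests in the repository, one Jenkinsfile can apply these practices differently depending on where a commit lives. A sketch; the `make` targets are placeholders.

```groovy
pipeline {
    agent any
    stages {
        stage('Build') {
            // Every pushed branch and pull request is built.
            steps { sh 'make build' }
        }
        stage('Unit Tests') {
            steps { sh 'make test' }
        }
        stage('Integration Tests') {
            // Run for pull requests (change requests) and for the main branch.
            when {
                anyOf {
                    changeRequest()
                    branch 'main'
                }
            }
            steps { sh 'make integration-test' }
        }
        stage('Package') {
            // Only commits merged to main produce a release artifact.
            when { branch 'main' }
            steps { sh 'make package' }
        }
    }
}
```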

Jenkins Backup and Recovery

Jenkins backup and recovery is a critical process that safeguards your Continuous Integration and Continuous Deployment (CI/CD) pipelines from unexpected disruptions. It involves creating copies of the essential components of your Jenkins environment, including configurations, job definitions, plugins, and related data. These backups act as a safety net against risks such as hardware failures, data corruption, accidental deletions, and other incidents that could otherwise disrupt your CI/CD operations.

By regularly backing up your Jenkins setup, you keep up-to-date copies of everything that defines how your CI/CD processes run: the settings of your Jenkins instance, the definitions of your jobs and pipelines, and the plugins and tools integrated into your environment. If the server hosting Jenkins becomes inoperable or data is corrupted, these copies can be used to restore Jenkins to a working state. Similarly, if crucial job definitions or configurations are accidentally deleted or altered, reliable backups let you recover those settings quickly, without significant downtime.
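A scheduled pipeline is one way to automate such backups. The sketch assumes the job runs on a node that can read the Jenkins home directory (exposed to builds as `JENKINS_HOME`), which usually means the controller's built-in node with at least one executor; the `/var/backups/jenkins` destination is hypothetical, and many setups use a dedicated backup plugin such as ThinBackup or filesystem snapshots instead.

```groovy
// Nightly backup of the Jenkins home directory (configuration, job definitions, plugins).
pipeline {
    // 'built-in' is the controller's node label in recent Jenkins releases.
    agent { label 'built-in' }

    triggers {
        // Once a day at a hashed minute between 02:00 and 02:59.
        cron('H 2 * * *')
    }

    environment {
        BACKUP_DIR = '/var/backups/jenkins'   // hypothetical destination, ideally on separate storage
    }

    stages {
        stage('Archive') {
            steps {
                // Workspaces and build history are skipped to keep the archive small.
                // GNU tar exits with 1 when files change while being read; treat that as a warning only.
                sh '''
                    mkdir -p "$BACKUP_DIR"
                    tar -czf "$BACKUP_DIR/jenkins-home-$(date +%Y%m%d).tar.gz" \
                        --exclude='./workspace' \
                        --exclude='./jobs/*/builds' \
                        -C "$JENKINS_HOME" . || [ $? -eq 1 ]
                '''
            }
        }
        stage('Prune') {
            steps {
                // Keep roughly two weeks of archives.
                sh 'find "$BACKUP_DIR" -name "jenkins-home-*.tar.gz" -mtime +14 -delete'
            }
        }
    }
}
```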

Recovery Processes

- Server Restoration:

In case of server failure, follow a step-by-step procedure to restore your Jenkins environment from backups:

  - Identify Issue:

Diagnose the server failure and understand the scope of the problem.

  - Backup Check:

Ensure that recent and reliable backups of Jenkins data are available.

  - Recovery Environment:

Set up a new server or environment for restoration.

  - Data Restore:

Deploy Jenkins and restore the Jenkins home directory from backups.

- Configuration Restoration:

Restore configurations, plugins, and job definitions from backups to ensure the consistent functioning of your CI/CD pipelines:

  - Backup Retrieval:

Access the backups containing configurations, plugins, and job definitions.

  - Configuration Reapplication:

Use Jenkins Configuration as Code (JCasC) or manual setup to restore configurations.

  - Plugin Reinstallation:

Install the required plugins, restoring plugin files from the backup if necessary.

  - Job Definition:

Retrieve job definitions from version control or backup.
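After a restore, a quick way to confirm that job definitions and plugins came back is to list them from the Script Console (Manage Jenkins > Script Console), which runs Groovy with access to the Jenkins API. A sketch; compare its output with what you expect from the backup or with a list captured before the failure.

```groovy
// Run in Manage Jenkins > Script Console after restoring JENKINS_HOME.
import hudson.model.Job
import jenkins.model.Jenkins

def instance = Jenkins.get()

// All jobs, including those nested in folders, listed by full path.
def jobs = instance.getAllItems(Job)
println "Jobs found: ${jobs.size()}"
jobs.each { job -> println "  ${job.fullName}" }

// Installed plugins as "shortName:version", sorted in a new list (Jenkins' own list is not modified).
def plugins = instance.pluginManager.plugins.collect { "${it.shortName}:${it.version}".toString() }.sort()
println "Plugins installed: ${plugins.size()}"
plugins.each { println "  ${it}" }
```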


Conclusion

- Jenkins integration with version control systems empowers developers to automate and streamline their CI/CD processes. By closely monitoring repositories and triggering automated workflows, Jenkins elevates the efficiency of code integration and delivery.

- Blueprints of Automation:

Jenkinsfiles, at the heart of "Pipeline as Code," serve as the blueprints that guide code from development to deployment. By scripting the CI/CD process, Jenkinsfiles offer repeatability, consistency, and the power to adapt to evolving requirements.

- Implementing review and approval processes, adhering to Jenkinsfile best practices, and managing job configurations as code enrich the CI/CD journey with quality, security, and standardization.

- Pillars of Consistency:

The use of shared libraries encapsulates common logic and fosters uniformity across pipelines. By reusing predefined components, development teams can enhance consistency, save time, and minimize errors.

- Precise environment management contributes to seamless CI/CD orchestration. Tailored environments, resource optimization, and reproducibility collectively ensure the reliability of your pipeline.