Kafka Topic

What Is a Kafka Topic?

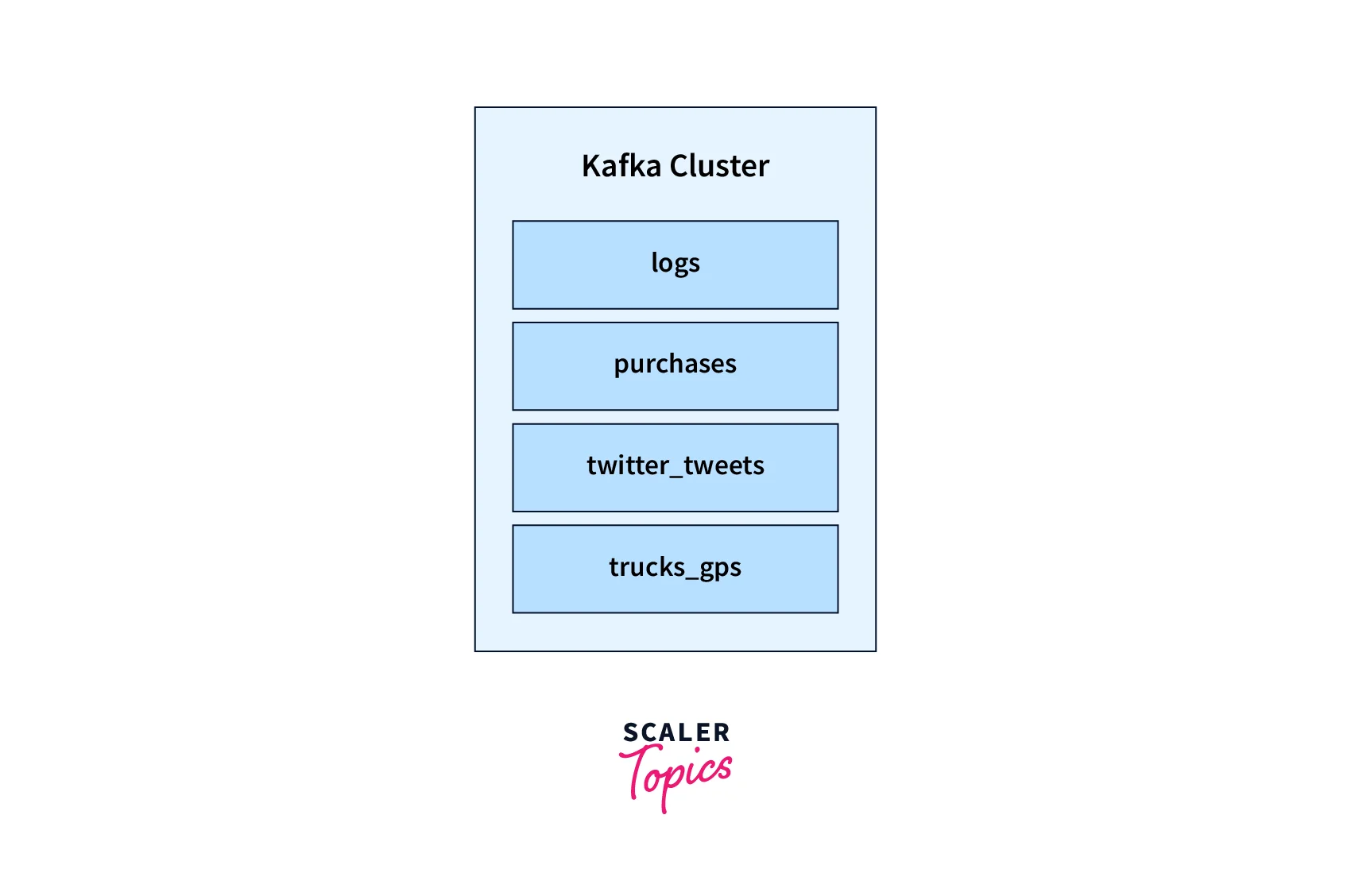

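A topic is a named stream of records that Kafka stores. Kafka topics are multi-producer and multi-subscriber: a topic can have zero, one, or many producers writing to it and zero, one, or many consumers subscribing to it. In the example, the logs and purchases topics hold an application's log messages and purchase data, respectively.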

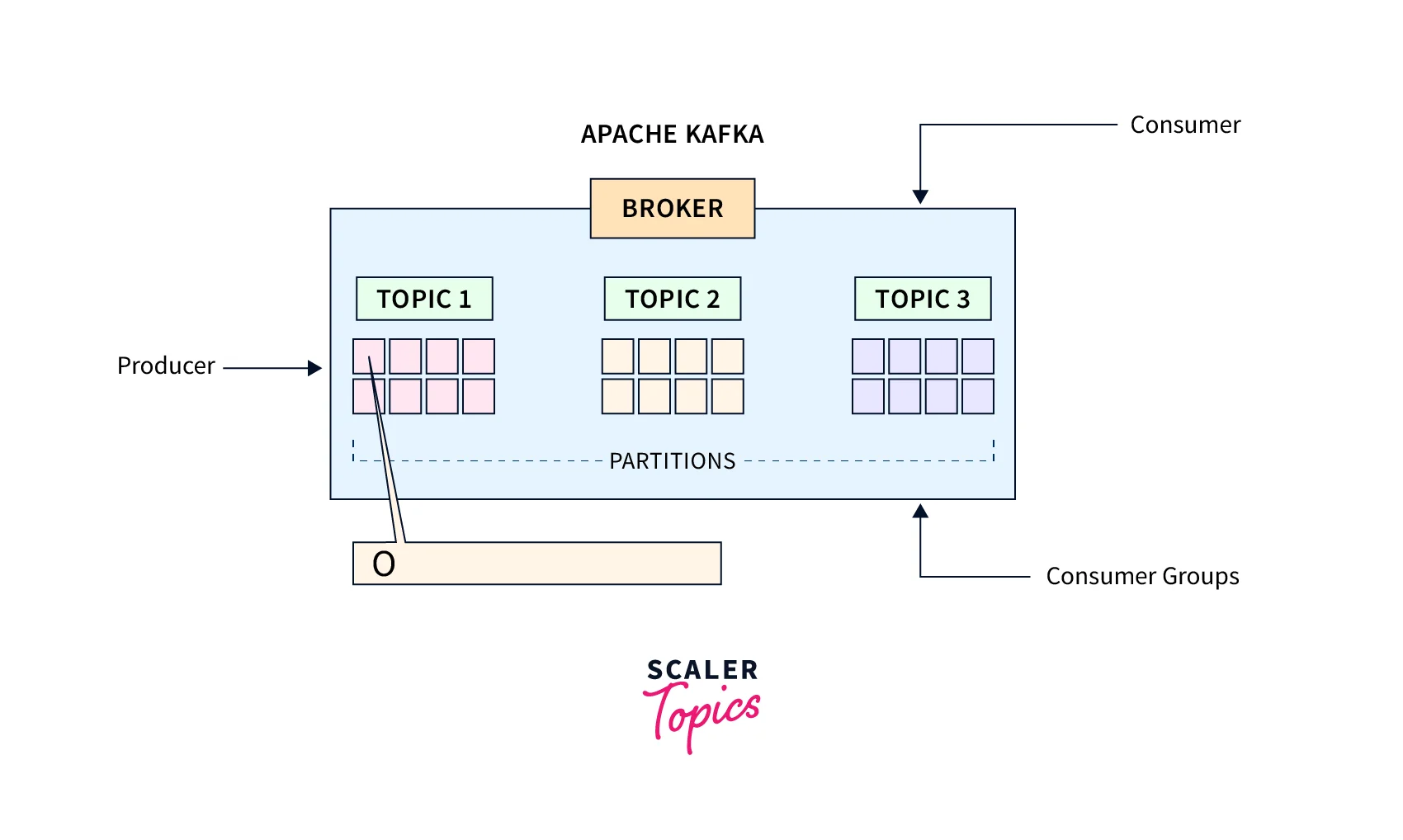

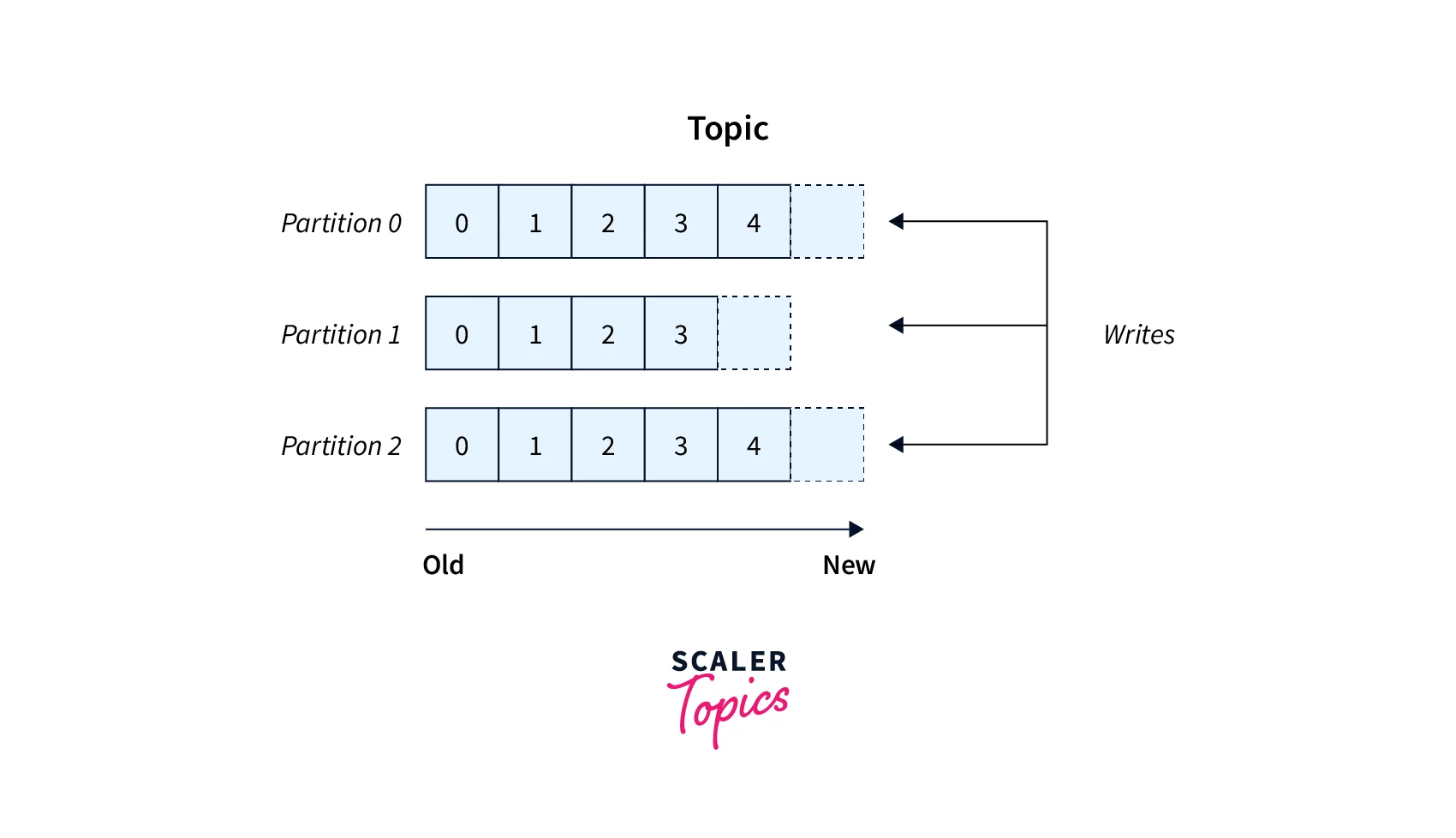

Kafka topics are partitioned, and the partitions are replicated across brokers. Each node in a Kafka cluster is called a broker. Partitions matter because they let a topic be written and read in parallel, which enables high message throughput. To keep track of the messages in each partition of a topic, Kafka assigns every message in a partition a sequential number called an offset.
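To make offsets concrete, here is a toy, in-memory model (a hypothetical class, not a Kafka API) in which each partition is an append-only list and a message's offset is simply its position in that list. It shows that offsets are assigned per partition, so two different partitions can each contain an offset 0.

```python
from dataclasses import dataclass, field


@dataclass
class PartitionedTopic:
    """Toy in-memory model of a topic: each partition is an append-only list."""

    name: str
    num_partitions: int
    partitions: list = field(init=False)

    def __post_init__(self) -> None:
        # Partitions are numbered 0..N-1; each starts as an empty log.
        self.partitions = [[] for _ in range(self.num_partitions)]

    def append(self, partition: int, value: str) -> int:
        """Append a message to one partition and return its offset there."""
        log = self.partitions[partition]
        log.append(value)
        return len(log) - 1  # offsets are sequential per partition, from 0

    def read(self, partition: int, offset: int) -> str:
        return self.partitions[partition][offset]


topic = PartitionedTopic("purchases", num_partitions=3)
print(topic.append(0, "order-1"))  # 0
print(topic.append(0, "order-2"))  # 1
print(topic.append(2, "order-3"))  # 0 (partition 2 keeps its own offsets)
```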

Kafka Topic Partition

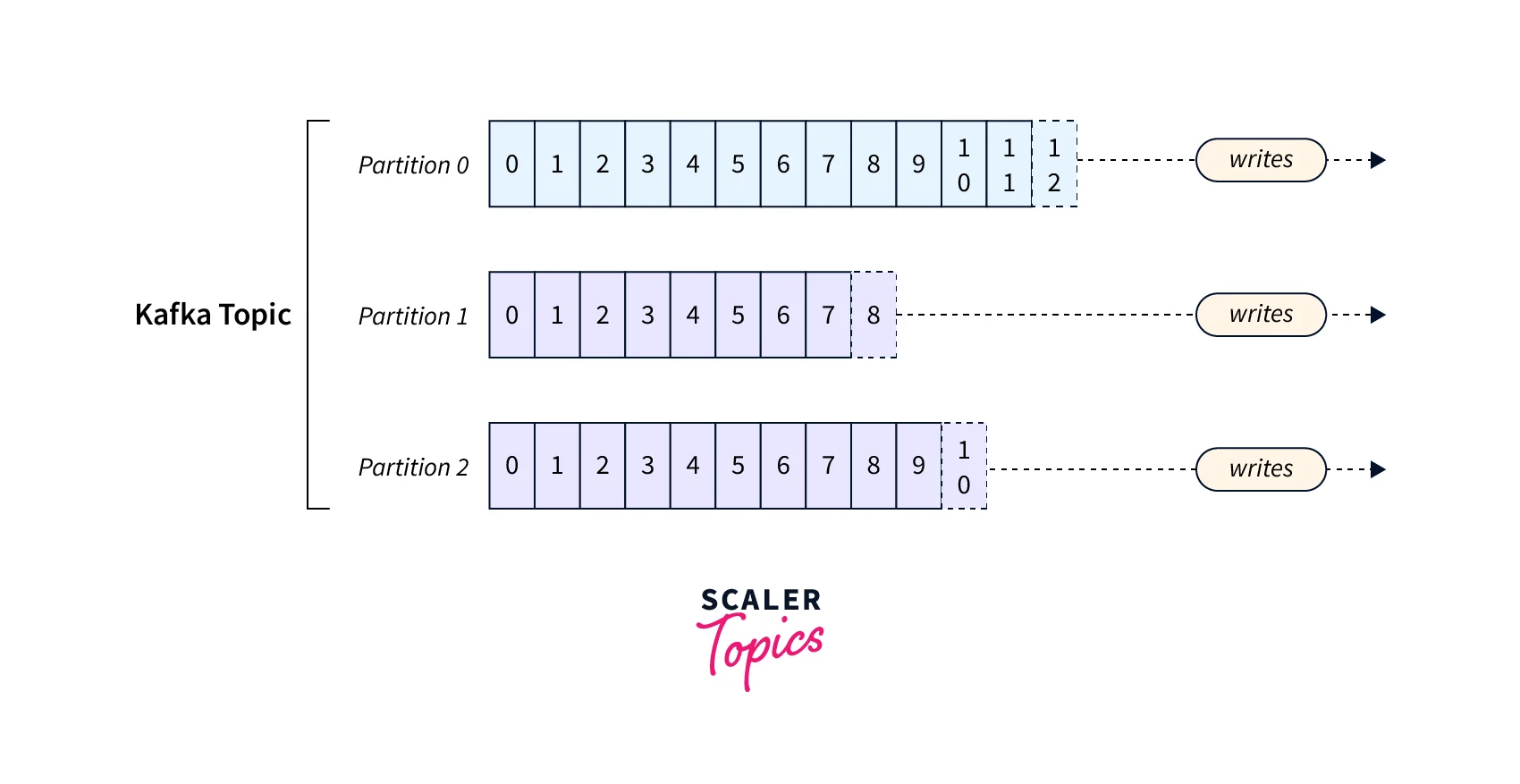

A single topic may contain several partitions; topics with 100 partitions are not unusual. The number of partitions is set when the topic is created (it can be increased later, but not decreased). Partitions are numbered from 0 to N-1, where N is the number of partitions. Splitting a topic's log into multiple logs distributes the work of storing, writing, and processing messages across multiple nodes in the Kafka cluster.

- Kafka partitions a topic by hashing each message's key, which ensures that messages with the same key always land in the same partition and are therefore kept in order. If a message has no key, the producer distributes messages across partitions so that each receives roughly the same amount of data, but there is no ordering guarantee across partitions.

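The key-based partitioning rule can be sketched as "hash the key, then take it modulo the partition count". This is a simplified illustration under stated assumptions: zlib.crc32 stands in for murmur2, the hash Kafka's Java producer actually uses, so the partition numbers will not match a real cluster, and keyless messages use plain round-robin as a stand-in for the producer's real strategy.

```python
import itertools
import zlib
from typing import Callable, Optional


def partition_for_key(key: bytes, num_partitions: int) -> int:
    # Assumption: crc32 replaces Kafka's murmur2 hash; the idea (same key,
    # same partition) is identical, but the concrete numbers differ.
    return zlib.crc32(key) % num_partitions


def make_partitioner(num_partitions: int) -> Callable[[Optional[bytes]], int]:
    # Keyless messages rotate through partitions (simplified round-robin;
    # newer Java producers use a "sticky" variant that batches records).
    round_robin = itertools.cycle(range(num_partitions))

    def choose(key: Optional[bytes]) -> int:
        if key is None:
            return next(round_robin)
        return partition_for_key(key, num_partitions)  # same key -> same partition

    return choose


choose = make_partitioner(num_partitions=3)
print({choose(b"user-42") for _ in range(5)})  # a set with a single partition number
print([choose(None) for _ in range(4)])        # [0, 1, 2, 0]
```

Because the partition depends only on the key and the partition count, adding partitions later changes where existing keys map, which is one reason the partition count is usually chosen carefully up front.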

Brokers

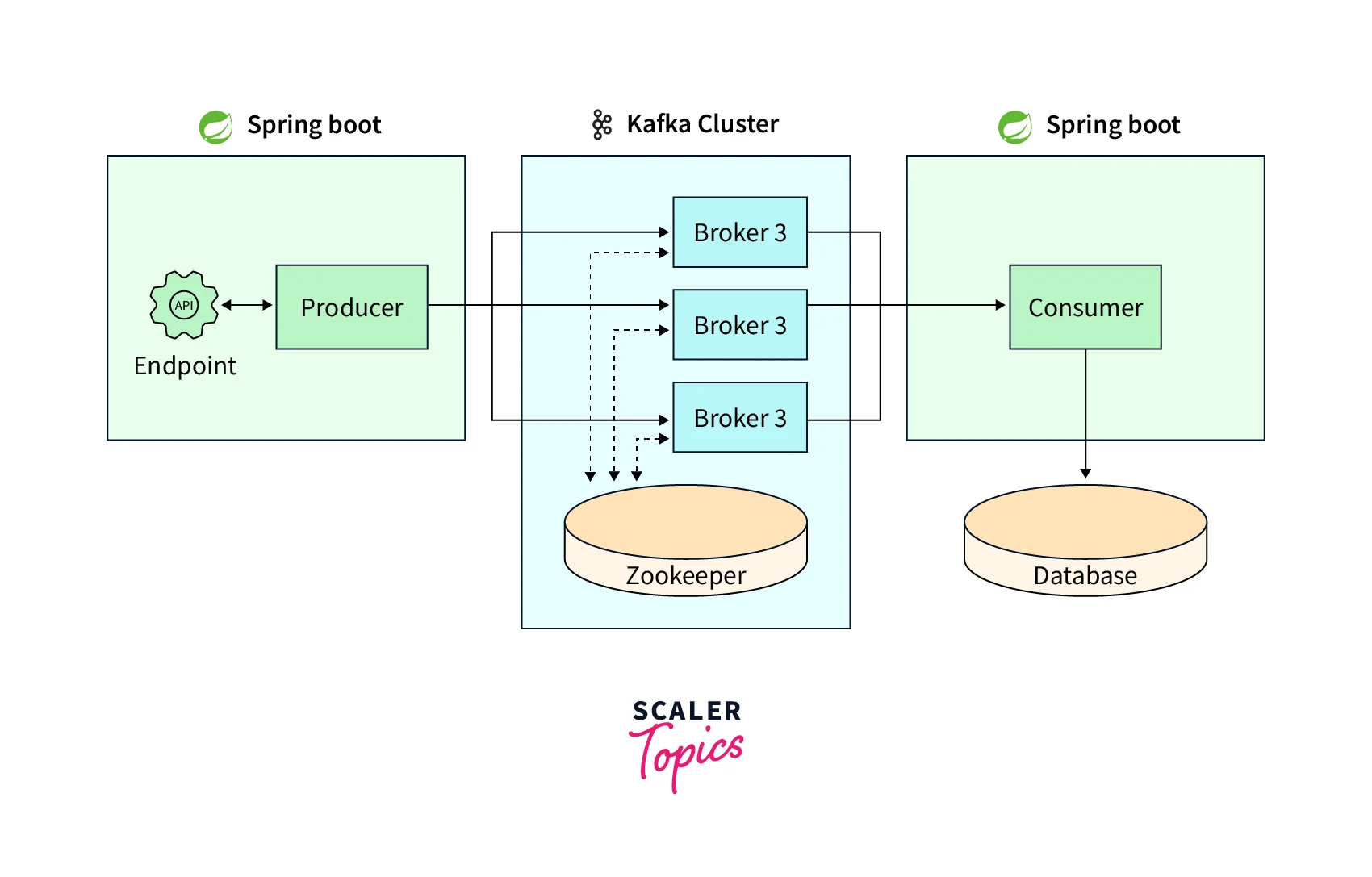

A Kafka server that is part of a Kafka cluster is called a broker. A Kafka cluster is made up of several brokers, usually running on separate servers.

Large organizations such as Netflix and Uber run Kafka at very large scale, with many brokers, to manage their data. Each broker in a cluster is identified by a unique integer ID. The cluster shown in the diagram consists of three brokers.

Brokers and Topics in Kafka

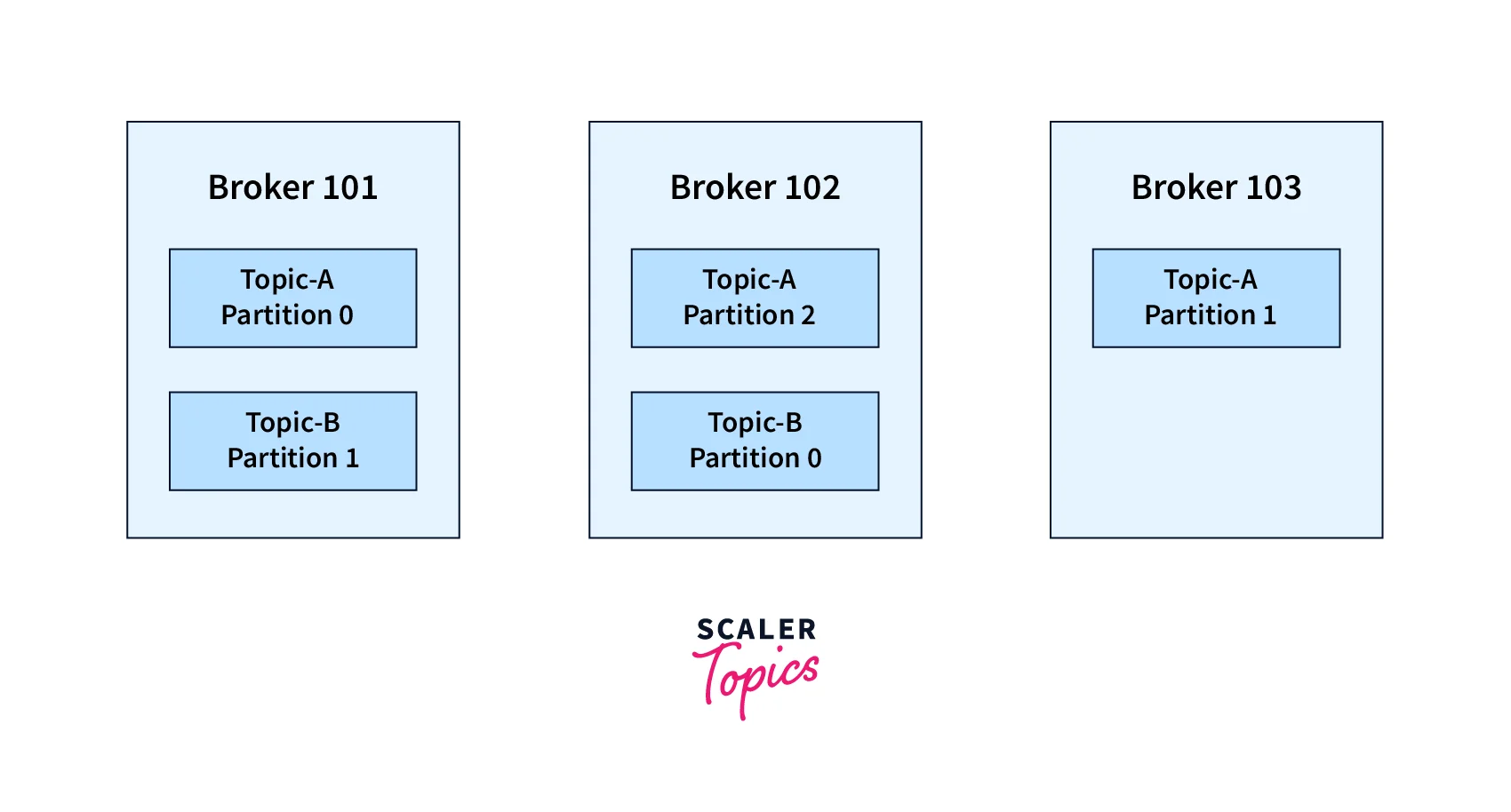

Each Kafka broker stores the data of every topic partition it hosts in a separate directory named after that partition (for example, Topic-A-0 for partition 0 of Topic-A). Topics are partitioned to provide load balancing and scalability, and their partitions are distributed evenly among the brokers.

In the diagram, the partitions of Topic-A and Topic-B are spread evenly across the cluster's three active brokers. A topic's partition count does not need to match the number of brokers: a topic can have fewer or more partitions than the cluster has brokers, and Kafka spreads them as evenly as it can.
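Even placement can be sketched as a round-robin over broker IDs. This is a simplified assumption rather than Kafka's exact algorithm: the real assignment also picks a random starting broker and places replicas, which this sketch ignores. The broker IDs 101 to 103 are hypothetical, and the directory names follow the topic-partition pattern.

```python
from collections import defaultdict


def place_partitions(topic: str, num_partitions: int,
                     broker_ids: list[int]) -> dict[int, list[str]]:
    """Spread one copy of each partition round-robin over the brokers.

    Returns broker id -> partition directory names ("<topic>-<partition>").
    Simplified sketch: no random start index, no replicas.
    """
    placement: dict[int, list[str]] = defaultdict(list)
    for p in range(num_partitions):
        broker = broker_ids[p % len(broker_ids)]
        placement[broker].append(f"{topic}-{p}")
    return dict(placement)


brokers = [101, 102, 103]
print(place_partitions("Topic-A", 3, brokers))
# {101: ['Topic-A-0'], 102: ['Topic-A-1'], 103: ['Topic-A-2']}
print(place_partitions("Topic-B", 2, brokers))  # fewer partitions than brokers
# {101: ['Topic-B-0'], 102: ['Topic-B-1']}
```

With more partitions than brokers, the loop simply wraps around, so some brokers host several partitions of the same topic.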

How to Create Apache Kafka Topics?

Step 1: Setting Up the Apache Kafka Environment

Kafka topics are created and configured from the command prompt by typing commands into the terminal. The steps below show how to create Kafka topics and set them up to send and receive messages.

First, install Kafka, ZooKeeper, and Java 8 or later, and set the JAVA_HOME environment variable.

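Open a new command prompt and type the following command to start ZooKeeper:

ZooKeeper stores Kafka's metadata and cluster information, so both ZooKeeper and Kafka must be running before any messages can be transferred. (Newer Kafka releases can also run in KRaft mode without ZooKeeper; this tutorial uses the ZooKeeper-based setup.)

Then open another command prompt and run the following command to start the Kafka broker: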

ZooKeeper and Kafka are now both running.

Step 2: Setting Up and Configuring Topics in Apache Kafka

The following steps show how to create a Kafka topic and configure it for message transmission.

Open a new command prompt window and run the following command to create a new Kafka topic:

The message "Created Topic Test" confirms that a new topic was created with a single partition and a replication factor of 1. The command combines four options: Create, Zookeeper localhost:2181, Replication Factor, and Partitions.

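- Create: tells the Kafka topics tool to create a new topic.

- Partitions: the number of partitions the topic is split into, which spreads the message load across them.

- Replication Factor: the number of copies of each partition that Kafka keeps, each on a different broker. With a replication factor of 1 there is only a single copy and no redundancy; a higher value (3 is common in production) lets the topic survive broker failures, which is what provides fault tolerance.

- Zookeeper localhost:2181: the address of the ZooKeeper server (2181 is ZooKeeper's default port). The topics tool registers the new topic in ZooKeeper, and the Kafka brokers then create its partitions. Since Kafka 3.0 this option has been removed, and the tool connects to a broker with --bootstrap-server (for example, localhost:9092) instead.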
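The Partitions and Replication Factor options constrain each other and the cluster. As a sketch, the hypothetical helper below checks a topic specification before creation. The central rule it encodes, that a replication factor larger than the number of available brokers cannot be satisfied because each copy of a partition must sit on a different broker, matches Kafka's behavior; the class, function, and error messages are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TopicSpec:
    """Settings chosen when creating a topic (hypothetical helper)."""
    name: str
    partitions: int
    replication_factor: int


def validate(spec: TopicSpec, live_brokers: int) -> None:
    """Raise ValueError for specs the cluster could not satisfy (simplified)."""
    if spec.partitions < 1:
        raise ValueError("partitions must be at least 1")
    if spec.replication_factor < 1:
        raise ValueError("replication factor must be at least 1")
    # Each replica of a partition must live on a different broker.
    if spec.replication_factor > live_brokers:
        raise ValueError(
            f"replication factor {spec.replication_factor} exceeds "
            f"{live_brokers} available broker(s)"
        )


validate(TopicSpec("Test", partitions=1, replication_factor=1), live_brokers=1)  # OK
try:
    validate(TopicSpec("Test", partitions=1, replication_factor=3), live_brokers=1)
except ValueError as err:
    print(err)  # replication factor 3 exceeds 1 available broker(s)
```

A replication factor of R lets each partition tolerate up to R - 1 broker failures, which is why a single-broker test setup can only use a replication factor of 1.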

You have now created a new Kafka topic.

Step 3: Send and receive messages via Apache Kafka Topics

Open the producer console and the consumer console in two separate command prompt windows. Messages typed into the producer console are written to the topic, and the consumer console reads them from the topic and displays them. This setup lets you write messages with the Kafka producer and read them with the Kafka consumer.
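Conceptually, the two consoles act as a producer appending to a topic and a consumer reading from it while remembering how far it has read. The in-memory sketch below (hypothetical classes, a single partition, no networking) illustrates that behavior: reading does not remove messages, and each consumer tracks its own position.

```python
class InMemoryTopic:
    """Single-partition stand-in for a topic (hypothetical, not a Kafka API)."""

    def __init__(self) -> None:
        self.log: list[str] = []

    def produce(self, message: str) -> int:
        self.log.append(message)
        return len(self.log) - 1  # offset assigned to the new message


class Consumer:
    """Tracks its own read position (offset) in the topic."""

    def __init__(self, topic: InMemoryTopic, start_offset: int = 0) -> None:
        self.topic = topic
        self.position = start_offset  # next offset to read

    def poll(self) -> list[str]:
        new_messages = self.topic.log[self.position:]
        self.position += len(new_messages)
        return new_messages  # messages stay in the topic after being read


topic = InMemoryTopic()
consumer = Consumer(topic)
topic.produce("hello")
topic.produce("world")
print(consumer.poll())  # ['hello', 'world']
print(consumer.poll())  # []
topic.produce("again")
print(consumer.poll())  # ['again']
```

Because messages remain in the log, a new consumer starting at offset 0 sees everything again; the real console consumer offers a --from-beginning option for this case.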

Where Does Kafka Store Data?

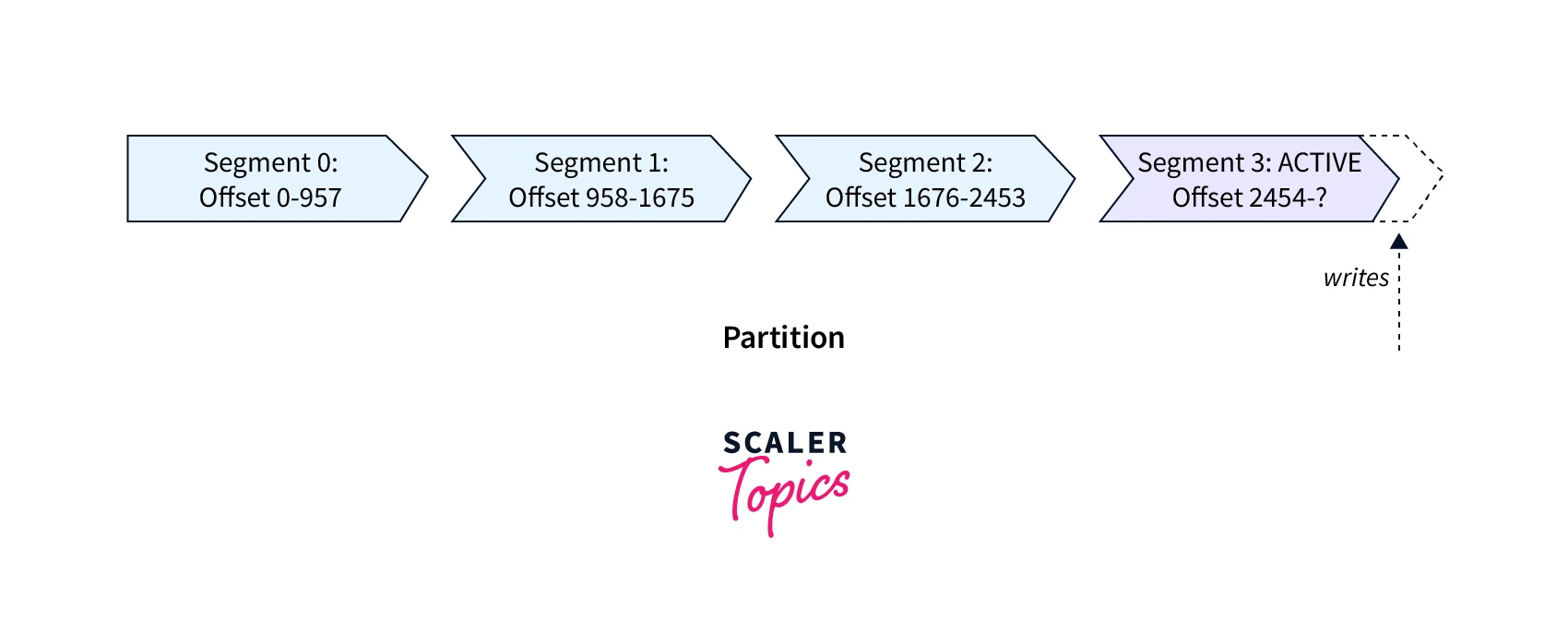

Kafka is a distributed platform for real-time data processing that stores data in partitioned topics. The picture illustrates how this storage is organized.

Kafka's fundamental unit of storage is a partition replica. When you create a topic, Kafka first decides how to allocate its partitions across the brokers, spreading the replicas evenly among them. Each partition replica is then stored on disk as a series of segments; by default, a new segment is started after 1 GB of data or one week, whichever comes first.
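Spreading replicas evenly can be sketched as follows: replica j of partition p goes to broker (p + j) mod n, so every partition's copies land on distinct brokers and each broker ends up with the same number of replicas. This is a simplified assumption; Kafka's actual algorithm adds a random starting point and rack awareness, and the broker IDs here are hypothetical. As in Kafka, the first broker listed for each partition is treated as its preferred leader.

```python
def assign_replicas(num_partitions: int, replication_factor: int,
                    broker_ids: list[int]) -> dict[int, list[int]]:
    """Map each partition to the brokers holding its replicas (simplified).

    The first broker in each list acts as the preferred leader.
    """
    n = len(broker_ids)
    if replication_factor > n:
        raise ValueError("replication factor cannot exceed broker count")
    return {
        p: [broker_ids[(p + j) % n] for j in range(replication_factor)]
        for p in range(num_partitions)
    }


print(assign_replicas(3, 2, [1, 2, 3]))
# {0: [1, 2], 1: [2, 3], 2: [3, 1]}
```

Shifting the starting broker with the partition number also spreads the preferred leaders, so no single broker handles all the writes.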

At any given moment, only one segment of a partition is active: the one currently being written to. A segment can only be deleted after it has been closed. Two broker settings, which can also be overridden at the topic level, control when a segment is closed:


log.segment.bytes: the maximum byte size of a single segment (default 1 GB)

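log.roll.hours (or the finer-grained log.roll.ms): how long Kafka waits before closing a segment that is not yet full (default 168 hours, i.e. 1 week). At the topic level, the equivalent settings are segment.bytes and segment.ms.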
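The rolling rule can be sketched with a toy partition log (hypothetical classes, not Kafka code) that closes its active segment and opens a new one when the next record would exceed the size limit or when the segment has been open longer than the time limit. The defaults mirror the 1 GB and 1 week values above; details of the real broker logic, such as time jitter, are omitted.

```python
from dataclasses import dataclass, field

ONE_GB = 1024 ** 3                     # 1 GB default size limit
ONE_WEEK_MS = 7 * 24 * 60 * 60 * 1000  # 1 week default time limit


@dataclass
class Segment:
    base_offset: int   # offset of the first record in this segment
    created_ms: int    # when the segment was opened
    size_bytes: int = 0


@dataclass
class PartitionLog:
    """Toy partition log; the last segment in the list is the active one."""
    max_bytes: int = ONE_GB
    max_age_ms: int = ONE_WEEK_MS
    segments: list = field(default_factory=list)
    next_offset: int = 0

    def _should_roll(self, active: Segment, size: int, now_ms: int) -> bool:
        if active.size_bytes == 0:
            return False  # never roll an empty segment
        too_big = active.size_bytes + size > self.max_bytes
        too_old = now_ms - active.created_ms >= self.max_age_ms
        return too_big or too_old

    def append(self, size: int, now_ms: int) -> None:
        if not self.segments or self._should_roll(self.segments[-1], size, now_ms):
            # Close the current segment (if any) by starting a new active one.
            self.segments.append(Segment(self.next_offset, now_ms))
        self.segments[-1].size_bytes += size
        self.next_offset += 1


log = PartitionLog(max_bytes=100)  # tiny size limit so a roll is visible
for _ in range(5):
    log.append(size=30, now_ms=0)
print([s.base_offset for s in log.segments])  # [0, 3]
```

Each segment is identified by the offset of its first record, which is also how Kafka names segment files on disk.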

A Kafka broker keeps an open file handle for every segment of every partition, including inactive ones. This can add up to a very large number of open files, so the operating system's open-file limit usually has to be raised accordingly.
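A back-of-the-envelope estimate shows why the handle count grows quickly. This hypothetical helper assumes one open handle per segment, as described above, and that every partition on the broker retains about the same amount of data; the numbers in the example are illustrative, not measurements.

```python
import math


def estimate_open_segments(partitions: int, retained_bytes_per_partition: int,
                           segment_bytes: int = 1024 ** 3) -> int:
    """Rough count of segment handles a broker holds open (assumption-based)."""
    # Every partition has at least its active segment, even when empty.
    segments_per_partition = max(1, math.ceil(retained_bytes_per_partition / segment_bytes))
    return partitions * segments_per_partition


# 2,000 partition replicas on one broker, each retaining about 50 GB:
print(estimate_open_segments(2000, 50 * 1024 ** 3))  # 100000
```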

How to Clear a Kafka Topic?

Over the lifetime of a Kafka cluster, topics are created, written to, and eventually abandoned. A topic's data may expire under its retention settings, but the topic itself remains even when it is empty. Kafka does not delete it automatically for two reasons: the topic may be needed again in the future, and it may carry specific configuration set by whoever created it. Kafka has no way to tell whether a topic is still useful.
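The difference between expiring data and deleting a topic can be illustrated with a simplified time-based retention pass (a hypothetical function; real Kafka also supports size-based retention and log compaction). Closed segments whose newest record is older than the retention period are dropped, the active segment is kept, and nothing in this process removes the topic itself.

```python
def apply_retention(segments: list[dict], now_ms: int, retention_ms: int) -> list[dict]:
    """Return the segments that survive a time-based retention pass.

    Each segment dict has 'base' (first offset), 'last_ms' (timestamp of its
    newest record) and 'active' (True only for the segment being written).
    """
    return [
        s for s in segments
        # The active segment is never deleted while active; closed segments
        # are dropped once their newest record is older than retention_ms.
        if s["active"] or now_ms - s["last_ms"] <= retention_ms
    ]


week = 7 * 24 * 3600 * 1000
segments = [
    {"base": 0, "last_ms": 0, "active": False},
    {"base": 500, "last_ms": week, "active": False},
    {"base": 900, "last_ms": 2 * week, "active": True},
]
print([s["base"] for s in apply_retention(segments, now_ms=2 * week, retention_ms=week)])
# [500, 900]
```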

The recommended tool for deleting Kafka topics is kafka-topics.sh. If you're not sure how to install or use the Kafka command-line tools, see How to See Kafka Messages.

To list all topics, use the utility:

Then choose your topic and remove it:

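The command sends the delete request to Kafka, which removes the topic together with its metadata in ZooKeeper. Deletion only takes effect if the brokers have delete.topic.enable set to true, which is the default since Kafka 1.0.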

Conclusion

- Records, topics, consumers, producers, brokers, logs, partitions, and clusters are the core concepts of Kafka. Kafka records are immutable, and topics are multi-producer and multi-subscriber: a topic can have zero, one, or many producers writing events to it and zero, one, or many consumers reading them.

- Kafka combines three key capabilities, so you can implement event streaming use cases end to end with a single, battle-tested solution:

  - To publish (write) and subscribe to (read) streams of events, including continuously importing and exporting data from other systems.

  - To store streams of events durably and reliably for as long as you want.

  - To process streams of events as they occur or retrospectively.

- Kafka topics are partitioned for throughput and scalability. Distributing a topic's partitions evenly among Kafka brokers provides load balancing.

- Kafka's fundamental storage unit is a partition replica. When a topic is created, Kafka distributes its partition replicas evenly across brokers.