Securing Kafka: An In-depth Look at Kafka Authentication

Overview

Kafka authentication is the process of validating the identities of clients and servers in Apache Kafka, a distributed streaming platform, so that only verified entities can connect to and interact with Kafka resources. Kafka supports two families of authentication mechanisms: SSL/TLS, which uses certificates and also encrypts traffic between clients and brokers, and SASL, which covers mechanisms such as Kerberos (GSSAPI), PLAIN, SCRAM, and OAuth bearer tokens (OAUTHBEARER). This article explores these methods and explains why authentication matters for controlling access to Kafka resources.

Introduction

Brief Overview of Apache Kafka

Apache Kafka is a distributed streaming platform known for its high-throughput, fault-tolerant, and real-time data processing capabilities. It operates on a publish-subscribe model, where producers publish data to categorized streams called topics, and consumers subscribe to those topics to consume and process the data.

Kafka offers key features such as fault tolerance through data replication, scalability for handling increased data loads, durability by persisting data to disk, and low-latency processing for real-time analytics. It finds applications in real-time analytics, event sourcing, log aggregation, data pipelines, and messaging systems.

With its versatility and reliability, Apache Kafka has become widely adopted for efficiently managing and processing large-scale data streams across various domains.

Importance of Authentication in Kafka

Authentication is crucial for securing Apache Kafka. It verifies client and server identities, controlling access to brokers, data publishing/consumption, and administrative tasks. It prevents unauthorized access and protects sensitive data.

Authentication ensures data protection via client verification and encrypted channels like SSL/TLS. It defends against eavesdropping, tampering, and unauthorized changes, guaranteeing secure data transmission. It aids compliance with regulatory requirements, satisfying data privacy and security rules. Authentication controls and audits Kafka resource access, demonstrating accountability and adherence to standards.

Authentication builds trust and privacy by confirming identities. This trust is vital for maintaining transaction integrity, privacy, and confidentiality.

Understanding Kafka

What is Apache Kafka?

Apache Kafka, an open-source distributed event streaming platform, was initially developed by LinkedIn and later adopted by the Apache Software Foundation. It excels at handling high-volume, real-time data streams and provides scalable and fault-tolerant messaging for integration, processing, and analytics.

Kafka follows a publish-subscribe model, where producers publish messages to topics, acting as data channels. Consumers subscribe to these topics to process data in real time.

Its distributed architecture, utilizing a cluster of servers called brokers, ensures fault tolerance and high availability. Kafka's key feature is fault-tolerant data storage, persisting records on disk with configurable retention periods for data replay and analysis.

With high throughput and low latency, Kafka is ideal for real-time streaming analytics, log aggregation, event sourcing, and messaging between microservices in distributed architectures.

Basic Kafka Architecture

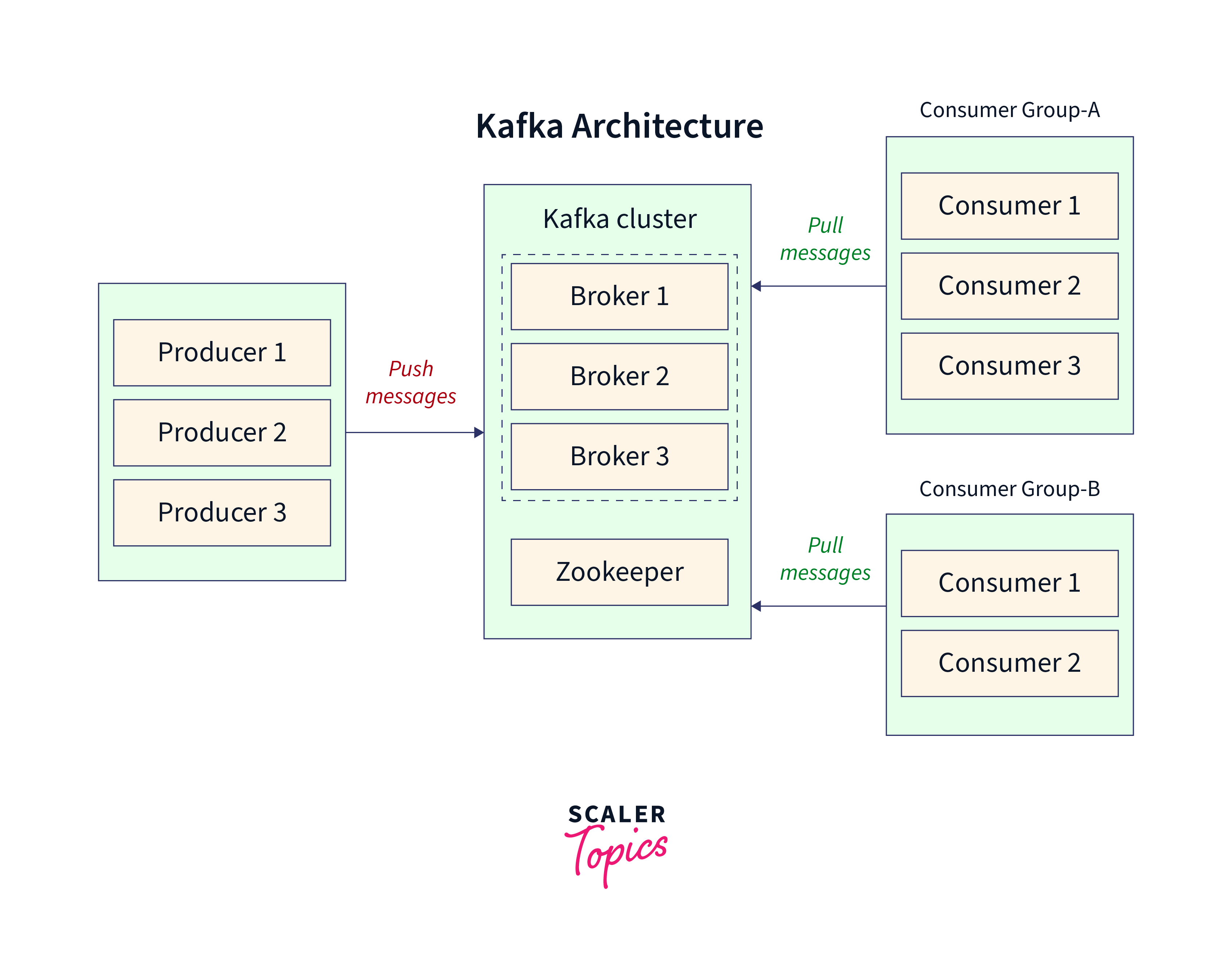

The basic architecture of Apache Kafka revolves around a few key components: producers, consumers, topics, partitions, brokers, clusters, and a coordination service (ZooKeeper).

- Producers: Producers are responsible for publishing data records to Kafka topics. They create messages and send them to a specific topic. Producers can be part of various applications or systems and can publish messages in real time or at their own pace.

- Consumers: Consumers subscribe to one or more topics and read data records from them. Within a partition, they read messages in the order in which they were written; Kafka does not guarantee ordering across the partitions of a topic. Consumers can process and analyze the data, perform real-time computations, or store it in external systems.

- Topics: Topics represent the data channels or feeds in Kafka. They act as categories or labels to which producers publish messages and from which consumers consume messages. Each topic is identified by a name and is typically divided into partitions.

- Partitions: Each topic can be divided into multiple partitions. Partitions are the basic units of scalability and parallelism in Kafka. They allow for horizontal scaling, as multiple brokers can handle different partitions of a topic. Each partition is ordered and immutable, meaning messages within a partition maintain their order and cannot be modified.

- Brokers: Brokers are the servers that form the Kafka cluster. They handle the storage and replication of data. Each broker is responsible for managing one or more partitions. Brokers receive messages from producers, store them on disk, and serve them to consumers.

- Clusters: Kafka clusters consist of multiple brokers that collaborate and share the data across the partitions. The cluster provides fault tolerance and high availability by replicating partitions across multiple brokers. If a broker fails, another broker takes over the responsibility of serving the data, ensuring continuous operation.

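- ZooKeeper: ZooKeeper is used as a coordination service in ZooKeeper-based Kafka deployments; newer Kafka releases can instead run in KRaft mode, where the cluster manages this metadata itself without ZooKeeper. ZooKeeper maintains metadata about the cluster, including information about brokers, topics, and partitions, and supports controller election and failover among brokers. In older Kafka versions it also tracked consumer groups; current versions handle consumer group coordination and rebalancing on the brokers.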
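
The relationship between keys, partitions, and ordering described above can be illustrated with a small in-memory model. This is only a sketch: `choose_partition`, `append_records`, and the partition count are hypothetical names and values, and CRC32 stands in for the hashing that real Kafka clients perform with their own partitioner.

```python
import zlib
from collections import defaultdict

NUM_PARTITIONS = 3  # hypothetical partition count for one topic


def choose_partition(key: bytes, num_partitions: int = NUM_PARTITIONS) -> int:
    # Stand-in hash: real Kafka clients use their own partitioner, not CRC32.
    return zlib.crc32(key) % num_partitions


def append_records(records):
    """Append (key, value) records to per-partition logs and return the logs."""
    log = defaultdict(list)
    for key, value in records:
        # Records with the same key always land in the same partition,
        # so their relative order is preserved within that partition.
        log[choose_partition(key)].append((key, value))
    return log


records = [(b"user-1", "login"), (b"user-2", "login"), (b"user-1", "logout")]
partition_log = append_records(records)
```

Because both `user-1` records hash to the same partition, a consumer of that partition sees `login` before `logout`, while no ordering is implied relative to `user-2`.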

Role of Security in Kafka

The role of security in Kafka is to safeguard the data and resources within the Kafka ecosystem. It ensures that only authorized individuals or systems can access, publish, or consume data from Kafka topics. By enforcing authentication and authorization mechanisms, security in Kafka helps to prevent unauthorized access, data breaches, and misuse of resources.

- Confidentiality: Security measures in Kafka aim to protect data from unauthorized access or interception. SSL/TLS encryption secures data during transmission, preventing eavesdropping and unauthorized disclosure; protecting stored data requires separate measures such as disk encryption.

- Integrity: Kafka helps preserve data integrity by preventing unauthorized tampering or modification. TLS protects records against alteration in transit, and Kafka stores a CRC checksum with each record batch to detect corruption. Kafka does not sign messages itself, so applications that need end-to-end tamper evidence typically add digital signatures or message hashes at the client level.

- Authentication: Kafka supports authentication mechanisms to verify the identities of clients connecting to the system. This ensures that only verified users and applications can connect to Kafka brokers and perform operations. Authentication can be achieved through SSL/TLS client certificates, username/password-based SASL mechanisms (PLAIN, SCRAM), or integration with external identity systems such as Kerberos; LDAP integration is usually provided through custom callback handlers or vendor distributions rather than by Apache Kafka itself.

- Authorization: Kafka offers authorization mechanisms to control access to resources such as topics and consumer groups. Access control lists (ACLs) specify which principals are allowed or denied operations such as Read or Write on specific topics. This helps enforce data privacy and prevent unauthorized access or modifications.

- Encryption: Kafka supports encryption of data in transit to protect data confidentiality. SSL/TLS encryption can be enabled to secure communication between producers, consumers, and brokers over the network. Apache Kafka does not itself encrypt data at rest; this is typically handled with disk or filesystem encryption on the brokers, or by having clients encrypt message payloads before producing them, so that data remains protected even if the storage medium is compromised.

- Audit Logging: Kafka allows for the recording and auditing of various security-related events, such as successful or failed authentication attempts, authorization decisions, and administrative actions. Audit logs are crucial for monitoring and investigating any security incidents, ensuring accountability, and meeting regulatory compliance requirements.

- Secure Cluster Setup: Kafka clusters can be deployed securely by isolating them within a private network, implementing firewalls, and restricting access to authorized entities. Additionally, secure configurations for ZooKeeper, which Kafka relies on for coordination, can be implemented to protect the metadata and coordination processes.

- Integration with External Security Systems: Kafka can integrate with external security systems and tools such as Apache Ranger, Apache Sentry, or other identity and access management (IAM) solutions. This allows for centralized security management, user authentication, and authorization policies across different data platforms and applications.

- Standards for Organizations: Organizations must comply with regulations and standards such as GDPR, HIPAA, and PCI-DSS, as well as industry-specific rules. Kafka security measures aid compliance by enforcing authentication, access control, and encryption: authentication verifies identities, access control restricts data access to authorized entities, and encryption safeguards data during transmission, in line with data protection regulations.
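
To make the authorization idea above concrete, here is a deliberately simplified model of an ACL check. It is not Kafka's authorizer: the `Acl` class and `is_authorized` function are hypothetical, and the sketch only assumes the common behaviour that a matching deny rule takes precedence and that access is denied when no allow rule matches.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Acl:
    principal: str      # e.g. "User:alice"
    operation: str      # e.g. "Read" or "Write"
    topic: str          # topic name the rule applies to
    allow: bool = True  # False models a deny rule


def is_authorized(acls, principal, operation, topic):
    """Deny rules win; with no matching allow rule, access is denied."""
    matches = [
        a for a in acls
        if (a.principal, a.operation, a.topic) == (principal, operation, topic)
    ]
    if any(not a.allow for a in matches):
        return False
    return any(a.allow for a in matches)


acls = [
    Acl("User:alice", "Read", "orders"),
    Acl("User:bob", "Write", "orders"),
    Acl("User:bob", "Write", "orders", allow=False),
]
```

With these rules, `alice` may read `orders` but not write to it, and `bob` is blocked from writing because the deny rule overrides the allow rule.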

Basics of Kafka Authentication

What is Authentication in Kafka?

Kafka Authentication refers to the process of verifying and validating the identities of clients and servers to ensure secure access and interaction within the Apache Kafka ecosystem. It provides a mechanism for establishing trust and controlling access to Kafka resources.

Authentication in Kafka involves the following key components:

- Clients: Kafka clients, such as producers and consumers, need to authenticate themselves before connecting to Kafka brokers. They provide credentials or certificates to prove their identity.

- Kafka Brokers: Kafka brokers, the central servers that handle data streams, verify the authenticity of clients. They authenticate the clients based on the provided credentials or certificates.

- Authentication Mechanisms: Kafka supports two families of authentication mechanisms: SSL/TLS client certificates and SASL (Simple Authentication and Security Layer), whose mechanisms include Kerberos (GSSAPI), PLAIN, SCRAM, and OAuth bearer tokens (OAUTHBEARER). These mechanisms provide different ways to authenticate clients, such as using certificates, usernames/passwords, or tokens.

The purpose of Kafka Authentication is to ensure that only authorized entities can access Kafka resources. It helps protect against unauthorized access, data breaches, and potential security threats.

Authentication works in conjunction with other security features in Kafka, such as encryption and authorization, to provide a robust and comprehensive security framework for protecting sensitive data and maintaining the integrity of Kafka clusters.

Importance of Authentication in Securing Kafka

Authentication is a crucial aspect of securing Kafka, an open-source distributed streaming platform. Kafka is widely used for building real-time data streaming applications, and ensuring the authenticity of users and components interacting with Kafka is essential for maintaining the integrity and confidentiality of data within the system.

Here are some key reasons why authentication is important in securing Kafka:

- Data Protection: Kafka deals with sensitive data that often needs to be protected from unauthorized access. Authentication mechanisms, such as username-password authentication or certificate-based authentication, ensure that only authenticated and authorized users or applications can access the Kafka cluster. This prevents malicious actors or unauthorized parties from gaining access to the data and helps maintain data confidentiality.

- User Accountability: Authentication helps establish user accountability within Kafka. By identifying and verifying users, Kafka can track who is accessing the system and performing specific actions. This accountability is crucial for auditing, compliance, and investigation purposes. If any suspicious activity occurs, the system can trace it back to the authenticated user, allowing for appropriate actions to be taken.

- Securing Interactions: Kafka operates in a distributed environment, where multiple producers and consumers interact with the system. Authenticating these interactions ensures that only authorized applications or components can publish or consume data from Kafka topics. This prevents unauthorized or malicious applications from manipulating or injecting unauthorized data into the system.

- Secure Cluster Administration: Kafka cluster administration involves tasks like managing topics, configuring security settings, and monitoring the system. Authentication ensures that only authorized administrators can perform these actions, preventing unauthorized users from tampering with critical configurations or gaining control over the Kafka infrastructure.

- Integration with External Systems: Kafka often integrates with external systems, such as authentication providers, authorization services, or other components in the broader data infrastructure. By enforcing authentication, Kafka can securely interact with these external systems and validate the authenticity of requests and responses, maintaining the overall security posture of the ecosystem.

Types of Kafka Authentication

SSL/TLS Authentication

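SSL/TLS authentication in Kafka establishes an encrypted channel that protects data confidentiality, integrity, and authenticity. During connection setup the broker presents a certificate containing its public key and identity, signed by a trusted CA; when client authentication is enabled on the broker (`ssl.client.auth=required`), the client presents a certificate as well.

During the SSL/TLS handshake, the two sides negotiate the protocol version and cipher suite, derive session keys, and verify certificates. With client authentication enabled, the broker checks that the client's certificate is within its validity period and chains to a CA in the broker's trust store; revocation checking generally requires additional configuration.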

SSL/TLS authentication in Kafka provides multiple benefits. It encrypts communication, ensuring data privacy and thwarting unauthorized access or eavesdropping. It guarantees data integrity, detecting tampering during transmission.

SSL/TLS authentication verifies client and broker authenticity, preventing impersonation or unauthorized connections. It establishes trust, allowing only trusted entities to interact with Kafka.
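
The difference between one-way and two-way (mutual) TLS can be shown with Python's standard `ssl` module. This is a generic TLS illustration rather than Kafka broker code (brokers run on the JVM and are configured through properties such as `ssl.client.auth`); the function names `broker_side_context` and `client_side_context` are hypothetical.

```python
import ssl


def broker_side_context(require_client_cert: bool) -> ssl.SSLContext:
    """Server-side TLS context; optionally demand a client certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # CERT_REQUIRED makes the handshake fail unless the client presents a
    # certificate that chains to a trusted CA (two-way / mutual TLS).
    ctx.verify_mode = ssl.CERT_REQUIRED if require_client_cert else ssl.CERT_NONE
    return ctx


def client_side_context() -> ssl.SSLContext:
    """Client-side TLS context; verifies the server certificate and hostname."""
    return ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)


one_way = broker_side_context(require_client_cert=False)
mutual = broker_side_context(require_client_cert=True)
client = client_side_context()
```

In a real deployment each context would also load a certificate chain and trusted CA certificates; those steps are omitted here because they depend on files that this sketch does not assume exist.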

SASL Authentication

SASL (Simple Authentication and Security Layer) authentication is a flexible and extensible authentication framework used in Kafka to secure client and broker communication. It provides a pluggable mechanism for authentication, allowing Kafka to support various authentication methods and integrate with external authentication systems.

SASL authentication in Kafka involves the following components and steps:

- SASL Mechanism: SASL supports multiple authentication mechanisms, such as Kerberos (GSSAPI), OAuth (OAUTHBEARER), PLAIN (username/password-based authentication), SCRAM (Salted Challenge Response Authentication Mechanism), and more. Each mechanism defines its own protocol for authentication. Kafka clients and brokers must agree on a common SASL mechanism for authentication to succeed.

- Client Configuration: Kafka clients (producers and consumers) need to be configured with the appropriate SASL mechanism and associated credentials. For example, if using Kerberos, the client configuration should include the Kerberos principal name and keytab file. If using SCRAM or PLAIN, the client configuration should include the username and password.

- Broker Configuration: Kafka brokers also need to be configured with the supported SASL mechanism and appropriate security settings. This includes specifying the SASL mechanism, configuring authentication settings (e.g., Kerberos realm, keytab locations, or user/password database), and enabling SASL authentication in the broker configuration file.

- SASL Handshake: When a client establishes a connection with a Kafka broker, the SASL handshake takes place. The client first sends a handshake request naming the SASL mechanism it wants to use, and the broker confirms that the mechanism is enabled. The two sides then exchange mechanism-specific authentication messages; for challenge-response mechanisms such as SCRAM, the broker replies with a challenge.

- Client Authentication: The client processes the broker's messages according to the selected SASL mechanism and generates a response, which depends on the mechanism being used. For example, with Kerberos the client obtains a service ticket for the broker from the Key Distribution Center (KDC) and presents it. With SCRAM, the client computes a proof from the salted, hashed password and the exchanged nonces. The client sends the response back to the broker.

- Broker Authentication: The broker receives the client's response and verifies it using the appropriate SASL mechanism. The authentication process is mechanism-specific and may involve validating the client's credentials, checking the integrity of the response, or interacting with external authentication servers. If the client's response is valid, the broker considers the client authenticated.

- Secure Communication: SASL authenticates the connection but does not by itself encrypt Kafka traffic. To protect credentials and subsequent data on the wire, SASL is normally combined with TLS by using the SASL_SSL security protocol; with SASL_PLAINTEXT, data is sent unencrypted after authentication.
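
The challenge-response idea behind SCRAM can be sketched with Python's standard library, following the key derivation in RFC 5802 with SHA-256. This is a simplified illustration, not Kafka's implementation: password normalization (SASLprep) is skipped, the helper names are hypothetical, and `auth_message` is a placeholder for the concatenated handshake messages that a real exchange would use.

```python
import hashlib
import hmac


def _hmac(key: bytes, msg: bytes) -> bytes:
    return hmac.new(key, msg, hashlib.sha256).digest()


def _xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def salted_password(password: str, salt: bytes, iterations: int) -> bytes:
    # Hi() from RFC 5802 is PBKDF2 with HMAC as the pseudorandom function.
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)


def stored_key_for(password: str, salt: bytes, iterations: int) -> bytes:
    """What the server keeps instead of the plain password."""
    client_key = _hmac(salted_password(password, salt, iterations), b"Client Key")
    return hashlib.sha256(client_key).digest()


def client_proof(password: str, salt: bytes, iterations: int, auth_message: bytes) -> bytes:
    """Proof the client sends: ClientKey XOR HMAC(StoredKey, AuthMessage)."""
    client_key = _hmac(salted_password(password, salt, iterations), b"Client Key")
    stored_key = hashlib.sha256(client_key).digest()
    return _xor(client_key, _hmac(stored_key, auth_message))


def server_verify(stored_key: bytes, auth_message: bytes, proof: bytes) -> bool:
    """Recover ClientKey from the proof and compare its hash to StoredKey."""
    recovered = _xor(proof, _hmac(stored_key, auth_message))
    return hmac.compare_digest(hashlib.sha256(recovered).digest(), stored_key)
```

The point of the design is that the password never crosses the wire and the server never stores it; the server only needs the stored key, the salt, and the iteration count.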

Comparison of SSL/TLS and SASL

| Aspect | SSL/TLS Authentication | SASL Authentication |
|---|---|---|
| Purpose | Establishes an encrypted communication channel between Kafka clients and brokers and can authenticate both ends with certificates | Authenticates clients to brokers through a pluggable mechanism; does not encrypt traffic unless combined with TLS (SASL_SSL) |
| Authentication Type | One-way authentication (server-side) or two-way authentication (mutual authentication between client and server) | Supports a variety of authentication mechanisms, such as Kerberos, OAuth, PLAIN, SCRAM, etc. |
| Communication | Encrypts the data exchanged between clients and brokers, ensuring confidentiality and integrity | Verifies the identity of the client (and, with mechanisms such as Kerberos and SCRAM, the server) so that only authenticated parties communicate; runs over a plaintext or TLS connection |
| Implementation | Uses SSL/TLS protocols for secure communication | Relies on the SASL framework for authentication, which supports pluggable authentication mechanisms and external authentication systems |
| Configuration | Requires generating and distributing certificates, configuring trust stores, and setting SSL/TLS-related parameters | Requires configuring the SASL mechanism, credentials, and authentication settings for both clients and brokers |
| Use Cases | Primarily used for securing network communication and data privacy | Used for client and broker authentication within the Kafka ecosystem |
| Example Mechanisms | SSL, TLS | GSSAPI (Kerberos), PLAIN, SCRAM-SHA-256, SCRAM-SHA-512, OAUTHBEARER |
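
In practice the two approaches are often combined: SASL for identity and TLS for encryption. The sketch below renders client settings for that combination using standard Kafka configuration keys; the helper functions, the user name, the password, and the trust store path are all hypothetical placeholders.

```python
def render_properties(props: dict) -> str:
    """Render a dict as the lines of a Java-style .properties file."""
    return "\n".join(f"{key}={value}" for key, value in props.items())


def scram_client_properties(username, password, truststore_path, truststore_password):
    """Client settings for SCRAM authentication over a TLS-encrypted connection."""
    jaas = (
        "org.apache.kafka.common.security.scram.ScramLoginModule required "
        f'username="{username}" password="{password}";'
    )
    return {
        "security.protocol": "SASL_SSL",     # SASL for identity, TLS for encryption
        "sasl.mechanism": "SCRAM-SHA-512",
        "sasl.jaas.config": jaas,
        "ssl.truststore.location": truststore_path,
        "ssl.truststore.password": truststore_password,
    }


# Placeholder values for illustration only.
client_props = scram_client_properties(
    "alice", "alice-secret", "/path/to/client.truststore.jks", "changeit"
)
client_properties_text = render_properties(client_props)
```

The rendered text could be saved as a client properties file; in a real setup the password would come from a secret store rather than source code.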

Implementing Kafka SSL/TLS Authentication

SSL (Secure Sockets Layer) and its successor TLS (Transport Layer Security) are cryptographic protocols that establish an encrypted and authenticated connection between two parties over a network. SSL/TLS is commonly used to secure communication channels on the internet, ensuring data confidentiality, integrity, and authenticity.

Implementing Kafka SSL/TLS Authentication involves the following steps:

- Certificate Generation: To enable SSL/TLS authentication, each Kafka broker needs a digital certificate, and each client needs one as well when mutual authentication is used. Certificates are cryptographic files that contain a public key, identifying information (such as the entity's name), and a digital signature from a trusted certificate authority (CA). The certificate is typically generated using tools like OpenSSL, the JDK's keytool, or a certificate management tool.

- Certificate Distribution: Once the certificates are generated, they need to be distributed to the appropriate entities. The Kafka broker's certificate should be deployed on the broker machine, and the client certificates should be installed on the client machines or the systems interacting with Kafka.

- Configuration: The Kafka broker and client need to be configured to enable SSL/TLS authentication. This involves specifying the certificate files, private keys, and other SSL/TLS-related settings in the Kafka broker and client configuration files.

- Truststore Configuration: Along with certificates, a trust store is required on both the Kafka broker and client side. A trust store contains trusted CA certificates that are used to verify the authenticity of the certificates presented by the counterpart during the SSL/TLS handshake. The trust store configuration in Kafka ensures that only certificates issued by trusted CAs are accepted.

- SSL/TLS Handshake: When a Kafka client initiates a connection with a broker, the SSL/TLS handshake takes place. During this handshake, the client verifies the broker's certificate using the trust store, ensuring that the certificate is valid and issued by a trusted CA. If the broker requires client authentication, it in turn verifies the client's certificate using its own trust store.

- Mutual Authentication: By default only the broker presents a certificate (one-way TLS). When the broker is configured with `ssl.client.auth=required`, both the client and the broker present their certificates to each other, establishing mutual authentication. This means that the client verifies the broker's identity, and the broker also verifies the client's identity, so both parties can trust each other's identity.

- Secure Communication: Once the SSL/TLS handshake is completed, a secure and encrypted communication channel is established between the client and the broker. All data exchanged between them is encrypted and protected from unauthorized access.
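
The configuration steps above can be summarized as a pair of property sets, one for the broker and one for the client. This sketch uses standard Kafka configuration keys, but the helper names, file paths, passwords, and the listener port 9093 are assumptions made for illustration.

```python
def broker_ssl_properties(keystore, truststore, password, mutual=True):
    """Broker settings for an SSL listener; mutual=True demands client certificates."""
    return {
        "listeners": "SSL://:9093",                # port is an assumption
        "security.inter.broker.protocol": "SSL",
        "ssl.keystore.location": keystore,         # broker certificate and private key
        "ssl.keystore.password": password,
        "ssl.key.password": password,
        "ssl.truststore.location": truststore,     # CAs the broker trusts
        "ssl.truststore.password": password,
        "ssl.client.auth": "required" if mutual else "none",
    }


def client_ssl_properties(truststore, password, keystore=None):
    """Client settings; a keystore is only needed when the broker requires client certs."""
    props = {
        "security.protocol": "SSL",
        "ssl.truststore.location": truststore,     # CAs the client trusts
        "ssl.truststore.password": password,
    }
    if keystore is not None:
        props.update({
            "ssl.keystore.location": keystore,     # client certificate and private key
            "ssl.keystore.password": password,
            "ssl.key.password": password,
        })
    return props


# Placeholder paths and password for illustration only.
broker = broker_ssl_properties("/path/to/broker.keystore.jks",
                               "/path/to/broker.truststore.jks", "changeit")
one_way_client = client_ssl_properties("/path/to/client.truststore.jks", "changeit")
mutual_client = client_ssl_properties("/path/to/client.truststore.jks", "changeit",
                                      keystore="/path/to/client.keystore.jks")
```

Each dictionary maps directly onto lines of a `key=value` properties file. Reusing one password for every store keeps the sketch short and is not a recommendation.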

Best Practices for Kafka Authentication

Implementing authentication in Kafka requires following best practices to ensure a robust and secure setup.

Here are key best practices for Kafka authentication:

- Choose strong authentication mechanisms such as Kerberos, SCRAM over TLS, or mutual SSL/TLS, and avoid sending PLAIN credentials over unencrypted listeners.

- Enable mutual authentication to verify the identities of clients and brokers.

- Store authentication credentials securely using secure credential stores.

- Regularly rotate authentication credentials to minimize the risk of unauthorized access.

- Consider implementing multi-factor authentication for administrative access.

- Securely distribute keys and certificates used in SSL/TLS authentication.

- Centralize authentication and authorization using systems like LDAP or Active Directory.

- Keep the Kafka cluster and authentication components up to date with security patches.

- Monitor and audit authentication events to detect suspicious access attempts.

- Provide training and awareness programs on authentication best practices.

- Conduct regular security assessments to identify vulnerabilities and address them promptly.
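
The rotation practice above is easier to follow when expiring credentials are flagged automatically. A minimal sketch, assuming certificate expiry dates have already been collected into a dictionary (the names, dates, and 30-day warning window are hypothetical):

```python
from datetime import datetime, timedelta, timezone


def due_for_rotation(expiry_by_name, now, warn_days=30):
    """Return the names of credentials that expire within warn_days of now."""
    cutoff = now + timedelta(days=warn_days)
    return sorted(name for name, expires in expiry_by_name.items() if expires <= cutoff)


# Hypothetical inventory of certificate expiry dates.
now = datetime(2024, 1, 1, tzinfo=timezone.utc)
inventory = {
    "broker-1": datetime(2024, 1, 15, tzinfo=timezone.utc),
    "broker-2": datetime(2024, 6, 1, tzinfo=timezone.utc),
    "client-app": datetime(2023, 12, 20, tzinfo=timezone.utc),  # already expired
}
expiring = due_for_rotation(inventory, now)
```

Here `expiring` contains `broker-1` and `client-app`; a scheduled job could run such a check and alert operators before a certificate lapses.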

Conclusion

- Apache Kafka is a distributed event streaming platform that enables high-throughput, fault-tolerant messaging and real-time data processing in a scalable manner.

- Authentication in Kafka is crucial for securing data, preventing unauthorized access, maintaining user accountability, and ensuring the integrity of interactions within the system.

- Security in Kafka protects data confidentiality, integrity, and availability, prevents unauthorized access, and guards against data breaches, supporting the trustworthiness of the system and its interactions.

- Authentication in Kafka verifies the identity of clients and brokers, ensuring that only trusted and authorized parties can access and interact with the Kafka cluster.

- SSL/TLS authentication in Kafka establishes secure and encrypted communication channels, verifying the identities of clients and brokers using digital certificates.

- SASL authentication in Kafka provides a flexible framework for authentication, supporting various mechanisms like Kerberos, OAuth, and SCRAM to verify client and broker identities.

- Implementing Kafka SSL/TLS authentication involves generating and distributing certificates, configuring SSL/TLS settings, and enabling mutual authentication between clients and brokers for secure communication.

- Best practices for Kafka authentication include using strong mechanisms, enabling mutual authentication, securing credentials, regular rotation, centralized management, monitoring, and regular assessments.

- Kafka security features include SSL/TLS encryption, authentication (SASL), authorization (ACLs), secure inter-broker communication, and integration with external authentication systems for enhanced security.