Managing Kafka Consumer Errors: Strategies for Retry and Recovery

Overview

Error handling, retry, and recovery are central to building reliable Kafka consumers. A consumer has to handle failures gracefully to preserve data integrity and avoid disrupting downstream processing. In practice this means retrying transient failures, defining a strategy for non-recoverable errors, and relying on recovery mechanisms such as offset tracking and periodic checkpoints so that processing can resume from the last known good position. Combined, these techniques let developers build consumer applications that survive errors and keep processing data reliably.

Introduction

Error handling, retry, and recovery mechanisms determine how reliable and resilient a Kafka consumer application is. In this topic, we will explore the key concepts and strategies involved in Kafka consumer error handling, including retry mechanisms for transient failures and handling strategies for non-recoverable errors.

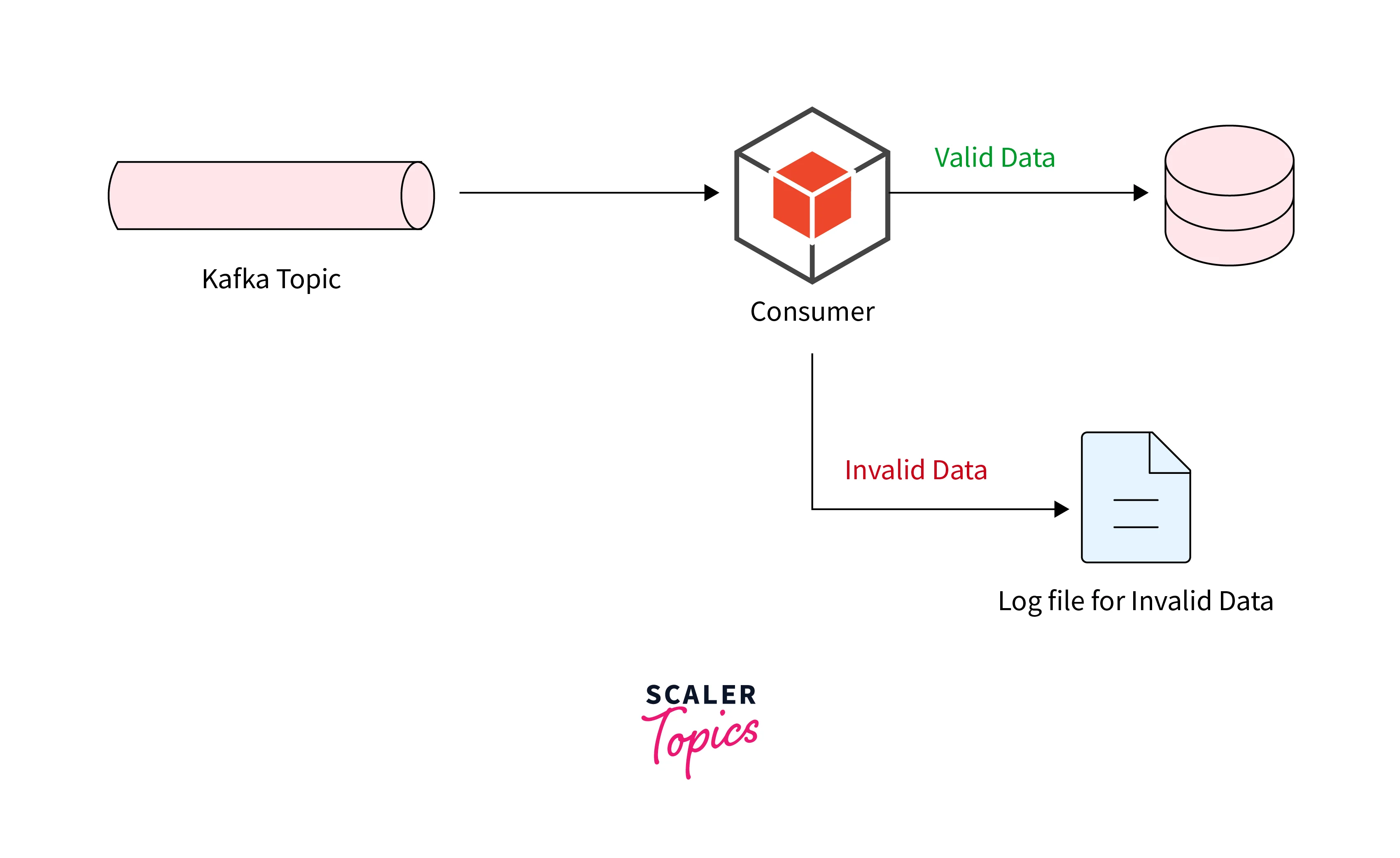

Not all errors can be resolved through retries. Some errors indicate more serious issues, such as invalid data formats or violations of business rules. In such cases, consumers need to implement appropriate error handling and recovery strategies. This involves logging the error, recording the message as failed, and potentially sending it to an error queue for further analysis or manual intervention. The consumer can then continue processing the remaining messages while keeping a record of the failed messages for future review.

Brief Overview of Apache Kafka

Apache Kafka is an open-source distributed streaming platform, originally developed at LinkedIn and now maintained by the Apache Software Foundation. Its primary purpose is to manage and process large volumes of data streams in real time. Because it scales horizontally and stays available when individual servers fail, Kafka has become a popular choice for building fault-tolerant data pipelines and event-driven applications.

One of the key features of Kafka is its ability to handle real-time data streams at scale. It allows for the processing of massive volumes of data in parallel across multiple partitions and can handle millions of messages per second. This scalability makes it suitable for use cases involving large-scale event streaming, data ingestion, and real-time analytics.

Kafka provides fault tolerance by distributing data and partitions across a cluster of servers called brokers. Each partition can be replicated across multiple brokers, ensuring data availability and resilience. In case of a broker failure, Kafka automatically elects a new leader for the affected partitions from the remaining in-sync replicas, allowing data processing to continue with minimal disruption.

Importance of Error Handling in Kafka Consumers

Error handling in Kafka consumers is of utmost importance for ensuring the reliability and robustness of data processing systems. Here are some key reasons why error handling is crucial in Kafka consumers:

- Data Integrity: Error handling ensures that data integrity is maintained throughout the message processing pipeline. By handling errors gracefully, consumers can prevent the propagation of corrupted or invalid data to downstream systems, ensuring the accuracy and consistency of processed data.

- System Resilience: Errors are inevitable in any distributed system, and Kafka consumers are no exception. Robust error-handling mechanisms enable consumers to recover from failures and continue processing messages without causing system-wide disruptions. By implementing proper error-handling strategies, consumers can maintain the stability and resilience of the overall system.

- Transient Failure Handling: Temporary failures, such as network issues or service unavailability, can occur while consuming messages from Kafka. Effective error-handling mechanisms, including retries and backoff strategies, allow consumers to recover from these transient failures. By retrying failed message processing, consumers can overcome temporary errors and successfully process messages when the underlying issues are resolved.

- Recovery and Redelivery: Error handling mechanisms enable consumers to recover from failures and resume processing from the last known good state. Kafka provides features like consumer offsets, which allow consumers to track the position of successfully processed messages. By leveraging these offsets and implementing recovery mechanisms, consumers can ensure that failed messages are not lost and can be reprocessed later, minimizing data loss and ensuring completeness of processing.

Understanding Kafka Consumer Basics

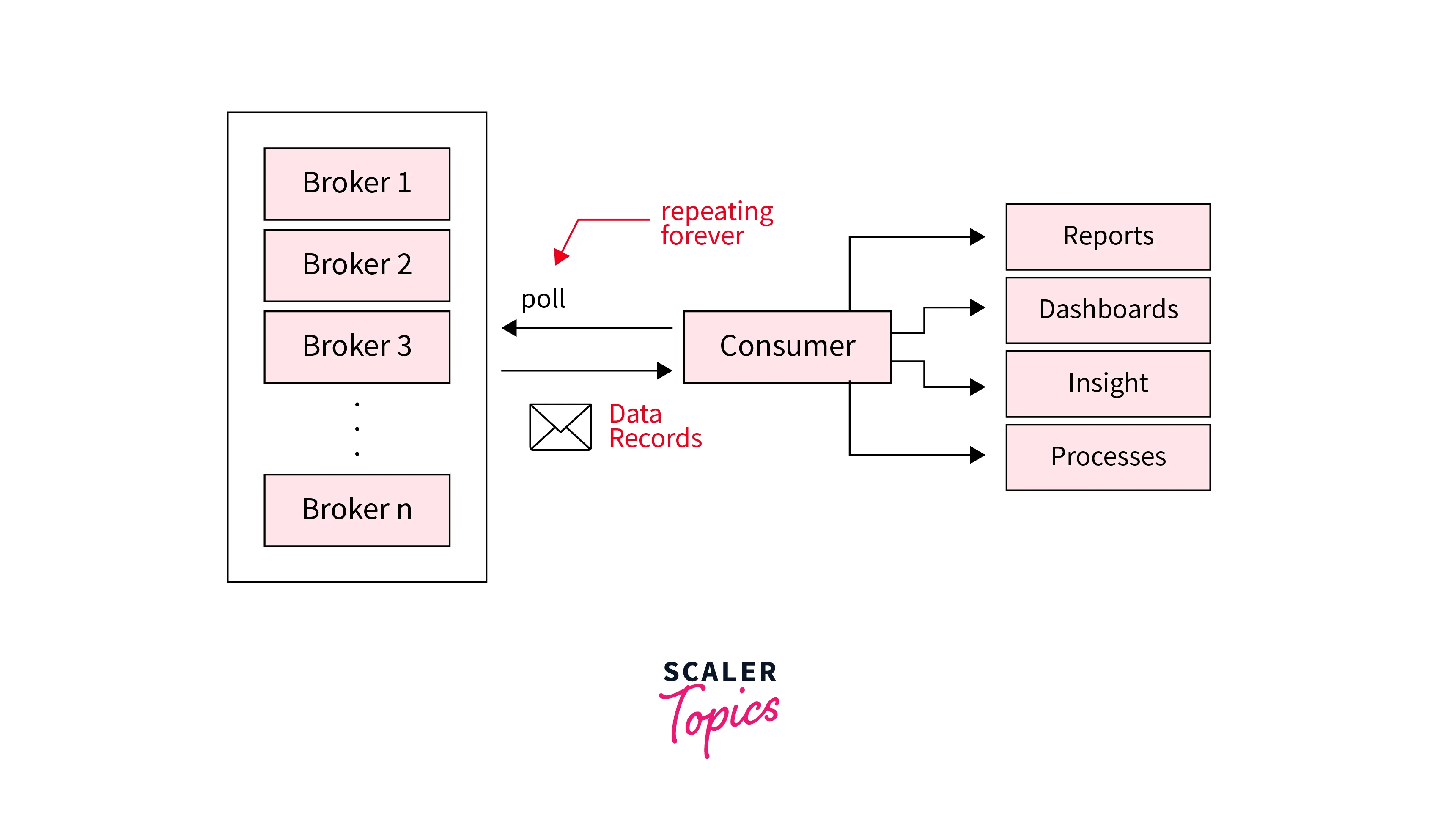

Brief Explanation of Kafka Consumer

A Kafka consumer is an application or component that reads and processes data from Kafka topics. It plays a pivotal role in building data pipelines, real-time analytics, and event-driven architectures. Consumers subscribe to specific topics and consume messages published by producers.

When consuming messages, consumers are organized into consumer groups. Each consumer group consists of one or more consumer instances that work together to consume messages from a topic. Kafka automatically balances the workload across the consumers within a consumer group.

Consumers interact with Kafka using the Kafka consumer API or client libraries, which provide the necessary functionalities to subscribe to topics, fetch messages, and process them. Consumers can control the rate at which they fetch messages and can process them according to their application logic.

Kafka topics are divided into partitions, and each consumer is assigned one or more partitions to read from. This partition assignment enables parallelism and allows multiple consumers within a consumer group to process messages concurrently.
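To make the idea of partition assignment concrete, here is a minimal sketch that spreads partitions over the consumers of a group in round-robin order. It is a simplified illustration only: the function name `assign_round_robin` and the consumer names are hypothetical, and Kafka's own assignment strategies are configurable and more involved than this.

```python
from collections import defaultdict


def assign_round_robin(partitions, consumers):
    """Spread partitions over consumers one at a time, in sorted order."""
    assignment = defaultdict(list)
    ordered = sorted(consumers)
    for index, partition in enumerate(sorted(partitions)):
        # Each partition goes to exactly one consumer in the group.
        assignment[ordered[index % len(ordered)]].append(partition)
    return dict(assignment)


# Six partitions shared by two consumers, then by three after one joins.
two = assign_round_robin(range(6), ["consumer-a", "consumer-b"])
three = assign_round_robin(range(6), ["consumer-a", "consumer-b", "consumer-c"])
print(two)
print(three)
```

Each partition is owned by a single consumer at a time, so adding consumers beyond the number of partitions would leave the extra consumers idle in this sketch.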

To keep track of its progress, each consumer maintains an offset for every partition it reads. The consumer commits the offset to indicate how far it has processed; by convention the committed value is the offset of the next message to read, that is, one past the last message processed. Kafka stores the committed offsets for each consumer group, enabling fault tolerance and allowing consumers to resume from where they left off in case of failures or restarts.
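The commit-and-resume behaviour can be sketched without a broker. The example below is an in-memory simulation under stated assumptions: `InMemoryOffsetStore` and `consume` are hypothetical stand-ins for Kafka's committed-offset storage and a consumer loop, and a Python list plays the role of one partition.

```python
class InMemoryOffsetStore:
    """Hypothetical stand-in for the committed offsets Kafka keeps per group."""

    def __init__(self):
        self._committed = {}

    def commit(self, group, partition, next_offset):
        self._committed[(group, partition)] = next_offset

    def committed(self, group, partition):
        # Assumption: a group that never committed starts from offset 0.
        return self._committed.get((group, partition), 0)


def consume(log, store, group, partition, handler):
    """Process records from the committed position, committing after each success."""
    offset = store.committed(group, partition)
    while offset < len(log):
        handler(log[offset])  # may raise; nothing is committed in that case
        offset += 1
        store.commit(group, partition, offset)  # offset of the next record to read
    return offset


log = ["a", "b", "c", "d"]
store = InMemoryOffsetStore()
processed = []
fail_once = {"c"}


def handler(record):
    if record in fail_once:
        fail_once.discard(record)
        raise RuntimeError(f"simulated crash on {record!r}")
    processed.append(record)


try:
    consume(log, store, "group-1", 0, handler)
except RuntimeError:
    pass  # the consumer "crashed"; records at offsets 0 and 1 were committed

consume(log, store, "group-1", 0, handler)  # the restart resumes at offset 2
print(processed)
```

Committing only after the handler succeeds gives at-least-once behaviour: a record that fails is read again after the restart rather than skipped.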

Role of Kafka Consumer in the Kafka Ecosystem

In the Kafka ecosystem, Kafka consumers play a crucial role in enabling the consumption and processing of data from Kafka topics. Here are some key roles and contributions of Kafka consumers in the Kafka ecosystem:

- Data Ingestion: Kafka consumers are responsible for ingesting data from Kafka topics, which act as a central data hub or messaging system. Consumers pull messages from Kafka and process them according to their application logic. This enables the seamless flow of data into downstream systems for further processing or analysis.

- Real-time Analytics: Kafka consumers are instrumental in performing real-time analytics on streaming data. By subscribing to specific topics, consumers receive a continuous stream of messages and can process them in real-time to derive valuable insights. This allows organizations to make data-driven decisions and take immediate actions based on the analyzed data.

- Event-Driven Architectures: Kafka consumers are integral components of event-driven architectures. They consume events or messages published by producers and trigger actions or processes based on those events. Consumers enable decoupled, asynchronous communication between different components and systems, facilitating event-driven workflows and microservices architectures.

- Data Integration: Kafka consumers enable seamless integration across different systems and platforms. By subscribing to Kafka topics, consumers can bridge the gap between disparate systems and consume data from various sources. This enables the consolidation and synchronization of data across different systems, facilitating data integration and ensuring data consistency.

- Fault-Tolerance and Scalability: Kafka consumers are designed to be fault-tolerant and scalable. Consumers can be organized into consumer groups, allowing multiple consumers to work together to consume messages from a topic. This enables load balancing and fault tolerance as consumers can distribute the workload and handle failures gracefully. Kafka consumers can automatically recover from failures and resume processing from the last committed offset, ensuring data continuity and system resilience.

- Stream Processing: Kafka consumers serve as the entry point for stream processing frameworks like Apache Spark, Apache Flink, and Kafka Streams. These frameworks leverage Kafka consumers to read data from Kafka topics and perform advanced processing operations, such as filtering, aggregation, joining, and transformations. Kafka consumers enable the seamless integration of stream processing capabilities into the Kafka ecosystem.

Common Errors in Kafka Consumers

When working with Kafka consumers, several common errors can occur. Understanding these errors can help in troubleshooting and implementing robust error-handling strategies. Here are some of the common errors in Kafka consumers:

- Offset Out of Range: This error occurs when a consumer attempts to read from an offset that no longer exists in the partition. It typically happens when a consumer restarts after a long outage and the messages at its committed offset have already been deleted by the retention policy. Handling this error involves resetting the consumer's offset to a valid position, either the earliest or the latest available offset; the auto.offset.reset consumer setting controls which one is used automatically.

- Group Coordinator Not Available: This error indicates that the group coordinator, responsible for managing consumer group metadata, is currently unreachable or offline. It can occur due to network issues or when the coordinator itself encounters problems. Retry mechanisms or waiting for the coordinator to become available again are typical approaches to handling this error.

- Connection Errors: Connection errors can happen due to network disruptions, unavailability of Kafka brokers, or misconfiguration. Examples include "Connection refused" or "Timeout" errors. It is important to handle these errors gracefully by implementing retry logic or considering appropriate back-off strategies.

- Serialization/Deserialization Errors: When consuming messages, serialization or deserialization errors can occur if the consumer cannot interpret the message format correctly. This can happen if the consumer expects a different data format than what is produced by the producer. Ensuring compatibility between producers and consumers and handling deserialization errors appropriately is necessary to avoid data processing failures.

- Consumer Lag: Consumer lag refers to the delay between the production of messages and their consumption by consumers. It can occur when consumers are unable to keep up with the message production rate or if there are insufficient consumer instances to handle the workload. Monitoring and managing consumer lag is important to ensure timely processing of messages and avoid data backlogs.

- Resource Limit Exceeded: Kafka consumers rely on system resources like memory, CPU, and network bandwidth. Resource limit exceeded errors can occur if the consumer exceeds the allocated resources, leading to performance degradation or crashes. Monitoring resource usage and optimizing consumer configurations can help mitigate these errors.

- Application-Specific Errors: In addition to the above common errors, consumers may encounter application-specific errors based on the logic implemented for message processing. These can include business rule violations, data validation failures, or exceptions specific to the consumer's processing logic. Proper error handling and recovery mechanisms should be implemented to handle such errors and prevent disruptions in the overall system.
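Of the items above, consumer lag is the easiest to quantify. It is commonly measured in messages, as the difference between a partition's log end offset and the group's committed offset. The sketch below shows that arithmetic; the function names and the sample offsets are made up for illustration, and treating a partition with no commit as starting from offset 0 is an assumption.

```python
def partition_lag(log_end_offset, committed_offset):
    """Messages written to the partition but not yet committed by the group."""
    return max(log_end_offset - committed_offset, 0)


def total_lag(end_offsets, committed_offsets):
    """Sum lag over partitions; a partition with no commit counts from offset 0."""
    return sum(
        partition_lag(end, committed_offsets.get(partition, 0))
        for partition, end in end_offsets.items()
    )


# Hypothetical snapshot: partition -> offset.
end_offsets = {0: 120, 1: 95, 2: 40}
committed_offsets = {0: 100, 1: 95}
print(total_lag(end_offsets, committed_offsets))
```

A lag that keeps growing over successive snapshots suggests the consumers cannot keep up with the production rate.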

Error Handling and Recovery in Kafka Consumer

Error handling and recovery mechanisms are crucial in Kafka consumers to ensure the reliability and fault-tolerance of data processing. Here are key considerations for error handling and recovery in Kafka consumers:

- Invalid (bad) data is received from Kafka topic: This error occurs when the consumer receives data from a Kafka topic that is in an unexpected or invalid format. It could be due to data corruption, serialization issues, or changes in the data schema. The consumer should handle this error by either skipping the invalid data, logging the error, or taking corrective actions such as notifying the producer or moving the message to a dead letter queue.

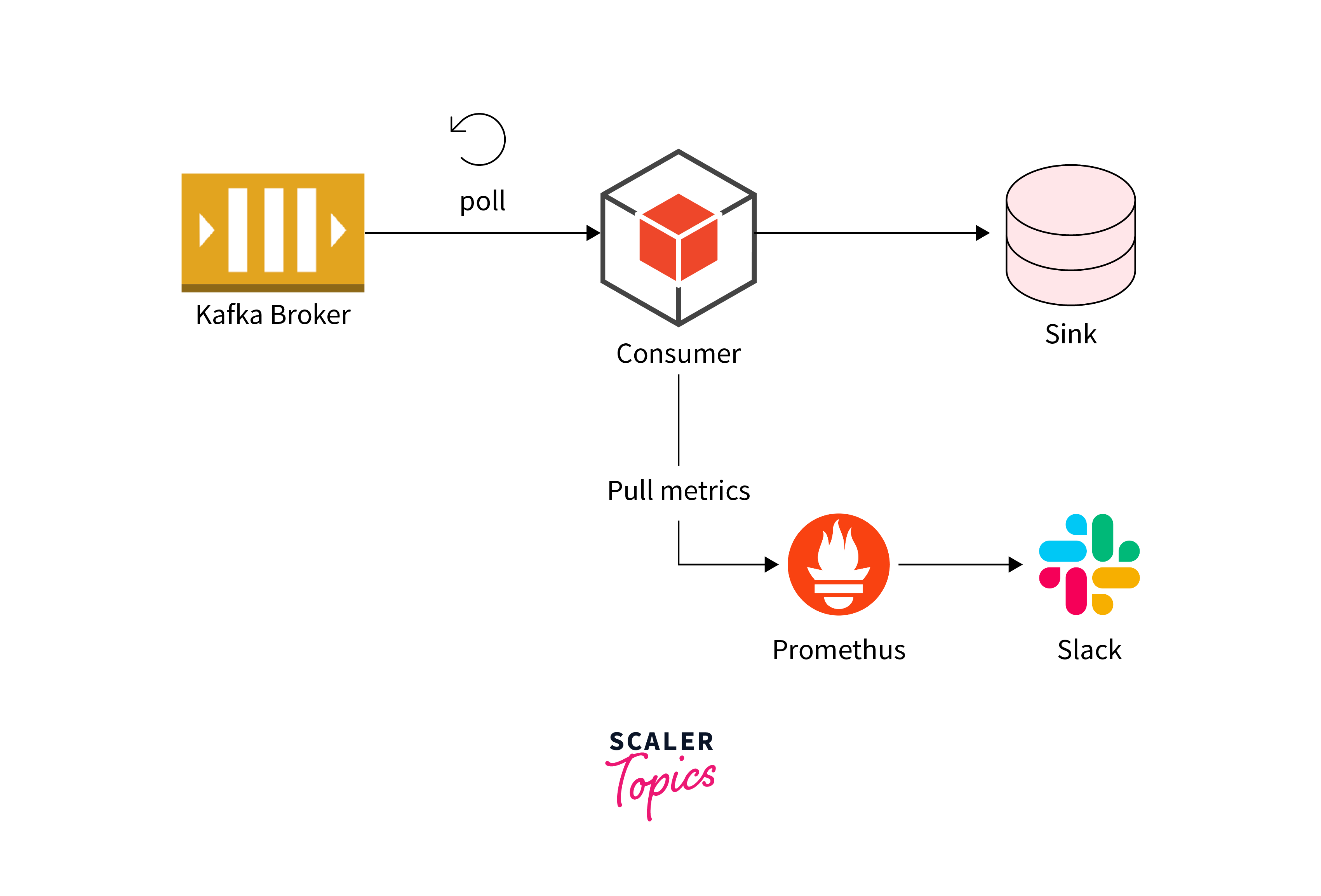

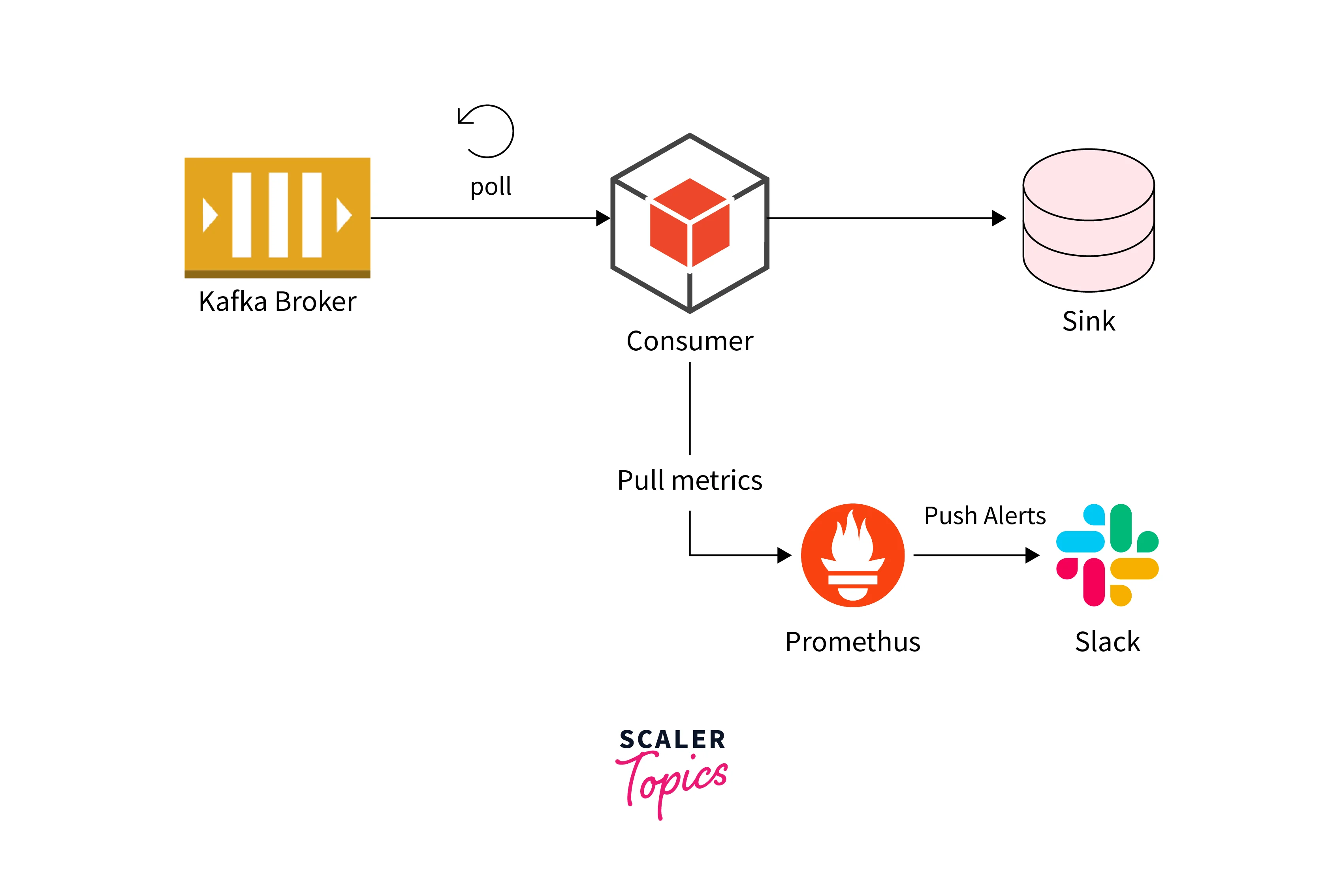

- Kafka broker is not available while consuming your data: This error occurs when the Kafka broker, which hosts the Kafka topics, is not reachable or is down. It can happen due to network issues, hardware failures, or misconfigurations. The consumer should handle this error by implementing a retry mechanism with exponential backoff to periodically attempt to reconnect to the broker. It is also important to monitor the Kafka cluster health and have alerts in place to notify administrators when brokers become unavailable.

- Kafka Consumer could be interrupted: This error occurs when the consumer application is terminated or interrupted. It could happen due to system shutdown, software crashes, or manual termination. To handle this error, the consumer should shut down gracefully by committing offsets (if using manual offset management), closing connections, and releasing resources. It is advisable to implement proper signal handling and shutdown hooks to ensure clean termination.

- Kafka Topic couldn't be found: This error occurs when the consumer tries to consume from a Kafka topic that does not exist. It can happen if the topic is not created or if the consumer is misconfigured with an incorrect topic name. The consumer should handle this error by checking for the topic's existence before consuming and taking appropriate actions, such as creating the topic or notifying the administrator.

- Any exception can happen during the data processing: While consuming data from Kafka, various exceptions can occur, such as network errors, deserialization errors, data validation failures, or exceptions in the business logic of the consumer application. The consumer should implement proper exception handling mechanisms to catch and handle these exceptions.
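The first case in the list, invalid data, can be sketched as follows. This is a minimal illustration assuming JSON-encoded payloads: `handle_record` is a hypothetical helper, and a plain list stands in for a dead letter queue, which in a real system would usually be a separate Kafka topic.

```python
import json


def handle_record(raw_value, process, dead_letters):
    """Deserialize and process one record; park undecodable payloads instead of crashing."""
    try:
        event = json.loads(raw_value)
    except (json.JSONDecodeError, UnicodeDecodeError) as exc:
        # Bad data will not get better on retry, so record it and move on.
        dead_letters.append({"payload": raw_value, "error": str(exc)})
        return False
    process(event)
    return True


records = [b'{"id": 1}', b"not-json", b'{"id": 2}']
seen, dead = [], []
for raw in records:
    handle_record(raw, seen.append, dead)

print(seen)
print(len(dead))
```

Keeping the original payload next to the error message makes it possible to inspect or replay the record later without blocking the records behind it.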

Retry Logic in Kafka Consumer

Implementing a retry logic in Kafka consumers can help handle transient failures and improve the resilience of the system. Here are key steps to consider when implementing retry logic in Kafka consumers:

- Identify Retryable Errors: Determine the types of errors that are eligible for retry. These are typically transient errors such as network timeouts, connection failures, or temporary unavailability of downstream systems. Non-retryable errors, like data validation failures or business rule violations, require a different error-handling approach because retrying them would fail in the same way.

- Define Retry Policies:

  - Maximum Retry Attempts: Determine the maximum number of retries allowed for a failed message. Setting a limit prevents infinite retries in case of persistent failures.

  - Retry Interval: Define the duration between retries. It can be a fixed interval or follow an exponential backoff strategy, where the gap increases with each retry to avoid overloading the system.

  - Retry Exponential Backoff: With exponential backoff, the interval between retries grows by a constant factor, for example 1 second, then 2, then 4, usually up to a maximum delay. This gives the failing dependency time to recover from transient failures.

- Implement Retry Logic:

  - Catch and Handle Retryable Errors: Wrap the message processing logic in a try-catch block to catch the retryable errors. Upon encountering a retryable error, initiate the retry process.

  - Delay and Retry: After a failure, wait for the defined retry interval before retrying the message processing. This delay allows the system to recover and mitigates the impact of transient failures.

  - Retry Attempts: Track the number of retry attempts for each message. Retry the message until the processing succeeds or the maximum number of retry attempts is reached.

  - Retry with Exponential Backoff: Increase the delay between retries according to the exponential backoff policy. This approach reduces the load on the system during transient failures and provides a higher chance of successful processing upon recovery.

- Exponential Backoff Variation: Consider using variations of exponential backoff, such as jitter, to introduce randomness in the retry interval. Adding jitter helps distribute the retry attempts more evenly across consumers and avoids synchronization effects.

- Error Handling after Exhausting Retries:

  - Logging: Log the details of the failed message and the reason for the failure to aid in troubleshooting and analysis.

  - Error Handling Mechanisms: Consider redirecting the failed message to an error topic/queue or dead-letter queue for further investigation or manual intervention.
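The steps above can be sketched in a few lines. This is an illustrative sketch, not a definitive implementation: `RetryableError`, `backoff_delay`, and `process_with_retry` are hypothetical names, the default values are arbitrary, the jitter variant shown is "full jitter" (a random delay between zero and the exponential bound), and a list stands in for the dead-letter queue.

```python
import random
import time


class RetryableError(Exception):
    """Hypothetical marker for transient failures (timeouts, unavailable services)."""


def backoff_delay(attempt, base=0.5, cap=30.0, rng=random):
    """Full-jitter exponential backoff: random delay in [0, min(cap, base * 2**attempt)]."""
    return rng.uniform(0, min(cap, base * (2 ** attempt)))


def process_with_retry(record, handler, dead_letters, max_attempts=4, sleep=time.sleep):
    """Run handler(record), retrying retryable errors, then dead-letter on exhaustion."""
    last_error = None
    for attempt in range(max_attempts):
        try:
            handler(record)
            return True
        except RetryableError as exc:
            last_error = exc
            if attempt < max_attempts - 1:
                sleep(backoff_delay(attempt))  # wait before the next attempt
        except Exception as exc:
            # Non-retryable: retrying would fail the same way, so stop immediately.
            last_error = exc
            break
    dead_letters.append({"record": record, "error": repr(last_error)})
    return False


calls = {"count": 0}


def flaky(record):
    """Simulated handler that times out twice and then succeeds."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise RetryableError("downstream timeout")


delays, dead = [], []
# Passing delays.append as `sleep` records the waits instead of actually sleeping.
ok = process_with_retry({"id": 7}, flaky, dead, sleep=delays.append)
print(ok, len(delays), dead)
```

Injecting the `sleep` function keeps the example fast to run and easy to test. Note that sleeping inside the consumer loop delays every record behind the failing one, so long backoff caps may call for a separate retry topic instead.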

Error Handling Best Practices

Error handling in Kafka consumers is crucial for building robust and reliable data processing systems. Here are some best practices to consider when implementing error handling in Kafka consumers:

- Proper Logging: Implement comprehensive logging to capture detailed information about errors and exceptions. Log relevant data such as error messages, stack traces, and contextual information. This helps with troubleshooting, identifying the root cause of errors, and monitoring the health of the consumer.

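- Use Consumer Error Callbacks: Many client libraries and frameworks expose hooks that are invoked when the consumer encounters an error. The exact names depend on the client: for example, the Java client's commitAsync accepts an OffsetCommitCallback that reports commit failures, librdkafka-based clients accept an error callback (error_cb), and Spring for Apache Kafka lets you plug in a CommonErrorHandler. Register the hooks your client offers and use them to log, retry, or escalate errors appropriately.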

- Graceful Recovery and Restart: Plan for graceful recovery and restart of the consumer in the event of failures. Ensure that the consumer can resume processing from the last committed offset or a specific checkpoint to avoid data loss. Because messages processed after the last commit may be delivered again, make processing idempotent where possible to limit the effect of duplicates. Consider using frameworks like Kafka Streams or Apache Flink that provide built-in recovery and checkpointing mechanisms.

- Implement Retry Logic: For transient errors, implement retry mechanisms to handle temporary issues. Retry failed message processing after a delay or with an exponential backoff strategy. However, be cautious about retrying indefinitely and set a maximum number of retry attempts to prevent infinite loops.

- Dead-Letter Queue: Redirect failed messages to a separate error or dead-letter queue for further analysis or manual intervention. This allows you to isolate and investigate problematic messages without impacting the main processing flow. Dead-letter queues provide an opportunity to manually handle exceptional cases or fix errors before reprocessing.

- Monitor Consumer Lag: Monitor the consumer lag, which represents the delay between message production and consumption. Excessive consumer lag can indicate issues such as processing bottlenecks, resource limitations, or misconfiguration. Regularly track and analyze consumer lag to ensure timely processing and identify potential performance issues.

- Implement Circuit Breaker Pattern: Consider implementing the Circuit Breaker pattern to detect and handle repeated failures. When calls to a downstream system keep failing, the breaker opens and the consumer temporarily stops sending work to that system (for example, by pausing consumption), then tries again after a cool-down period. This avoids overwhelming both the consumer and the failing system with operations that are bound to fail.

- Error Reporting and Alerting: Set up proper error reporting and alerting mechanisms to notify relevant stakeholders about critical errors or exceptional scenarios. Send notifications when error rates exceed predefined thresholds or when specific error patterns are detected.

- Testing and Monitoring: Test error-handling scenarios during development and conduct regular monitoring of the error-handling process. Use tools and frameworks to simulate error conditions and ensure that the consumer behaves as expected in different error scenarios.

- Documentation and Knowledge Sharing: Document the error handling strategies, patterns, and practices followed in the Kafka consumer. Share this information with the development team and stakeholders to ensure a common understanding of error-handling mechanisms and facilitate effective collaboration and troubleshooting.
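Of the practices listed, the Circuit Breaker pattern is the least obvious to implement, so here is a minimal sketch of one. The class name, thresholds, and the injectable `clock` parameter are assumptions made for illustration; production code would more likely rely on an existing resilience library.

```python
import time


class CircuitBreaker:
    """Opens after consecutive failures and allows a trial call after a cool-down."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def allow(self):
        """Return True when a call to the downstream system may be attempted."""
        if self.opened_at is None:
            return True
        # Half-open: permit a trial call once the cool-down has elapsed.
        return self.clock() - self.opened_at >= self.reset_timeout

    def record_success(self):
        self.failures = 0
        self.opened_at = None

    def record_failure(self):
        self.failures += 1
        if self.failures >= self.failure_threshold:
            self.opened_at = self.clock()  # open, or re-open after a failed trial


now = [0.0]  # fake clock so the example runs instantly
breaker = CircuitBreaker(failure_threshold=2, reset_timeout=10.0, clock=lambda: now[0])
breaker.record_failure()
breaker.record_failure()  # threshold reached: the circuit opens
blocked = breaker.allow()  # still cooling down
now[0] = 10.0
trial = breaker.allow()  # cool-down elapsed: a trial call is permitted
print(blocked, trial)
```

In a consumer loop, one would check `allow()` before calling the downstream system and, while it returns False, hold off on processing, for instance by pausing the assigned partitions if the client supports it.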

Conclusion

- Kafka consumers play a critical role in processing data streams in real-time and need robust error-handling mechanisms to ensure reliable and fault-tolerant data processing.

- Retry logic is essential for handling transient failures. Implement retry mechanisms with appropriate retry intervals and back-off strategies to retry failed message processing after encountering errors.

- Proper logging is crucial for capturing error details and facilitating troubleshooting. Log error messages, stack traces, and contextual information for effective analysis and debugging.

- Graceful recovery and restart capabilities are necessary for consumers to resume processing from the last committed offset or a specific checkpoint after failures, ensuring data integrity and avoiding duplicates.

- Dead-letter queues or error topics can be used to redirect failed messages for further analysis or manual intervention, isolating them from the main processing flow.

- Monitoring consumer lag helps identify processing bottlenecks or resource limitations, ensuring timely message consumption and reducing delays between production and consumption.

- Implementing the Circuit Breaker pattern allows consumers to temporarily block message consumption from a failing source or downstream system, preventing cascading failures and avoiding overloading the consumer.

- Error reporting and alerting mechanisms should be set up to notify stakeholders about critical errors or exceptional scenarios, enabling prompt actions and troubleshooting.