Implementing DCGAN for Generating Images in Keras

Overview

DCGAN (Deep Convolutional Generative Adversarial Network) is a generative model that can generate new, previously unseen images by learning from a training dataset.

Implementing a DCGAN in Keras involves preprocessing the training data and defining a generator, a discriminator, and a GAN model that combines the two.

The GAN model is then trained using an optimizer and a loss function for several epochs.

Prerequisites

- CNN:

A Convolutional Neural Network (CNN) is a deep learning model well-suited to image classification and recognition tasks. It passes an image through convolutional layers, which apply learned filters to the input, and pooling layers, which reduce its spatial size. The filters are trained to recognize specific features or patterns in the image, such as edges, corners, or textures. In a classification CNN, the output is a prediction of the class or label the input image belongs to. CNNs have succeeded in many image classification tasks and are a key component of many state-of-the-art image recognition systems.
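To make the convolution-and-pooling idea concrete, here is a small NumPy sketch (not how TensorFlow implements it internally) that slides a hand-written vertical-edge filter over a toy 6x6 image and then applies 2x2 max pooling. Like deep learning libraries, it computes a cross-correlation (the kernel is not flipped). The function names conv2d_valid and max_pool2x2 are illustrative, and in a real CNN the filter values are learned rather than written by hand.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.zeros((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool2x2(x):
    """Keep the maximum of each non-overlapping 2x2 window, halving height and width."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    x = x[:h, :w]
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

# Toy image: dark left half, bright right half, so there is one vertical edge.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-written vertical-edge detector (a trained CNN would learn such filters).
kernel = np.array([[-1, 0, 1]] * 3)

feature = conv2d_valid(image, kernel)   # 4x4 map, strong response around the edge
pooled = max_pool2x2(feature)           # 2x2 map, smaller but still "sees" the edge
```

Each row of feature is [0, 3, 3, 0]: the filter fires only where its window straddles the edge, and pooling keeps that response while shrinking the map.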

- TensorFlow & Keras:

TensorFlow is an open-source software library for machine learning and artificial intelligence developed by Google. It offers a wide range of tools and APIs for building and training machine learning models, as well as for visualizing and debugging them. Keras is a high-level deep learning API that runs on top of TensorFlow (Keras 3 can also run on JAX and PyTorch). Keras provides a simple and intuitive interface for defining and training deep learning models, making it a popular choice among machine learning practitioners.
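As a minimal illustration of the define, compile, and fit workflow Keras provides, the sketch below trains a tiny binary classifier on random data. The data, layer sizes, and epoch count are arbitrary assumptions chosen only to show the API.

```python
import numpy as np
import tensorflow as tf

# Toy data: 32 samples with 8 features and a binary label (random, for illustration only).
rng = np.random.default_rng(0)
x = rng.normal(size=(32, 8)).astype("float32")
y = (x[:, 0] > 0).astype("float32")

# Define: a small stack of layers.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(8,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])

# Compile: choose the optimizer, the loss and the metrics to report.
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# Train for two epochs, then predict probabilities for four samples.
history = model.fit(x, y, epochs=2, batch_size=8, verbose=0)
preds = model.predict(x[:4], verbose=0)
```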

GANs and DC-GAN:

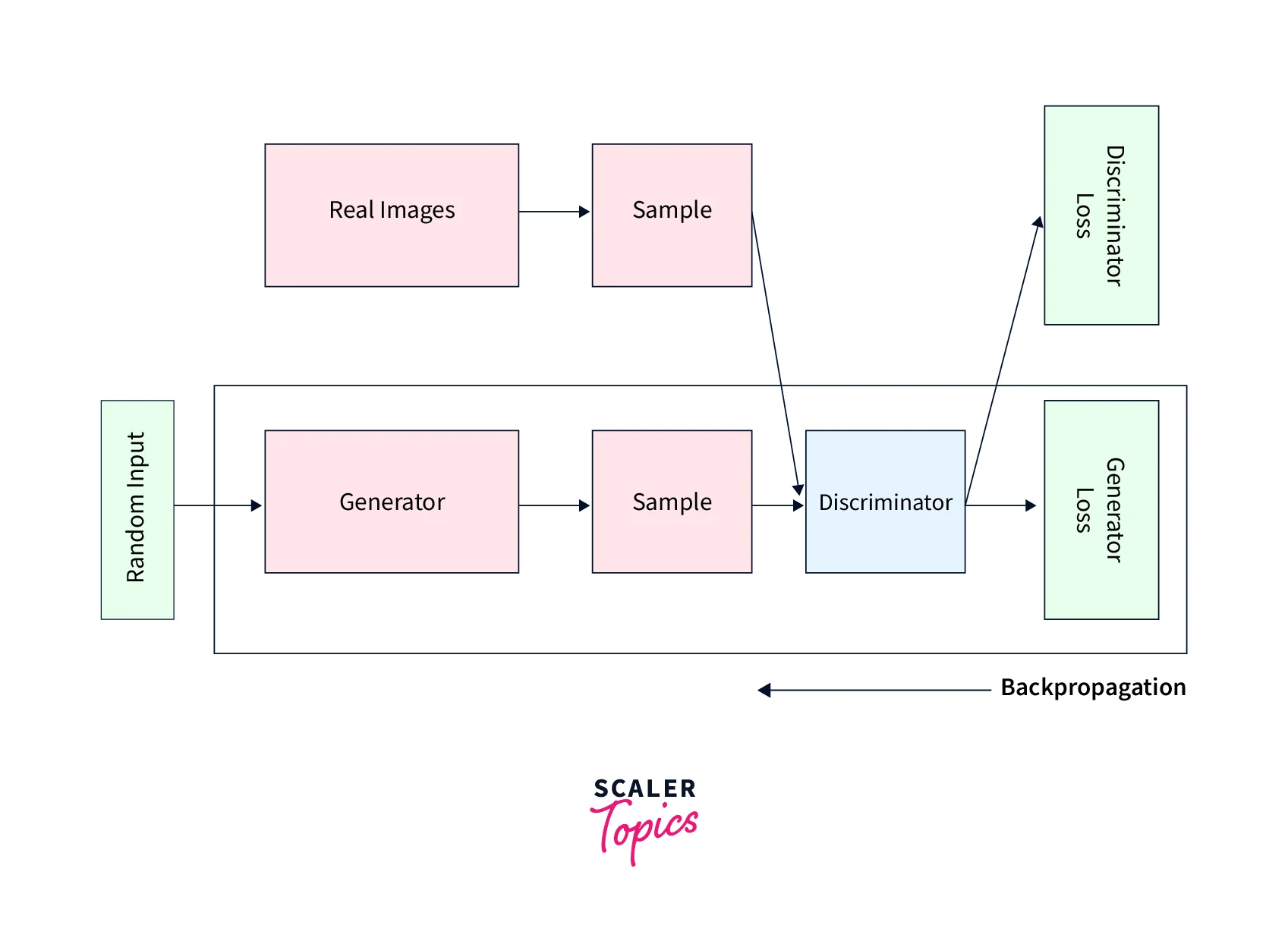

Generative Adversarial Networks (GANs) are a type of deep learning model designed to generate synthetic data that is similar to some training data. They consist of two networks:- a generator network and

- a discriminator network.

The generator network generates synthetic data, while the discriminator network tries to distinguish between synthetic data and real data, such as generating synthetic images, audio, and text.

Deep Convolutional Generative Adversarial Networks (DCGANs) are a variant of Generative Adversarial Networks (GANs) that use Convolutional Neural Networks (CNNs) as the generator and discriminator networks. They are particularly well-suited for generating synthetic images, as CNNs are effective at learning and representing the spatial hierarchies present in images. DCGANs have successfully generated many synthetic images, including photorealistic images of faces, animals, and landscapes. Like other GANs, DCGANs are trained in an adversarial manner, with the generator network trying to produce synthetic images that are indistinguishable from real images and the discriminator network trying to identify whether each image is real or synthetic correctly.
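For reference, the adversarial game described above is usually written as the minimax objective from the original GAN paper (Goodfellow et al., 2014), where D(x) is the discriminator's estimated probability that x is real, G(z) is the image generated from noise z, p_data is the data distribution, and p_z is the noise distribution:

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

In practice the generator is usually trained with the non-saturating variant, maximizing the expected value of log D(G(z)) instead, which amounts to binary cross-entropy with the label "real" on generated images.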

How Are We Going to Build This?

You can build a DCGAN in TensorFlow and Keras by following these steps:

- Step 1: Define the model architecture for both the generator and discriminator networks. The generator takes random noise as input and outputs an image, while the discriminator takes an image as input and outputs a prediction of whether the image is real or fake.

- Step 2: Compile the models using the Adam optimization algorithm and binary cross-entropy loss.

- Step 3: Generate a batch of random noise as input for the generator.

- Step 4: Use the generator to produce a batch of fake images.

- Step 5: Concatenate the fake images with a batch of real images from the training dataset.

- Step 6: Train the discriminator on this mixed batch, using the label "real" for the real images and "fake" for the fake images.

- Step 7: Generate a new batch of random noise.

- Step 8: Use the generator to produce a batch of fake images from this noise.

- Step 9: Train the generator by passing these fake images through the discriminator and computing the binary cross-entropy loss against the label "real", so that the generator learns to produce images the discriminator accepts as real. Only the generator's weights are updated in this step, again using Adam.

- Step 10: Repeat steps 3-9 until the generator produces satisfactory fake images.
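The loss arithmetic behind steps 6 and 9 can be checked by hand. The NumPy sketch below uses made-up discriminator outputs (probabilities of "real") for two real and two fake images; these values and the helper name binary_cross_entropy are illustrative assumptions. The discriminator is scored against real = 1 / fake = 0 labels, while the generator is scored against the label 1 on its fakes.

```python
import numpy as np

def binary_cross_entropy(labels, probs, eps=1e-7):
    """Mean binary cross-entropy between 0/1 labels and predicted probabilities."""
    probs = np.clip(probs, eps, 1 - eps)
    return float(np.mean(-(labels * np.log(probs) + (1 - labels) * np.log(1 - probs))))

# Hypothetical discriminator outputs: probability that each image is real.
d_real = np.array([0.9, 0.8])   # on two real images
d_fake = np.array([0.2, 0.1])   # on two generated images

# Steps 5-6: mixed batch with labels 1 = real, 0 = fake.
d_probs = np.concatenate([d_real, d_fake])
d_labels = np.concatenate([np.ones(2), np.zeros(2)])
d_loss = binary_cross_entropy(d_labels, d_probs)   # about 0.164

# Step 9: the generator wants its fakes called "real", so the target is 1.
g_loss = binary_cross_entropy(np.ones(2), d_fake)  # about 1.956
```

Here the discriminator is doing well (low d_loss), so the generator's loss is high, which is the signal that pushes it to improve.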

Final Output

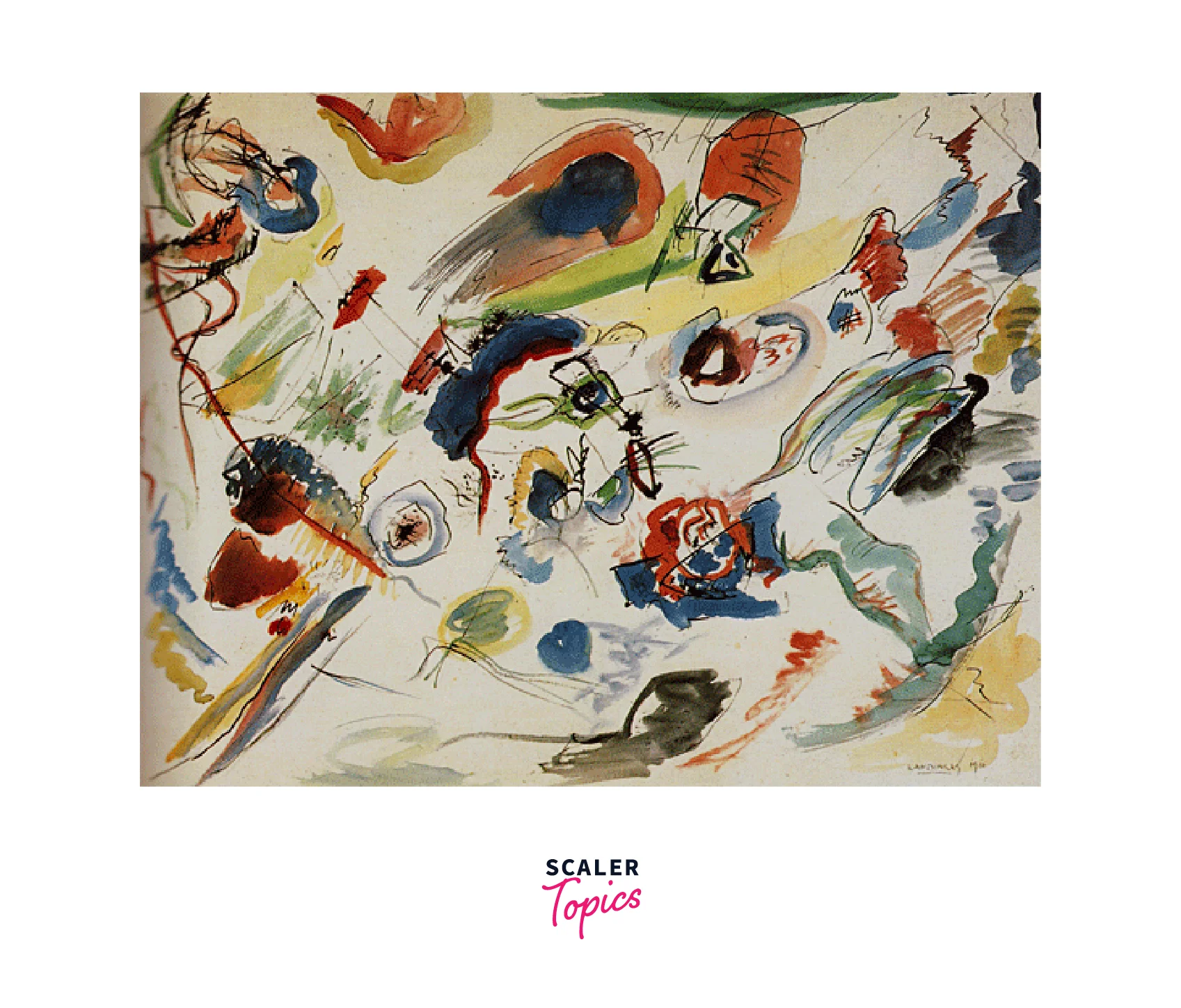

In this project, we will generate abstract images. The image above shows an example of the kind of output we will build.

Requirements

The following packages are required to run the code in this blog post:

- os

- cv2

- numpy

- pandas

- glob

- matplotlib

- seaborn

- sklearn

- tensorflow

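You can install these packages using pip, for example: `pip install opencv-python numpy pandas matplotlib seaborn scikit-learn tensorflow`. Note that os and glob are part of the Python standard library, and that cv2 and sklearn are installed under the names opencv-python and scikit-learn.

Alternatively, you can install all the required packages at once by running `pip install -r requirements.txt`, where requirements.txt is a text file containing the names of the required packages, one per line.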

Implementing DCGAN for Generating Images in Keras

Get the Sample Dataset and Visualize the Dataset

To download the dataset, we use the Kaggle API, which you can install with `pip install kaggle`. After installing it, create an API token from the Account section of your Kaggle profile and place the downloaded kaggle.json file in ~/.kaggle/ (or export its values as the KAGGLE_USERNAME and KAGGLE_KEY environment variables). Then we can download the data.

We can download the data by using this command.

Once the dataset is downloaded, we need to unzip the archive.
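If you prefer to unzip from Python rather than the shell, the standard-library zipfile module is enough. This is a sketch: the helper name unzip_dataset and the paths in the usage line are hypothetical and should be replaced with the archive Kaggle actually downloads.

```python
import zipfile
from pathlib import Path

def unzip_dataset(zip_path, extract_to):
    """Extract every member of `zip_path` into `extract_to`; return the extracted file paths."""
    extract_to = Path(extract_to)
    extract_to.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(extract_to)
        # Skip directory entries, keep only files.
        names = [name for name in archive.namelist() if not name.endswith("/")]
    return [extract_to / name for name in names]
```

For example, files = unzip_dataset("dataset.zip", "data") would extract a hypothetical dataset.zip into a data folder and return the list of extracted files.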

After unzipping the dataset, we can easily view our data. For example, our sample data looks like this.

Make the Input Data Pipeline via tf.data

Next, we build the input data pipeline with tf.data so that batches of preprocessed images can be fed to the DCGAN model during training.

Define the Hyperparameters

After defining the hyperparameters, let us see how many images are in our dataset.
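The article's exact hyperparameter values are not reproduced here, so the sketch below uses assumed settings (64x64 images, batch size 32, a 100-dimensional latent vector, 50 epochs) and counts the images with glob. The constant names and the list_images helper are hypothetical.

```python
import glob
import os

# Assumed hyperparameters for illustration; tune them for your own data.
IMAGE_SIZE = 64    # images are resized to IMAGE_SIZE x IMAGE_SIZE
BATCH_SIZE = 32
LATENT_DIM = 100   # length of the generator's input noise vector
EPOCHS = 50

def list_images(data_dir, extensions=("jpg", "jpeg", "png")):
    """Return the sorted paths of all image files under data_dir, searched recursively."""
    paths = []
    for ext in extensions:
        paths += glob.glob(os.path.join(data_dir, "**", f"*.{ext}"), recursive=True)
    return sorted(paths)
```

Calling print(len(list_images("data"))) would print the number of images found, assuming the archive was extracted into a data folder. Note that glob matching is case-sensitive on Linux, so files ending in .JPG would need an extra extension entry.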

To complete the pipeline, we need a utility function that reads and preprocesses the images.

Preprocessor Utilities
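A possible version of that utility, assuming JPEG inputs and a tanh-activated generator, so real images are scaled to [-1, 1] to match the generator's output range. load_and_preprocess is a hypothetical name, and the 64x64 target size is an assumption.

```python
import tensorflow as tf

IMAGE_SIZE = 64  # assumed target resolution

def load_and_preprocess(path, image_size=IMAGE_SIZE):
    """Read a JPEG file, resize it and scale its pixels from [0, 255] to [-1, 1]."""
    raw = tf.io.read_file(path)
    image = tf.io.decode_jpeg(raw, channels=3)                 # uint8, shape (H, W, 3)
    image = tf.image.resize(image, (image_size, image_size))   # float32, bilinear
    return image / 127.5 - 1.0                                 # [0, 255] -> [-1, 1]
```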

Now let's make the pipeline.

Data Pipeline
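One way to assemble the pipeline, as a sketch: shuffle the file paths, decode and scale each image in parallel, batch, and prefetch. It includes a compact JPEG loader so it runs on its own; make_dataset and its default arguments are assumptions. drop_remainder=True keeps every batch the same size, which keeps the GAN's label tensors consistent from batch to batch.

```python
import tensorflow as tf

def make_dataset(paths, batch_size=32, image_size=64, shuffle_buffer=1000):
    """Build a shuffled, batched, prefetched tf.data pipeline of images scaled to [-1, 1]."""
    def load(path):
        image = tf.io.decode_jpeg(tf.io.read_file(path), channels=3)
        image = tf.image.resize(image, (image_size, image_size))
        return image / 127.5 - 1.0

    ds = tf.data.Dataset.from_tensor_slices(paths)
    ds = ds.shuffle(shuffle_buffer)                          # shuffle cheap file paths, not pixels
    ds = ds.map(load, num_parallel_calls=tf.data.AUTOTUNE)  # decode images in parallel
    ds = ds.batch(batch_size, drop_remainder=True)          # fixed-size batches
    return ds.prefetch(tf.data.AUTOTUNE)                    # overlap loading with training
```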

Visualize the Training Samples

Let's visualize our sample dataset:
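A simple way to view a batch is a matplotlib grid. The hypothetical plot_batch helper below assumes images scaled to [-1, 1] and maps them back to [0, 1] for display.

```python
import matplotlib.pyplot as plt
import numpy as np

def plot_batch(images, ncols=4):
    """Plot a batch of images in [-1, 1] on a grid and return the matplotlib figure."""
    images = np.asarray(images)
    nrows = int(np.ceil(len(images) / ncols))
    fig, axes = plt.subplots(nrows, ncols, figsize=(2 * ncols, 2 * nrows), squeeze=False)
    for ax in axes.ravel():
        ax.axis("off")  # hide ticks, including on empty grid cells
    for ax, img in zip(axes.ravel(), images):
        ax.imshow(((img + 1.0) / 2.0).clip(0.0, 1.0))  # [-1, 1] -> [0, 1]
    return fig
```

In a notebook, plot_batch(next(iter(dataset))[:8]) followed by plt.show() would display the first eight images of a batch, where dataset is your tf.data pipeline.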

Implement DC GAN Using TensorFlow and Keras

To implement the DCGAN in Keras, we need to build a generator model and a discriminator model. To build them, we will first write a deconvolution block and a convolution block.

Let's implement the deconvolution block first. A deconvolution block is built around a transposed convolution (also called a fractionally strided convolution, and often loosely called a "deconvolution"), a layer commonly used in convolutional neural networks (CNNs) for image generation and semantic segmentation. It reverses the shape transformation of a standard convolutional layer rather than inverting its computation: it upsamples a feature map instead of downsampling it.
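A minimal sketch of such a block in Keras, following the DCGAN convention of transposed convolution, batch normalization, and ReLU. The function name deconv_block and its defaults (4x4 kernel, stride 2) are assumptions, not the article's exact code. With padding="same" and stride 2, the output height and width are exactly double the input's.

```python
import tensorflow as tf

def deconv_block(x, filters, kernel_size=4, strides=2):
    """Upsample x: transposed convolution -> batch normalization -> ReLU."""
    x = tf.keras.layers.Conv2DTranspose(
        filters, kernel_size, strides=strides, padding="same", use_bias=False
    )(x)  # bias is redundant because batch norm adds its own offset
    x = tf.keras.layers.BatchNormalization()(x)
    return tf.keras.layers.ReLU()(x)
```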

After the deconvolution block, we implement the convolution block. A convolution block is a small group of layers commonly used in CNNs for image processing tasks such as image classification, object detection, and semantic segmentation. It comprises one or more convolutional layers and often includes other types of layers, such as pooling and normalization layers.
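A matching downsampling block, again as a sketch with assumed defaults: a stride-2 convolution followed by optional batch normalization and LeakyReLU with slope 0.2. DCGAN replaces pooling layers with strided convolutions, so this block has no pooling layer; batch normalization is optional because the DCGAN paper leaves it out of the discriminator's first layer. conv_block is a hypothetical name.

```python
import tensorflow as tf

def conv_block(x, filters, kernel_size=4, strides=2, use_bn=True):
    """Downsample x: strided convolution -> optional batch normalization -> LeakyReLU(0.2)."""
    x = tf.keras.layers.Conv2D(
        filters, kernel_size, strides=strides, padding="same", use_bias=not use_bn
    )(x)
    if use_bn:
        x = tf.keras.layers.BatchNormalization()(x)
    return tf.keras.layers.LeakyReLU(0.2)(x)  # slope 0.2 for negative inputs
```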

Generator Model

In a Generative Adversarial Network (GAN), the generator is a neural network responsible for generating new samples similar to a training dataset. In a Deep Convolutional GAN (DCGAN), the generator is a convolutional neural network trained to upsample a lower-dimensional latent code to a higher-dimensional output image. In addition, the generator is typically trained to maximize the probability that the output image will be classified as real by the discriminator, another neural network trained to classify whether an image is real or generated. Together, the generator and discriminator form a two-part model that can generate new samples similar to a given training dataset.

Let's implement the Generator model.
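Below is one possible DCGAN-style generator, assuming a 100-dimensional latent vector and 64x64 RGB outputs. It projects the noise to a 4x4 feature map and then doubles the resolution with four transposed convolutions (4, 8, 16, 32, then 64). The name build_generator and the base_filters width are hypothetical; the layers are written inline so the block runs on its own.

```python
import tensorflow as tf

LATENT_DIM = 100  # assumed noise size

def build_generator(latent_dim=LATENT_DIM, base_filters=64):
    """Map a latent vector to a 64x64x3 image with values in [-1, 1]."""
    inputs = tf.keras.Input(shape=(latent_dim,))
    # Project and reshape the noise into a small 4x4 feature map.
    x = tf.keras.layers.Dense(4 * 4 * base_filters * 8, use_bias=False)(inputs)
    x = tf.keras.layers.BatchNormalization()(x)
    x = tf.keras.layers.ReLU()(x)
    x = tf.keras.layers.Reshape((4, 4, base_filters * 8))(x)
    # Each stride-2 transposed convolution doubles height and width: 4 -> 8 -> 16 -> 32.
    for filters in (base_filters * 4, base_filters * 2, base_filters):
        x = tf.keras.layers.Conv2DTranspose(filters, 4, strides=2, padding="same", use_bias=False)(x)
        x = tf.keras.layers.BatchNormalization()(x)
        x = tf.keras.layers.ReLU()(x)
    # Last upsampling to 64x64 with 3 channels; tanh matches images scaled to [-1, 1].
    outputs = tf.keras.layers.Conv2DTranspose(3, 4, strides=2, padding="same", activation="tanh")(x)
    return tf.keras.Model(inputs, outputs, name="generator")
```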

Discriminator Model

In a Generative Adversarial Network (GAN), the discriminator is a neural network that classifies whether an input image is real or generated. In a Deep Convolutional GAN (DCGAN), the discriminator is a convolutional neural network trained to distinguish between real images and the fake images produced by the generator. It is typically trained to maximize the probability of correctly classifying real images as real and fake images as fake. Together with the generator, the discriminator forms the two-part model that generates new samples similar to the training dataset.

Let's implement the Discriminator model.
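A matching discriminator sketch for 64x64x3 inputs, under the same assumptions: four stride-2 convolutions with LeakyReLU (no batch normalization on the first one), then a single dense unit. It returns a raw logit rather than a probability, so it should be paired with a binary cross-entropy loss created with from_logits=True. build_discriminator is a hypothetical name.

```python
import tensorflow as tf

def build_discriminator(image_size=64, base_filters=64):
    """Map a 64x64x3 image to one logit (positive means "real")."""
    inputs = tf.keras.Input(shape=(image_size, image_size, 3))
    # First layer: no batch normalization, as in the DCGAN paper.
    x = tf.keras.layers.Conv2D(base_filters, 4, strides=2, padding="same")(inputs)
    x = tf.keras.layers.LeakyReLU(0.2)(x)
    # Each stride-2 convolution halves height and width: 32 -> 16 -> 8 -> 4.
    for filters in (base_filters * 2, base_filters * 4, base_filters * 8):
        x = tf.keras.layers.Conv2D(filters, 4, strides=2, padding="same", use_bias=False)(x)
        x = tf.keras.layers.BatchNormalization()(x)
        x = tf.keras.layers.LeakyReLU(0.2)(x)
    x = tf.keras.layers.Flatten()(x)
    outputs = tf.keras.layers.Dense(1)(x)  # raw logit, no sigmoid
    return tf.keras.Model(inputs, outputs, name="discriminator")
```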

Override train_step

train_step is a method of the Keras Model class that runs one training step on a single batch of data. During training, fit calls train_step once per batch: inside it, the loss is computed, its gradients with respect to the model's variables are taken, and an optimizer applies those gradients to minimize the loss.

By default, train_step performs one forward pass, one loss computation, and one gradient-descent update, and returns a dictionary of metric values that fit logs. A GAN needs two updates per batch (one for the discriminator and one for the generator), so we subclass the Keras Model class and override train_step; fit then uses our version to update both networks throughout training.

Let's implement the GAN class and override train_step:
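The following is a sketch of such a GAN class, modeled on the common Keras pattern of overriding compile and train_step; the class and argument names are assumptions, and the discriminator is assumed to output logits. Each call to train_step performs steps 3-9 from the list earlier: one discriminator update on a mixed real/fake batch, then one generator update in which its fakes are labeled "real".

```python
import tensorflow as tf

class GAN(tf.keras.Model):
    """Wraps a generator and a discriminator and customizes fit() through train_step."""

    def __init__(self, generator, discriminator, latent_dim):
        super().__init__()
        self.generator = generator
        self.discriminator = discriminator
        self.latent_dim = latent_dim

    def compile(self, g_optimizer, d_optimizer, loss_fn):
        super().compile()
        self.g_optimizer = g_optimizer
        self.d_optimizer = d_optimizer
        self.loss_fn = loss_fn  # binary cross-entropy on logits
        self.d_loss_tracker = tf.keras.metrics.Mean(name="d_loss")
        self.g_loss_tracker = tf.keras.metrics.Mean(name="g_loss")

    @property
    def metrics(self):
        # Listing the trackers here lets Keras reset them at the start of each epoch.
        return [self.d_loss_tracker, self.g_loss_tracker]

    def train_step(self, real_images):
        batch_size = tf.shape(real_images)[0]

        # Discriminator update: real images labeled 1, generated images labeled 0.
        noise = tf.random.normal((batch_size, self.latent_dim))
        fake_images = self.generator(noise, training=True)
        images = tf.concat([real_images, fake_images], axis=0)
        labels = tf.concat([tf.ones((batch_size, 1)), tf.zeros((batch_size, 1))], axis=0)
        with tf.GradientTape() as tape:
            d_loss = self.loss_fn(labels, self.discriminator(images, training=True))
        grads = tape.gradient(d_loss, self.discriminator.trainable_weights)
        self.d_optimizer.apply_gradients(zip(grads, self.discriminator.trainable_weights))

        # Generator update: fresh noise, and the target label for the fakes is "real" (1).
        noise = tf.random.normal((batch_size, self.latent_dim))
        with tf.GradientTape() as tape:
            logits = self.discriminator(self.generator(noise, training=True), training=True)
            g_loss = self.loss_fn(tf.ones((batch_size, 1)), logits)
        # Only generator weights are updated here, so the discriminator stays fixed.
        grads = tape.gradient(g_loss, self.generator.trainable_weights)
        self.g_optimizer.apply_gradients(zip(grads, self.generator.trainable_weights))

        self.d_loss_tracker.update_state(d_loss)
        self.g_loss_tracker.update_state(g_loss)
        return {m.name: m.result() for m in self.metrics}
```

A typical call would be gan = GAN(generator, discriminator, latent_dim=100), then gan.compile(g_optimizer, d_optimizer, loss_fn) and gan.fit(dataset, epochs=...), where generator, discriminator, and dataset are your own models and tf.data pipeline. Because the generator's gradients are taken only with respect to generator.trainable_weights, the discriminator is effectively frozen during the second update without toggling its trainable flag.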

Create a Callback That Periodically Saves Generated Images
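A sketch of such a callback: it keeps a fixed batch of noise vectors so the saved images are comparable from epoch to epoch, and writes PNG files with tf.io.encode_png. The class name, constructor arguments, and file naming scheme are assumptions; the generator is passed in explicitly rather than read from the model being trained.

```python
import os
import tensorflow as tf

class SaveGeneratedImages(tf.keras.callbacks.Callback):
    """Every `every_n_epochs` epochs, render a fixed noise batch and save the images as PNGs."""

    def __init__(self, generator, latent_dim, out_dir="generated", num_images=4, every_n_epochs=1):
        super().__init__()
        self.generator = generator
        self.out_dir = out_dir
        self.every_n_epochs = every_n_epochs
        # Fixed noise makes the saved images comparable across epochs.
        self.noise = tf.random.normal((num_images, latent_dim))
        os.makedirs(out_dir, exist_ok=True)

    def on_epoch_end(self, epoch, logs=None):
        if (epoch + 1) % self.every_n_epochs != 0:
            return
        images = self.generator(self.noise, training=False)
        # Map the tanh output range [-1, 1] back to uint8 pixels [0, 255].
        images = tf.cast(tf.clip_by_value((images + 1.0) * 127.5, 0.0, 255.0), tf.uint8)
        for i in range(images.shape[0]):
            path = os.path.join(self.out_dir, f"epoch_{epoch + 1:03d}_img_{i}.png")
            tf.io.write_file(path, tf.io.encode_png(images[i]))
```

You would pass an instance to fit, for example callbacks=[SaveGeneratedImages(generator, latent_dim=100, every_n_epochs=5)], where generator is your generator model.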

Train the Model

Let's compile the GAN model:
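Compiling needs optimizers and a loss. The sketch below uses the Adam settings reported in the DCGAN paper (learning rate 0.0002, beta_1 = 0.5) and binary cross-entropy on logits; whether the article uses exactly these values is an assumption. Each network gets its own optimizer instance so that their Adam moment estimates stay separate.

```python
import tensorflow as tf

# Adam settings from the DCGAN paper: learning rate 2e-4, beta_1 = 0.5.
g_optimizer = tf.keras.optimizers.Adam(learning_rate=2e-4, beta_1=0.5)
d_optimizer = tf.keras.optimizers.Adam(learning_rate=2e-4, beta_1=0.5)

# The discriminator is assumed to output raw logits, so the loss applies the sigmoid itself.
loss_fn = tf.keras.losses.BinaryCrossentropy(from_logits=True)

# Sanity check: a logit of 0 means p = 0.5, so the loss is ln 2 for either label.
check = float(loss_fn(tf.ones((1, 1)), tf.zeros((1, 1))))
```

These objects would then be handed to a GAN model whose compile method accepts separate generator and discriminator optimizers plus a loss function; that signature is an assumption about the class being compiled.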

Let's now train our DCGAN model using Keras.

Visualize the Generated Images

After training the model, let's view the generated images.
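A small helper for this, as a sketch: it tiles a batch of generated images in [-1, 1] into a single uint8 mosaic that can be shown with plt.imshow or saved to disk. image_grid is a hypothetical name, and empty cells in the last row stay black.

```python
import numpy as np

def image_grid(images, ncols=4):
    """Tile a batch of images in [-1, 1], shape (N, H, W, C), into one uint8 mosaic."""
    images = np.asarray(images, dtype=np.float32)
    n, h, w, c = images.shape
    nrows = int(np.ceil(n / ncols))
    grid = np.zeros((nrows * h, ncols * w, c), dtype=np.uint8)
    pixels = np.clip((images + 1.0) * 127.5, 0, 255).astype(np.uint8)  # [-1, 1] -> [0, 255]
    for idx in range(n):
        row, col = divmod(idx, ncols)
        grid[row * h:(row + 1) * h, col * w:(col + 1) * w] = pixels[idx]
    return grid
```

For example, image_grid(generator(tf.random.normal((16, 100)), training=False).numpy()) would tile 16 samples, assuming a trained generator with a 100-dimensional latent space.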

What’s Next?

Here are a few things you might want to try after learning about DCGANs:

- Experiment with different architectures: Try changing the size and number of filters in the convolutional layers, or the number of layers in the generator and discriminator. You can also try different building blocks, such as upsampling followed by a regular convolution instead of transposed convolutions, or residual blocks.

- Try different datasets: DCGANs have been used on a wide range of datasets, from small datasets of handwritten digits to large datasets of high-resolution images. Experimenting with different datasets can help you understand how well DCGANs perform on different types of data and identify any challenges you might need to overcome.

- Explore further applications: DCGANs have been used for various applications, such as image generation, image translation, and text-to-image synthesis. Consider exploring some of these applications and how DCGANs can be adapted to them.

- Fine-tune the model: Once you have a working DCGAN, you can try fine-tuning it to improve its performance. This might involve adjusting hyperparameters, such as the learning rate and batch size, or adding regularization techniques, such as dropout or weight decay.

- Learn about related techniques: You might want to learn about other generative models, such as VAEs and other GAN variants like WGANs. Understanding these techniques will give you a broader perspective on generative modeling and help you identify the best approach for your problem.

Conclusion

This article demonstrated how to implement DCGAN using TensorFlow and Keras.

- We discussed the prerequisites for building a DCGAN with TensorFlow and Keras: CNNs, TensorFlow and Keras themselves, and GANs.

- We showed what we were going to build in this project.

- We explained how the GAN class works, what the generator and discriminator models are, and what role each one plays.

- We implemented the DCGAN using Keras to generate and save abstract images.