Predicting Sentiments with Keras

Overview

A sentiment can be considered an emotion, attitude, or perception about an entity or a situation. For example, sentiments are evident when we express our opinions about certain situations in our personal or professional life, gadgets, movies, restaurants, and so on. In the same way, we prefer to watch movies based on recommendations and high ratings.

In short, businesses can benefit from sentiment analysis, a type of Natural Language Processing (NLP) that extracts and analyzes review data to categorize them as positive, negative, or neutral. Personalized recommendations are possible using sentiment analysis resulting in a better customer experience.

What are we Building?

We will predict sentiments in Keras using the IMDB movie review dataset.

Description of Problem Statement

Suppose we have a dataset with several reviews about different movies or TV series. We want to mine information from this available text on what people think about these films or series and be able to predict using AI whether a particular user has liked or disliked a certain movie based on their review.

Pre-requisites

You'll need -

- Basic understanding of NLP concepts

- Familiarity with Keras syntax for building Deep Learning models

- Some familiarity with vanishing/exploding gradients and Recurrent Neural Networks (RNN).

How are we Going to Build this?

We will build a custom neural network in Keras using the LSTM (Long-Short-Term-Memory) model for an NLP sentiment analysis task. This model can analyze the provided text input and then predict the user sentiment based on the words in the text.

Final Output

Here is a sample text analyzed using the Keras sentiment analysis model.

Requirements

To build this Sentiment Analysis model, we'll need -

- Python Libraries - Pandas, NumPy to process the dataset, and Matplotlib for Data Visualization.

- To load the dataset and implement the neural network - Keras framework

Building a Sentiment Analysis Model in Keras

Let us begin building a sentiment model in Keras.

Description of Problem Statement

We have to build an NLP model in Keras that analyzes the sentiment in a given review (text) and predicts the sentiment (positive or negative) based on the words present in the text.

Dataset

We will use the IMDB movies dataset that contains 50,000 IMDB user reviews. These reviews are positive (denoted by 1) and negative sentiments (denoted by 0). It is a built-in dataset in Keras; hence, it is quite convenient to load the dataset using a single line of code.

Data Preprocessing

Let us explore the dataset to understand it better. We begin by importing all the necessary libraries along with the Keras framework using the following code -

Splitting the Dataset

Next, we will split the dataset into training and test datasets directly with the following code.

We can also specify a parameter num_words while performing the train-test split to specify the number of unique words to be used.

Let us check the shape of the train-test sets -

Output:

Our dataset is a 50:50 split with 25000 samples in each training and testing set. You can experiment with another ratio for splitting the dataset. For this, you'll need to merge the data after importing and then split it according to your choice. For this article, we'll continue with the existing 50:50 dataset split.

Exploring the IMDB Dataset

After splitting the dataset, let us begin exploring our dataset. First, we can check the average length of the review with the following code -

The average length of the review is ~239 words, with a standard deviation of 176.5.

Next, let us check the first review from the training set with its label.

The movie review appears to be a set of different integers separated by ,. The sentiment for this review is 1, which means it is a positive review by the user.

Here's what we need to know -

-

The numbers in the review are vector representations of word indexes as integers. These are represented in the NumPy format. Hence, you'll find that the words in a review will be indexed based on their overall frequency in the given dataset. This makes it easier to filter out the top most common words in the dataset for prediction tasks. For example, the integers '1', '2', and '3' denote the most frequently occurring words in the dataset. It is also important to note that '0' does not denote any specific word but is used for an unknown word in the text (pad token). Hence, we can specify how many words we want to consider for our model. More information on this can be found later in this article.

-

If the review contained text instead of integers, we would need to properly tokenize the data before feeding it to the neural network. In other words, Keras has already preprocessed the data for dataset users by tokenizing the reviews.

Tokenization In NLP tokenization converts words in a given text to an integer. Generally, smaller integers tend to correspond with more frequently used words. Let us confirm the number of unique words and the number of sentiment classes in the training dataset.

The total number of unique words in this dataset is 88,585, and there are two sentiment classes for the review (0 and 1).

Let us look at a few words and their indexes for this dataset. We will use Keras's get_word_index() function for this. We will print 20 entries in the dictionary with the code as shown below-

Padding/Truncation Now, we consider the requirement of padding or truncation for this sentiment analysis. The reviews in the dataset may be different in length. For this purpose, we use the parameter maxlen.

Here's what we need to know -

maxlen: This parameter indicates the maximum length of a given text i.e. movie review. To properly analyze the text, we need to specify a limit for the review length. This will ensure that any reviews longer than it will be shortened to the value of maxlen.

Before setting up the LSTM model in Keras, we must truncate the longer reviews' length. Similarly, we need to pad, i.e., stretch the text with dummy words to the shorter reviews to match the maxlen value. This way, all reviews can be the same size for our neural network. We will use the pad_sequences function from Keras to achieve this.

In the above code, the pad_sequences() second argument after the dataset is the maxlen. Here, we have specified maxlen=500. It tells Keras to resize all the reviews to a size of 500 words. So, what this pad sequences function does is that it transforms the provided text review samples into a 2D NumPy array. So, text reviews, i.e., sequences that are shorter than 500, will be padded with a value till they reach this maxlen, while for longer reviews, these would be truncated till they reach the maxlen.

We have our datasets ready. Now, let us build our Keras model for sentiment analysis.

Build the IMDB review sentiment model

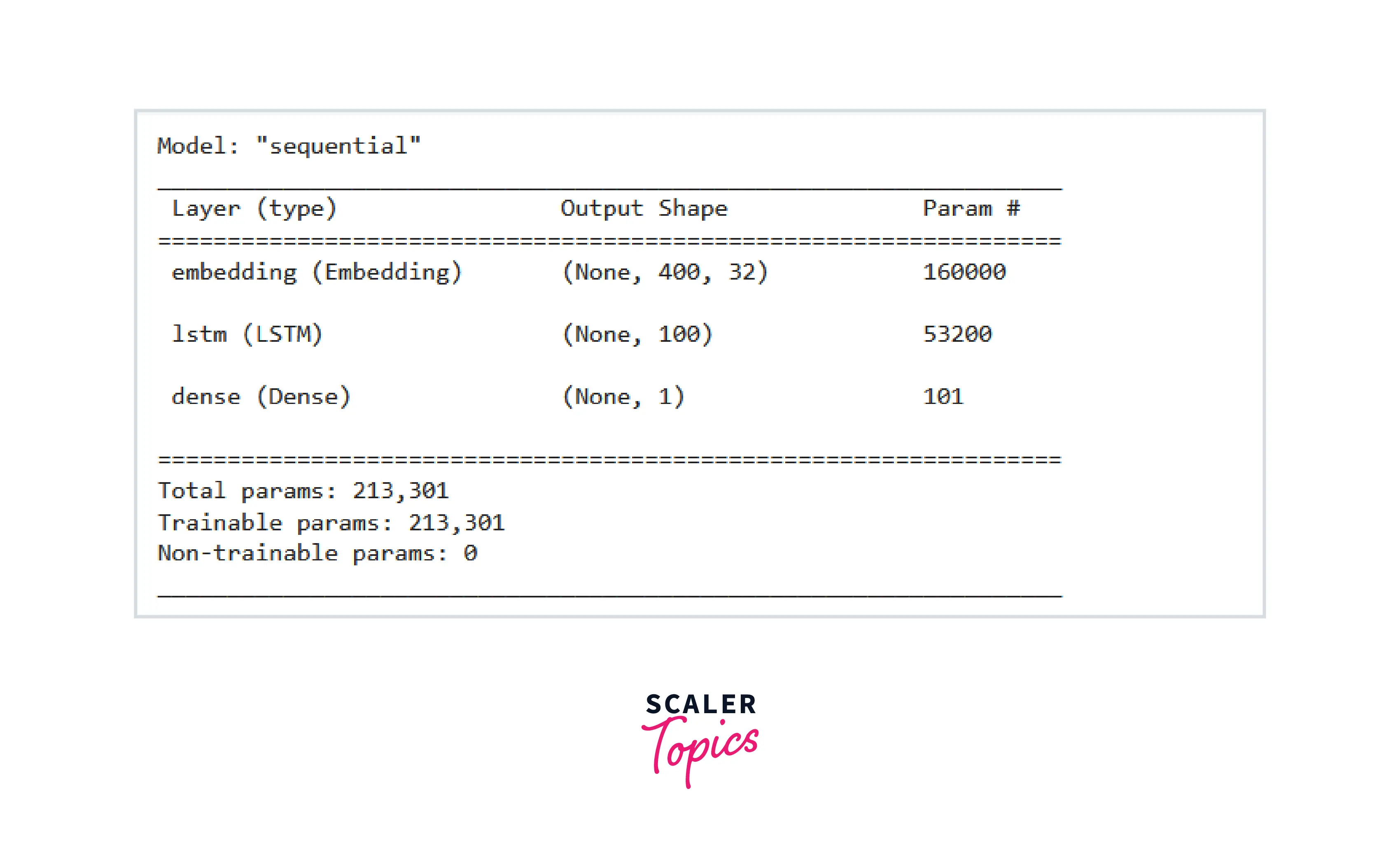

We can now proceed with the Neural network model development. We will build this model with an embedding layer, a simple LSTM layer, and a Dense layer (for a fully connected neural network). You can also use a bidirectional LSTM layer instead to build the model.

Neural networks such as RNN experience the problem of vanishing and exploding gradients. LSTM models are preferred to address this issue as they can handle sequential data and are better for maintaining long-range connections. In addition, these can recognize the relationship between values at the beginning and end of a sequence.

Hence, NLP tasks can benefit from the use of LSTM as it can handle input in the form of a sentence for prediction instead of one word. Using LSTM is, thus, more efficient and convenient in NLP tasks.

The embedding layer will convert each word in the input to a dense vector of a given size (embedded dimensions). We also need to specify the hyperparameters, such as batch size, epochs, LSTM units, etc., which can be tuned later to improve the model accuracy.

Generally, an LSTM model has multiple layers where each layer takes one input from the previous layer and advances the output to the next layer. However, it is important to note that the first layer accepts inputs as numerical sequences, whereas the final layer provides output as the prediction label.

Generally, an LSTM model has multiple layers where each layer takes one input from the previous layer and advances the output to the next layer. However, it is important to note that the first layer accepts inputs as numerical sequences, whereas the final layer provides output as the prediction label.

Here, we will define a sequential() model in Keras to embed the layers of LSTM. You can experiment with the number of layers in the model to improve the model or add dropout layers to avoid overfitting. However, to keep this tutorial simple, we have not added any Dropout layers to the model.

Here's what we need to know -

num_words: The number of unique words to be used in the analysis. By specifying the num_words equal to 5000, we restrict our analysis to 5000 most common words only. Since this is a randomly selected value, you can also try another value to evaluate how the model performs.

We will use the Dense function to ensure fully connected layers in our neural network with the default ReLU activation function. You can try another function for experimentation. You find more details on Dense layers here.

We will choose the sigmoid activation for the output layer as there are only two labels, negative and positive. So the sigmoid function can help us map the prediction between 0 and 1, which would be easier for our understanding.

We will use an adam optimizer with binary_crossentropy as we have only two labels, but you can try training the model with another optimizer of your choice.

Train and evaluate the model

We can train the model by specifying the training set, validation_data as X_test, and y_test to analyze our loss and accuracy for both the training and validation set on each epoch.

We will use model.fit() to train our model with 10 epochs.

Since we have already used (X_test, y_test) for validation_data in the previous code, we got a validation accuracy with the training accuracy by training a simple LSTM network. The model accuracy could further be improved by options such as -

- Using more words in the dictionary

- Using back-to-back LSTM layers

The model can be separately evaluated (if no validation data is specified while training the model) using the following code -

The training and validation accuracies can be different in your case based on the hyperparameters chosen at the training time. As the training accuracy of 96% and a validation accuracy of 86% are acceptable for tutorial purposes, we can save the trained model -

Testing

Let us load our saved model first -

We must define a function user_input to make predictions using this trained model.

This function will adjust the length of the review, convert the words to index and then make predictions about the sentiment in the review.

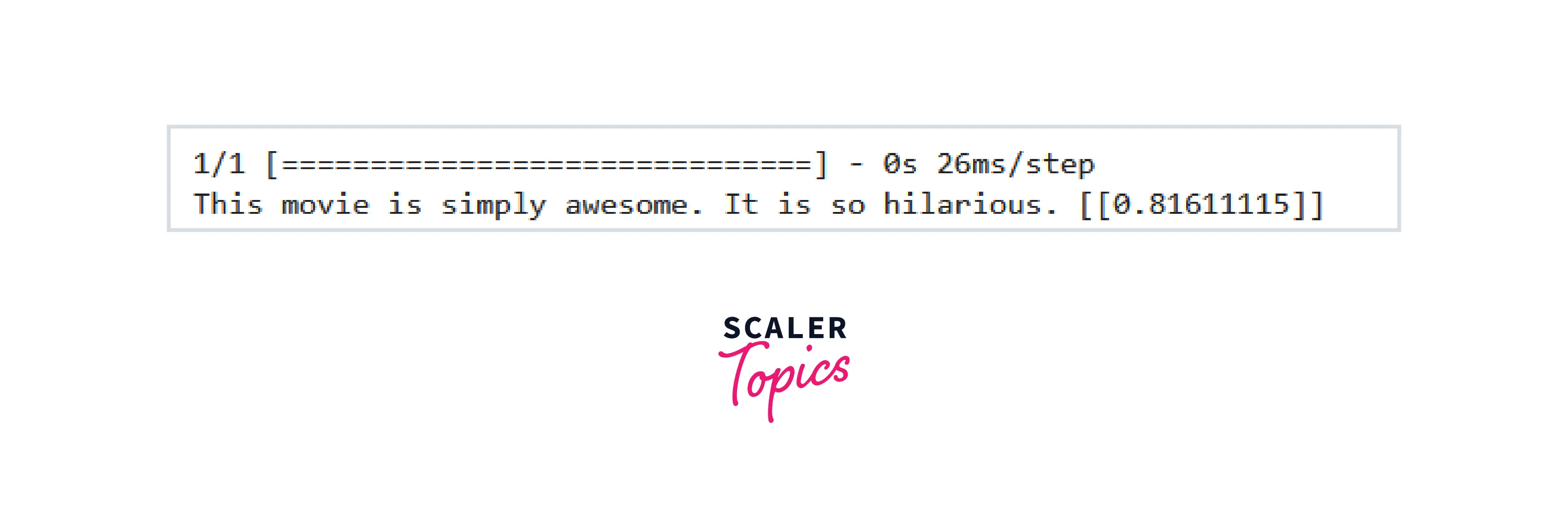

Next, we pass a sample line of text as user input for the review prediction function (These are user inputs and can be considered unseen text).

A positive sentiment is involved as the user likes the television drama mentioned in the review. Let us check if our model predicts the same sentiment for the review -

We get a score of 0.816, closer to 1, i.e., positive sentiment. Hence, our sentiment analysis model accurately predicted a positive sentiment.

We can try another sample text -

The prediction for this user input was 0.15, which is closer to 0. Hence, our model identified this as a negative sentiment well.

That's it for this article. You can further experiment with different values of num_words and epochs to re-train the model and improve its performance.

Conclusion

In this tutorial for the Predicting Sentiments with Keras, we learned-

- the concepts of text tokenization and embedding

- importing a Keras built-in dataset imdb for movie reviews

- How to predict sentiment (positive or negative) in movie review text using a trained LSTM model in Keras.