Building a Text Entailment Model Using Keras

Overview

Text entailment is a fundamental task in natural language processing that determines the relationship between two pieces of text, such as a premise and a hypothesis. Many NLP applications rely on it, including question answering, customer review classification, news article categorization, and machine translation.

The purpose of text entailment is to assess whether or not the hypothesis follows logically from the premise. To do so, we must represent the meaning of the text and the relationship between the premise and the hypothesis.

What are we Building?

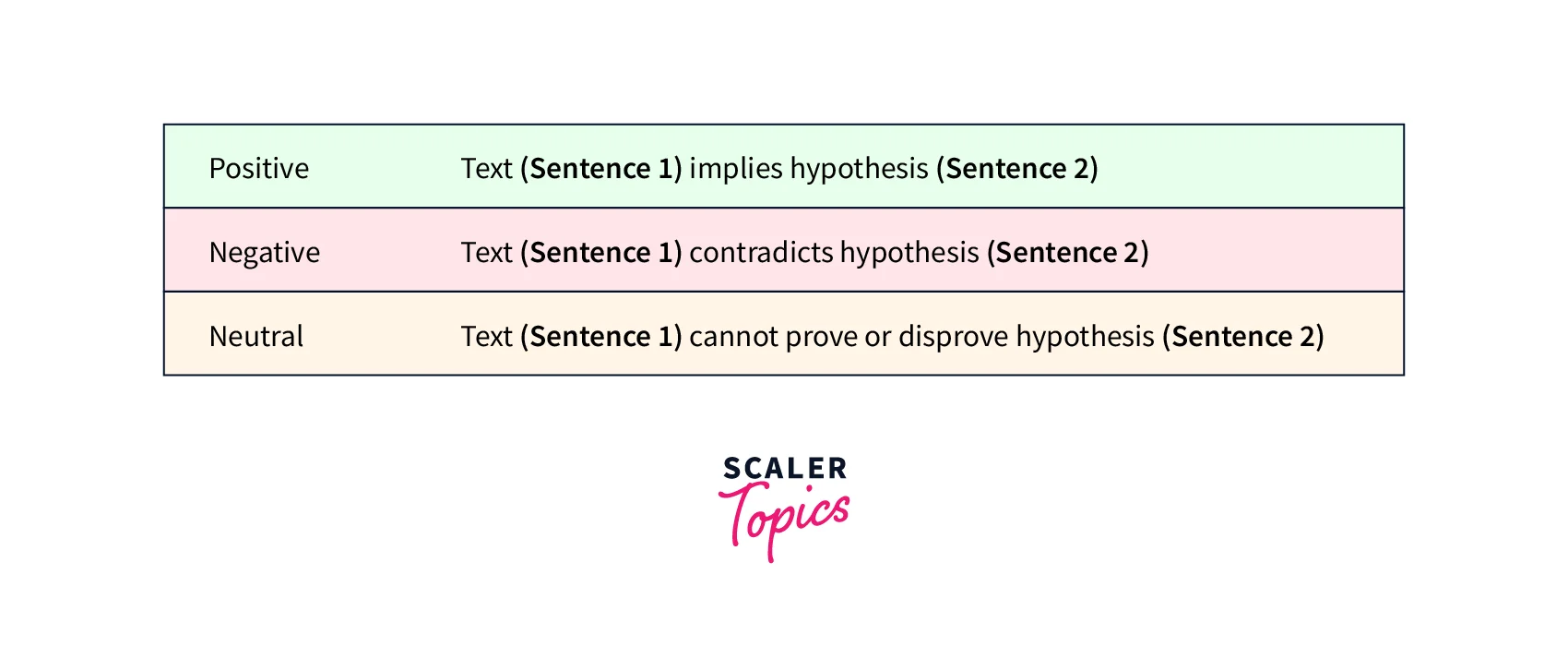

Textual entailment is a straightforward logic exercise that seeks to determine whether one statement can be inferred from another. A textual entailment algorithm classifies an ordered pair of phrases into one of three groups. The first, known as "positive entailment," occurs when the first phrase can be used to establish that the second phrase is true. "Negative entailment" is the converse: it occurs when the first phrase can be used to refute the second. Finally, if the first phrase neither confirms nor refutes the second, the pair is said to have "neutral entailment."

Textual entailment can also serve as a component of much bigger projects. For instance, question-answering systems may use textual entailment to validate an answer against stored information. Textual entailment can also improve document summarization by removing sentences that do not contain relevant information. Several other natural language processing (NLP) methods apply entailment in a similar way.

This article uses Keras to build a straightforward, quick-to-train neural network for text entailment.

Prerequisites

To build a text entailment model using Keras, the following prerequisites will be helpful:

- Understanding of the Python programming language.

- Familiarity with libraries such as Pandas, NumPy, Seaborn, Matplotlib, and Keras.

- Familiarity with deep learning algorithms such as Multilayer Perceptron (MLP) models, Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) models, and Bidirectional Long Short-Term Memory (Bi-LSTM) models.

- Basic acquaintance with concepts such as data cleaning, data processing, model training, model evaluation, model testing, and feature detection.

How are we Going to Build this?

Let us walk through the steps of building the project:

- Libraries and Data: Import the necessary libraries and the dataset.

- Pre-process the Data: Clean up the data, which includes removing stop words, expanding contractions (for example, turning "don't" into "do not"), and so on.

- Vectorization: Convert words into vectors that capture their semantic meaning and can be used to represent the meaning of a sentence.

- Defining Model Architecture: Define the architecture of the model. A recurrent neural network (RNN) or a convolutional neural network (CNN) can be used for text entailment; in this example, we use ELMo embeddings with Bi-LSTM layers.

- Callbacks and Loss Function: Set up callbacks that monitor and adjust training (for example, stopping early or saving the best model) and choose the loss function the model minimizes.

- Compiling the Model: Compile the model by specifying the optimizer, the loss function, and the metrics to track during training.

- Training the Model: Train the model on the pre-processed data. During training, the model learns to predict whether one text entails, contradicts, or is unrelated to another.

- Evaluating the Model: Evaluate the model on a held-out test set to assess its performance. This shows whether the model has overfitted the training data or generalizes well to new data.

- Using the Model: Once the model is trained, use it to make predictions on new data by passing sentence pairs through it. The output is a probability for each of the three classes (positive, negative, and neutral entailment).

Final Output

Statistical Output:

Given below are the scores of the model on the dataset.

- The training accuracy is 78.60%, while the validation accuracy is 75.98%.

- On the test set, the accuracy is 71.58%.

Analytical Output:

Once we've trained our model, we can predict whether one statement can be inferred from another. The Testing section later in this article shows this in action.

Requirements

In addition to installing Keras version 2.11.x, ensure that you have installed the following:

- Jupyter

- Pandas: To import and read our data

- Matplotlib: For plotting graphs

- NumPy: To perform numerical calculations

- TensorFlow: To import the Keras library and build our model architecture

- TensorFlow Hub: To load the pre-trained ELMo embeddings

- NLTK: To pre-process the text

- TQDM (optional): Not required, but it gives you a better sense of progress during network training.

The code in this post can be run in an online environment such as Google Colab or locally in Jupyter Notebook. We'll use Stanford's SNLI dataset for training; a Python script downloads and extracts the required data, so you don't have to download it manually.

Building a Text Entailment Model Using Keras

a. Problem Formulation

Here, the goal is to build a model that predicts the relationship between two pieces of text: whether one text logically entails the other, contradicts it, or neither.

b. Overview of the Components Involved

Dataset:

We will use the Stanford Natural Language Inference (SNLI) corpus, a collection of 570,000+ human-written English sentence pairs manually labeled for balanced classification with the labels entailment (positive entailment), contradiction (negative entailment), and neutral. It supports the task of natural language inference (NLI), also known as recognizing textual entailment (RTE).

The model's input is pairs of phrases, and its output is one of 'Positive entailment,' 'Negative entailment,' or 'Neutral'.
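Because the model outputs one of three classes, the text labels have to be turned into numbers before training. A minimal sketch, assuming the SNLI label strings "entailment", "contradiction" and "neutral" (positive, negative and neutral entailment in this article) and a fixed class order chosen here for illustration; `one_hot_labels` is a hypothetical helper, not part of any library:

```python
import numpy as np

# Assumed class order; it must stay the same for training and prediction.
LABELS = ["entailment", "contradiction", "neutral"]
LABEL_TO_INDEX = {label: i for i, label in enumerate(LABELS)}


def one_hot_labels(labels):
    """Map label strings to one-hot rows, e.g. "neutral" -> [0, 0, 1]."""
    indices = np.array([LABEL_TO_INDEX[label] for label in labels])
    return np.eye(len(LABELS), dtype="float32")[indices]
```

For example, `one_hot_labels(["neutral", "entailment"])` yields the rows `[0, 0, 1]` and `[1, 0, 0]`, which match a three-unit softmax output layer.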

Positive, Negative, Neutral:

In this part, we'll look at several textual entailment examples to clarify what we mean by positive, negative, and neutral entailment. To begin, consider positive entailment: when you read "The assassination of Kennedy by Oswald," you can infer that "Oswald killed Kennedy." In this pair, the second phrase (known as the "hypothesis") can be inferred from the first phrase (known as the "premise"), so this is a positive entailment. Note that "assassination" and "killing" have similar meanings but are not the same word. Indeed, entailment does not require the sentences to share terms: apart from "Oswald" and "Kennedy," this pair has no words in common.

Consider another pair of phrases. Does the phrase "The National Institute for Psychobiology in Israel was founded in May 1971 as the Israel Center for Psychobiology by Prof. Joel." imply "Israel was founded in May 1971."? If the National Institute for Psychobiology in Israel was founded in May 1971, Israel must have been founded before May 1971. The second phrase contradicts that conclusion, so this is a negative entailment.

Finally, to demonstrate neutral entailment, consider whether the phrase "I played soccer with the kids" entails "The kids enjoy ice cream." Playing soccer and enjoying ice cream are unrelated: I could play soccer with ice cream lovers or with ice cream haters, and both are equally plausible. The first phrase therefore says nothing about whether the second is true or false, which indicates neutral entailment.

c. Implementation and Training

Libraries and Data:

We'll begin by performing the necessary imports and instructing our Google Colab notebook to render graphs and images within the notebook itself.

We'll load our data now that we've completed all necessary imports.
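The article downloads SNLI with a Python script but does not show it. The following is a minimal sketch using only the standard library, assuming the official archive URL `https://nlp.stanford.edu/projects/snli/snli_1.0.zip` and its JSON Lines files (`snli_1.0/snli_1.0_{train,dev,test}.jsonl`), where each record carries `sentence1` (premise), `sentence2` (hypothesis) and `gold_label`. Pairs whose `gold_label` is `"-"` have no annotator consensus and are skipped. The helper names are hypothetical.

```python
import io
import json
import urllib.request
import zipfile

SNLI_URL = "https://nlp.stanford.edu/projects/snli/snli_1.0.zip"
VALID_LABELS = {"entailment", "contradiction", "neutral"}


def parse_snli_lines(lines):
    """Turn SNLI JSON Lines into (premise, hypothesis, label) tuples."""
    examples = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        label = record["gold_label"]
        # "-" means the annotators did not agree; such pairs are dropped.
        if label in VALID_LABELS:
            examples.append((record["sentence1"], record["sentence2"], label))
    return examples


def download_snli(split="train", url=SNLI_URL):
    """Download the SNLI archive (tens of MB) and parse one split."""
    with urllib.request.urlopen(url) as response:
        archive = zipfile.ZipFile(io.BytesIO(response.read()))
    member = f"snli_1.0/snli_1.0_{split}.jsonl"
    with archive.open(member) as fh:
        return parse_snli_lines(io.TextIOWrapper(fh, encoding="utf-8"))
```

The resulting list of tuples can be passed to `pandas.DataFrame(examples, columns=["premise", "hypothesis", "label"])` if you prefer working with Pandas.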


Pre-process The Data:

Pre-processing the data is the first stage in creating a text entailment model. This process includes cleaning the data, tokenizing it, and transforming it into numerical representations. We will use the Natural Language Toolkit (NLTK) package to carry out these actions. We'll start by cleaning up our data, which includes removing stop words, expanding contractions (for example, turning "don't" into "do not"), and so on.
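The cleaning steps can be sketched in plain Python. This is not the article's NLTK code: the contraction map and the stop-word set below are small hypothetical lists chosen for illustration, and a real pipeline would use fuller ones (for example NLTK's `stopwords.words("english")` after `nltk.download("stopwords")`).

```python
import re

# Hypothetical contraction map; a real pipeline needs a much fuller list.
CONTRACTIONS = {
    "can't": "cannot",
    "don't": "do not",
    "won't": "will not",
    "it's": "it is",
    "i'm": "i am",
    "they're": "they are",
}

# Hypothetical stop-word subset. Negations such as "not" are kept on purpose.
STOP_WORDS = {"a", "an", "the", "is", "are", "was", "of", "to", "in", "and", "with"}

# One regex that matches any known contraction as a whole word.
_CONTRACTION_RE = re.compile(
    r"\b(" + "|".join(re.escape(c) for c in CONTRACTIONS) + r")\b"
)


def expand_contractions(text):
    """Replace each known contraction with its expanded form."""
    return _CONTRACTION_RE.sub(lambda m: CONTRACTIONS[m.group(0)], text)


def clean_text(text):
    """Lowercase, expand contractions, tokenize, and drop stop words."""
    text = expand_contractions(text.lower())
    tokens = re.findall(r"[a-z0-9]+", text)
    return [token for token in tokens if token not in STOP_WORDS]
```

A design note: NLTK's English stop-word list includes negations such as "not" and "no", which can turn an entailment into a contradiction, so you may want to remove them from the list before filtering.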


Vectorization:

Unfortunately, neural networks only work with numerical values, so we must represent our words as numbers. These numbers should mean something: we could, for instance, use the character codes of the letters in a word, but those tell us very little about the word's meaning. Word vectorization is the process of converting words into numerical vectors that capture their meaning in a form a neural network can use.

Word vectors are commonly created by having each word represent a single point in a high-dimensional space. Words with similar meanings should lie close to each other in this space.
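"Close together" is usually measured with cosine similarity. The sketch below uses three hand-picked, hypothetical 3-dimensional vectors (real embeddings are far larger) to show that words with related meanings score higher than unrelated ones:

```python
import numpy as np

# Hand-picked toy vectors, for illustration only.
# Real ELMo vectors have 1024 dimensions and are learned, not chosen.
toy_vectors = {
    "assassination": np.array([0.90, 0.80, 0.10]),
    "killing": np.array([0.85, 0.75, 0.20]),
    "ice_cream": np.array([0.10, 0.20, 0.95]),
}


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))
```

With these toy values, "assassination" and "killing" have a similarity close to 1, while "assassination" and "ice_cream" score much lower, mirroring the Kennedy and ice cream examples earlier in the article.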

For our needs, we won't have to build a new encoding of words as vectors. Several excellent general-purpose word vector encodings already exist, along with methods to learn even more sophisticated representations if the general-purpose ones are insufficient.

This article uses ELMo embeddings. AllenNLP's ELMo embeddings are among several excellent pre-trained models available on TensorFlow Hub. ELMo embeddings provide contextual representations of the input text and are learned from the internal states of a bidirectional LSTM. They have been shown to outperform GloVe and Word2Vec embeddings on a diverse range of NLP tasks.
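A sketch of how the ELMo vectors might be obtained, assuming TensorFlow 2 with the `tensorflow_hub` package and network access. The module URL `https://tfhub.dev/google/elmo/3`, its `"default"` signature (which takes untokenized sentences and splits them on spaces) and the `"elmo"` output key (one 1024-dimensional vector per token) are assumptions based on the TF Hub model page; check them against your TensorFlow Hub version. The imports sit inside the functions so the heavy dependencies load only when ELMo is actually used.

```python
import functools

# Assumed location of the pre-trained ELMo v3 module on TensorFlow Hub.
ELMO_URL = "https://tfhub.dev/google/elmo/3"


@functools.lru_cache(maxsize=1)
def load_elmo(url=ELMO_URL):
    """Download (once) and cache the ELMo module."""
    import tensorflow_hub as hub

    return hub.load(url)


def elmo_token_embeddings(sentences, url=ELMO_URL):
    """Return contextual ELMo vectors of shape (batch, max_tokens, 1024)."""
    import tensorflow as tf

    elmo = load_elmo(url)
    outputs = elmo.signatures["default"](tf.constant(sentences))
    return outputs["elmo"]
```

Usage would look like `elmo_token_embeddings(["Oswald killed Kennedy", "The kids enjoy ice cream"])`. Because ELMo is expensive to run, it is often faster to compute these vectors once and store them rather than recomputing them every epoch.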


Defining Model Architecture:

Now that we have our data, we can begin working on our model. We used a combination of LSTM cells and Bi-LSTM cells to develop our model. Before we go any further, let's define what we mean by LSTM and Bi-LSTM.

LSTM:

Long Short-Term Memory networks (LSTMs) are a sophisticated kind of RNN, or sequential network, that can store information over time. Standard RNNs suffer from the vanishing gradient problem, which makes them unable to remember long-term dependencies. LSTMs are explicitly designed to mitigate this problem and avoid long-term dependency issues.

Bi-LSTM:

Bidirectional recurrent neural networks (RNNs) are simply two independent RNNs combined. This gives the architecture both backward and forward information about the sequence at every time step. With a bidirectional layer, your inputs are processed in two directions: one from the past to the future and the other from the future to the past. This differs from a unidirectional LSTM because the LSTM that runs backward preserves information from the future. By combining the two hidden states, you retain information from both the past and the future at any time step.

Now that you understand what LSTM and Bi-LSTM represent, let us design our model.
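The article's own model code is not shown, so the following is one plausible arrangement rather than the author's exact architecture. It assumes each premise and hypothesis has already been converted into ELMo token vectors (1024 values per token) and padded to a fixed length. A stacked Bi-LSTM encoder is shared by both sentences, the two sentence vectors are concatenated, and a softmax layer predicts the three classes. `max_tokens`, `lstm_units`, the 128-unit dense layer and the dropout rate are hypothetical defaults.

```python
from tensorflow.keras import Model, layers

NUM_CLASSES = 3  # positive entailment, negative entailment, neutral


def build_entailment_model(max_tokens=40, embed_dim=1024, lstm_units=64, dropout=0.3):
    """Two-input classifier over precomputed (padded) ELMo token vectors."""
    premise = layers.Input(shape=(max_tokens, embed_dim), name="premise")
    hypothesis = layers.Input(shape=(max_tokens, embed_dim), name="hypothesis")

    # Stacked Bi-LSTM encoder. The same layer objects are applied to both
    # sentences, so premise and hypothesis share weights.
    bilstm_seq = layers.Bidirectional(layers.LSTM(lstm_units, return_sequences=True))
    bilstm_vec = layers.Bidirectional(layers.LSTM(lstm_units))

    premise_vec = bilstm_vec(bilstm_seq(premise))
    hypothesis_vec = bilstm_vec(bilstm_seq(hypothesis))

    # Join the two sentence vectors and classify the pair.
    merged = layers.concatenate([premise_vec, hypothesis_vec])
    x = layers.Dense(128, activation="relu")(merged)
    x = layers.Dropout(dropout)(x)
    outputs = layers.Dense(NUM_CLASSES, activation="softmax")(x)
    return Model(inputs=[premise, hypothesis], outputs=outputs)
```

Sharing the encoder keeps the parameter count down and maps both sentences into the same representation space; `model.summary()` prints the resulting layer shapes.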


Callbacks and loss function:

Now that we've defined our model, let's construct the callbacks and the loss function that will be used to train it.

Callbacks:
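The article does not list its callbacks. The set below is a common choice and an assumption: `EarlyStopping` halts training when validation loss stops improving, `ModelCheckpoint` saves the best model seen so far, and `ReduceLROnPlateau` lowers the learning rate when progress stalls. The patience values and the checkpoint file name are hypothetical.

```python
from tensorflow.keras.callbacks import EarlyStopping, ModelCheckpoint, ReduceLROnPlateau


def make_callbacks(checkpoint_path="best_entailment_model.keras"):
    """Build a list of training callbacks that all watch validation loss."""
    return [
        # Stop after 3 epochs without improvement and keep the best weights.
        EarlyStopping(monitor="val_loss", patience=3, restore_best_weights=True),
        # Save the model only when validation loss improves.
        ModelCheckpoint(checkpoint_path, monitor="val_loss", save_best_only=True),
        # Halve the learning rate after 2 stagnant epochs.
        ReduceLROnPlateau(monitor="val_loss", factor=0.5, patience=2, min_lr=1e-5),
    ]
```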

Loss Function:
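For a three-way softmax output with one-hot labels, the usual loss is categorical cross-entropy. That this is the article's exact choice is an assumption. The NumPy version below shows what the loss computes; in Keras the same loss is available as the string `"categorical_crossentropy"` or `tf.keras.losses.CategoricalCrossentropy()`, and `"sparse_categorical_crossentropy"` is the counterpart for integer labels.

```python
import numpy as np


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean categorical cross-entropy over a batch.

    y_true: one-hot labels, shape (batch, 3)
    y_pred: softmax probabilities, shape (batch, 3)
    """
    y_true = np.asarray(y_true, dtype=float)
    # Clip to avoid log(0) for overconfident predictions.
    y_pred = np.clip(np.asarray(y_pred, dtype=float), eps, 1.0 - eps)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))
```

For a single example labeled as positive entailment and predicted with probabilities `[0.7, 0.2, 0.1]`, the loss is -ln(0.7), about 0.357. It falls toward 0 as the probability of the correct class approaches 1.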

Compiling our Model:
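Compiling attaches the optimizer, loss and metrics to the model. A minimal sketch, assuming the Adam optimizer, categorical cross-entropy and accuracy; the article does not name its optimizer or learning rate, so both are assumptions, and `compile_model` is a hypothetical helper.

```python
import tensorflow as tf


def compile_model(model, learning_rate=1e-3):
    """Compile a three-class entailment model for training."""
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
        loss="categorical_crossentropy",  # expects one-hot labels
        metrics=["accuracy"],
    )
    return model
```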


Training our Model:

Now that we have our model, let us train it for a maximum of 10 epochs with a batch size of 128.
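The training step is essentially one `model.fit` call. The sketch below wraps it in a hypothetical helper with the article's settings (10 epochs, batch size 128) as defaults, assuming the inputs are `(premise, hypothesis)` arrays of ELMo vectors and the labels are one-hot. If an early-stopping callback is passed, training may end before the tenth epoch.

```python
def train_model(model, train_inputs, train_labels, val_inputs, val_labels,
                epochs=10, batch_size=128, callbacks=None):
    """Fit a compiled two-input model and return its History object.

    train_inputs / val_inputs: (premise_array, hypothesis_array) pairs.
    """
    return model.fit(
        list(train_inputs),
        train_labels,
        validation_data=(list(val_inputs), val_labels),
        epochs=epochs,
        batch_size=batch_size,
        callbacks=callbacks,
        verbose=2,  # one line of loss/accuracy per epoch
    )
```

The returned `history.history` dictionary holds per-epoch `loss`, `accuracy`, `val_loss` and `val_accuracy`, which can be plotted with Matplotlib to check for overfitting.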


d. Expected Outputs

Accuracy should be close to the figures reported under Final Output above. It may be increased by carefully tuning hyperparameters and expanding the data to cover the whole training set, although this usually comes with a longer training time.

e. Inference

- The model took 3.63 hours to train on an NVIDIA P100 GPU.

- The training accuracy is 78.60%, while the validation accuracy is 75.98%.

- On the test set, the accuracy is 71.58%.

Testing

Let us now put the model to the test using the example from earlier in the article.
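The model returns three probabilities per sentence pair, so a small decoding step turns them into a readable label. A minimal sketch: `decode_predictions` is a hypothetical helper, and the label order is an assumption that must match the order used when the training labels were encoded. With a trained model, the probabilities would come from something like `model.predict([premise_vectors, hypothesis_vectors])`.

```python
import numpy as np

# Must match the class order used during training (assumed here).
LABELS = ["entailment", "contradiction", "neutral"]


def decode_predictions(probabilities):
    """Return (label, confidence) for each row of softmax probabilities."""
    probabilities = np.asarray(probabilities)
    best = probabilities.argmax(axis=-1)
    return [(LABELS[i], float(row[i])) for i, row in zip(best, probabilities)]
```

For example, a row of `[0.1, 0.7, 0.2]` decodes to `("contradiction", 0.7)`, which is negative entailment in this article's terms.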


What’s Next?

The results can be considerably improved with more complex model architectures and longer training times, but this experiment offers an easier-to-understand approach. To achieve more accurate results, consider the following:

- Adding extra Bidirectional Long Short-Term Memory (Bi-LSTM) layers.

- Employing different RNN layers, such as Gated Recurrent Units (GRUs), which Keras also supports.

- Adding more hidden units, while increasing regularization and dropout to compensate for the larger number of parameters in the network.

- Experimenting with other types of deep neural networks.

The SNLI corpus has been the subject of several significant research works. Now that you are familiar with it, reviewing some of them is a good way to go deeper into this area of research.

Conclusion

- Establishing the relationship between two phrases, such as premise and hypothesis, is known as text entailment.

- We obtained a test accuracy of 71.58% using ELMo embeddings followed by stacked bidirectional LSTM layers. The results can certainly be improved with more intricate model structures and longer training, but this provides an excellent starting point and an easier-to-understand model.