GKE Cluster

Overview

Google Kubernetes Engine (GKE) simplifies containerized app management. This managed Kubernetes service automates deployment, scaling, and orchestration, enabling developers to focus on coding while Google handles infrastructure intricacies. Features include autoscaling, load balancing, seamless Google Cloud integration, security tools, and easy upgrades. GKE streamlines app development and boosts efficiency. In this blog, we are going to learn about GKE clusters.

Getting Started with GKE

Jumping into Google Kubernetes Engine (GKE) is like stepping into a world where your apps can be super organized and work really well. GKE helps you put your apps into containers, making them easier to manage and run smoothly.

First, you make something called 'clusters' where your apps live. GKE takes care of making sure these clusters are safe and can handle lots of people using your apps. You can choose between two modes: one where things are done automatically (Autopilot) or another where you can customize things more (Standard).

It works really well with other Google Cloud stuff, like storage and databases. And if you're worried about how secure your apps are, GKE has your back. It helps you control who can do what with your apps and keeps them safe.

But how do you know if your clusters are doing everything the right way? That's where GKE Policy Automation comes in. It's like a helper that checks your clusters and makes sure they're following the best rules.

So, if you're ready to make your apps awesome, 'Getting Started with GKE' is your guide. It shows you how to start, what to do, and how to make sure your apps and clusters are running happily ever after.

Cluster Management and Monitoring

Cluster management and monitoring are vital for Google Kubernetes Engine (GKE) success. Effective management involves infrastructure setup, scaling, and updates using approaches like Infrastructure as Code (IaC) and GitOps. Monitoring provides real-time visibility into cluster health and performance using Google Cloud Monitoring and Cloud Logging, along with open-source metrics and dashboard tools such as Prometheus and Grafana. Implementing role-based access control (RBAC), securing secrets, and configuring network policies safeguard applications. Regularly updating nodes, optimizing resource usage, and adhering to best practices enhance GKE's reliability. Overall, seamless management and vigilant monitoring are essential for maintaining a well-functioning, secure, and highly available GKE environment.

Node Management and Auto-Scaling

Node management and auto-scaling are crucial aspects of managing a Kubernetes cluster, particularly when using Google Kubernetes Engine (GKE). GKE is a managed Kubernetes service provided by Google Cloud Platform (GCP) that simplifies the process of deploying, managing, and scaling containerized applications using Kubernetes.

- Node Pools: In GKE, a cluster is composed of one or more node pools. A node pool is a group of nodes that have the same configuration, such as machine type, operating system, and other instance attributes. You can create multiple node pools within a GKE cluster to accommodate different types of workloads with varying resource requirements.

- Auto-Scaling Node Pools: GKE provides built-in auto-scaling functionality for node pools. This means that based on the demand of your applications, GKE can automatically adjust the number of nodes in a node pool. When the cluster's workloads increase, GKE can add more nodes to the pool to handle the load, and when the load decreases, it can scale down the number of nodes to save resources and costs.

- Horizontal Pod Autoscaling (HPA): While node auto-scaling handles the cluster's capacity, Kubernetes itself provides Horizontal Pod Autoscaling (HPA) to adjust the number of pod replicas within a deployment or replica set. HPA ensures that the appropriate number of pods are running to handle varying levels of traffic. GKE integrates with Kubernetes' HPA functionality seamlessly.

- Cluster Autoscaler: GKE integrates with the Kubernetes Cluster Autoscaler to manage the size of the cluster itself. The Cluster Autoscaler watches for pending pods that cannot be scheduled due to resource constraints and automatically increases the number of nodes if necessary. It also scales down the cluster when nodes are underutilized.

- Vertical Pod Autoscaling (VPA): GKE also supports Vertical Pod Autoscaling, a feature that adjusts the resource requests and limits of containers based on their actual usage. This can help optimize resource utilization within pods.

Overall, GKE's node management and auto-scaling features help you ensure optimal resource utilization, high availability, and cost efficiency for your Kubernetes applications without needing to manage the underlying infrastructure manually. It's important to understand these features and configure them properly to meet the requirements of your workloads.
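To make the Horizontal Pod Autoscaling item above more concrete, here is a minimal Python sketch of the replica calculation described in the Kubernetes documentation: the desired count is the current count scaled by the ratio of the observed metric to the target, rounded up, and left unchanged when that ratio is within a tolerance (0.1 by default). The function names and sample numbers are illustrative assumptions, and the real controller also accounts for pod readiness, missing metrics, and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Replica count suggested by the documented HPA formula."""
    ratio = current_metric / target_metric
    # When the ratio is close enough to 1.0, no scaling happens.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


def clamp_replicas(replicas: int, min_replicas: int, max_replicas: int) -> int:
    """Keep the result inside the minReplicas/maxReplicas bounds of the HPA."""
    return max(min_replicas, min(max_replicas, replicas))


# Hypothetical workload: 4 pods averaging 90% CPU against a 60% target.
wanted = desired_replicas(current_replicas=4, current_metric=90, target_metric=60)
print(clamp_replicas(wanted, min_replicas=2, max_replicas=10))  # 6
```

If the six pods cannot be scheduled on the existing nodes, they stay pending, which is the signal the Cluster Autoscaler reacts to by adding nodes.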

Application Deployment on GKE

First, you'll learn about 'pods,' which are like mini-houses for your apps. GKE helps you manage these pods so they work nicely together. You'll also discover how different parts of your app can talk to each other using 'services.' GKE's load balancing spreads incoming traffic across your pods so everyone can use your app without any problems.

When you want to update your app, GKE lets you roll out the new version gradually, and your app's secrets, like passwords, stay safe in GKE.

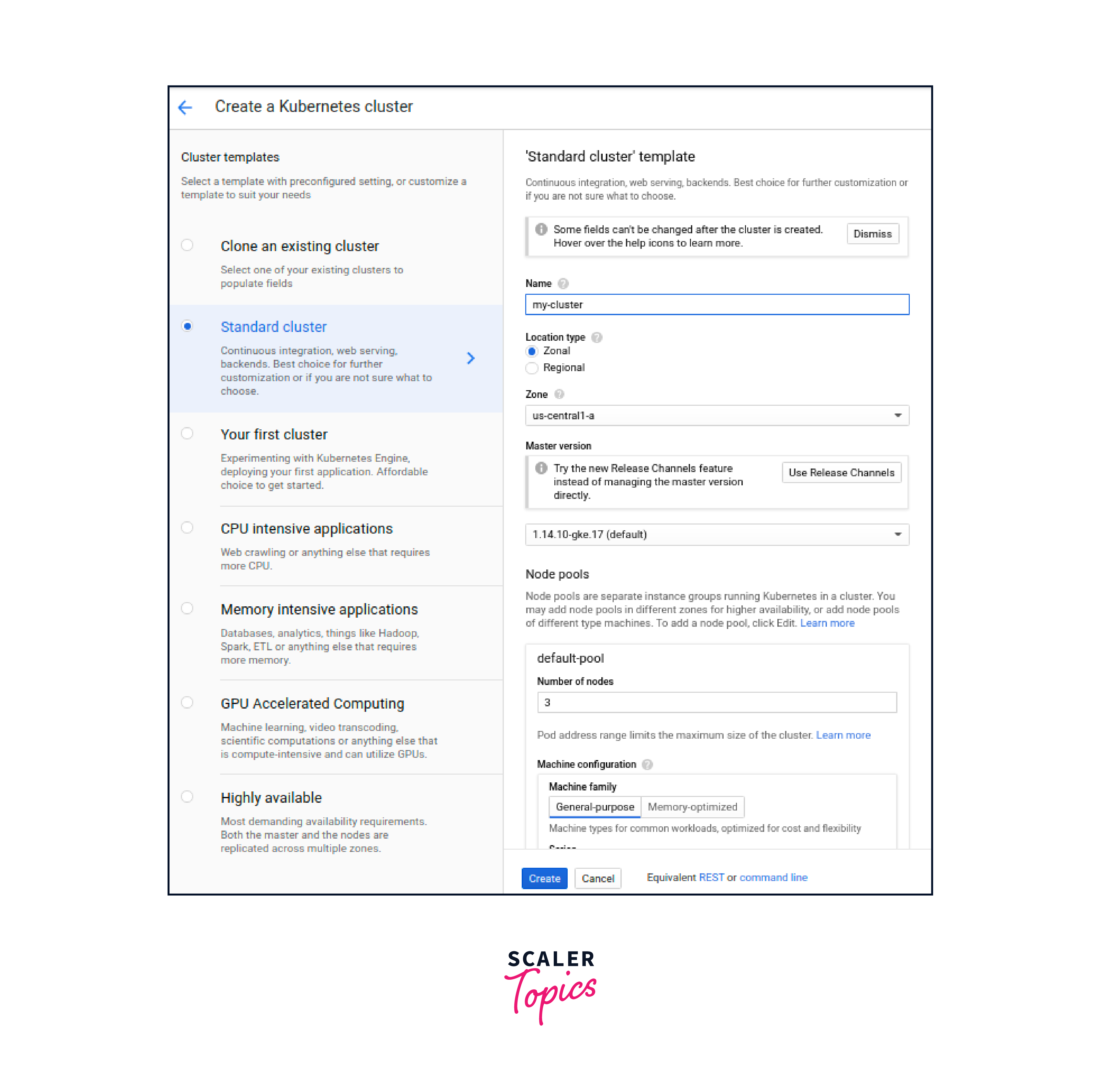

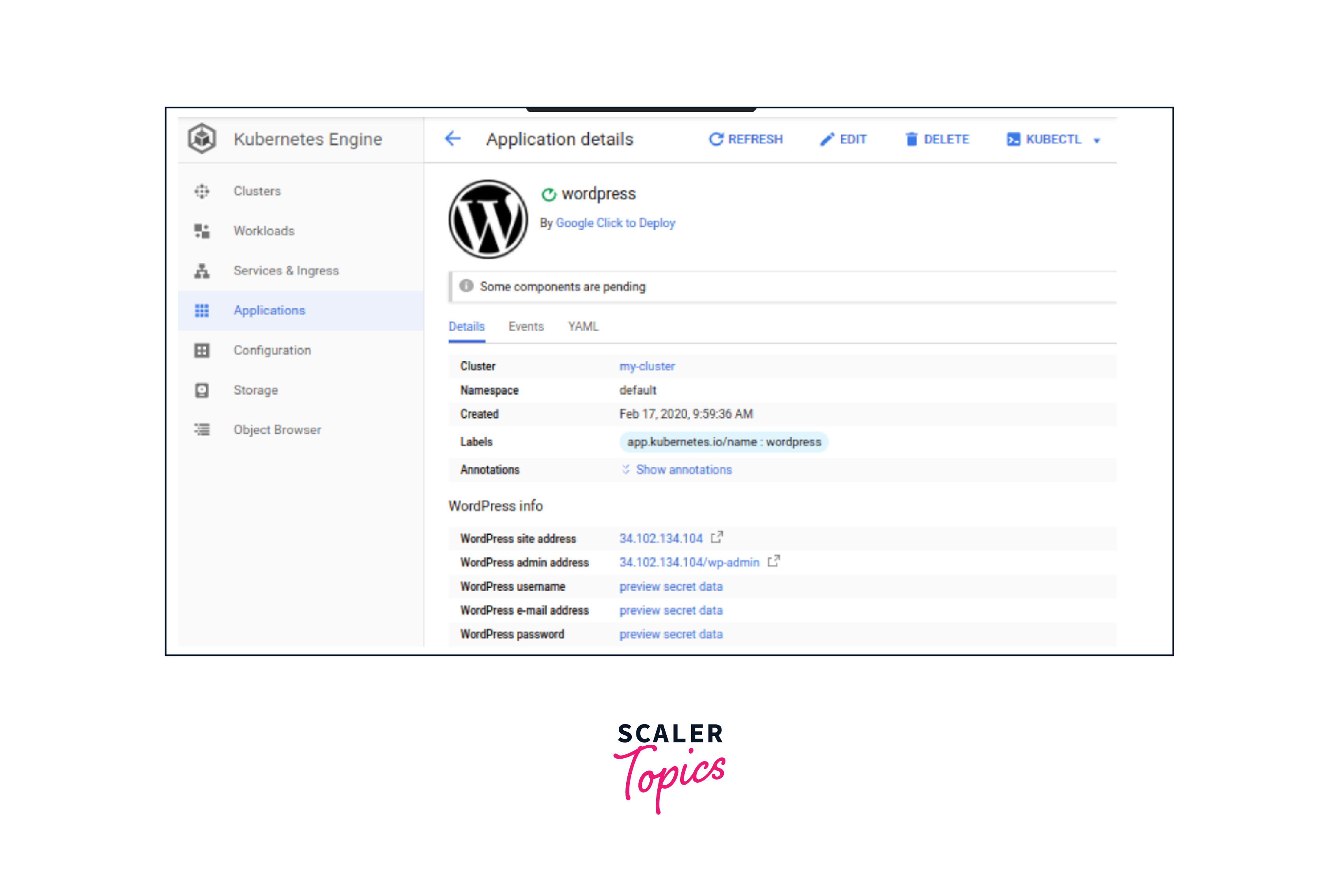

Step-1: To set up a GKE cluster, navigate as follows on the GCP Console: Kubernetes Engine > Clusters > Create Cluster (name: my-cluster, leave other fields as is)

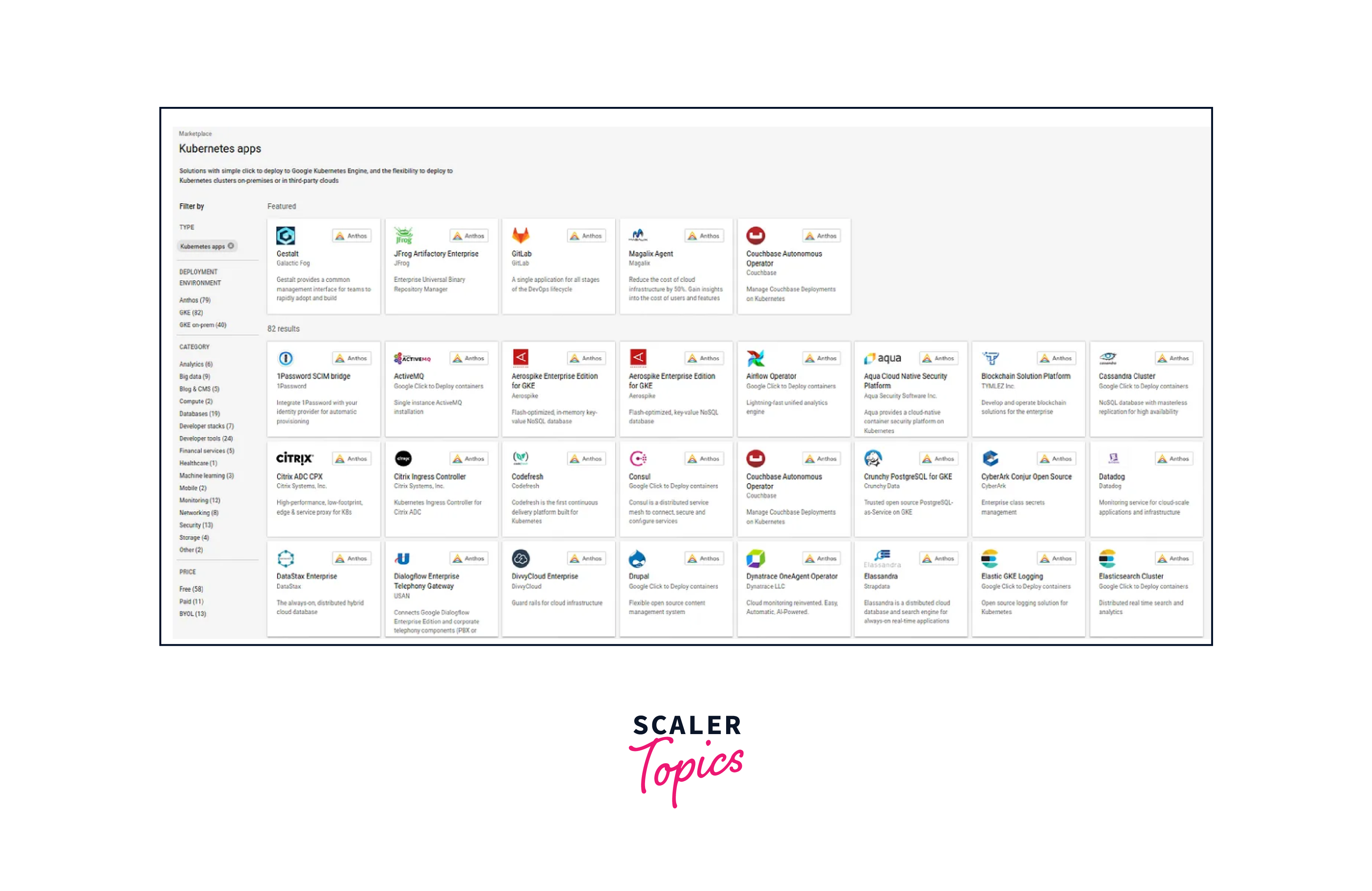

Step-2: To see the Kubernetes applications that you can deploy on your GKE cluster, navigate as follows on the GCP Console: Marketplace > Filter by Kubernetes Apps

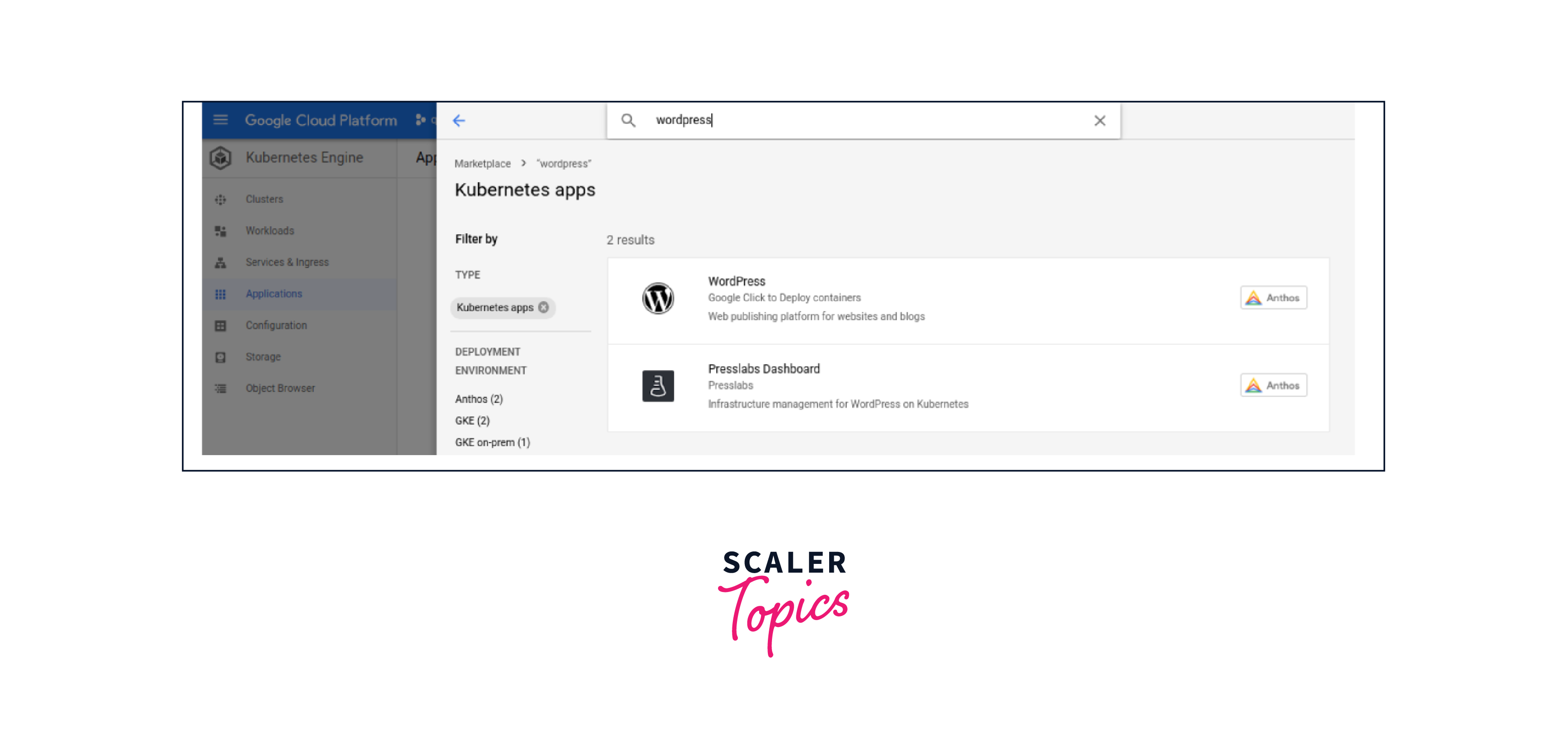

Step-3: Search for or select an application to see its information, including its pricing.

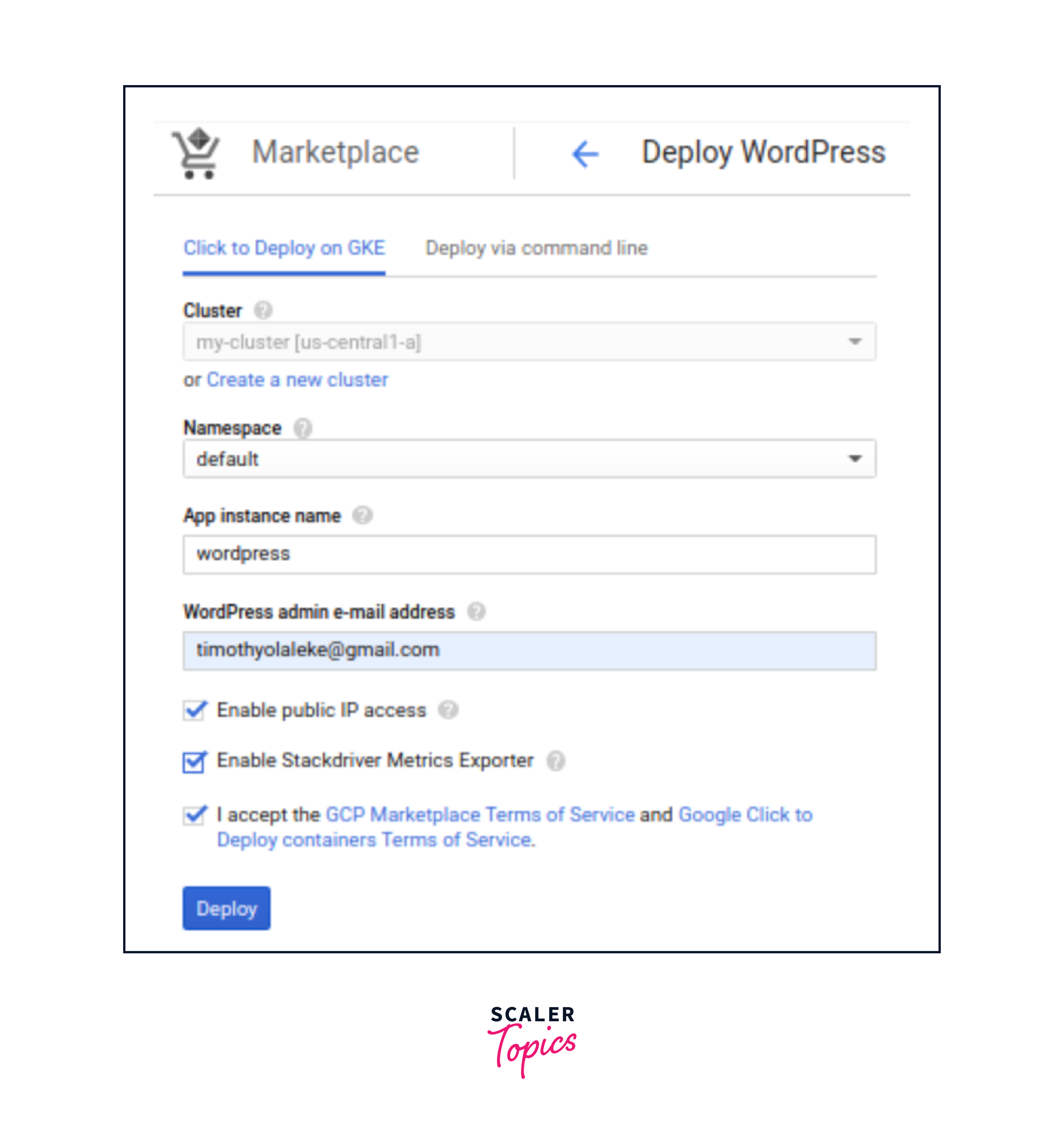

Step-4: Click on your app of choice > Configure. Enter a name for your application, then click Deploy.

Step-5: Your application will be deployed, with all the resources created for you. To manage, edit, or delete your application deployment, do so from the GKE cluster’s Applications page.

Networking and Load Balancing

In Google Kubernetes Engine (GKE), networking and load balancing are essential components to ensure the availability, reliability, and performance of your applications.

- Cluster Networking:

  - Virtual Private Cloud (VPC): Each GKE cluster is associated with a VPC, which provides isolated networking for the cluster. You can configure the VPC's IP ranges and subnets according to your organization's requirements.

  - Private Clusters: GKE supports private clusters, which means the nodes of the cluster can be isolated from the public internet. This adds an extra layer of security to your applications.

- Load Balancing:

  - Google Cloud Load Balancing: GKE integrates seamlessly with Google Cloud's load balancing services, which provide global and regional load balancing for your applications. There are several load balancing options available:

    - HTTP(S) Load Balancing: Used for distributing HTTP and HTTPS traffic. It supports content-based routing and SSL termination.

    - TCP/UDP Load Balancing: Distributes traffic at the transport layer, suitable for non-HTTP applications.

    - Internal Load Balancing: Used for distributing traffic within a VPC network, without exposing the load balancer's IP to the public internet.

  - Ingress: GKE supports Kubernetes Ingress resources, which allow you to define how external traffic should be routed to your services. Google Cloud's Ingress controller can automatically configure the appropriate load balancer based on your Ingress definitions.

- Service Networking:

  - ClusterIP: By default, Kubernetes Services within GKE are assigned a ClusterIP, which is an internal IP address accessible only within the cluster. This is suitable for communication between services within the cluster.

  - NodePort: With NodePort Services, a single port from a high range (30000-32767 by default) is opened on every node. This allows external traffic to reach the service by targeting any node's IP and the allocated port.

  - LoadBalancer: For exposing services to the public internet, you can create a LoadBalancer Service type. This automatically provisions a Google Cloud load balancer and assigns an external IP to the service.

  - ExternalName: This type of service maps a service to an external DNS name without load balancing. It's often used for integrating with external services.

- Network Policies:

  - GKE supports Kubernetes Network Policies, which allow you to define ingress and egress rules to control the flow of traffic between pods within the cluster. This adds a layer of security and isolation to your applications.

- VPC Peering and VPN:

  - You can establish VPC peering to connect GKE clusters with other VPC networks in the same project or in different projects. Virtual Private Network (VPN) tunnels can also be set up for secure communication between GKE clusters and on-premises networks.
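As an illustration of the Network Policies item above, the following Python sketch builds a NetworkPolicy manifest that lets only pods labelled as one app reach another app on a single TCP port, and prints it as JSON (kubectl accepts JSON as well as YAML). The policy name, labels, namespace, and port are hypothetical, and enforcement depends on network policy support being enabled on the cluster.

```python
import json


def allow_ingress_from_app(policy_name: str, target_app: str, source_app: str,
                           port: int, namespace: str = "default") -> dict:
    """Build a NetworkPolicy that admits traffic to target_app only from source_app."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": policy_name, "namespace": namespace},
        "spec": {
            # Pods this policy applies to.
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                # Only pods carrying this label may connect...
                "from": [{"podSelector": {"matchLabels": {"app": source_app}}}],
                # ...and only on this port.
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


# Hypothetical names: a "frontend" app calling a "backend" app on port 8080.
policy = allow_ingress_from_app("backend-allow-frontend", "backend", "frontend", 8080)
print(json.dumps(policy, indent=2))
```

Saving the output to a file and running `kubectl apply -f` on it would create the policy. Once any ingress policy selects a pod, traffic to that pod that no policy allows is dropped.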

Secrets and Configuration Management

Managing secrets and configuration within Google Kubernetes Engine (GKE) is crucial for securely deploying and maintaining your applications. Kubernetes provides features for managing secrets and configuration data, and GKE extends these capabilities with additional integration and security features. Here's how you can handle secrets and configuration management in GKE:

- Kubernetes Secrets:

  Kubernetes provides a built-in resource called "Secrets" for storing sensitive data, such as API keys, passwords, and certificates. In GKE, you can create Secrets and expose them to your application pods as environment variables or mounted files.

  To create a Secret in GKE, you can use the kubectl create secret command or define Secrets in your manifests. A Secret holds key-value pairs whose values are base64-encoded in the manifest. The default type, Opaque, stores arbitrary user-defined data, while other built-in types cover cases such as TLS certificates and image registry credentials.

Example:
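The sketch below is one way to produce a Secret manifest from Python, assuming a Secret named `db-credentials` with two made-up keys. It base64-encodes each value, as the `data` field of a Secret requires, and prints JSON that `kubectl apply -f` can read. All names and values are placeholders, not taken from a real deployment.

```python
import base64
import json


def opaque_secret(name: str, values: dict[str, str], namespace: str = "default") -> dict:
    """Build an Opaque Secret manifest with base64-encoded values."""
    encoded = {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in values.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": encoded,
    }


# Placeholder credentials for illustration only.
secret = opaque_secret("db-credentials", {"username": "app", "password": "change-me"})
print(json.dumps(secret, indent=2))
```

Base64 is an encoding, not encryption, so a manifest like this should not be committed to version control with real values in it.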

- Secrets Management in GKE:

  GKE enhances Kubernetes Secrets management with the following features:

  - Secrets Encryption at Rest: GKE encrypts data at rest by default, including Secrets. For an extra layer of security, you can enable application-layer secrets encryption, which encrypts Secrets with a key that you manage in Google Cloud KMS.

  - Workload Identity: GKE supports Workload Identity, which allows you to associate Kubernetes service accounts with Google Cloud service accounts. This can be used to securely access Google Cloud services without the need to manage API keys.

- Configuration Management:

  Managing configuration data is equally important for your applications. Kubernetes ConfigMaps are used to store configuration data in key-value pairs, which can be consumed by your application containers as environment variables or mounted as files.

Example:
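Here is a small Python sketch of the ConfigMap idea, assuming a ConfigMap named `app-config` and a container that loads every key as an environment variable through `envFrom`. The names, keys, and image are hypothetical. ConfigMap values must be strings, so the helper converts them.

```python
import json


def config_map(name: str, settings: dict, namespace: str = "default") -> dict:
    """Build a ConfigMap manifest; every value is stored as a string."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name, "namespace": namespace},
        "data": {key: str(value) for key, value in settings.items()},
    }


def container_using_config(container_name: str, image: str, config_map_name: str) -> dict:
    """Container spec fragment that imports all ConfigMap keys as environment variables."""
    return {
        "name": container_name,
        "image": image,
        "envFrom": [{"configMapRef": {"name": config_map_name}}],
    }


# Hypothetical settings and image name.
cm = config_map("app-config", {"LOG_LEVEL": "info", "MAX_CONNECTIONS": 50})
container = container_using_config("web", "example.com/web:1.0", "app-config")
print(json.dumps(cm, indent=2))
print(json.dumps(container, indent=2))
```

The container fragment belongs under `spec.template.spec.containers` of a Deployment. Mounting the ConfigMap as a volume instead would expose each key as a file.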

- GKE Config Connector:

  GKE offers an add-on called Config Connector, which extends Kubernetes to manage Google Cloud resources as if they were native Kubernetes objects. This allows you to define and manage Google Cloud resources, such as Cloud Storage buckets or Pub/Sub topics, using Kubernetes manifests.

- Secrets and ConfigMap Updates:

  When you update a Secret or ConfigMap, pods that mount it as a volume see the new contents after a short delay, without a restart. Values consumed as environment variables, and files mounted with subPath, are read only when the container starts, so those pods must be restarted (for example with a rolling update) to pick up the change.

- Third-Party Solutions:

  While Kubernetes Secrets and ConfigMaps are suitable for basic use cases, more complex environments might require third-party solutions for enhanced secrets management. Tools like HashiCorp Vault or dedicated Kubernetes-native solutions can provide advanced features such as dynamic secrets, audit trails, and integration with external authentication providers.

Proper secrets and configuration management in GKE is essential to maintaining the security and reliability of your applications. Be sure to follow best practices, like restricting access to Secrets, using encryption, and avoiding hardcoding sensitive information in your application code or configuration files.

Cluster Security and Identity Management

Cluster security and identity management are critical aspects of managing applications in Google Kubernetes Engine (GKE). Ensuring the security of your cluster and managing user and service identities are essential for maintaining the integrity and confidentiality of your workloads. Here's how you can address cluster security and identity management in GKE:

- Access Control and RBAC (Role-Based Access Control):

  - GKE supports Kubernetes RBAC, which allows you to define fine-grained access controls for users and services. You can create roles and role bindings to control who can perform specific actions within the cluster.

- Google Cloud Identity and Access Management (IAM):

  - GKE is integrated with Google Cloud IAM, allowing you to manage access to GKE resources using IAM roles. IAM roles can be granted to users, groups, and service accounts, controlling access to GKE clusters and related resources.

- Node Identity:

  - Each node runs as a Google Cloud service account attached to its underlying VM. Granting that account only the permissions the nodes need limits what a compromised node can reach.

- Pod Identity:

  - GKE provides a feature called "Workload Identity," which allows you to associate Kubernetes service accounts with Google Cloud service accounts. Pods then use the identity of the associated Google Cloud service account to authenticate and access Google Cloud resources, which eliminates the need to manage and distribute sensitive credentials within your application code.

- Node Security and OS Hardening:

  - GKE manages the underlying infrastructure, including the operating system of the nodes. Google ensures regular security updates for the nodes, but you can also apply additional security measures to the node instances, such as using Google-provided COS (Container-Optimized OS), adjusting firewall rules, and implementing intrusion detection.

It's important to design your GKE cluster with security in mind from the beginning. Following best practices, regularly reviewing and updating your security measures, and educating your team on security practices will help you maintain a secure and resilient Kubernetes environment.
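To show what a least-privilege RBAC setup from the list above might look like, this Python sketch builds a Role that can only read pods in one namespace and a RoleBinding that grants it to a single user, then prints both as one JSON `List` for `kubectl apply -f`. The namespace, role name, and email address are hypothetical.

```python
import json

RBAC_API_GROUP = "rbac.authorization.k8s.io"


def pod_reader_role(namespace: str, name: str = "pod-reader") -> dict:
    """Role that allows read-only access to pods in one namespace."""
    return {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        # The empty string selects the core API group, where pods live.
        "rules": [{"apiGroups": [""], "resources": ["pods"],
                   "verbs": ["get", "list", "watch"]}],
    }


def bind_role_to_user(namespace: str, role_name: str, user: str) -> dict:
    """RoleBinding that grants an existing Role to a single user."""
    return {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role_name}-binding", "namespace": namespace},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_API_GROUP}],
        "roleRef": {"kind": "Role", "name": role_name, "apiGroup": RBAC_API_GROUP},
    }


# Hypothetical namespace and user.
role = pod_reader_role("staging")
binding = bind_role_to_user("staging", role["metadata"]["name"], "dev@example.com")
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [role, binding]}, indent=2))
```

Keeping the Role namespaced, rather than using a ClusterRole, limits the grant to the one namespace the user actually works in.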

Upgrades and Maintenance

Managing upgrades and maintenance in Google Kubernetes Engine (GKE) is crucial to keep your clusters secure, reliable, and up to date. GKE simplifies this process while minimizing disruptions to your applications. Here are the key points for upgrades and maintenance in GKE:

- Automated Upgrades: GKE provides automated Kubernetes version upgrades for the control plane. This ensures that your cluster's management plane is regularly updated with a recent stable Kubernetes version, which includes security patches and new features.

- Node Pools and Maintenance Windows: GKE allows you to define maintenance windows for a cluster. During these windows, GKE schedules automated maintenance such as upgrading the control plane and updating the node images of your node pools. Choosing a quiet period reduces the impact on your workloads.

- Node Auto-Repair: GKE's node auto-repair feature automatically monitors the health of your nodes and replaces nodes that are unhealthy or failing. This helps maintain the overall health and availability of your cluster.

- Cluster Upgrades: You can initiate cluster upgrades manually if needed, which lets you test your applications against newer Kubernetes versions before automatic upgrades reach them. This gives you control over the upgrade process.

- Rolling Updates: For application workloads, GKE supports rolling updates. When you deploy new versions of your applications, Kubernetes gradually replaces old pods with new ones to maintain application availability.

By following best practices and utilizing GKE's automation features, you can ensure that your Kubernetes clusters are up to date, secure, and reliable while minimizing disruptions to your applications and services.
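The rolling update behaviour mentioned above is governed by two Deployment settings, `maxSurge` and `maxUnavailable`, which both default to 25%. As a rough sketch, the Python below works out how many pods are guaranteed to stay available and how many may exist at once during a rollout. It follows the documented rounding (surge rounds up, unavailable rounds down); the helper names and the replica count are illustrative assumptions.

```python
def resolve(value, replicas: int, round_up: bool) -> int:
    """Turn an absolute number or a percentage string such as '25%' into a pod count."""
    if isinstance(value, str) and value.endswith("%"):
        scaled = int(value[:-1]) * replicas
        # Integer arithmetic avoids floating-point rounding surprises.
        return (scaled + 99) // 100 if round_up else scaled // 100
    return int(value)


def rolling_update_bounds(replicas: int, max_surge="25%", max_unavailable="25%") -> dict:
    """Pod-count limits Kubernetes keeps during a Deployment rolling update."""
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    return {
        "min_available": replicas - unavailable,
        "max_total": replicas + surge,
    }


# Hypothetical Deployment with 10 replicas and the default strategy.
print(rolling_update_bounds(10))  # {'min_available': 8, 'max_total': 13}
```

Setting `max_unavailable` to 0 keeps full capacity throughout the rollout at the cost of temporarily running extra pods, which may in turn require spare node capacity.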

Integration with Google Cloud Services in GKE

Google Kubernetes Engine (GKE) seamlessly integrates with Google Cloud services, enhancing application capabilities. It connects to Google Cloud Identity and Access Management (IAM) for access control. Applications can utilize Google Cloud Storage for object storage and Google Cloud Pub/Sub for event-driven communication. GKE workloads can also connect to managed databases like Google Cloud SQL and Cloud Spanner, and GKE integrates with Google Cloud Monitoring and Logging for monitoring and alerts. Load balancing services, such as HTTP(S) Load Balancing, enhance application availability. GKE applications can leverage machine learning through Vertex AI (the successor to AI Platform), and interact with various Google Cloud APIs. Google Cloud Build and Artifact Registry (the successor to Container Registry) enable CI/CD pipelines, and the Google Cloud Marketplace offers preconfigured applications for streamlined deployment.

Cost Optimization and Billing

Cost optimization in GKE involves strategic resource management. Utilize autoscaling, Spot VMs (the successor to preemptible VMs), and accurate resource requests to match workload needs. Implement Kubernetes HPA for dynamic pod scaling and set up Google Cloud Budgets for spending alerts. Right-size node pools and pods based on actual requirements and monitor usage patterns. Employ resource quotas, consider committed use discounts for steady workloads, and explore GKE Autopilot mode for efficient resource allocation. Regularly analyze idle resources and workloads. By employing these measures and monitoring expenses, you can optimize costs while maintaining application performance in Google Kubernetes Engine.
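A quick way to spot an oversized node pool is to compare what pods request with what the nodes can offer. The Python sketch below does that arithmetic for CPU only, using made-up figures (twelve pods requesting 500 millicores each on four nodes with 3900 allocatable millicores). It gives a lower bound on node count and ignores memory, system pods, and bin-packing effects.

```python
def cpu_request_utilization(pod_requests_millicores: list[int], node_count: int,
                            allocatable_millicores_per_node: int) -> float:
    """Fraction of the node pool's allocatable CPU that pods have requested."""
    requested = sum(pod_requests_millicores)
    capacity = node_count * allocatable_millicores_per_node
    return requested / capacity


def minimum_nodes(pod_requests_millicores: list[int],
                  allocatable_millicores_per_node: int) -> int:
    """Lower bound on nodes needed for the requested CPU (ceiling division)."""
    requested = sum(pod_requests_millicores)
    return -(-requested // allocatable_millicores_per_node)


# Hypothetical node pool: 12 pods x 500m on 4 nodes with 3900m allocatable each.
requests = [500] * 12
print(f"{cpu_request_utilization(requests, 4, 3900):.0%} of allocatable CPU requested")
print(minimum_nodes(requests, 3900), "nodes would cover the CPU requests")
```

A low utilization figure like this one suggests the pool could shrink, or that enabling node auto-scaling with a lower minimum would let GKE remove the spare nodes.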

Multi-Region and Hybrid Cloud Deployments

Multi-region and hybrid cloud deployments in Google Kubernetes Engine (GKE) enable you to achieve high availability, disaster recovery, and flexibility in managing your applications across different geographic locations and cloud environments. Here's how to approach these deployment strategies:

- Multi-Region Deployments:

  - A single GKE cluster lives in one region; a regional cluster spreads its control plane and nodes across the zones of that region. Multi-region deployments therefore run a separate cluster in each region and distribute workloads across those clusters, which enhances application availability through geographic separation.

- Load Balancing and Traffic Management:

  - Utilize Google Cloud's global load balancers to distribute traffic to different clusters in multiple regions. Use Traffic Director for advanced traffic management and routing.

- Data Replication and Disaster Recovery:

  - Implement data replication strategies using Google Cloud Storage or managed databases like Google Cloud SQL to ensure data redundancy and disaster recovery across regions.

- Geo-Redundancy and Failover:

  - Design applications with geo-redundancy in mind, allowing for failover between regions in case of outages. Implementing active-active configurations enhances reliability.

- Latency Optimization:

  - Deploy applications in regions that are geographically closer to your users to minimize latency and improve user experience.

By carefully planning and implementing multi-region and hybrid cloud strategies in GKE, you can achieve high availability, disaster recovery, and flexible application management while meeting your organization's performance, compliance, and business continuity requirements.

Best Practices and Tips

- Cluster Design and Configuration:

  - Plan cluster architecture based on workload requirements. Consider factors like node pools, machine types, and resource requests.

  - Use regional clusters for high availability and spread nodes across zones for fault tolerance.

  - Leverage GKE Autopilot mode for managed cluster operations and efficiency.

- Resource Optimization:

  - Set accurate resource requests and limits for pods to avoid resource wastage.

  - Right-size node pools and pods based on actual usage patterns.

  - Utilize node autoscaling to dynamically adjust capacity as needed.

- Security and Access Control:

  - Implement least privilege access by using Kubernetes RBAC and Google Cloud IAM.

  - Leverage workload identity for secure communication with Google Cloud services.

  - Use Network Policies to control traffic flow between pods.

- Deployment Strategies:

  - Embrace GitOps for declarative and version-controlled deployments.

  - Utilize Canary deployments and Blue-Green deployments to minimize user impact during updates.

- Monitoring and Observability:

  - Implement centralized logging and monitoring using Google Cloud's tools.

  - Use Kubernetes-native tools like Prometheus and Grafana for in-depth observability.

  - Set up alerts for critical events and resource thresholds.

- Scaling and Autoscaling:

  - Use Horizontal Pod Autoscaling (HPA) to ensure optimal resource utilization.

  - Configure GKE's Cluster Autoscaler to handle node scaling based on demand.

- Backup and Disaster Recovery:

  - GKE manages the control plane's etcd for you, so back up your workloads instead, for example with Backup for GKE, to ensure data resiliency.

  - Keep snapshots of your workloads' state, such as persistent volume data, for disaster recovery.

- Testing and Staging:

  - Implement testing and staging environments to validate changes before deploying to production.

  - Use CI/CD pipelines for automated testing and deployment.

Conclusion

- In conclusion, effectively managing Google Kubernetes Engine (GKE) requires strategic planning, security implementations, and efficient resource usage.

- Employing Infrastructure as Code (IaC) and GitOps workflows simplifies deployments, while monitoring tools enhance observability.

- Security measures like RBAC, network policies, and secrets management bolster protection.

- Multi-region and hybrid cloud deployments ensure high availability and flexibility.

- Adhering to best practices, optimizing costs, and staying informed about updates ensures optimal performance.

- GKE remains a pivotal platform for scalable, modern application deployment, demanding continuous learning and adaptation for successful container orchestration.