Kubernetes Advanced Networking

Overview

As Kubernetes deployments become more complex and diverse, advanced networking concepts become essential to ensure security, scalability, and efficient communication between various components. Advanced Kubernetes networking goes beyond the basics and involves more sophisticated solutions to address the challenges of modern application architectures.

Here are some key aspects of Kubernetes advanced networking:

- Network Policies:

Network policies allow you to define fine-grained rules for controlling the communication between pods in a namespace.

- Service Mesh:

A service mesh is a dedicated infrastructure layer that handles communication between services within a Kubernetes cluster. It offers features like traffic routing, load balancing, observability, and security.

- Multi-Cluster Networking:

When workloads are spread across several clusters, multi-cluster networking allows those clusters to communicate with each other. This can be achieved using technologies like Federation, which enables unified management and communication across clusters, or solutions like Anthos by Google Cloud, which provides a multi-cluster management platform.

- Advanced Load Balancing:

Advanced load-balancing solutions can integrate with external load balancers, provide advanced routing rules, and even support global load balancing for applications deployed across multiple regions.

- Network Function Virtualization (NFV):

In Kubernetes, NFV can be applied to create virtualized network functions that offer capabilities such as network monitoring, intrusion detection, and deep packet inspection.

- eBPF and Data Plane:

Extended Berkeley Packet Filter (eBPF) is a technology that lets you run sandboxed custom programs inside the Linux kernel, including at hooks in the network data path. This enables fine-grained observability, network monitoring, and even policy enforcement at the kernel level.

- Hybrid and Multi-Cloud Networking:

In hybrid or multi-cloud environments, advanced Kubernetes networking solutions help connect on-premises infrastructure, multiple cloud providers, and Kubernetes clusters.

Advanced Kubernetes networking addresses the challenges that arise as Kubernetes clusters grow in complexity and scale. It focuses on enhancing security, observability, and performance while accommodating modern application architectures and deployment models.

Understanding Kubernetes Networking Basics

Kubernetes networking ensures that applications can communicate with each other, that external users can reach those applications, and that services can be discovered and load-balanced effectively.

There are two main aspects of Kubernetes networking that need to be understood: pod networking and service networking.

a. Pod Networking:

Pods are the smallest deployable units in Kubernetes and are designed to hold one or more containers. Containers within a pod share the same network namespace, which means they share the same IP address and port space. Kubernetes assigns each pod a unique IP address within the cluster's network range.
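
To make the addressing model concrete, here is a minimal Python sketch of how a cluster-wide pod range can be carved into per-node blocks from which pod IPs are drawn. The `10.244.0.0/16` range, the `/24` block per node, and the node names are illustrative assumptions; in a real cluster the allocation is done by the control plane and the CNI plugin, not by code like this.

```python
import ipaddress

# Assumed values for illustration only; real clusters configure their own ranges.
CLUSTER_POD_CIDR = ipaddress.ip_network("10.244.0.0/16")
NODE_PREFIX_LEN = 24  # one /24 block of pod addresses per node


def node_pod_cidrs(node_names):
    """Give each node its own non-overlapping slice of the cluster pod range."""
    blocks = CLUSTER_POD_CIDR.subnets(new_prefix=NODE_PREFIX_LEN)
    return {name: next(blocks) for name in node_names}


def first_pod_ips(node_cidr, count):
    """Return the first `count` usable host addresses in a node's block."""
    hosts = iter(node_cidr.hosts())
    return [str(next(hosts)) for _ in range(count)]


cidrs = node_pod_cidrs(["node-a", "node-b"])
print(cidrs["node-a"], first_pod_ips(cidrs["node-a"], 2))
print(cidrs["node-b"], first_pod_ips(cidrs["node-b"], 2))
```

Because the per-node blocks never overlap, every pod address is unique across the cluster, which is what lets pods reach each other directly by IP.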

b. Service Networking:

Services ensure that applications remain accessible and discoverable even as pods are dynamically created, scaled, or replaced.

There are several types of services:

- ClusterIP:

This is the default service type. It exposes the service on a cluster-internal IP address that is accessible only from within the cluster. It provides basic load balancing among the pods.

- NodePort:

This type exposes the service on a static port on each node's IP address, allowing external access. However, exposing node ports directly to external users is generally not recommended because of security concerns.

- LoadBalancer:

When using a cloud provider that supports load balancers, this type creates an external load balancer that forwards traffic to the service. It is often used to expose services externally.

- ExternalName:

This type maps the service to an external DNS name, effectively providing a way to reference external services from inside your Kubernetes cluster.

Ingress is often discussed alongside these types, but it is a separate resource rather than a service type:

- Ingress:

Ingress resources define rules for routing external HTTP and HTTPS traffic to services within the cluster.
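
The service types above differ mainly in a few manifest fields. The following sketch builds Service manifests as plain Python dictionaries and prints one as JSON, which Kubernetes accepts alongside YAML. The helper functions, the `web` and `shop` names, and the port numbers are hypothetical; `30000-32767` is the default NodePort range and `cluster.local` the default cluster domain, both of which a given cluster may override.

```python
import json

# Default NodePort range; clusters can be configured with a different one.
NODE_PORT_RANGE = range(30000, 32768)


def service_manifest(name, namespace, selector, port, target_port,
                     service_type="ClusterIP", node_port=None):
    """Build a v1 Service manifest as a plain dict (hypothetical helper)."""
    port_spec = {"port": port, "targetPort": target_port, "protocol": "TCP"}
    if node_port is not None:
        if service_type not in ("NodePort", "LoadBalancer"):
            raise ValueError("nodePort only applies to NodePort/LoadBalancer services")
        if node_port not in NODE_PORT_RANGE:
            raise ValueError(f"nodePort {node_port} is outside the default range")
        port_spec["nodePort"] = node_port
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"type": service_type, "selector": selector, "ports": [port_spec]},
    }


def cluster_dns_name(name, namespace, cluster_domain="cluster.local"):
    """In-cluster DNS name for a Service, assuming the default cluster domain."""
    return f"{name}.{namespace}.svc.{cluster_domain}"


internal = service_manifest("web", "shop", {"app": "web"}, 80, 8080)
external = service_manifest("web-public", "shop", {"app": "web"}, 80, 8080,
                            service_type="NodePort", node_port=30080)
print(json.dumps(external, indent=2))
print(cluster_dns_name("web", "shop"))
```

Both manifests select the same pods through the `app: web` label; only the `type` and the optional `nodePort` decide whether the service is reachable from outside the cluster.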

Understanding these Kubernetes networking basics is crucial for deploying and managing applications effectively within a Kubernetes cluster. Proper networking configuration ensures seamless communication between pods, reliable service exposure, and efficient load balancing.

Network Overlays

A network overlay is a virtualized network infrastructure built on top of an existing physical network. It creates a logical abstraction of the underlying physical network, allowing for increased flexibility, scalability, and manageability.

Here's a more detailed explanation of the concept of a network overlay:

- Abstraction Layer:

An overlay acts as an abstraction layer over the physical network. This abstraction enables network administrators to work with virtual networks and devices without needing to understand the intricacies of the underlying physical infrastructure.

- Virtual Network Creation:

With a network overlay, you can create multiple virtual networks on top of a single physical network.

- Logical Isolation:

Each virtual network is logically isolated from the others. This isolation prevents interference between the network traffic of different applications, projects, or tenants.

- Tunneling Protocols:

Network overlays often rely on tunneling protocols to encapsulate and carry virtual network traffic over the physical network.

- Centralized Management:

The management of network overlays can often be centralized through software-defined networking (SDN) controllers. SDN controllers provide a single point of control for configuring and managing the overlay networks, making it easier to orchestrate complex networking tasks.

- Flexibility and Agility:

Network overlays decouple the virtual network configuration from the physical network infrastructure. This flexibility allows for rapid deployment of new services, quick network changes, and easier migration of workloads across different environments.

- Hybrid and Cloud Environments:

Network overlays are particularly beneficial in hybrid cloud environments, where workloads can move between on-premises data centers and public cloud platforms.

- Challenges:

While network overlays offer numerous advantages, they also come with challenges. Managing the increased complexity introduced by overlays, ensuring efficient use of network resources, and handling potential performance bottlenecks are some of the issues that need careful consideration.
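
Encapsulation and its cost can be illustrated with VXLAN, one widely used overlay tunneling format. The text above does not name a specific protocol, so choosing VXLAN is an assumption made for this sketch. The code prepends the 8-byte VXLAN header, whose 24-bit network identifier (VNI) is what keeps virtual networks apart, and computes how much room is left for the inner packet on a physical link.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field carries a valid ID
# Bytes added in front of the inner IP packet when tunnelling over IPv4:
# outer IPv4 (20) + UDP (8) + VXLAN (8) + inner Ethernet (14).
VXLAN_IPV4_OVERHEAD = 20 + 8 + 8 + 14


def vxlan_encapsulate(vni, inner_frame):
    """Prefix an inner Ethernet frame with an 8-byte VXLAN header."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    header = struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)
    return header + inner_frame


def vxlan_decapsulate(packet):
    """Split a VXLAN payload back into (vni, inner_frame)."""
    flags_word, vni_word = struct.unpack("!II", packet[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag not set")
    return vni_word >> 8, packet[8:]


def overlay_mtu(physical_mtu):
    """Largest inner IP packet that fits without fragmenting the outer one."""
    return physical_mtu - VXLAN_IPV4_OVERHEAD


frame = b"\x00" * 14 + b"pod-to-pod payload"  # placeholder inner frame
vni, recovered = vxlan_decapsulate(vxlan_encapsulate(42, frame))
print(vni, recovered == frame, overlay_mtu(1500))
```

The 50 bytes of per-packet overhead are one concrete source of the performance cost mentioned above: on a 1500-byte link, pods behind this kind of overlay can send inner packets of at most 1450 bytes.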

Service Meshes

A service mesh is a dedicated layer of infrastructure that handles service-to-service communication within a Kubernetes cluster. It provides features like traffic routing, load balancing, security, and observability. Service meshes are particularly useful in microservice architectures, where communication between services can become complex and challenging to manage. A service mesh helps address these challenges by abstracting away the complexities of communication, providing a set of tools, practices, and services to streamline and control inter-service communication.

Popular service mesh solutions like Istio, Linkerd, and Consul provide features such as:

- Traffic Management:

Service meshes allow you to define rules for traffic routing, load balancing, and retries. You can implement A/B testing, canary releases, and blue-green deployments easily.

- Security:

Service meshes offer mutual TLS authentication and authorization between services. They can encrypt communication, verify the identity of services, and enforce access control policies.

- Service Discovery:

Service meshes handle service discovery, automatically updating routing configurations as new services are added or removed. This eliminates the need for manual configuration adjustments.

- Observability:

Service meshes provide detailed insights into service-to-service communication. You can monitor traffic, latency, error rates, and trace requests across the entire application.

- Resilience:

Service meshes can automatically handle retries, timeouts, and circuit breaking, enhancing the reliability of your microservices.

- Control and Configuration:

Service meshes allow centralized control over communication between services. Changes in communication policies, timeouts, and routing rules can be managed dynamically without requiring code changes in individual microservices.
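
Circuit breaking, one of the resilience features listed above, can be sketched in a few lines. This is a simplified illustration of the pattern, not the implementation or API of any particular mesh; the class name, its parameters, and the `flaky` function are hypothetical. In a mesh, the equivalent logic runs in the proxy next to each service rather than in application code.

```python
import time


class CircuitBreaker:
    """Stop calling a failing dependency until a cool-down has passed."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise RuntimeError("circuit open: request rejected without calling")
            self.opened_at = None  # cool-down over: allow one trial call
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        return result


def flaky():
    raise ConnectionError("upstream unavailable")


breaker = CircuitBreaker(max_failures=2, reset_after=60.0)
for attempt in range(3):
    try:
        breaker.call(flaky)
    except ConnectionError:
        print(attempt, "upstream failed")
    except RuntimeError as err:
        print(attempt, err)
```

After two consecutive failures the third call is rejected immediately, which protects the struggling upstream from further load until the cool-down expires.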

Istio and Linkerd are two widely used implementations. Both provide the infrastructure needed to deploy, manage, and monitor a service mesh in a microservices environment.

Service meshes are particularly beneficial in large and complex applications with numerous microservices, where maintaining consistent communication, security, and observability can become challenging. By providing a standardized way to manage these aspects, service meshes contribute to the overall stability and maintainability of the application.
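
The weighted routing behind a canary release can be sketched as follows. This is an illustration of the idea only and not how Istio or Linkerd are configured; the `pick_backend` function and the `reviews-v1` and `reviews-v2` names are hypothetical. Hashing the request ID instead of drawing a random number is a design choice made here so that the same request always maps to the same version.

```python
import hashlib


def pick_backend(request_id, weights):
    """Map a request ID to a backend according to percentage weights.

    Hashing the ID (instead of drawing a random number) keeps the choice
    stable, so the same request ID always lands on the same version.
    """
    if sum(weights.values()) != 100:
        raise ValueError("weights must add up to 100")
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # value in 0..99
    upper = 0
    for backend, weight in weights.items():
        upper += weight
        if bucket < upper:
            return backend
    raise AssertionError("unreachable when weights sum to 100")


# Hypothetical canary release: 90% of traffic to v1, 10% to v2.
weights = {"reviews-v1": 90, "reviews-v2": 10}
counts = {name: 0 for name in weights}
for i in range(10_000):
    counts[pick_backend(f"request-{i}", weights)] += 1
print(counts)
```

Shifting more traffic to the new version is then a matter of changing the weights, with no change to the services themselves.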

Network Policies

Network policies in Kubernetes allow you to define rules that control the communication between pods. They enhance security by specifying which pods are allowed to communicate with each other based on pod labels, namespaces, IP ranges, and ports. Policies are enforced by the cluster's network plugin, so the CNI in use must support them. Network policies are especially important in environments with multiple applications and teams sharing the same cluster.

Network policies can specify both ingress and egress rules, giving administrators fine-grained control over incoming and outgoing traffic. Ingress rules determine which sources may reach the selected pods, while egress rules govern the traffic those pods may send. This two-way control supports security strategies that guard against external threats and also keep internal communication in line with organizational requirements and best practices.

With network policies, you can:

- Isolate Services:

Ensure that only specific pods can communicate with each other, reducing the attack surface and preventing unintended interactions.

- Segment Traffic:

Enforce rules for communication between different components, even if they're part of the same application, to prevent unauthorized access.

- Fine-Grained Control:

Define policies that list exactly which traffic is allowed. Kubernetes network policies are additive allow rules with no explicit deny: once a pod is selected by a policy, any traffic in that direction that no rule allows is dropped, which gives you granular control over network communication.
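
As an illustration of label-based isolation, the sketch below defines a NetworkPolicy manifest as a Python dictionary and evaluates it with a deliberately simplified checker. The policy name, namespace, labels, and port are hypothetical, and the checker only understands `matchLabels` and `podSelector` peers within one namespace; real enforcement is done by the network plugin and also covers namespace selectors, IP blocks, and egress rules.

```python
# Hypothetical policy: only pods labelled app=frontend may reach
# pods labelled app=backend, and only on TCP port 8080.
policy = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "backend-allow-frontend", "namespace": "shop"},
    "spec": {
        "podSelector": {"matchLabels": {"app": "backend"}},
        "policyTypes": ["Ingress"],
        "ingress": [{
            "from": [{"podSelector": {"matchLabels": {"app": "frontend"}}}],
            "ports": [{"protocol": "TCP", "port": 8080}],
        }],
    },
}


def labels_match(selector, labels):
    """True if every matchLabels entry is present on the pod."""
    wanted = selector.get("matchLabels", {})
    return all(labels.get(k) == v for k, v in wanted.items())


def ingress_allowed(policy, src_labels, dst_labels, port):
    """Simplified same-namespace check of one policy (podSelector peers only)."""
    spec = policy["spec"]
    if not labels_match(spec["podSelector"], dst_labels):
        return True  # this policy does not select the destination pod
    for rule in spec.get("ingress", []):
        peers = rule.get("from", [])
        ports = rule.get("ports", [])
        peer_ok = not peers or any(
            labels_match(p.get("podSelector", {}), src_labels) for p in peers)
        port_ok = not ports or any(p.get("port") == port for p in ports)
        if peer_ok and port_ok:
            return True
    return False  # selected by the policy but matched no allow rule


print(ingress_allowed(policy, {"app": "frontend"}, {"app": "backend"}, 8080))
print(ingress_allowed(policy, {"app": "batch"}, {"app": "backend"}, 8080))
```

The second call returns `False` without any deny rule being written: the backend pod is selected by the policy, and the `batch` pod simply is not on the allow list.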

Ingress Controllers and Load Balancers

Ingress controllers and load balancers are essential for exposing services externally and managing incoming traffic to your Kubernetes cluster.

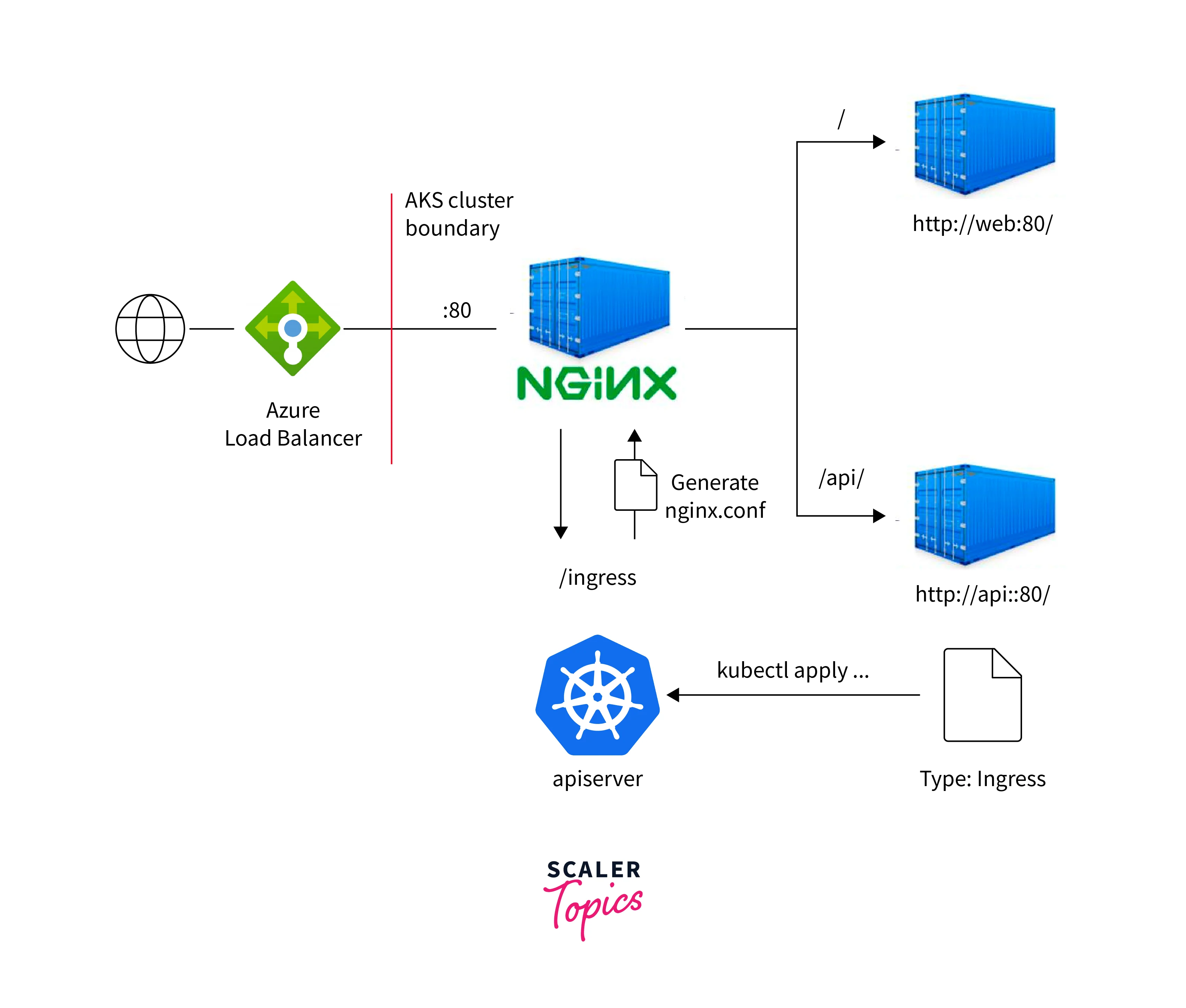

- Ingress Controllers:

An Ingress controller is a cluster component, usually a reverse proxy running in pods, that manages external access to services within the cluster. It routes incoming HTTP and HTTPS traffic to the appropriate services based on rules defined in Ingress resources. Common ingress controllers include the NGINX Ingress Controller, Traefik, and HAProxy Ingress.

- Load Balancers:

Kubernetes provides several types of service objects for load balancing. LoadBalancer services, when supported by the underlying cloud provider, automatically provision external load balancers to distribute traffic to pods. NodePort services expose services on a static port on each node's IP address, making them accessible externally.
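
The relationship between Ingress rules and the routing a controller performs can be sketched as follows. The manifest is a hypothetical Ingress expressed as a Python dictionary, with placeholder host and service names, and `route` is a simplified stand-in for a controller's matching logic that handles only `Prefix` paths and exact host names.

```python
# Hypothetical Ingress: host and service names are placeholders.
ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "shop", "namespace": "shop"},
    "spec": {"rules": [{
        "host": "shop.example.com",
        "http": {"paths": [
            {"path": "/", "pathType": "Prefix",
             "backend": {"service": {"name": "web", "port": {"number": 80}}}},
            {"path": "/api", "pathType": "Prefix",
             "backend": {"service": {"name": "api", "port": {"number": 8080}}}},
        ]},
    }]},
}


def split_path(path):
    """Break a URL path into its non-empty segments."""
    return [part for part in path.split("/") if part]


def route(ingress, host, path):
    """Return (service, port) for the longest matching Prefix rule, or None."""
    request = split_path(path)
    best = None
    for rule in ingress["spec"]["rules"]:
        if rule.get("host") not in (None, host):
            continue
        for entry in rule["http"]["paths"]:
            prefix = split_path(entry["path"])
            # Prefix matching is per path segment: /api matches /api/v1, not /apiary.
            if request[:len(prefix)] == prefix:
                if best is None or len(prefix) > best[0]:
                    svc = entry["backend"]["service"]
                    best = (len(prefix), svc["name"], svc["port"]["number"])
    return None if best is None else best[1:]


print(route(ingress, "shop.example.com", "/api/orders"))
print(route(ingress, "shop.example.com", "/apiary"))
print(route(ingress, "other.example.com", "/"))
```

Requests under `/api` go to the `api` service, everything else on the same host falls through to `web`, and an unknown host matches nothing.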

Integrating External Networking Solutions

Integrating external networking solutions with Kubernetes involves incorporating third-party tools, services, and technologies to enhance the networking capabilities of your Kubernetes clusters.

Here's a more detailed overview of integrating external networking solutions with Kubernetes:

Motivation for Integration:

- Hybrid Cloud Environments:

Many organizations have hybrid cloud setups with applications and services spread across on-premises infrastructure and multiple cloud providers. Integrating external networking solutions enables seamless communication and management across these diverse environments.

- Specialized Services:

Some organizations require specialized networking services that might not be provided out-of-the-box by Kubernetes. Integration allows them to leverage existing investments and expertise in these solutions.

Networking Integration Challenges:

- Compatibility:

Ensuring that the external networking solution is compatible with Kubernetes and other components in your environment can be challenging.

- Security and Compliance:

Integrating external solutions should not compromise security or compliance standards. Proper authentication and encryption mechanisms need to be in place.

- Performance and Latency:

External networking solutions may introduce latency, affecting application performance. This needs to be carefully considered and mitigated.

Types of External Networking Integration:

- Load Balancers:

Integrating with external load balancers provided by cloud providers to distribute traffic efficiently to Kubernetes services.

- Virtual Private Networks (VPNs):

Setting up secure connections between Kubernetes clusters and on-premises resources using VPNs.

- Direct Connections:

Establishing direct connections between Kubernetes clusters and cloud providers' resources for improved network performance and reliability.

- Software-Defined Networking (SDN):

Leveraging SDN solutions for advanced networking features such as dynamic routing and traffic shaping.

- Edge Networking Solutions:

Integrating Kubernetes with edge networking solutions to provide services closer to end-users for reduced latency.

Benefits of Integration:

- Seamless Communication:

Integration ensures that applications in Kubernetes can communicate efficiently with external resources without network-related bottlenecks.

- Enhanced Capabilities:

External networking solutions can provide features like advanced routing, security, and load balancing that might not be available in Kubernetes natively.

- Optimized Performance:

Integration with specialized networking solutions can lead to improved network performance and reduced latency.

Implementation Best Practices:

- Compatibility Testing:

Thoroughly test the compatibility of external solutions with your Kubernetes setup before full-scale integration.

- Security Considerations:

Implement strong authentication, encryption, and access controls to secure communication between Kubernetes and external resources.

- Monitoring and Troubleshooting:

Set up monitoring tools to keep track of communication between Kubernetes and external resources. This helps identify and resolve issues promptly.

- Documentation and Training:

Properly document the integration process and provide training to your team to ensure effective management and troubleshooting.

Network Performance and Monitoring

Network performance and monitoring are critical aspects of managing a Kubernetes cluster. Monitoring the network helps ensure that applications are running smoothly and potential issues are identified and resolved promptly.

Here's a more detailed overview of network performance and monitoring in Kubernetes:

Importance of Network Monitoring:

Network monitoring is crucial for several reasons:

- Performance Optimization:

Monitoring helps identify bottlenecks, latency issues, and network congestion that might affect the performance of applications running in the cluster.

- Reliability:

Monitoring enables early detection of network failures or anomalies, allowing for proactive troubleshooting and reducing downtime.

- Security:

Monitoring helps detect unusual network behavior that might indicate security breaches or unauthorized access.

- Resource Management:

Monitoring assists in understanding the network resource utilization of different pods, helping in efficient resource allocation and scaling decisions.

Key Network Metrics to Monitor:

Several network metrics should be monitored in a Kubernetes cluster:

- Latency:

Measure the time it takes for data to travel between pods or services.

- Throughput:

Monitor the amount of data transferred per unit of time to assess network bandwidth usage.

- Packet Loss:

Track the rate at which data packets are lost or dropped during transmission.

- Jitter:

Measure the variation in latency over time, which can impact application performance.

- Network Errors:

Monitor network errors and collisions that might affect communication.

- Traffic Patterns:

Analyze inbound and outbound traffic patterns to understand application behavior and usage.
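
The first four metrics can be derived from simple probe measurements, as the sketch below shows. The `summarize` function and the sample numbers are made up for illustration, and jitter is computed here as the mean absolute change between consecutive latency samples, which is one common definition among several.

```python
from statistics import mean


def summarize(latencies_ms, packets_sent, packets_received, bytes_moved, seconds):
    """Derive basic network health figures from raw probe measurements."""
    # Jitter here is the mean absolute change between consecutive samples.
    deltas = [abs(b - a) for a, b in zip(latencies_ms, latencies_ms[1:])]
    return {
        "latency_ms": mean(latencies_ms),
        "jitter_ms": mean(deltas) if deltas else 0.0,
        "packet_loss_pct": 100.0 * (packets_sent - packets_received) / packets_sent,
        "throughput_mbps": bytes_moved * 8 / seconds / 1_000_000,
    }


# Made-up sample data standing in for real probe results.
report = summarize(
    latencies_ms=[10.0, 12.0, 11.0, 15.0],
    packets_sent=200, packets_received=198,
    bytes_moved=25_000_000, seconds=10,
)
print(report)
```

For this sample the average latency is 12 ms, packet loss is 1%, and throughput is 20 Mbit/s; the jitter of roughly 2.3 ms comes from the latency steps of 2, 1, and 4 ms.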

Monitoring Tools and Solutions:

There are various monitoring tools and solutions that can be used to monitor network performance in a Kubernetes cluster:

- Prometheus:

Prometheus is a popular open-source monitoring and alerting toolkit that gathers and stores metrics from various sources, including Kubernetes.

- Grafana:

Often used alongside Prometheus, Grafana provides visualization and dashboarding capabilities to help visualize network metrics.

- Elasticsearch, Logstash, and Kibana (ELK) Stack:

The ELK stack can be used for monitoring and analyzing logs, including network-related logs.

- Jaeger and Zipkin:

Jaeger and Zipkin are distributed tracing tools that help track the flow of requests through microservices and identify latency issues.

- CNI-Specific Tools:

Some Container Network Interface (CNI) plugins, such as Calico, expose their own metrics and tooling for network-specific insights.

Implementation and Best Practices:

- Define Monitoring Objectives:

Determine the specific network metrics that are critical for your application's performance and reliability.

- Instrumentation:

Ensure that your applications, services, and Kubernetes components are properly instrumented to emit relevant network metrics.

- Use Alerts:

Set up alerts based on predefined thresholds to receive notifications when network performance degrades or anomalies occur.

- Visualize Data:

Utilize visualization tools like Grafana to create dashboards that provide a real-time view of network performance.

- Historical Analysis:

Regularly analyze historical network data to identify trends and patterns that can help in capacity planning and optimization.

- Integrate with Incident Response:

Network monitoring should be part of your incident response strategy to quickly address network-related issues.
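
Threshold-based alerting can be sketched in a few lines. The `ThresholdAlert` class, the 250 ms threshold, and the sample values are hypothetical; requiring several consecutive breaches before firing is a design choice that avoids alerting on a single spike, and production systems such as Prometheus express the same idea in their own rule syntax.

```python
from collections import deque


class ThresholdAlert:
    """Fire when a metric stays above a threshold for N consecutive samples."""

    def __init__(self, threshold, consecutive=3):
        self.threshold = threshold
        self.recent = deque(maxlen=consecutive)

    def observe(self, value):
        """Record a sample and return True if the alert should fire."""
        self.recent.append(value > self.threshold)
        return len(self.recent) == self.recent.maxlen and all(self.recent)


# Hypothetical rule: latency above 250 ms for three samples in a row.
alert = ThresholdAlert(threshold=250.0, consecutive=3)
samples = [120.0, 300.0, 310.0, 240.0, 260.0, 270.0, 290.0]
fired = [alert.observe(s) for s in samples]
print(fired)
```

The two early breaches do not fire the alert because the fourth sample drops back under the threshold; only the final run of three consecutive breaches does.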

Best Practices for Kubernetes Advanced Networking

- Plan for Security: Implement network policies to isolate and control communication between pods. Use service mesh solutions for strong encryption and authentication between services.

- Use Network Policies: Define network policies early in your cluster setup to enforce segmentation and access controls.

- Use Load Balancers Wisely: Choose the appropriate service type (ClusterIP, NodePort, LoadBalancer) based on your needs. Consider using an ingress controller for routing external traffic to services.

- Optimize Overlay Networks: Choose a CNI that suits your application requirements and supports efficient overlay networking.

- Implement Multi-Cluster Networking with Care: When dealing with multi-cluster setups, consider latency, security, and data synchronization challenges.

Limitations and Challenges

Here are some of the limitations and challenges you might encounter when working with advanced networking in Kubernetes:

- Complexity:

Advanced Kubernetes networking configurations can be complex to set up and manage. Kubernetes offers various networking plugins and options, such as CNI (Container Network Interface) plugins, network policies, and service types. Configuring these components correctly requires a deep understanding of both networking concepts and Kubernetes internals.

- Performance Overhead:

Certain advanced Kubernetes networking features, such as network policy enforcement, packet inspection, or encryption of traffic between services, can introduce performance overhead. It's essential to carefully consider the trade-offs between security and performance when implementing these features.

- Service-to-Service Communication:

Kubernetes services enable service discovery and load balancing, but they might not suit all use cases. For example, if you need advanced load balancing algorithms or more granular control over traffic routing, you might need to integrate Kubernetes services with external load balancers or use a service mesh.

- Cross-Cluster Networking:

Kubernetes supports multi-cluster deployments, but networking across clusters can be challenging. Establishing secure and efficient communication between clusters while maintaining visibility and control can be complex and might require third-party solutions.

- Network Policies:

While Kubernetes offers network policies for controlling traffic flow between pods, implementing and managing these policies can be intricate. Enforcing policies consistently across a large and dynamic environment requires careful planning and ongoing maintenance.

- Overlay Networks:

Many Kubernetes networking solutions rely on overlay networks to provide connectivity between pods across nodes and clusters. While overlays are effective, they can introduce additional latency and complexity to the network stack.

- External Connectivity:

Allowing pods to communicate with resources outside the cluster can be challenging, especially when dealing with security considerations and managing ingress and egress traffic. Kubernetes provides Ingress controllers and NodePort services, but integrating them with external load balancers or other solutions might be necessary for more advanced scenarios.

- Network Plugins Compatibility:

Kubernetes supports various network plugins, and not all plugins are compatible with every Kubernetes version or deployment type. Ensuring compatibility and stability can be a challenge, particularly when upgrading or migrating clusters.

- Debugging and Troubleshooting:

When networking issues arise in Kubernetes, debugging can be complex due to the distributed nature of microservices and the potential involvement of various networking components. Tools for monitoring, tracing, and logging are essential for diagnosing and resolving networking problems.

- Consistency Across Environments:

Achieving consistent network configurations across development, staging, and production environments can be difficult. Configuration drift and differences in networking policies might lead to unexpected behavior when moving applications between environments.
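
Configuration drift between environments can be detected by comparing their settings field by field. The sketch below is one minimal way to do that for nested dictionaries; the `config_drift` function and the `staging` and `production` settings are hypothetical and do not correspond to any real Kubernetes export format.

```python
def config_drift(reference, candidate, path=""):
    """List dotted paths where two nested dict configs differ."""
    diffs = []
    for key in sorted(set(reference) | set(candidate)):
        here = f"{path}.{key}" if path else str(key)
        if key not in candidate:
            diffs.append(f"{here}: missing in candidate")
        elif key not in reference:
            diffs.append(f"{here}: only in candidate")
        elif isinstance(reference[key], dict) and isinstance(candidate[key], dict):
            diffs.extend(config_drift(reference[key], candidate[key], here))
        elif reference[key] != candidate[key]:
            diffs.append(f"{here}: {reference[key]!r} != {candidate[key]!r}")
    return diffs


# Hypothetical settings exported from two environments.
staging = {"cni": "calico", "mtu": 1450, "policy": {"default_deny": True}}
production = {"cni": "calico", "mtu": 1500, "policy": {"default_deny": False}}
for line in config_drift(staging, production):
    print(line)
```

Running a check like this as part of a deployment pipeline surfaces differences, such as a mismatched MTU or a missing default-deny policy, before they cause unexpected behavior.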

To address these limitations and challenges, it's important to thoroughly plan your Kubernetes networking strategy, choose appropriate networking solutions, and stay up-to-date with best practices and tools within the Kubernetes ecosystem. Additionally, collaborating with experienced network engineers and Kubernetes administrators can help navigate these complexities effectively.

Conclusion

- Advanced Kubernetes networking involves various concepts to enhance communication, security, and scalability.

- Network overlays provide virtual networks for efficient pod-to-pod communication.

- Service meshes offer traffic management, security, and observability for microservices.

- Network policies ensure controlled communication between pods.

- Ingress controllers and load balancers manage external access to services.

- Integrating external networking solutions improves connectivity to external resources.

- Monitoring network performance is essential for cluster health.

- Best practices include security planning, optimal use of load balancers, and thoughtful implementation of multi-cluster networking.

- Challenges include complexity, potential performance overhead, and compatibility issues.