Kubernetes and Containerization

Overview

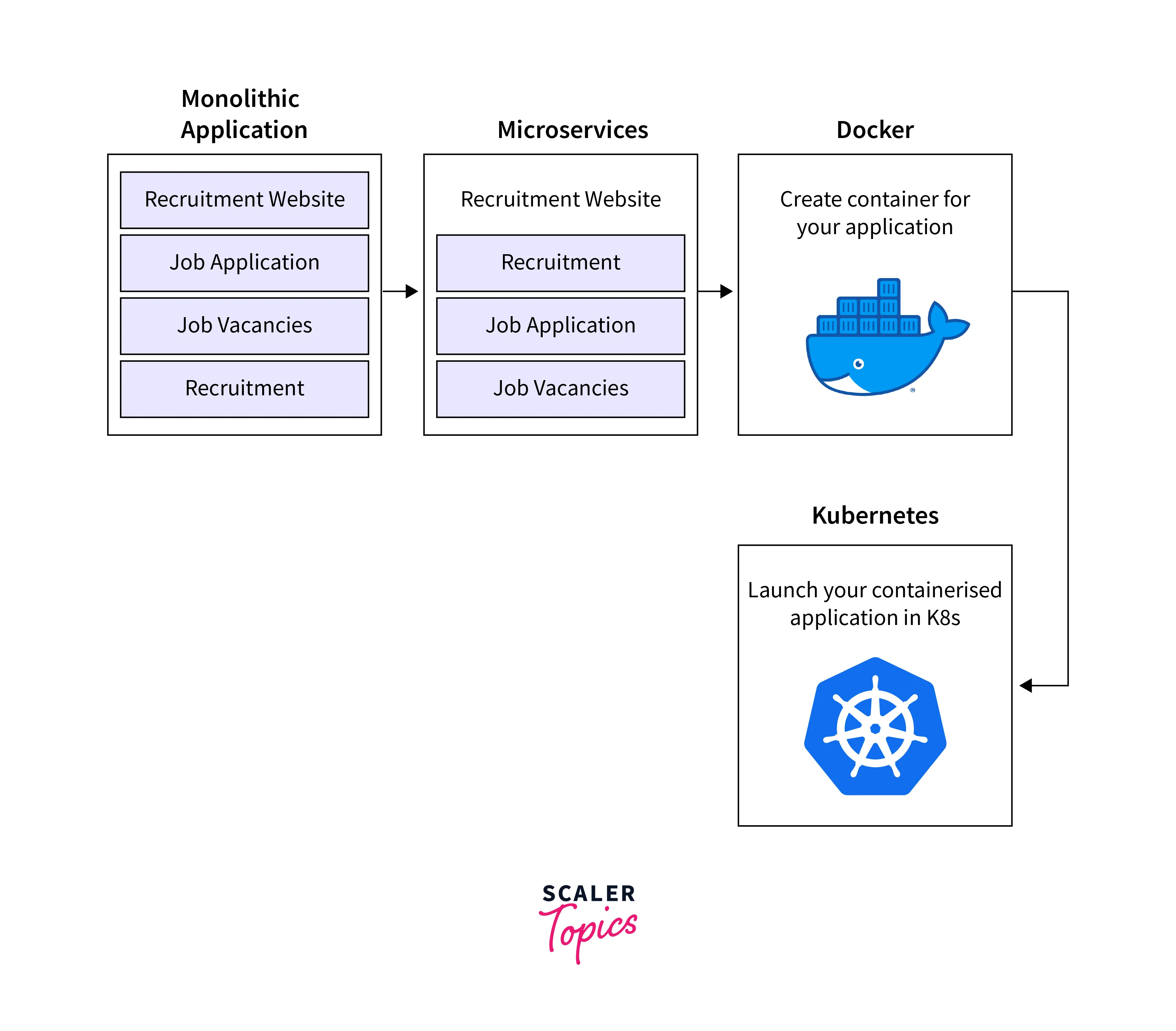

Containerization has transformed application deployment. Unlike traditional deployments, in which an application depends on whatever happens to be installed on its host, a container packages everything the application needs (code, runtime, and dependencies) into a single portable unit. Kubernetes, an open-source platform, streamlines the deployment, scaling, and management of these containerized applications. Together, containerization and Kubernetes orchestration have reshaped how applications are deployed, managed, and scaled. This blog explores the fundamental concepts of containerization and Kubernetes, their benefits, key considerations, and their synergy with HPE solutions.

What is a Kubernetes Container?

Containers mark a significant departure from traditional application deployment. Previously, applications were often placed in separate virtual machines (VMs) to avoid dependency conflicts, but because each VM runs its own full operating system, this wasted resources. Containers take a different approach: a container runtime hosts many applications on a single operating system, with each application running in its own isolated container, and Kubernetes orchestrates these containers across machines. This isolation shields applications from each other's changes and dependencies, so they can run side by side without interfering.

Kubernetes runs containers from container images: self-contained application packages that hold everything needed to run, including code, runtime, essential libraries, and default settings. This simplifies deployment and improves resource efficiency compared with the VM-per-application model, because containers share the host OS kernel instead of each carrying a full operating system.

Benefits:

- Isolation and Portability: Containers encapsulate applications and their dependencies, ensuring consistent behavior across various environments, from development to production.

- Efficiency: Containers share the host OS kernel, resulting in minimal overhead compared to traditional virtualization. This enables higher density and efficient resource utilization.

- Rapid Deployment: Containers can be spun up or down in a matter of seconds, enabling faster application deployment and scaling to meet demand spikes.

- Consistency: Containers eliminate the "it works on my machine" problem by ensuring consistent behavior across different development and deployment stages.

What is Containerization in Kubernetes?

Containerization in Kubernetes means deploying and managing containerized applications on the Kubernetes platform. Kubernetes abstracts away the underlying infrastructure and provides a declarative model: you describe the desired state of an application (for example, which image to run and how many copies), and Kubernetes orchestrates containers across a cluster of machines, continuously working to make the actual state match that desired state.
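
To make the declarative model concrete, here is a minimal Python sketch of a reconciliation step. It is a toy model, not Kubernetes' actual controller code: `ClusterState` and `reconcile` are hypothetical names, and starting or stopping replicas is only simulated by updating a dictionary. The shape of the logic, however, matches the idea above: compare the desired state with the actual state and act on the difference.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterState:
    """Toy model of a cluster: app name -> number of running replicas."""
    running: dict[str, int] = field(default_factory=dict)


def reconcile(desired: dict[str, int], state: ClusterState) -> list[str]:
    """Bring the (simulated) actual state in line with the desired state.

    Returns a log of the actions that were taken.
    """
    actions = []
    for app, want in sorted(desired.items()):
        have = state.running.get(app, 0)
        if have < want:
            actions.append(f"start {want - have} replica(s) of {app}")
        elif have > want:
            actions.append(f"stop {have - want} replica(s) of {app}")
        state.running[app] = want
    # Apps that are running but no longer declared are removed entirely.
    for app in sorted(set(state.running) - set(desired)):
        actions.append(f"stop {state.running.pop(app)} replica(s) of {app}")
    return actions


if __name__ == "__main__":
    state = ClusterState(running={"web": 1, "legacy": 2})
    print(reconcile({"web": 3, "api": 2}, state))
    print(reconcile({"web": 3, "api": 2}, state))  # already converged: []
```

Running the same reconciliation twice is harmless: once the actual state matches the declaration, the second pass finds nothing to do. That idempotence is what lets a controller loop repeat indefinitely.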


How Does it Work?

Kubernetes uses container technology to package, deploy, and manage applications efficiently and at scale. As an orchestration framework, it automates the deployment, scaling, and maintenance of containerized applications across a cluster of servers. Let's explore how Kubernetes containerization works.

- Defining Application Configuration: Developers describe the intended configuration of their applications in Kubernetes manifests, which are usually written in YAML (JSON is also supported). A manifest specifies how the application should run, including the number of replicas, the resources it needs, and its networking and storage requirements, among other settings.

- Container Images: A container image packages the application itself: program code, runtime, dependencies, and the libraries it needs to run. Images are stored in a container registry, either a public one such as Docker Hub or a private registry.

- Creating Pods: In Kubernetes, a pod is the smallest deployable unit. A pod hosts one or more containers that share the same IP address and network namespace and can share storage volumes. Containers in the same pod can communicate with each other over localhost.

- Control Plane and Scheduler: The control plane (traditionally called the master node) manages the cluster and all of its components. When an application is deployed, the Kubernetes scheduler assigns each of its pods to a worker node, based on the resources available on each node and any constraints the pod specifies.

- Worker Nodes: Worker nodes are the machines in the cluster that run the actual containers. Each node uses a container runtime, such as containerd or CRI-O, to pull images and run containers. (Kubernetes removed its built-in Docker Engine integration, dockershim, in version 1.24.)

- Controller Managers: The controller manager runs Kubernetes' controllers, which keep applications in their desired state. Each controller watches the cluster's current state, compares it with the desired state declared in the manifests, and makes adjustments as needed, for example by replacing a pod that has failed.
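
The configuration described above could look like the following minimal sketch, which builds an `apps/v1` Deployment manifest as a Python dictionary and prints it as JSON. The app name `web`, the image `registry.example.com/web:1.0`, the port, and the resource values are hypothetical placeholders. The Deployment declares how many replicas of a pod template should run; the scheduler places those pods using the container's resource requests, and Kubernetes' controllers keep the number of running pods at the declared value.

```python
import json


def make_deployment(name: str, image: str, replicas: int = 3,
                    cpu: str = "250m", memory: str = "256Mi",
                    port: int = 8080) -> dict:
    """Build an apps/v1 Deployment manifest as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,                 # desired number of pods
            "selector": {"matchLabels": labels},  # which pods this Deployment manages
            "template": {                         # pod template used for every replica
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,           # pulled from a container registry
                        "ports": [{"containerPort": port}],
                        "resources": {
                            # requests inform the scheduler's placement decision;
                            # limits cap what the container may consume
                            "requests": {"cpu": cpu, "memory": memory},
                            "limits": {"cpu": cpu, "memory": memory},
                        },
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = make_deployment("web", "registry.example.com/web:1.0")
    print(json.dumps(manifest, indent=2))
```

Saved to a file, the printed output can be applied with `kubectl apply -f web.json`, since kubectl accepts JSON as well as YAML manifests. The selector labels must match the pod template's labels, which is why both are built from the same `labels` dictionary.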

Key Considerations in Kubernetes Containerization

Kubernetes changes how we deploy and manage applications through containerization. Its capabilities are powerful, but good results depend on a few strategic decisions. Here are the essential aspects to consider when adopting Kubernetes containerization:

- Architecture Design: Kubernetes is most effective when applications are designed for containers from the start. Consider a microservices architecture, in which an application is divided into smaller, independent components. This modular structure aligns well with containerization, because each component can be scaled, updated, and maintained individually.

- Networking and Security: Containerized applications share the same infrastructure, which calls for strict network policies and security configurations. Kubernetes provides NetworkPolicy resources for defining which pods may communicate with each other; these rules take effect only when the cluster's network plugin supports them.

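- Resource Management: Careful resource management is critical for efficient containerization. Over-provisioning wastes capacity, while under-provisioning can starve applications of CPU and memory. Kubernetes lets you set resource requests and limits on each container, cap the total resources a namespace can consume with ResourceQuotas, and enforce per-container defaults and bounds with LimitRanges.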
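
As a simplified illustration of how a ResourceQuota caps a namespace: Kubernetes rejects a new pod whose resource requests would push the namespace's total past the quota's hard limits. The Python sketch below models only that check. The helper names are hypothetical, each pod is reduced to its total requests, and the quantity parsing covers just a few suffixes rather than the full Kubernetes quantity format.

```python
# Minimal sketch of a ResourceQuota-style admission check (hypothetical helpers).

_MEM_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def cpu_millicores(q: str) -> int:
    """Convert a CPU quantity to millicores: '500m' -> 500, '2' -> 2000.

    Simplified: handles only plain cores and the 'm' suffix.
    """
    return int(q[:-1]) if q.endswith("m") else round(float(q) * 1000)


def memory_bytes(q: str) -> int:
    """Convert a memory quantity to bytes: '256Mi' -> 268435456.

    Simplified: handles only Ki/Mi/Gi suffixes and plain byte counts.
    """
    for suffix, factor in _MEM_UNITS.items():
        if q.endswith(suffix):
            return int(q[: -len(suffix)]) * factor
    return int(q)


def fits_quota(hard: dict[str, str], existing: list[dict[str, str]],
               new_pod: dict[str, str]) -> bool:
    """Return True if admitting new_pod keeps total requests within the quota.

    `hard` mirrors a ResourceQuota's spec.hard, e.g.
    {"requests.cpu": "2", "requests.memory": "4Gi"}; each pod is reduced to
    its total requests, e.g. {"cpu": "500m", "memory": "512Mi"}.
    """
    pods = existing + [new_pod]
    total_cpu = sum(cpu_millicores(p["cpu"]) for p in pods)
    total_mem = sum(memory_bytes(p["memory"]) for p in pods)
    return (total_cpu <= cpu_millicores(hard["requests.cpu"])
            and total_mem <= memory_bytes(hard["requests.memory"]))


if __name__ == "__main__":
    hard = {"requests.cpu": "2", "requests.memory": "4Gi"}
    running = [{"cpu": "500m", "memory": "1Gi"}, {"cpu": "1", "memory": "2Gi"}]
    print(fits_quota(hard, running, {"cpu": "500m", "memory": "512Mi"}))  # True
    print(fits_quota(hard, running, {"cpu": "750m", "memory": "512Mi"}))  # False
```

In the example, the running pods already request 1500m of CPU, so a pod requesting 500m exactly reaches the 2-core quota and is admitted, while one requesting 750m (2250m in total) is rejected.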

Successful Kubernetes containerization combines sound architecture, security foresight, resource optimization, and vigilant monitoring. Keeping these considerations in mind helps you realize the full potential of containerization within Kubernetes and lets your applications thrive in the dynamic world of modern software deployment.

Why Kubernetes Containerization?

Kubernetes containerization offers several compelling advantages:

- Scalability: Kubernetes can automatically scale applications up or down based on demand, ensuring optimal resource utilization.

- Multi-Cloud Deployment: Kubernetes abstracts away the underlying infrastructure, making it easier to deploy applications across different cloud providers.

- Resource Efficiency: Kubernetes' scheduling and resource allocation place containers on nodes according to their declared resource needs, letting containerized applications make much better use of available hardware. This reduces the idle capacity common in traditional deployments, where each application often has its own server or VM.

- Fast Deployment: Kubernetes accelerates application deployment by leveraging container images. These images contain everything an application needs to run, from code to dependencies. As a result, spinning up new instances is a matter of seconds, drastically reducing time-to-market.

- Horizontal Scaling: Kubernetes scales horizontally by adding or removing pod replicas in response to load. With a HorizontalPodAutoscaler configured, it adjusts the replica count based on observed metrics such as CPU utilization, so your application can absorb sudden spikes in demand without manual intervention.
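
Kubernetes documents the HorizontalPodAutoscaler's core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The Python sketch below implements that formula, the default tolerance of 0.1 within which no scaling happens, and clamping to the autoscaler's minimum and maximum replica counts. The function name and the default bounds of 1 and 10 are assumptions for illustration; the real controller also handles stabilization windows, pod readiness, and multiple metrics, all omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Replica count suggested by the HorizontalPodAutoscaler's core formula."""
    ratio = current_metric / target_metric
    # If the ratio is close enough to 1.0, leave the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the bounds configured on the autoscaler.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(4, 90, 60))   # 6: ratio 1.5 scales out
    print(desired_replicas(4, 63, 60))   # 4: ratio 1.05 is within tolerance
    print(desired_replicas(8, 180, 60))  # 10: 24 is capped at max_replicas
```

For example, 4 replicas averaging 90% CPU against a 60% target give a ratio of 1.5, so the autoscaler would aim for 6 replicas. Rounding up with `ceil` errs on the side of extra capacity.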

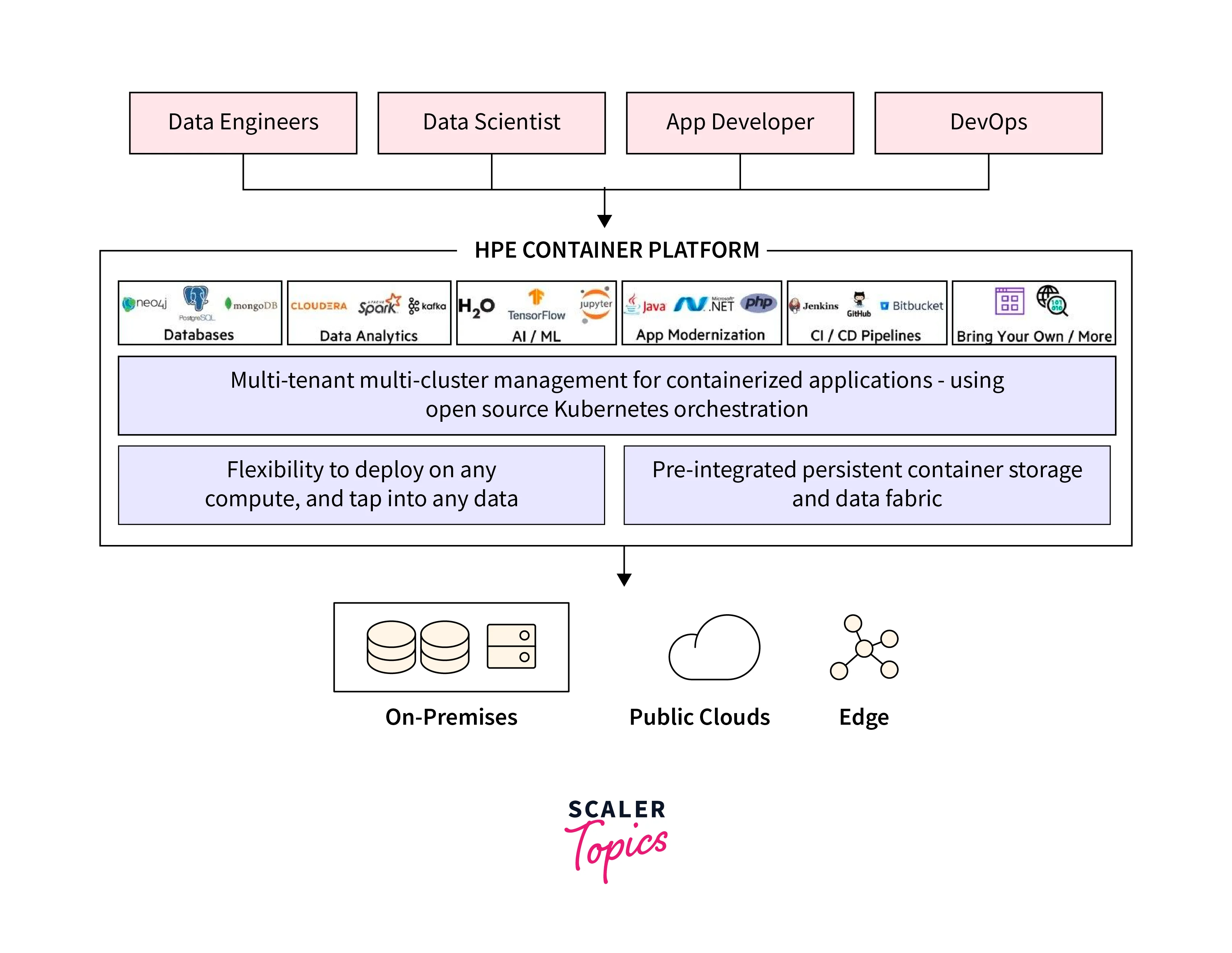

Kubernetes Containers and HPE

Pairing Kubernetes containers with Hewlett Packard Enterprise (HPE) solutions brings together Kubernetes' container orchestration and HPE's infrastructure offerings to streamline application deployment, scaling, and management.

Kubernetes empowered by HPE: The combination of Kubernetes and HPE is not just about tools; it is about maximizing the potential of containerization. HPE's offerings can strengthen Kubernetes deployments in several ways:

- Seamless Scalability: HPE's scalable infrastructure solutions complement Kubernetes' automatic scaling, enabling applications to effortlessly expand to meet demand spikes without compromising performance.

- Enhanced Reliability: HPE's reliability solutions, coupled with Kubernetes' self-healing capabilities, form a formidable defense against application failures. This ensures the uninterrupted availability of critical services.

- Optimized Resource Utilization: The synergy between Kubernetes' resource management and HPE's infrastructure optimization leads to efficient resource allocation. This results in higher performance levels and cost-effective operations.

- Innovation Acceleration: The amalgamation of Kubernetes' agility and HPE's innovation ecosystem accelerates the development and deployment of new applications, enabling businesses to remain at the forefront of their industries.

FAQs

Q. What role does Kubernetes play in containerization?

A. Kubernetes is an open-source platform for container orchestration. It automates the deployment, scaling, and management of containerized applications across a cluster of machines. By abstracting away the complexity of the underlying infrastructure, Kubernetes lets developers concentrate on application logic.

Q. What considerations are essential for successful Kubernetes containerization?

A. Key considerations include architecture design suited to containers, networking and security configurations, efficient resource management, and robust monitoring and logging practices. Together, these aspects support reliable deployment, security, scalability, and performance.

Conclusion

- Combining containerization and Kubernetes has revolutionized application deployment, management, and scalability.

- Containerization addresses traditional challenges by encapsulating applications and their dependencies into portable units. Paired with Kubernetes' orchestration capabilities, this dynamic duo simplifies complex deployment tasks.

- Kubernetes shines as an open-source platform for managing containerized applications. Its automation and abstraction of infrastructure intricacies empower applications to scale seamlessly, self-heal, and update efficiently.

- The collaboration of Kubernetes with Hewlett Packard Enterprise (HPE) augments this synergy, offering enhanced scalability, reliability, resource optimization, and innovation.

- As technology evolves, this partnership between containers, Kubernetes, and innovative solutions like HPE promises to drive businesses toward success in the ever-changing digital landscape.