Kubernetes Ecosystem

Overview

Kubernetes manages containers across clusters, nodes, and Pods through a control plane. Key control plane components include kube-apiserver, etcd, kube-scheduler, and kube-controller-manager. Add-ons extend cluster functionality, while the wider ecosystem covers communication, deployments, and app health. Security tools such as KubeLinter help protect clusters, and multi-cluster tools streamline administration. Future trends include AI/ML, cloud migration, and IoT edge computing.

Core Components of Kubernetes

Deploying Kubernetes creates a cluster, in which worker nodes host the Pods that run containerized applications. The control plane manages the worker nodes and Pods and, in production, is usually distributed across multiple computers for fault tolerance and high availability. Workloads are likewise spread across multiple nodes for efficient application workload management.

Control Plane Components:

The control plane makes global decisions about the Kubernetes cluster, such as scheduling, and detects and responds to cluster events. Control plane components can run on any machine in the cluster, but they are typically placed on dedicated control plane nodes that do not run user containers.

Key Control Plane Components:

- kube-apiserver: Exposes the Kubernetes API and serves as the entry point to the control plane.

- etcd: A consistent and highly available key-value store that holds all cluster data.

- kube-scheduler: Assigns Pods to nodes, selecting an appropriate node for each newly created Pod.

- kube-controller-manager: Runs the controller processes. Logically, each controller is a separate process, but to reduce complexity they are all compiled into a single binary and run in a single process.

- cloud-controller-manager: Manages cloud-specific control logic. It separates the components that interact with your cloud provider from those that interact only with the cluster, and it runs only the controllers relevant to that provider.
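To make the kube-scheduler's job more concrete, here is a minimal Python sketch of the two-phase idea of filtering out nodes that cannot fit a Pod and then scoring the remaining ones. The `Node` and `PodRequest` classes, the node names, and the "most free resources" scoring rule are all illustrative assumptions; the real scheduler uses many more criteria.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_millis: int  # unallocated CPU, in millicores
    free_mem_mib: int     # unallocated memory, in MiB


@dataclass
class PodRequest:
    name: str
    cpu_millis: int
    mem_mib: int


def schedule(pod, nodes):
    """Return the name of the chosen node, or None if no node fits."""
    # Filtering: keep only nodes with enough free CPU and memory.
    feasible = [
        n for n in nodes
        if n.free_cpu_millis >= pod.cpu_millis and n.free_mem_mib >= pod.mem_mib
    ]
    if not feasible:
        return None  # in a real cluster the Pod would stay Pending

    # Scoring (hypothetical rule): prefer the node with the most
    # resources left over after the Pod is placed.
    def score(n):
        return (n.free_cpu_millis - pod.cpu_millis) + (n.free_mem_mib - pod.mem_mib)

    return max(feasible, key=score).name


nodes = [
    Node("node-a", 500, 1024),
    Node("node-b", 2000, 4096),
    Node("node-c", 250, 8192),
]
print(schedule(PodRequest("web", 300, 512), nodes))
```

In this toy example, node-c is filtered out because it lacks CPU, and node-b outscores node-a, so the Pod lands on node-b.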

Node Components:

Each node in the cluster runs components that maintain running Pods and provide the Kubernetes runtime environment.

- kubelet: An agent that runs on every node and ensures that the containers within Pods are operational. It accepts a set of PodSpecs provided through various mechanisms and ensures that the containers they describe are running and healthy. It manages only containers created by Kubernetes.

- kube-proxy: A network proxy that runs on every node and implements part of the Kubernetes Service concept. It maintains network rules on nodes, and those rules allow network communication to Pods from inside or outside the cluster.

- Container Runtime: Responsible for executing containers. Numerous container runtimes, including containerd, CRI-O, and others adhering to the Kubernetes CRI (Container Runtime Interface) standard, are supported by Kubernetes.

Add On Components:

Add-ons are extensions that enhance cluster functionality using Kubernetes resources (DaemonSet, Deployment, etc.). Because they provide cluster-level features, add-ons are usually located in the kube-system namespace.

- DNS (Cluster DNS): While other add-ons are optional, practically every Kubernetes cluster should have cluster DNS. It is a DNS server, in addition to the other DNS servers in your environment, that serves DNS records for Kubernetes Services. Containers started by Kubernetes use this DNS server for their DNS queries.

- Web UI (Dashboard): A web-based UI for managing and debugging Kubernetes clusters and applications. Visibility into both the cluster and its applications is provided.

- Container Resource Monitoring: Gathers time-series metrics on container resource usage. Provides a user interface for analyzing and evaluating container metrics.

- Cluster-level Logging: Saves container logs to a central log store that offers a search and browsing interface.

- Network Plugins: Supports the container network interface (CNI) specification. Manages the assignment of IP addresses to pods and supports pod communication inside the cluster.

Container Runtimes

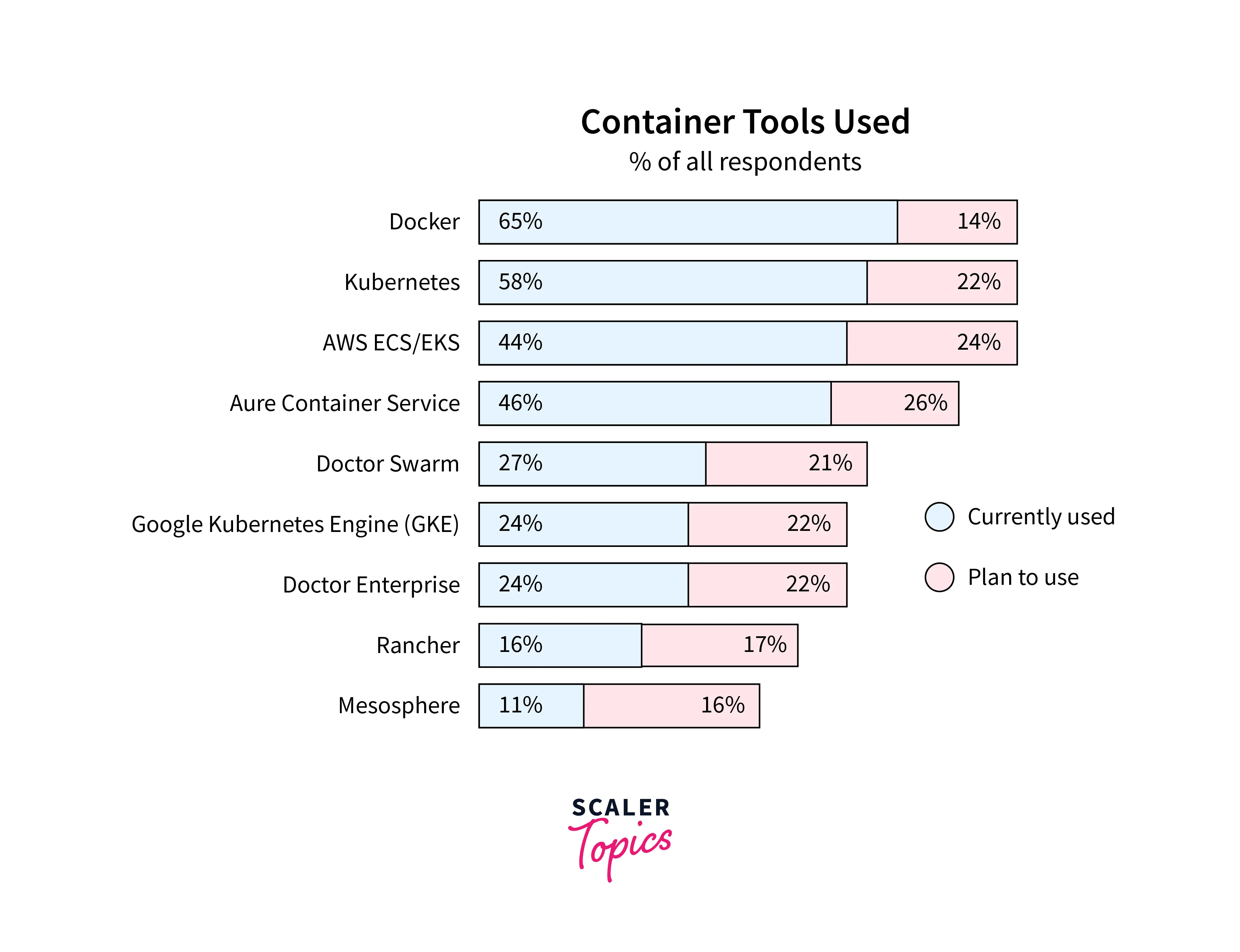

A container runtime, also known as a container engine, is software that enables the execution of containers on a host operating system. Its responsibilities include loading container images, monitoring local resources, isolating system resources for containers, and controlling container lifecycles.

Container Orchestration and Runtimes:

Container orchestrators and container runtimes work together in a containerized architecture. The orchestrator manages clusters of containers, addressing scalability, networking, and security. The container engine manages the containers on each computing node in the cluster.

Types of Container Runtimes

- Low-Level Container Runtimes

- The Open Container Initiative (OCI) is a Linux Foundation project launched in 2015 by Docker and other industry vendors. Its goal is to establish open standards for Linux containers.

- runC, which Docker donated to the OCI and which was released in 2015, is a low-level container runtime that adheres to the OCI specification.

- runC serves as a foundational component for various container runtime engines. The OCI runtime specification focuses on container lifecycle management.

The following are the most common low-level runtimes:

- runC: Originally developed by Docker and donated to the OCI, runC is currently the de facto standard low-level container runtime. It is written in Go and maintained under the Open Container Initiative.

- crun: An OCI runtime implementation led by Red Hat. It is written in C, is intended to be lightweight and performant, and was one of the first runtimes to support cgroups v2.

- containerd: An open-source daemon, available on Linux and Windows, that manages container lifecycles through API requests. Its API provides a layer of abstraction and improves container portability. It is often classified as a high-level runtime, because it delegates container execution to a low-level runtime such as runC.

- High-Level Container Runtimes

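- Docker (containerd):

- Offers a comprehensive range of features, with both free and paid options.

- Was long the most widely used container runtime for Kubernetes. Since dockershim was removed in Kubernetes 1.24, clusters typically use containerd, the runtime underlying Docker, directly.

- Includes image specifications, a CLI, and an image-building service.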

- CRI-O:

- An open-source container runtime interface (CRI) implementation for Kubernetes, providing a lightweight alternative to rkt and Docker.

- It allows you to execute pods using OCI-compatible runtimes, particularly runC and Kata (but any OCI-compatible runtime may be used).

Package Managers:

Kubernetes lacks the kind of built-in package manager found in operating systems. However, its ecosystem provides several ways to manage apps and their dependencies:

- Helm:

- Helm is frequently referred to as the "package manager for Kubernetes."

- It allows you to create, install, and administer Kubernetes applications using "Helm charts," which are bundles of pre-configured Kubernetes resources.

- Helm streamlines application deployment, version control, and configuration.

- Example: Installing an Application using Helm:

- kubectl apply:

- Although not a package manager, kubectl apply is a command for applying configuration manifests (YAML or JSON files) to a Kubernetes cluster.

- It creates and updates resources declaratively from those manifests, making it a core way to manage applications.

- Example: Applying a Deployment Manifest:
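As a minimal sketch of such a manifest, the Python snippet below builds a Deployment as a dictionary and prints it as JSON, one of the formats kubectl apply accepts. The name `hello-web`, the `nginx:1.25` image, and the replica count are placeholder assumptions, not values from this article.

```python
import json


def deployment_manifest(name, image, replicas=2):
    """Build a minimal apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the Pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Placeholder values chosen for illustration only.
manifest = deployment_manifest("hello-web", "nginx:1.25")
print(json.dumps(manifest, indent=2))
```

Saved to a file such as deployment.json, the output could then be applied with `kubectl apply -f deployment.json`.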

- Operators:

- Operators are Kubernetes-native applications that extend the cluster's capabilities, typically through custom resources and controllers.

- They encode the operational knowledge needed to run and administer a specific application, automating common operations and providing higher-level abstractions.

- Example: Installing a Database Operator:

Service Meshes

A service mesh is an infrastructure layer in a microservice architecture that provides observability, security, and reliability for applications. It enables secure, encrypted communication between containers or Pods, helping to overcome communication issues between services.

Service Mesh:

- Comprises Layer 7 proxies, not actual services.

- Used to address challenges of communication between microservices.

- Provides abstraction for network complexities within a Kubernetes cluster.

- Resolves issues related to remote endpoint communication.

- Abstracts inter-process and service-to-service communication, much as containers abstract the operating system from applications.

Kubernetes Service Mesh:

- Adds security, observability, and reliability features at the platform layer.

- Addresses challenges of microservices communication within Kubernetes.

- Service mesh technology predates Kubernetes but has gained prominence due to Kubernetes-based microservices.

- Microservices architectures heavily depend on network communication.

- Service mesh manages network traffic between services efficiently.

- Reduces operational burden compared to other network traffic management methods.

- Implemented as a set of network proxies in Kubernetes.

- Proxies (data plane) manage communication and introduce service mesh features.

- Kubernetes (control plane) orchestrates dynamic scheduling of services.

- Essential for ensuring reliability, security, and performance of complex service-to-service communications in cloud-native applications.

Networking Solutions

Network architecture is one of the most difficult aspects of Kubernetes. The Kubernetes networking model requires specific network capabilities but allows significant implementation freedom. As a consequence, several projects addressing specific environments and requirements have emerged.

Common CNI plugins include Flannel, Calico, Weave, and Canal. They implement container networking for Kubernetes and give cluster admins a range of networking functionality.

By default, Docker can set up the following networks for a container:

- None: Adds the container to a container-specific network stack with no connection.

- Host: Adds the container to the host machine's network stack with no isolation.

- Default Bridge: The default networking mode. Containers on this network can communicate with one another using their IP addresses.

- Custom Bridge: User-defined bridge networks provide greater flexibility, isolation, and convenience features.

Docker also allows you to create more complex networking, such as multi-host overlay networking, using extra drivers and plugins.

Comparison of the CNI plugins Flannel and Calico in Kubernetes:

- Flannel:

- A mature and popular CNI plugin created by CoreOS that streamlines inter-container and inter-host networking.

- Simple to install and set up; it ships as a single binary, flanneld, and builds a layer 3 IPv4 overlay network.

- Each node has its own subnet for assigning IP addresses. For intra-host communication, the Docker bridge is used.

- Encapsulates communication in UDP packets for inter-host routing.

- Provides alternative encapsulation and routing backends; VXLAN is preferred for simplicity and performance.

- Calico:

- Focused on performance, flexibility, and power. Not just network connectivity, but also network security and administration.

- Uses BGP routing protocol for layer 3 network configuration.

- No need for additional encapsulation; simplifies troubleshooting.

- Easy deployment with a single manifest; optional network policy capabilities.

- Advanced features include robust network policy and Istio integration.

- Supports both service mesh and network infrastructure layer policy enforcement.
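The per-node subnet scheme described for Flannel can be illustrated with Python's standard `ipaddress` module. This is only a sketch of the idea: the `10.244.0.0/16` cluster range, the `/24` per-node size, and the node names are assumptions for the example, and real Flannel coordinates its subnet leases through a shared datastore rather than a local function.

```python
import ipaddress


def allocate_node_subnets(cluster_cidr, node_names, node_prefix=24):
    """Carve one subnet per node out of the cluster-wide Pod network."""
    network = ipaddress.ip_network(cluster_cidr)
    subnets = network.subnets(new_prefix=node_prefix)
    # Hand out subnets in order; each node gets a distinct, non-overlapping range.
    return {name: next(subnets) for name in node_names}


# Assumed example values, not taken from a real cluster.
allocation = allocate_node_subnets("10.244.0.0/16", ["node-a", "node-b", "node-c"])
for node, subnet in allocation.items():
    first_pod_ip = next(iter(subnet.hosts()))
    print(node, subnet, first_pod_ip)
```

Because each node owns a distinct range, a Pod's IP address alone identifies which node hosts it, which is what makes routing between hosts straightforward.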

Continuous Integration and Continuous Deployment (CI/CD) Tools

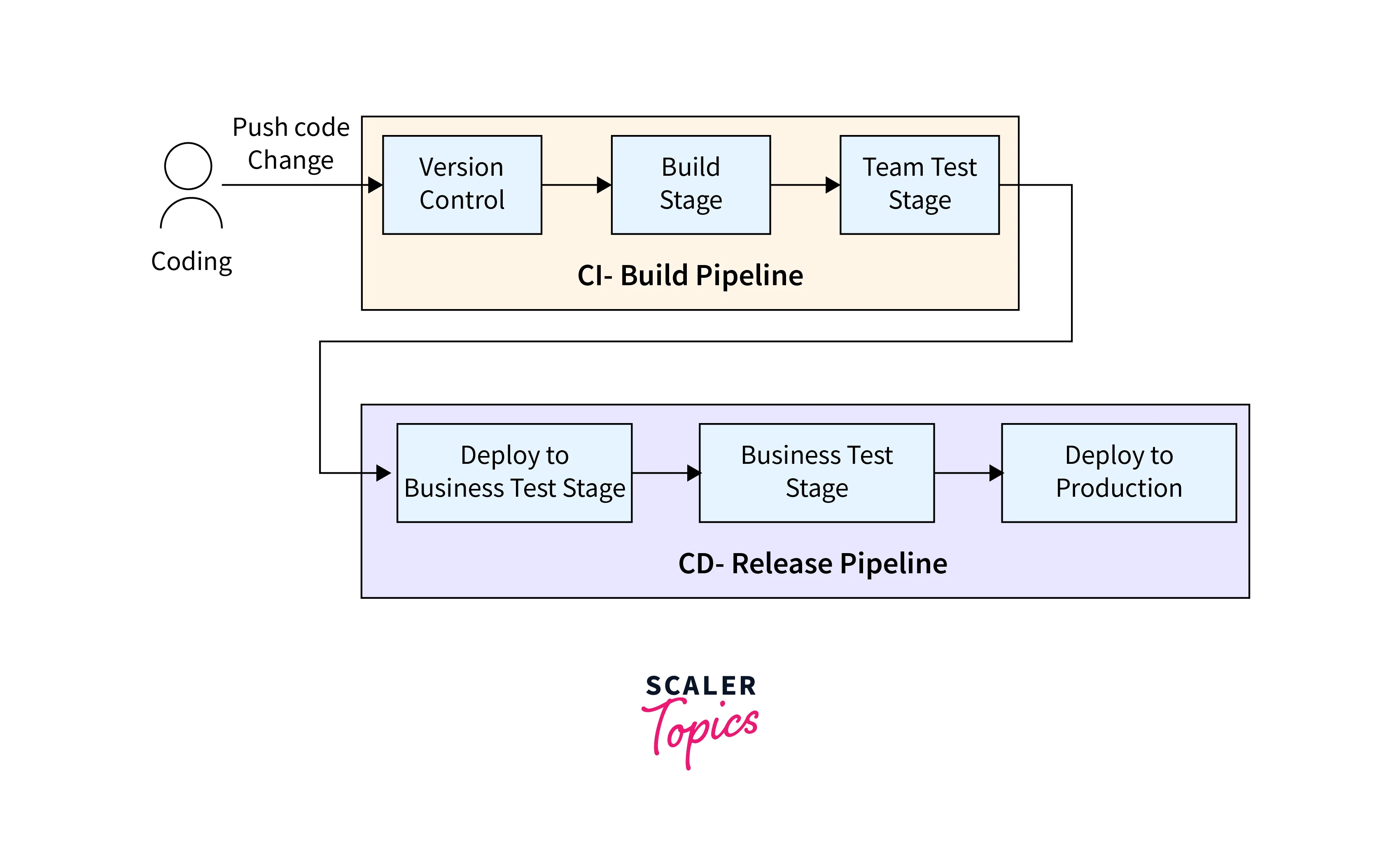

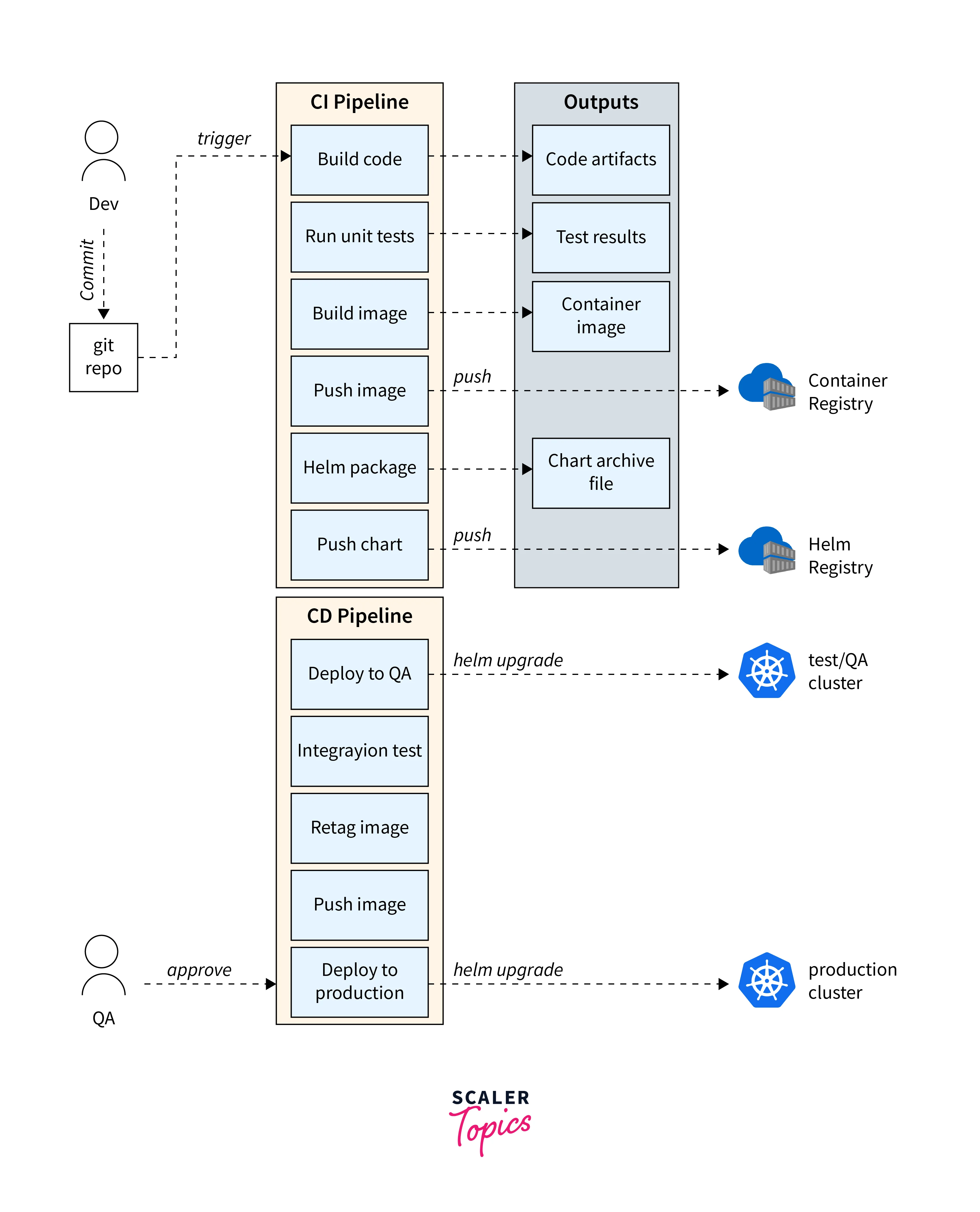

CI/CD aligns with Kubernetes' automation capabilities, giving teams autonomy in developing and deploying apps and enabling streamlined, cloud-native application lifecycle management.

- Use a pipeline to automate the stages from source code to deployment.

- Replace manual tasks such as source updates, compilation, testing, and deployment.

- Pipelines for Kubernetes need additional pieces such as a container registry, a configuration manager, Helm, and several cluster environments.

- Improve automation for more effective application development and deployment on Kubernetes.

- Establishing reliable CI/CD for microservices in Kubernetes Ecosystem aims to ensure quick, reliable releases without instability.

- The picture above presents an example CI/CD pipeline for deploying microservices to Azure Kubernetes Service (AKS), which can serve as a foundation for creating custom processes.

- CI/CD goals involve independent service deployment, automated changes to production-like environments, quality gates enforcement, and parallel new version deployment.

- Assumptions include monorepo, trunk-based development, Azure Pipelines, Azure Container Registry, and Helm charts.

- Alternatives include Kustomize instead of Helm and GitHub Actions instead of Azure Pipelines.

- Pipeline stages include validation and full builds, with Docker-contained test runners.

- Container best practices entail naming conventions, image isolation, and least privilege.

- Helm charts simplify microservice management, supporting dynamic templates and versioned packaging.

- Helm charts can be stored in repositories like Azure Container Registry for structured deployment.

- Versioned Helm charts enable controlled deployments with change-cause annotations.

- Azure Pipelines handles CI/CD with build and release pipelines.

- Build pipelines create Docker containers and Helm charts.

- Release pipelines deploy Helm charts to different environments with manual approvals.

Kubernetes CI/CD Tools

- GitHub Actions:

- A CI/CD tool hosted by GitHub for software development, testing, and deployment.

- Focuses mostly on CI and may lack some CD features.

- Suitable for automating operations with GitHub source code.

- GitLab CI:

- CI/CD tool with full integration with GitLab.

- Build, test, validation, cluster management, and canary deployments are all available.

- Leading CI/CD pipeline solution for Kubernetes, excellent for GitLab users.

- Jenkins X:

- An opinionated CI/CD tool with a strong focus on Kubernetes.

- Provides full CI/CD for Kubernetes apps.

- Extendable with other tools and cloud providers.

- May need to mature further before it suits demanding Kubernetes CI/CD workloads.

- Argo CD:

- A declarative continuous delivery tool for Kubernetes.

- Implements the GitOps pattern by retrieving app definitions from the source repository.

- Installed directly in a Kubernetes cluster and operates on the cluster side.

- Well-adopted, with an active community, and suited for GitOps deployments.
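The GitOps pattern mentioned for Argo CD boils down to comparing the desired state stored in Git with the live state of the cluster and acting on the difference. The Python sketch below illustrates that comparison only; the `plan_sync` function, the app names, and the dictionary layout are hypothetical, and this is not Argo CD's actual algorithm or API.

```python
def plan_sync(desired, live):
    """Compare desired state (from Git) with live state (from the cluster).

    Both arguments map a resource name to its spec. Returns a list of
    (action, name) tuples describing what a sync would have to do.
    """
    actions = []
    for name, spec in sorted(desired.items()):
        if name not in live:
            actions.append(("create", name))   # declared in Git, missing in cluster
        elif live[name] != spec:
            actions.append(("update", name))   # drifted from what Git declares
    for name in sorted(live):
        if name not in desired:
            actions.append(("prune", name))    # running, but no longer declared
    return actions


# Hypothetical example states.
desired = {
    "web": {"image": "web:1.1", "replicas": 3},
    "api": {"image": "api:2.0", "replicas": 2},
}
live = {
    "web": {"image": "web:1.0", "replicas": 3},
    "worker": {"image": "worker:0.9", "replicas": 1},
}
print(plan_sync(desired, live))
```

Here the plan is to create api, update web, and prune worker, which brings the cluster back in line with the repository.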

Monitoring and Logging Solutions

Monitoring and logging are critical to ensuring the health, performance, and stability of your apps and infrastructure. The Kubernetes ecosystem offers numerous tools and solutions for both.

Monitoring Solutions:

- Prometheus: An open-source monitoring and alerting solution for your Kubernetes cluster and other targets. It has a versatile query language (PromQL) as well as basic visualization features.

- Grafana: Grafana is a popular open-source dashboard and visualization tool that is frequently used in combination with Prometheus. It enables you to construct personalized dashboards that display monitoring data.

- Datadog: A commercial monitoring and analytics tool with Kubernetes connections that provides insights into performance, logs, and traces.

- New Relic: Another commercial application and infrastructure monitoring solution that integrates with Kubernetes.

Logging Solutions:

- Elasticsearch, Fluentd, and Kibana (EFK): A popular stack for log gathering and analysis. Elasticsearch stores logs, Fluentd gathers and sends data, and Kibana offers a visual log exploration interface.

- Fluent Bit: An alternative to Fluentd that is a lightweight and efficient log collector and forwarder.

- Loki: Loki is a horizontally scalable, multi-tenant log aggregation system inspired by Prometheus that is part of the Grafana ecosystem. It is intended for low-cost log storage and searching.

- Splunk: A commercial log management and analysis system with Kubernetes integration.

Storage Solutions

An effective Kubernetes storage solution streamlines and simplifies storage management in Kubernetes environments while offering advantages including scalability, resilience, flexibility, automation, and performance.

Choosing the Best Storage Provider for Kubernetes

- StorageClasses: Quality of Service (QoS)

- Storage classes specify how Kubernetes apps can store data.

- Customizable in terms of performance and dependability.

- Developers define QoS requirements based on application requirements.

- Ensures adequate levels of performance and dependability.

- Storage vendors provide monitoring tools for visibility into performance.

- Multiple Data Access Modes:

- Access modes determine how pods and nodes interact with storage.

- RWO (ReadWriteOnce): The volume can be mounted as read-write by a single node.

- ROX (ReadOnlyMany): The volume can be mounted as read-only by many nodes at the same time.

- RWX (ReadWriteMany): The volume can be mounted as read-write by many nodes at the same time.

- RWOP (ReadWriteOncePod): The volume can be mounted as read-write by a single Pod, which has exclusive access.

- Important for maximizing flexibility and supporting a wide range of application circumstances.

- PV (Persistent Volume):

- A storage abstraction for managing and storing data outside of containers.

- Storage is decoupled from the container lifecycle, increasing flexibility.

- Persistent volume claims (PVCs) are used to request storage resources, which are then bound to a PV.

- Backup, restore, and data protection are all supported.

- Storage setup is decoupled from application deployment, facilitating mobility.

- Improves application deployment across several platforms and clouds.

- Dynamic Provisioning:

- Creates persistent volumes automatically when apps request them.

- Simplifies the deployment and administration of storage resources.

- Storage classes and policies are predefined.

- Storage capacity, performance, and redundancy are all specified by developers.

- Reduces expenses and waste while optimizing resource utilization.

- Reduces the complexity of application migration between Kubernetes clusters and environments.
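The access modes listed above can be modeled as a small decision function. The Python sketch below captures only the intent of each mode; the `can_mount` function and its arguments are hypothetical, and actual enforcement depends on Kubernetes and the storage driver in use.

```python
def can_mount(access_mode, existing_mounts, node, read_only=False):
    """Decide whether one more Pod on `node` may mount the volume.

    existing_mounts is a list of (node_name, pod_name) tuples for Pods
    that already use the volume. This is a simplified model.
    """
    nodes_in_use = {n for n, _pod in existing_mounts}
    if access_mode == "ReadWriteMany":
        return True                      # any node, read-write
    if access_mode == "ReadOnlyMany":
        return read_only                 # any node, but read-only mounts only
    if access_mode == "ReadWriteOnce":
        # Several Pods may share the volume only if they sit on the same node.
        return not nodes_in_use or nodes_in_use == {node}
    if access_mode == "ReadWriteOncePod":
        return not existing_mounts       # exactly one Pod in the whole cluster
    raise ValueError(f"unknown access mode: {access_mode}")


mounts = [("node-a", "pod-1")]
print(can_mount("ReadWriteOnce", mounts, "node-a"))
print(can_mount("ReadWriteOnce", mounts, "node-b"))
print(can_mount("ReadWriteOncePod", mounts, "node-a"))
```

The example shows the practical difference between RWO and RWOP: a second Pod on the same node is acceptable under RWO but not under RWOP.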

Security and Identity Management

Security and identity management cover the fundamental principles, practices, and technologies for ensuring safety and access control in containerized applications and clusters.

- Authorization and Authentication:

- Authentication is the process of verifying the identity of users, apps, or services that access the cluster. Certificates, tokens, and authentication providers such as LDAP, OIDC, and OAuth are all common authentication techniques.

- Authorization is the process of determining which activities and resources a user, application, or service is permitted to access. For authorization, Kubernetes RBAC (Role-Based Access Control) is typically utilized.

- RBAC (Role-Based Access Control):

To handle permissions and access control at a granular level, RBAC defines roles, role bindings, and cluster roles. It guarantees that only authorized entities have access to certain resources.

- Service Accounts:

Service accounts are used to grant particular rights to apps and pods operating in a Kubernetes cluster. They are used to authenticate and authorize workloads to access the Kubernetes API.

- Security Policies for Pods:

Pod Security Policies (PSPs) set security limits on Pods, restricting the use of certain security-sensitive features. PSPs were deprecated in Kubernetes 1.21 and removed in 1.25; Pod Security Admission and the Pod Security Standards now fill this role.

- Security for Pods and Nodes:

To limit privileges and improve the security posture of Pods and nodes, use mechanisms such as Pod Security Admission, the Pod security context, and the NodeRestriction admission controller.
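To illustrate how RBAC roles and role bindings combine into an access decision, here is a toy Python evaluator. The `Rule` class, the role name `pod-reader`, and the subject `alice` are made up for the example; real RBAC objects also carry API groups, namespaces, and resource names, which this sketch leaves out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    resources: tuple  # resource types the rule covers, e.g. ("pods",)
    verbs: tuple      # allowed verbs, e.g. ("get", "list")


# Hypothetical roles: role name -> list of rules.
roles = {
    "pod-reader": [Rule(resources=("pods",), verbs=("get", "list", "watch"))],
}

# Hypothetical role bindings: subject -> roles granted to it.
role_bindings = {
    "alice": ["pod-reader"],
}


def is_allowed(subject, verb, resource):
    """Return True if any role bound to the subject permits the request."""
    for role_name in role_bindings.get(subject, []):
        for rule in roles.get(role_name, []):
            if resource in rule.resources and verb in rule.verbs:
                return True
    # RBAC permissions are purely additive, so no match means denied.
    return False


print(is_allowed("alice", "list", "pods"))
print(is_allowed("alice", "delete", "pods"))
print(is_allowed("bob", "get", "pods"))
```

The key property shown is deny-by-default: a request succeeds only when some bound role explicitly grants the verb on the resource.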

KubeLinter:

- A static analysis tool for Kubernetes YAML files and Helm charts, akin to the Unix lint tool.

- Detects security misconfigurations and bad practices.

- Checks for mistakes, security flaws, wasted resources, and performance and availability problems.

- Can be integrated into automated CI/CD pipelines.

- Currently has 46 checks available.

- Development is in its early stages.

kube-bench:

- Based on benchmarks from the Center for Internet Security (CIS).

- Extensive static analysis is performed.

- Checks for system conformity to benchmarks.

- Runs statically or against an active cluster.

- Created by Aqua and released as open source.

kube-hunter:

- Python-based Kubernetes security tool.

- Provides 23 passive and 13 active tests.

- Runs dynamically, checking IP addresses, domain names, or networks.

- Probes for weaknesses using techniques such as port scanning and penetration testing.

- Released as open source by Aqua.

Falco:

- Originally created by Sysdig as a runtime security tool that monitors Linux system calls.

- Developed into a Kubernetes threat detection engine.

- Hooks into Kubernetes audit logging.

- Detects improper pod privileges and other Kubernetes-related risks.

- Hosted by the Cloud Native Computing Foundation (CNCF), where it was accepted as an incubating project.

Kubescape:

- Open-source tool developed by ARMO.

- Evaluates Kubernetes clusters, container content, and identifies risky deployments.

- Even experienced organizations continue to learn lessons about cluster security.

- JW Player's 2019 incident highlighted cluster security challenges.

- Kubescape could have prevented such breaches by detecting excessive container permissions during deployment.

- Kubescape integrates over 70 security safeguards.

- Informed by NSA, CISA, and Microsoft principles.

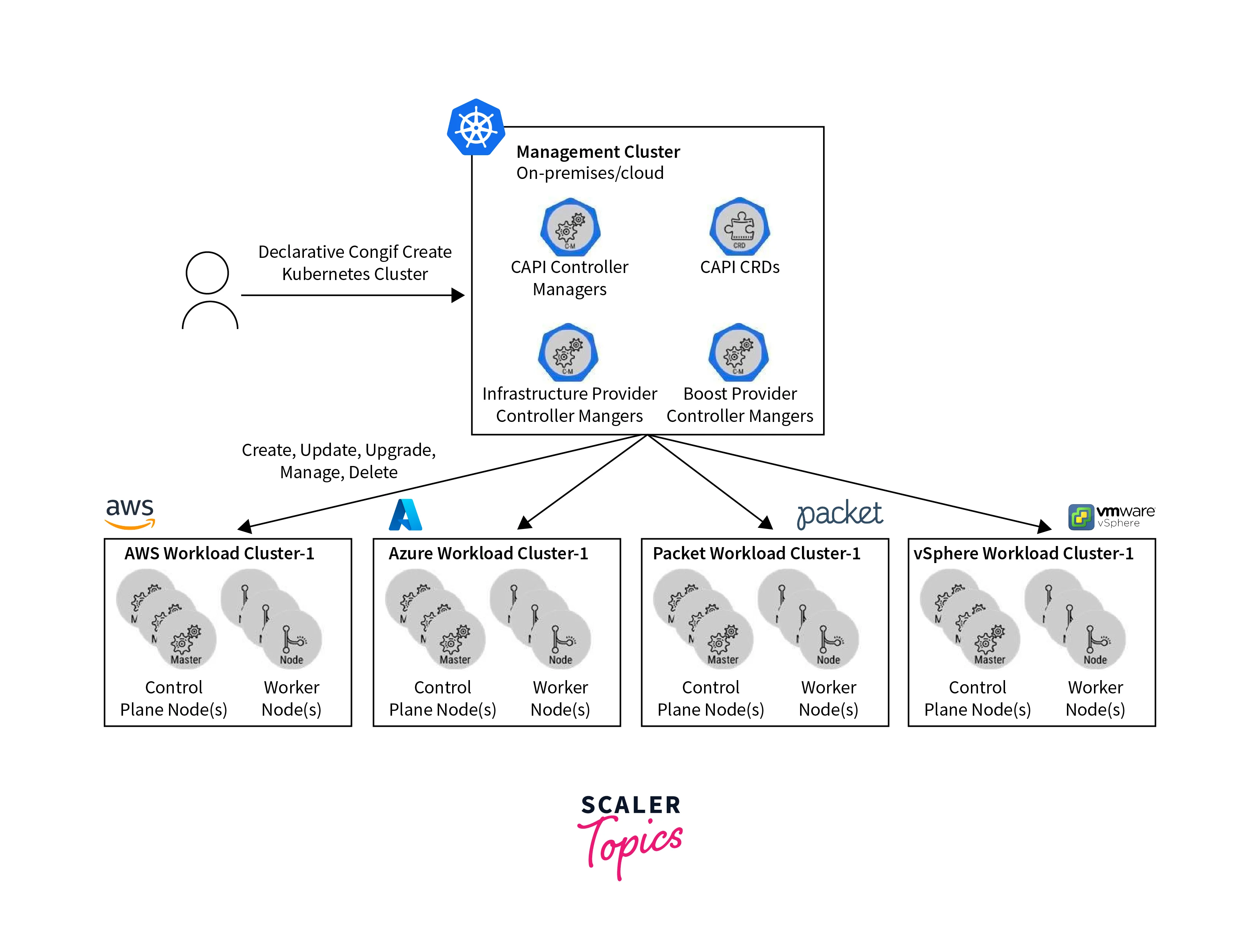

Multi-Cluster Management

Kubernetes deployments must often be highly distributed to meet the performance demands of current cloud-native apps. Proper multi-cluster administration ensures that operations are uniform across all environments and provides enterprise-grade security and workload control.

A graphic illustration of a Kubernetes multi-cluster architecture is shown below:

Leveraging Multiple Kubernetes Clusters:

- Multiple clusters solve various problem scenarios.

- Managing multiple clusters is challenging.

- Complexity increases as the number of clusters grows.

- Operations include adding/removing nodes, securing, upgrading, and maintenance.

- Complex nature at scale demands proper multi-cluster management.

Popular tools for Kubernetes multi-cluster management:

- Cluster Management:

- D2iQ Kommander

- Rancher

- Red Hat Advanced Cluster Management

- Deployment:

- CloudBolt

- Google Anthos

- Helm

- Kubespray

Advantages of Multi-Cluster Adoption:

Risk Mitigation:

- Security and compliance are ensured through policy-based governance.

- Security and configuration controls are monitored and enforced through automation.

- Maintains the desired state model in order to meet industry compliance requirements.

Cloud Locations or Geolocations:

- Improves performance across several data centers and clouds.

- Reduces latency, which improves the user experience.

- It improves disaster recovery capability.

Team-Based Structure:

- Smaller multi-clusters with several environments (dev, prod) per cluster.

- Separates issues and reduces the impact of unplanned events.

- Teams retain control of their resources.

- Across the organisation, Kubernetes technologies such as service discovery are used.

Future Trends and Emerging Projects

Kubernetes Trends to Watch in 2023

Kubernetes is extensively used for container orchestration, and its appeal remains strong. However, this does not mean that progress in the field of container orchestration has come to a standstill. The following recent Kubernetes trends are worth watching.

AI/ML and Kubernetes have Become a Powerful Couple

- AI and machine learning (ML) are two of the most prominent examples of the Kubernetes ecosystem's maturity and its ability to handle more complicated use cases.

- AI/ML workloads are increasingly being serviced in production using Kubernetes.

- AI and ML are influencing nearly every aspect of modern business, from improved customer interactions to improved data decisions and things like driverless car modelling.

The Cloud Migration accelerates the shift

- 94% of firms are currently using hybrid, multi-cloud, public, or private cloud services.

- Businesses also use containers to accelerate software delivery and make cloud migration more flexible.

- Gartner predicted that by 2022, firms would have spent more than $1.3 trillion on cloud migration.

- Hyperscalers like Amazon, Microsoft, and Google are expected to offer tools to simplify container-native environments usage.

IoT and Edge Computing Will Drive Kubernetes Networking Trends

- Many organizations are choosing Kubernetes as their primary platform for future IoT and edge applications.

- Service mesh applications necessitate the use of service mesh tools.

- MicroK8s and K3s are two examples of lightweight Kubernetes implementations.

Conclusion

- Kubernetes is a powerful container orchestration technology that includes important aspects such as clusters, nodes, and pods that are managed by the control plane.

- To ensure cluster efficiency, the control plane includes kube-apiserver, etcd, kube-scheduler, and kube-controller-manager.

- Container execution is managed by node components such as kubelet, kube-proxy, and container runtimes.

- Service meshes improve communication, while CI/CD solutions such as GitHub Actions and Argo CD simplify app deployment.

- Multi-cluster management technologies make administration easier and provide geographical benefits.

- Kubernetes' progress is being driven by emerging trends such as AI/ML integration, cloud migration, and IoT edge computing.