Load Balancing in Kubernetes

Overview

Kubernetes load balancing improves application availability and scalability by distributing traffic efficiently among backend services. It relies on mechanisms such as load distribution and stable virtual IP addresses, and on components such as Deployments, Services, and Service selectors, to keep the system resilient. The overall framework consists of pods, containers, Services, and Ingress controllers.

What is Kubernetes Load Balancer?

- Kubernetes load balancing is an important approach for increasing availability and scalability by distributing network traffic across different backend services.

- There are several approaches to balancing external traffic to pods, each with its own pros and cons. The most fundamental kind of load balancing in Kubernetes is load distribution, which is handled at the dispatch level by kube-proxy. kube-proxy manages the virtual IP addresses that Kubernetes Services utilise.

- Load balancing in Kubernetes reroutes traffic when a server goes down and starts sending traffic to a new server once it is added to the server pool. This keeps applications highly available during system updates and maintenance.

Configuring Load balancing in Kubernetes

Kubernetes load balancing distributes network traffic across multiple instances of an application or service to ensure high availability and optimal resource utilization. It is implemented through the built-in Service object. Here's how to set up load balancing with Kubernetes:

- Create a Deployment or ReplicationController: First, create a Deployment or ReplicationController that manages the number of pods running your application.

- Create a Kubernetes Service: A Kubernetes Service is an abstraction that defines a set of pods and a policy for accessing them. It provides a consistent IP address and DNS name for reaching those pods. The Service type is specified when the Service is created, and there are several options:

- ClusterIP: Makes the Service available through a cluster-internal IP address. This is the default type, and the Service is reachable only from within the cluster.

- NodePort: Exposes the Service on each Node's IP at a static port. This means you can access the service from outside the cluster using <NodeIP>:<NodePort>.

- LoadBalancer: Creates an external load balancer in cloud environments that support it, such as AWS or Google Cloud Platform. It assigns an external IP address and distributes traffic to the underlying pods automatically.

- ExternalName: The Service is mapped to the contents of the externalName field, allowing you to refer to external services by name.

- Define the Service Selector: The selector field in the Service definition matches the labels of the pods managed by your Deployment or ReplicationController, determining which pods traffic is routed to.

- Apply the Configuration: Create or apply the Service configuration with the kubectl apply -f command and the YAML or JSON file that describes the Service.

Example YAML file for a LoadBalancer service:
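A minimal sketch of such a file, assuming pods labelled `app: my-app` that listen on port 8080. The Service name, label, and port numbers are hypothetical placeholders, not values from a real deployment:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-app-lb          # hypothetical Service name
spec:
  type: LoadBalancer       # ask the cloud provider for an external load balancer
  selector:
    app: my-app            # assumed pod label; must match the Deployment's pod labels
  ports:
    - name: http
      protocol: TCP
      port: 80             # port exposed by the load balancer
      targetPort: 8080     # assumed container port inside the pods
```

On clusters without a cloud integration, the external IP of a Service like this typically stays pending, because nothing provisions the load balancer.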

- Check the LoadBalancer IP: After completing the Service configuration, run kubectl get services to view the external IP address allocated to your LoadBalancer service.

- Access Your Service: You may now access your application using the LoadBalancer service's external IP address.

The behavior of a LoadBalancer Service varies with the cluster architecture and cloud provider. On AWS or GCP, for example, the Service provisions provider-specific load balancer resources.

Different Load Balancing Techniques in Kubernetes

Load balancing solutions in Kubernetes are classified into two types: internal load balancing and external load balancing. These strategies aid in the distribution of network traffic, ensuring the availability, scalability, and effective use of resources for your applications.

Internal Load Balancing

Internal load balancing distributes traffic within a Kubernetes cluster, sending requests to backend services or pods to ensure high availability and optimal resource utilization across application components.

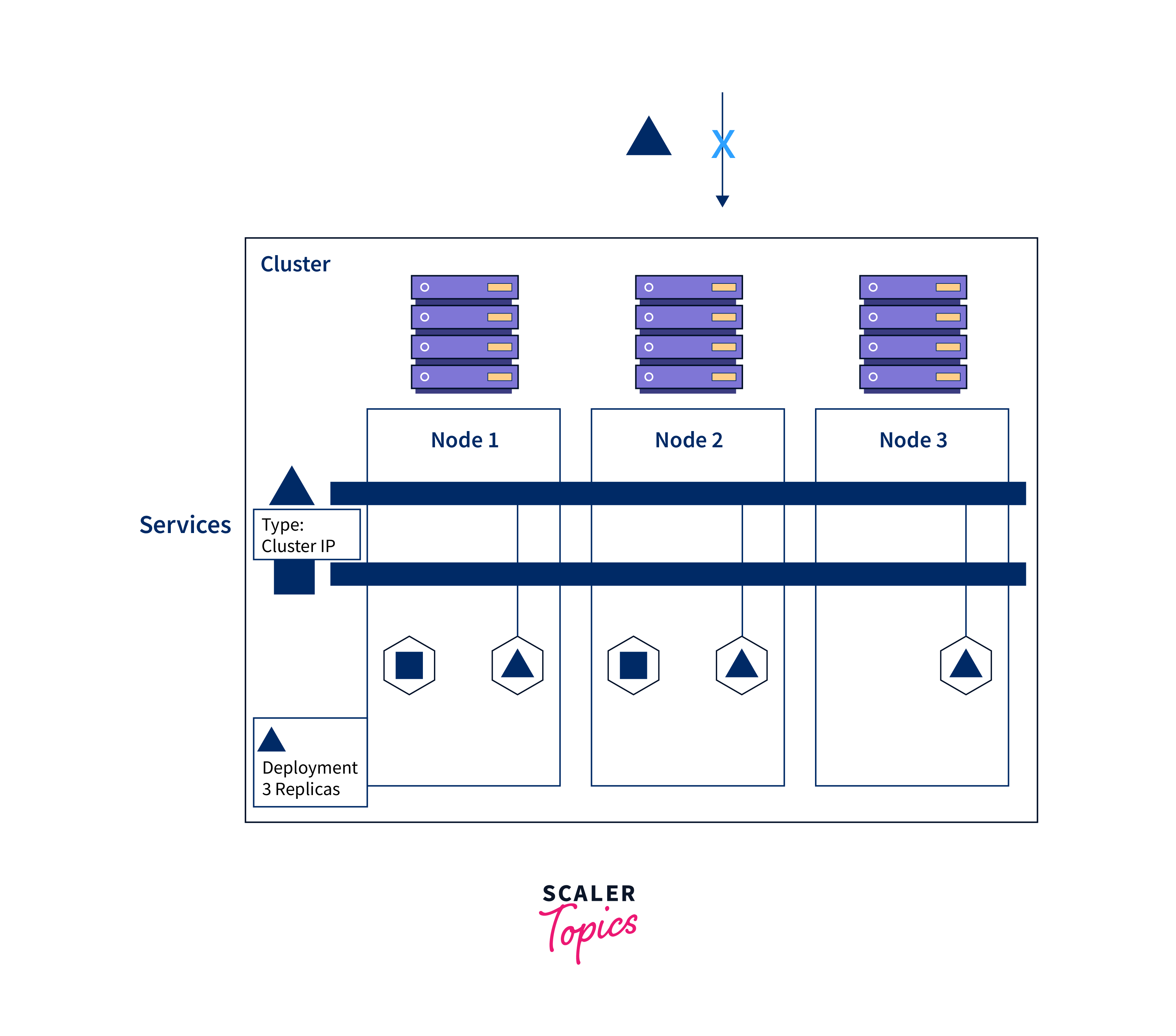

Service with ClusterIP:

- A ClusterIP service assigns an internal IP address within the cluster, letting pods and services communicate with one another.

- This service is intended for internal communication and is not available from outside the cluster.

Headless Service:

- A Headless service is used when you wish to directly contact individual pods behind the service without using load balancing.

- It supports DNS-based service discovery but does not do load balancing.
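The two internal variants can be sketched side by side. This assumes pods labelled `app: backend` that listen on port 8080; the Service names and label are hypothetical. The only structural difference is that the headless Service sets `clusterIP: None`:

```yaml
# Regular ClusterIP Service: one virtual IP, traffic is load balanced across pods
apiVersion: v1
kind: Service
metadata:
  name: backend              # hypothetical name
spec:
  type: ClusterIP            # the default; could be omitted
  selector:
    app: backend             # assumed pod label
  ports:
    - port: 80
      targetPort: 8080       # assumed container port
---
# Headless Service: no virtual IP, DNS returns the individual pod IPs
apiVersion: v1
kind: Service
metadata:
  name: backend-headless     # hypothetical name
spec:
  clusterIP: None            # this is what makes the Service headless
  selector:
    app: backend
  ports:
    - port: 80
      targetPort: 8080
```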

External Load Balancing

External load balancing is used to provide services to external customers, allowing them to access your Kubernetes cluster-hosted apps. Load balancing of this sort is frequently employed to handle incoming internet traffic.

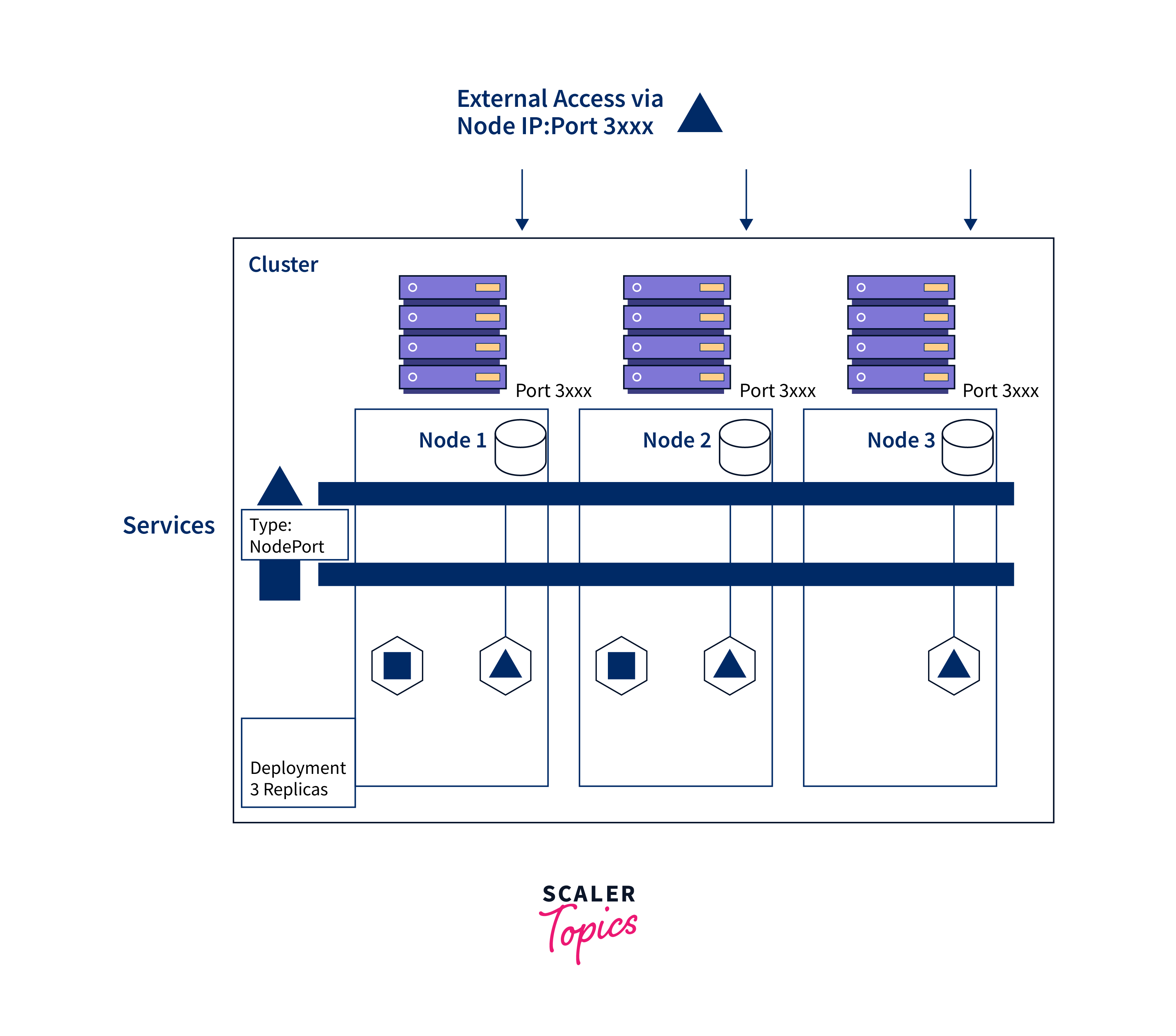

- NodePort:

- A NodePort service exposes a specified port on each node's IP address.

- Clients can connect to the service using <NodeIP>:<NodePort>.

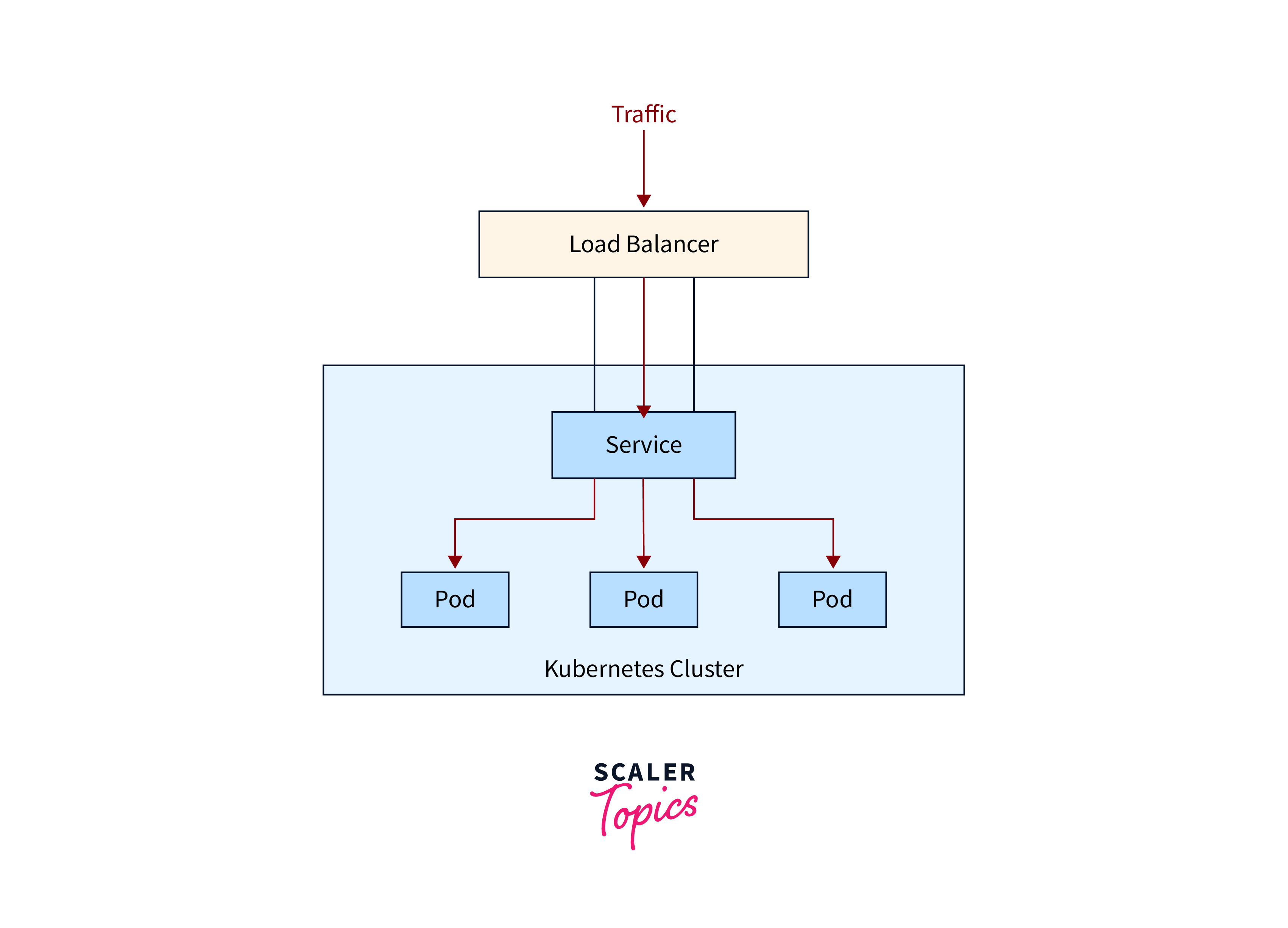

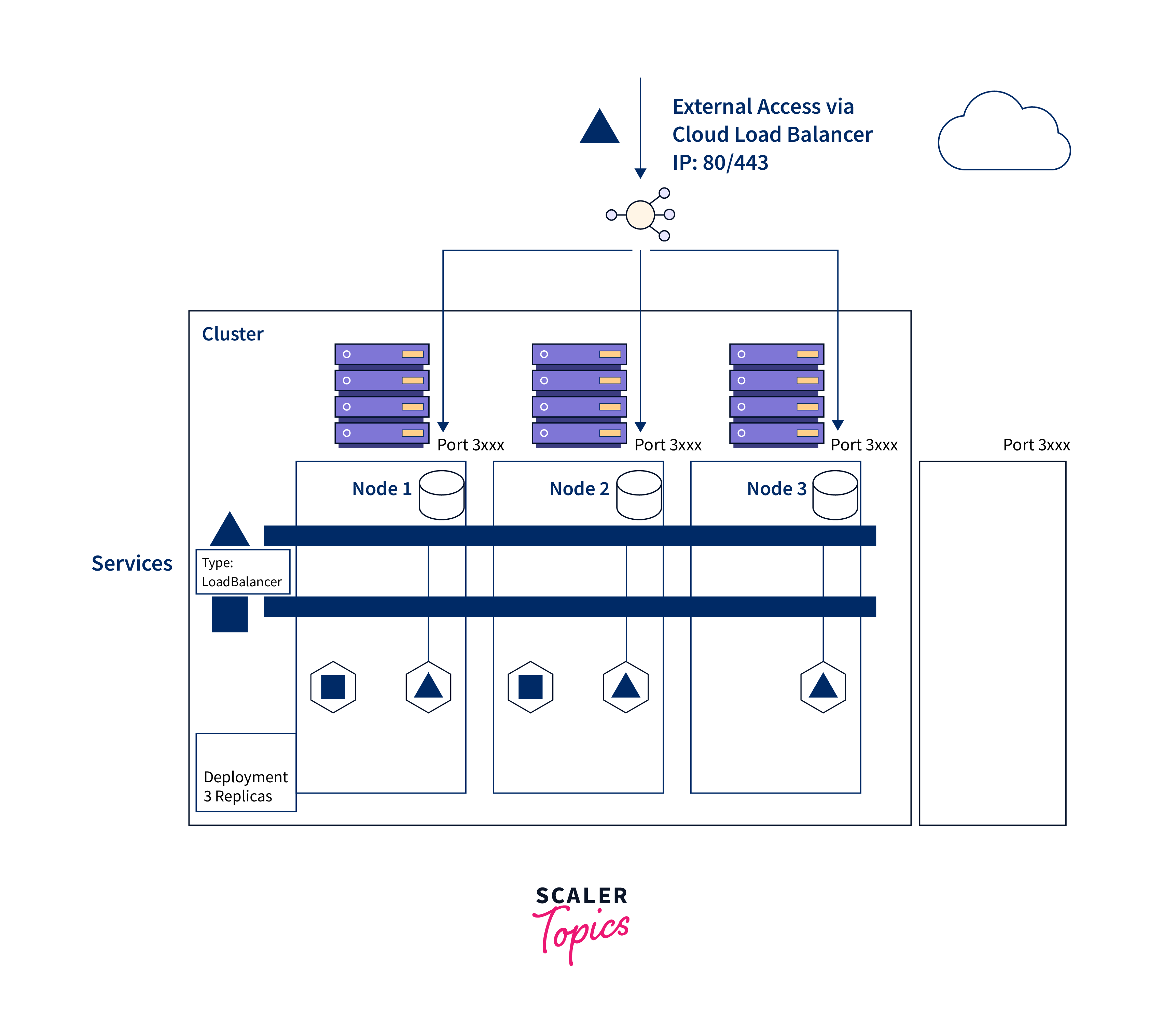
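A NodePort service along these lines could be declared as follows. This is a sketch, assuming pods labelled `app: my-app` on port 8080; the name, label, and chosen node port are hypothetical:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-app-nodeport    # hypothetical name
spec:
  type: NodePort
  selector:
    app: my-app            # assumed pod label
  ports:
    - port: 80             # port on the Service's cluster-internal IP
      targetPort: 8080     # assumed container port
      nodePort: 30080      # static port opened on every node (default range 30000-32767)
```

If `nodePort` is omitted, Kubernetes picks a free port from the configured range.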

- LoadBalancer:

- A LoadBalancer service uses an external load balancer, often supplied by the cloud provider, to distribute traffic to the underlying pods. It is particularly useful in dynamically provisioned cloud environments.

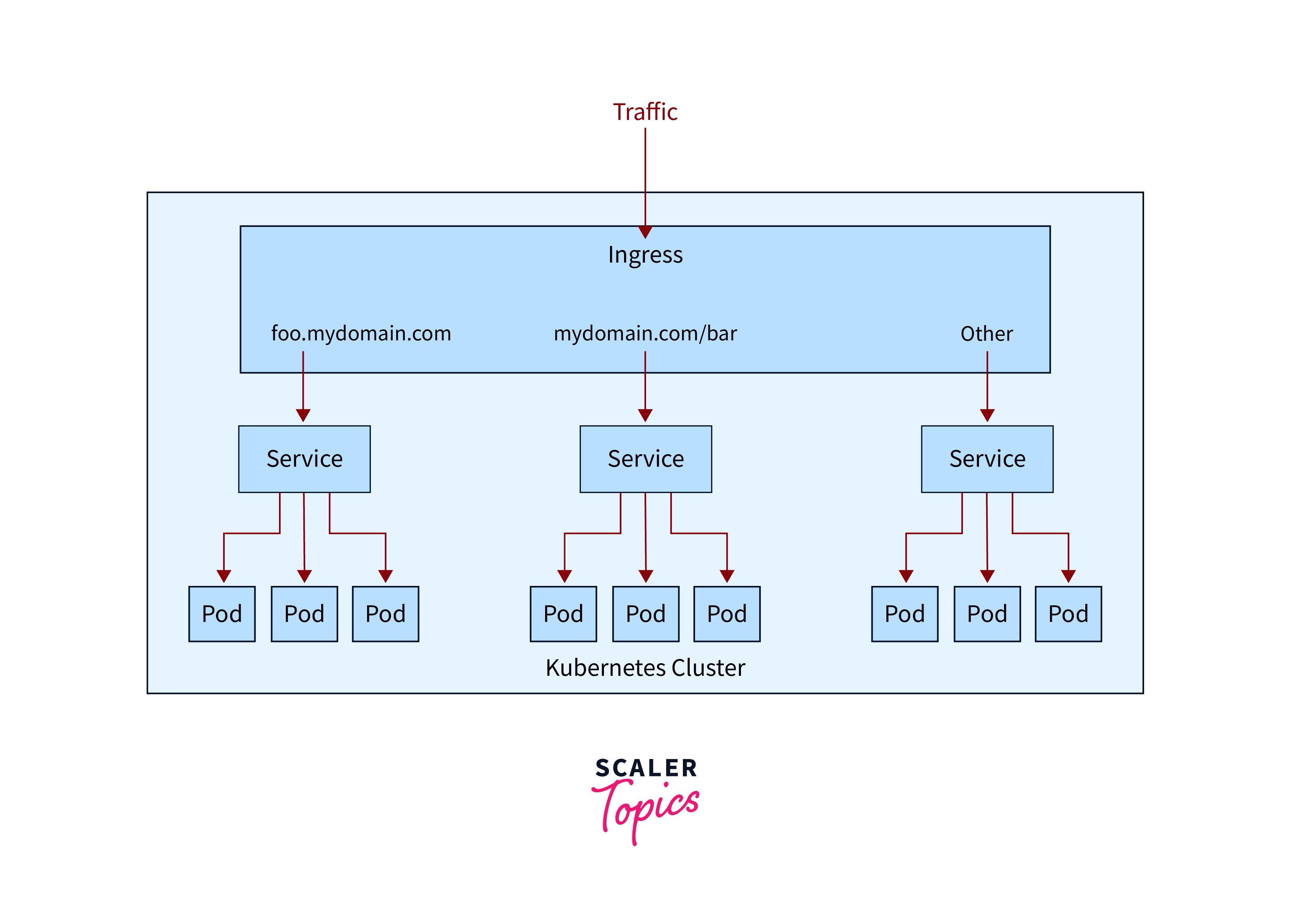

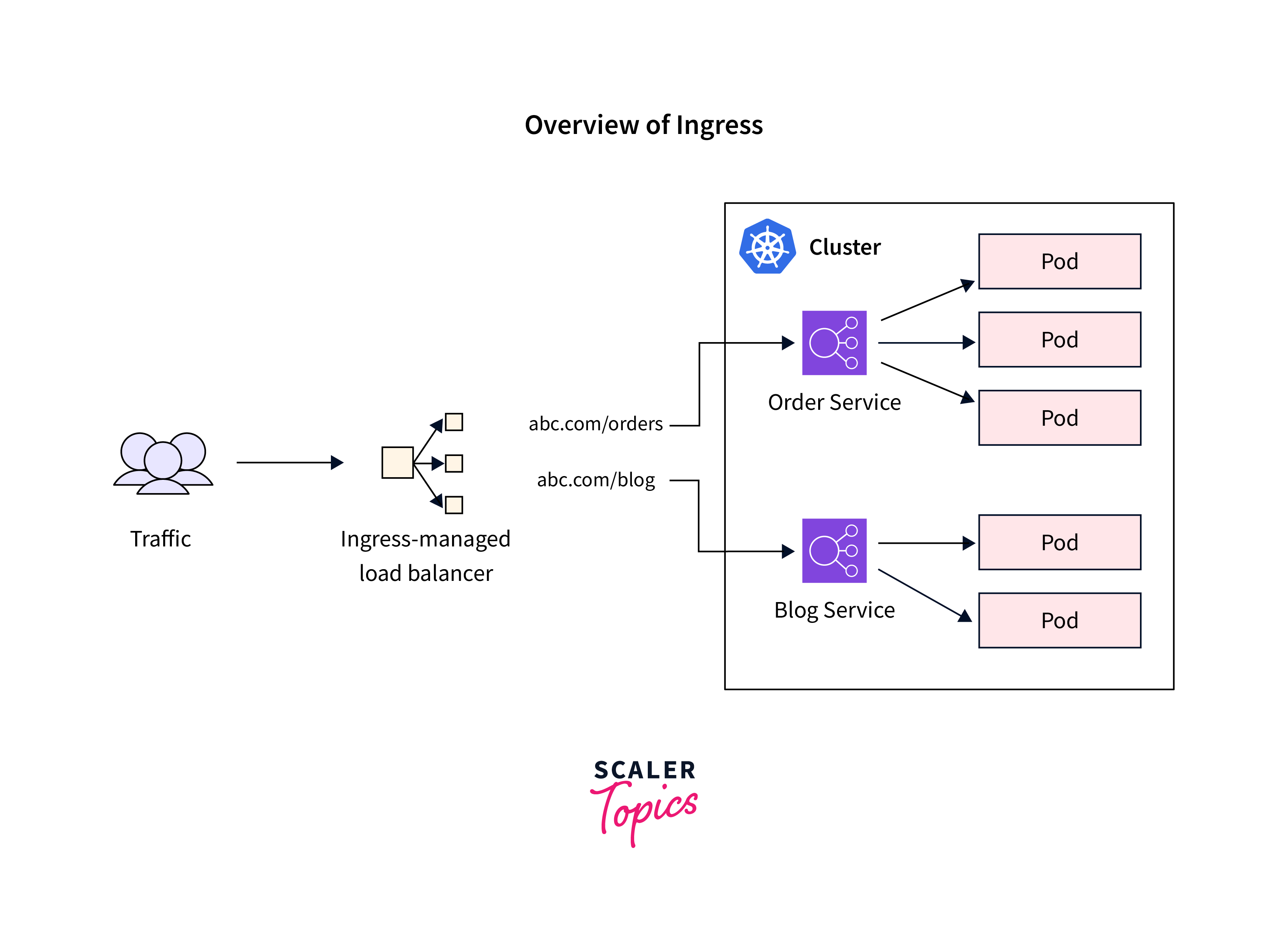

- Ingress:

- An Ingress resource sets routing rules for incoming traffic. It supports SSL termination and can perform path-based or host-based routing.

- An ingress controller, like Nginx Ingress or Traefik, controls and enforces these rules.

- ExternalName:

- An ExternalName service exposes services situated outside of the cluster.

- It functions as a DNS alias, allowing you to refer to remote services by name.
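As a sketch of the DNS alias behaviour, the following ExternalName service would let pods reach an external host under a cluster-internal name. Both the Service name and the external hostname `db.example.com` are hypothetical:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: external-db              # hypothetical in-cluster name
spec:
  type: ExternalName
  externalName: db.example.com   # hypothetical external DNS name returned as a CNAME
```

No selector or pods are involved here: the cluster DNS simply answers lookups for the Service name with the external name.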

Components of Load Balancing

1. Pods and Containers

- Linux containers are a popular solution for packaging software on Kubernetes, allowing for the deployment of pre-built images.

- Containerization creates self-contained Linux execution environments, packaging an application and its dependencies into a single image that is easy to distribute online. This supports robust CI/CD pipelines.

- Keep the number of processes per container small for simplicity. Pods are temporary, scalable, and flexible objects that group one or more containers and replicate an application-specific environment for the services that run in them.

2. Service

- Kubernetes services are groupings of pods having a common name. Services, which have stable IP addresses, serve as the point of access for outside clients.

- Services, like traditional load balancers, are meant to distribute traffic to a set of pods.

3. Ingress or Ingress Controller

- Ingress is a set of routing rules that governs how users outside the cluster access services running inside it.

- It can perform name-based virtual hosting, SSL termination, and load balancing.

- Ingress functions at layer 7, so it can inspect requests and use the additional data for intelligent routing.

- For Ingress to operate in a Kubernetes cluster, an ingress controller such as Nginx, HAProxy, or Traefik is required.

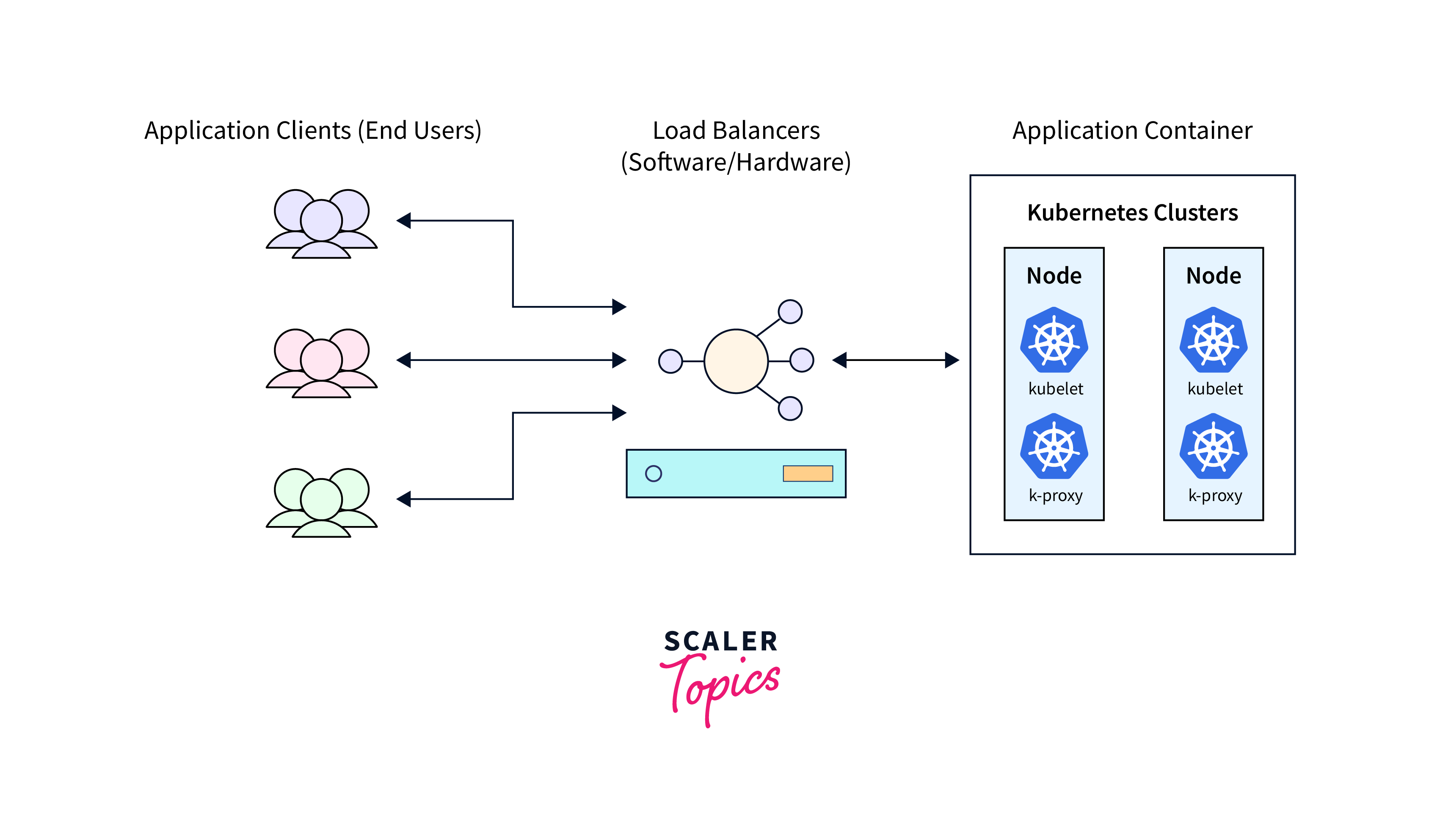

Kubernetes load balancer

- A Kubernetes cluster's distributed architecture relies on multiple services, and improper load distribution across them can cause problems.

- Load balancers distribute incoming traffic over a pool of hosts to ensure optimal workloads and high availability.

- They direct client requests to nodes that can process them efficiently, redistribute the load when a host fails, and automatically forward requests to pods on new nodes when those nodes join the cluster.

Kubernetes Services and Load Balancing

Kubernetes Services manage network connectivity in a Kubernetes cluster. They provide load balancing and reliable communication across pods, which supports high availability, optimal resource utilization, and better application performance.

Here's a more in-depth description of Kubernetes Services and their relationship to load balancing:

1. Service Categories:

Kubernetes has several service types that determine how services are exposed and accessed:

- ClusterIP: The service is exclusively accessible within the cluster through an internal IP address, facilitating communication between application components.

- NodePort: The service is exposed at a static port on each node's IP address, enabling external traffic to connect using any node's IP address and defined port.

- LoadBalancer: Provisions an external load balancer that routes traffic to the service. In cloud environments, this is generally used to expose the service externally and divide incoming traffic across multiple pods.

- ExternalName: This type maps the service to an external DNS name, allowing you to refer to external services by name.

- Headless: This kind of service is used to access individual pods directly and does not provide load balancing. It's mostly utilised for stateful applications that require distinct network identities.

Load Balancing:

Kubernetes Services automatically handle load balancing for applications that run as multiple replicas. This is how it works:

- When a client submits a request to a service, it is distributed across the available pods that match the service's label selector.

- As pods are scaled up or down, Kubernetes updates the set of pods behind the service so that traffic continues to be spread across whichever pods are available.
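The link between replicas and the selector can be sketched as follows. This assumes a hypothetical application called `my-app`, a placeholder image name, and container port 8080; none of these come from a real deployment:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app                        # hypothetical name
spec:
  replicas: 3                         # three pods share the traffic
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app                   # label the Service selector matches on
    spec:
      containers:
        - name: my-app
          image: example.com/my-app:1.0   # placeholder image
          ports:
            - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: my-app
spec:
  selector:
    app: my-app                       # selects all three replicas above
  ports:
    - port: 80
      targetPort: 8080
```

Changing `replicas` adds or removes pods carrying the same label, and the Service picks them up without any change to its own definition.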

Service Discovery:

- Service discovery is also provided by Kubernetes Services.

- Each service is assigned an internally resolvable DNS name, allowing other pods in the cluster to connect with the service without knowing the IP addresses of individual pods.
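As a sketch of DNS-based discovery, assume a Service named `backend` in the `default` namespace and the default cluster domain `cluster.local`. A client pod could then be given the Service's DNS name through an environment variable. The pod name, image, and variable name are hypothetical:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: client                          # hypothetical pod
spec:
  containers:
    - name: client
      image: example.com/client:1.0     # placeholder image
      env:
        - name: BACKEND_URL             # hypothetical variable read by the application
          # <service>.<namespace>.svc.<cluster-domain>; no pod IPs are needed
          value: "http://backend.default.svc.cluster.local:80"
```

Within the same namespace, the short name `backend` resolves as well.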

How to Use Ingress for Load Balancing in Kubernetes?

The LoadBalancer service type creates a separate Application or Network Load Balancer for each service. This is acceptable for a few services, but with many services it becomes costly and difficult to manage. On its own, it also lacks URL routing and SSL termination.

What is Ingress

Ingress sits between external traffic and the Kubernetes cluster, in front of NodePort or LoadBalancer services. It inspects incoming requests and determines which services and pods to route them to, providing load balancing, name-based virtual hosting, URL routing, and SSL termination.

The definition of such an Ingress object would be:
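A minimal sketch of such a definition, assuming the `networking.k8s.io/v1` API, an Nginx ingress controller registered under the class name `nginx`, a Service named `order-service`, and a TLS secret named `abc-com-tls`. These names are assumptions chosen to fit the scenario described in this section, not values confirmed by the source:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: orders-ingress             # hypothetical name
spec:
  ingressClassName: nginx          # assumes an Nginx ingress controller is installed
  tls:
    - hosts:
        - abc.com
      secretName: abc-com-tls      # hypothetical secret holding the certificate and key
  rules:
    - host: abc.com                # host-based routing
      http:
        paths:
          - path: /orders          # path-based routing; path assumed from the description
            pathType: Prefix
            backend:
              service:
                name: order-service    # assumed Service name
                port:
                  number: 8080
```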

The rules in the spec section of the Ingress definition determine how end-user traffic flows. In this scenario, the Ingress Controller routes all traffic for https://abc.com/orders to an internal service called the order service, which is accessible through port 8080.

Ingress is not a Kubernetes service type. It is a set of rules that works on top of LoadBalancer or NodePort services, and it requires an Ingress Controller to function.

Benefits of Load Balancing in Kubernetes

Kubernetes' load balancing is a crucial feature that provides numerous advantages for managing applications in containerized environments. Here are the key advantages of load balancing in Kubernetes:

High Availability: Load balancing ensures applications remain accessible and responsive even when pods or nodes fail, by automatically redirecting traffic to healthy pods, reducing downtime and service disruptions.

Optimal Resource Utilization: Load balancing distributes incoming requests evenly among multiple application pods, preventing some pods from being overloaded while others sit underutilized.

Scalability: Kubernetes can scale applications automatically by adding or removing pods based on resource utilization or preset parameters. Load balancing then directs traffic to the newly created pods, making it easy to handle additional load.

Advanced Routing: Ingress controllers enhance load balancing by offering complex routing features like host-based or path-based routing and SSL termination, thereby enhancing traffic control and service visibility.

Cost Efficiency: By distributing traffic efficiently, load balancing helps prevent over-provisioning while preserving application performance, which reduces infrastructure costs.

FAQs

Q. What components are involved in Load Balancing in Kubernetes?

A. The Kubernetes load balancing system comprises pods, services, and Ingress controllers. Pods run the application containers, services provide a stable abstraction over those pods, and Ingress controllers handle advanced routing and traffic distribution.

Q. How does an Ingress Controller work with Load Balancing in Kubernetes?

A. An Ingress Controller enhances LoadBalancer or NodePort services by applying advanced routing and traffic distribution rules. It acts as an intermediary between external traffic and the Kubernetes cluster, enabling URL routing, SSL termination, and load balancing.

Q. What is Kubernetes Load Balancer?

A. Kubernetes load balancing enhances availability and scalability by distributing network traffic across backend services, maintaining virtual IP addresses, and automatically rerouting traffic during server downtime.

Q. How can I configure Load balancing in Kubernetes?

A. To configure load balancing in Kubernetes, follow these steps:

- Create a Deployment or ReplicationController.

- Create a Kubernetes Service with the desired type (e.g., ClusterIP, NodePort, LoadBalancer).

- Define the Service Selector to match the pods you want to load balance.

- Apply the Service configuration using kubectl apply -f.

- Check the LoadBalancer IP and access your service.

Conclusion

- Kubernetes load balancing enhances application availability and scalability by efficiently managing network traffic across backend services.

- Kubernetes offers a variety of load balancing methods, with load distribution as a core aspect, ensuring the stability of virtual IP addresses for services.

- Configuration involves creating key components such as Deployments, Services, and Service Selectors, each customized to specific needs.

- Kubernetes load balancers are crucial for maintaining high availability and resource allocation during failures, contributing to system resilience.

- Kubernetes provides both internal and external load balancing techniques to meet diverse traffic distribution requirements.

- The combined components of pods, containers, services, and Ingress Controllers form a robust load balancing framework in Kubernetes.