Complete Guide to Kubernetes Networking

Overview

Kubernetes networking is the communication backbone of a cluster: it connects Kubernetes components and the applications running on the cluster to one another.

What sets Kubernetes apart from traditional container networking approaches is its flat network model, which removes the need to map host ports to container ports. Because every pod is directly addressable, distributed applications can share cluster resources without dynamic port allocation.

The Kubernetes Networking Model

The Kubernetes networking model comprises a well-defined set of principles that govern communication and connectivity within the cluster. These fundamental principles can be summarized as follows:

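- Every pod gets its own unique IP address.

- Containers within a pod share that IP address and can communicate freely with each other.

- Pods can communicate with all other pods in the cluster using pod IP addresses, without NAT.

- Isolation, that is, restricting what each pod can communicate with, is defined using network policies.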

Emphasizing VM-like Characteristics of Pods

Because each pod has its own IP address, the Kubernetes model makes pods behave much like virtual machines or hosts from a networking perspective. Containers within a pod are comparable to processes running on a VM or host: they run in the same network namespace and share an IP address. This makes it easier to migrate applications from conventional VMs and hosts to Kubernetes pods.

The Elegance of a "Flat Network" Design

The flat network approach favors simplicity. Because isolation is expressed through network policies rather than through the structure of the network itself, the network is easier to understand and manage, and administrators can adapt it as requirements change.

Rare Cases: Mapping Host Ports and Host Network Namespace

Although seldom required, Kubernetes allows for flexibility in mapping host ports to pods or even running pods directly within the host network namespace, effectively sharing the host's IP address. Such scenarios remain exceptional and typically cater to specific, specialized use cases.
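As a rough illustration of how these exceptions show up in a pod manifest, the Python sketch below scans a manifest (already parsed into a dict, for example from YAML) for the `spec.hostNetwork` flag and for container `hostPort` settings. The helper name `host_network_usage` and the sample manifest are hypothetical; only the field names come from the Kubernetes Pod spec.

```python
def host_network_usage(pod: dict) -> list:
    """Report where a pod manifest (parsed into a dict) uses host networking or host ports."""
    findings = []
    spec = pod.get("spec", {})
    if spec.get("hostNetwork", False):
        # The pod runs in the node's network namespace and shares the node's IP.
        findings.append("hostNetwork: true")
    for container in spec.get("containers", []):
        for port in container.get("ports", []):
            if "hostPort" in port:
                # Traffic to <node-ip>:hostPort is forwarded to this container port.
                findings.append(
                    f"{container['name']}: hostPort {port['hostPort']}"
                    f" -> containerPort {port['containerPort']}"
                )
    return findings


pod = {
    "spec": {
        "containers": [
            {"name": "agent", "ports": [{"containerPort": 9100, "hostPort": 9100}]},
            {"name": "app", "ports": [{"containerPort": 8080}]},
        ]
    }
}
print(host_network_usage(pod))  # ['agent: hostPort 9100 -> containerPort 9100']
```

A check like this can help confirm that such settings stay confined to the few workloads that really need them.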

Network Implementations

Kubernetes offers built-in network support through kubenet, providing fundamental network connectivity. Nevertheless, in practice, many opt for third-party network implementations that integrate with Kubernetes via the Container Network Interface (CNI) API.

CNI defines several plugin types; the two primary ones are:

- Network plugins: responsible for connecting pods to the network.

- IPAM (IP Address Management) plugins: responsible for allocating pod IP addresses.
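To make the IPAM role concrete, here is a minimal Python sketch of a per-node allocator, loosely in the spirit of the host-local IPAM plugin: it hands out addresses from the node's pod CIDR and reuses them when pods are deleted. The class name `NodeIPAM` and the CIDR `10.244.1.0/24` are illustrative assumptions; a real IPAM plugin also persists its allocations and handles ranges, routes, and concurrency.

```python
import ipaddress


class NodeIPAM:
    """Toy per-node allocator, loosely modelled on host-local IPAM (hypothetical)."""

    def __init__(self, pod_cidr: str):
        self.network = ipaddress.ip_network(pod_cidr)
        self._hosts = iter(self.network.hosts())  # lazy iterator over usable addresses
        self.gateway = next(self._hosts)          # first usable address reserved as gateway
        self._released = []                       # addresses returned by deleted pods
        self._allocated = {}                      # container ID -> address

    def allocate(self, container_id: str):
        if container_id in self._allocated:       # repeated ADD for the same container
            return self._allocated[container_id]
        if self._released:
            addr = self._released.pop(0)
        else:
            addr = next(self._hosts, None)
            if addr is None:
                raise RuntimeError(f"pod CIDR {self.network} is exhausted")
        self._allocated[container_id] = addr
        return addr

    def release(self, container_id: str) -> None:
        addr = self._allocated.pop(container_id, None)
        if addr is not None:
            self._released.append(addr)


ipam = NodeIPAM("10.244.1.0/24")
a = ipam.allocate("pod-a")   # 10.244.1.2
b = ipam.allocate("pod-b")   # 10.244.1.3
ipam.release("pod-a")
c = ipam.allocate("pod-c")   # reuses 10.244.1.2
print(ipam.gateway, a, b, c)
```

The network plugin would call an allocator like this during pod setup and release the address during teardown.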

Many Kubernetes networking solutions also integrate with cloud services, for example by working alongside the AWS, Azure, and Google CNI plugins, or with the host-local IPAM plugin. This lets users choose the networking options best suited to their needs and deployment environment.

Services

Kubernetes services offer a means to abstract access to a collection of pods as a unified network service. This group of pods is typically defined through a label selector. Within the cluster, the network service is represented by a virtual IP address, and kube-proxy takes charge of distributing incoming connections to this virtual IP across the associated pods supporting the service.
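The selection step can be modelled in a few lines. With an equality-based label selector, a pod belongs to the service when every key/value pair in the selector appears in the pod's labels, and normally only ready pods receive traffic. The Python sketch below models just that matching step; the pod names, labels, and IPs are made up, and in a real cluster the EndpointSlice controller records the matching pods and kube-proxy programs each node accordingly.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict = field(default_factory=dict)
    ready: bool = True


def matches(selector: dict, labels: dict) -> bool:
    """Equality-based selector: every selector key must be present with the same value."""
    return all(labels.get(k) == v for k, v in selector.items())


def service_backends(selector: dict, pods: list) -> list:
    """IPs of the ready pods picked by the selector (simplified model)."""
    return [p.ip for p in pods if p.ready and matches(selector, p.labels)]


pods = [
    Pod("web-1", "10.244.1.5", {"app": "web", "tier": "frontend"}),
    Pod("web-2", "10.244.2.7", {"app": "web", "tier": "frontend"}, ready=False),
    Pod("db-1", "10.244.1.9", {"app": "db"}),
]
print(service_backends({"app": "web"}, pods))  # ['10.244.1.5']
```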

The virtual IP becomes discoverable through Kubernetes DNS. Throughout the service's lifetime, both the DNS name and the virtual IP address remain constant, even as the underlying pods may be created or removed, and the number of pods supporting the service may fluctuate over time.

Kubernetes services also enable the specification of how the service can be accessed from outside the cluster, with the following options:

- Node Port: the service is accessible on a specific port on every node in the cluster.

- Load Balancer: a network load balancer provides a virtual IP address through which the service can be reached from outside the cluster.
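By default, node ports are allocated from the range 30000-32767, which can be changed with the API server's `--service-node-port-range` flag. The Python sketch below is a rough model of that allocation step only: an explicitly requested port must be inside the range and unused, otherwise a free one is chosen. The function name is hypothetical, and it scans the range in order purely for readability; the API server's own allocation strategy differs.

```python
NODE_PORT_RANGE = range(30000, 32768)  # default range 30000-32767 (inclusive)


def allocate_node_port(in_use: set, requested=None) -> int:
    """Return a free node port, honouring an explicit request if it is valid and free."""
    if requested is not None:
        if requested not in NODE_PORT_RANGE:
            raise ValueError(
                f"{requested} is outside {NODE_PORT_RANGE.start}-{NODE_PORT_RANGE.stop - 1}"
            )
        if requested in in_use:
            raise ValueError(f"{requested} is already allocated")
        in_use.add(requested)
        return requested
    for port in NODE_PORT_RANGE:  # deterministic scan, for readability only
        if port not in in_use:
            in_use.add(port)
            return port
    raise RuntimeError("node port range exhausted")


in_use = {30000}
print(allocate_node_port(in_use))         # 30001
print(allocate_node_port(in_use, 31080))  # 31080
```

Once a port such as 31080 is assigned, every node accepts traffic on `<node-ip>:31080` and forwards it to the service.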

In short, Kubernetes services simplify access to groups of pods, both inside and outside the cluster. The service's stable virtual IP address and DNS name, combined with these external access options, give clients a consistent way to reach applications whose pods come and go.

Network Policies

Kubernetes Network Policies provide layer 3 and layer 4 segmentation (by IP address and port) for applications deployed on the platform. They lack the advanced capabilities of modern firewalls, such as layer 7 control and threat detection, but they provide a foundational level of network security and are a good starting point.

In Kubernetes, workloads run in pods, each made up of one or more co-located containers. Every pod is allocated a unique IP address that is reachable from all other pods, even those running on different nodes. Network Policies define access permissions for groups of pods, much as security groups in cloud environments control access to VM instances.

By employing Kubernetes Network Policies, administrators can enforce a security perimeter around their applications, restricting communication between pods based on defined rules. This fine-grained control helps prevent unauthorized access and enhances the overall security posture of the Kubernetes cluster.
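The core evaluation rule for ingress can be sketched in Python: a pod that no policy selects accepts all traffic; once any policy selects it, only traffic allowed by at least one rule of the selecting policies gets through, and allowed rules from different policies add up. This is a simplified model under stated assumptions: a single namespace, ingress only, pod-label peers and port numbers only (no `namespaceSelector`, `ipBlock`, or protocols). The class and function names are hypothetical.

```python
from dataclasses import dataclass, field


def matches(selector: dict, labels: dict) -> bool:
    """Equality-based label match; an empty selector matches every pod."""
    return all(labels.get(k) == v for k, v in selector.items())


@dataclass
class IngressRule:
    from_selectors: list = field(default_factory=list)  # empty: any source pod
    ports: list = field(default_factory=list)           # empty: any port


@dataclass
class Policy:
    pod_selector: dict                                   # pods the policy applies to
    ingress: list = field(default_factory=list)          # no rules: deny all ingress


def ingress_allowed(policies: list, src_labels: dict, dst_labels: dict, port: int) -> bool:
    selecting = [p for p in policies if matches(p.pod_selector, dst_labels)]
    if not selecting:
        return True  # no policy selects the destination, so it is not isolated
    for policy in selecting:
        for rule in policy.ingress:
            src_ok = not rule.from_selectors or any(
                matches(sel, src_labels) for sel in rule.from_selectors
            )
            port_ok = not rule.ports or port in rule.ports
            if src_ok and port_ok:
                return True  # rules from all selecting policies are combined (union)
    return False


policies = [Policy({"app": "db"}, [IngressRule([{"app": "api"}], [5432])])]
print(ingress_allowed(policies, {"app": "api"}, {"app": "db"}, 5432))     # True
print(ingress_allowed(policies, {"app": "web"}, {"app": "db"}, 5432))     # False
print(ingress_allowed(policies, {"app": "web"}, {"app": "cache"}, 6379))  # True
```

The last call shows why a default-deny policy is often the first one applied: pods that no policy selects remain open.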

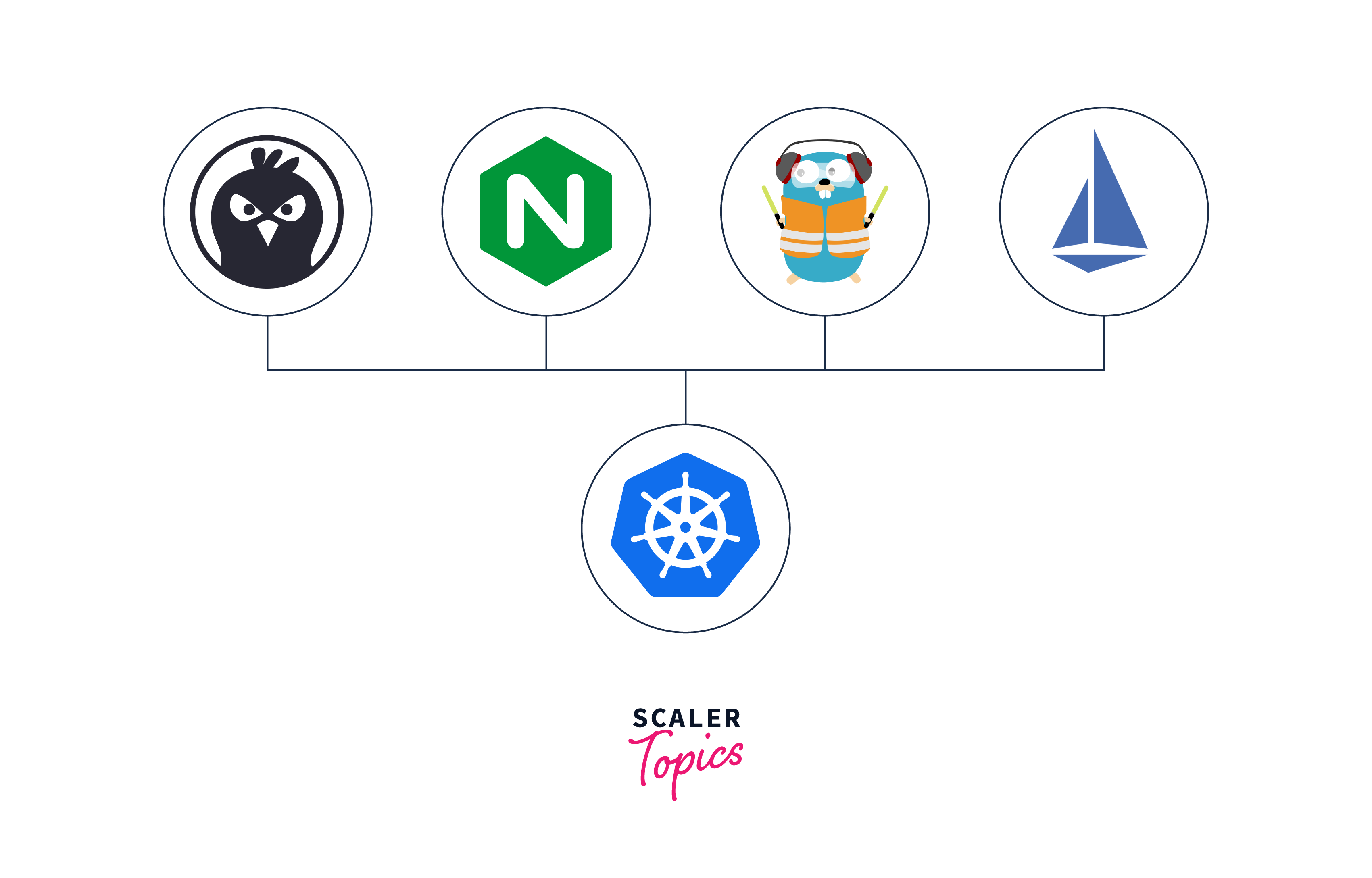

Ingress Controllers

Kubernetes Ingress builds on Kubernetes Services to provide application-layer load balancing, routing HTTP and HTTPS requests for particular domains or URL paths to the corresponding services. Ingress can also perform SSL/TLS termination before load balancing takes place.

The implementation specifics of Ingress depend on the chosen Ingress Controller. This Controller takes on the responsibility of monitoring Kubernetes Ingress resources and configuring one or more ingress load balancers to achieve the desired load balancing behavior.
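Whatever controller is used, the routing decision it implements looks roughly like the Python sketch below: match the request's host, then pick the rule whose path prefix matches the most path elements. It follows the Ingress `Prefix` path type, where `/api` matches `/api/orders` but not `/apiary`, and treats a rule without a host as applying to every host. The hostnames and service names are hypothetical, and `Exact` paths, wildcard hosts, and TLS are left out.

```python
def _segments(path: str) -> list:
    # "/api/v1/" -> ["api", "v1"]; empty elements from extra slashes are ignored.
    return [part for part in path.split("/") if part]


def route(rules: list, host: str, path: str):
    """Return the backend Service for a request, or None if no rule matches.

    Each rule is (host or None, path prefix, service name). Matching is per path
    element, and the longest matching prefix wins.
    """
    request = _segments(path)
    best_service, best_len = None, -1
    for rule_host, rule_path, service in rules:
        if rule_host is not None and rule_host != host:
            continue
        prefix = _segments(rule_path)
        if request[: len(prefix)] == prefix and len(prefix) > best_len:
            best_service, best_len = service, len(prefix)
    return best_service


rules = [
    ("shop.example.com", "/", "storefront"),
    ("shop.example.com", "/api", "api"),
    (None, "/healthz", "health"),
]
print(route(rules, "shop.example.com", "/api/orders"))  # api
print(route(rules, "shop.example.com", "/apiary"))      # storefront
print(route(rules, "other.example.com", "/healthz"))    # health
print(route(rules, "other.example.com", "/api"))        # None
```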

In general, there exist two primary types of ingress solutions:

- In-cluster ingress: load balancing is performed by pods running inside the Kubernetes cluster.

- External ingress: load balancing is implemented outside the cluster, either by dedicated appliances or by cloud provider capabilities.

Together with Services, Ingress extends Kubernetes load balancing to the application layer and routes incoming requests to the appropriate services within the cluster.

Load Balancing

Load balancing in Kubernetes distributes incoming traffic among the pods that back a service. Services are reached through virtual IPs, and kube-proxy, which normally runs on every node, implements load balancing for those virtual IPs. The kube-proxy component has supported several modes over time; two of them are described here.

In the past, the default kube-proxy mode was "userspace", which distributed connections round-robin over the list of backend IPs, rotating through the list to pick the next pod. (The userspace mode has since been removed, in Kubernetes v1.26.) The default mode on Linux is now "iptables", in which kube-proxy programs iptables rules that pick one of the service's pods at random for each new connection.
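In iptables mode, uniform random selection comes from chaining one rule per endpoint, each using the iptables `statistic` match in random mode: with n endpoints the first rule matches with probability 1/n, the next with 1/(n-1), and so on, and the last rule catches whatever is left. The Python sketch below only reproduces that arithmetic and a simulation of it next to plain round-robin; it does not generate real iptables rules, and the endpoint IPs are placeholders.

```python
import random
from fractions import Fraction
from itertools import cycle


def iptables_rule_probabilities(n: int) -> list:
    """Per-rule match probability for n endpoints: 1/n, 1/(n-1), ..., 1/1."""
    return [Fraction(1, n - i) for i in range(n)]


def effective_share(n: int) -> list:
    """Probability that each endpoint ends up chosen when rules are tried in order."""
    shares, not_yet_matched = [], Fraction(1)
    for p in iptables_rule_probabilities(n):
        shares.append(not_yet_matched * p)
        not_yet_matched *= 1 - p
    return shares


def pick_iptables_style(endpoints: list, rng: random.Random) -> str:
    """Simulate walking the rule chain: each rule matches with its own probability."""
    for endpoint, p in zip(endpoints, iptables_rule_probabilities(len(endpoints))):
        if rng.random() < p:
            return endpoint
    return endpoints[-1]  # not reached in practice: the last probability is 1


print(effective_share(3))  # [Fraction(1, 3), Fraction(1, 3), Fraction(1, 3)]

# Round-robin, as the old userspace mode did, cycles through endpoints in order instead.
rr = cycle(["10.244.1.5", "10.244.2.7", "10.244.3.2"])
print([next(rr) for _ in range(4)])
```

The chained probabilities are what make each endpoint equally likely even though the rules are evaluated one after another.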

These mechanisms spread traffic across a service's pods, making better use of resources and improving the performance and resilience of applications in the cluster.

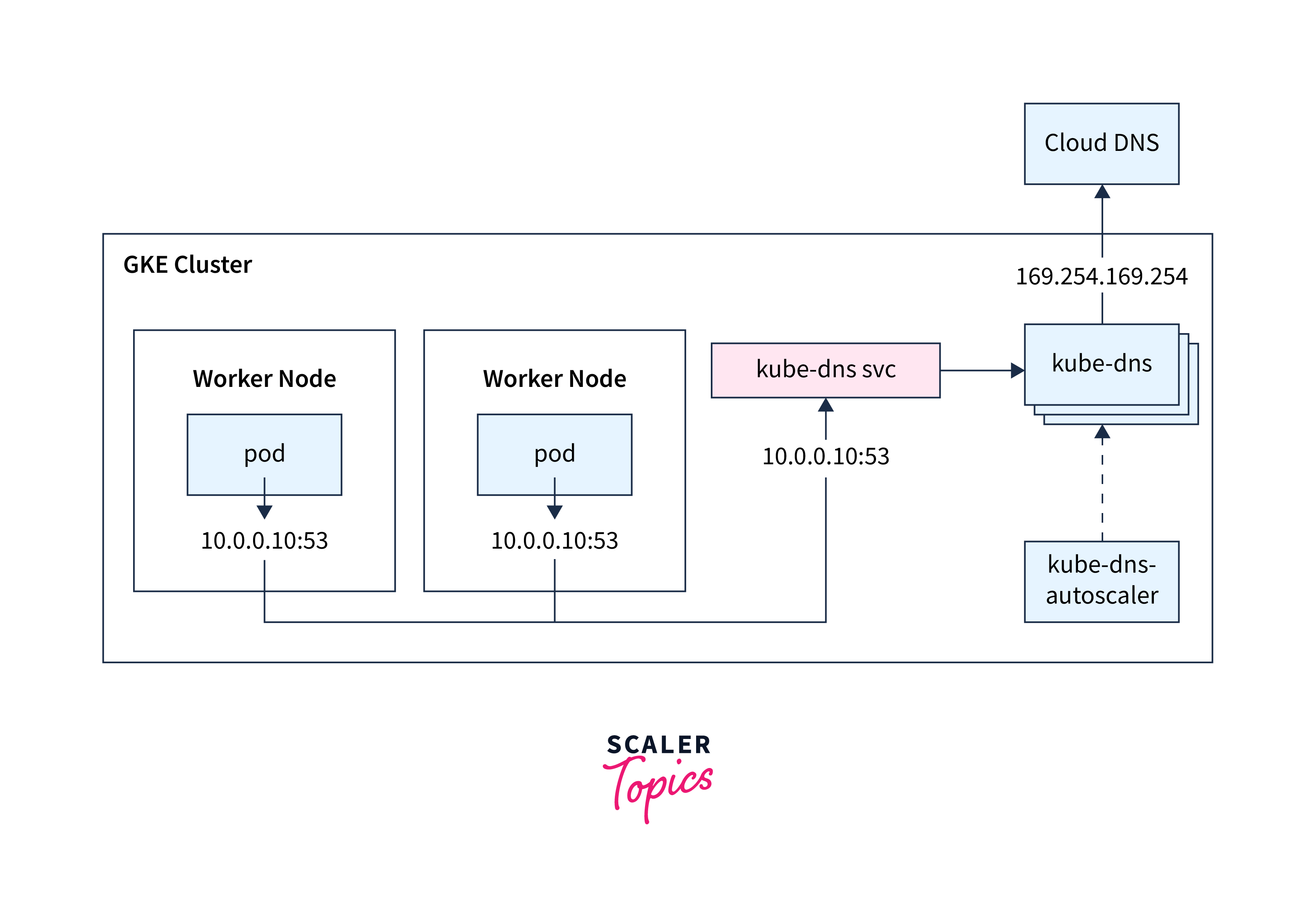

DNS

In every Kubernetes cluster, a DNS service is provided, ensuring that each pod and service becomes discoverable through this Kubernetes DNS service.

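For instance:

- Service: sample-svc.sample-namespace.svc.cluster-domain.sample

- Pod: pod-ip-address.sample-namespace.pod.cluster-domain.sample, where pod-ip-address is the pod's IP address with dashes instead of dots (for example, 10-244-1-5)

- Pod exposed by a Service (for example, a pod created by a Deployment): pod-ip-address.service-name.sample-namespace.svc.cluster-domain.sample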
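The naming patterns for services and pods can be expressed as two small Python helpers. The cluster domain is configurable; `cluster.local` is a common default and is used here as an assumption. The function names are hypothetical.

```python
def service_fqdn(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def pod_fqdn(pod_ipv4: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """DNS name of a pod: its IPv4 address with dots replaced by dashes."""
    return f"{pod_ipv4.replace('.', '-')}.{namespace}.pod.{cluster_domain}"


print(service_fqdn("sample-svc", "sample-namespace"))
# sample-svc.sample-namespace.svc.cluster.local
print(pod_fqdn("10.244.1.5", "sample-namespace"))
# 10-244-1-5.sample-namespace.pod.cluster.local
```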

The DNS service is realized as a Kubernetes service, which is responsible for mapping to one or more DNS server pods, commonly implemented using CoreDNS. These DNS server pods are scheduled just like any other pods in the cluster. Pods within the cluster are configured to use this DNS service, equipped with a DNS search list comprising the pod's own namespace and the cluster's default domain.

As a result, a service named "foo" in the Kubernetes namespace "bar" can be reached as "foo" by pods in the same namespace, while pods in other namespaces can reach it as "foo.bar".

Kubernetes provides many options for controlling DNS in different scenarios, so users can tailor DNS configuration to their networking needs. For details, see the "DNS for Services and Pods" page in the Kubernetes documentation.
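The short-name behavior follows from the search list. With the default DNS policy, a pod's resolver configuration typically contains `search <namespace>.svc.cluster.local svc.cluster.local cluster.local` and `options ndots:5`. The Python sketch below shows the order in which a typical (glibc-style) stub resolver would try candidate names under that configuration. It assumes the cluster domain is `cluster.local` and leaves out any search domains inherited from the node; other resolver implementations may order lookups differently.

```python
def candidate_names(name: str, namespace: str,
                    cluster_domain: str = "cluster.local", ndots: int = 5) -> list:
    """Order in which a typical stub resolver tries names using a pod's default search list."""
    if name.endswith("."):
        return [name]  # already fully qualified: the search list is not used
    search = [f"{namespace}.svc.{cluster_domain}", f"svc.{cluster_domain}", cluster_domain]
    expanded = [f"{name}.{domain}" for domain in search]
    # Names with fewer than ndots dots are tried with the search suffixes first.
    if name.count(".") < ndots:
        return expanded + [name]
    return [name] + expanded


print(candidate_names("foo", "bar")[0])        # foo.bar.svc.cluster.local
print(candidate_names("foo.bar", "other")[1])  # foo.bar.svc.cluster.local
```

From namespace "bar", the first candidate for "foo" is already the service's full name; from another namespace, "foo.bar" resolves through the second search entry.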

NAT Outgoing

The Kubernetes network model requires that pods can communicate directly with each other using pod IP addresses, but it does not require pod IP addresses to be routable outside the cluster. Many network implementations use overlay networks, in which pod IPs are typically not reachable from outside the cluster. In such deployments, when a pod connects to an IP address outside the cluster, the node hosting the pod uses SNAT (Source Network Address Translation) to change the packet's source address from the pod IP to the node IP.

Because the node IP is routable, the connection can reach its intended destination. When return packets arrive, the node maps them back to the corresponding pod IP and forwards them to the pod.

Whether and how outgoing traffic is NATed depends on the network implementation, which can often be configured to suit the environment, for example so that SNAT is skipped when pod IPs are routable outside the cluster.
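The translation and its reversal can be modelled with a small connection-tracking table. In the Python sketch below, traffic to destinations inside the cluster CIDR is left alone, while traffic leaving the cluster gets the node's IP and a fresh source port, and replies to that port are mapped back to the original pod. The node IP `192.168.0.10`, the cluster CIDR `10.244.0.0/16`, the external address, and the port pool starting at 40000 are all assumptions; real conntrack tracks the full connection tuple and protocol, not just the translated port.

```python
import ipaddress
import itertools
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src: str
    sport: int
    dst: str
    dport: int


class SnatNode:
    """Toy model of outgoing SNAT on a node (not real netfilter/conntrack)."""

    def __init__(self, node_ip: str, cluster_cidr: str):
        self.node_ip = node_ip
        self.cluster = ipaddress.ip_network(cluster_cidr)
        self._next_port = itertools.count(40000)  # hypothetical source-port pool
        self._conntrack = {}                      # translated port -> (pod ip, pod port)

    def egress(self, pkt: Packet) -> Packet:
        # Traffic that stays inside the cluster keeps the pod IP (no NAT).
        if ipaddress.ip_address(pkt.dst) in self.cluster:
            return pkt
        port = next(self._next_port)
        self._conntrack[port] = (pkt.src, pkt.sport)
        return replace(pkt, src=self.node_ip, sport=port)

    def ingress(self, pkt: Packet) -> Packet:
        # Reply packets addressed to the node are mapped back to the original pod.
        pod_ip, pod_port = self._conntrack[pkt.dport]
        return replace(pkt, dst=pod_ip, dport=pod_port)


node = SnatNode("192.168.0.10", "10.244.0.0/16")
out = node.egress(Packet("10.244.1.5", 51000, "203.0.113.7", 443))
print(out)  # Packet(src='192.168.0.10', sport=40000, dst='203.0.113.7', dport=443)
reply = Packet(out.dst, out.dport, out.src, out.sport)
print(node.ingress(reply))  # Packet(src='203.0.113.7', sport=443, dst='10.244.1.5', dport=51000)
```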

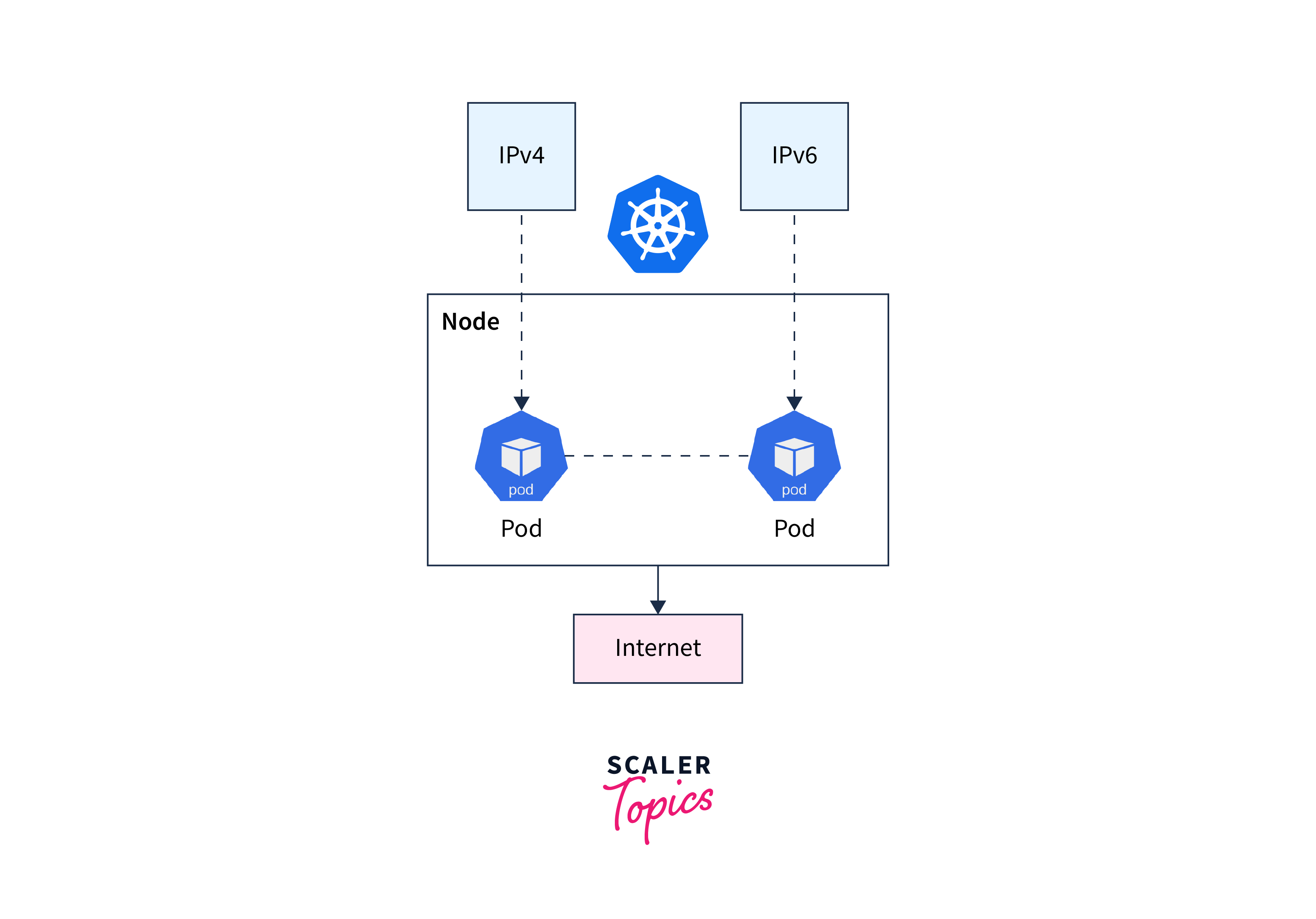

Dual Stack Kubernetes

For environments that need both IPv4 and IPv6, Kubernetes offers a dual-stack mode. When it is enabled, each pod receives both an IPv4 and an IPv6 address, and Kubernetes services can be configured to use IPv4, IPv6, or both, depending on networking requirements.

Dual-stack support lets administrators run IPv4 and IPv6 side by side in the same cluster, so applications and services can communicate over either protocol.
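Both halves of dual-stack behavior can be sketched in Python. A dual-stack pod gets one address from each configured pod range, and a Service's family choice follows its `spec.ipFamilyPolicy` value: `SingleStack`, `PreferDualStack`, or `RequireDualStack`. The model below assumes the Service leaves `spec.ipFamilies` unset, so the cluster's primary family comes first; the CIDRs and function names are placeholders for illustration.

```python
import ipaddress


def pod_addresses(pod_cidrs: list, index: int) -> list:
    """Return one address per configured family, as a dual-stack pod would receive."""
    return [str(ipaddress.ip_network(cidr).network_address + index) for cidr in pod_cidrs]


def service_ip_families(policy: str, cluster_families: list) -> list:
    """IP families a Service gets under each ipFamilyPolicy value (simplified)."""
    dual = len(cluster_families) == 2
    if policy == "SingleStack":
        return cluster_families[:1]
    if policy == "PreferDualStack":
        return list(cluster_families) if dual else cluster_families[:1]
    if policy == "RequireDualStack":
        if not dual:
            raise ValueError("RequireDualStack needs a dual-stack cluster")
        return list(cluster_families)
    raise ValueError(f"unknown ipFamilyPolicy: {policy}")


print(pod_addresses(["10.244.1.0/24", "fd00:10:244:1::/64"], 5))
# ['10.244.1.5', 'fd00:10:244:1::5']
print(service_ip_families("PreferDualStack", ["IPv4", "IPv6"]))  # ['IPv4', 'IPv6']
print(service_ip_families("PreferDualStack", ["IPv4"]))          # ['IPv4']
```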

Storage Networking

Storage networking in Kubernetes is about how containerized applications reach the various kinds of storage they use. The most basic form of storage in Kubernetes is non-persistent, often called ephemeral storage. By default, each container has ephemeral storage backed by a temporary directory on the node that hosts the pod. It is easy to use but not durable, so it suits only temporary data.

For durable data, Kubernetes supports several kinds of persistent storage: file, block, or object storage services from cloud providers (such as Amazon S3), storage devices in on-premises data centers, and dedicated data services such as databases. Kubernetes abstracts these resources from the applications running in containers, so applications do not interact directly with the underlying storage media, which makes storage easier to manage and scale.

Kubernetes gives pods access to the appropriate storage through several mechanisms. PersistentVolumes (PVs) represent pieces of storage, and PersistentVolumeClaims (PVCs) are requests for storage that Kubernetes binds to matching PVs, provisioning new volumes dynamically when needed; pods then mount the claimed volumes. Storage systems integrate with Kubernetes through drivers that implement the Container Storage Interface (CSI).
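The binding step for pre-provisioned volumes can be approximated in Python: a claim is matched to the smallest unbound PV in the same storage class that has enough capacity and offers every access mode the claim asks for. This is a simplified model under assumptions: sizes are whole GiB, the class names `standard` and `nfs` are hypothetical, and the real controller also considers label selectors, volume modes, and node affinity. Dynamic provisioning, which kicks in when nothing matches and the StorageClass has a provisioner, is not modelled.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PersistentVolume:
    name: str
    capacity_gi: int
    access_modes: frozenset
    storage_class: str
    bound: bool = False


@dataclass
class PersistentVolumeClaim:
    request_gi: int
    access_modes: frozenset
    storage_class: str


def find_volume(claim: PersistentVolumeClaim, volumes: list) -> Optional[PersistentVolume]:
    """Pick the smallest unbound PV that satisfies the claim (simplified static binding)."""
    candidates = [
        pv for pv in volumes
        if not pv.bound
        and pv.storage_class == claim.storage_class
        and pv.capacity_gi >= claim.request_gi
        and claim.access_modes <= pv.access_modes  # PV must offer every requested mode
    ]
    return min(candidates, key=lambda pv: pv.capacity_gi, default=None)


volumes = [
    PersistentVolume("pv-small", 5, frozenset({"ReadWriteOnce"}), "standard"),
    PersistentVolume("pv-medium", 20, frozenset({"ReadWriteOnce"}), "standard"),
    PersistentVolume("pv-shared", 50, frozenset({"ReadWriteMany"}), "nfs"),
]
claim = PersistentVolumeClaim(10, frozenset({"ReadWriteOnce"}), "standard")
match = find_volume(claim, volumes)
print(match.name if match else None)  # pv-medium
```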

Troubleshooting Kubernetes Networking

When encountering network issues within a Kubernetes cluster, efficient troubleshooting is essential to maintain application performance and stability. Here are five key steps to troubleshoot Kubernetes networking problems:

- Verify Pod Connectivity

- Examine Service Reachability

- Inspect Network Policies

- Monitor Network Traffic

- Check DNS Resolution
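Two of these checks, DNS resolution and basic connectivity, can be scripted with Python's standard library and run from a debug pod inside the cluster. The sketch below is a starting point under assumptions: the helper names are hypothetical, a successful TCP handshake shows only that something is listening (not that the application is healthy), and a failure could come from a network policy, a missing endpoint, or a stopped process.

```python
import socket


def check_dns(name: str) -> list:
    """Resolve a name as an application in the pod would; raises socket.gaierror on failure."""
    infos = socket.getaddrinfo(name, None, proto=socket.IPPROTO_TCP)
    return sorted({info[4][0] for info in infos})


def check_tcp(host: str, port: int, timeout: float = 2.0) -> bool:
    """Try a TCP handshake to a pod IP, ClusterIP, or service name."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts, and DNS failures
        return False
```

For example, `check_dns("my-svc.my-namespace.svc.cluster.local")` followed by `check_tcp("my-svc.my-namespace", 80)`, where the service name, namespace, and port are placeholders, separates a DNS problem from a connectivity problem.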

CNI

The Container Network Interface (CNI) is a plugin framework that lets different network solutions integrate with Kubernetes clusters. CNI plugins provide the connectivity between pods and nodes within the cluster.

Administrators can choose from a wide array of CNI plugins to suit their networking requirements. Depending on the plugin, these handle tasks such as pod-to-pod communication, network segmentation, load balancing, and access control. Every CNI plugin follows the CNI specification, which keeps network operations consistent and standardized across environments.
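Under the CNI specification, a plugin is an executable that the container runtime invokes with environment variables such as `CNI_COMMAND` (for example `ADD`, `DEL`, `CHECK`, or `VERSION`), `CNI_NETNS`, and `CNI_IFNAME`, passing the network configuration as JSON on stdin and reading a JSON result from stdout. The Python skeleton below shows only that contract: it does not create interfaces or call an IPAM plugin, and the address it returns is a hard-coded placeholder. Treat it as a toy for reading the spec, not a working plugin.

```python
import json
import os
import sys

SUPPORTED_VERSIONS = ["0.4.0", "1.0.0"]


def handle(env: dict, stdin_text: str):
    """Dispatch on CNI_COMMAND and return a JSON-serialisable result (toy plugin)."""
    command = env.get("CNI_COMMAND")
    if command == "VERSION":
        return {"cniVersion": SUPPORTED_VERSIONS[-1], "supportedVersions": SUPPORTED_VERSIONS}
    config = json.loads(stdin_text)  # network configuration passed by the runtime
    if command == "ADD":
        # A real plugin would create an interface named CNI_IFNAME inside the network
        # namespace at CNI_NETNS and obtain an address from the IPAM plugin in config["ipam"].
        return {
            "cniVersion": config["cniVersion"],
            "interfaces": [{"name": env["CNI_IFNAME"], "sandbox": env["CNI_NETNS"]}],
            # Hypothetical, hard-coded address purely for illustration.
            "ips": [{"address": "10.244.1.5/24", "gateway": "10.244.1.1", "interface": 0}],
        }
    if command in ("DEL", "CHECK"):
        return None  # success: exit code 0 and no output
    raise ValueError(f"unsupported CNI_COMMAND: {command}")


if __name__ == "__main__" and "CNI_COMMAND" in os.environ:
    try:
        result = handle(dict(os.environ), sys.stdin.read())
    except Exception as exc:
        # CNI error format; codes of 100 and above are for plugin-specific errors.
        json.dump({"cniVersion": SUPPORTED_VERSIONS[-1], "code": 100, "msg": str(exc)}, sys.stdout)
        sys.exit(1)
    if result is not None:
        json.dump(result, sys.stdout)
```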

Because networking is delegated to pluggable components, Kubernetes can accommodate diverse network architectures and topologies, and users can adapt their networking setup to different cloud providers or on-premises infrastructure.

How Does Kubernetes Networking Work?

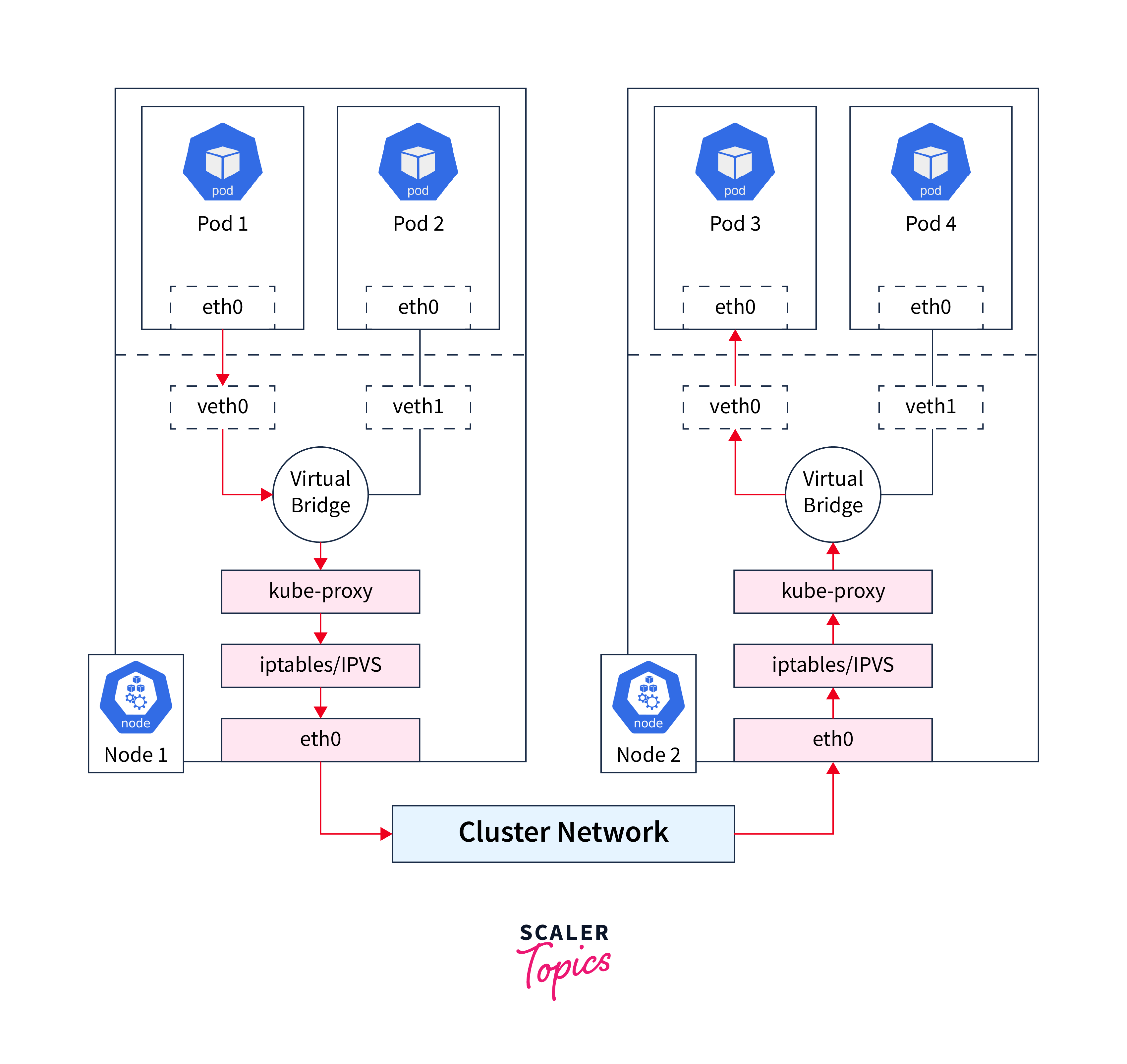

In the Kubernetes platform, various components, including Pods, containers, nodes, and applications, employ distinct networking methods to communicate effectively. Kubernetes networking encompasses container-to-container, Pod-to-Pod, Pod-to-service, and external-to-service communication.

Pod-to-Pod communication is the foundation of Kubernetes networking. Pods communicate without explicit links or port mapping: each Pod has its own IP address, and any Pod can reach Pods on any node using those IP addresses, without network address translation (NAT). Containers within the same Pod share a network namespace, so they reach each other through "localhost". Network policies, enforced by the network plugin, can restrict which Pods may communicate.

A significant challenge in Kubernetes networking is the interplay between internal (east-west) and external (north-south) traffic, because the cluster's internal network is isolated from the external network. External traffic can still flow between nodes and external physical or virtual machines, and Kubernetes offers several ways to bring it into the cluster:

- LoadBalancer: exposes a service externally, including to the internet. A network load balancer forwards external traffic to the service, and each service gets its own IP address.

- ClusterIP: the default Kubernetes service type, used for internal communication. External traffic can reach it only through a proxy, which can be useful for debugging services or displaying internal dashboards.

- NodePort: opens a port on every node (physical or virtual machine) and forwards traffic from that port to the service. It is commonly used for services that don't require continuous availability, such as demo applications.

- Ingress: acts as a router or controller that directs traffic to services via a load balancer. It is useful for exposing multiple services through the same IP address.

Another crucial aspect of Kubernetes networking is the Container Network Interface (CNI). CNI defines how the container runtime asks a network plugin to attach a Pod's network namespace to the cluster network, and CNI plugins provide Pod-to-Pod communication across nodes. Different CNI providers and plugins offer different sets of features and functionality. Because plugins are invoked as Pods are created and deleted, they configure network interfaces and allocate or release IP addresses dynamically during container provisioning.

FAQs

Q. What is Kubernetes networking, and why is it important?

A. Kubernetes networking refers to the system of communication and connectivity between various components within a Kubernetes cluster, such as Pods, containers, nodes, and services. It plays a vital role in enabling seamless data exchange, load balancing, and service discovery within the cluster. Properly configured networking ensures that applications function efficiently and securely in the Kubernetes environment.

Q. How does Pod-to-Pod communication work in Kubernetes?

A. In Kubernetes, Pod-to-Pod communication forms the foundation of networking. Pods communicate with each other without explicit links between them and without mapping container ports to host ports. Each Pod has its own IP address, and Pods can reach other Pods on any node in the cluster directly through those addresses, without NAT. Network policies, enforced by the network plugin, can restrict which Pods are allowed to communicate.

Q. What are the different methods of external traffic access in Kubernetes?

A. Kubernetes offers various methods to enable external traffic access to services within the cluster. These methods include:

- LoadBalancer: exposes a service externally, including to the internet, through a network load balancer, giving each service its own IP address.

- ClusterIP: the default Kubernetes service type, used for internal communication. External traffic can reach it only through a proxy, which is useful for debugging or displaying internal dashboards.

- NodePort: opens a port on every node (physical or virtual machine) and forwards traffic from that port to the service. NodePort is often used for services that don't require continuous availability, such as demo applications.

- Ingress: acts as a router or controller, routing traffic to services via a load balancer. It is useful when multiple services need to be exposed through the same IP address.

Conclusion

- Kubernetes networking is a fundamental aspect of the platform, enabling seamless communication and connectivity between components within the cluster, such as Pods, containers, and services.

- Pod-to-Pod communication forms the backbone of Kubernetes networking, allowing Pods to interact without explicit links or port mappings, and ensuring efficient data exchange across all nodes.

- External traffic access in Kubernetes is facilitated through various methods like LoadBalancer, ClusterIP, NodePort, and Ingress, providing flexible options for connecting services externally.

- Dual-stack mode in Kubernetes allows for the coexistence of IPv4 and IPv6 addresses, enhancing compatibility and adaptability in diverse networking environments.

- The Container Network Interface (CNI) plays a crucial role in integrating network solutions with Kubernetes clusters, managing communication and resources for Pods across nodes.

- By understanding Kubernetes networking and its diverse features, users can harness the platform's full potential, ensuring robust and scalable application deployments.