What is Kubernetes?

Overview

Kubernetes, often abbreviated as K8s, is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It was originally developed by Google and later donated to the Cloud Native Computing Foundation (CNCF). By abstracting the underlying infrastructure, it lets developers focus on application logic rather than on the details of the machines that run it.

Kubernetes manages the complex task of running and coordinating multiple containers within a cluster, enabling developers to deploy applications consistently across various environments while maintaining scalability and availability.

Benefits

The key advantages of Kubernetes are:

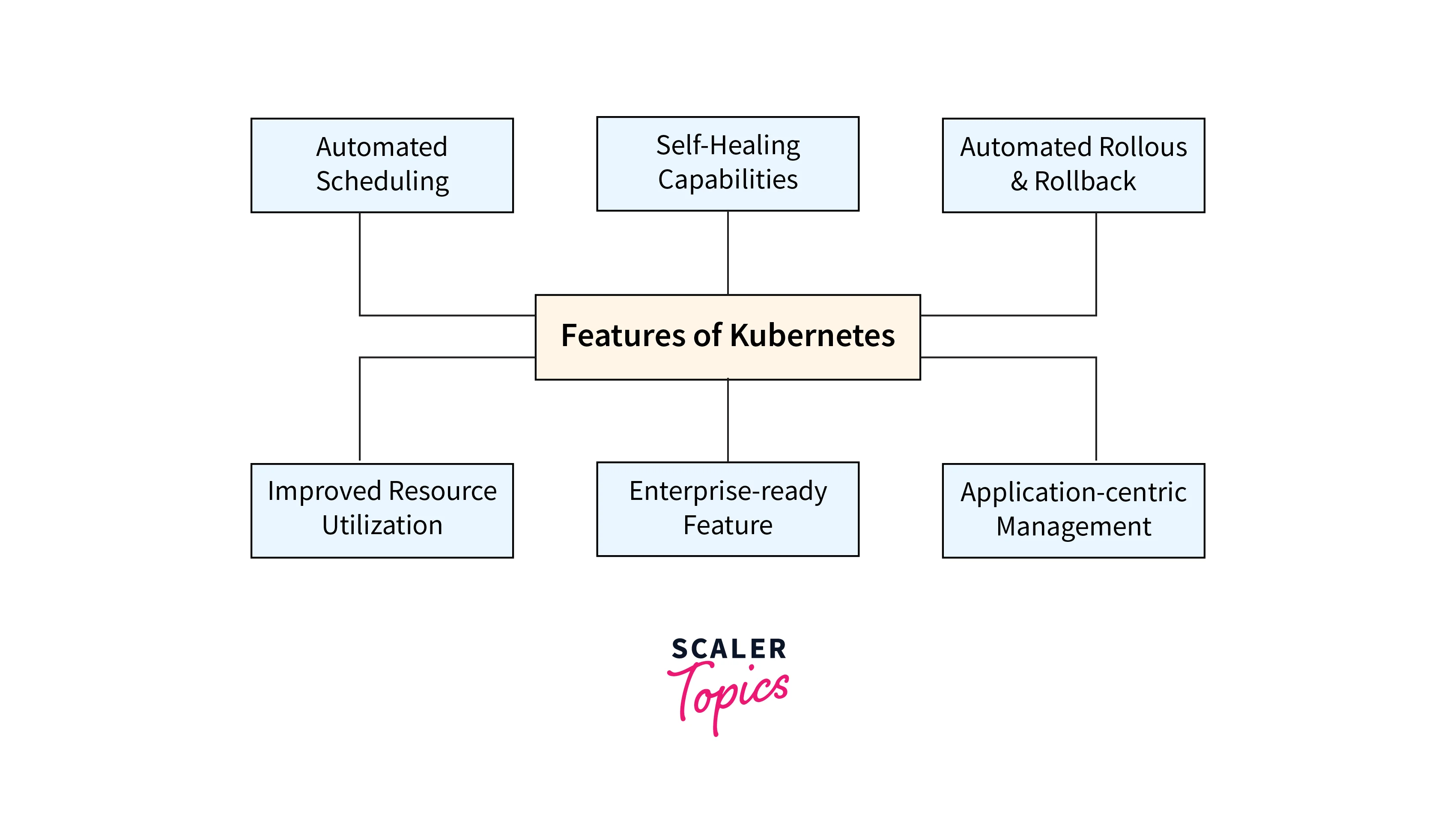

- Automated Orchestration: Kubernetes automates complex application deployment and scaling tasks, reducing manual intervention. It orchestrates containers from a shared configuration, which keeps deployments consistent and less error-prone.

- Scalability: With Kubernetes, applications can scale based on demand. Horizontal scaling adds or removes pod replicas, while vertical scaling adjusts the CPU and memory given to each pod, so performance holds up during traffic spikes.

- High Availability: Kubernetes supports high availability by distributing containers across nodes, monitoring their health, and automatically replacing failed instances. This minimizes downtime and maintains seamless operation.

- Resource Efficiency: Resource utilization is optimized through scheduling and bin-packing algorithms that place workloads according to their resource requests. This efficient use of computing resources translates to cost savings and better hardware utilization.

- Declarative Configuration: Applications are described declaratively using YAML or JSON files, allowing for easy replication and version control. This simplifies management and reduces configuration errors.

- Self-Healing: Kubernetes restarts failed containers and reschedules pods from failed nodes onto healthy ones. This self-healing capability minimizes manual intervention and enhances application reliability.

- Rolling Updates and Rollbacks: Application updates can be rolled out incrementally with rolling updates, which replace pods a few at a time to avoid downtime. If issues arise, Kubernetes enables quick rollbacks to previous versions, reducing risk.

- Load Balancing: Built-in load balancers distribute network traffic to containerized applications, ensuring optimal resource utilization and preventing overloading of individual instances.

- Secrets and Configuration Management: Kubernetes provides dedicated mechanisms for managing sensitive information, such as passwords and API keys, through Secrets. Keeping this data out of application code and container images supports security and compliance.
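To make the declarative configuration point concrete, here is a minimal sketch that builds a Deployment manifest as a Python dictionary and prints it as JSON. The application name `web`, the image `nginx:1.25`, and the replica count are illustrative assumptions, and the helper `deployment_manifest` is hypothetical rather than part of any Kubernetes library.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment manifest (hypothetical helper)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Desired number of identical pods.
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # Example values are assumptions chosen for illustration only.
    manifest = deployment_manifest("web", "nginx:1.25", replicas=3)
    print(json.dumps(manifest, indent=2))
```

Saving the printed JSON to a file and running `kubectl apply -f` on it is one way such a description could be submitted to a cluster. Because the file only states what should exist, it can be kept in version control and reapplied unchanged.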

Key Components of Kubernetes

Here are the key components that make up Kubernetes:

a. Nodes: These machines run containerized applications managed by Kubernetes.

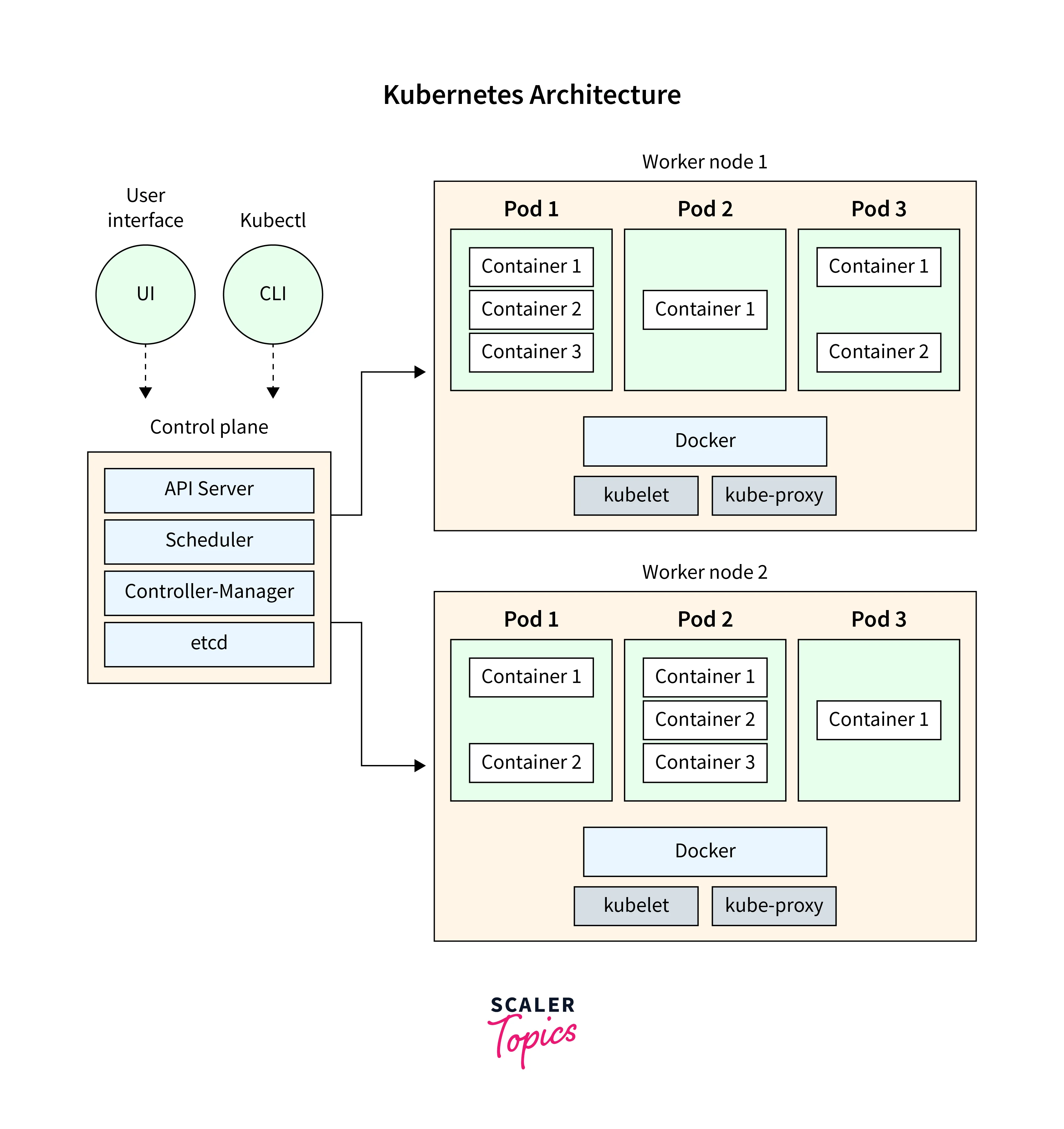

b. Master: It is the control plane of the Kubernetes cluster. It manages the overall cluster state, including scheduling, scaling, and monitoring.

c. API Server: It is a central component that exposes the Kubernetes API, allowing users and external tools to interact with the cluster.

d. Controller Manager: It runs the controllers that drive the current state of the system toward the desired state, handling tasks like replication, endpoints, and namespaces.

e. Scheduler: The scheduler is responsible for assigning pods to nodes based on resource requirements, policies, and constraints.

f. etcd: It serves as the persistent backing store for all cluster data, making it a critical component for maintaining cluster state.

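g. Kubelet: The kubelet is an agent that runs on each node, communicates with the control plane, and ensures that the containers described in each pod's specification are running.

h. Pod: A pod is the smallest deployable unit in Kubernetes. It represents a single instance of a running process in a cluster and can contain one or more containers.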
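The scheduler's job can be illustrated with a toy model. The sketch below is not the real kube-scheduler; it only assumes a two-step approach of filtering out nodes that lack capacity and then scoring the remainder. The `Node` and `PodRequest` classes, the `schedule` function, and the "most CPU left over" scoring rule are all hypothetical choices made for this example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millis: int   # unreserved CPU in millicores
    free_memory_mib: int   # unreserved memory in MiB


@dataclass
class PodRequest:
    name: str
    cpu_millis: int
    memory_mib: int


def schedule(pod: PodRequest, nodes: List[Node]) -> Optional[str]:
    """Pick a node for the pod, or return None if no node has room."""
    # Filter: keep only nodes with enough free CPU and memory.
    feasible = [
        n for n in nodes
        if n.free_cpu_millis >= pod.cpu_millis
        and n.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None
    # Score: prefer the node with the most CPU left after placement
    # (an assumed, simplistic spreading heuristic).
    best = max(feasible, key=lambda n: n.free_cpu_millis - pod.cpu_millis)
    # Bind: reserve the requested resources on the chosen node.
    best.free_cpu_millis -= pod.cpu_millis
    best.free_memory_mib -= pod.memory_mib
    return best.name


if __name__ == "__main__":
    nodes = [Node("node-a", 1000, 2048), Node("node-b", 2000, 4096)]
    pods = [
        PodRequest("api", 500, 512),
        PodRequest("db", 1500, 2048),
        PodRequest("cache", 1000, 1024),
    ]
    for pod in pods:
        print(pod.name, "->", schedule(pod, nodes))
```

A pod that fits nowhere gets `None` here, which loosely mirrors a pod that stays pending until capacity becomes available.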

Kubernetes Clusters

Kubernetes operates within clusters, which consist of interconnected machines, or nodes, that collectively run containerized applications. A cluster includes:

- Master Node: Hosts the Kubernetes control plane, responsible for managing and controlling the cluster's operations.

- Worker Nodes: Run application containers and are managed by the control plane.

- Control Plane Components: Include the API server, etcd, scheduler, and controller manager.

- Networking and Services: Enable load balancing and routing.

- Namespaces: Allow the partitioning of resources within a cluster, providing isolation and resource quotas for different teams or projects.

- ConfigMaps and Secrets: Handle configuration management. ConfigMaps store non-confidential configuration data, while Secrets store sensitive information like passwords and API keys, keeping both separate from your application code.
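As a rough illustration of how ConfigMaps and Secrets differ, the sketch below builds one manifest of each kind as Python dictionaries. The helper names `config_map` and `secret`, the object names, and the sample values are assumptions for this example. Values in a Secret's `data` field are base64-encoded, which is an encoding and not encryption, so access to Secrets still needs to be restricted.

```python
import base64
import json
from typing import Dict


def config_map(name: str, data: Dict[str, str]) -> dict:
    """Build a ConfigMap manifest holding plain-text settings."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name},
        "data": dict(data),
    }


def secret(name: str, data: Dict[str, str]) -> dict:
    """Build an Opaque Secret manifest with base64-encoded values."""
    encoded = {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in data.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": encoded,
    }


if __name__ == "__main__":
    # Sample names and values are illustrative assumptions.
    print(json.dumps(config_map("app-config", {"LOG_LEVEL": "info"}), indent=2))
    print(json.dumps(secret("db-credentials", {"username": "admin"}), indent=2))
```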

How does Kubernetes work?

Kubernetes operates by automating the deployment, scaling, and management of containerized applications through these key steps:

- Desired State Configuration: Define the desired state of your application using Kubernetes manifests, specifying containers, resources, and more.

- API Server: The Kubernetes API server exposes the API for interactions, while etcd stores the cluster's configuration data.

- Control Loop: Controllers monitor and reconcile the differences between desired and actual states, ensuring the cluster aligns with the desired state.

- Scheduler: The scheduler assigns pods to nodes based on resource availability and application requirements.

- Worker Nodes and Pods: Worker nodes run pods, the smallest deployable units, each containing one or more containers that share network and storage.

- Services and Networking: Kubernetes provides load balancing, service discovery, and routing for effective communication between pods.

- Scaling and Self-Healing: Kubernetes scales workloads automatically and self-heals by restarting or replacing failed containers.
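The control loop in the steps above can be sketched as a single reconciliation pass that compares a desired replica count with the pods actually running and returns the actions needed to close the gap. This is a simplified model, not controller code from Kubernetes; the function `reconcile`, the `web-N` naming scheme, and the action strings are assumptions for illustration.

```python
from typing import List


def reconcile(desired_replicas: int, running_pods: List[str],
              name_prefix: str = "web") -> List[str]:
    """Return the actions that move the actual state toward the desired state."""
    actions: List[str] = []
    missing = desired_replicas - len(running_pods)
    if missing > 0:
        # Too few pods: create replacements using the first unused names.
        existing = set(running_pods)
        index = 0
        while missing > 0:
            candidate = f"{name_prefix}-{index}"
            if candidate not in existing:
                actions.append(f"create {candidate}")
                existing.add(candidate)
                missing -= 1
            index += 1
    elif missing < 0:
        # Too many pods: delete the surplus beyond the desired count.
        for pod in running_pods[desired_replicas:]:
            actions.append(f"delete {pod}")
    return actions


if __name__ == "__main__":
    # A pod has failed, leaving one of three desired replicas running.
    print(reconcile(3, ["web-0"]))
    # The desired count was lowered from three to one.
    print(reconcile(1, ["web-0", "web-1", "web-2"]))
    # Actual state already matches desired state, so nothing happens.
    print(reconcile(2, ["web-0", "web-1"]))
```

Running such a pass repeatedly is what makes both scaling and self-healing fall out of the same mechanism: a failed pod simply shows up as a shortfall on the next pass.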

Kubernetes and other projects

Here's a brief overview of Kubernetes and some related projects, along with links to their respective articles or sources for more detailed information:

i. Kubernetes: It provides a powerful and flexible solution for managing complex application architectures in dynamic environments.

- Learn more about Kubernetes: https://kubernetes.io/docs/home/

ii. Istio: Istio is a service mesh that enhances observability, security, and traffic management for microservices-based applications running on Kubernetes. It allows fine-grained control over network traffic and enables advanced features like load balancing, circuit breaking, and more.

- Learn more about Istio: https://istio.io/

iii. Prometheus: Prometheus is an open-source monitoring and alerting toolkit that integrates with Kubernetes. It collects metrics from applications and infrastructure and provides insights into application performance, allowing proactive troubleshooting and optimization.

- Learn more about Prometheus: https://prometheus.io/docs/introduction/overview/

iv. Helm: Helm is a package manager for Kubernetes that simplifies application deployment and management. It uses charts, which are packages of pre-configured Kubernetes resources, to streamline the process of defining, installing, and upgrading applications.

- Learn more about Helm: https://www.digitalocean.com/community/tutorials/an-introduction-to-helm-the-package-manager-for-kubernetes

v. Knative: Knative is an open-source platform built on Kubernetes that simplifies deploying and managing serverless workloads. It provides abstractions for event-driven and scalable applications, enabling developers to focus on writing code without dealing with infrastructure complexities.

- Learn more about Knative: https://www.ibm.com/topics/knative

vi. OpenShift: OpenShift is a Kubernetes-based container platform that extends Kubernetes with additional features and tools. It offers developer-friendly workflows, enhanced security features, and integrated developer tools to simplify application deployment and management.

- Learn more about OpenShift: https://docs.openshift.com/container-platform/4.10/getting_started/openshift-overview.html

vii. Spinnaker: Spinnaker is an open-source continuous delivery platform that supports multi-cloud deployments, including Kubernetes. It automates application deployment pipelines, enabling organizations to release software more frequently and reliably.

- Learn more about Spinnaker: https://spinnaker.io/

How to Use Kubernetes in Production

Using Kubernetes in production requires careful planning and implementation.

- Infrastructure Setup: Choose a suitable environment and set up a cluster of machines for Kubernetes.

- Cluster Installation: Install Kubernetes on nodes and configure networking and security.

- Resource Planning: Determine resource requirements and configure resource requests and limits for pods.

- Containerization: Dockerize applications and create Kubernetes manifests for deployment.

- Monitoring and Observability: Set up monitoring tools like Prometheus and Grafana for performance tracking.

- Scaling and Autoscaling: Implement Horizontal Pod Autoscaling for dynamic scaling based on demand.

- Backup and Disaster Recovery: Back up etcd regularly and establish a disaster recovery plan.
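For the resource planning step, it can help to sanity-check requests against limits before applying a manifest. The sketch below parses CPU quantities such as `500m` and memory quantities such as `256Mi`, then reports any request that exceeds its limit. It is a simplified illustration: only the `m` CPU suffix and the `Ki`, `Mi`, and `Gi` memory suffixes are handled, and the function names and sample values are assumptions.

```python
from typing import Dict, List

# Binary memory suffixes only; other suffixes are left out to keep the sketch small.
_MEMORY_FACTORS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def parse_cpu(quantity: str) -> float:
    """Convert a CPU quantity such as "500m" (millicores) or "2" into cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


def parse_memory(quantity: str) -> int:
    """Convert a memory quantity such as "256Mi" into bytes."""
    for suffix, factor in _MEMORY_FACTORS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # no suffix: plain bytes


def check_resources(resources: Dict[str, Dict[str, str]]) -> List[str]:
    """Report every resource whose request is larger than its limit."""
    parsers = {"cpu": parse_cpu, "memory": parse_memory}
    requests = resources.get("requests", {})
    limits = resources.get("limits", {})
    problems: List[str] = []
    for key, parse in parsers.items():
        if key in requests and key in limits:
            if parse(requests[key]) > parse(limits[key]):
                problems.append(
                    f"{key} request {requests[key]} exceeds limit {limits[key]}"
                )
    return problems


if __name__ == "__main__":
    # Sample values are illustrative assumptions.
    good = {"requests": {"cpu": "250m", "memory": "128Mi"},
            "limits": {"cpu": "500m", "memory": "256Mi"}}
    bad = {"requests": {"cpu": "2", "memory": "1Gi"},
           "limits": {"cpu": "500m", "memory": "512Mi"}}
    print(check_resources(good))
    print(check_resources(bad))
```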

FAQs

1. What is Kubernetes used for? Kubernetes is used to manage the deployment, scaling, and operation of containerized applications across a cluster of machines.

2. Is Kubernetes only for large-scale applications? No, Kubernetes can be used for applications of varying sizes. While it's often associated with large-scale applications, its benefits can be realized even for smaller projects.

3. What are pods in Kubernetes? Pods are the smallest deployable units in Kubernetes, containing one or more containers that share the same network namespace and storage.

4. What is a Kubernetes namespace? A Kubernetes namespace provides a way to partition resources within a cluster, allowing multiple virtual clusters to coexist and ensuring resource isolation.

5. How does Kubernetes handle application scaling? Kubernetes can automatically scale applications using Horizontal Pod Autoscaling (HPA) based on factors like CPU utilization or custom metrics.
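The scaling decision behind Horizontal Pod Autoscaling can be approximated with a short calculation: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up. The sketch below follows that documented formula in simplified form. The function name, the replica bounds, and the sample numbers are assumptions, and details such as pod readiness and stabilization windows are left out.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Estimate the replica count an autoscaler would aim for."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # Four replicas averaging 90% CPU against a 60% target: scale out.
    print(desired_replicas(4, 90, 60))
    # Four replicas averaging 30% CPU against a 60% target: scale in.
    print(desired_replicas(4, 30, 60))
    # 63% against a 60% target is within tolerance: no change.
    print(desired_replicas(4, 63, 60))
```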

Conclusion

- Kubernetes is an open-source container orchestration platform used to manage containerized applications across a cluster of machines.

- It automates the deployment, scaling, and management of applications, abstracting away infrastructure complexities.

- Kubernetes consists of a control plane (master node) and worker nodes that run containers inside pods.

- Key concepts include pods, services, controllers (e.g., deployments), namespaces, and networking solutions.

- Kubernetes enhances application scalability, self-healing, load balancing, and resource utilization.

- Integration with monitoring tools like Prometheus and Grafana ensures observability.

- Kubernetes requires careful planning, security measures, and regular maintenance for successful production use.