The Origin of CNN - LeNet

Overview

The LeNet architecture, developed by Yann LeCun and colleagues at Bell Labs in the late 1980s, is widely regarded as one of the first convolutional neural networks (CNNs) trained end to end with the backpropagation algorithm. LeNet was used for postal automation tasks, such as reading zip codes on mail. It consisted of convolutional, pooling, and fully connected layers. Its best-known version, LeNet-5, achieved high accuracy on the MNIST dataset of handwritten digits.

Introduction

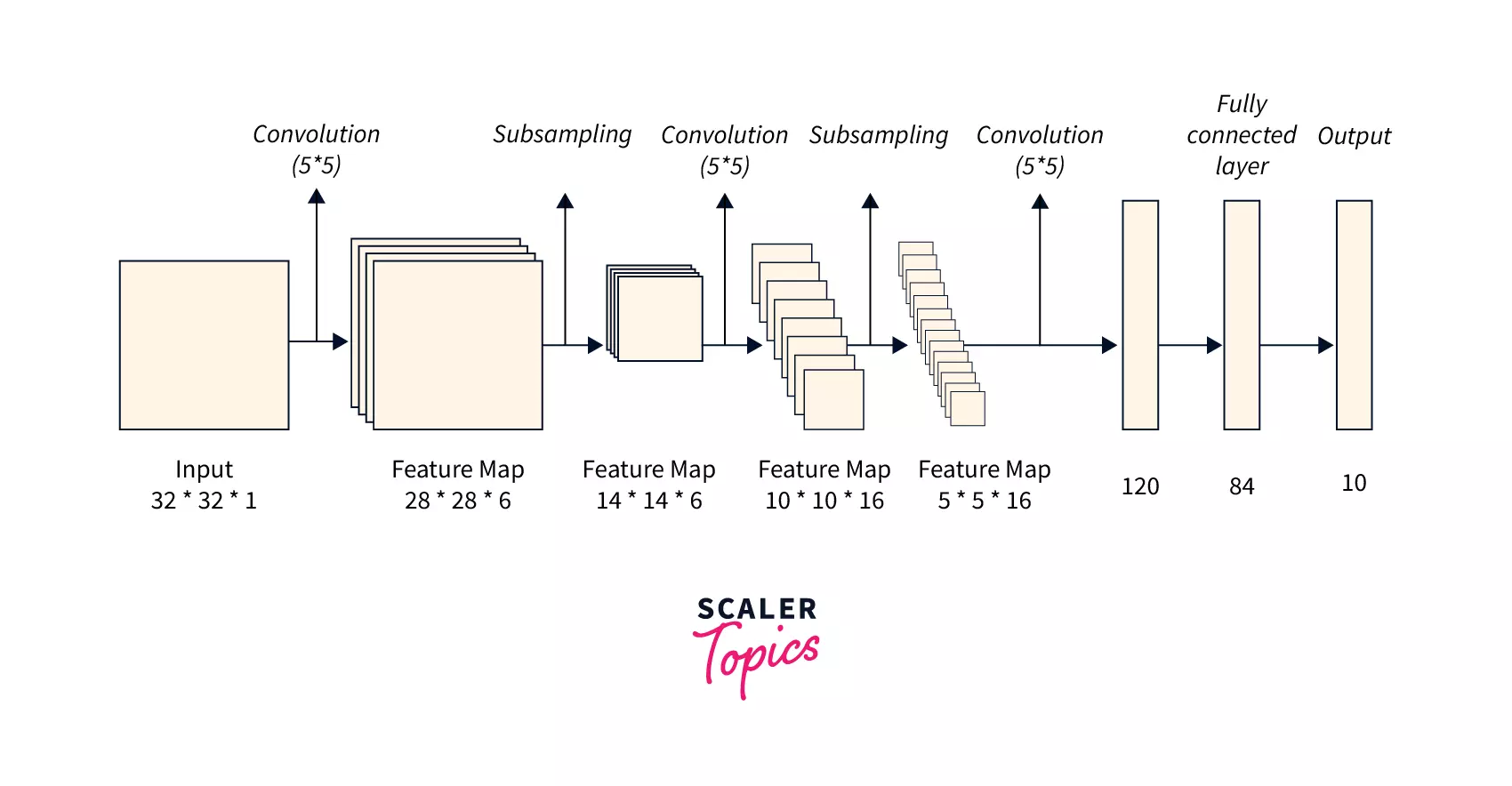

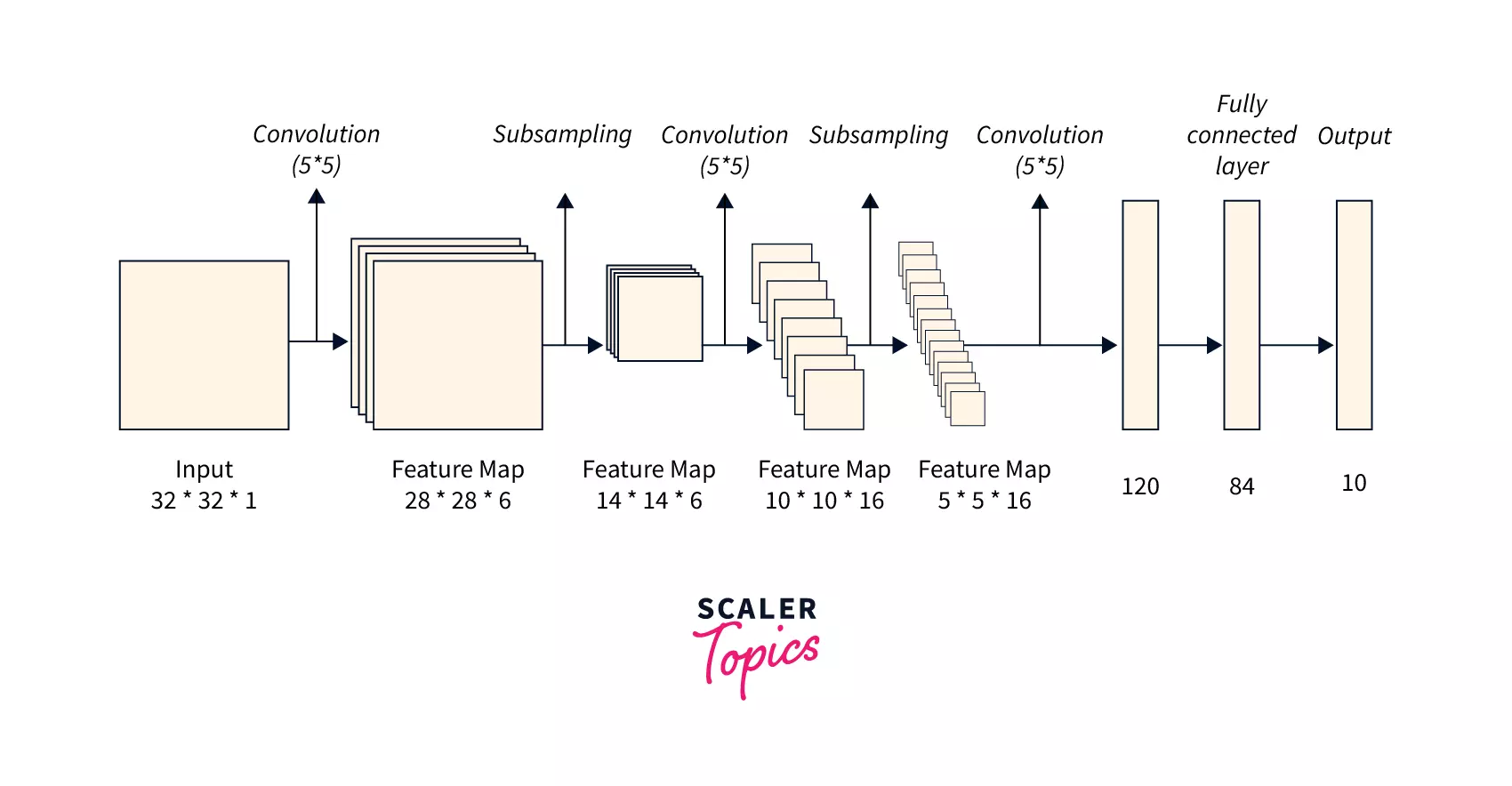

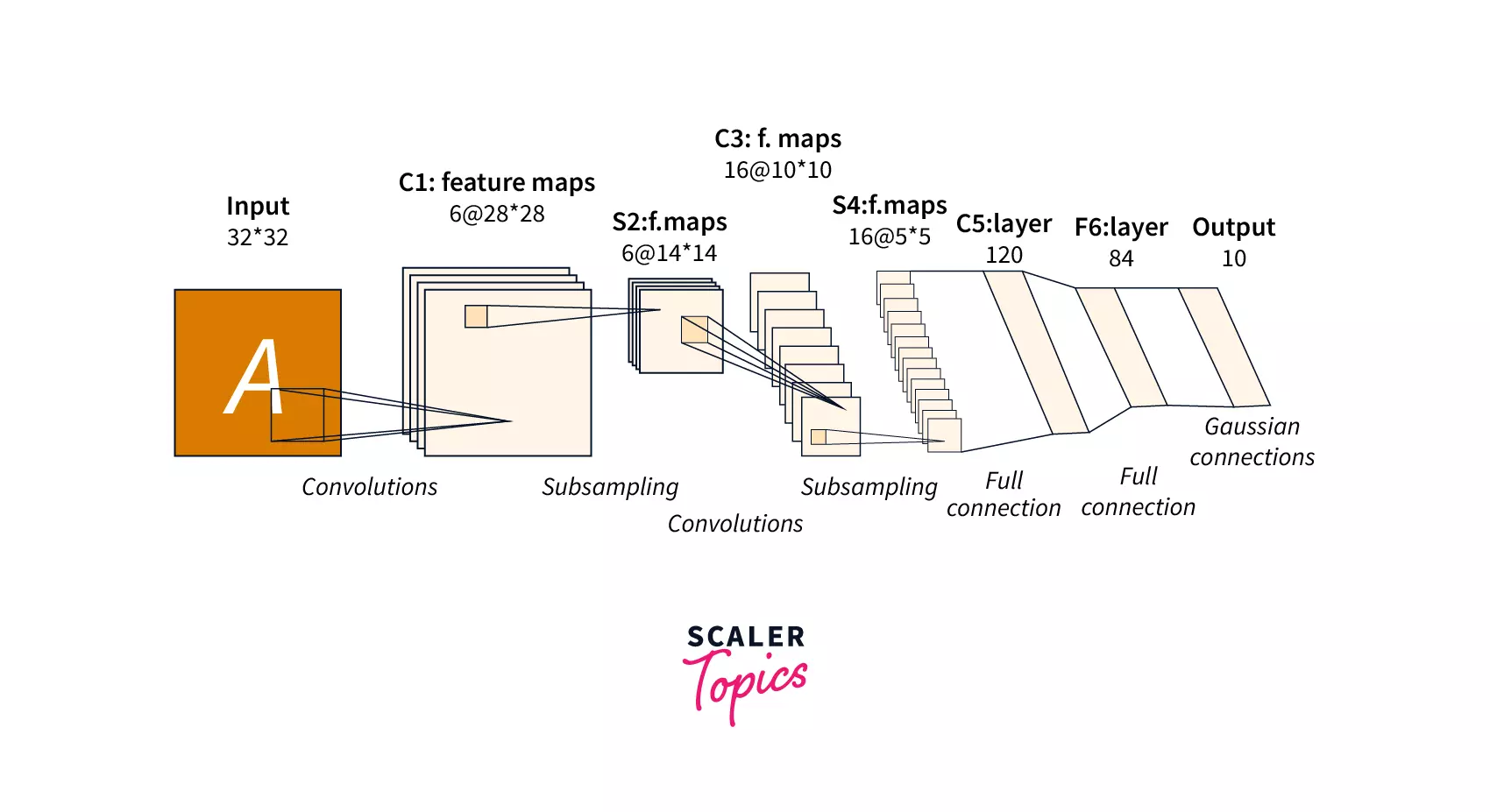

LeNet is a family of convolutional neural network (CNN) architectures developed by Yann LeCun and colleagues; its best-known version, LeNet-5, was published in 1998. It was designed to recognize handwritten digits and was one of the first successful applications of CNNs to this task. The LeNet architecture consists of several convolutional and pooling layers, followed by fully connected layers. The convolutional layers learn features from the input image, while the pooling layers reduce the spatial dimensions of the feature maps. The fully connected layers perform the classification. LeNet-5 comprises two sets of convolutional and pooling layers, followed by three fully connected layers, and the output of the final fully connected layer produces the classification. LeNet-5 was trained on the MNIST dataset of handwritten digits and achieved high accuracy in recognizing them.
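The feature-map sizes in LeNet-5 follow from the usual output-size rule for a sliding window, out = ⌊(n + 2p − k) / s⌋ + 1, where n is the input size, k the window size, s the stride and p the padding. The Python sketch below traces them under the commonly cited LeNet-5 settings, which are assumptions here rather than details given in this article: a 32×32 input, 5×5 convolutions with stride 1 and no padding, 2×2 pooling with stride 2, and 6, 16 and 120 feature maps. The function names are hypothetical.

```python
def output_size(n: int, k: int, s: int = 1, p: int = 0) -> int:
    """Spatial size after a k x k window with stride s and padding p."""
    return (n + 2 * p - k) // s + 1


def trace_lenet5(n: int = 32) -> list[tuple[str, int, int]]:
    """Return (layer name, spatial size, channels) for each LeNet-5 stage."""
    stages = []
    n = output_size(n, k=5)          # C1: 6 maps, 5x5 convolution
    stages.append(("C1 conv 5x5", n, 6))
    n = output_size(n, k=2, s=2)     # S2: 2x2 sub-sampling
    stages.append(("S2 pool 2x2", n, 6))
    n = output_size(n, k=5)          # C3: 16 maps, 5x5 convolution
    stages.append(("C3 conv 5x5", n, 16))
    n = output_size(n, k=2, s=2)     # S4: 2x2 sub-sampling
    stages.append(("S4 pool 2x2", n, 16))
    n = output_size(n, k=5)          # C5: 5x5 convolution on a 5x5 map
    stages.append(("C5 conv 5x5", n, 120))
    return stages


if __name__ == "__main__":
    for name, size, channels in trace_lenet5():
        print(f"{name}: {size}x{size}x{channels}")
```

Running it gives 28×28, 14×14, 10×10, 5×5 and finally 1×1 maps. Because the last convolution (C5) reduces each 5×5 input to a single value, its 120 units behave like a fully connected layer, which is why LeNet-5 is often described as having three fully connected layers of 120, 84 and 10 units.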

What is LeNet?

LeNet is a convolutional neural network (CNN) architecture. It was one of the first successful applications of CNNs for recognizing handwritten digits. At the time, LeNet was considered a breakthrough in machine learning because it achieved high levels of accuracy in recognizing digits, despite the variability in handwriting.

In LeNet, convolutional layers extract features from the input image, pooling layers shrink the resulting feature maps, and fully connected layers at the end of the network perform the classification.

LeNet was a significant step forward in the development of CNNs and is considered one of the pioneering architectures in the field. It served as a foundation for many later developments and improvements in CNN architectures, and its basic design principles are still used in many modern CNNs.

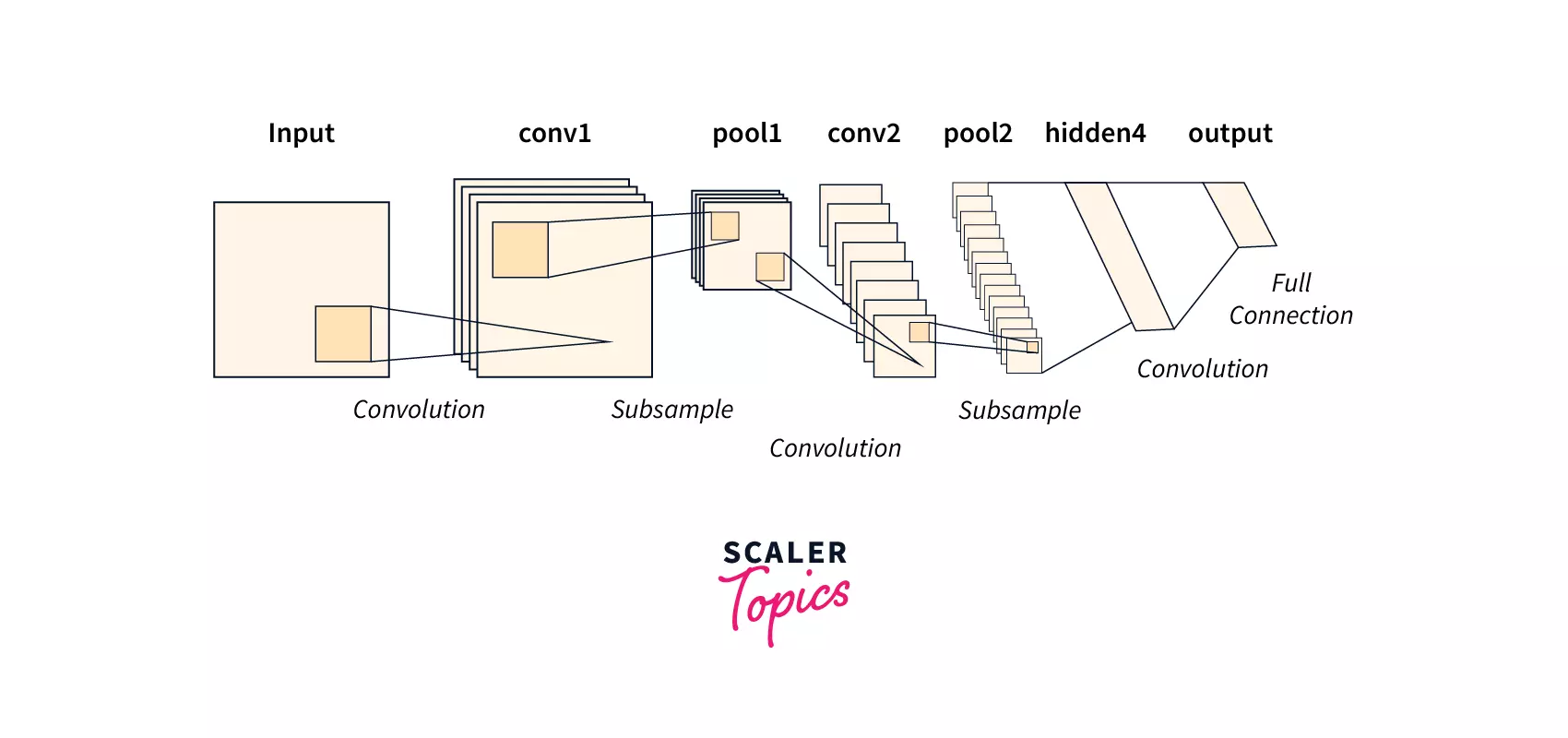

Structure of the LeNet Network

LeNet consists of several layers: convolutional, pooling, and fully connected. The layers are connected in a feedforward manner, meaning that the output of one layer is used as the input to the next layer.

The LeNet architecture consists of the following layers:

- Input layer

- Convolutional layers

- Pooling layers

- Fully connected layers

- Output layer

Input Layer

- In a Convolutional Neural Network (CNN), the input layer is responsible for receiving the input data and passing it along to the next layers in the network.

- In LeNet, the input layer is a grid of neurons, with each neuron representing one pixel of a grayscale input image. In LeNet-5 this grid is 32×32, slightly larger than the 28×28 MNIST digits. The input layer is followed by convolutional layers, which apply filters to the input data and extract features from it.

- After the convolutional and pooling layers, the extracted features are passed to fully connected layers, which process them and make predictions.

- Overall, the input layer in LeNet and any CNN plays a crucial role in the network by providing the raw data that the network uses to learn and make predictions.
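The classic LeNet-5 input layer is a 32×32 grid, while MNIST images are 28×28. One common way to reconcile the two, sketched here with NumPy, is to zero-pad two pixels on every side and add a channel axis so that each pixel maps to one input unit. The function name `pad_to_lenet_input` is hypothetical, and the random batch stands in for real MNIST data.

```python
import numpy as np


def pad_to_lenet_input(images: np.ndarray) -> np.ndarray:
    """Zero-pad a batch of 28x28 images to 32x32 and add a channel axis.

    images: array of shape (batch, 28, 28)
    returns: float32 array of shape (batch, 32, 32, 1)
    """
    # Two zero pixels on each side of the height and width axes.
    padded = np.pad(images, ((0, 0), (2, 2), (2, 2)), mode="constant")
    return padded.astype("float32")[..., np.newaxis]


if __name__ == "__main__":
    fake_batch = np.random.randint(0, 256, size=(4, 28, 28), dtype=np.uint8)
    x = pad_to_lenet_input(fake_batch)
    print(x.shape)  # (4, 32, 32, 1)
```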

The Architecture of LeNet

LeNet's architecture is a stack of layers that process the input image in sequence and produce a prediction from it.

LeNet consists of the following layers:

- Input layer: The input layer is the first layer of the network, which receives the input data, such as images, and passes it along to the next layers in the network.

- Convolutional layers: The convolutional layers apply filters to the input data and extract features from it. LeNet has two convolutional layers responsible for learning features from the input image.

- Pooling layers: The pooling layers downsample the convolutional layers' output, reducing the data's spatial dimensions and increasing invariance to small translations in the input. LeNet has two pooling layers that are used to reduce the spatial dimensions of the feature maps.

- Fully connected layers: The fully connected layers process the features extracted by the convolutional and pooling layers and make predictions based on them. LeNet has three fully connected layers that are used for classification.

- Output layer: The output layer produces the model's final prediction from the output of the fully connected layers.

Overall, the architecture of LeNet is designed to process image data and make predictions based on the extracted features. The network uses a combination of convolutional, pooling, and fully connected layers to analyze the input data and make informed decisions based on it.
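Counting trainable parameters shows how the layers divide the work: a convolution with k×k kernels has (k·k·C_in + 1)·C_out weights and biases, and a dense layer has (n_in + 1)·n_out. The sketch assumes the variant common in modern reimplementations, with every second-layer filter connected to all six first-layer maps and parameter-free pooling; the original paper's sparse connections between these layers and its trainable sub-sampling coefficients give a somewhat different total. The layer names are hypothetical.

```python
def conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights plus one bias per output channel for a k x k convolution."""
    return (k * k * c_in + 1) * c_out


def dense_params(n_in: int, n_out: int) -> int:
    """Weights plus one bias per output unit for a fully connected layer."""
    return (n_in + 1) * n_out


layers = {
    "conv1 (5x5, 1->6)": conv_params(5, 1, 6),
    "conv2 (5x5, 6->16)": conv_params(5, 6, 16),
    "fc1 (400->120)": dense_params(16 * 5 * 5, 120),  # flattened 5x5x16 maps
    "fc2 (120->84)": dense_params(120, 84),
    "output (84->10)": dense_params(84, 10),
}

if __name__ == "__main__":
    for name, count in layers.items():
        print(f"{name}: {count}")
    print("total:", sum(layers.values()))
```

The total comes to 61,706, and nearly 80% of it sits in the first fully connected layer (48,120 parameters), which illustrates why shrinking the feature maps to 5×5 before flattening matters.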

Convolutional Layers

The convolutional layers in LeNet apply filters to the input data and extract features from it. Each layer consists of filters that scan over its input and produce a set of feature maps as output. In LeNet, each convolutional layer is followed by a pooling layer, which downsamples the convolutional output and reduces the spatial dimensions of the data. Combining convolutional and pooling layers allows LeNet to learn hierarchical representations of the input data and extract useful features for making predictions.
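The hierarchy can be quantified by the receptive field of each layer, that is, the size of the input patch that a single unit depends on. The sketch below uses the standard recurrence r_out = r_in + (k − 1)·j_in and j_out = j_in·s, where k is the kernel size, s the stride and j the cumulative stride. The layer list assumes LeNet-5's 5×5 convolutions (stride 1) and 2×2 pooling (stride 2); the names are hypothetical.

```python
def receptive_fields(layers: list[tuple[str, int, int]]) -> list[tuple[str, int]]:
    """Receptive field size after each (name, kernel, stride) layer."""
    r, j = 1, 1  # receptive field and cumulative stride at the input
    result = []
    for name, k, s in layers:
        r = r + (k - 1) * j  # window widens by (k - 1) steps of size j
        j = j * s            # strides compound from layer to layer
        result.append((name, r))
    return result


LENET_STACK = [
    ("conv1 5x5", 5, 1),
    ("pool1 2x2", 2, 2),
    ("conv2 5x5", 5, 1),
    ("pool2 2x2", 2, 2),
]

if __name__ == "__main__":
    for name, r in receptive_fields(LENET_STACK):
        print(f"{name}: each unit sees a {r}x{r} input patch")
```

The receptive field grows from 5×5 after the first convolution to 16×16 after the second pooling layer, so later units can combine small features such as edges into larger shapes.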

Sub-sampling Layers

In LeNet, the sub-sampling layers are also known as pooling layers. These layers downsample the output of the convolutional layers, reducing the spatial dimensions of the data and increasing invariance to small translations in the input.

There are two pooling layers in LeNet. Each one slides a small window over the previous layer's output and summarizes every window with a single value, producing a downsampled version of the data. The pooling windows are much smaller than the convolutional filters (2×2 versus 5×5 in LeNet-5) and are applied to non-overlapping regions of the input, so each pooling layer halves the height and width of its input.
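The original LeNet-5 paper describes its sub-sampling units differently from today's max pooling: the four inputs in each 2×2 window are added, the sum is multiplied by a trainable coefficient, a trainable bias is added, and the result goes through a sigmoidal function. The NumPy sketch below follows that description for a single feature map; using the logistic sigmoid and passing the coefficient and bias as plain arguments (rather than learned values) are simplifying assumptions, and the function name is hypothetical.

```python
import numpy as np


def lenet_subsample(feature_map: np.ndarray, coeff: float, bias: float) -> np.ndarray:
    """LeNet-5-style 2x2 sub-sampling of one (H, W) map with even H and W."""
    h, w = feature_map.shape
    # Group pixels into non-overlapping 2x2 blocks and add the four values.
    blocks = feature_map.reshape(h // 2, 2, w // 2, 2)
    pooled = blocks.sum(axis=(1, 3))
    # Scale by the coefficient, add the bias, then squash with a sigmoid.
    return 1.0 / (1.0 + np.exp(-(coeff * pooled + bias)))


if __name__ == "__main__":
    fmap = np.arange(16, dtype=float).reshape(4, 4)
    print(lenet_subsample(fmap, coeff=0.1, bias=-1.0).shape)  # (2, 2)
```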

The pooling layers in LeNet serve several important functions:

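- They reduce the computational cost of the network by shrinking the feature maps, which means fewer operations in later layers and fewer weights in the fully connected layers that follow. The pooling layers themselves have few or no trainable parameters.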

- They increase invariance to small translations in the input, making the network more robust to small changes in the position of the objects in the input data.

- By shrinking the feature maps, they let each unit in later layers respond to a larger region of the input. This supports hierarchical representations of the input data, with the lower layers learning basic features such as edges and corners and the higher layers learning more complex features such as shapes and patterns.
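The translation-invariance point can be illustrated with a toy NumPy example (the 4×4 feature maps are made up): when a strong activation moves by one pixel but stays inside the same 2×2 window, the max-pooled output is unchanged, whereas a shift into a neighboring window does change it. The invariance is therefore only partial and limited to small shifts.

```python
import numpy as np


def max_pool_2x2(fmap: np.ndarray) -> np.ndarray:
    """Non-overlapping 2x2 max pooling of an (H, W) map with even H and W."""
    h, w = fmap.shape
    return fmap.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))


original = np.zeros((4, 4))
original[0, 0] = 1.0   # activation in the top-left pooling window
shifted = np.zeros((4, 4))
shifted[1, 1] = 1.0    # moved one pixel, still in the same window
crossed = np.zeros((4, 4))
crossed[0, 2] = 1.0    # moved two pixels, into the next window

if __name__ == "__main__":
    print(np.array_equal(max_pool_2x2(original), max_pool_2x2(shifted)))  # True
    print(np.array_equal(max_pool_2x2(original), max_pool_2x2(crossed)))  # False
```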


Implementation of LeNet

Convolutional Layers

- The code defines two convolutional layers, conv1 and conv2, which apply a convolution operation to the input data using a set of filters (W1 and W2). The convolution operation extracts features from the input data by sliding the filters over the input and computing a dot product between the filter weights and the input values. The biases (b1 and b2) are added to the output of the convolution operation.

- The code applies a ReLU activation function to the output of each convolutional layer, which introduces non-linearity to the model.
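The operation described above can be sketched in NumPy as follows. This is an illustrative reimplementation, not the code the text refers to; it assumes channels-last arrays, a stride-1 "valid" convolution (no padding), filters shaped (k, k, C_in, C_out) such as W1, and one bias per output channel such as b1. As in most deep-learning libraries, the filter is not flipped, so strictly speaking this computes a cross-correlation. The function name is hypothetical.

```python
import numpy as np


def conv2d_relu(x: np.ndarray, W: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Valid, stride-1 convolution followed by ReLU.

    x: input of shape (H, W, C_in)
    W: filters of shape (k, k, C_in, C_out)
    b: biases of shape (C_out,)
    returns: feature maps of shape (H - k + 1, W - k + 1, C_out)
    """
    k = W.shape[0]
    h_out = x.shape[0] - k + 1
    w_out = x.shape[1] - k + 1
    out = np.empty((h_out, w_out, W.shape[3]))
    for i in range(h_out):
        for j in range(w_out):
            patch = x[i:i + k, j:j + k, :]  # (k, k, C_in) window
            # Dot product of the patch with every filter at once.
            out[i, j, :] = np.tensordot(patch, W, axes=([0, 1, 2], [0, 1, 2]))
    out += b                     # add one bias per output channel
    return np.maximum(out, 0.0)  # ReLU non-linearity


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.random((32, 32, 1))
    W1 = rng.normal(scale=0.1, size=(5, 5, 1, 6))
    b1 = np.zeros(6)
    conv1 = conv2d_relu(image, W1, b1)
    print(conv1.shape)  # (28, 28, 6)
```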

Sub-sampling Layers

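- The code defines two pooling layers, pool1 and pool2, which reduce the size of the output of the convolutional layers by applying a max pooling operation. The max pooling operation selects the largest value in each pooling window, keeping the strongest response in each region and discarding the rest.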
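A matching NumPy sketch of a non-overlapping max pooling layer such as pool1 follows. It is an illustration rather than the code the text describes, and it assumes a 2×2 window with stride 2 (the usual LeNet-5 setting) and channels-last feature maps whose height and width are divisible by the window size; the function name `max_pool` is hypothetical.

```python
import numpy as np


def max_pool(x: np.ndarray, size: int = 2) -> np.ndarray:
    """Non-overlapping max pooling over (H, W, C) feature maps.

    Each channel is pooled independently; H and W must be divisible by size.
    """
    h, w, c = x.shape
    windows = x.reshape(h // size, size, w // size, size, c)
    return windows.max(axis=(1, 3))  # keep the largest value per window


if __name__ == "__main__":
    conv1_out = np.random.default_rng(0).random((28, 28, 6))
    pool1 = max_pool(conv1_out)
    print(pool1.shape)  # (14, 14, 6)
```

The layer has no trainable weights; here it turns a 28×28×6 output from conv1 into 14×14×6 maps.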

Implementing LeNet with TensorFlow

Load MNIST

- The command "pip install tensorflow" installs the TensorFlow library.

- Next, you can use the Keras API bundled with TensorFlow to load the MNIST dataset by calling the load_data() function from keras.datasets.mnist. This function returns two tuples, (x_train, y_train) and (x_test, y_test), which contain the training data (60,000 images) and the test data (10,000 images), respectively.

Preprocess the Dataset

- You can then preprocess the dataset by normalizing the pixel values and preparing a separate validation split from the original training split. To normalize the pixel values, divide each pixel value by 255.0, which scales the values to the range [0, 1].

- To prepare a separate validation split, use the train_test_split function from sklearn.model_selection. This function splits the training data into two datasets: training and validation sets.
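The loading and preprocessing steps can be combined into a short script. This is a sketch under a few assumptions: the helper name `preprocess`, the 10% validation fraction and the random seed are arbitrary choices, and a trailing channel axis is added because Keras convolution layers expect inputs shaped (height, width, channels). Loading MNIST is kept under the `__main__` guard because `load_data()` downloads the dataset on first use.

```python
import numpy as np
from sklearn.model_selection import train_test_split


def preprocess(x_train, y_train, x_test, val_fraction=0.1, seed=42):
    """Scale pixels to [0, 1], add a channel axis and split off a validation set."""
    # Dividing uint8 pixel values by 255.0 maps them into the range [0, 1].
    x_train = (x_train / 255.0).astype("float32")[..., np.newaxis]
    x_test = (x_test / 255.0).astype("float32")[..., np.newaxis]
    # Hold out part of the original training split as a validation set.
    x_train, x_val, y_train, y_val = train_test_split(
        x_train, y_train, test_size=val_fraction, random_state=seed
    )
    return x_train, x_val, y_train, y_val, x_test


if __name__ == "__main__":
    from tensorflow import keras

    # load_data() returns ((x_train, y_train), (x_test, y_test)).
    (x_train, y_train), (x_test, y_test) = keras.datasets.mnist.load_data()
    x_train, x_val, y_train, y_val, x_test = preprocess(x_train, y_train, x_test)
    print(x_train.shape, x_val.shape, x_test.shape)
```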

Training the Model Using the compile() and fit() Methods

- Now that you have prepared the dataset, you can define the LeNet model using the Sequential class from keras.models. The LeNet model consists of two convolutional layers, each followed by a pooling layer, and three fully connected layers.

- Next, you can compile the model using the compile method and specify the loss function, optimizer, and metrics to track.

- Now that you have compiled the model, you can train it using the fit method. This method takes in the training data and labels, the number of epochs to train for, and the validation data.
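A minimal Keras version of the model definition, compile step and training call might look like the following. Several choices are assumptions rather than details given in this article: ReLU activations and max pooling (matching the implementation description earlier, whereas the original LeNet-5 used sigmoid-type squashing functions and trainable sub-sampling), `"same"` padding on the first convolution so that 28×28 MNIST images yield the same 5×5×16 feature maps as LeNet-5's 32×32 inputs, the Adam optimizer, and the epoch count and batch size. The function name `build_lenet` is hypothetical.

```python
import numpy as np
from tensorflow import keras


def build_lenet(input_shape=(28, 28, 1), num_classes=10) -> keras.Model:
    """LeNet-5-style model: two conv/pool stages followed by three dense layers."""
    return keras.Sequential([
        keras.Input(shape=input_shape),
        # "same" padding keeps 28x28, standing in for LeNet-5's 32x32 input.
        keras.layers.Conv2D(6, kernel_size=5, padding="same", activation="relu"),
        keras.layers.MaxPooling2D(pool_size=2),                    # -> 14x14x6
        keras.layers.Conv2D(16, kernel_size=5, activation="relu"),  # -> 10x10x16
        keras.layers.MaxPooling2D(pool_size=2),                    # -> 5x5x16
        keras.layers.Flatten(),                                    # -> 400 values
        keras.layers.Dense(120, activation="relu"),
        keras.layers.Dense(84, activation="relu"),
        keras.layers.Dense(num_classes, activation="softmax"),
    ])


model = build_lenet()
model.compile(
    optimizer="adam",
    loss="sparse_categorical_crossentropy",  # labels are integers 0-9
    metrics=["accuracy"],
)

if __name__ == "__main__":
    (x_train, y_train), _ = keras.datasets.mnist.load_data()
    x_train = (x_train / 255.0).astype("float32")[..., np.newaxis]
    # Simple hold-out split for this example; train_test_split works as well.
    x_val, y_val = x_train[-6000:], y_train[-6000:]
    x_train, y_train = x_train[:-6000], y_train[:-6000]
    history = model.fit(x_train, y_train, epochs=5, batch_size=128,
                        validation_data=(x_val, y_val))
```

Using `sparse_categorical_crossentropy` lets the integer labels from `load_data()` be used directly; with one-hot labels you would switch to `categorical_crossentropy`. This configuration has 61,706 trainable parameters.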

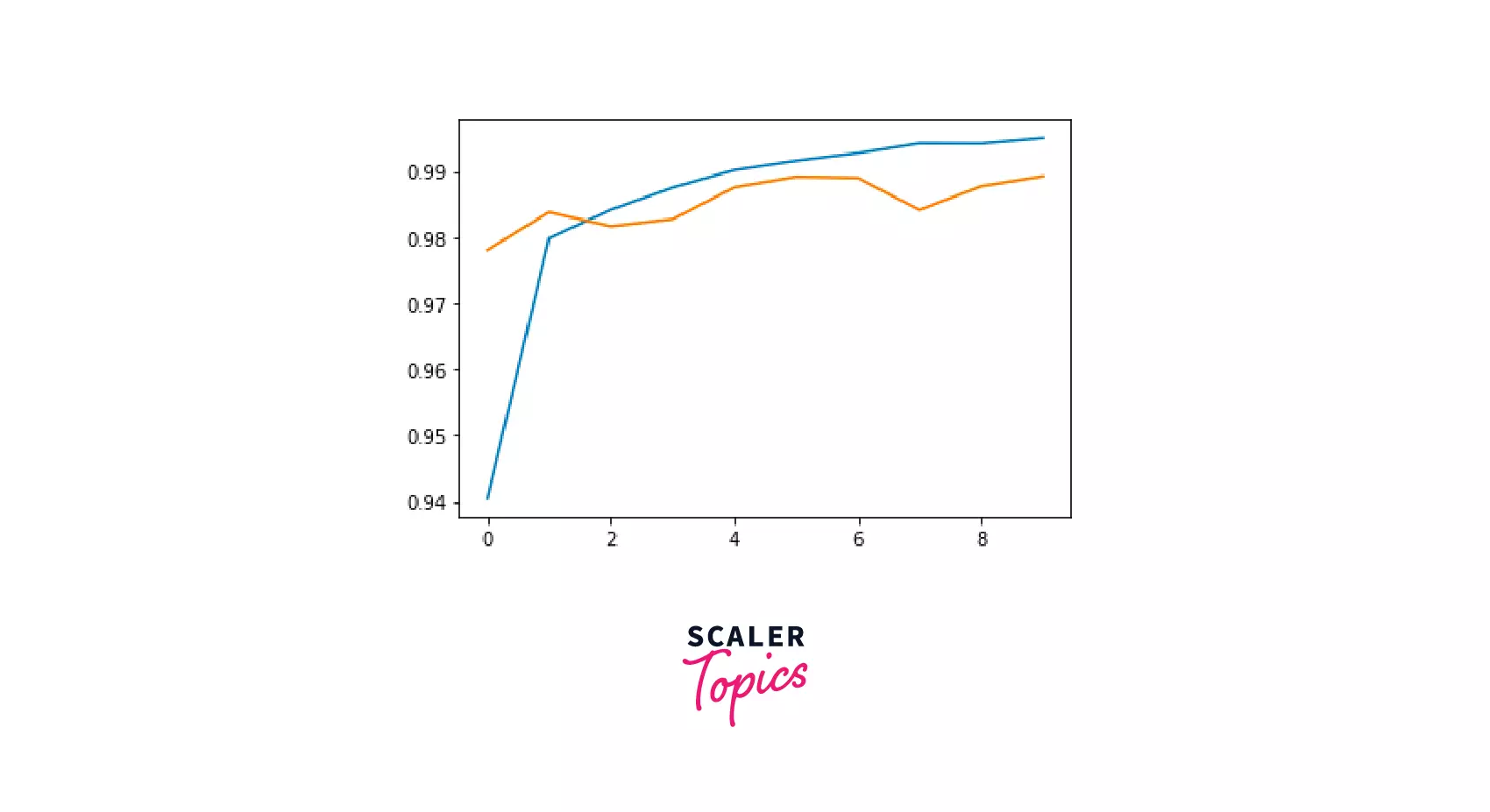

Accuracy of the Model

- The fit method returns a History object. Its history attribute is a dictionary that maps each loss and metric name (for example, loss, accuracy, val_loss, and val_accuracy) to a list of per-epoch values for the training and validation sets.

- You can also visualize the training and validation loss and accuracy using Matplotlib.
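A small Matplotlib helper for the loss and accuracy curves is sketched below. It assumes the model was compiled with `metrics=["accuracy"]` and trained with validation data, so that `history.history` contains the keys `loss`, `val_loss`, `accuracy` and `val_accuracy`; the function name and output filename are hypothetical. It writes the figure to a file through the non-interactive Agg backend.

```python
import matplotlib

matplotlib.use("Agg")  # render to files without needing a display
import matplotlib.pyplot as plt


def plot_history(history: dict, path: str = "training_curves.png") -> None:
    """Plot training/validation loss and accuracy from a History.history dict."""
    epochs = range(1, len(history["loss"]) + 1)
    fig, (ax_loss, ax_acc) = plt.subplots(1, 2, figsize=(10, 4))
    ax_loss.plot(epochs, history["loss"], label="train")
    ax_loss.plot(epochs, history["val_loss"], label="validation")
    ax_loss.set(title="Loss", xlabel="epoch")
    ax_acc.plot(epochs, history["accuracy"], label="train")
    ax_acc.plot(epochs, history["val_accuracy"], label="validation")
    ax_acc.set(title="Accuracy", xlabel="epoch")
    for ax in (ax_loss, ax_acc):
        ax.legend()
    fig.tight_layout()
    fig.savefig(path)
    plt.close(fig)
```

After training, calling `plot_history(history.history)` saves both panels side by side.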

Conclusion

- LeNet is a convolutional neural network (CNN) architecture developed in the late 1980s and 1990s. It was one of the first deep-learning models specifically designed for image classification tasks.

- LeNet consists of convolutional and pooling layers, followed by fully connected layers.

- It was originally used for classifying handwritten digits, and convolutional networks in its style have since been applied to other tasks such as object recognition and natural language processing.

- LeNet has been influential in developing modern CNNs, and many of its key ideas have been widely adopted in designing other CNN architectures. However, more sophisticated CNN architectures have been developed since LeNet, offering improved performance on various tasks.