Load Balancing in Cloud Computing

Overview

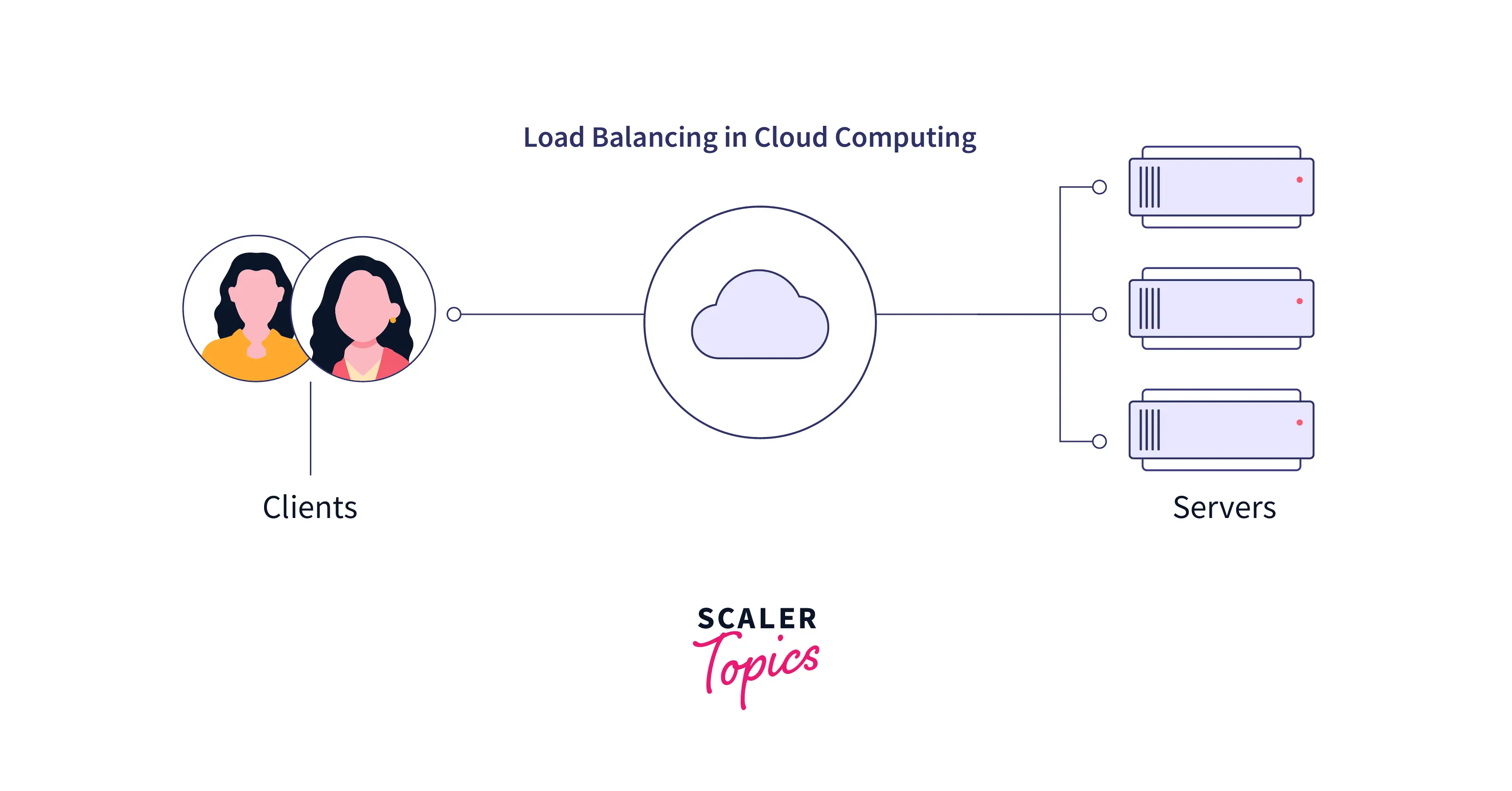

Cloud load balancing is the process of distributing workload and traffic in a cloud computing environment. By spreading resources among several computers, networks, or servers, it lets businesses manage workload and application demands. Cloud load balancing also involves managing the flow of workload traffic and Internet-based demand.

Introduction

In cloud computing, load balancing spreads traffic and workloads so that no server or computer is underloaded, overloaded, or idle. To improve overall cloud performance, load balancing optimizes constrained metrics such as execution time, response time, and system stability. In the load-balancing architecture used in cloud computing, a load balancer sits between client devices and servers and controls the traffic between them. Load balancing evenly distributes traffic, workloads, and computing resources throughout a cloud environment to improve the efficiency and resilience of cloud applications. It allows companies to distribute host resources and client requests among many computers, application servers, or computer networks. The main goals of load balancing in cloud computing are better use of organizational resources and faster application response times.

Examples of Cloud Load Balancing Services

The following are some examples of load-balancing solutions offered by different cloud providers.

- Microsoft Azure provides four load-balancing services. Azure Traffic Manager is a DNS-based traffic load balancer (layer 7 in the OSI model) that distributes services across Azure regions worldwide. Azure Load Balancer is a layer 4 network load balancer that relays traffic across virtual machines (VMs). Azure Application Gateway is a layer 7 application delivery controller for regional applications. Azure Front Door is a layer 7 global load balancer for microservices with built-in security features such as a web application firewall.

- Elastic Load Balancing from Amazon Web Services (AWS) distributes incoming client traffic and routes it to registered targets such as EC2 instances. AWS supports several types of load balancers, including Application Load Balancers, which operate at layer 7, and Network Load Balancers, which operate at the transport layer (layer 4); Gateway Load Balancers and the previous-generation Classic Load Balancers are also available. The load balancer types differ in the features they provide, the network layers at which they operate, and the communication protocols they support.

- The front-end server infrastructure that drives Google is the foundation for the Cloud Load Balancing service offered by the Google Cloud Platform. The service provides a variety of load balancers that differ depending on the customer's needs, including whether they require a proxy or pass-through services, global or regional load balancing, Premium or Standard network service tiers, or external or internal load balancing.

Types of Load Balancing Solutions in Cloud Computing

To prevent any server from becoming overburdened, load balancing in cloud computing takes on large workloads and distributes traffic among cloud servers. This improves performance and reduces downtime and latency. Advanced load-balancing solutions spread traffic across multiple servers to reduce latency and increase server availability and reliability. By combining several load-balancing approaches, practical cloud load-balancing implementations reduce server failures and improve performance. For example, before rerouting traffic during a failover, a load balancer can assess the distance between servers or the load on each of them.

Software-based Load Balancers

Software-based load balancers can run in any environment and location, which makes them well suited to cloud infrastructure and applications. They run on standard hardware (such as desktops and laptops) and standard operating systems. Domain Name System (DNS) load balancing is a software-defined technique used in cloud computing that distributes client requests for a domain among multiple servers. Each time a client makes a new request, the DNS system returns the list of IP addresses in a different order, so requests are spread evenly across the servers. DNS load balancing can also provide automatic failover or backup, removing inactive servers without human intervention.
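The rotation behavior of DNS load balancing can be sketched in Python. This is a minimal in-memory model, not a real DNS server: the `RoundRobinDNS` class, its methods, and the addresses (taken from the 192.0.2.0/24 documentation range) are hypothetical. Each lookup returns every address, rotated by one position, so successive clients tend to try a different server first.

```python
from collections import deque


class RoundRobinDNS:
    """Toy model of round-robin DNS for one domain (hypothetical, in-memory)."""

    def __init__(self, addresses):
        self._records = deque(addresses)

    def resolve(self):
        # Return all A records, then rotate so the next answer starts elsewhere.
        answer = list(self._records)
        self._records.rotate(-1)
        return answer

    def remove(self, address):
        # Drop an inactive server so it is no longer handed out.
        self._records.remove(address)


dns = RoundRobinDNS(["192.0.2.10", "192.0.2.11", "192.0.2.12"])
print(dns.resolve())  # ['192.0.2.10', '192.0.2.11', '192.0.2.12']
print(dns.resolve())  # ['192.0.2.11', '192.0.2.12', '192.0.2.10']
```

Real DNS resolvers and clients cache answers, so the spread achieved in practice is less even than this model suggests.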

Hardware-based Load Balancers

Hardware-based load balancers are dedicated boxes built around Application-Specific Integrated Circuits (ASICs) tailored to a specific function. Because hardware load balancing is faster than software solutions, ASICs enable high-speed forwarding of network traffic and are frequently used for transport-level load balancing. However, hardware load balancers are generally poorly suited to managing cloud traffic and often cannot be deployed in vendor-managed cloud environments.

Virtual Load Balancer

A virtual load balancer adjusts the load on a server by distributing traffic across several network servers. Virtual load balancing attempts to mimic software-driven infrastructure through virtualization: it runs the software of a physical load-balancing appliance on a virtual machine.

Different Types of Load Balancing Algorithms in Cloud Computing

The following are the different types of load-balancing algorithms that are used in cloud computing.

Static Algorithm

Static algorithms are designed for systems with relatively little variation in load. The static method distributes all traffic equally among the servers. It requires in-depth prior knowledge of server resources, such as processor performance, which is fixed when the system is designed. As a result, the decision to shift load does not depend on the system's current state. A major drawback of static algorithms is that a task is assigned to a machine when it is created and cannot later be moved to another device for load balancing.
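As an illustration, a static plan can be computed once from known server capacities and then left unchanged. The sketch below assumes capacities are known in advance; `static_assign` and the capacity values are hypothetical. Each task goes to the server furthest below its capacity-proportional share, and the servers' run-time load is never consulted.

```python
def static_assign(tasks, capacities):
    """Precompute a task-to-server plan from fixed, known capacities.

    The plan is built once, before execution; the servers' current load
    is never consulted, which is what makes the scheme static.
    """
    total = sum(capacities.values())
    plan = {server: [] for server in capacities}
    for i, task in enumerate(tasks, start=1):
        # Give the task to the server furthest below its capacity-proportional share.
        server = max(capacities, key=lambda s: capacities[s] * i / total - len(plan[s]))
        plan[server].append(task)
    return plan


plan = static_assign(range(6), {"big": 2, "small": 1})
print(plan)  # {'big': [0, 2, 3, 5], 'small': [1, 4]}
```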

Dynamic Algorithm

The dynamic approach first identifies the most lightly loaded server in the network and gives it priority for load balancing. This requires real-time communication with the network, which can add to system traffic. Because load is managed according to the system's current state, dynamic algorithms can make load-transfer decisions at run time. In this approach, processes can move in real time from a heavily used machine to a lightly used one.
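A dynamic rebalancing step can be sketched as repeatedly moving work from the busiest server to the least busy one, based on current load readings. This is a simplified model that assumes load is counted in whole, movable units (for example, running tasks); `rebalance` and its `threshold` parameter are hypothetical names.

```python
def rebalance(loads, threshold=1):
    """Shift load from the busiest to the idlest server, one unit at a time.

    `loads` maps server -> current load in whole units (e.g. running tasks).
    Stops when busiest and idlest differ by at most `threshold`.
    Returns the new loads and the list of (from, to) moves.
    """
    if threshold < 1:
        raise ValueError("threshold must be >= 1 for integer loads to converge")
    loads = dict(loads)  # work on a copy of the current readings
    moves = []
    while True:
        busiest = max(loads, key=loads.get)
        idlest = min(loads, key=loads.get)
        if loads[busiest] - loads[idlest] <= threshold:
            return loads, moves
        loads[busiest] -= 1
        loads[idlest] += 1
        moves.append((busiest, idlest))


new_loads, moves = rebalance({"a": 7, "b": 1, "c": 1})
print(new_loads)   # {'a': 3, 'b': 3, 'c': 3}
print(len(moves))  # 4
```

Each move strictly narrows the gap between the busiest and idlest servers, so the loop terminates for integer loads.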

Round Robin Algorithm

The round-robin algorithm assigns tasks in round-robin order, as the name suggests. It picks the first node at random and then assigns tasks to the remaining nodes in turn. It is one of the simplest load-balancing approaches. Each process is assigned without any priority. When the workload is evenly spread across processes, it responds quickly. However, processes generate load at different rates, so some nodes can become overloaded while others remain underutilized.
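A minimal Python sketch of round robin with a random starting node follows. `RoundRobinBalancer` is a hypothetical class; passing a seeded `random.Random` only makes the starting point reproducible.

```python
import random
from itertools import cycle


class RoundRobinBalancer:
    """Round robin with a randomly chosen first node (hypothetical class)."""

    def __init__(self, servers, rng=random):
        start = rng.randrange(len(servers))
        # Rotate the list so the random node comes first, then cycle forever.
        self._order = cycle(servers[start:] + servers[:start])

    def next_server(self):
        # No priorities or load checks: simply the next node in turn.
        return next(self._order)


rr = RoundRobinBalancer(["node-1", "node-2", "node-3"], rng=random.Random(42))
print([rr.next_server() for _ in range(6)])
```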

Weighted Round Robin Load Balancing Algorithm

Weighted round robin was developed to address the most difficult problems of the round-robin algorithm. Each server is assigned a predetermined weight, and tasks are distributed according to these weight values. Processors with more capacity receive higher weights, so more jobs are assigned to the higher-capacity servers. When the load is at its maximum, each server receives a steady share of traffic in proportion to its weight.
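One common way to implement weighted round robin, often called smooth weighted round robin, is sketched below. The class name and weights are hypothetical. On every pick, each server's running score grows by its weight; the highest-scoring server is chosen and its score is reduced by the total weight, which interleaves servers instead of sending long bursts to the heaviest one.

```python
class WeightedRoundRobin:
    """Smooth weighted round robin (hypothetical class name)."""

    def __init__(self, weights):
        self.weights = dict(weights)             # server -> fixed weight
        self.current = {s: 0 for s in self.weights}
        self.total = sum(self.weights.values())

    def next_server(self):
        # Every server earns its weight; the leader is chosen and pays the total back.
        for server, weight in self.weights.items():
            self.current[server] += weight
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= self.total
        return chosen


wrr = WeightedRoundRobin({"a": 5, "b": 1, "c": 1})
print([wrr.next_server() for _ in range(7)])  # ['a', 'a', 'b', 'a', 'c', 'a', 'a']
```

With weights 5, 1, and 1, every seven consecutive picks return `a` five times and `b` and `c` once each.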

Opportunistic Load Balancing Algorithm

The opportunistic load balancing (OLB) algorithm aims to keep every node busy. It never takes the current workload of each system into account: OLB assigns outstanding tasks to available nodes regardless of the load already on each node. Because OLB also ignores how long a task takes to execute on each node, tasks may be processed slowly even when faster nodes are available, which creates bottlenecks.
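The bottleneck can be seen in a small simulation. This sketch assumes each task's execution time on each node is known (the `exec_time` table and `olb_schedule` function are hypothetical) and that OLB hands each task, in arrival order, to the node that becomes free earliest without looking at how long the task will take there.

```python
def olb_schedule(tasks, nodes, exec_time):
    """Opportunistic load balancing: next free node, execution time ignored.

    `exec_time[(task, node)]` is the (hypothetical) time a task needs on a node;
    it is only used to advance the clock, never to choose the node.
    """
    ready = {node: 0.0 for node in nodes}  # time at which each node becomes free
    assignment = {}
    for task in tasks:  # tasks are taken in arrival order
        node = min(nodes, key=lambda n: ready[n])  # earliest-available node
        assignment[task] = node
        ready[node] += exec_time[(task, node)]
    return assignment, max(ready.values())


times = {("t1", "fast"): 1, ("t1", "slow"): 10,
         ("t2", "fast"): 1, ("t2", "slow"): 10,
         ("t3", "fast"): 1, ("t3", "slow"): 10}
print(olb_schedule(["t1", "t2", "t3"], ["fast", "slow"], times))
# ({'t1': 'fast', 't2': 'slow', 't3': 'fast'}, 10.0)
```

Here `t2` lands on the slow node only because it happens to be free, so the batch finishes at time 10 even though the fast node alone could have completed all three tasks by time 3.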

Minimum To Minimum Load Balancing Algorithm

In the minimum-to-minimum (min-min) method, the minimum completion time of each unassigned task is first computed across all machines. The task with the lowest of these values is selected and scheduled on the machine that achieves it. The task is then removed from the list, and the ready time of that machine is updated. This process repeats until every task has been assigned. The algorithm performs well when there are more small tasks than large ones.
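The min-min steps can be written as a short Python function. It assumes a known table of execution times per (task, machine) pair; `min_min` and the sample data are hypothetical. In each round it computes the completion time of every unassigned task on every machine, schedules the pair with the smallest value, and updates that machine's ready time.

```python
def min_min(tasks, machines, exec_time):
    """Min-min scheduling over a known (task, machine) -> execution-time table."""
    ready = {m: 0.0 for m in machines}  # time each machine finishes its queue
    unassigned = list(tasks)
    schedule = []
    while unassigned:
        # Smallest completion time over every unassigned task on every machine.
        completion, task, machine = min(
            (ready[m] + exec_time[(t, m)], t, m) for t in unassigned for m in machines
        )
        ready[machine] = completion      # machine is busy until this task ends
        unassigned.remove(task)          # delete the task from the list
        schedule.append((task, machine, completion))
    return schedule


times = {("a", "m1"): 2, ("a", "m2"): 4,
         ("b", "m1"): 1, ("b", "m2"): 3,
         ("c", "m1"): 5, ("c", "m2"): 2}
print(min_min(["a", "b", "c"], ["m1", "m2"], times))
# [('b', 'm1', 1.0), ('c', 'm2', 2.0), ('a', 'm1', 3.0)]
```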

IP Hash

This basic load-balancing method distributes requests according to IP address. The algorithm generates a unique hash key from the request's IP addresses and uses it to assign the client request to a server. The source and destination IP addresses are combined and hashed to produce this key, so requests from the same client are consistently sent to the same server as long as the set of servers does not change.
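The sketch below shows the idea using SHA-256 from Python's standard library. The function name and server list are hypothetical, and real load balancers may use different hash functions. A stable hash is used because Python's built-in `hash()` for strings is randomized per process, which would break the client-to-server mapping across restarts.

```python
import hashlib


def pick_server(client_ip, servers, dest_ip=""):
    """Map a request to a server by hashing its IP addresses (hypothetical helper)."""
    key = f"{client_ip}|{dest_ip}".encode()
    digest = hashlib.sha256(key).digest()
    # Use the first 8 bytes of the digest as an integer, then reduce modulo pool size.
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


servers = ["app-1", "app-2", "app-3"]
print(pick_server("203.0.113.7", servers, "198.51.100.1"))
```

With plain modulo hashing like this, adding or removing a server remaps many clients; consistent hashing is a common way to reduce that churn.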

Least Connections

The least connections technique, one of the best-known dynamic load-balancing strategies in cloud computing, is best suited to situations with traffic spikes. It directs each new request to the server with the fewest active connections, spreading traffic evenly across all available servers.
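A minimal least-connections sketch follows, assuming the balancer is told when each connection opens and closes; `LeastConnectionsBalancer` and its methods are hypothetical names.

```python
class LeastConnectionsBalancer:
    """Send each new connection to the server with the fewest active ones."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # Ties go to the server listed first.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a connection to `server` closes.
        self.active[server] -= 1


lb = LeastConnectionsBalancer(["a", "b"])
first, second = lb.acquire(), lb.acquire()  # a, then b
lb.release(first)
print(lb.acquire())  # a (it now has 0 active connections, b has 1)
```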

Least Response Time

The least response time method is a dynamic method similar to least connections: it directs traffic to the server with the lowest average response time and the fewest active connections.
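The sketch below combines the two signals in one simple way, which is an assumption rather than a standard formula: servers are ranked by an exponentially weighted moving average of observed response times, with active connections used to break ties. The class, the `alpha` smoothing factor, and the millisecond units are hypothetical.

```python
class LeastResponseTimeBalancer:
    """Rank servers by smoothed response time, then by active connections."""

    def __init__(self, servers, alpha=0.3):
        self.alpha = alpha                         # EWMA smoothing factor (assumed)
        self.avg_ms = {s: 0.0 for s in servers}    # untested servers start at 0 ms
        self.active = {s: 0 for s in servers}

    def acquire(self):
        server = min(self.avg_ms, key=lambda s: (self.avg_ms[s], self.active[s]))
        self.active[server] += 1
        return server

    def release(self, server, response_ms):
        # Record how long the request took and update the moving average.
        self.active[server] -= 1
        self.avg_ms[server] = (1 - self.alpha) * self.avg_ms[server] + self.alpha * response_ms


lb = LeastResponseTimeBalancer(["a", "b"])
lb.release(lb.acquire(), 100.0)   # a answered slowly
lb.release(lb.acquire(), 10.0)    # b answered quickly
print(lb.acquire())  # b
```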

Major Examples of Load Balancers

Direct Routing Request Despatch Technique

This request dispatching technique is similar to the one used by IBM's NetDispatcher. The load balancer and the real servers share a virtual IP address. The load balancer's interface configured with the virtual IP address receives request packets and forwards them directly to the chosen server, which then replies to the client directly.

Dispatcher-Based Load Balancing Cluster

A dispatcher controls where TCP/IP requests are sent by applying intelligent load balancing based on server availability, workload, capacity, and other user-defined criteria. A load balancer's dispatcher module can distribute HTTP requests among the nodes of a cluster. Because the dispatcher spreads the load across many servers in the cluster and presents the services of different nodes as a virtual service on a single IP address, clients connect as if to a single server, without being aware of the back-end infrastructure.
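The dispatcher's selection step might look like the sketch below. The `Node` fields, the load-to-capacity ratio, and the optional `extra_rule` predicate standing in for user-defined criteria are all hypothetical choices, not the method of any particular product.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    healthy: bool     # availability, e.g. from health checks
    load: float       # current workload, e.g. open requests
    capacity: float   # relative capacity of the node


def dispatch(nodes, extra_rule=lambda node: True):
    """Pick the available node with the lowest load-to-capacity ratio."""
    candidates = [n for n in nodes if n.healthy and extra_rule(n)]
    if not candidates:
        raise RuntimeError("no node satisfies the dispatch criteria")
    return min(candidates, key=lambda n: n.load / n.capacity)


cluster = [Node("n1", True, 8, 4), Node("n2", True, 3, 1), Node("n3", False, 0, 8)]
print(dispatch(cluster).name)                                        # n1
print(dispatch(cluster, extra_rule=lambda n: n.name != "n1").name)   # n2
```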

Linux Virtual Server (LVS)

Linux Virtual Server (LVS) is an open-source load-balancing solution for building highly scalable and highly available network services such as HTTP, FTP, SMTP, POP3, media, caching, and Voice over Internet Protocol (VoIP). It is a simple yet effective tool for load balancing and failover. The load balancer itself is the main entry point of the server cluster. It can run IP Virtual Server (IPVS), a Linux kernel module that performs layer-4 switching and transport-layer load balancing.

Types of Load Balancing

You should know the different load-balancing procedures your network may need. Relational databases require server load balancing, globally distributed servers require global server load balancing across geographic regions, and domain names require DNS load balancing to keep them working. Cloud-based load balancers can also be used. The following are the different types of load balancing in cloud computing.

Network Load Balancing/ Layer 4 Load Balancing

Network load balancing operates at layer 4 (the transport layer) of the OSI model and handles TCP/UDP traffic. It can process a large number of requests per second. After receiving a connection request, the load balancer selects a target from the target group for the default rule. Cloud load balancing uses transport-layer information, such as IP addresses and ports, to decide where network traffic should be routed. Network load balancing is the fastest form of local load balancing, but it is less effective at distributing traffic evenly across servers.

HTTP(S) Load Balancing/ Layer 7 Load Balancing

HTTP(S) load balancing, one of the oldest forms of load balancing, operates at layer 7, the application layer. It lets you base routing decisions on information in the HTTP request, such as the URL or headers, which makes it the most flexible type of load balancing.
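As an example of a layer 7 decision, the sketch below picks a back-end pool by the longest matching URL path prefix. The route table and pool names are hypothetical; a real layer 7 load balancer would then choose a server within the pool using one of the algorithms described earlier.

```python
ROUTES = {
    "/": ["web-1", "web-2"],
    "/api/": ["api-1", "api-2"],
    "/static/": ["static-1"],
}


def select_pool(path, routes=ROUTES):
    """Return the back-end pool whose prefix is the longest match for `path`."""
    matches = [prefix for prefix in routes if path.startswith(prefix)]
    if not matches:
        raise LookupError(f"no route for {path!r}")
    return routes[max(matches, key=len)]


print(select_pool("/api/orders/42"))  # ['api-1', 'api-2']
print(select_pool("/index.html"))     # ['web-1', 'web-2']
```

A layer 4 balancer could not make this choice, because the URL path is only visible after the HTTP request is parsed.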

Internal Load Balancing

Internal load balancing distributes traffic, including HTTP and HTTPS traffic, within an organization's own infrastructure. It works much like network load balancing, but it is used to balance internal infrastructure locally. Load balancers themselves come in three forms: hardware, software, and virtual.

Hardware Load Balancer

A hardware load balancer is a physical device that distributes network and application traffic at the foundational level. It can handle a large volume of traffic, but it is expensive and offers limited flexibility.

Software Load Balancer

Software load balancers are more affordable than hardware solutions. Whether open-source or commercial, a software load balancer must be installed before it can be used.

Virtual Load Balancer

It differs from a software load balancer in that it runs the software of a hardware load-balancing appliance on a virtual machine.

Why is Cloud Load Balancing Important in Cloud Computing?

Here are a few reasons why load balancing is crucial in cloud computing.

Offers Better Performance

Load-balancing technology is relatively inexpensive and easy to use. As a result, businesses can serve client applications more quickly and achieve better results at a lower cost.

Simpler Automation

With load balancing in cloud computing, businesses can use predictive analytics tools to quickly gain meaningful insights into their applications and spot traffic bottlenecks. These insights can guide business decisions, and the automation process depends on them.

Helps Maintain Website Traffic

Cloud load balancing provides the scalability needed to manage website traffic. Efficient load balancers, working together with servers and networking hardware, can handle heavy traffic. E-commerce businesses use cloud load balancing to manage and distribute workloads when serving many visitors every second.

Emergency and Disaster Recovery

During calamities and natural disasters such as tsunamis, earthquakes, tornadoes, and floods, cloud service providers like AWS, Azure, and GCP can detect inaccessible servers and reroute traffic across geographic regions. Depending on the algorithms they support, cloud load balancers can often predict which servers are likely to become overloaded. This kind of planning lets some cloud load balancers quickly divert traffic to stronger nodes that are better suited to handle the requests, lowering the risk of data loss and service outages.

Can Handle Sudden Bursts in Traffic

Load balancers can absorb sudden bursts of traffic that arrive all at once. For instance, a website may go down if too many people request cricket match results at the same moment. A load balancer helps avoid this by distributing the website's load evenly across multiple servers, keeping performance high and response times short as traffic grows.

High Performance

The ability to scale up services and handle increased traffic effectively and efficiently is made possible by cloud-based load balancing. With the option to briefly redirect traffic to different servers, developers who need to deploy software updates and patches, handle broken servers, and test in a real-world environment have more freedom.

Greater Flexibility

A load balancer is primarily used to protect websites against unexpected crashes. Because the load is spread across multiple network servers or units, if a single node fails, its load is moved to another node, so no single server becomes overloaded. This enables adaptability, scalability, and better traffic management, which makes load balancers useful in cloud systems.

Cost-Effective

At a substantially lower total cost of ownership, load balancing in cloud computing is effective and provides superior cloud service performance and reliability. Because cloud load balancers operate in the cloud or can be purchased as a service, they are available to startups, small businesses, and medium-sized corporations.

DDoS Attack Mitigation

Load balancers defend against distributed denial-of-service (DDoS) attacks by distributing traffic among numerous servers, diverting traffic away from a single overloaded server during an attack, and reducing the attack surface. By eliminating single points of failure, load balancing in cloud computing makes the network more resistant to such attacks.

Challenges Associated with Cloud Load Balancing

Even though load balancing is generally necessary in a cloud environment, it comes with several challenges. Scalability is among the most attractive advantages of both load balancing and cloud computing, but it is also one of load balancing's clearest limitations: most load balancers can distribute processes across only a finite number of nodes, which restricts how large they can grow. Other challenges include the availability of cloud services, resource planning, QoS management, energy consumption, and performance monitoring.

Load Balancer as a Service in Cloud Computing

Cloud service providers typically offer Load Balancing as a Service (LBaaS) to their clients as an alternative to specialized on-premises traffic-routing appliances that must be installed and maintained in-house. LBaaS balances workloads much as traditional load balancing does, but instead of distributing traffic among a group of servers in a single on-premises data center, it balances workloads between servers in a cloud environment and is delivered as a subscription or on-demand service. Some LBaaS systems are built and managed by a single cloud provider, while others, such as multi-cloud and hybrid cloud load balancers, spread traffic across multiple cloud providers.

Some advantages of LBaaS are:

- Cost savings: Compared to hardware-based appliances, LBaaS is often less expensive in money, time, effort, and internal resources, for both the initial investment and ongoing maintenance.

- Scalability: Load-balancing services can be scaled quickly and easily to handle traffic spikes without manually configuring additional physical equipment.

- Availability: Clients can be connected to the geographically nearest server, which reduces latency and maintains high availability even when a server is offline.

Conclusion

- Load balancing spreads traffic and workloads to prevent any server or computer from being underloaded, overloaded, or idle.

- To improve the effectiveness and durability of cloud applications, load balancing in cloud computing evenly distributes traffic, workloads, and computing resources throughout a cloud environment.

- Relational databases require server load balancing, globally distributed servers require global server load balancing across geographic regions, and domain names require DNS load balancing.

- Using load balancing in cloud computing, businesses can quickly gain meaningful insights into their applications and spot traffic bottlenecks using predictive analytics tools. This could guide their commercial decisions.

- The availability of cloud services, resource planning, QoS management, energy consumption, and performance monitoring are some of the challenges associated with cloud load balancing.