Standard Practices for Dealing with Unclean Data

Overview

In real-world data, values may be missing for various reasons, including incorrect data entry, failure to load the information, or incomplete extraction. Handling missing values is one of the most challenging tasks for analysts, because choosing the right way to manage them leads to robust data models.

Introduction

Data cleaning, also known as data cleansing or data scrubbing, is the act of preparing data for analysis by correcting or deleting inaccurate, corrupted, incomplete, irrelevant, redundant, or poorly structured data in a dataset.

Data cleaning requires a lot of effort, which is why a standard practice for unclean data is so important. Data cleaning is arguably the most crucial stage in developing a data culture, not to mention in making accurate forecasts. It includes fixing spelling and grammar issues, standardizing datasets, correcting errors such as empty fields, and finding duplicate data points.

Why Do We Need a Standard Practice for Unclean Data?

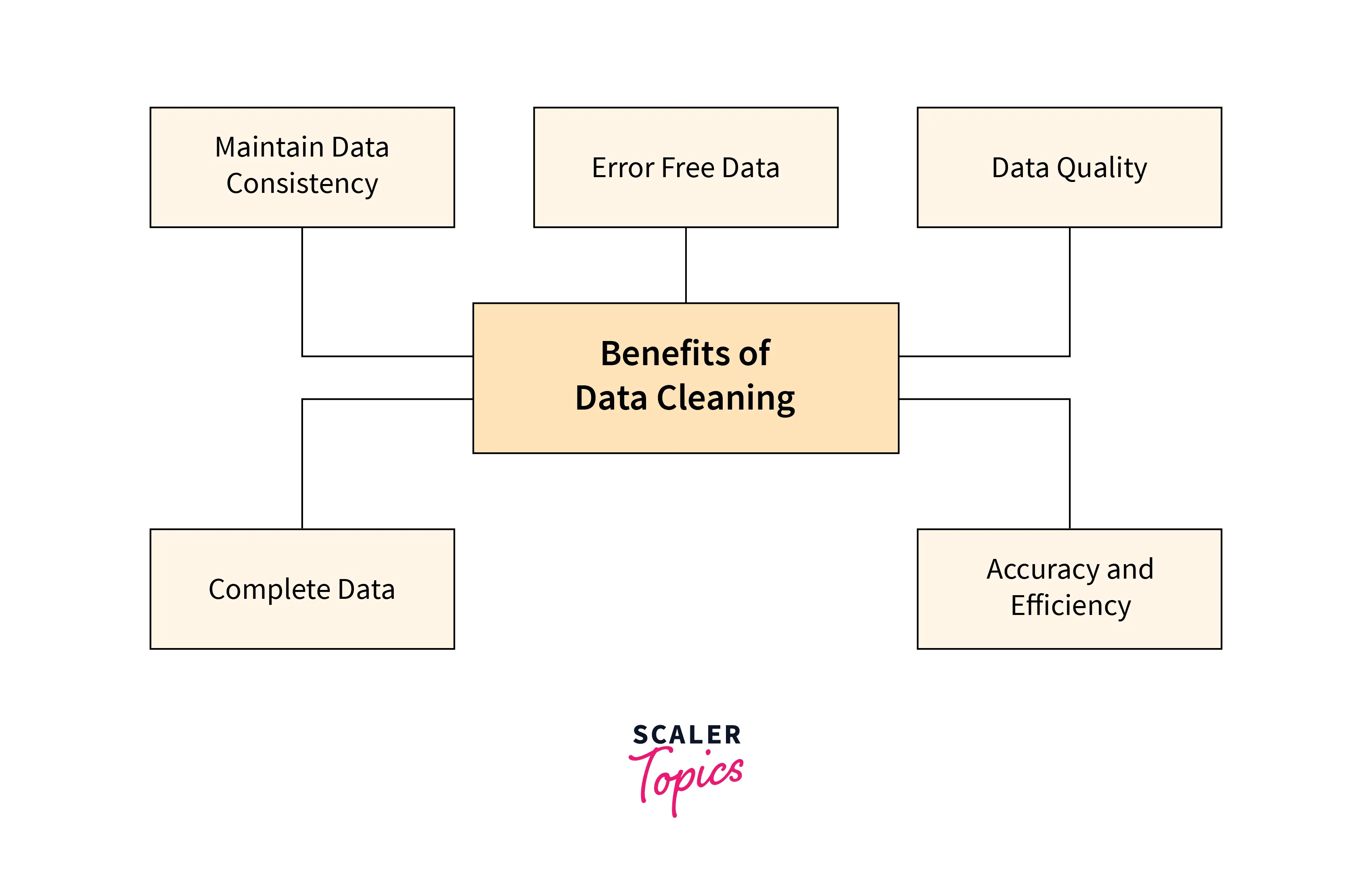

Cleaning your data helps you maintain its quality and produces more accurate analytics, which supports effective, intelligent decision-making. Here are a few more advantages of maintaining a standard practice for unclean data:

- Better decision-making

- Increased earnings and time savings

- Streamlined business practices

- Error removal when multiple data sources are involved

- The ability to map the various functions and what your data is supposed to do

How to Deal with Unclean Data in Machine Learning?

Fix Missing Data

There are several ways to fix missing data. We will use the Titanic dataset to illustrate them. The dataset can be found here.
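
A minimal sketch of the first step, assuming pandas: count the null values in each column. So that it runs on its own, the snippet builds a tiny made-up sample with Titanic-style column names rather than reading the real file; with the real dataset you would load the CSV instead (the file name `train.csv` is an assumption).

```python
import numpy as np
import pandas as pd

# Tiny made-up sample with Titanic-style columns (not real passenger data).
# With the real dataset you would load the CSV instead, e.g.:
# df = pd.read_csv("train.csv")  # file name is an assumption
df = pd.DataFrame({
    "PassengerId": [1, 2, 3, 4, 5, 6],
    "Age": [22.0, 38.0, np.nan, 35.0, 35.0, np.nan],
    "Fare": [7.25, 71.28, 7.93, 53.10, 8.05, 8.46],
    "Cabin": [np.nan, "C85", np.nan, "C123", np.nan, np.nan],
    "Embarked": ["S", "C", "S", "S", np.nan, "Q"],
})

# Count the missing (null) values in each column
null_counts = df.isnull().sum()
print(null_counts)
```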

We can see that the Age, Cabin, and Embarked columns have null values.

A. Deleting Rows with Missing Data

A common standard practice for unclean data is deleting rows with missing values, a technique widely used to deal with null values. We either remove a row that has a null value for a particular feature, or drop an entire column when more than 70-75% of its values are missing. This strategy is only recommended when the dataset has enough samples, and we must make sure that removing the data does not introduce bias. Removing data also causes information loss, which can make the model's predictions less accurate.

Code to Drop Rows with null Values
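
A minimal sketch, assuming pandas and a made-up Titanic-style sample: it first drops any column whose share of missing values exceeds 70% (the low end of the 70-75% range above), then removes the rows that still contain nulls with `dropna()`.

```python
import numpy as np
import pandas as pd

# Toy sample (made-up values) using Titanic-style column names
df = pd.DataFrame({
    "Age": [22.0, 38.0, np.nan, 35.0, 35.0, np.nan],
    "Fare": [7.25, 71.28, 7.93, 53.10, 8.05, 8.46],
    "Cabin": [np.nan, "C85", np.nan, np.nan, np.nan, np.nan],
    "Embarked": ["S", "C", "S", "S", np.nan, "Q"],
})

# Share of missing values per column (isnull() gives True/False, mean() the fraction)
missing_share = df.isnull().mean()

# Drop columns where more than 70% of the values are missing
# (the cut-off is a judgment call, not a fixed rule)
df_reduced = df.loc[:, missing_share <= 0.70]

# Then drop every remaining row that still contains a null value
df_clean = df_reduced.dropna()
print(df_clean)
```

Dropping the mostly-empty column first matters: calling `dropna()` on the original frame would discard almost every row just because `Cabin` is empty.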

B. Replacing null values with mean/median/mode

This method can be used on a feature that contains numerical data, such as a person's age or ticket fare. We compute the feature's mean, median, or mode and replace the missing values with it. The result is only an estimate, so it can distort the feature's distribution. However, it avoids the data loss caused by removing rows and columns, which often produces better results. In other words, one of these three statistical estimates takes the place of each missing value. This standard practice for unclean data works well with linear data.

Code to calculate mean:
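
A minimal sketch, assuming pandas and a made-up `Age` column in which `NaN` marks a missing value. By default, pandas skips `NaN` when computing the mean.

```python
import numpy as np
import pandas as pd

# Toy "Age" column (made-up values); NaN marks a missing age
df = pd.DataFrame({"Age": [22.0, 38.0, np.nan, 35.0, 35.0, np.nan, 54.0, 2.0]})

# Mean of the non-missing ages: (22 + 38 + 35 + 35 + 54 + 2) / 6
mean_age = df["Age"].mean()
print(mean_age)  # 31.0
```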

Replacing null values with mean
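
A minimal sketch, assuming pandas and the same made-up `Age` values: `fillna()` replaces each missing age with the column mean, and the result is assigned back to the column.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"Age": [22.0, 38.0, np.nan, 35.0, 35.0, np.nan, 54.0, 2.0]})

# Replace every missing age with the column mean (31.0 for this sample).
# Assigning the result back avoids the chained-assignment warnings that
# df["Age"].fillna(..., inplace=True) can trigger in recent pandas versions.
df["Age"] = df["Age"].fillna(df["Age"].mean())
print(df["Age"].isnull().sum())  # 0
```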

To replace the missing values with the median or mode instead, we can calculate them as follows:

Median:
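
A minimal sketch, assuming pandas and the same made-up `Age` values. The median is less sensitive to extreme values than the mean, which is one reason to prefer it for skewed features.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"Age": [22.0, 38.0, np.nan, 35.0, 35.0, np.nan, 54.0, 2.0]})

# Median of the non-missing ages (NaN is skipped): 35.0 for this sample
median_age = df["Age"].median()

# Replace the missing ages with the median
df["Age"] = df["Age"].fillna(median_age)
print(median_age)
```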

Mode:
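
A minimal sketch, assuming pandas and the same made-up `Age` values. `mode()` returns a Series because a column can have several equally common values, so the sketch takes the first one.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"Age": [22.0, 38.0, np.nan, 35.0, 35.0, np.nan, 54.0, 2.0]})

# Most frequent non-missing age; mode() ignores NaN by default
mode_age = df["Age"].mode().iloc[0]  # 35.0 appears twice in this sample

# Replace the missing ages with the mode
df["Age"] = df["Age"].fillna(mode_age)
print(mode_age)
```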

C. Assigning a Unique Category

A categorical attribute, such as gender, has a limited set of possible values. Because the number of categories is fixed, we can assign the missing entries to an extra category of their own. Here, Cabin and Embarked have missing values, which can be replaced with a new category, say, U for 'unknown'. This standard practice for unclean data adds information to the dataset and shifts its variance. Because these features are categorical, we then need to apply one-hot encoding to convert them into a numeric form the algorithm can understand.

Checking the first 10 values in the Cabin column
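
A minimal sketch, assuming pandas and a made-up Titanic-style `Cabin` column: `head(10)` shows the first ten entries, and counting the nulls among them shows how sparse the column is.

```python
import numpy as np
import pandas as pd

# Made-up Cabin values in Titanic style; NaN marks a missing cabin
df = pd.DataFrame({
    "Cabin": [np.nan, "C85", np.nan, "C123", np.nan, np.nan,
              "E46", np.nan, np.nan, np.nan, "G6"],
})

first_ten = df["Cabin"].head(10)
print(first_ten)
print("Missing in first 10:", first_ten.isnull().sum())  # 7
```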

Replacing the null values with 'U'
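
A minimal sketch, assuming pandas and made-up Titanic-style values: missing `Cabin` and `Embarked` entries go into a new `U` category, and `Embarked` is then one-hot encoded with `pd.get_dummies`. The sketch encodes only `Embarked` because it has few distinct values; one-hot encoding a raw cabin number would create one column per cabin.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "Cabin": [np.nan, "C85", np.nan, "C123", np.nan],
    "Embarked": ["S", "C", "S", np.nan, "Q"],
})

# Put every missing entry into its own "U" (unknown) category
df[["Cabin", "Embarked"]] = df[["Cabin", "Embarked"]].fillna("U")

# One-hot encode Embarked so the model receives numeric 0/1 columns;
# "U" becomes a column of its own (Embarked_U)
embarked_dummies = pd.get_dummies(df["Embarked"], prefix="Embarked", dtype=int)
df = pd.concat([df.drop(columns="Embarked"), embarked_dummies], axis=1)
print(df)
```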

Filter Unwanted Outliers

Outliers are values in your dataset that are out of the ordinary. They differ dramatically from other data points and can skew your analysis and violate your model's assumptions. Whether to remove them is a subjective decision that depends on the data you are examining. In general, removing unwanted outliers improves the performance of the analysis or model you're building, which makes this a standard practice for unclean data.

Remove an outlier if:

- You know it is incorrect. For example, if you know the range the data should fall into, such as people's ages, you can safely discard values outside that range.

- You have plenty of data, so dropping a dubious outlier will not harm your sample.

- You can go back and collect the data again, or otherwise verify the dubious data point.

Remember that an outlier is not necessarily an error. An outlier may instead support a hypothesis, in which case you should keep it.
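
A minimal sketch of the first criterion, assuming pandas and made-up values: ages outside a plausible range are dropped (the 0-100 bounds are an assumption). As a separate illustration, it then flags, without deleting, fares outside 1.5 times the interquartile range (IQR). The IQR rule is a common rule of thumb swapped in here, not a method the text prescribes; flagged points still deserve a manual look before removal.

```python
import pandas as pd

# Toy data (made-up values); -4 and 250 are impossible ages
df = pd.DataFrame({
    "Age": [22.0, 38.0, -4.0, 35.0, 250.0, 54.0],
    "Fare": [7.25, 71.28, 7.93, 53.10, 8.05, 512.33],
})

# 1. Domain rule: keep only ages in a plausible range (bounds are an assumption)
valid_age = df["Age"].between(0, 100)
df_valid = df[valid_age]

# 2. Flag Fare values outside [Q1 - 1.5*IQR, Q3 + 1.5*IQR] for manual review
q1, q3 = df_valid["Fare"].quantile([0.25, 0.75])
iqr = q3 - q1
fare_outlier = ~df_valid["Fare"].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)
print(df_valid[fare_outlier])
```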

Dropping Duplicate Observations

Duplicate data is most often introduced during data collection, especially when combining data from several sources or receiving data from clients or multiple departments. Removing duplicate data is a standard practice for unclean data.

Code to drop duplicate rows
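
A minimal sketch, assuming pandas and a small hypothetical table: `duplicated()` marks rows that repeat an earlier row exactly, and `drop_duplicates()` keeps only the first occurrence.

```python
import pandas as pd

# Hypothetical records where the second and third rows are exact duplicates
df = pd.DataFrame({
    "PassengerId": [1, 2, 2, 3],
    "Name": ["Ann", "Ben", "Ben", "Cal"],
    "Age": [22, 38, 38, 26],
})

print(df.duplicated().sum())  # 1 fully duplicated row

# Keep the first occurrence of each row
df_unique = df.drop_duplicates()

# To treat rows sharing an ID as duplicates even if other fields differ,
# pass a subset: df.drop_duplicates(subset=["PassengerId"])
print(df_unique)
```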

Dropping Redundant Features

Irrelevant features complicate your analysis, so before you begin cleaning, first determine which features matter and which do not. Dropping redundant features is a standard practice for unclean data. For example, if you're studying your clients' ages, you don't need their email addresses.

Other items that should usually be removed because they add nothing to your analysis include:

- Personally identifiable information (PII)

- URLs

- HTML tags

- Boilerplate text

- Tracking codes
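
A minimal sketch, assuming pandas and a hypothetical customer table (all column names and values are made up): columns an age analysis does not need, such as email addresses (PII) and URLs, are dropped, and HTML tags are stripped from a free-text column with a simple regular expression. The regex is fine for simple markup; a real HTML parser is safer for messy input.

```python
import pandas as pd

# Hypothetical customer table; the analysis only needs Age (plus the Note text)
df = pd.DataFrame({
    "CustomerId": [101, 102, 103],
    "Email": ["a@example.com", "b@example.com", "c@example.com"],
    "ProfileURL": ["https://example.com/a", "https://example.com/b", "https://example.com/c"],
    "Age": [34, 27, 45],
    "Note": ["<p>Likes <b>tea</b></p>", "Prefers email", "<br>New customer"],
})

# Drop columns that add nothing to an age analysis (PII and URLs)
df = df.drop(columns=["Email", "ProfileURL"])

# Remove anything that looks like an HTML tag from the free text
df["Note"] = df["Note"].str.replace(r"<[^>]+>", "", regex=True)
print(df)
```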

Conclusion

- We have seen why a standard practice for unclean data is needed and how to implement it.

- Each data cleaning technique has its pros and cons, so it is important to understand your requirements before cleaning your data.