Matrix Chain Multiplication

Overview

The number of multiplication steps required to multiply a chain of matrices of any arbitrary length highly depends on the order in which they get multiplied.

As multiplication of two numbers/integers is a costly operation, it is very much needed to find the optimal order of multiplying a chain of matrices to minimize the multiplication steps, which ultimately optimizes the time and resources required for the task.

Introduction to Matrix Chain Multiplication

In linear algebra, matrix chain multiplication is an operation that produces a matrix from two matrices. But, multiplying two matrices is not as simple as multiplying two integers, certain rules need to be followed during multiplying two matrices.

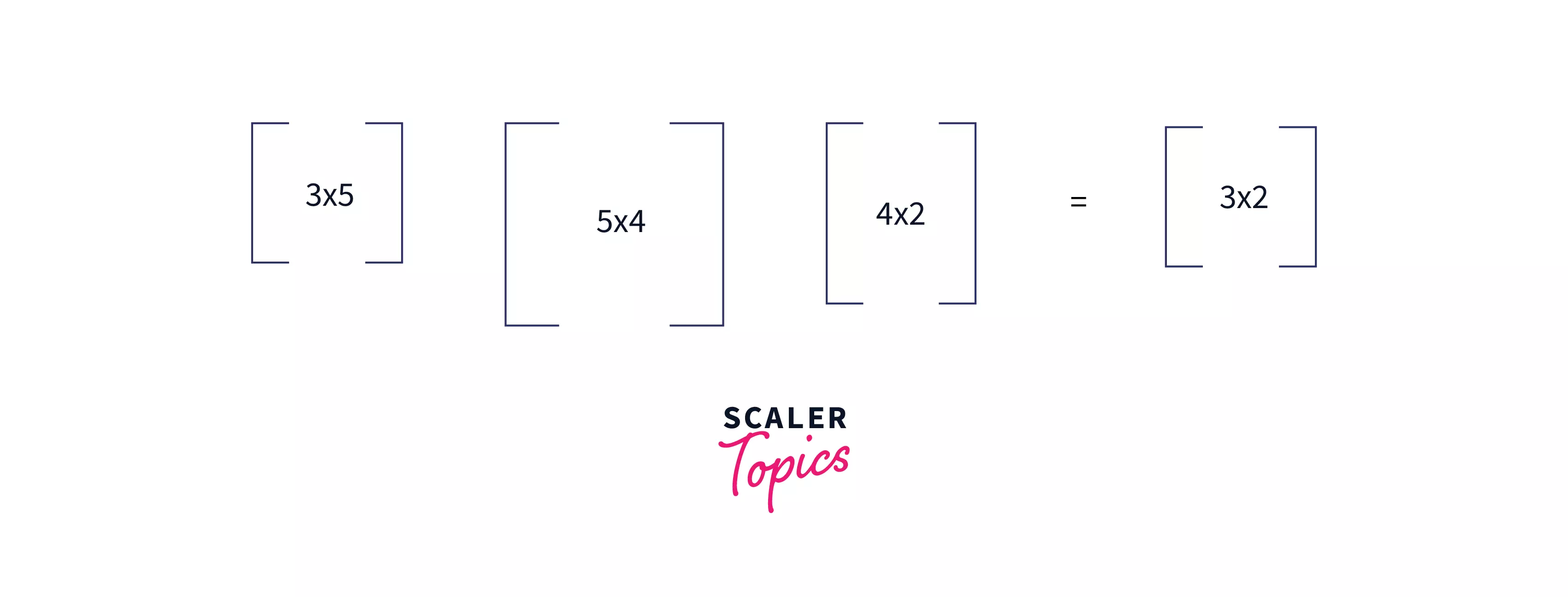

Let's say we have two matrices, of dimensions and of dimensions . and can be multiplied if and only if . And if the condition satisfies the resultant matrix (say ) will be of dimension and it requires multiplication steps to compute .

If we have been given a chain of matrices of some arbitrary length '', the number of multiplication steps required to multiply all the matrices highly depends on the order in which we multiply them.

So, in the matrix chain multiplication problem, we have been given an array representing the dimension of matrices and we are interested to deduce the minimum number of multiplication steps required to multiply them.

Example of Matrix Chain Multiplication

We have studied in our school days that matrix chain multiplication follow associative rule KaTeX parse error: $ within math mode and the number of steps required in multiplying matrix of dimension and of dimension are , and the resultant matrix is of dimension

Let's assume we have three matrices of dimensions , , and respectively and for some reason we are interested in multiplying all of them. There are two possible orders of multiplication -

-

- Steps required in will be .

- Dimensions of will be .

- Steps required in will be .

- Total Steps

-

- Steps required in will be .

- Dimensions of will be .

- Steps required in will be .

- Total Steps

Here, if we follow the second way, we would require fewer operations to obtain the same result.

Hence, it is necessary to know the optimal way in order to speed up the multiplication process by placing the brackets at every possible place and then checking which positioning minimizes the effort.

Naive Recursive Approach

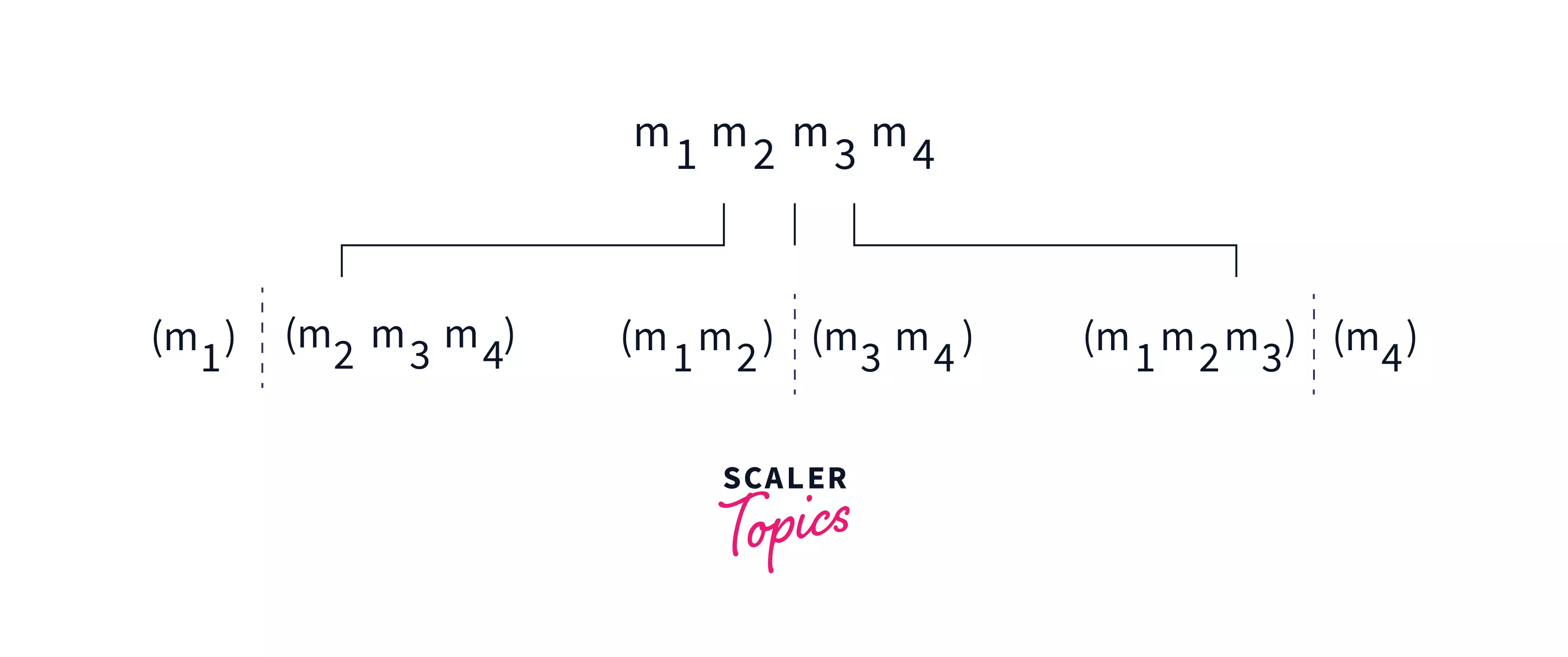

The idea is very simple, placing parentheses at every possible place. For example, for a matrix chain we can have place parentheses like -

So for a matrix chain multiplication of length , we can place parentheses in ways, notice that placing parentheses will not solve the problem rather it will split the problem into smaller sub-problems which are later used to find the answer for the main problem.

Like in the problem is divided into and which can be solved again by placing parentheses and splitting the problem into smaller sub-problems until size becomes less than or equal to 2.

So we can deduce the minimum number of steps required to multiply a matrix chain of length minimum number of all sub-problems.

To implement it we can use a recursive function in which we will place parentheses at every possible place, then in each step divide the main problem into two sub-problems left part and right part.

Later, the result obtained from the left and right parts can be used to find the answer of the main problem. Let's have a look at Pseudocode for better understanding.

Pseudocode of Recursive Approach

Explanation - To implement the matrix chain multiplication algorithm we can have a recursive function MatrixChainMultiplication which accepts three arguments an array (say mat) of integers representing dimensions of the matrices, low representing the starting index of the current sub-problem (initially low will be 0), and high representing the last index of the current sub-problem.

- The base case of this recursive function occurs when we will reach lowhigh because it means we are left with a sub-problem of size 1 a single matrix so we will return 0.

- Then, we will iterate from low to high and place parentheses for each i such that lowhigh.

- During each iteration we will also calculate the cost incurred if we place at that particular position Cost of left sub-problem + cost of right sub-problem + mat[low-1]mat[k]mat[high]

- At last we will return the minimum of the costs found during the iteration.

C/C++ Implementation of Recursive Approach

Java Implementation of Recursive Approach

Output -

Complexity Analysis

-

Time Complexity - Let be the total number of ways to place parentheses in the matrix chain, where is defined as -

On solving the above given recurrence relation, we get - which grows at an exponential rate.

-

Space Complexity - Though we have not used any auxiliary space but as you can see in the above image, the maximum depth of the recursive tree can reach up to where is the length of the matrix chain. Hence the overall space complexity is

Need of Dynamic Programming

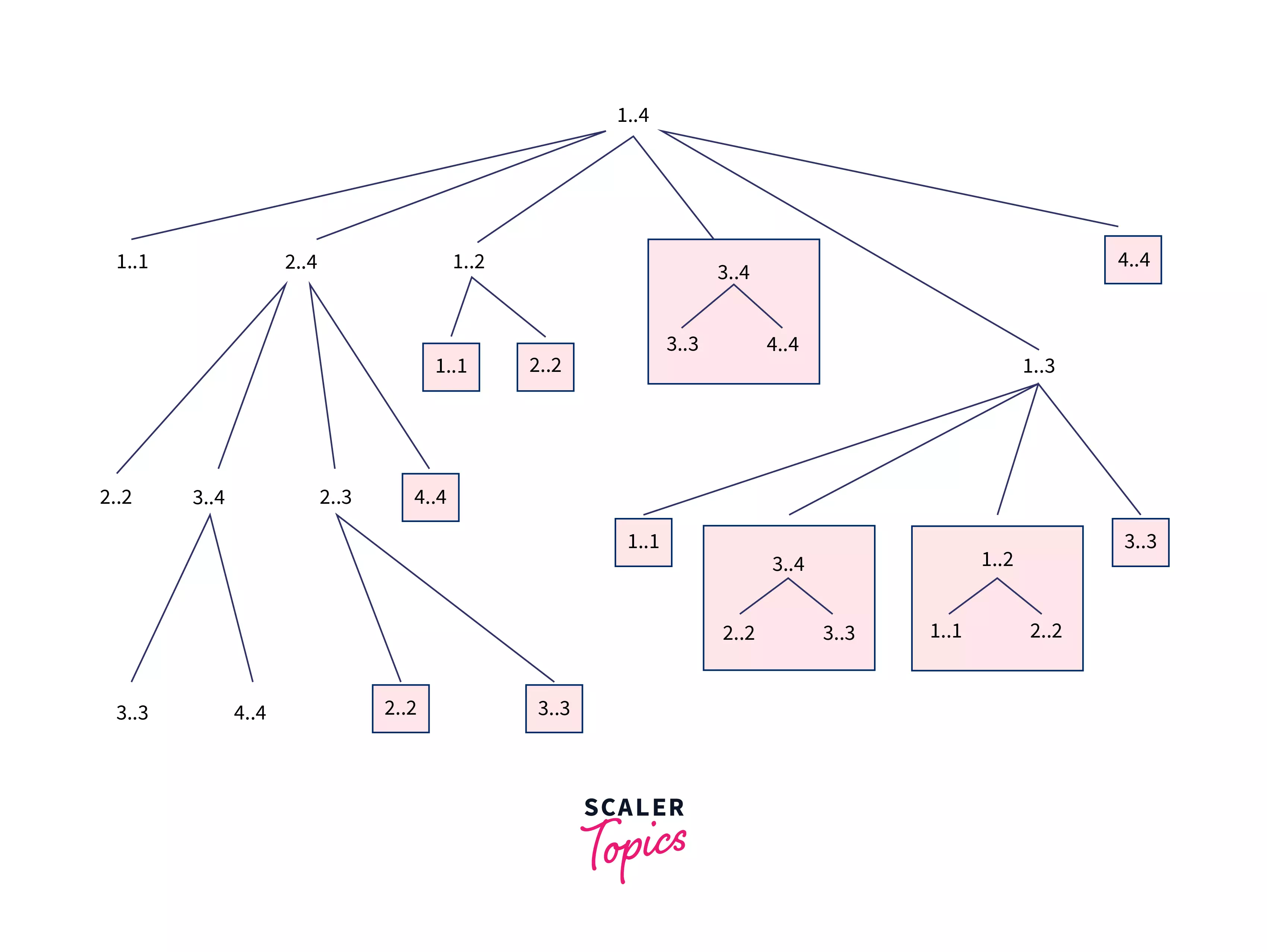

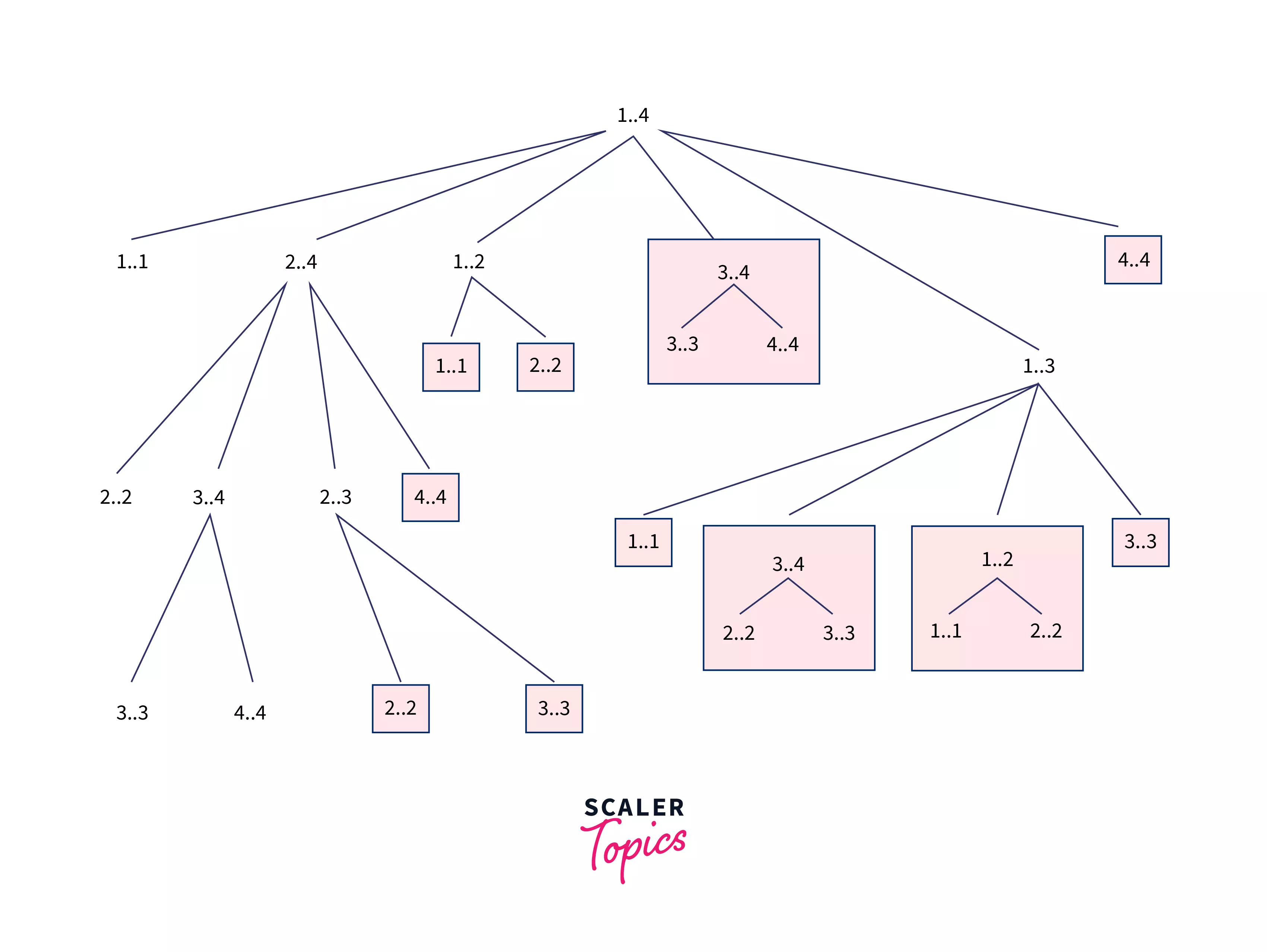

If we closely observe the recursive tree that is formed during finding the multiplication order, we will find that the result of the same sub-problem had been calculated many times also many overlapping sub-problems can be seen in the recursive tree.

For example, in a matrix chain of length 4, the recursive tree formed looks like -

We can see that answers for the sub-problem $1..2$, and $2..3$ have been calculated two times. So if we store them somewhere it will highly beneficial to speed up the process.

All these things suggest using Dynamic programming to find an efficient solution.

DP With Memoization

Algorithm for DP Using Memoization

The algorithm is almost the same as the naive recursive solution with the only change that here we will use a array of dimension .

Steps -

-

Declare a global array of dimension and initialize all the cells with value .

-

holds the answer for the sub-problem and whenever we will need to calculate we would simply return the stored answer instead of calculating it again.

-

Initialize variables low with , and high with and call the function to find the answer to this problem.

-

If then return 0 because we don't need any step to solve sub-problem with a single matrix.

-

If it means the answer for this problem has already been calculated. So we will simply return instead of solving it again.

-

In the other case, for each to we will find the minimum number of steps in solving the left sub-problem, right sub-problem, and steps required in multiplying the result obtained from both sides. Store the result obtained in dp[low][high] and return it.

-

At last answer for and will be returned.

Pseudocode

Explanation - We will use almost the same approach as Recursive Implementation with the only change that, we will maintain a 2-D array (say dp) of dimensions all of whose entries initialized with -1 where dp[i][j] stores result for the sub-problem i..j.

So for calculating the answer for the sub-problem i..j firstly we will check if we already have calculated the answer for it in past, by checking that if dp[i][j]!=-1. So if we already have calculated the answer for it we need not solve it again instead, we can simply return dp[i][j]

C/C++ Implementation

Output -

Complexity Analysis

-

Time Complexity - In the function we are iterating from to which costs and in each iteration, it takes time to calculate the answer of left and right sub-problems. Hence, the overall time complexity is

-

Space Complexity - We are using array of dimension . So the space complexity is

Bottom-up DP Solution

Algorithm

To solve this problem in a Bottom-up manner we will be following the "Gap strategy". Gap strategy means firstly we will solve all the sub-problems with gap=0, then with gap=1, and at last problem with gap=n-2.

For solving a problem with gap such that , all the problems of size will be taken into account. Please refer to the following algorithm and example for a better understanding.

- For sub-problem with gap 0 (single matrix), no multiplication is required so the answer will be 0.

- For sub-problem with gap 1 (two matrices A and B) the only option is to simply find steps required in multiplying A and B.

- For sub-problems with gap we need to find the minimum by placing the brackets at all the possible places.

For example -

- Answer for the sub-problem , when is 0.

- Answer for the sub-problem , when is .

- Answer for the sub-problem , when , is obtained by placing bracket at all possible places, and then calculating the sum of left sub-problem, right sub-problem and cost of multiplying matrices obtained from left and right side.

Pseudocode

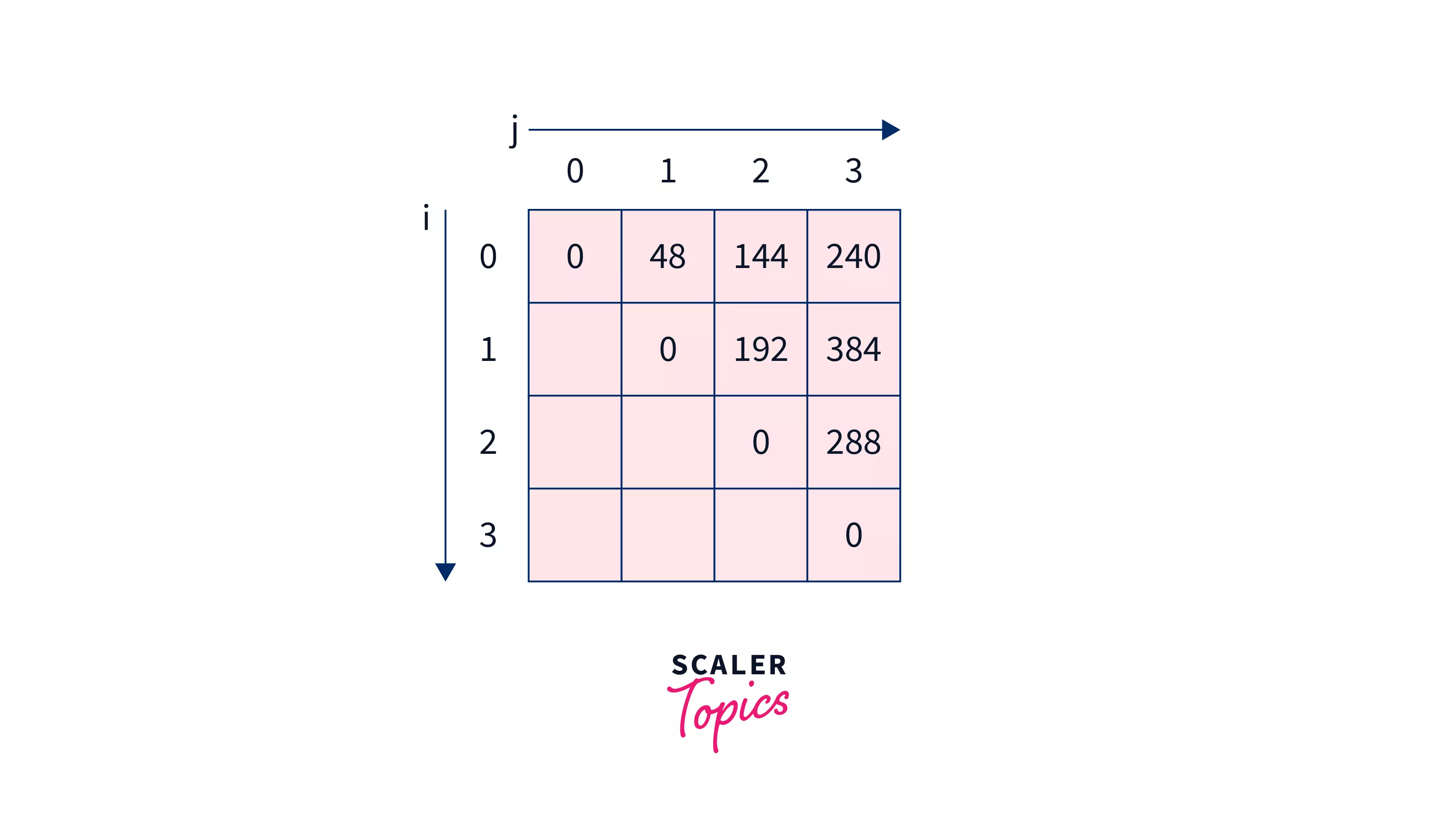

Let's dry run this algorithm on .

Here the length of the chain is 5, so we will create array of dimension as shown below -

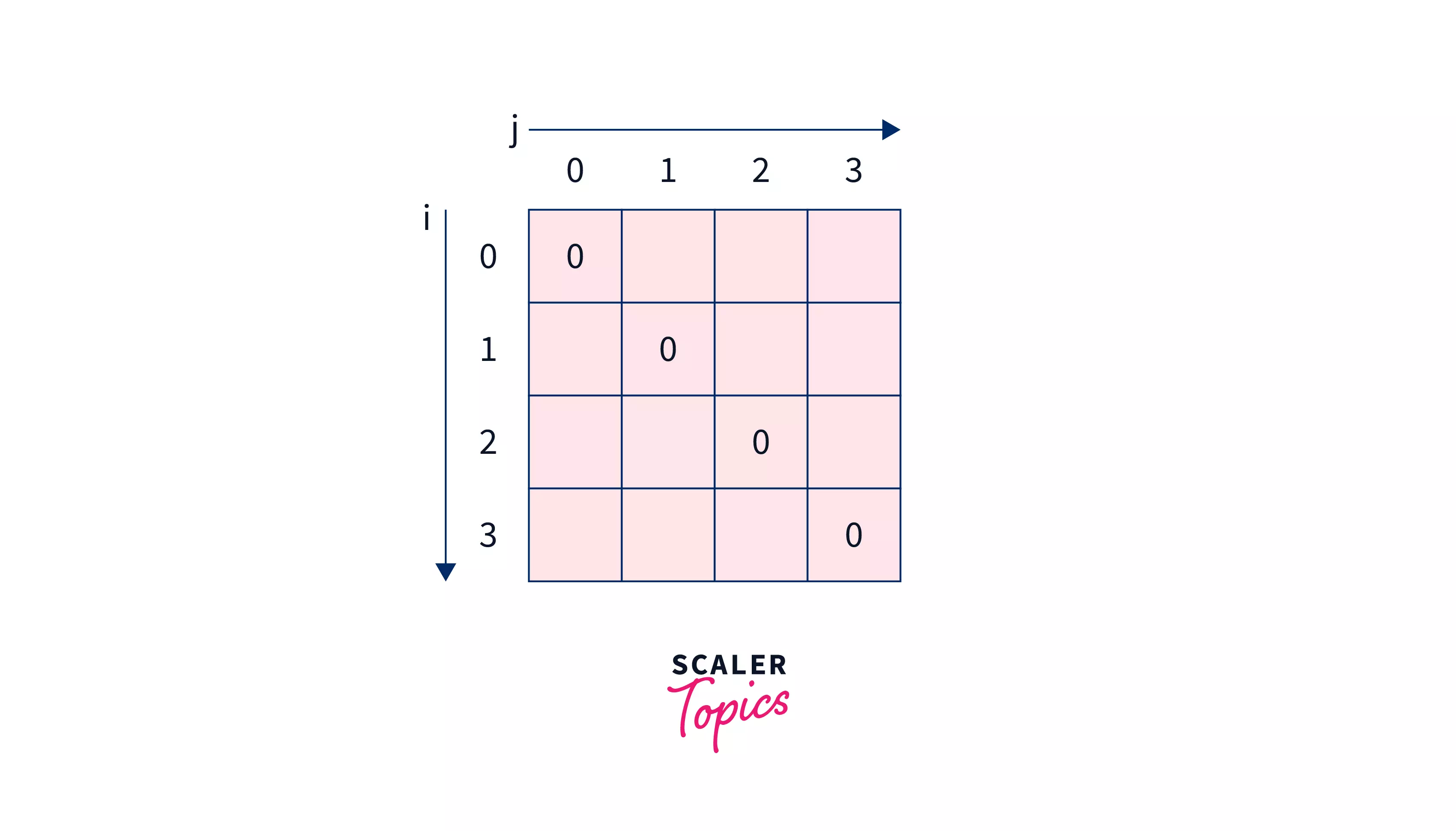

Then solving sub-problems of size and . As per the algorithm all of them will result in 0.

After which array will look like -

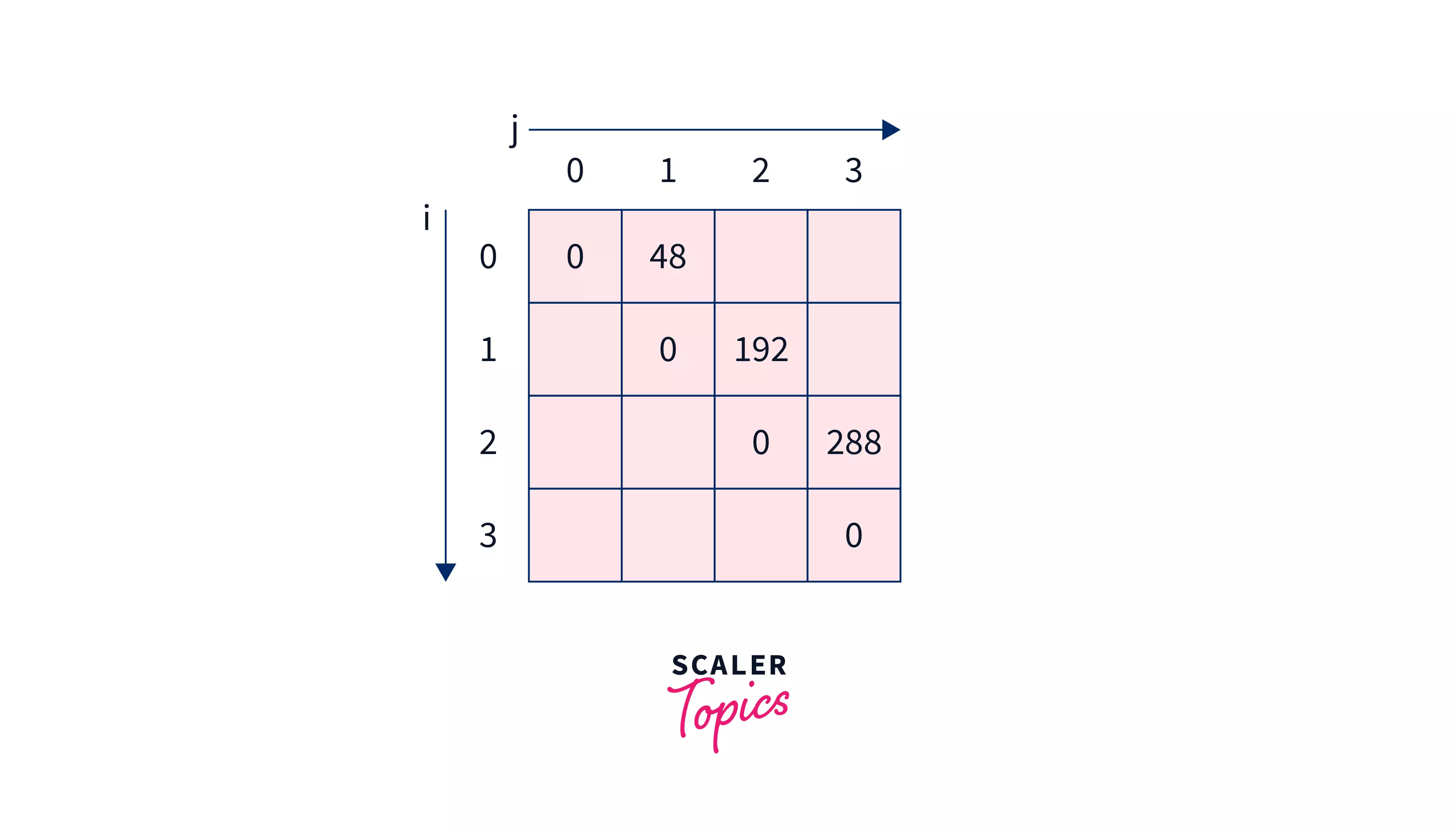

Solving all sub-problems of gap and .

- will result in

- will result in

- will result in

After which array will look like -

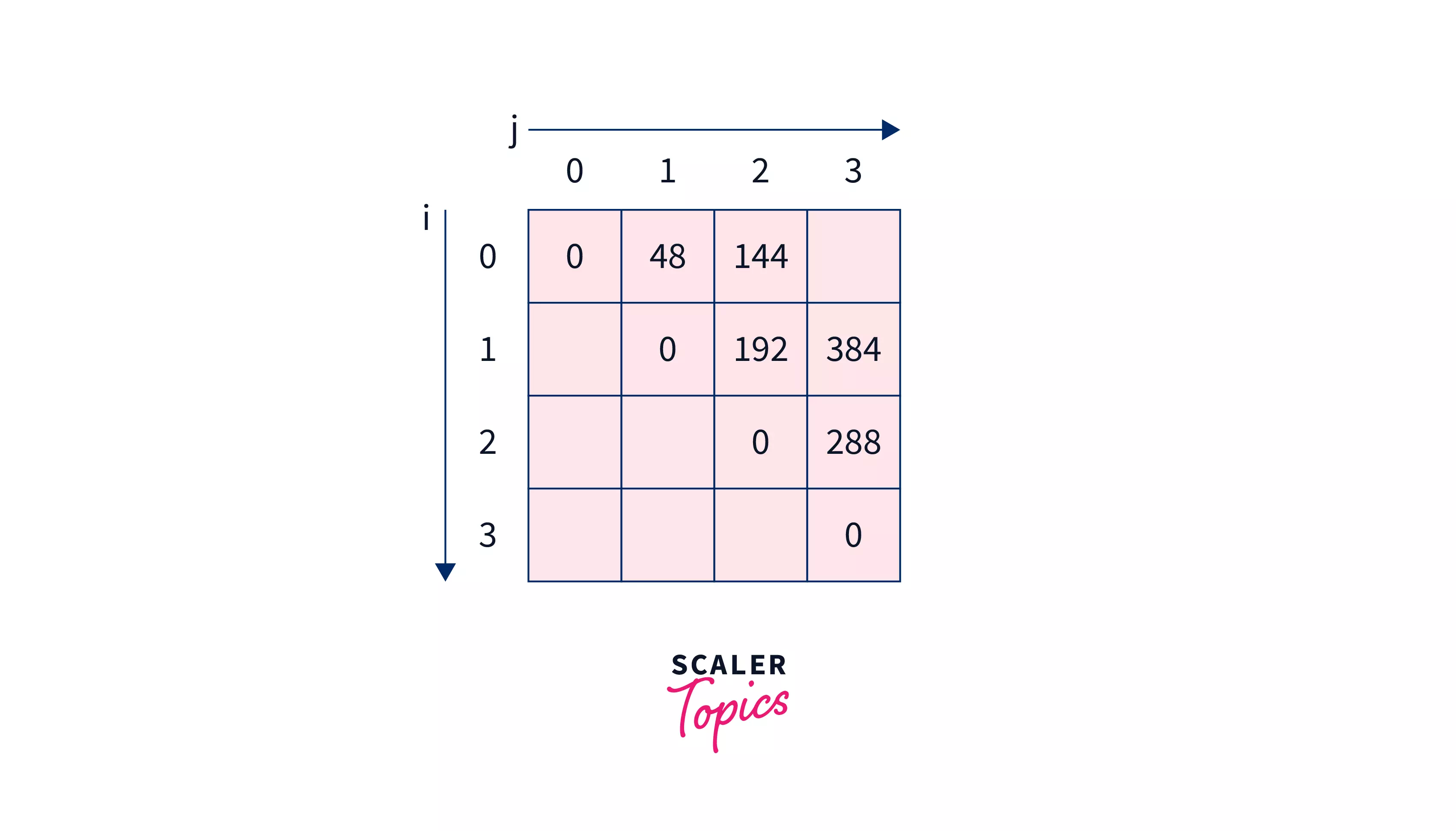

Now solving sub-problems with gap and .

-

will result in minimum of -

Hence we will make as .

-

will result in minimum of -

Hence we will make as

Now solving the last problem (main problem) with gap 3 . It will be minimum of -

So 240 is the minimum among them, hence we will make as 240 making array look like -

At last we will return .

C/C++ Implementation

We will use a 2-D array (say ) of dimensions because the last problem is of size/gap . Then we will iterate for each sub-problem with gap/size till the last problem with gap/size and compute the answer for the sub-problem based on the rules discussed in the algorithm section. At last, the answer of the final problem will be stored in so we will simply return it.

Output -

Complexity Analysis

- Time Complexity - We are using three nested for loops, each of which is iterating roughly times. Hence, the overall time complexity is .

- Space Complexity - We are using an auxiliary array of dimensions, hence space complexity is

Join our expert-led Free Dynamic Programming Course to master advanced problem-solving techniques and enhance your programming prowess.

Conclusion

- In the matrix chain multiplication problem, the minimum number of multiplication steps required to multiply a chain of matrices has been calculated.

- Determining the minimum number of steps required in the matrix chain multiplication can highly speed up the multiplication process.

- It takes time and auxiliarly space to calculate the minimum number of steps required to multiply a chain of matrices using the dynamic programming method.

- It is very important to decide the order of multiplication of matrices in matrix chain multiplication to perform the task efficiently.

- So the minimum number of multiplication steps required to multiply a chain matrix in matrix chain multiplication of length has been computed.

- Trying out all the possibilities recursively is very time consuming hence the method of dynamic programming is used to find the same.

- The minimum number of steps required to multiply a chain of matrices in matrix chain multiplication can be found in time complexity using dynamic programming.