Top NLP Engines

Overview

NLP engines extract valuable information from a sentence, whether typed or spoken, and translate it into structured data. These engines are then used for a wide range of NLP tasks, such as powering chatbots.

Introduction

The fundamental goal of natural language processing is to understand human input and translate it into a form a computer can act on. To make this possible, engineers train a bot to extract valuable information from a sentence, whether typed or spoken, and translate it into structured data. That is exactly what NLP engines do.

Once the user's query has been understood, the software built on the NLP engine can apply its own logic to respond to the query and help users achieve their goals. In this article, we're going to discuss the top natural language processing engines. But before we get to that, let's clarify the difference between NLP and NLU.

What is the Difference Between NLP and NLU?

Natural Language Processing (NLP): In the context of artificial intelligence (AI), natural language processing is an umbrella term for the many disciplines that deal with the interaction between computer systems and human (natural) languages. From this perspective, NLP encompasses sub-disciplines such as discourse analysis, relationship extraction, natural language understanding, and several other fields of language analysis.

Natural Language Understanding (NLU): NLU is a subset of NLP that focuses on reading comprehension and semantic analysis, that is, working out what a piece of text actually means. Today, combining NLP and NLU technologies is becoming increasingly important across many software sectors, including bot development. While numerous vendors and platforms offer NLP and NLU technology, several have become particularly popular among bot developers, and some of them are covered later in this article.

Types of NLP Engines

Now that we know what NLP engines are, and how Natural Language Processing differs from Natural Language Understanding, let's look at the types of NLP engines that exist.

Cloud NLP Engines

Cloud NLP engines provide benefits right out of the box. They are pre-trained on a variety of text corpora, and their providers continually update them as more interaction data becomes available, so they stay current. This gives them a head start, and they are typically inexpensive and uncomplicated to adopt. Cloud NLP is simple to use even for those with little experience.

Cloud NLP engines suit businesses for which sharing data with a third-party cloud provider is not an issue. Typical use cases include IT, HR, and restaurant applications. Some of the cloud NLP engines available are Amazon Comprehend, Google Cloud AutoML, Dialogflow, and Microsoft LUIS.

In-house NLP Engines

The other type is the in-house NLP engine. In-house NLP engines suit business applications where privacy is critical and/or where the company has agreed not to share customer data with third parties. A custom, in-house engine is especially important when the bot runs on a company intranet used primarily for internal business purposes. In addition, the banking, healthcare, and finance industries use in-house NLP engines in situations where data sharing is expressly banned.

Architecture of an NLP Engine

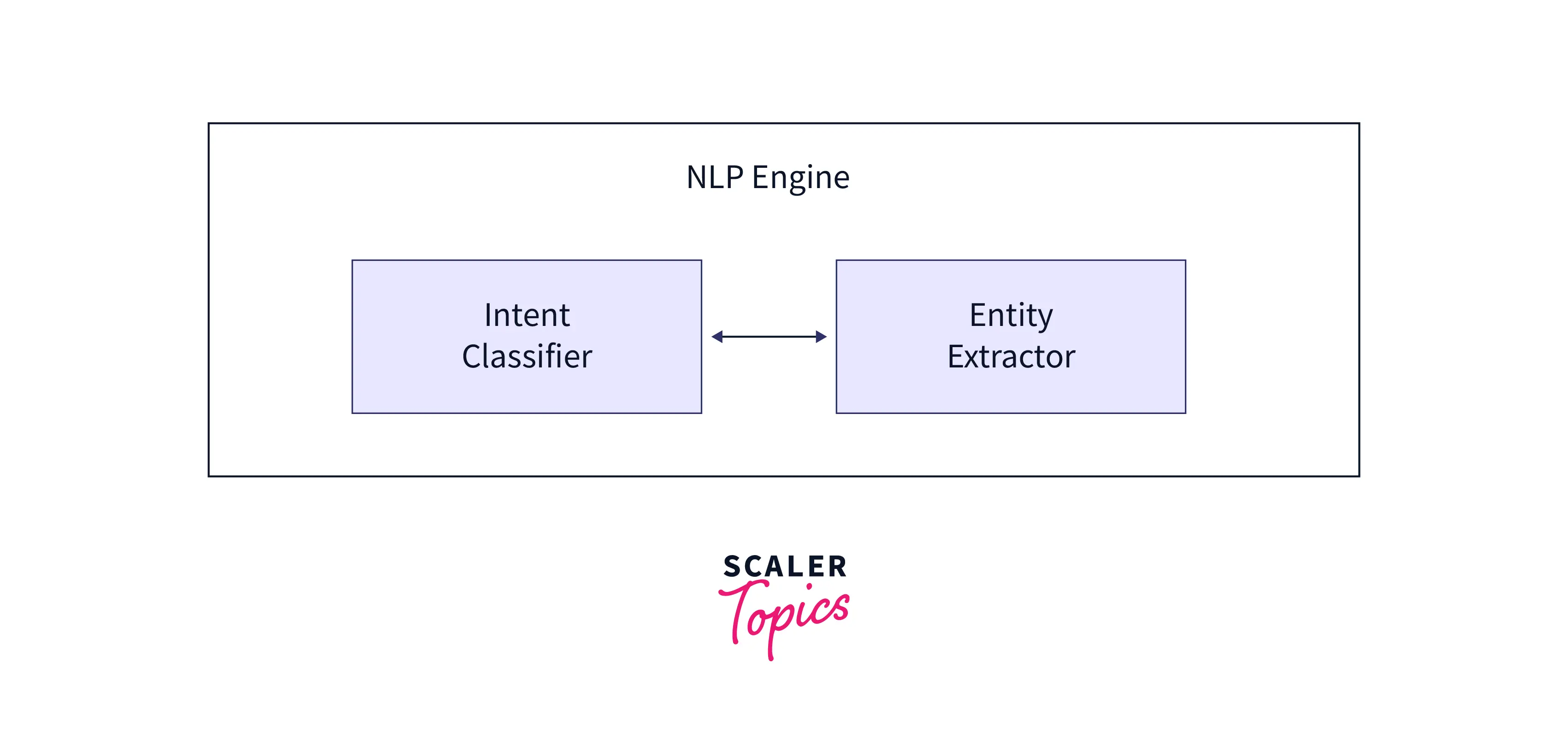

Before we get into details, take a look at a diagram depicting the architecture of an NLP engine.

An NLP engine interprets natural language and converts it into structured data. An engine has multiple components, and they work in tandem to resolve the user's problem or fulfill their intent. A capable engine should handle the following:

Understanding Speech: When the user speaks, the chatbot should understand both what the user is saying and what they intend, and respond appropriately.

Keeping Context: Chatbots should be sophisticated enough to track the context of a conversation, because a user may use the same terms to mean different things in different situations. This is a standard requirement.

Custom Question and Answer System: The user may ask about a dynamic knowledge base, in which case answering requires the chatbot to search through company documentation, policies, CRMs, ticketing information, and so on.
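To make "keeping context" concrete, here is a minimal Python sketch. It assumes a toy keyword-based understanding step and a hypothetical `book_table` intent with `date` and `party_size` slots (none of these come from a real engine). The bot remembers the last intent and slot values, so a follow-up such as "What about tomorrow?" is read as a change to the earlier booking rather than a new, unclear request.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class DialogueContext:
    """What the bot has understood so far in the conversation."""
    intent: Optional[str] = None
    slots: Dict[str, str] = field(default_factory=dict)

    def update(self, intent: Optional[str], slots: Dict[str, str]) -> None:
        # Keep the previous intent when the new turn does not state one.
        if intent is not None:
            self.intent = intent
        # New slot values override old ones; slots not mentioned are carried over.
        self.slots.update(slots)


def understand(text: str) -> Tuple[Optional[str], Dict[str, str]]:
    """Hypothetical toy NLU step: keyword rules instead of a trained model."""
    words = text.lower().replace("?", " ").replace(",", " ").replace(".", " ").split()
    intent = "book_table" if "book" in words else None
    slots: Dict[str, str] = {}
    for day in ("today", "tomorrow"):
        if day in words:
            slots["date"] = day
    # Treat a number right after "for" as the party size ("for 4").
    if "for" in words:
        idx = words.index("for")
        if idx + 1 < len(words) and words[idx + 1].isdigit():
            slots["party_size"] = words[idx + 1]
    return intent, slots


context = DialogueContext()
for utterance in ["Book a table for 4 today", "What about tomorrow?"]:
    context.update(*understand(utterance))
    print(context.intent, context.slots)
# book_table {'date': 'today', 'party_size': '4'}
# book_table {'date': 'tomorrow', 'party_size': '4'}
```

A real engine would replace `understand` with a trained model and would usually expire context after a timeout or a change of topic; the sketch only shows the carry-over idea.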

Overview of the architecture of the NLP Engine

The architecture of an NLP engine has 2 major components:

1. Intent Classifier:

The intent classifier analyses the user's input, determines its meaning, and maps it to one of the chatbot's supported intents. This is known as intent classification. A classifier is a method of sorting data (in this case, a sentence) into one of several groups. Much as people sort objects into categories (a violin is an instrument, a shirt is a type of apparel, and pleasure is an emotion), a chatbot assigns the input it receives to a category to understand the intention behind it. Developers commonly use the following approaches:

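Pattern Matching: Hand-written patterns, such as keywords or regular expressions, are matched against the incoming text to assign it to an intent.

Machine Learning Methods: Classical machine learning algorithms, such as Naive Bayes or support vector machines, are trained as multi-class classifiers that map each sentence to one of the intents.

Neural Networks: Neural networks learn from text represented as word embeddings, dense vectors that place words with similar meanings close together.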
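The pattern-matching approach can be sketched in a few lines of Python. The intents (`order_food`, `track_order`, `greet`) and their regular expressions are hypothetical examples for a food-ordering bot; the classifier returns the first intent whose pattern matches, or `None` so the bot can fall back to a default reply.

```python
import re
from typing import Optional

# Hypothetical intents and regular-expression patterns for a food-ordering bot.
INTENT_PATTERNS = {
    "order_food": [r"\b(order|buy|get)\b.*\b(pizza|burger|pasta)\b"],
    "track_order": [r"\bwhere\b.*\border\b", r"\btrack\b"],
    "greet": [r"^\s*(hi|hello|hey)\b"],
}


def classify_intent(text: str) -> Optional[str]:
    """Return the first intent whose pattern matches, or None as a fallback."""
    lowered = text.lower()
    for intent, patterns in INTENT_PATTERNS.items():
        if any(re.search(pattern, lowered) for pattern in patterns):
            return intent
    return None


print(classify_intent("I want to order a pizza"))  # order_food
print(classify_intent("Where is my order?"))       # track_order
print(classify_intent("Hello there"))              # greet
print(classify_intent("Tell me a joke"))           # None
```

Pattern matching is transparent and needs no training data, but it is brittle: "I don't want to order a pizza" would still match `order_food`, which is one reason developers move to machine learning or neural approaches.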

Both machine learning methods and neural networks need a numeric representation of text that a machine can work with. Sentence vectors meet this requirement: vector space models convert each sentence into an equivalent mathematical vector, representing its meaning as a point in a multidimensional space. These vectors can then be used to classify intent and to measure how similar different sentences are.
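As a minimal illustration of the vector space idea, the sketch below turns each sentence into a bag-of-words vector (word counts) and assigns a new sentence the intent of its most similar training example, measured by cosine similarity. The training sentences and intent names are hypothetical, and raw word counts are a deliberately simple stand-in for the learned embeddings a neural network would use.

```python
import math
import re
from collections import Counter
from typing import Dict, List

# Hypothetical training examples: a few sentences per intent.
TRAINING_DATA: Dict[str, List[str]] = {
    "order_food": ["I want to order a pizza", "can I get a burger please"],
    "track_order": ["where is my order", "track my delivery status"],
    "cancel_order": ["cancel my order", "I no longer want my order cancel it"],
}


def vectorize(sentence: str) -> Counter:
    """Bag-of-words vector: each word maps to how often it occurs."""
    return Counter(re.findall(r"[a-z']+", sentence.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)  # Counter returns 0 for missing words
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def classify(sentence: str) -> str:
    """Nearest-neighbour classification: intent of the most similar example."""
    query = vectorize(sentence)
    best_intent, best_score = "", -1.0
    for intent, examples in TRAINING_DATA.items():
        for example in examples:
            score = cosine(query, vectorize(example))
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent


print(classify("please cancel the order"))         # cancel_order
print(classify("where is my delivery"))            # track_order
print(classify("I would like to order a burger"))  # order_food
```

"order" appears in examples of all three intents, so it does little to separate them; "cancel" appears only in the `cancel_order` examples and is what pushes the first query toward that intent.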

2. Entity Extractor:

The entity extractor is responsible for extracting key information from the user's query. It extracts specific data such as:

- The type of dish the user wants

- The time of the order

- The type of problem the user has encountered

- The customer's name, phone number, address, and other details
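A simple entity extractor can combine regular expressions for well-structured values (times, phone numbers) with a lookup list for domain terms such as dishes. The sketch below assumes a hypothetical restaurant menu and a 10-digit phone format like `555-123-4567`; production engines typically rely on trained named entity recognition models rather than hand-written rules.

```python
import re
from typing import Dict, List

# Hypothetical menu used as a lookup list (gazetteer) for the "dish" entity.
MENU = ["margherita pizza", "pizza", "veg burger", "burger", "pasta"]

# Times such as "7:30 pm" or "8pm".
TIME_RE = re.compile(r"\b(\d{1,2}(?::\d{2})?\s?(?:am|pm))\b", re.IGNORECASE)
# 10-digit phone numbers with optional "-" or space separators (an assumption).
PHONE_RE = re.compile(r"\b(\d{3}[-\s]?\d{3}[-\s]?\d{4})\b")


def extract_entities(text: str) -> Dict[str, List[str]]:
    """Return the dishes, times, and phone numbers found in the text."""
    entities: Dict[str, List[str]] = {"dish": [], "time": [], "phone": []}
    lowered = text.lower()
    # Check longer menu items first so "margherita pizza" wins over "pizza".
    for dish in sorted(MENU, key=len, reverse=True):
        if re.search(rf"\b{re.escape(dish)}\b", lowered):
            # Skip items already covered by a longer match.
            if not any(dish in found for found in entities["dish"]):
                entities["dish"].append(dish)
    entities["time"] = TIME_RE.findall(text)
    entities["phone"] = PHONE_RE.findall(text)
    return entities


print(extract_entities("I'd like a margherita pizza at 7:30 pm, call me on 555-123-4567"))
# {'dish': ['margherita pizza'], 'time': ['7:30 pm'], 'phone': ['555-123-4567']}
```

Combined with an intent from the classifier, this output is exactly the kind of structured data the rest of the bot can act on.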

Best Natural Language Processing Tools

Now that we know enough about NLP engines, let's look at some of the best natural language processing tools available for making NLP tasks easier.

MonkeyLearn

MonkeyLearn is an easy-to-use, NLP-powered tool that may help you acquire important insights from text data.

To begin, choose one of the pre-trained models to perform text analysis tasks such as sentiment analysis, topic categorization, or keyword extraction. For more accurate insights, you can also create a custom machine learning model tailored to your organization.

Once your models give accurate insights, you can connect them to your favorite apps (such as Google Sheets, Zendesk, Excel, or Zapier) using MonkeyLearn's no-code integrations, or through MonkeyLearn's API, which can be used from all major programming languages.

Aylien

Aylien is a SaaS API that analyses massive amounts of text-based data, such as academic articles, real-time information from news sites, and social media data, using deep learning and NLP. It can be used for NLP tasks such as text summarization, article extraction, entity extraction, and sentiment analysis, to name a few.

IBM Watson

Watson Assistant (formerly Watson Conversation) enables you to create an artificial intelligence assistant for a range of channels, including mobile devices, chat platforms, and even robots. You can build multilingual applications that understand natural language and respond to customers in human-like dialogue, and connect them to messaging channels, web environments, and social networks to scale. Setting up a workspace and developing your application to meet your specific requirements is straightforward.

Google Cloud NLP API

The Google Cloud Natural Language API includes, among other things, various pre-trained models for sentiment analysis, content classification, and entity extraction. It also offers AutoML Natural Language, which lets you create custom machine learning models.

As part of Google Cloud, it builds on Google's question-answering and language-understanding technology.
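To make the API concrete, here is a minimal sketch that builds, but does not send, a sentiment request to the Natural Language API's REST method `documents:analyzeSentiment`, using only Python's standard library. It assumes authentication with an API key passed as the `key` query parameter (`YOUR_API_KEY` is a placeholder); many projects use service-account credentials or Google's official client library instead.

```python
import json
import urllib.request

# REST endpoint of the Cloud Natural Language API (v1) for sentiment analysis.
ENDPOINT = "https://language.googleapis.com/v1/documents:analyzeSentiment"


def build_sentiment_request(text: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an analyzeSentiment request for plain text."""
    body = {
        "document": {"type": "PLAIN_TEXT", "content": text},
        "encodingType": "UTF8",
    }
    return urllib.request.Request(
        url=f"{ENDPOINT}?key={api_key}",  # API-key auth is an assumption here
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_sentiment_request(
    "The delivery was fast and the food was great!", "YOUR_API_KEY"
)
print(request.get_method(), request.full_url)

# Sending it requires a valid key and network access:
# with urllib.request.urlopen(request) as response:
#     result = json.load(response)
#     print(result["documentSentiment"])  # includes "score" and "magnitude"
```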

Amazon Comprehend

Amazon Comprehend is a natural language processing (NLP) service that is part of Amazon Web Services (AWS). Its API can be used for NLP tasks including sentiment analysis, topic modeling, entity recognition, and more.

There is a dedicated edition for individuals who work in healthcare: Amazon Comprehend Medical, which allows you to undertake advanced analysis of medical data using Machine Learning.

NLTK

The Natural Language Toolkit (NLTK) is a leading Python library for building NLP models. NLTK, which focuses on NLP research and education, is supported by an active community and offers a variety of language-processing tutorials, example datasets, and resources such as the book Natural Language Processing with Python.

Although mastering this library takes time, it is regarded as an excellent playground for gaining hands-on NLP expertise. NLTK's modular nature allows it to provide several components for NLP tasks such as tokenization, tagging, stemming, parsing, and classification.

For more details on NLTK refer to the detailed guide right here.
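To show what tokenization and stemming actually do, here is a deliberately naive, standard-library-only Python sketch. It is not NLTK's implementation: NLTK provides far more robust tools for these steps (for example `nltk.word_tokenize` and `nltk.stem.PorterStemmer`), while the regular expression and suffix list below are simplified assumptions for illustration.

```python
import re
from typing import List

# Naive tokenizer: words (optionally with one internal apostrophe), numbers,
# or single punctuation marks.
TOKEN_RE = re.compile(r"[A-Za-z]+(?:'[A-Za-z]+)?|\d+|[^\sA-Za-z\d]")

# Crude suffix list, ordered longest first (NOT the Porter algorithm).
SUFFIXES = ("edly", "ing", "ed", "ly", "es", "s")


def tokenize(text: str) -> List[str]:
    """Split text into word, number, and punctuation tokens."""
    return TOKEN_RE.findall(text)


def naive_stem(word: str) -> str:
    """Strip the first matching suffix if a stem of 3+ characters remains."""
    lowered = word.lower()
    for suffix in SUFFIXES:
        if lowered.endswith(suffix) and len(lowered) - len(suffix) >= 3:
            return lowered[: -len(suffix)]
    return lowered


print([naive_stem(token) for token in tokenize("The cats were jumping quickly")])
# ['the', 'cat', 'were', 'jump', 'quick']
```

Crude rules like these also go wrong easily ("boxes" becomes "box", but "running" becomes "runn"), which is why real stemmers such as Porter's use carefully ordered rule sets.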

Stanford Core NLP

Stanford CoreNLP is a popular library created and maintained by the Stanford NLP Group at Stanford University. It's written in Java, so you'll need a Java installation (JDK) on your computer, although it can be used from most programming languages through APIs.

The CoreNLP toolkit lets you perform NLP tasks such as part-of-speech tagging, tokenization, and named entity recognition. Its key features include scalability and performance optimization, making it an excellent solution for complex tasks.

For more details on Stanford Core NLP refer to the detailed guide right here.
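Because CoreNLP can run as an HTTP server, programs written in languages other than Java can call it over the network. The Python sketch below only builds the request (it does not send it) and assumes a CoreNLP server is already running locally on port 9000, the default used in the CoreNLP server documentation. Annotator names are passed as JSON in the `properties` query parameter, and the raw text is sent as the POST body.

```python
import json
import urllib.parse
import urllib.request

# Assumes a local CoreNLP server, started for example with:
#   java -mx4g -cp "*" edu.stanford.nlp.pipeline.StanfordCoreNLPServer -port 9000
SERVER_URL = "http://localhost:9000/"


def build_corenlp_request(
    text: str, annotators: str = "tokenize,ssplit,pos,lemma,ner"
) -> urllib.request.Request:
    """Build (but do not send) a CoreNLP server request returning JSON."""
    properties = {"annotators": annotators, "outputFormat": "json"}
    query = urllib.parse.urlencode({"properties": json.dumps(properties)})
    return urllib.request.Request(
        url=f"{SERVER_URL}?{query}",
        data=text.encode("utf-8"),  # the text to annotate is the POST body
        method="POST",
    )


request = build_corenlp_request("Stanford University is located in California.")
print(request.full_url)

# With the server running:
# with urllib.request.urlopen(request) as response:
#     doc = json.load(response)
#     for token in doc["sentences"][0]["tokens"]:
#         print(token["word"], token["pos"], token["ner"])
```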

TextBlob

TextBlob is a Python library that extends NLTK, letting you perform many of the same NLP operations through a more intuitive, user-friendly interface. It has a gentler learning curve than other open-source libraries, making it a good choice for beginners who want to tackle NLP tasks such as sentiment analysis, text categorization, part-of-speech tagging, and much more.

To read more about TextBlob refer to the detailed article on TextBlob.
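TextBlob's default sentiment analyzer is lexicon-based: it looks up words in a dictionary of polarity scores and combines them, reporting polarity between -1.0 (negative) and 1.0 (positive). The standard-library sketch below illustrates that general idea with a tiny, hypothetical lexicon and a simple negation rule; it is not TextBlob's code, and its scores will not match TextBlob's.

```python
import re
from typing import Dict, List

# Tiny hypothetical polarity lexicon; real analyzers use thousands of entries.
LEXICON: Dict[str, float] = {
    "good": 0.7, "great": 0.8, "love": 0.5, "fast": 0.2,
    "bad": -0.7, "terrible": -1.0, "slow": -0.3, "cold": -0.4,
}
NEGATIONS = {"not", "never", "no"}


def polarity(text: str) -> float:
    """Average polarity of known words, in [-1.0, 1.0]; 0.0 if none are known."""
    words = re.findall(r"[a-z']+", text.lower())
    scores: List[float] = []
    for i, word in enumerate(words):
        if word in LEXICON:
            score = LEXICON[word]
            # Flip the sign when the previous word is a negation ("not good").
            if i > 0 and words[i - 1] in NEGATIONS:
                score = -score
            scores.append(score)
    return sum(scores) / len(scores) if scores else 0.0


print(polarity("The food was great"))             # 0.8
print(polarity("The food was not good"))          # -0.7
print(polarity("Great food but slow delivery"))   # mixed: positive and negative words
```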

SpaCy

spaCy is one of the newest open-source Python libraries for natural language processing on our list. It's lightning-fast, simple to use, well-documented, and built to handle enormous amounts of data, and it ships with a range of pre-trained NLP models to make your job easier. Unlike NLTK or CoreNLP, which offer a variety of algorithms for each task, spaCy keeps its menu short and serves up the best available option for each task.

This library is an excellent choice for preparing text for deep learning and excels at extraction tasks. Although it started out as an English-only library, it now supports many other languages as well.

GenSim

Gensim is a highly specialized Python library that mainly handles topic modeling tasks using algorithms such as Latent Dirichlet Allocation (LDA). It is also good at detecting text similarity, indexing texts, and navigating across documents.

This library is quick, scalable, and capable of processing massive amounts of data. To read more about Gensim, you can refer to this article.
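Document similarity of the kind Gensim supports is often computed by weighting words with TF-IDF and comparing documents with cosine similarity. The sketch below implements that technique in plain Python on three made-up documents. It is not Gensim's API (Gensim provides classes such as `gensim.models.TfidfModel` and `gensim.similarities.MatrixSimilarity` for this) and uses a simple, unsmoothed TF-IDF variant chosen for readability.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z]+", text.lower())


def tfidf_vectors(docs: List[str]) -> List[Dict[str, float]]:
    """TF-IDF weight for every word in every document."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(docs)
    # Document frequency: how many documents contain each word.
    df = Counter(word for tokens in tokenized for word in set(tokens))
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        vectors.append({
            word: (count / len(tokens)) * math.log(n_docs / df[word])
            for word, count in tf.items()
        })
    return vectors


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    dot = sum(weight * b.get(word, 0.0) for word, weight in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


docs = [
    "Topic models discover hidden topics in large text collections",
    "LDA is a popular algorithm for discovering topics in text",
    "The restaurant serves fresh pizza and pasta",
]
vectors = tfidf_vectors(docs)
print(round(cosine(vectors[0], vectors[1]), 3))  # shares "topics", "in", "text"
print(cosine(vectors[0], vectors[2]))            # 0.0, no words in common
```

A word that occurs in every document gets an IDF of log(1) = 0 and carries no weight, which is how TF-IDF downplays uninformative terms. Without stemming, "discover" and "discovering" are still treated as different words.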

Rasa

Rasa is an open-source framework for building conversational assistants. It has two important components: Rasa NLU for natural language understanding and Rasa Core for dialogue management (since Rasa 1.0, both ship together in a single package). Rasa NLU helps developers build domain-specific, contextual AI assistants with rich domain knowledge, and it does so by extracting intents and entities from user input. Rasa NLU is trained on a set of domain-specific intents and examples, producing a model that can be retrained and improved over time as more data is collected. This Rasa component can be thought of as "a set of high-level APIs for developing a language parser tailored to your use case utilizing existing NLP and ML libraries."

Refer to this article by Scaler if you'd like to learn how to build a chatbot using Rasa!

wit.ai

Wit.ai is a free platform that can also be used for commercial purposes. There are no request limits, but you must notify the Wit.ai team if you intend to send more than one request per second. Many languages are supported. If your app is public, the community will be able to see your intents, entities, and verified expressions, but not your logs; either way, you retain control of your data. More than 120,000 developers use it. Beyond chatbots, it also supports wearables and home devices.

Conclusion

- The fundamental goal of natural language processing is to understand human input and translate it into a form a computer can act on. NLP engines extract valuable information from a sentence, whether typed or spoken, and translate it into structured data.

- There are two main types of NLP engines: cloud NLP engines and in-house NLP engines.

- An NLP engine has two major components: the intent classifier and the entity extractor.

- Some of the best NLP tools are:

  - MonkeyLearn

  - Aylien

  - IBM Watson

  - Google Cloud NLP API

  - Amazon Comprehend

  - NLTK

  - Stanford Core NLP

  - TextBlob

  - SpaCy

  - GenSim

  - Rasa

  - wit.ai