Introduction to Object Tracking

Overview

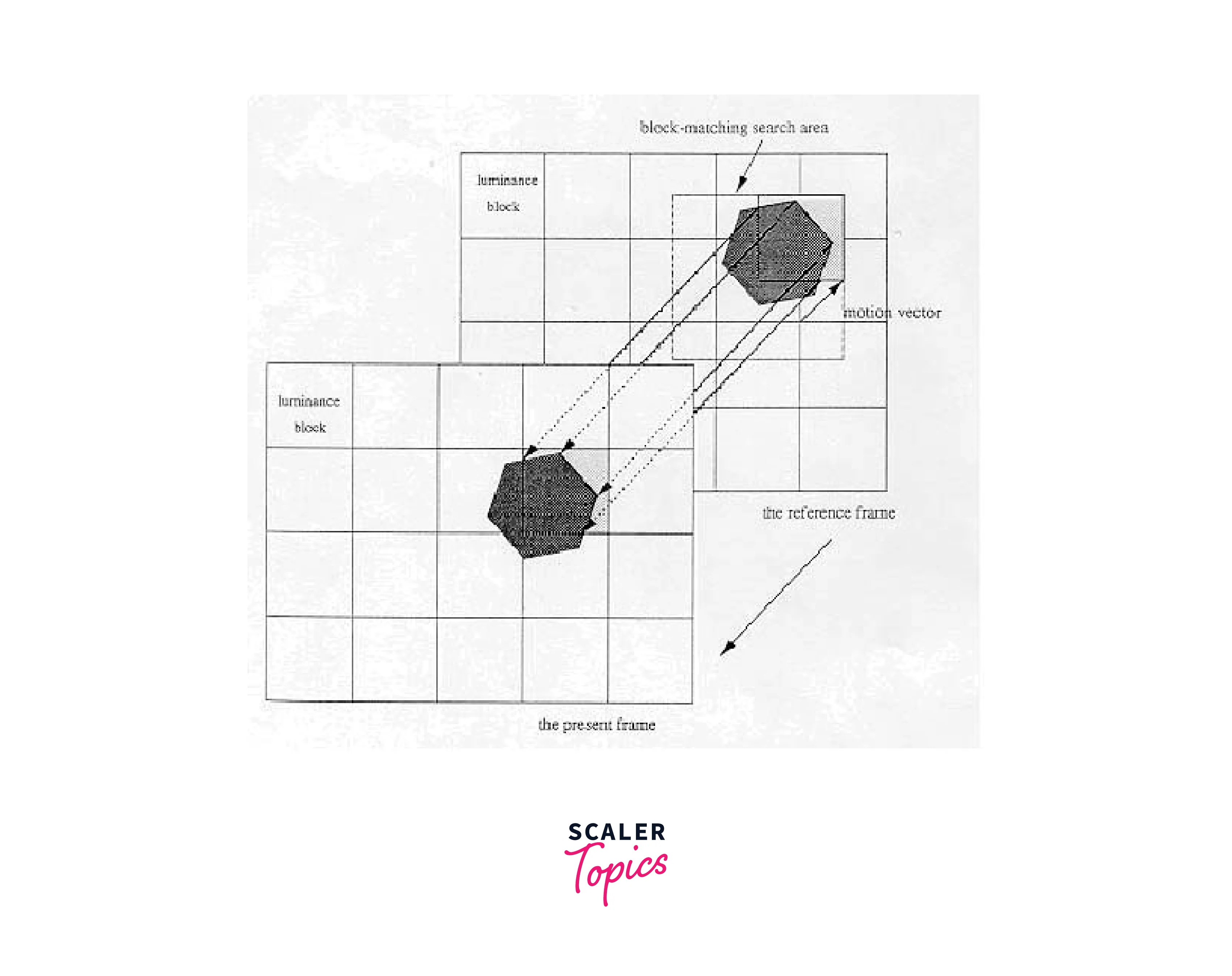

Object tracking is a computer vision technique that involves tracking an object in a video sequence over time. The main goal of object tracking is to estimate the position and motion of an object in a given scene. Object tracking has various applications in video surveillance, traffic monitoring, human-computer interaction, and robotics. Object tracking is different from object detection, which focuses on detecting the presence of objects in a given image or video frame.

Introduction

Object tracking is a technique used to monitor and follow objects as they move in a video or a sequence of frames. This technology is used in a wide range of applications, from traffic surveillance to sports analysis to human-robot interaction. Object tracking enables us to locate and track an object's movement in real-time, making it an essential tool for tasks that require continuous monitoring and analysis.

What is Object Tracking?

Object tracking is the process of locating and following an object's movement in a video or a sequence of frames. The goal of object tracking is to identify an object of interest in the first frame and track it as it moves in subsequent frames. This technique can be used for a variety of applications, including object recognition, traffic monitoring, and video surveillance.

Object tracking is a challenging task due to various factors such as occlusion, background clutter, illumination changes, and motion blur.

Researchers and engineers have developed various techniques to improve the accuracy and efficiency of object tracking. Traditional approaches include optical flow and Kalman filtering. More recent deep learning-based approaches include Siamese network trackers and tracking-by-detection pipelines built on detectors such as YOLOv7. OpenCV is a widely used library for implementing object tracking algorithms in real-time applications.

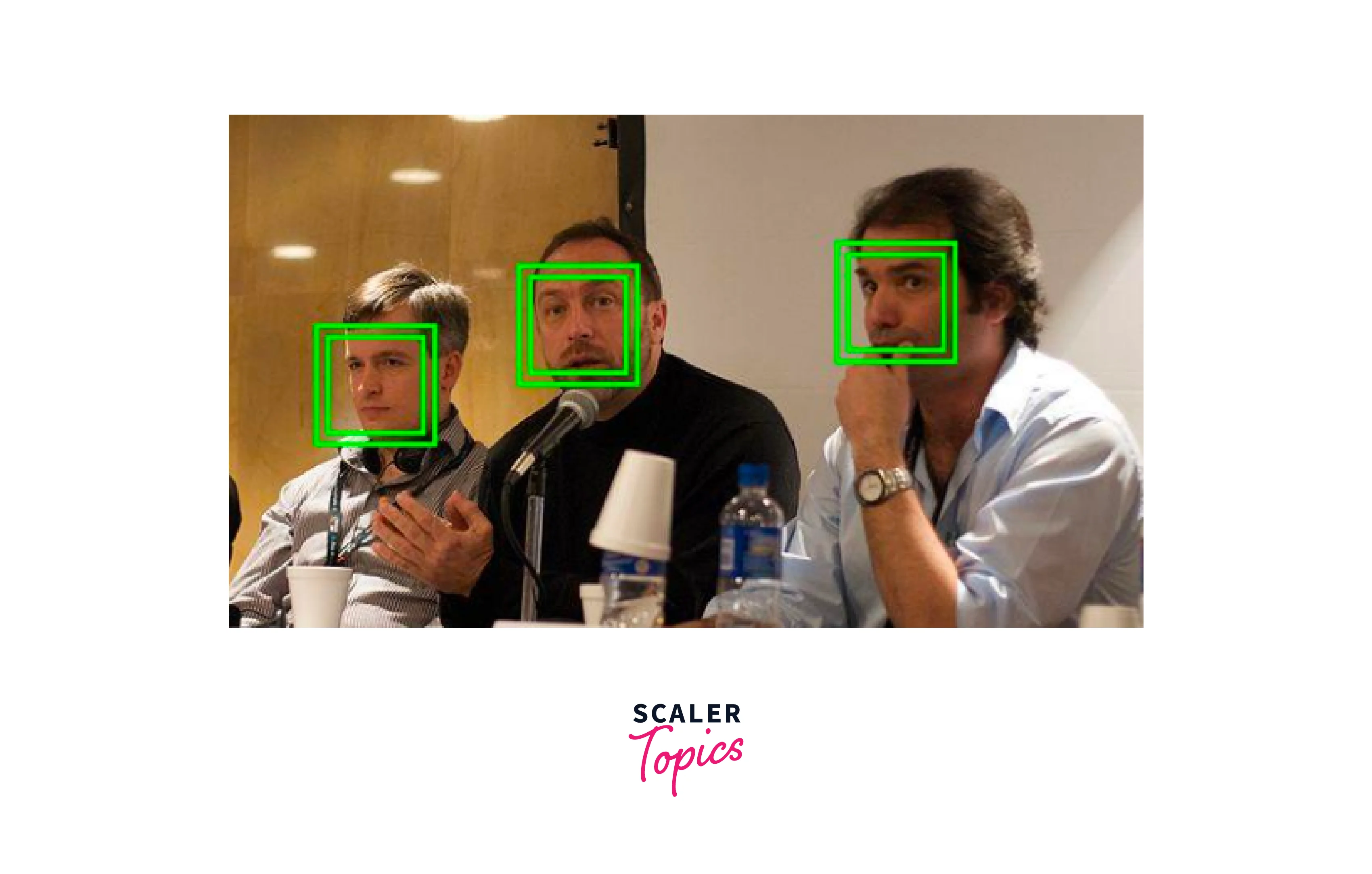

Object Tracking vs. Object Detection

Object tracking and object detection are two related but distinct computer vision techniques.

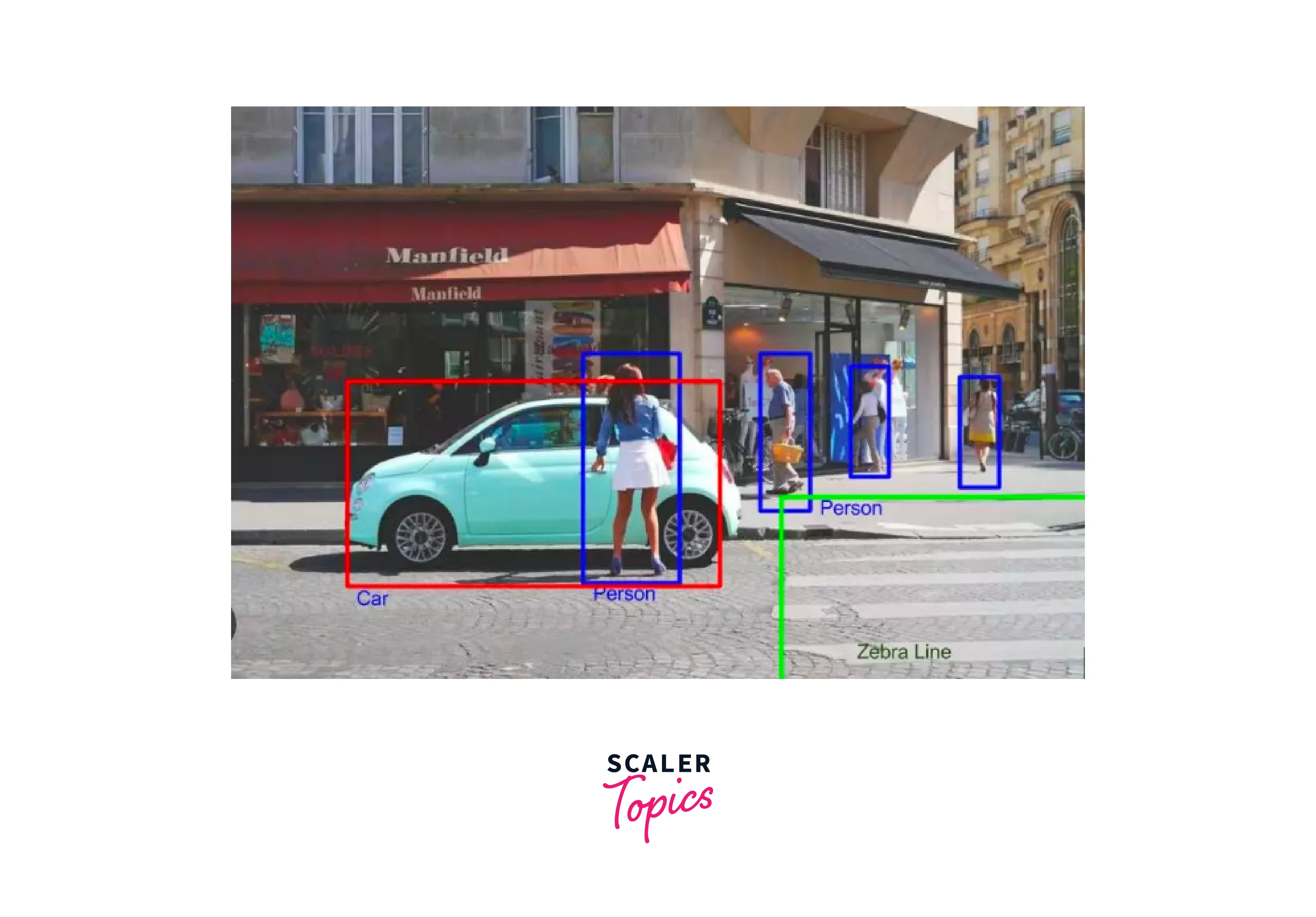

Object detection is the process of identifying and localizing objects in an image or a video frame. Object detection techniques can identify multiple objects in a single image and provide the location of each object in the image.

Object tracking, on the other hand, follows an object's movement across multiple frames. The table below summarizes the key differences between object detection and object tracking.

| Object Detection | Object Tracking |
|---|---|
| Identify objects in a single frame | Follow objects across multiple frames |
| Can detect multiple objects in a single image | Tracks a single object or multiple objects |
| Provides the location of each object in the image | Provides the object's trajectory over time |
| Does not consider object motion | Considers object motion and appearance over time |

Different Stages of the Object Tracking Process

The object tracking process involves several stages, which can be grouped into three main categories: initialization, tracking, and termination.

- Initialization: In this stage, the object of interest is identified in the first frame of the video or the sequence of frames. This is typically done using object detection techniques.

- Tracking: Once the object of interest is identified in the first frame, it is tracked in subsequent frames using various tracking techniques. These techniques can be based on the object's appearance, motion, or both.

- Termination: The object tracking process is terminated when the object of interest is no longer visible or when the tracking algorithm fails to track the object accurately.
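
The three stages can be sketched as a small driver loop. This is a minimal illustration rather than a specific OpenCV API: `detect`, `update`, and `max_misses` are hypothetical names, where `detect` stands in for any object detector and `update` for any tracker that returns `None` when it loses the target.

```python
from typing import Callable, Iterable, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height)


def run_tracking(
    frames: Iterable[object],
    detect: Callable[[object], Optional[Box]],
    update: Callable[[object, Box], Optional[Box]],
    max_misses: int = 5,
) -> List[Box]:
    """Return the boxes produced while the target was being tracked."""
    trajectory: List[Box] = []
    box: Optional[Box] = None
    misses = 0
    for frame in frames:
        if box is None:
            # Initialization: wait until the detector finds the object.
            box = detect(frame)
            if box is not None:
                trajectory.append(box)
            continue
        # Tracking: ask the tracker for the box in the new frame.
        new_box = update(frame, box)
        if new_box is None:
            misses += 1
            # Termination: give up after too many consecutive failures.
            if misses > max_misses:
                break
            continue
        misses = 0
        box = new_box
        trajectory.append(box)
    return trajectory
```

Allowing a few consecutive misses before terminating is a design choice: it lets the loop survive a brief occlusion instead of stopping at the first failed frame.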

Levels of Object Tracking

Object tracking can be categorized into three levels based on the level of complexity of the tracking process.

Point Tracking:

- Point tracking follows the movement of a single point or feature in an image over time. It works by first identifying a point of interest in the initial image and then tracking its position from frame to frame in a video or image sequence.

- It is often used in simple applications such as face tracking, where the position of the face is tracked over time to enable features such as automatic focus or exposure adjustment. It is also used in motion analysis, where the movement of a particular point on an object is tracked to determine its trajectory and speed.

- Point tracking algorithms typically work by first detecting a point of interest in the initial image using techniques such as corner detection or edge detection. They then use methods such as optical flow or template matching to track the position of the point in subsequent frames. While point tracking is a simple technique, it can be effective in applications where the movement of a single point or feature is of interest.
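
To make the template matching idea concrete, here is a minimal NumPy-only sketch. The function name `track_point` and its `patch` and `search` parameters are illustrative assumptions. It compares the patch around a point in the previous frame against nearby patches in the current frame and keeps the position with the lowest sum of squared differences. OpenCV's `cv2.matchTemplate` serves the same purpose far more efficiently.

```python
import numpy as np


def track_point(prev, curr, point, patch=7, search=10):
    """Find where the patch around `point` in `prev` moved to in `curr`.

    `point` is (row, col) and must lie at least `patch` pixels from the
    border of `prev`. `patch` and `search` are half-sizes in pixels.
    """
    r, c = point
    template = prev[r - patch:r + patch + 1, c - patch:c + patch + 1].astype(np.float64)
    best_score, best_pos = np.inf, point
    for dr in range(-search, search + 1):
        for dc in range(-search, search + 1):
            rr, cc = r + dr, c + dc
            # Skip candidates whose patch would fall outside the image.
            if rr - patch < 0 or cc - patch < 0:
                continue
            if rr + patch >= curr.shape[0] or cc + patch >= curr.shape[1]:
                continue
            window = curr[rr - patch:rr + patch + 1, cc - patch:cc + patch + 1]
            score = np.sum((window - template) ** 2)
            if score < best_score:
                best_score, best_pos = score, (rr, cc)
    return best_pos


# Synthetic check: shift a random texture 3 rows down and 2 columns left.
rng = np.random.default_rng(0)
frame0 = rng.integers(0, 256, size=(64, 64)).astype(np.uint8)
frame1 = np.roll(frame0, shift=(3, -2), axis=(0, 1))
print(track_point(frame0, frame1, (30, 30)))  # (33, 28)
```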

Object Tracking:

- Object tracking follows the movement of an object in a video or a sequence of frames. It works by first identifying the object of interest in the initial frame and then using various techniques to track its movement in subsequent frames. It is commonly used in applications such as surveillance and robotics.

- In surveillance applications, object tracking can be used to monitor the movement of people or vehicles in a video feed. It can be used to detect and track suspicious activity, or to follow individuals or vehicles of interest. In robotics applications, it can be used to track the movement of objects in a robot's environment.

- Object tracking in OpenCV typically combines feature extraction, object detection, and tracking algorithms such as mean-shift or correlation-based tracking. These techniques enable the object of interest to be tracked as it moves through the video or sequence of frames. While object tracking can be challenging due to factors such as occlusions and changes in lighting, it is effective in many applications where the movement of objects is of interest.

Event Tracking:

- Event tracking follows complex events that involve multiple objects in a video or sequence of frames. It is often used in applications such as traffic monitoring or sports analysis, where the movement of multiple objects needs to be tracked to understand the overall event. This requires algorithms that can analyze the behavior of multiple objects over time.

- In traffic monitoring applications, event tracking can be used to monitor traffic flow and detect traffic incidents such as accidents or congestion. It can also be used to track individual vehicles as they move through an intersection or along a road network. In sports analysis applications, event tracking can be used to track the movement of players and balls to analyze game play and detect important events such as goals or fouls.

- Event tracking typically involves advanced computer vision techniques such as object detection, tracking, and classification. These techniques enable the system to detect and track multiple objects in a video feed and analyze their movement and interactions to understand the overall event. Event tracking can be challenging due to the complexity of the events being tracked, but it is effective in many applications where understanding the movement of multiple objects is important.

Challenges in Object Tracking

Object tracking is a complex problem, and several challenges must be addressed to achieve accurate and robust tracking. The main challenges are described below.

Occlusion in Object Tracking in OpenCV

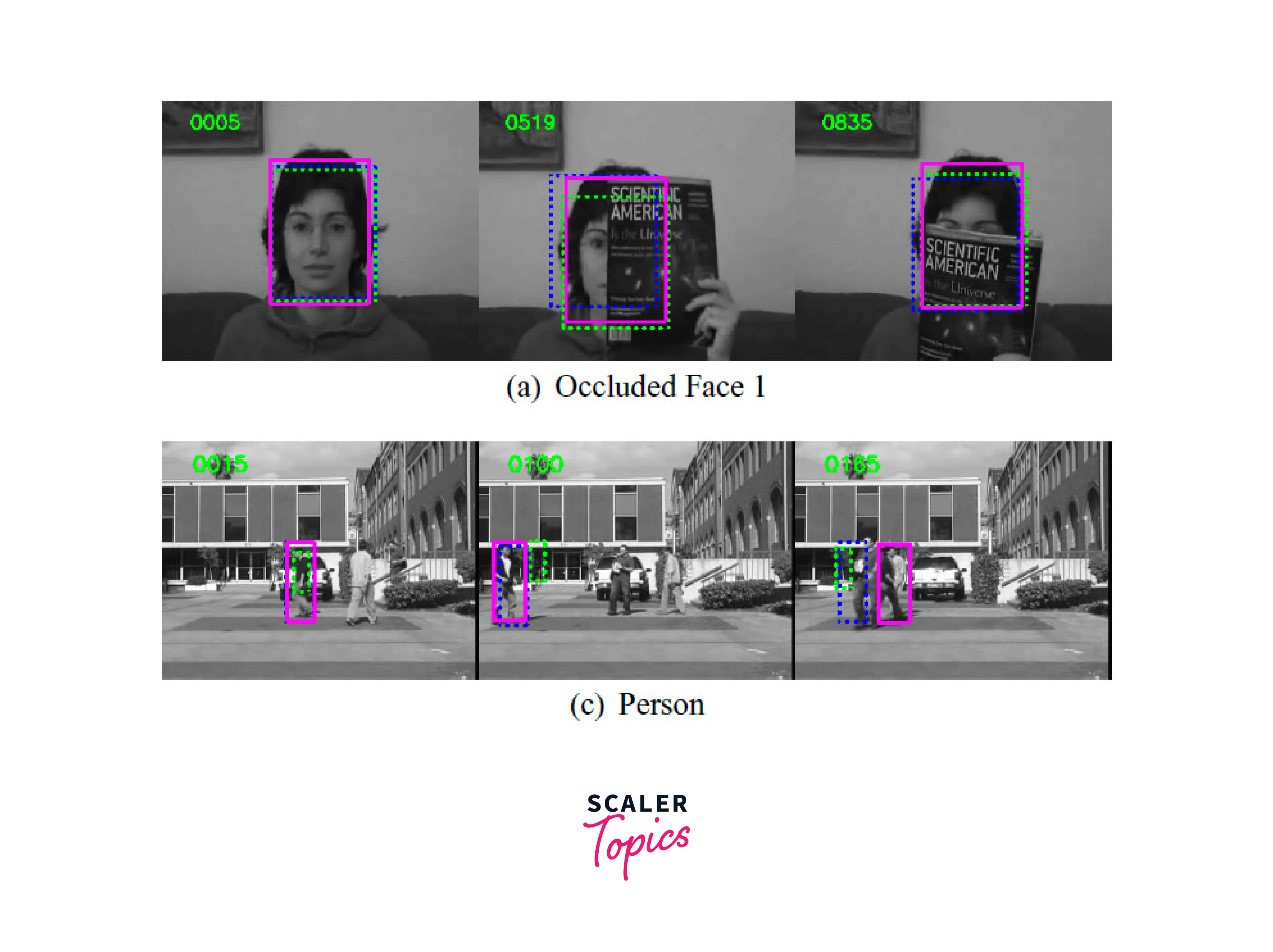

When an object is partially or fully occluded, it can be difficult to track it accurately. Occlusion can occur when an object is blocked by another object in the scene or when the object moves behind a foreground object.

- Occlusion occurs in object tracking when the object being tracked is partially or completely obscured by another object or by the background. This can cause tracking algorithms to lose the object and produce tracking errors.

- Occlusion is a common problem, especially in crowded scenes where multiple objects move in close proximity to each other. It also occurs when the tracked object moves behind other objects, such as a pedestrian walking behind a parked car.

- To address occlusion, various techniques can be used, such as incorporating motion models to predict the movement of the occluded object, using multiple cameras or sensors to obtain a more complete view of the scene, or using machine learning techniques to recognize occlusion and adapt the tracking algorithm accordingly.

- Despite these techniques, occlusion remains a challenging problem, and there is ongoing research into tracking algorithms that handle it more robustly.

Solutions:

- One solution to occlusion is to use multiple object detectors to track different parts of the object when it is occluded. For example, a face tracker may use multiple detectors to track the face, eyes, nose, and mouth separately. When a part of the face is occluded, the tracker can use the other detectors to estimate the location of the occluded part.

- Another approach is to use object-level occlusion reasoning to estimate the likelihood that an object is occluded and adjust the tracking accordingly. This involves modeling the occlusion patterns in the scene and using them to predict the likelihood of occlusion at each frame.
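
The motion-model idea mentioned above can be sketched with a constant-velocity Kalman filter that keeps predicting while no detection is available. This is a minimal NumPy illustration under assumed noise settings. The class name, `process_var`, and `meas_var` are hypothetical choices, not values from any particular tracker. OpenCV also provides a `cv2.KalmanFilter` class for the same job.

```python
import numpy as np


class ConstantVelocityKalman:
    """Kalman filter over state [x, y, vx, vy] with (x, y) measurements."""

    def __init__(self, x, y, process_var=1e-2, meas_var=1.0):
        self.state = np.array([x, y, 0.0, 0.0], dtype=float)
        self.P = np.eye(4) * 10.0                 # state uncertainty
        self.F = np.array([[1, 0, 1, 0],          # x += vx per frame
                           [0, 1, 0, 1],          # y += vy per frame
                           [0, 0, 1, 0],
                           [0, 0, 0, 1]], dtype=float)
        self.H = np.array([[1, 0, 0, 0],          # only position is observed
                           [0, 1, 0, 0]], dtype=float)
        self.Q = np.eye(4) * process_var
        self.R = np.eye(2) * meas_var

    def predict(self):
        self.state = self.F @ self.state
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.state[:2]

    def correct(self, measurement):
        z = np.asarray(measurement, dtype=float)
        innovation = z - self.H @ self.state
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)
        self.state = self.state + K @ innovation
        self.P = (np.eye(4) - K @ self.H) @ self.P


def track_with_occlusion(measurements):
    """`measurements` holds (x, y) tuples, or None while the object is hidden."""
    first = measurements[0]
    kf = ConstantVelocityKalman(*first)
    estimates = [tuple(first)]
    for z in measurements[1:]:
        predicted = kf.predict()
        if z is not None:
            kf.correct(z)                       # visible: blend prediction and detection
            estimates.append(tuple(kf.state[:2]))
        else:
            estimates.append(tuple(predicted))  # occluded: coast on the motion model
    return estimates
```

During the occluded frames the estimate simply continues along the last known velocity, which is usually good enough to re-acquire the object if the occlusion is short.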

Illumination Changes in Object Tracking in OpenCV

Changes in lighting conditions can make it difficult to track an object accurately. For example, shadows or reflections can cause the appearance of an object to change.

- Illumination changes occur when lighting conditions change, for example through shadows, reflections, or changes in natural light. These changes alter the appearance of the object being tracked, which can make it difficult for the tracking algorithm to detect and track the object.

- To address illumination changes, various techniques can be used, such as adaptive thresholding, which compensates for uneven lighting by computing thresholds locally, using color histograms to track the object based on its color, or using image enhancement techniques such as gamma correction or histogram equalization to improve the quality of the image.

- Despite these techniques, illumination changes remain a challenging problem, and there is ongoing research into tracking algorithms that handle them more robustly.

Solutions:

- One solution to illumination changes is to use color constancy algorithms to normalize the color of the object across different lighting conditions. Color constancy algorithms estimate the color of the illumination and use it to adjust the color of the object.

- Another approach is to use multiple image representations that are robust to changes in lighting conditions. For example, a tracking algorithm may use both color and texture features to track the object. If the color features are affected by illumination changes, the texture features can still provide a reliable tracking signal.
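
As one concrete example of color constancy, the sketch below applies the gray-world assumption, one of the simplest heuristics: it assumes the average color of a scene is gray and rescales each channel accordingly. The function name `gray_world` is illustrative, and real trackers may use more sophisticated illuminant estimation.

```python
import numpy as np


def gray_world(image):
    """Scale each channel so that all channel means match the global mean.

    `image` is an H x W x C uint8 array (for example, a BGR frame).
    """
    img = image.astype(np.float64)
    channel_means = img.reshape(-1, img.shape[-1]).mean(axis=0)
    gray = channel_means.mean()
    # Guard against division by zero for an all-black channel.
    gains = gray / np.maximum(channel_means, 1e-6)
    balanced = np.clip(img * gains, 0, 255)
    return np.rint(balanced).astype(np.uint8)
```

Applying the same correction to every frame before computing color features reduces the effect of a global color cast, although it does not help with local shadows or highlights.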

Scale Changes in Object Tracking in OpenCV

When an object changes size, it can be difficult to track it accurately. Scale changes can occur when an object moves closer or further away from the camera.

- Scale changes occur when the size of the object being tracked changes, either because the object moves closer to or further away from the camera, or because the camera's zoom level changes. These changes alter the appearance of the object, which can make it difficult for the tracking algorithm to detect and track the object.

- To address scale changes, various techniques can be used, such as using scale-invariant features to track the object, incorporating scale estimation into the tracking algorithm, or using multiple cameras or sensors with different focal lengths to obtain a more complete view of the scene.

- Despite these techniques, scale changes remain a challenging problem, and there is ongoing research into tracking algorithms that handle them more robustly.

Solutions:

- One solution to scale changes is to use scale-invariant feature descriptors that are robust to changes in scale. These feature descriptors can be used to track the object across different scales without requiring explicit scale estimation.

- Another approach is to use a multi-scale tracking framework that can track an object across different scales. This involves estimating the scale of the object at each frame and using it to adjust the tracking accordingly.

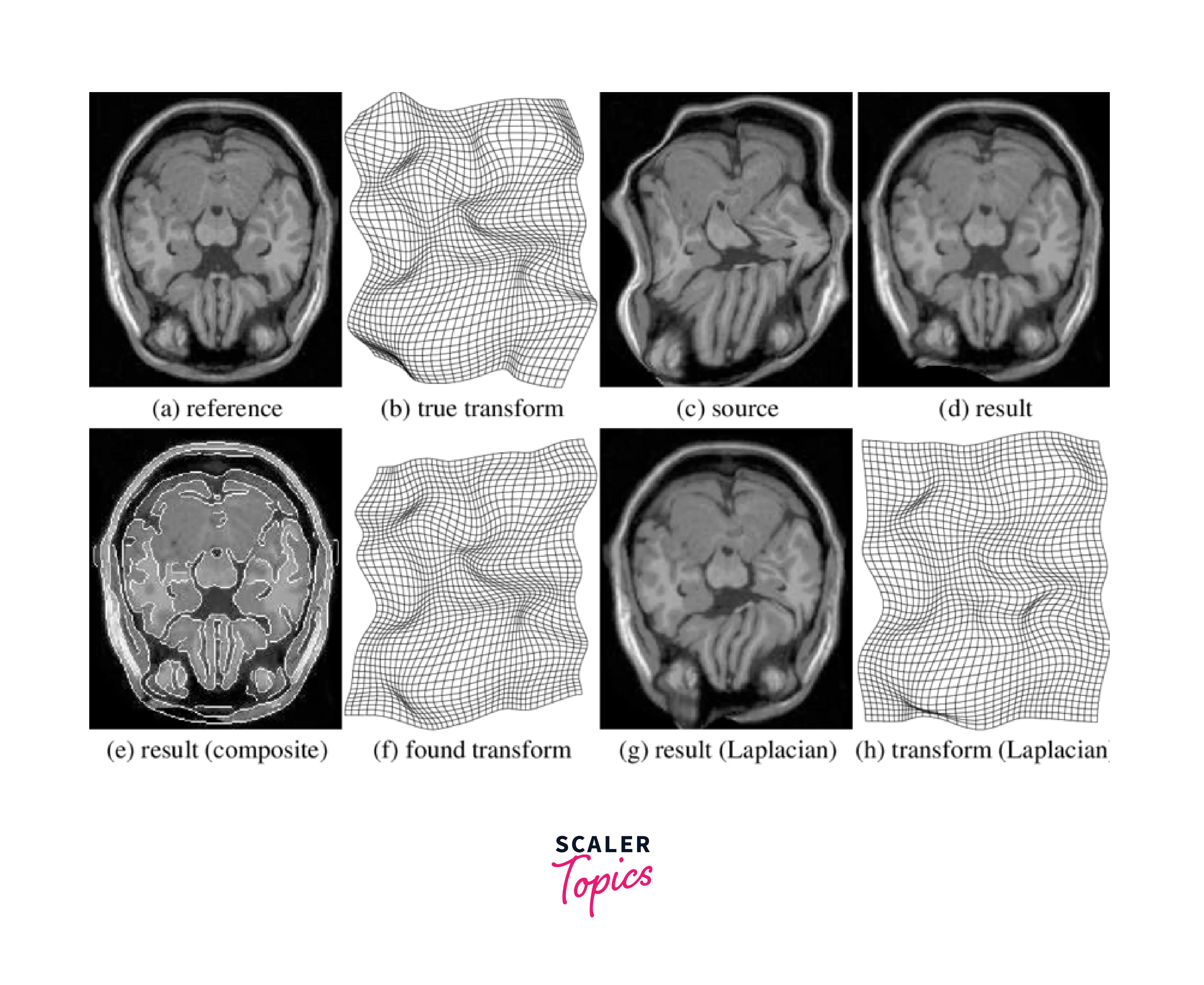

Deformation in Object Tracking in OpenCV

When an object changes shape, it can be difficult to track it accurately. This can occur when an object moves in a non-rigid way or when the object itself is deformed.

- Deformation occurs when the shape of the object being tracked changes, either because the object itself deforms or because the camera's perspective changes. These changes alter the appearance of the object, which can make it difficult for the tracking algorithm to detect and track the object.

- To address deformation, various techniques can be used, such as using deformable models to represent the object's shape, incorporating shape estimation into the tracking algorithm, or using multiple cameras or sensors with different perspectives to obtain a more complete view of the scene.

Solutions:

- One solution to deformation is to use non-rigid registration techniques to align the object across different frames. Non-rigid registration techniques can estimate the deformation of the object and use it to adjust the tracking accordingly.

- Another approach is to use shape models to estimate the deformation of the object and adjust the tracking accordingly. This involves building a statistical model of the object's shape and using it to estimate the deformation at each frame.

Motion Blur in Object Tracking in OpenCV

When an object is moving quickly, it can appear blurred in the video frames. This can make it difficult to track the object accurately.

- Motion blur occurs when the object or the camera moves during the exposure of a frame, causing objects to appear blurry in the image. This can make it difficult for the tracking algorithm to accurately detect and track the object.

- To address motion blur, various techniques can be used, such as using motion blur estimation to account for the blur in the image, incorporating motion models to predict the movement of the object, or using multiple cameras or sensors with different exposure times to obtain a more complete view of the scene.

Solutions:

- One solution to motion blur is to use motion deblurring algorithms to recover the sharp image of the object. Motion deblurring algorithms estimate the motion blur kernel of the image and use it to recover the sharp image.

- Another approach is to use tracking-by-detection techniques that rely on the appearance of the object rather than its motion. These techniques can be robust to motion blur because they do not rely on the precise motion of the object.

Object Appearance Changes in Object Tracking in OpenCV

Changes in an object's appearance, such as changes in color or texture, can make it difficult to track the object accurately.

- Object appearance changes occur when the appearance of the object being tracked changes due to changes in its pose, lighting, or occlusion. These changes can make it difficult for the tracking algorithm to accurately detect and track the object.

- To address appearance changes, various techniques can be used, such as using appearance models to represent the object's appearance, incorporating appearance estimation into the tracking algorithm, or using machine learning techniques to recognize appearance changes and adapt the tracking algorithm accordingly.

Solutions:

- One solution to appearance changes is to use adaptive appearance models that can update the object's appearance over time. Adaptive appearance models can learn the appearance of the object from the tracked frames and use it to update the object model.

- Another approach is to use multi-modal feature representations that are robust to changes in appearance. For example, a tracking algorithm may use both color and texture features to track the object. If the color of the object changes, the texture features can still provide a reliable tracking signal.
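
A common way to build an adaptive appearance model is to blend each new observation into a running model with a small learning rate. The sketch below does this for a histogram. The function name `update_appearance` and the default `learning_rate` of 0.05 are assumptions made for illustration.

```python
import numpy as np


def update_appearance(model_hist, new_hist, learning_rate=0.05):
    """Blend the newest histogram into the running appearance model."""
    model = np.asarray(model_hist, dtype=np.float64)
    new = np.asarray(new_hist, dtype=np.float64)
    blended = (1.0 - learning_rate) * model + learning_rate * new
    total = blended.sum()
    # Keep the model normalized so histograms stay comparable over time.
    return blended / total if total > 0 else blended
```

A small learning rate adapts slowly but resists drift. A large one follows appearance changes quickly but can absorb background pixels if the tracked box is slightly off.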

Tracking Initialization in Object Tracking in OpenCV

Object tracking algorithms often require initialization, which involves selecting the object to be tracked in the first frame of the video. If the object is not selected accurately, the tracking algorithm may fail.

- Tracking initialization is the process of identifying the object to be tracked in the first frame of the video or sequence of frames. This is typically done using user input, such as manually selecting the object with a bounding box.

- The accuracy and robustness of the tracking algorithm can depend heavily on the quality of the tracking initialization.

- To improve tracking performance, various techniques can be used, such as incorporating object detection algorithms to automatically identify the object to be tracked, using machine learning techniques to recognize the object, or incorporating feedback from previous tracking frames to refine the tracking initialization.

Solutions:

- One solution to tracking initialization is to use interactive methods that allow the user to select the object to be tracked. Interactive methods can provide accurate initialization because they rely on human perception.

- Another approach is to use online learning techniques to update the object model as the tracking progresses, which improves its accuracy over time.

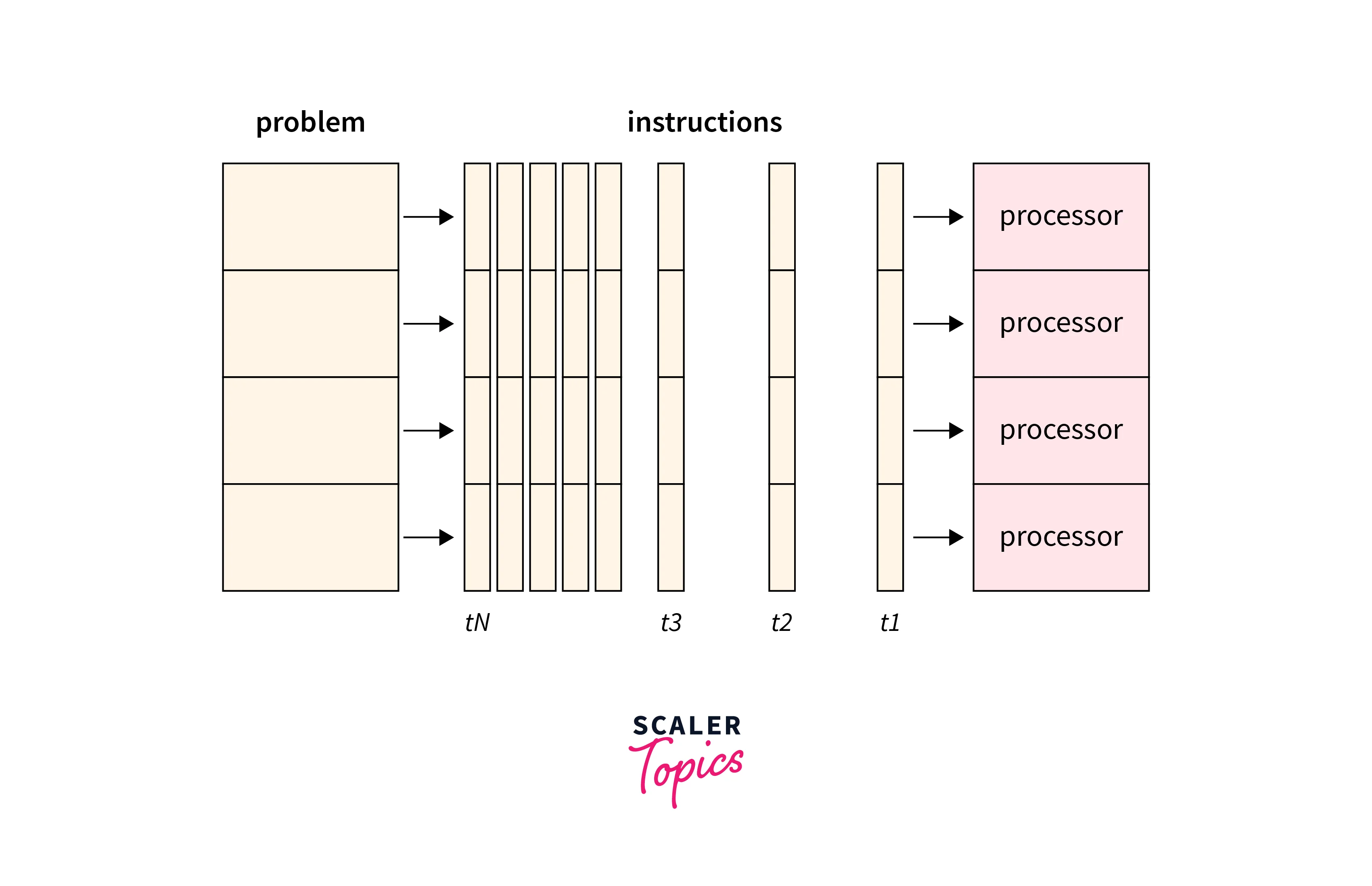

Computational Complexity in Object Tracking in OpenCV

Object tracking algorithms can be computationally intensive, which can make real-time tracking challenging.

- Computational complexity refers to the amount of computational resources required to perform object tracking, such as processing power and memory. As object tracking algorithms become more complex and sophisticated, they may require more computational resources to operate efficiently.

- To address computational complexity, various techniques can be used, such as optimizing the tracking algorithm for efficient resource usage, using hardware accelerators such as GPUs to offload some of the computational load, or using distributed computing techniques to distribute the workload across multiple processors or nodes.

Solutions:

- One solution to computational complexity is to use efficient algorithms and data structures, which reduce the computational load of the tracking algorithm and make real-time tracking feasible.

- Another approach is to use parallel computing techniques to distribute the computational load across multiple processors or GPUs. Parallel computing techniques can significantly reduce the processing time and make it possible to perform tracking on high-resolution video streams in real-time.

Camera Motion in Object Tracking in OpenCV

Camera motion can cause the entire scene to move, which can make it difficult to track objects accurately.

- Camera motion refers to the movement of the camera itself, which can cause the position and appearance of the object being tracked to change in the image. Camera motion can include translational motion, rotational motion, and zooming.

- To address camera motion, various techniques can be used, such as estimating the camera's motion between frames and correcting for it, incorporating motion models into the tracking algorithm to predict the motion of the object and compensate for the camera's motion, or using multiple cameras or sensors with different viewpoints to obtain a more complete view of the scene.

Solutions:

- One solution to camera motion is to use motion compensation techniques, which estimate the camera motion and subtract it so that the remaining motion belongs to the object.

- Another approach is to use a multi-camera setup to track the object from different viewpoints. This involves using multiple cameras to capture the object from different viewpoints and fusing the tracking results to obtain a more robust estimate of the object's position.

Tracking in Crowded Scenes

Tracking in crowded scenes is a challenging problem because of the large number of objects that need to be tracked simultaneously.

- Tracking in crowded scenes can be challenging due to the presence of multiple objects and occlusions, which can cause the tracking algorithm to lose the object being tracked.

- To address tracking in crowded scenes, various techniques can be used, such as incorporating object detection algorithms to identify and track multiple objects simultaneously, using machine learning techniques to distinguish between different objects and track them individually, or using multiple cameras or sensors with different viewpoints to obtain a more complete view of the scene.

Solutions:

- One solution to tracking in crowded scenes is to use multi-object tracking algorithms that can track multiple objects simultaneously. Multi-object tracking algorithms can track the objects and maintain their identities over time.

- Another approach is to use object-level occlusion reasoning to estimate the likelihood of occlusion and adjust the tracking accordingly. This involves modeling the occlusion patterns in the scene and using them to predict the likelihood of occlusion at each frame. Additionally, incorporating contextual information, such as the scene layout, can also aid in tracking objects in crowded scenes.
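
Maintaining identities in multi-object tracking comes down to deciding, in every frame, which detection belongs to which existing track. The sketch below shows a simple greedy baseline that matches by intersection over union (IoU). The function names and the `min_iou` threshold are illustrative assumptions, and many practical trackers replace the greedy step with an optimal assignment such as the Hungarian algorithm.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, min_iou=0.3):
    """Greedily match track IDs to detection indices by descending IoU.

    `tracks` maps track ID -> last known box; `detections` is a list of boxes.
    Returns (matches, unmatched_detection_indices).
    """
    pairs = sorted(
        ((iou(box, det), tid, j)
         for tid, box in tracks.items()
         for j, det in enumerate(detections)),
        key=lambda pair: pair[0],
        reverse=True,
    )
    matches, used = {}, set()
    for score, tid, j in pairs:
        if score < min_iou:
            break  # remaining pairs overlap too little to be the same object
        if tid in matches or j in used:
            continue
        matches[tid] = j
        used.add(j)
    unmatched = [j for j in range(len(detections)) if j not in used]
    return matches, unmatched
```

Unmatched detections would typically start new tracks, and tracks that stay unmatched for several frames would be terminated.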

Addressing these challenges requires advanced algorithms and techniques, and researchers are continually working on improving object tracking performance.

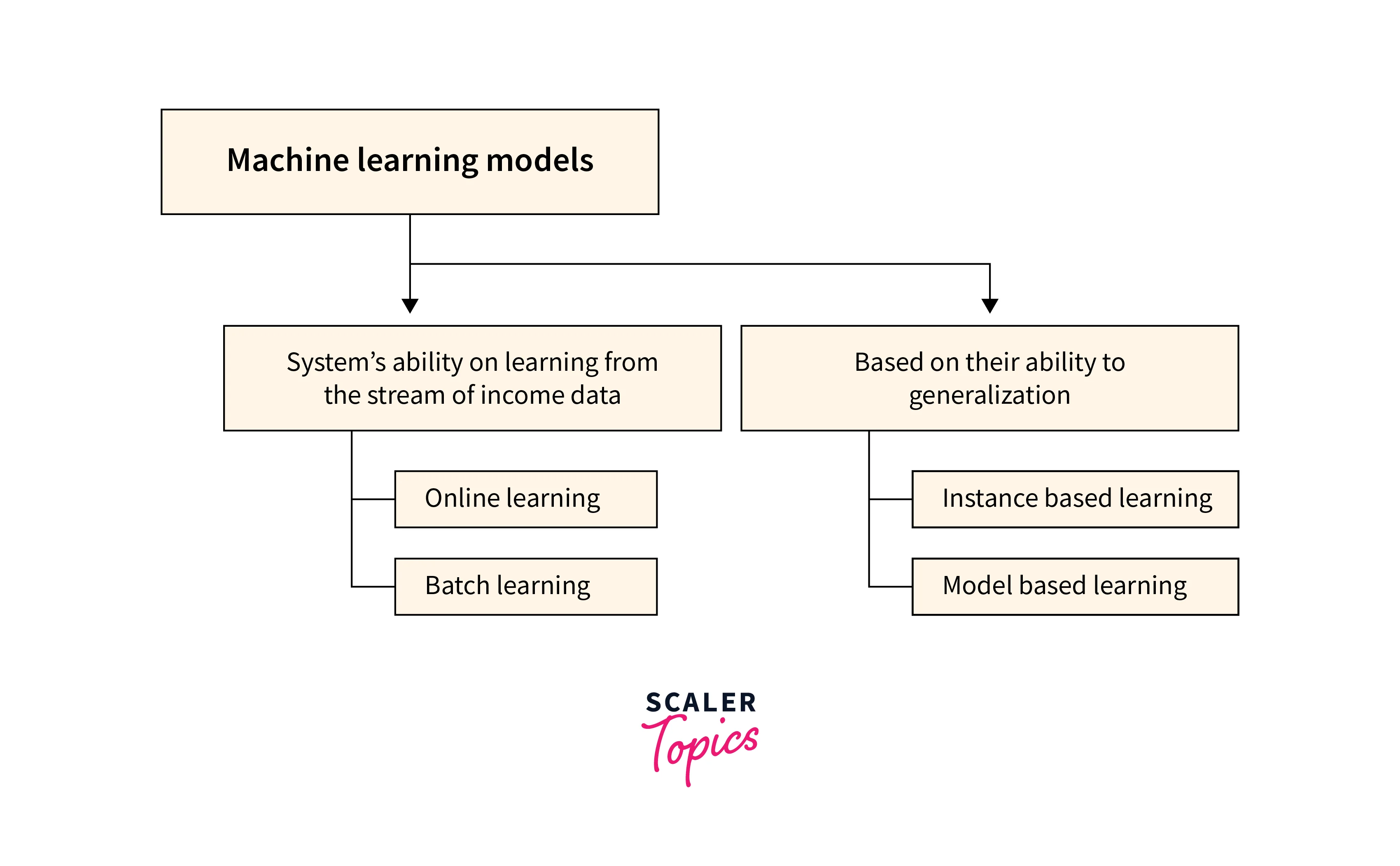

Deep Learning-based approaches to Object Tracking

Deep learning-based approaches to object tracking have gained a lot of attention in recent years due to their high accuracy and robustness in various scenarios. These approaches use deep neural networks to learn the appearance and motion patterns of the object of interest, and then use these patterns to track the object in subsequent frames.

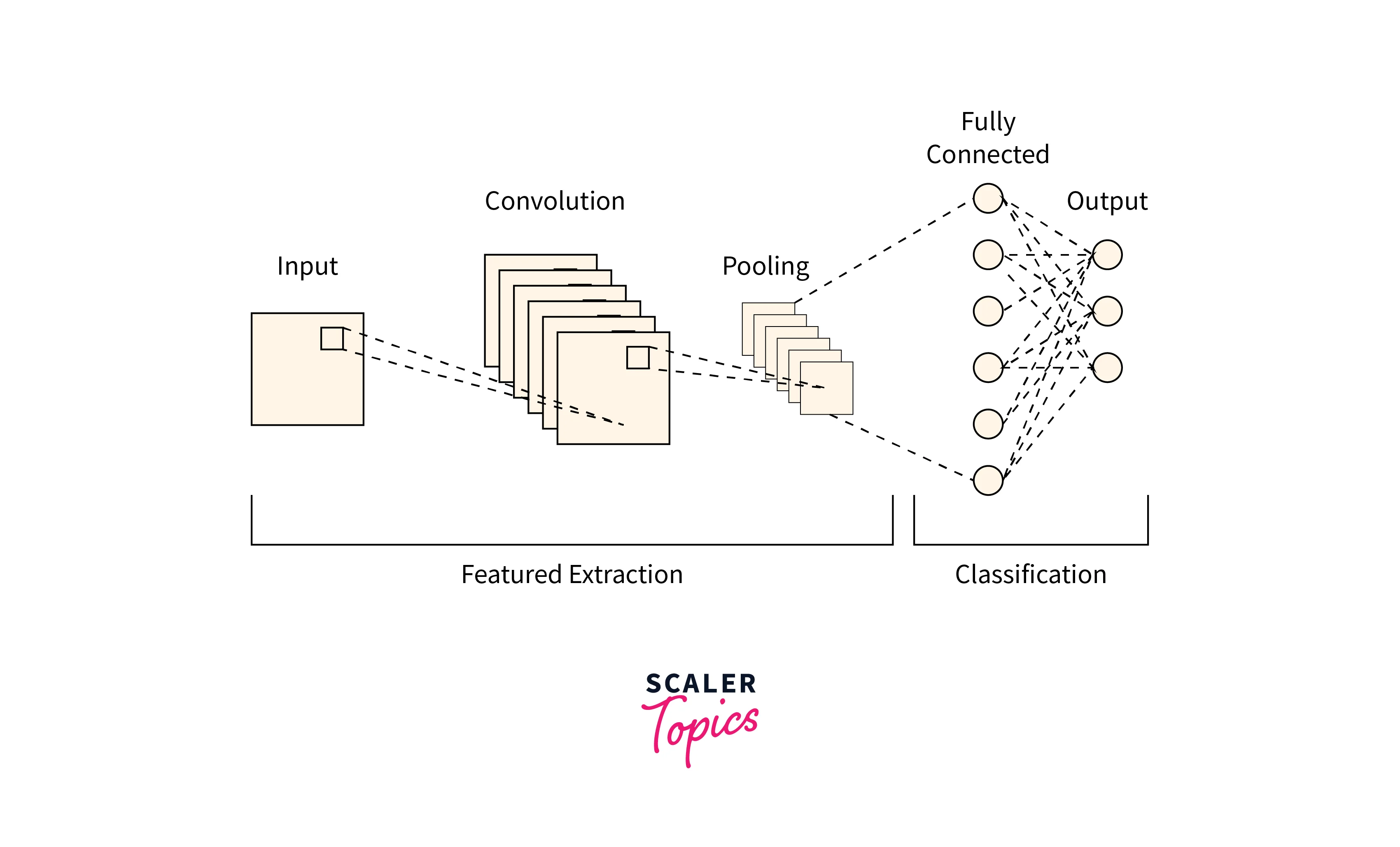

Convolutional Neural Networks (CNNs) for Feature Extraction

CNNs are a type of Deep Learning network that is commonly used for image and video processing. CNNs can be used to automatically extract relevant features from the input frames, which can be used to track the object. The features learned by CNNs tend to be more robust to changes in lighting, appearance, and background clutter than hand-crafted features, which makes them suitable for tracking in challenging environments.

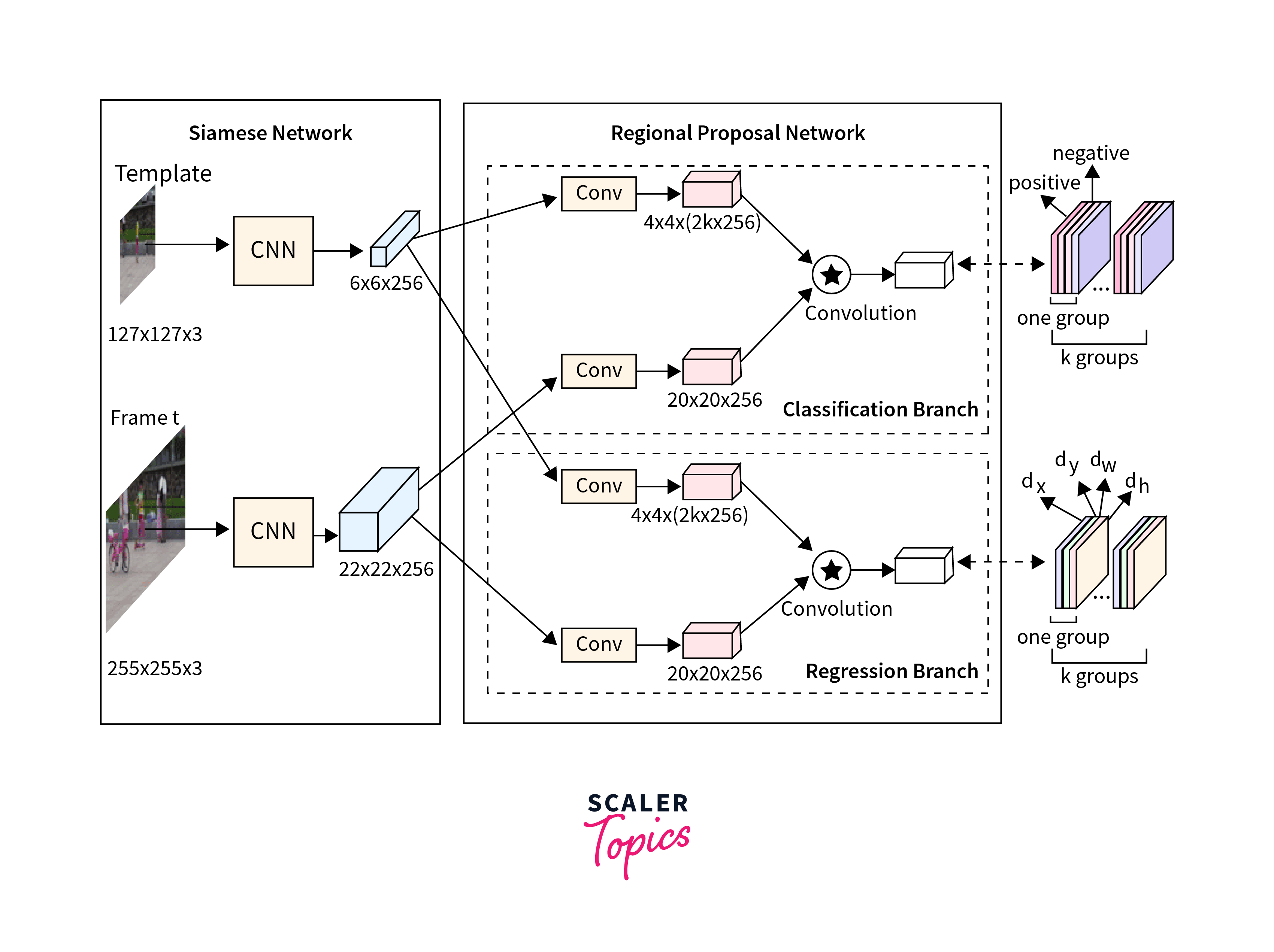

Siamese Networks for Learning a Similarity Metric Between Object and Background

Siamese Networks are another type of Deep Learning network that can be used for object tracking. They learn a similarity metric that scores how well candidate regions in the current frame match a template of the object, which separates the object from the surrounding background and allows it to be tracked over time. Siamese Networks are particularly useful when the appearance of the object changes significantly over time.

Recurrent Neural Networks (RNNs) for Modeling Temporal Dependencies

RNNs are a type of Deep Learning network that can model temporal dependencies in the object's motion. RNNs can be used to predict the future trajectory of the object based on its past motion, which can improve the accuracy of object tracking.

Siamese-RPN

More recently, the Siamese-RPN (SiamRPN) tracker was proposed, which combines a Siamese network with a Region Proposal Network (RPN). The Siamese subnetwork extracts features from the object template and the search region, and the RPN uses a classification branch to score candidate boxes and a regression branch to refine them.

Overall, deep learning-based methods have shown promising results in object tracking, but they also require large amounts of training data and computing resources.

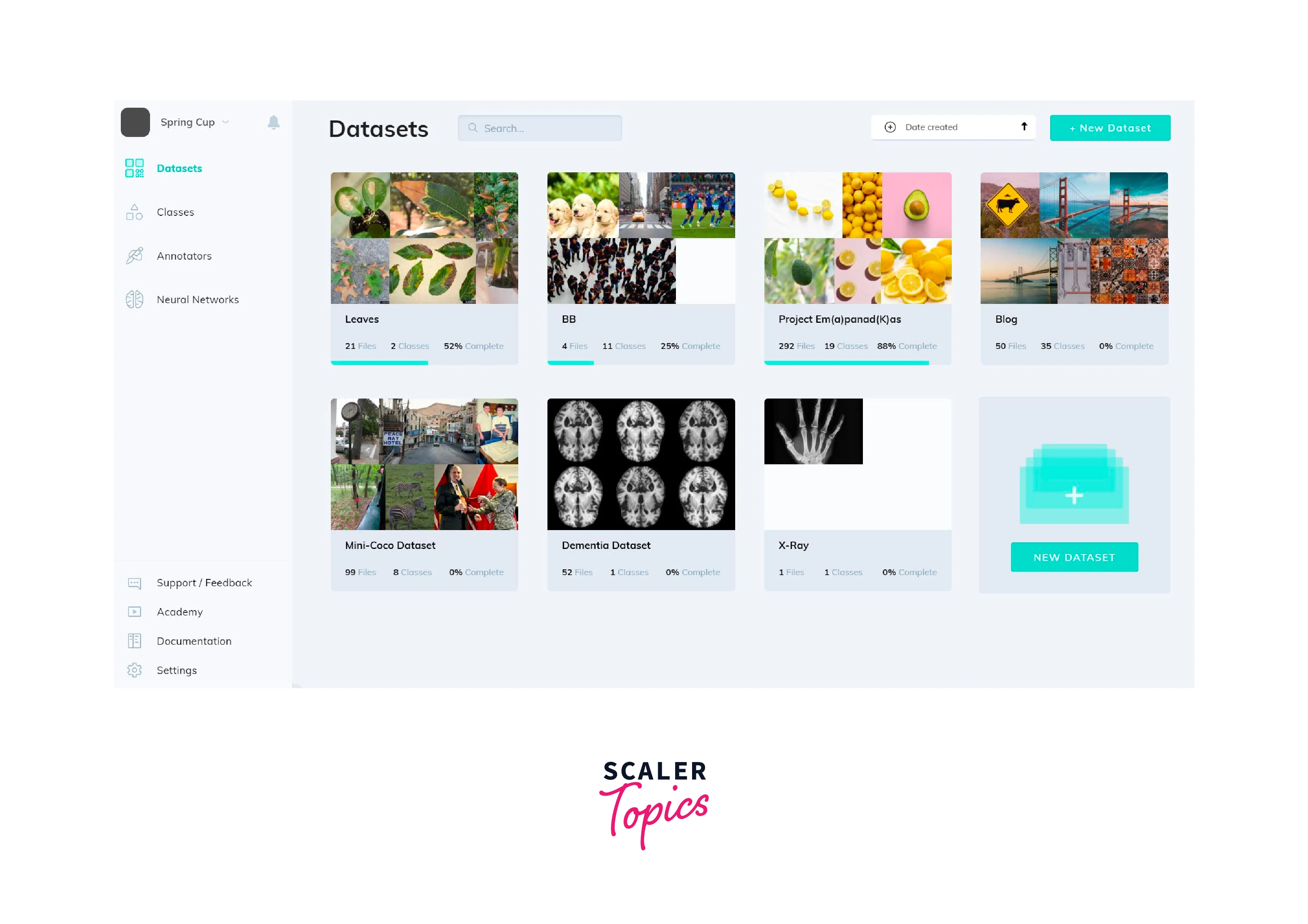

Visual Object Tracking using V7

Before proceeding, make sure to request a 14-day free trial if you haven't already. Now, let's briefly go through the steps involved in Visual Object Tracking using V7.

Upload Data

The first step is to upload the data.

Data Annotation

After uploading your video, the next step is to annotate it. To speed up the process, you can take advantage of V7's auto-annotation tool.

To start annotating, choose the frame where you want to begin tracking the object, which in this example is a runner with a white shirt. You can use the timeline bar located at the bottom of the video to navigate to the desired frame.

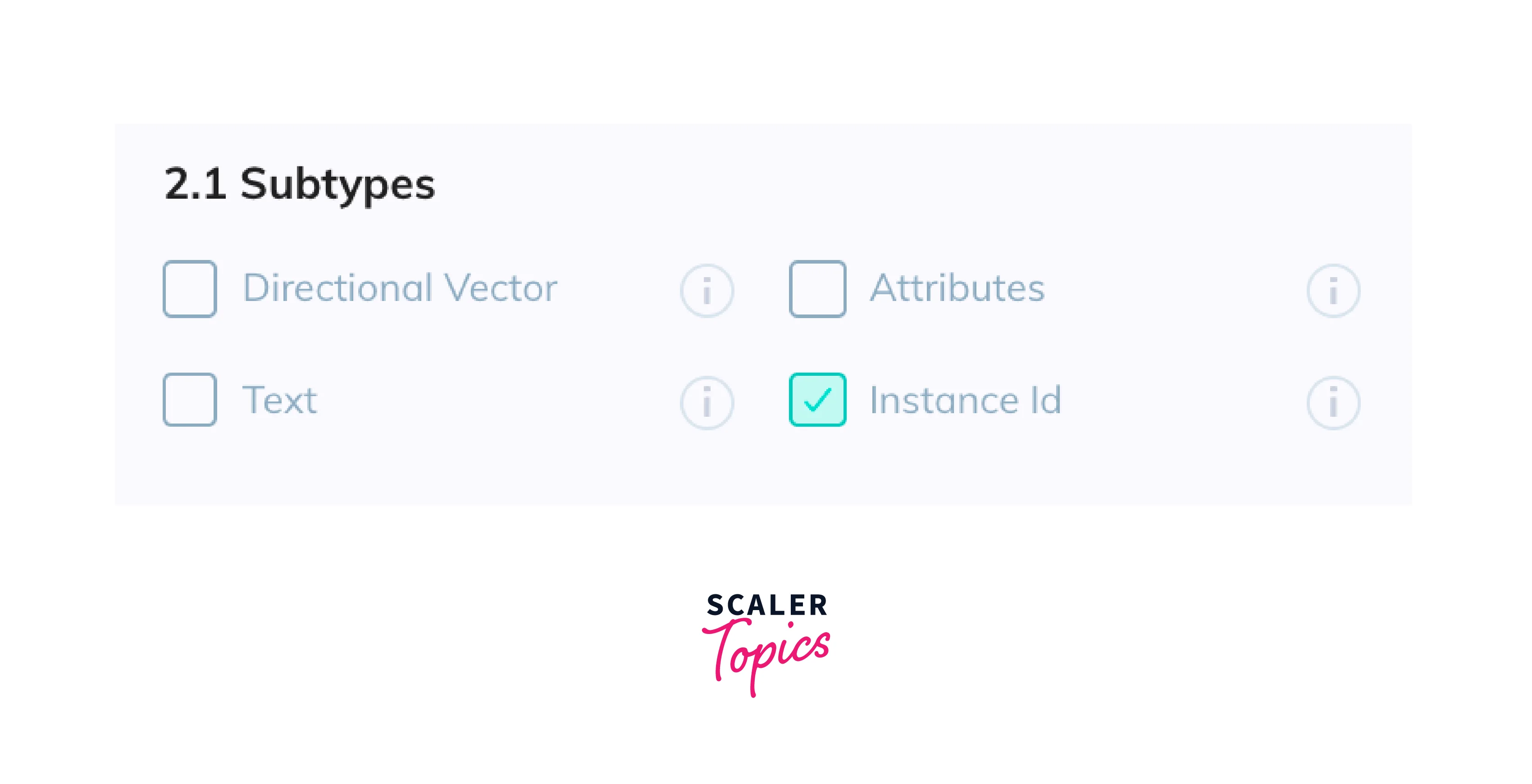

After selecting the desired frame, use the auto annotation tool to draw a polygon around the object of interest. It's crucial to select the instance ID as the subtype during annotation. To do so, you must first navigate to the Classes tab in your dataset, create or edit a class, and add Instance ID as a Subtype.

Instance ID

Instance ID is a unique identification number assigned to a selected object in the video. It helps to keep track of the object throughout the video, even if it changes its orientation or position. The instance ID is useful in classifying and detecting the same object in multiple frames of the video.

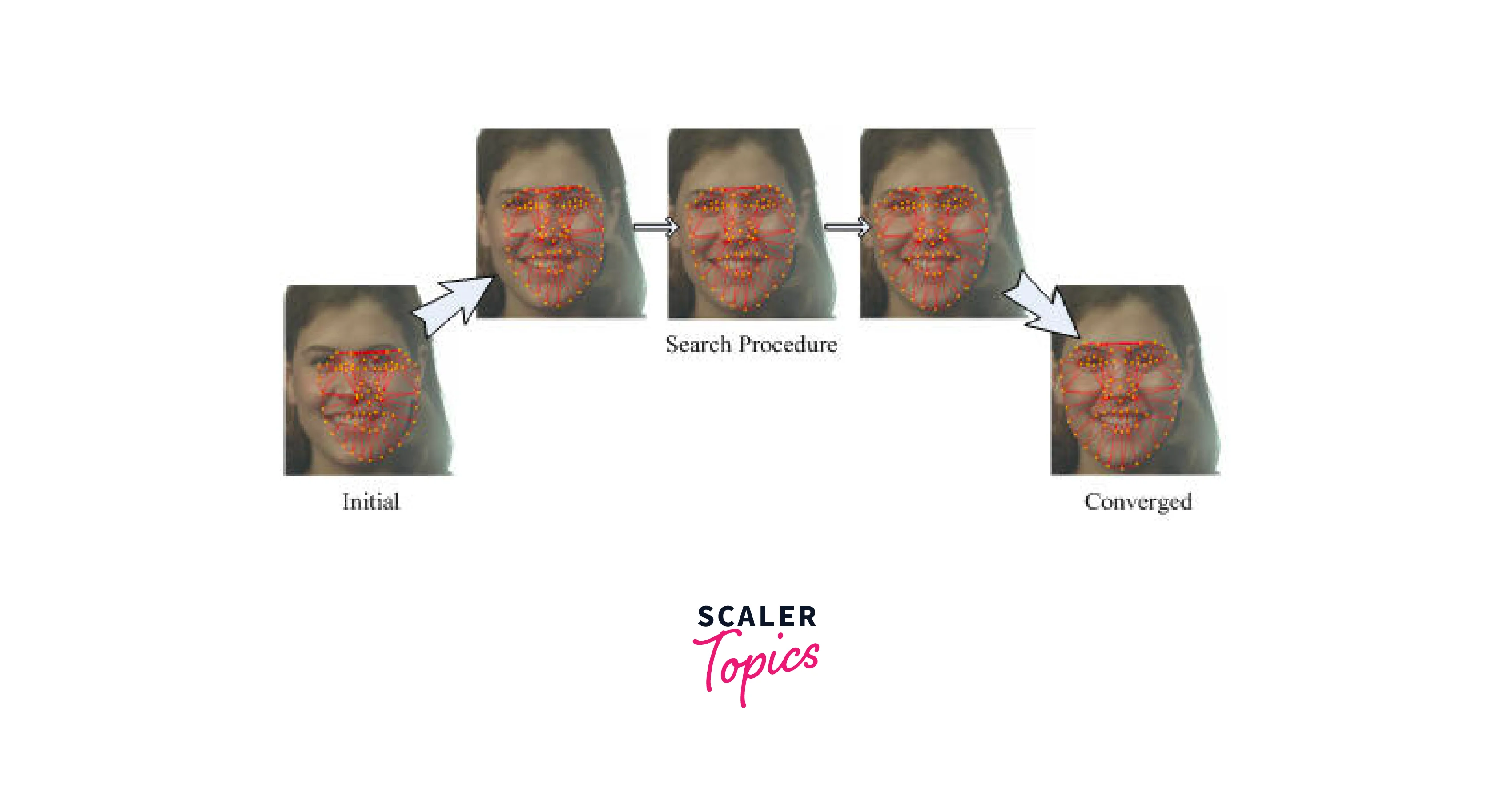

Adding and Deleting Anchor Points

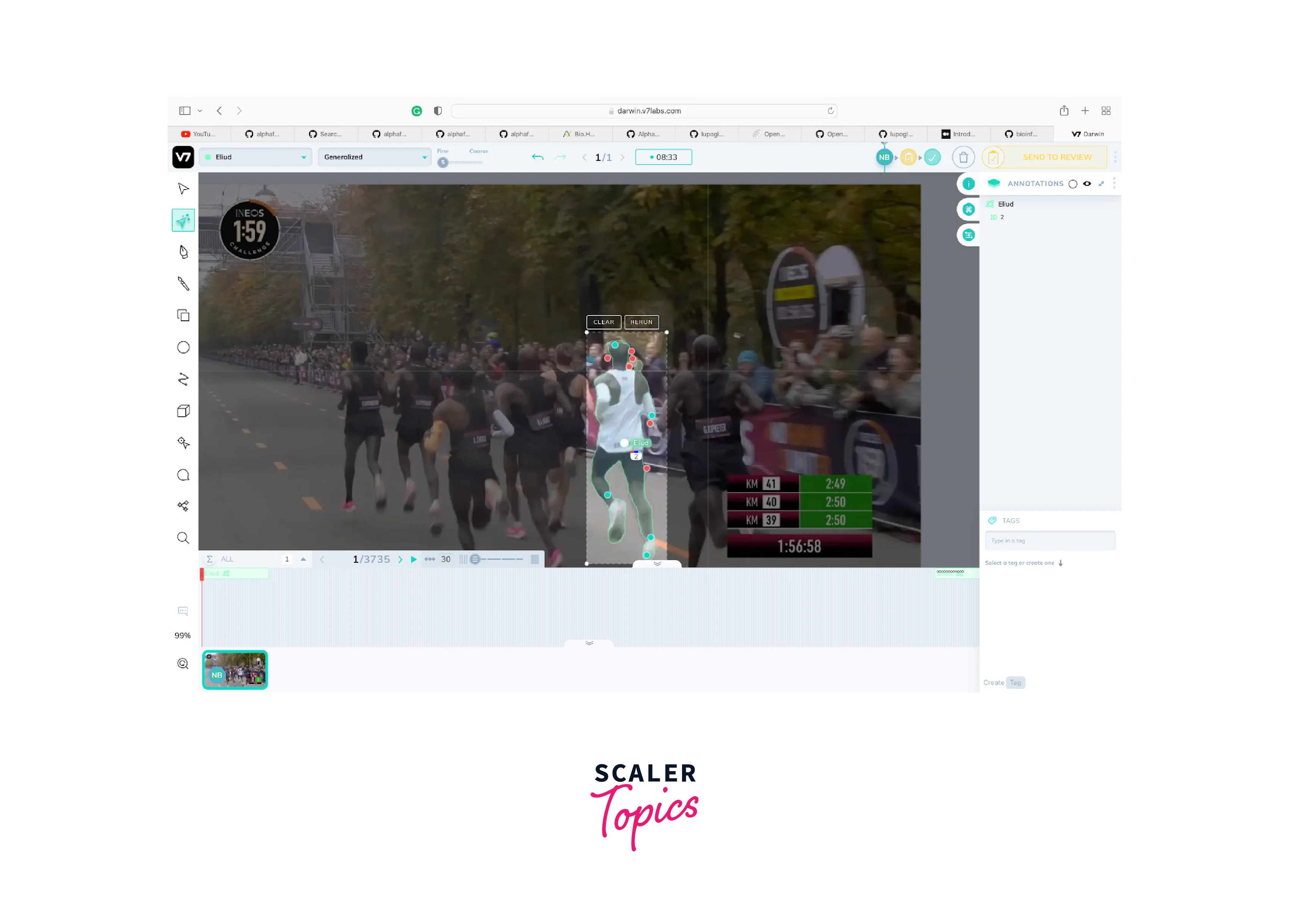

The auto annotation tool generates a segmentation around the selected object, such as the runner in this case. However, you may notice that the segmentation mask is incomplete in certain areas.

To address this, simply hover your mouse over the areas where the segmentation mask is missing and extend it to the desired area within the polygon. You can then click on the areas where the segmentation mask is incomplete to complete the segmentation.

In the image shown above, the extended segmentation mask of the runner’s body is done manually by clicking on the areas where it was missing. The green dots indicate the added segmentation, while the red dots represent the deleted ones. After completing the annotation, move to the next frame.

To annotate the object in the next frame, click on the rerun button located on top of the polygon mask around the object. This will automatically apply the segmentation mask to the object. You can continue annotating your object in subsequent frames by copying the instances and adjusting the label until you have annotated all instances.

Implementation of Object Tracking in OpenCV

In this tutorial, we will implement object tracking using OpenCV in Python. Object tracking is a technique used to track the movement of an object over time in a video. We will use the MeanShift algorithm to track an object in a video.

Step 1: Import Required Libraries

First, we need to import the libraries required for this task. We will be using OpenCV, NumPy, and Matplotlib.

Step 2: Load the Video

Next, we need to load the video that we want to track an object in. We will use the cv2.VideoCapture() function to load the video.

Step 3: Read the First Frame

Now, we need to read the first frame of the video and select the object that we want to track. We will use the read() method of the cv2.VideoCapture object to read the first frame.

Step 4: Select the Object to Track

After reading the first frame, we need to select the object that we want to track. We can use the mouse to select the object. We will create a function to select the object.

The above function will draw a rectangle around the selected object and create a histogram of the selected object.

Step 5: Initialize the MeanShift Algorithm

After selecting the object to track, we need to initialize the MeanShift algorithm. We will use the histogram that we created in the previous step to initialize the algorithm.

Step 6: Track the Object

Finally, we can track the object using the MeanShift algorithm. We will use a while loop to read each frame of the video and track the object in the frame.

In the above code, we first read each frame of the video and convert it to the HSV color space. We then calculate the back projection of the frame using the histogram that we created in the previous step. We then use the cv2.meanShift() function to track the object in the frame. We then draw a rectangle around the object and display the frame using the cv2.imshow() function. We use the cv2.waitKey() function to wait for a key press and break out of the loop if the 'Esc' key is pressed.

Conclusion

- In conclusion, object tracking is a crucial task in computer vision with numerous applications in fields such as surveillance, autonomous driving, and robotics.

- OpenCV provides a range of powerful tools for object tracking that can be implemented with ease.

- The implementation discussed here covers the basics of object tracking and provides a good foundation to build upon.

- With further research and experimentation, it is possible to improve the accuracy and efficiency of object tracking in real-world scenarios.