Deadlock Prevention in Operating System (OS)

Overview

Deadlock is a critical state in computing where processes are stuck, each waiting for the other to release a resource. This halts system operations, impacting efficiency. Understanding deadlock prevention is crucial for system stability. It empowers administrators and developers to implement strategies for smooth processes, ensuring optimal resource use and system reliability.

Introduction to Deadlock

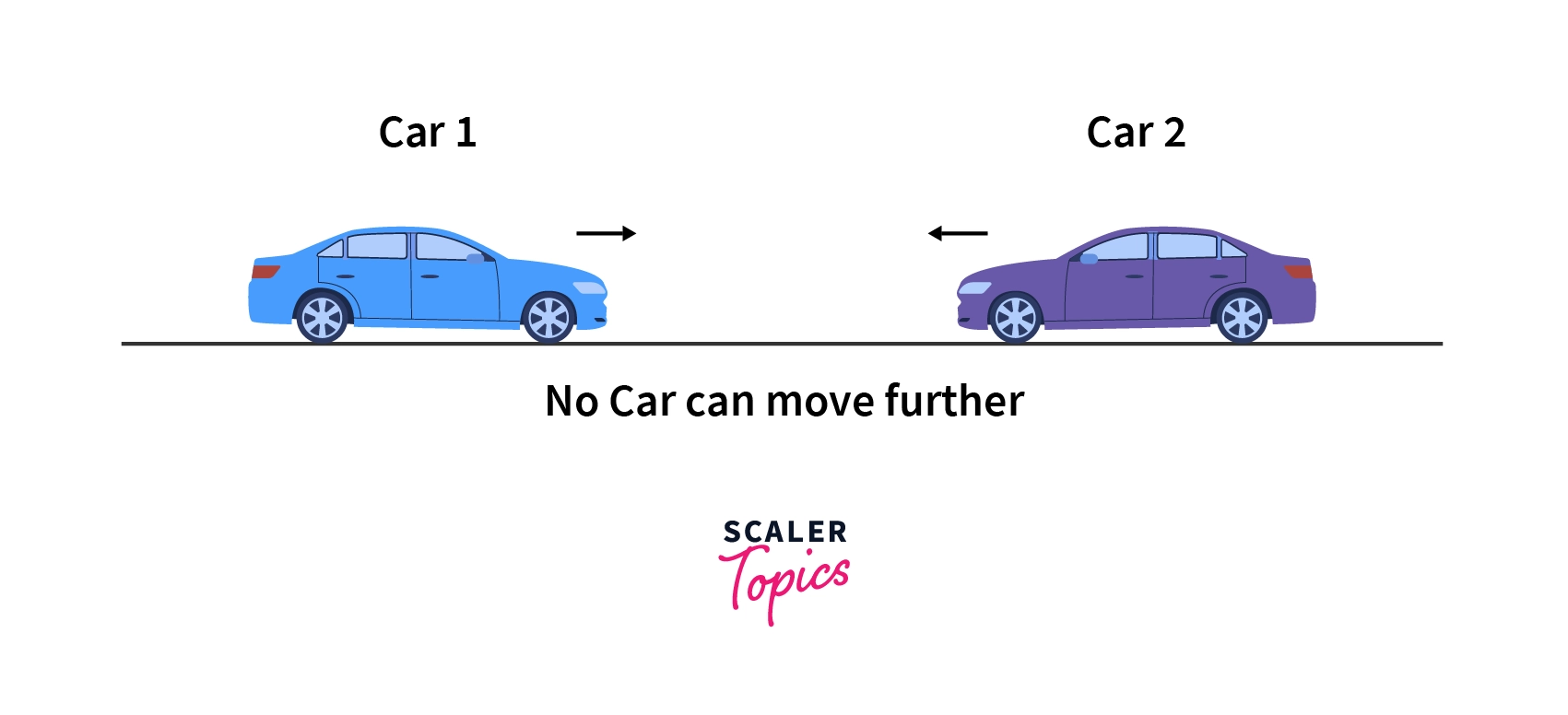

Consider a one-way road with two cars approaching from opposite directions, blocking each other. The road is the resource, and crossing it represents a process. Since it's a one-way road, both cars can't move simultaneously, leading to a deadlock.

A deadlock in an operating system is an indefinite blocking of processes competing for resources. It occurs when two or more processes require resources that can't be shared simultaneously.

There are four necessary conditions for deadlock. Deadlock happens only when all four conditions occur simultaneously for unshareable single instance resources.

Deadlock Characteristics/Conditions

- Mutual exclusion

- Hold and wait

- No preemption

- Circular wait.

There are three ways to handle deadlock:

-

Deadlock prevention: The possibility of deadlock is excluded before making requests, by eliminating one of the necessary conditions for deadlock.

Example: Only allowing traffic from one direction, will exclude the possibility of blocking the road.

-

Deadlock avoidance: The Operating system runs an algorithm on requests to check for a safe state. Any request that may result in a deadlock is not granted.

Example: Checking each car and not allowing any car that can block the road. If there is already traffic on the road, then a car coming from the opposite direction can cause blockage.

-

Deadlock detection & recovery: OS detects deadlock by regularly checking the system state, and recovers to a safe state using recovery techniques. Example: Unblocking the road by backing cars from one side. Deadlock prevention and deadlock avoidance are carried out before deadlock occurs.

In this article, we will learn about deadlock prevention in OS.

Deadlock prevention is a set of methods used to ensure that all requests are safe, by eliminating at least one of the four necessary conditions for deadlock.

Deadlock Prevention in Operating System

A process is a set of instructions. When a process runs, it needs resources like CPU cycles, Files, or Peripheral device access.

Some of the requests for resources can lead to deadlock.

Deadlock prevention is eliminating one of the necessary conditions of deadlock so that only safe requests are made to OS and the possibility of deadlock is excluded before making requests.

As now requests are made carefully, the operating system can grant all requests safely.

Here OS does not need to do any additional tasks as it does in deadlock avoidance by running an algorithm on requests checking for the possibility of deadlock.

Deadlock Prevention Techniques

Deadlock prevention techniques refer to violating any one of the four necessary conditions. We will see one by one how we can violate each of them to make safe requests and which is the best approach to prevent deadlock.

Mutual Exclusion

Some resources are inherently unshareable, for example, Printers. For unshareable resources, processes require exclusive control of the resources.

A mutual exclusion means that unshareable resources cannot be accessed simultaneously by processes.

Shared resources do not cause deadlock but some resources can't be shared among processes, leading to a deadlock.

For Example: read operation on a file can be done simultaneously by multiple processes, but write operation cannot. Write operation requires sequential access, so, some processes have to wait while another process is doing a write operation.

It is not possible to eliminate mutual exclusion, as some resources are inherently non-shareable,

For Example Tape drive, as only one process can access data from a Tape drive at a time.

For other resources like printers, we can use a technique called Spooling.

Spooling: It stands for Simultaneous Peripheral Operations online.

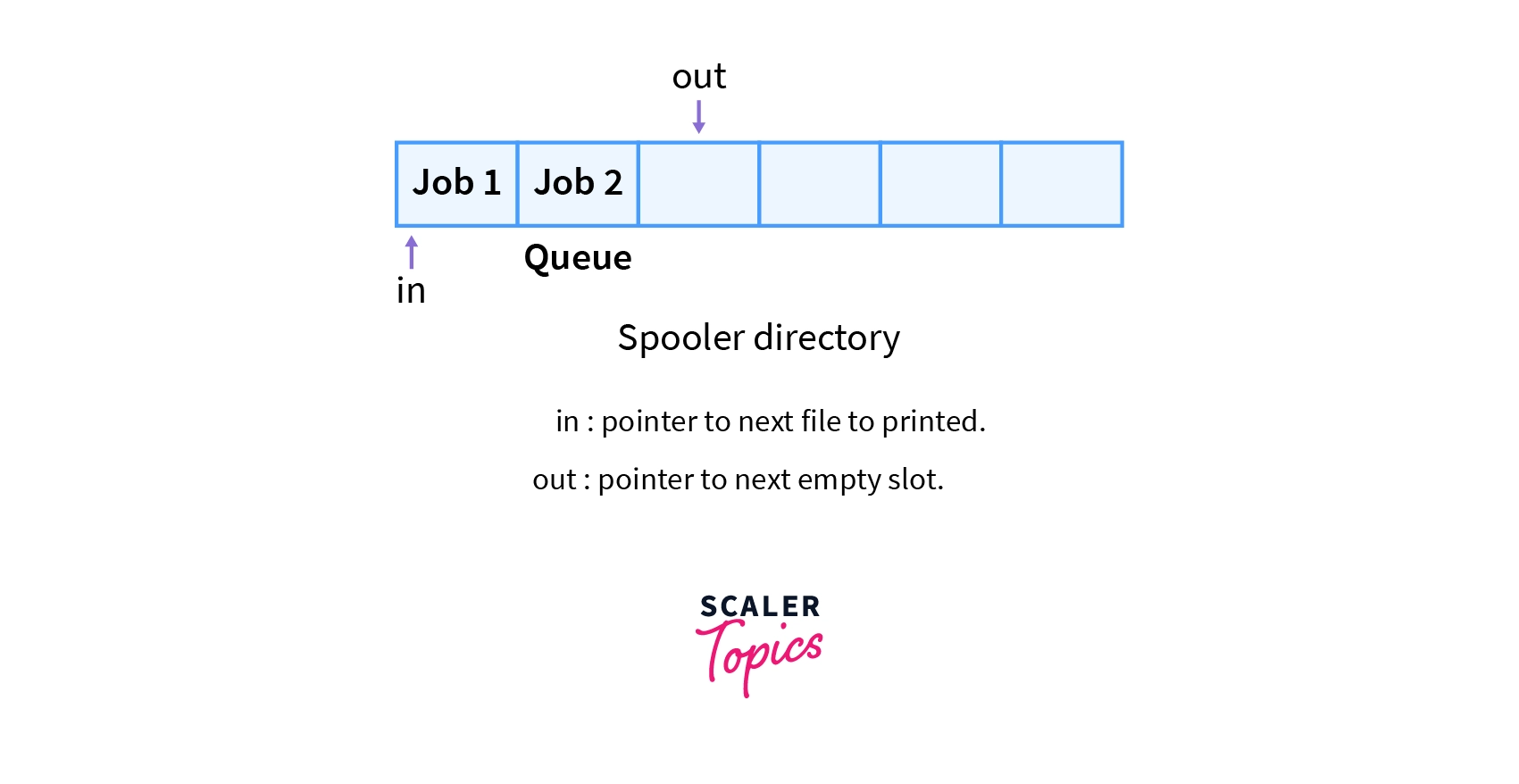

A Printer has associated memory which can be used as a spooler directory (memory that is used to store files that are to be printed next).

In spooling, when multiple processes request the printer, their jobs ( instructions of the processes that require printer access) are added to the queue in the spooler directory.

The printer is allocated to jobs on a first come first serve (FCFS) basis. In this way, the process does not have to wait for the printer and it continues its work after adding its job to the queue.

We can understand the workings of the Spooler directory better with the diagram given below:

Challenges of Spooling:

-

Spooling can only be used for resources with associated memory, like a Printer.

-

It may also cause race condition. A race condition is a situation where two or more processes are accessing a resource and the final results cannot be definitively determined.

For Example: In printer spooling, if process A overwrites the job of process B in the queue, then process B will never receive the output.

-

It is not a full-proof method as after the queue becomes full, incoming processes go into a waiting state.

For Example: If the size of the queue is 10 blocks then whenever there are more than 10 processes, they will go in a waiting state.

:::

Hold and Wait

Hold and wait is a condition in which a process is holding one resource while simultaneously waiting for another resource that is being held by another process. The process cannot continue till it gets all the required resources.

In the diagram given below:

- Resource 1 is allocated to Process 2

- Resource 2 is allocated to Process 1

- Resource 3 is allocated to Process 1

- Process 1 is waiting for Resource 1 and holding Resource 2 and Resource 3

- Process 2 is waiting for Resource 2 and holding Resource 1

There are two ways to eliminate hold and wait:-

-

By eliminating wait:

The process specifies the resources it requires in advance so that it does not have to wait for allocation after execution starts.

For Example: Process1 declares in advance that it requires both Resource1 and Resource2

-

By eliminating hold:

The process has to release all resources it is currently holding before making a new request.

For Example: Process1 has to release Resource2 and Resource3 before making request for Resource1

Challenges:

-

As a process executes instructions one by one, it cannot know about all required resources before execution.

-

Releasing all the resources a process is currently holding is also problematic as they may not be usable by other processes and are released unnecessarily.

For example: When Process1 releases both Resource2 and Resource3, Resource3 is released unnecessarily as it is not required by Process2.

No preemption

Preemption is temporarily interrupting an executing task and later resuming it.

For example, if process P1 is using a resource and a high-priority process P2 requests for the resource, process P1 is stopped and the resources are allocated to P2.

There are two ways to eliminate this condition by preemption:

-

If a process is holding some resources and waiting for other resources, then it should release all previously held resources and put a new request for the required resources again. The process can resume once it has all the required resources.

For example: If a process has resources R1, R2, and R3 and it is waiting for resource R4, then it has to release R1, R2, and R3 and put a new request of all resources again.

-

If a process P1 is waiting for some resource, and there is another process P2 that is holding that resource and is blocked waiting for some other resource. Then the resource is taken from P2 and allocated to P1. This way process P2 is preempted and it requests again for its required resources to resume the task. The above approaches are possible for resources whose states are easily restored and saved, such as memory and registers.

Challenges:

-

These approaches are problematic as the process might be actively using these resources and halting the process by preempting can cause inconsistency.

For example: If a process is writing to a file and its access is revoked for the process before it completely updates the file, the file will remain unusable and in an inconsistent state.

-

Putting requests for all resources again is inefficient and time-consuming.

Circular Wait

In circular wait, two or more processes wait for resources in a circular order. We can understand this better by the diagram given below:

To eliminate circular wait, we assign a priority to each resource. A process can only request resources in increasing order of priority.

In the example above, process P3 is requesting resource R1, which has a number lower than resource R3 which is already allocated to process P3. So this request is invalid and cannot be made, as R1 is already allocated to process P1.

Challenges:

-

It is difficult to assign a relative priority to resources, as one resource can be prioritized differently by different processes.

For Example: A media player will give a lesser priority to a printer while a document processor might give it a higher priority. The priority of resources is different according to the situation and use case.

Feasibility of Deadlock Prevention

- Mutual exclusion cannot be eliminated completely because some resources are inherently non-shareable

- Hold and wait cannot be eliminated as we cannot know in advance about the required resources to prevent waiting. It is inefficient to prevent a hold by releasing all the resources while requesting a new one

- Preempting processes can cause inconsistency and starting the process over by putting requests for all resources again is inefficient.

- Eliminating circular wait is the only practical way to prevent deadlock.

Elevate your understanding of operating systems with our Free Operating System course. Enroll today and learn from experts!

Conclusion

- Deadlock can be prevented by eliminating any of the four necessary conditions, which are mutual exclusion, hold and wait, no preemption, and circular wait.

- Mutual exclusion, hold and wait and no preemption cannot be violated practically.

- Circular wait can be feasibly eliminated by assigning a priority to each resource. The request is granted if the process requests a resource of higher priority than the resources it currently holds.