Why Do We Need Application Servers Like Puma in Ruby?

Overview

In web development, application servers play a crucial role in keeping web applications running smoothly and efficiently. Ruby, a popular programming language known for its simplicity and elegance, is no exception: deploying a Ruby application calls for a reliable, performant application server. One of the most widely used application servers in the Ruby ecosystem is Puma. In this article, we explore why Ruby applications need application servers like Puma, the benefits these servers provide, and how Puma specifically improves the performance, scalability, and reliability of Ruby applications.

Introduction

What are Application Servers?

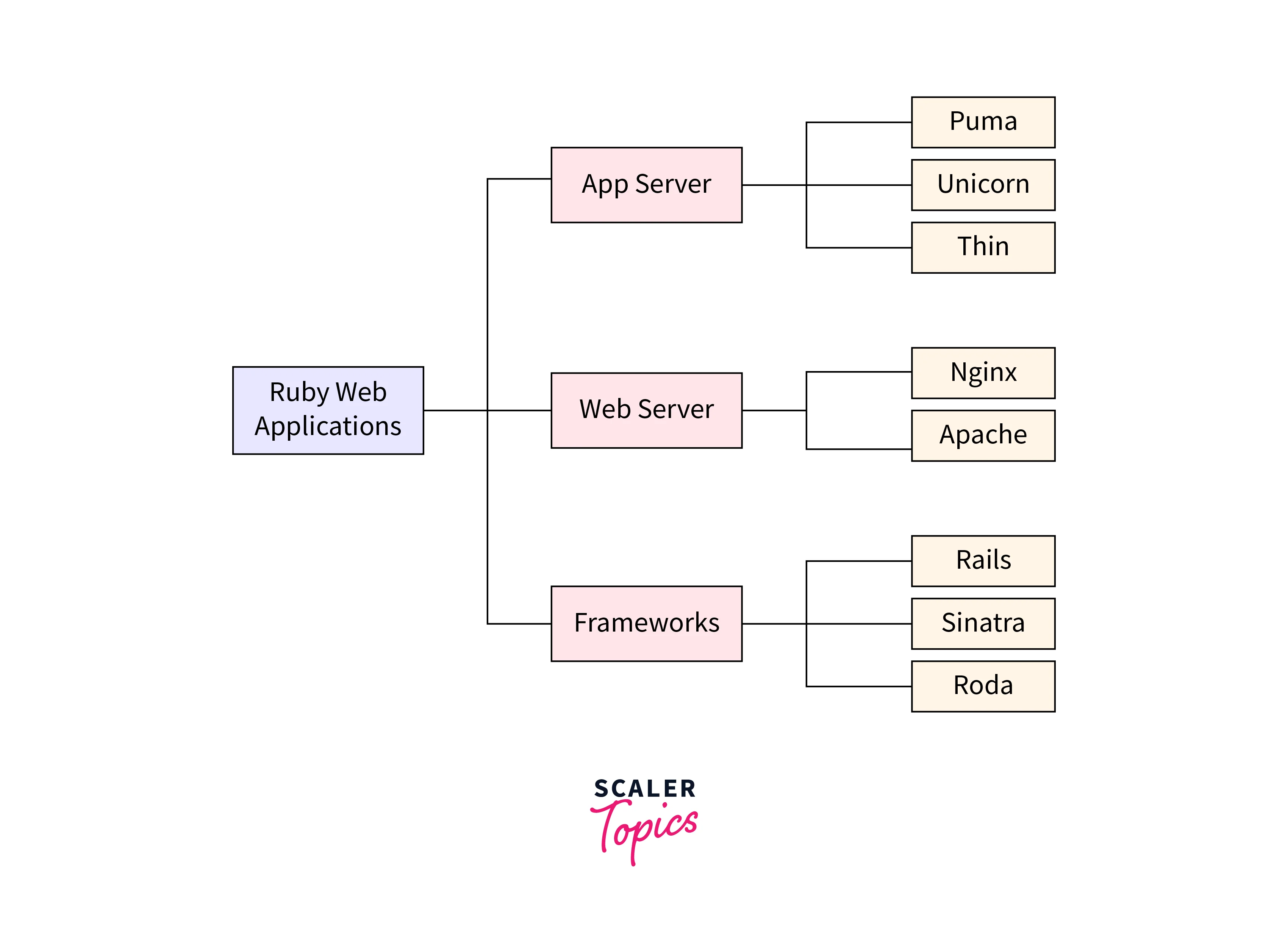

Before delving into the specifics, let's first understand what application servers are in the context of web development. An application server is software that provides the runtime environment for web applications. It acts as an intermediary between the web server (or a reverse proxy such as Nginx), which handles incoming HTTP requests, and the application code. Application servers accept and process these requests, handle concurrency, and provide other services the application needs to run.
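In Ruby, the server and the application talk to each other through Rack: the application is any object that responds to `call(env)` and returns a status, a headers hash, and a body that responds to `each`. Puma, Unicorn, Thin, and Passenger all speak this interface. The sketch below shows that contract in miniature; the `/hello` route and the `serve` helper are hypothetical, and `serve` only imitates, in a few lines, what a real server does after parsing an HTTP request into the `env` hash.

```ruby
# A minimal Rack-style application: any object that responds to #call(env).
APP = lambda do |env|
  if env["REQUEST_METHOD"] == "GET" && env["PATH_INFO"] == "/hello"
    [200, { "content-type" => "text/plain" }, ["Hello from Rack\n"]]
  else
    [404, { "content-type" => "text/plain" }, ["Not found\n"]]
  end
end

# Hypothetical helper: what an application server does for each request,
# stripped to the essentials (build env, call the app, write out the body).
def serve(app, method, path)
  env = { "REQUEST_METHOD" => method, "PATH_INFO" => path }
  status, headers, body = app.call(env)
  text = +""
  body.each { |chunk| text << chunk }    # Rack bodies are iterated with #each
  body.close if body.respond_to?(:close) # and closed when the server is done
  [status, headers, text]
end

p serve(APP, "GET", "/hello")
```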

Importance of Application Servers in Ruby

Ruby, known for its elegant syntax and developer-friendly features, has gained popularity among web developers. However, its standard interpreter, MRI (Matz's Ruby Interpreter, also known as CRuby), has a Global VM Lock (GVL) that lets only one thread execute Ruby code at a time, which limits how efficiently a single Ruby process can handle many concurrent requests. This is where application servers come into play. By running their applications on an application server like Puma, developers can work around these limitations and achieve better performance, scalability, fault tolerance, and reliability.

The Need for Application Servers in Ruby

Concurrent Request Handling

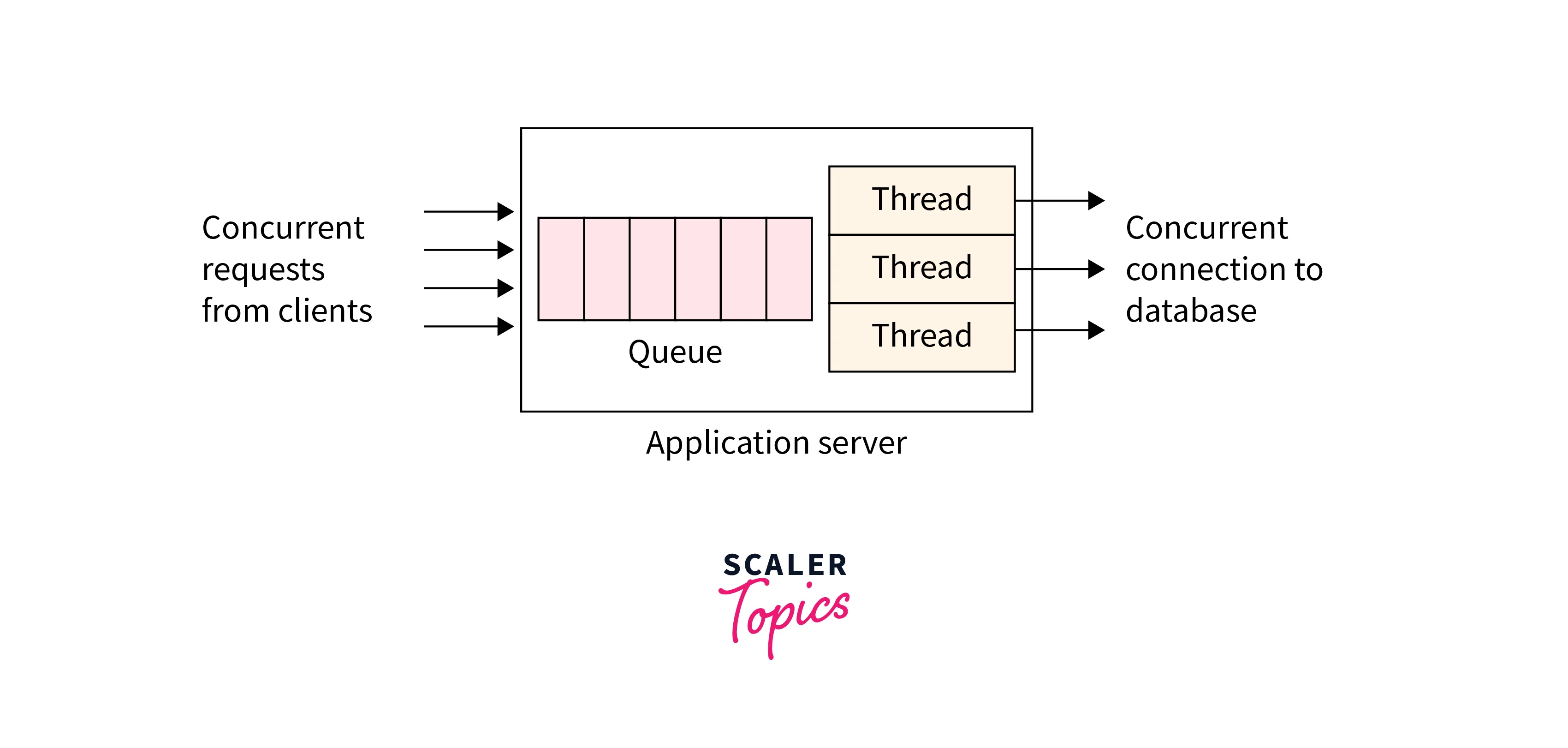

Application servers are vital for handling concurrent requests efficiently in Ruby. While Ruby is expressive and developer-friendly, its standard interpreter is limited in this area: a Ruby program runs on a single thread unless it creates more, and MRI's GVL allows only one thread to execute Ruby code at a time. A server that processed requests strictly one by one would make every client wait for all the requests ahead of it, resulting in slow response times and poor performance under high concurrency.

Application servers solve this problem with multi-threading, multi-processing, or a combination of both. They let a Ruby application handle multiple requests simultaneously, improving throughput and response times.
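The effect is easiest to see with I/O-bound work. In this sketch, `sleep` stands in for a database query or an external API call (MRI releases the GVL while a thread sleeps or waits on I/O); the handler and the timings are illustrative assumptions, not measurements of any real server.

```ruby
# Hypothetical I/O-bound handler: sleep simulates waiting on a database or API.
def handle_request(id)
  sleep 0.1
  "response #{id}"
end

# Run the block and return [its result, elapsed seconds].
def elapsed
  start = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  result = yield
  [result, Process.clock_gettime(Process::CLOCK_MONOTONIC) - start]
end

requests = (1..5).to_a

# One request at a time: total time is roughly the sum of all the waits.
sequential, seq_time = elapsed { requests.map { |id| handle_request(id) } }

# One thread per request: the waits overlap, so total time is roughly one wait.
threaded, thr_time = elapsed do
  requests.map { |id| Thread.new { handle_request(id) } }.map(&:value)
end

puts format("sequential: %.2fs, threaded: %.2fs", seq_time, thr_time)
```

A real server would not start an unbounded number of threads; it reuses a bounded pool, which is how Puma works.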

There are several popular application servers in the Ruby ecosystem, such as Puma, Unicorn, and Passenger. These servers utilize multi-threading or multi-processing to handle multiple requests concurrently.

Multi-threading:

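Application servers like Puma use multi-threading to process multiple requests within a single Ruby process. Each request is handled by a separate thread, and these threads run concurrently. On MRI, the GVL allows only one thread to execute Ruby code at a time, but a thread releases the lock while it waits on I/O, so other threads can make progress in the meantime. Multi-threading is therefore well suited to I/O-bound applications, such as web requests that spend much of their time waiting on databases or external APIs. (On JRuby and TruffleRuby, which have no GVL, threads can also execute Ruby code in parallel across CPU cores.)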

Multi-processing:

Servers like Unicorn and Passenger (in its default, process-based mode) use a multi-process approach. They create multiple Ruby processes, each handling one request at a time independently. Each process runs in its own memory space, so the processes can execute in true parallel on separate CPU cores. Multi-processing is therefore beneficial for CPU-bound applications, which can put the processing power of multiple cores to work.
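A minimal sketch of the multi-process model, assuming a POSIX system where `fork` is available (Linux or macOS with MRI; not Windows or JRuby): a few forked worker processes split some CPU-bound jobs, each computes in its own memory space, and each reports back to the parent through a pipe. The job list and worker count are arbitrary.

```ruby
jobs = (1..6).to_a
worker_count = 3

readers = jobs.each_slice(jobs.size / worker_count).map do |slice|
  reader, writer = IO.pipe
  [reader, writer].each(&:binmode) # Marshal data is binary
  fork do
    reader.close
    # CPU-bound work: each child sums a range by iterating over it.
    results = slice.map { |n| [n, (1..200_000).inject(:+) * n, Process.pid] }
    writer.write(Marshal.dump(results))
    writer.close
    exit!(0) # leave immediately, skipping at_exit hooks inherited from the parent
  end
  writer.close # the parent only reads from this pipe
  reader
end

results = readers.flat_map do |reader|
  data = Marshal.load(reader.read) # read returns once the child closes its end
  reader.close
  data
end
Process.waitall # reap the finished children

pids = results.map(&:last).uniq
puts "#{results.size} jobs handled by #{pids.size} worker processes"
```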

Example:

For instance, a Ruby social media app needs to handle concurrent requests for user profiles, newsfeeds, and notifications. With an application server's multi-threading or multi-processing, the app can process these requests simultaneously instead of queuing them, improving responsiveness and the user experience.

Advantages and Disadvantages:

The following are the advantages and disadvantages of multi-threading and multi-processing.

| | Multi-threading | Multi-processing |
|---|---|---|
| **Advantages** | 1. Improved responsiveness | 1. Efficient CPU utilization |
| | 2. Efficient resource utilization | 2. Isolation and fault tolerance |
| | 3. Simplified programming model | |
| **Disadvantages** | 1. Thread synchronization complexity | 1. Higher memory overhead |
| | 2. Increased complexity | 2. Increased startup time |
| | 3. Limited CPU-bound performance (due to MRI's GVL) | 3. Complex inter-process communication |
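The first disadvantage of multi-threading listed in the table, synchronization, appears as soon as threads share mutable state. In this small sketch, several threads update a shared counter (a hypothetical per-process request statistic), and a `Mutex` makes each read-modify-write step atomic. The thread and iteration counts are arbitrary.

```ruby
# Shared state touched by many request threads in one process.
class RequestCounter
  def initialize
    @count = 0
    @lock = Mutex.new
  end

  # "Read, add one, write back" could interleave between threads and lose
  # updates; synchronize makes the whole step atomic.
  def increment
    @lock.synchronize { @count += 1 }
  end

  def value
    @lock.synchronize { @count }
  end
end

counter = RequestCounter.new
threads = 10.times.map do
  Thread.new { 1_000.times { counter.increment } }
end
threads.each(&:join)

puts counter.value # prints 10000
```

Separate processes avoid this particular problem because they share nothing, but they pay for it with the memory and communication costs listed in the table.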

Scalability and Performance

Application servers are essential for scalability and performance in Ruby applications.

Scalability:

Application servers let Ruby applications scale to handle increased traffic and user load. They do this by running multiple Ruby processes or threads and distributing the load across them, so the application can handle more concurrent requests. To scale horizontally, beyond what a single machine can handle, you run several application server instances and integrate a load balancer that distributes requests evenly across them.

For example, during a viral marketing campaign, a Ruby web app might see a sudden surge in traffic. A single Ruby process handling one request at a time would struggle, causing slower responses or outright failures. With an application server like Puma, the load is spread across a pool of threads, which grows from a configured minimum toward a configured maximum as requests pile up, and, in clustered mode, across several worker processes. Adding more Puma instances behind a load balancer then lets the app scale horizontally and absorb the extra traffic.
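Load balancers use several strategies; the simplest is round-robin, which hands each request to the next instance in turn. The sketch below is a toy version for illustration only; the backend names are hypothetical, and production setups use a dedicated balancer such as Nginx, HAProxy, or a cloud load balancer.

```ruby
# Toy round-robin balancer: each request goes to the next backend in turn.
class RoundRobinBalancer
  def initialize(backends)
    raise ArgumentError, "need at least one backend" if backends.empty?
    @backends = backends
    @next = 0
    @lock = Mutex.new # requests may arrive on several threads at once
  end

  def pick
    @lock.synchronize do
      backend = @backends[@next]
      @next = (@next + 1) % @backends.size
      backend
    end
  end
end

balancer = RoundRobinBalancer.new(%w[app1:3000 app2:3000 app3:3000])
assignments = Array.new(7) { balancer.pick }
puts assignments.tally.inspect
```

Round-robin ignores how busy each instance is; strategies such as least-connections take that into account at the cost of tracking per-backend state.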

Performance:

Ruby, being an interpreted language, may not be as performant as compiled languages. However, application servers help optimize the performance of Ruby applications in several ways.

a. Caching:

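Because an application server keeps the application loaded in memory between requests, frequently accessed data or computed results can be stored in memory and reused instead of being fetched or computed again. The caching itself is usually provided by the framework rather than by the server; Rails, for example, offers `Rails.cache` with an in-memory store. Caching improves response times, reduces the load on databases and external services, and enhances performance. For example, a Ruby-based content management system can keep rendered HTML versions of articles in memory, so subsequent requests for the same articles are served directly from the cache without database retrieval or re-rendering. Note that an in-memory cache belongs to one process; when several worker processes need to share a cache, an external store such as Redis or Memcached is typically used.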
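A minimal sketch of such a per-process cache with expiry, assuming a simple `fetch(key, ttl:) { ... }` interface loosely modeled on `Rails.cache.fetch`; the class name, the keys, and the injectable clock are hypothetical, and this is not the Rails implementation.

```ruby
# Thread-safe in-memory cache with expiry; it lives as long as the process.
class MemoryCache
  Entry = Struct.new(:value, :expires_at)

  # clock is injectable so expiry can be tested without real waiting.
  def initialize(clock: -> { Process.clock_gettime(Process::CLOCK_MONOTONIC) })
    @entries = {}
    @lock = Mutex.new # request threads share this cache
    @clock = clock
  end

  # Return the cached value if it has not expired; otherwise compute it with
  # the block and keep it for ttl seconds. Holding the lock while computing
  # keeps the sketch simple but serializes cache misses.
  def fetch(key, ttl:)
    @lock.synchronize do
      entry = @entries[key]
      return entry.value if entry && entry.expires_at > @clock.call

      value = yield
      @entries[key] = Entry.new(value, @clock.call + ttl)
      value
    end
  end
end

renders = 0
cache = MemoryCache.new
2.times do
  cache.fetch("article:42", ttl: 60) do
    renders += 1 # stands in for a database query plus template rendering
    "<h1>Article 42</h1>"
  end
end
puts "rendered #{renders} time(s)" # prints "rendered 1 time(s)"
```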

b. Connection pooling:

Connection pooling keeps a set of pre-established database connections and reuses them, so a request does not have to open a new connection. The pool is usually provided by the database library rather than by the application server (Active Record, for example, maintains a pool in each process), but its size is tied to the server's configuration: every thread that may query the database needs a connection, so the pool is typically sized to match the number of threads per process. For instance, in a Ruby-based e-commerce application, a request thread grabs an available connection from the pool, executes its query, and returns the connection when it is done. Avoiding a new connection per request reduces overhead and improves performance.
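The mechanism can be sketched in a few lines with Ruby's thread-safe `Queue`. `SimplePool` and `FakeConnection` are hypothetical names for illustration; real applications rely on Active Record's built-in pool or the `connection_pool` gem.

```ruby
class SimplePool
  # factory builds one connection; it is called `size` times up front.
  def initialize(size:, &factory)
    @available = Queue.new
    size.times { @available << factory.call }
  end

  # Check a connection out (blocking until one is free), yield it, and always
  # check it back in, even if the block raises.
  def with
    conn = @available.pop
    begin
      yield conn
    ensure
      @available << conn
    end
  end
end

# Hypothetical stand-in for a real database client.
FakeConnection = Struct.new(:id) do
  def query(sql)
    "conn #{id}: #{sql}"
  end
end

created = 0
pool = SimplePool.new(size: 2) { created += 1; FakeConnection.new(created) }

used = Queue.new
threads = 8.times.map do |i|
  Thread.new { pool.with { |conn| used << conn.id; conn.query("SELECT #{i}") } }
end
threads.each(&:join)
ids = Array.new(used.size) { used.pop }

puts "#{created} connections served #{ids.size} queries" # prints "2 connections served 8 queries"
```

If the pool is smaller than the number of threads, extra threads block in `with` until a connection is returned, which is why the pool size is usually matched to the thread count.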

c. Optimized server configurations:

Application servers expose tunable parameters, such as the number of worker processes, the number of threads per process, and request timeouts, that can be adjusted to improve performance. For example, a Ruby-based real-time chat app may need many concurrent connections across multiple chat rooms. Configuring the application server with more processes or threads, within the memory the machine has available, lets the app handle those connections efficiently and keep real-time communication smooth.
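The trade-off can be put into numbers. This sketch multiplies out instances, workers, and threads to estimate capacity, database connections, and memory; every input, including the 300 MB per worker, is a hypothetical placeholder rather than a measurement, and it assumes each busy thread holds at most one database connection.

```ruby
# Back-of-the-envelope sizing for a threaded, multi-process server.
def capacity(instances:, workers:, threads_per_worker:, mb_per_worker:)
  in_flight = instances * workers * threads_per_worker
  {
    concurrent_requests: in_flight,                 # requests that can run at once
    db_connections: in_flight,                      # one pooled connection per thread
    memory_mb_per_instance: workers * mb_per_worker # ignores the smaller per-thread overhead
  }
end

plan = capacity(instances: 2, workers: 4, threads_per_worker: 5, mb_per_worker: 300)
puts plan[:concurrent_requests]    # prints 40
puts plan[:memory_mb_per_instance] # prints 1200
```

Raising the thread count adds concurrency cheaply but also raises the number of database connections the database must accept; raising the worker count adds parallelism at a much larger memory cost.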

Fault Tolerance and Reliability

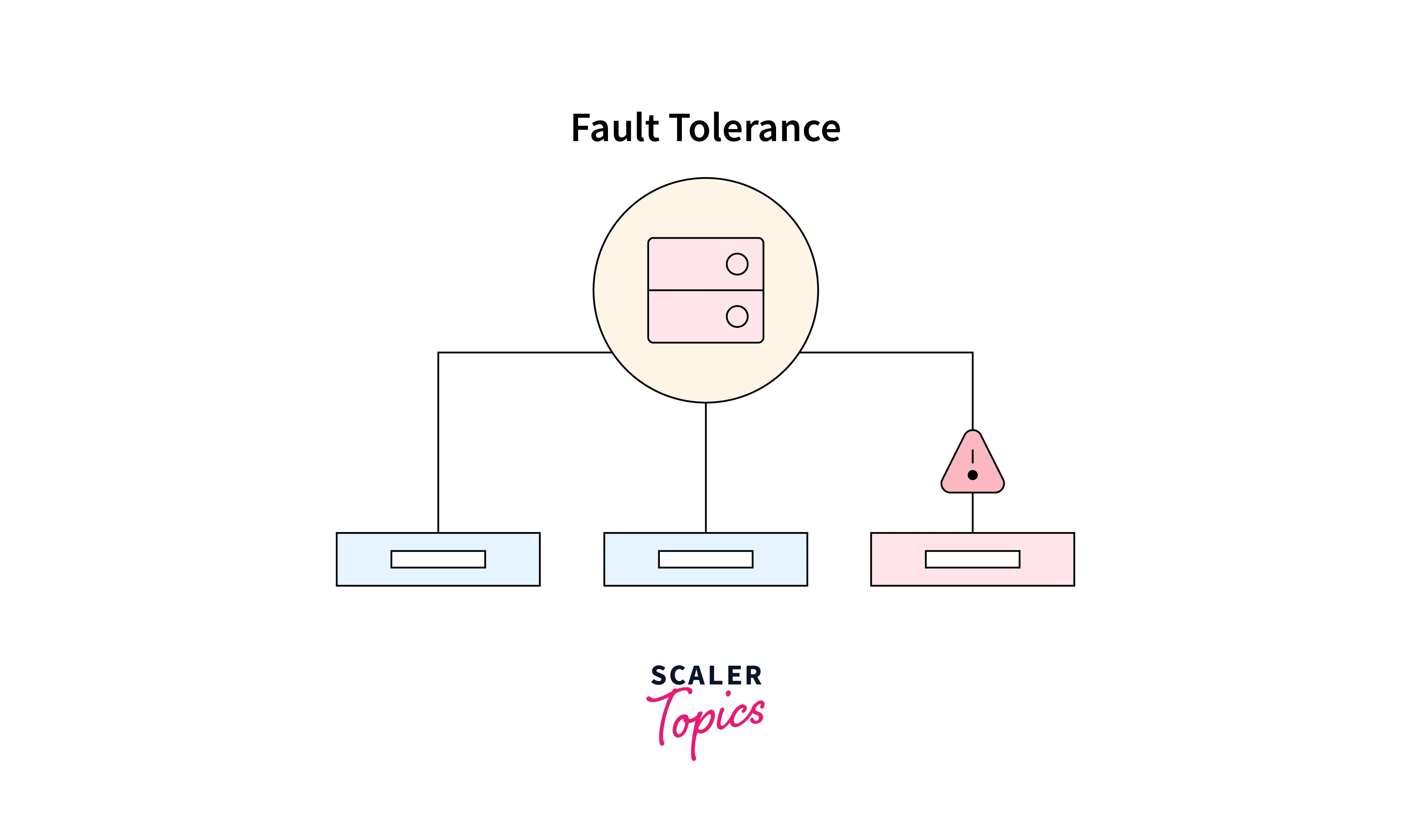

Application servers enhance fault tolerance and reliability in Ruby apps, handling errors and failures to ensure availability and resilience.

Fault tolerance:

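Application servers make Ruby apps more fault tolerant by handling errors without crashing the entire application: if a request raises an unhandled exception, the server logs it and returns an error response (typically a 500) while continuing to serve other requests. Complementary patterns, such as request retries with customizable retry policies and circuit breakers, which prevent cascading failures by temporarily stopping requests to failing components, are usually added at the application or proxy level rather than built into the server. Together, these mechanisms improve fault tolerance and reliability.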
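A circuit breaker can be sketched as a small state machine. This hand-rolled version is an illustration under simple assumptions (a consecutive-failure threshold, a fixed cooldown, an injectable clock, single-threaded use); libraries such as Shopify's Semian provide production implementations, and `flaky_service` is a hypothetical stand-in for a failing dependency.

```ruby
# After `threshold` consecutive failures the circuit "opens" and calls fail
# fast for `cooldown` seconds; then one trial call is let through
# ("half-open"): success closes the circuit, failure opens it again.
# Single-threaded sketch: a breaker shared by threads would need a Mutex.
class CircuitBreaker
  class OpenError < StandardError; end

  attr_reader :state

  def initialize(threshold:, cooldown:, clock: -> { Process.clock_gettime(Process::CLOCK_MONOTONIC) })
    @threshold = threshold
    @cooldown = cooldown
    @clock = clock
    @failures = 0
    @state = :closed
    @opened_at = nil
  end

  def call
    if @state == :open
      raise OpenError, "circuit open" if @clock.call - @opened_at < @cooldown
      @state = :half_open # cooldown elapsed: allow one trial call
    end

    begin
      result = yield
    rescue StandardError
      record_failure
      raise
    end
    @failures = 0
    @state = :closed
    result
  end

  private

  def record_failure
    @failures += 1
    return unless @state == :half_open || @failures >= @threshold
    @state = :open
    @opened_at = @clock.call
  end
end

now = 0.0
breaker = CircuitBreaker.new(threshold: 3, cooldown: 30, clock: -> { now })
flaky_service = -> { raise IOError, "payment API timed out" }

3.times { breaker.call(&flaky_service) rescue nil }
p breaker.state # prints :open
```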

Graceful restarts:

Application servers support graceful restarts, which are essential for deploying code changes without interrupting in-flight requests: the old processes finish the requests they are already handling while new processes take over. This enables zero-downtime deployments, minimizing disruption and keeping the application continuously available. Features such as hot restarts and rolling (phased) restarts, which replace worker processes one at a time, further reduce downtime during updates and maintain a seamless user experience.
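The core of a graceful stop is draining: refuse new work, let in-flight work finish, then exit. The sketch below shows only that principle with a queue and worker threads; `DrainingServer` is a hypothetical class. Puma triggers the real thing with signals, for example `SIGUSR1` for a phased restart and `SIGUSR2` for a hot restart.

```ruby
class DrainingServer
  STOP = Object.new # sentinel: tells a worker thread to exit

  def initialize(threads:)
    @queue = Queue.new
    @done = Queue.new
    @lock = Mutex.new
    @accepting = true
    @workers = Array.new(threads) do
      Thread.new do
        until (job = @queue.pop).equal?(STOP)
          @done << job.call
        end
      end
    end
  end

  def submit(&job)
    @lock.synchronize do
      raise "shutting down: not accepting new requests" unless @accepting
      @queue << job
    end
  end

  # Stop accepting, then enqueue one STOP per worker *behind* the pending
  # jobs, so everything submitted earlier still runs to completion.
  def shutdown
    @lock.synchronize do
      @accepting = false
      @workers.size.times { @queue << STOP }
    end
    @workers.each(&:join)
    Array.new(@done.size) { @done.pop }
  end
end

server = DrainingServer.new(threads: 2)
5.times { |i| server.submit { sleep 0.05; "request #{i} done" } }
finished = server.shutdown
puts finished.size # prints 5
```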

Process and thread management:

Application servers manage the processes and threads they run, handling their creation, termination, and resource allocation. In a multi-process setup, a master process monitors the workers' health and automatically replaces any that crash or become unresponsive. Because the server can detect and recover from these failures, the application as a whole becomes more resilient. Configuration options such as health checks (for example, worker timeouts) and limits on the number of processes and threads keep resource usage under control.
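A toy master process illustrates the idea: it forks workers, waits for any of them to exit, and boots a replacement when one crashes. This is a sketch assuming a POSIX system with `fork` (Linux or macOS with MRI); `supervise` and the crashing workload are hypothetical. Puma's clustered mode works along similar lines and additionally replaces workers that stop checking in within `worker_timeout`.

```ruby
def supervise(worker_count:, max_restarts:, &work)
  boot = lambda do |slot|
    fork do
      code = begin
        work.call(slot)
        0
      rescue StandardError
        1
      end
      exit!(code) # skip at_exit hooks inherited from the master
    end
  end

  workers = {} # pid => slot
  worker_count.times { |slot| workers[boot.call(slot)] = slot }
  restarts = 0

  until workers.empty?
    pid, status = Process.wait2 # block until any worker exits
    slot = workers.delete(pid)
    next if status.success? || restarts >= max_restarts
    restarts += 1
    warn "worker #{slot} (pid #{pid}) exited with #{status.exitstatus}; restarting"
    workers[boot.call(slot)] = slot
  end
  restarts
end

# Hypothetical workload: worker 0 always crashes, worker 1 finishes normally.
restarts = supervise(worker_count: 2, max_restarts: 2) do |slot|
  raise "simulated crash" if slot.zero?
end
puts "restarts: #{restarts}" # prints "restarts: 2"
```

The restart cap matters: a worker that crashes on boot would otherwise be restarted forever.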

Fault isolation:

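Application servers isolate requests in separate processes or threads to contain errors and prevent cascading failures. Processes offer the strongest isolation, since each has its own memory and a crash in one worker does not take down the others. Threads within one process share memory: an exception in one request can be contained, but a bug that corrupts shared state or crashes the process affects every thread in it. This isolation safeguards performance and responsiveness, improving stability and reliability.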
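At the thread level, containment means an exception raised while handling one request is caught and turned into an error result while the other requests finish normally. In this sketch, the three request names are hypothetical, and `Thread#value` re-raises a thread's exception in the caller, where it is rescued.

```ruby
threads = %w[profile newsfeed notifications].map do |name|
  Thread.new do
    Thread.current.report_on_exception = false # reported below instead
    raise ArgumentError, "bad #{name} request" if name == "newsfeed"
    "#{name}: ok"
  end
end

results = threads.map do |thread|
  thread.value # re-raises the thread's exception here, if it had one
rescue ArgumentError => e
  "error: #{e.message}"
end

puts results
# prints:
# profile: ok
# error: bad newsfeed request
# notifications: ok
```

This protects against exceptions only; it cannot protect the other threads from a request that corrupts shared state or crashes the whole process, which is where separate processes help.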

Benefits of Using Puma as an Application Server in Ruby

What is Puma?

Puma is a popular application server for Ruby web applications, built with performance, concurrency, and scalability in mind. Because it implements the Rack interface, it works with Rack-based frameworks such as Ruby on Rails (which uses Puma as its default server), Sinatra, and Hanami, providing a robust execution environment for these applications.

Performance Advantages of Using Puma

The following are the performance advantages of using Puma:

Multi-Threading and Parallel Request Processing

One of the key advantages of using Puma is its support for multi-threading and parallel request processing:

Multi-threading:

Puma uses a multi-threading approach to handle concurrent requests within a single Ruby process. It maintains a pool of threads, independent units of execution, and hands each incoming request to one of them. A thread does not have to wait for other threads to finish their requests before starting its own; on MRI, threads take turns holding the GVL while running Ruby code and release it while waiting on I/O. This lets Puma use system resources efficiently and increases the application's concurrency.

Parallel request processing:

With multi-threading, Puma processes requests concurrently: when a request is received, Puma assigns it to an available thread, so multiple requests are in progress at the same time rather than being processed sequentially. On MRI, the GVL means the threads of one process overlap mainly while waiting on I/O, so to execute Ruby code on several CPU cores at once, Puma runs in clustered mode with multiple worker processes (or on a Ruby implementation without a GVL, such as JRuby). Combining threads and workers lets Puma make the most of the available CPU cores and maximize the application's throughput.
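A fixed-size thread pool captures the core idea: a bounded set of threads is started once and reused, each pulling the next request from a shared queue, so requests beyond the pool size wait in line instead of spawning new threads. This is a simplified sketch, not Puma's implementation; Puma's own pool grows and shrinks between the minimum and maximum set with `threads min, max`, whereas this one stays fixed.

```ruby
class ThreadPool
  def initialize(size)
    @jobs = Queue.new
    @threads = Array.new(size) do
      Thread.new do
        # Queue#pop returns nil once the queue is closed and empty.
        while (job = @jobs.pop)
          job.call
        end
      end
    end
  end

  def post(&job)
    @jobs << job
  end

  # Close the queue and wait for the threads to drain it.
  def shutdown
    @jobs.close
    @threads.each(&:join)
  end
end

pool = ThreadPool.new(3)
served_by = Queue.new
10.times { pool.post { sleep 0.01; served_by << Thread.current } }
pool.shutdown

served = Array.new(served_by.size) { served_by.pop }
puts "#{served.size} requests served by #{served.uniq.size} threads"
```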

Efficient Memory Management

Puma is designed to optimize memory usage, ensuring that the server operates efficiently and minimizes the memory footprint. Here's how Puma achieves efficient memory management:

a. Thread-level memory sharing:

Puma's multi-threading model allows threads to share memory within a single process. This means that memory allocated for common resources, such as application code, libraries, and data structures, can be shared among multiple threads. By sharing memory, Puma reduces the overall memory overhead, as the same resources do not need to be replicated for each thread.

b. Reduced memory duplication:

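In clustered mode, Puma reduces memory duplication by relying on the operating system's copy-on-write (COW) behavior. With `preload_app!`, Puma loads the application in the master process before forking the worker processes, so each worker initially shares the parent's memory pages. As long as a worker does not modify a page, that page remains shared; only pages that are written to get copied. This reduces the total memory footprint compared with loading the application separately in every worker. Threads need no such mechanism, since they already share their process's memory.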
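The difference between the two kinds of sharing can be observed directly: a thread mutates the very object the main thread sees, while a forked child mutates its own copy-on-write view, leaving the parent unaffected. This sketch assumes a POSIX system with `fork` (Linux or macOS with MRI); the `settings` hash is arbitrary.

```ruby
settings = { "theme" => "light" }

# A thread shares the process heap: its change is visible to everyone.
Thread.new { settings["theme"] = "dark" }.join
after_thread = settings["theme"]

# A forked child writes to its own copy-on-write copy of the memory.
reader, writer = IO.pipe
pid = fork do
  reader.close
  settings["theme"] = "high-contrast"
  writer.write(settings["theme"])
  writer.close
  exit!(0)
end
writer.close
child_saw = reader.read
reader.close
Process.wait(pid)

puts "after thread: #{after_thread}"           # prints "after thread: dark"
puts "child saw: #{child_saw}"                 # prints "child saw: high-contrast"
puts "parent still has: #{settings["theme"]}"  # prints "parent still has: dark"
```

Pages are only physically copied when written to, which is why preloading the application before forking saves memory: code and data the workers never modify stay shared.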

c. Efficient garbage collection:

Memory allocation and reclamation are handled by Ruby's own garbage collector (GC), not by Puma. What Puma contributes is a structure that keeps this work efficient: a small number of long-lived processes whose threads share one heap, rather than a new process per request. Puma cannot prevent memory leaks in application code, but in clustered mode its workers can be restarted (for example, with a phased restart) to reclaim memory that has grown over time.
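Ruby exposes GC counters through `GC.stat`, which can help show how much garbage a single request creates. This sketch measures allocations around a hypothetical handler; the key names used are those of MRI, and other Ruby implementations report different statistics.

```ruby
# Count the objects allocated while the block runs (MRI GC counters).
def allocations_during
  before = GC.stat(:total_allocated_objects)
  yield
  GC.stat(:total_allocated_objects) - before
end

# Hypothetical request handler that builds a response from 1,000 rows.
handler = -> { Array.new(1_000) { |i| "row #{i}" }.join(",") }

allocated = allocations_during { handler.call }
puts "objects allocated by one simulated request: #{allocated}"
puts "GC runs so far: #{GC.stat(:count)}"
```

Fewer allocations per request means less work for the GC in every worker, which matters more than any server setting for memory behavior.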

d. Configurable worker processes:

Puma lets you configure the number of worker processes. Each worker process runs independently, with its own memory space, so every additional worker adds capacity but also adds memory usage. By adjusting the number of workers to the application's requirements and the available system resources, you can balance memory usage against performance.
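In practice, these settings live in Puma's Ruby configuration file, conventionally `config/puma.rb`. The fragment below is a sketch using Puma's `workers`, `threads`, `preload_app!`, `port`, and `worker_timeout` directives; the environment variable names follow the convention of Rails' generated config, and all the numbers are placeholders to be tuned for your own hardware and workload.

```ruby
# config/puma.rb: a sketch of a clustered Puma configuration.

# Worker processes (clustered mode); about one per CPU core is a common start.
workers Integer(ENV.fetch("WEB_CONCURRENCY", 2))

# Minimum and maximum threads per worker process.
max_threads = Integer(ENV.fetch("RAILS_MAX_THREADS", 5))
threads max_threads, max_threads

# Load the app in the master before forking, so workers share memory pages
# through copy-on-write.
preload_app!

port Integer(ENV.fetch("PORT", 3000))

# Replace a worker that fails to check in with the master for this many seconds.
worker_timeout 60
```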

e. Memory optimization techniques:

Puma also avoids needless allocation by reusing resources instead of recreating them. Its thread pool, for example, keeps a bounded set of threads alive between requests rather than spawning a new thread for each one. Such reuse contributes to more efficient memory utilization and overall performance.

Scalability and Concurrency with Puma

Puma offers strong scalability and concurrency. By using multi-threading, it can handle many requests concurrently within each process, keeping the CPU busy while some requests wait on I/O. It also supports both the multi-threading and the multi-process models, and the two can be combined: threads within a single process share memory and keep overhead low, while worker processes add parallelism.

The multi-process (clustered) model lets Puma spawn multiple worker processes, so all of a machine's CPU cores can be put to work. To scale horizontally across machines, you run Puma on several hosts behind a load balancer, such as Nginx, HAProxy, or a cloud load balancer, which distributes incoming requests evenly across the application instances and prevents any single instance from becoming overwhelmed.

Enhanced Fault Tolerance and Reliability

Enhanced fault tolerance and reliability are significant benefits of using Puma as an application server in Ruby. Puma incorporates features and mechanisms that ensure the application remains available, resilient, and can handle errors and failures effectively. Let's explore how Puma enhances fault tolerance and reliability:

a. Error handling and recovery:

Puma provides robust error handling. When the application raises an unhandled exception while processing a request, Puma catches it, logs it, and returns an error response instead of crashing the process; the `lowlevel_error_handler` configuration option lets you customize that response. Error-handling middleware in the application can deal with errors earlier and more gracefully. Puma's error logging helps developers identify and diagnose issues more effectively, leading to quicker resolutions.
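Application-level error handling is commonly written as Rack middleware that wraps the app. This sketch, with the hypothetical names `RescueErrors` and `buggy_app`, turns an unhandled exception into a logged 500 response so that one failing request does not affect the others.

```ruby
require "stringio"

# Rack middleware: convert unhandled exceptions into a logged 500 response.
class RescueErrors
  def initialize(app, logger: $stderr)
    @app = app
    @logger = logger
  end

  def call(env)
    @app.call(env)
  rescue StandardError => e
    @logger.puts("#{env["REQUEST_METHOD"]} #{env["PATH_INFO"]} failed: #{e.class}: #{e.message}")
    [500, { "content-type" => "text/plain" }, ["Internal Server Error\n"]]
  end
end

# Hypothetical app with a bug on one route.
buggy_app = lambda do |env|
  raise KeyError, "missing user id" if env["PATH_INFO"] == "/users"
  [200, { "content-type" => "text/plain" }, ["ok\n"]]
end

log = StringIO.new
app = RescueErrors.new(buggy_app, logger: log)
p app.call({ "REQUEST_METHOD" => "GET", "PATH_INFO" => "/users" }).first # prints 500
p app.call({ "REQUEST_METHOD" => "GET", "PATH_INFO" => "/" }).first      # prints 200
print log.string
```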

b. Graceful restarts:

Puma supports graceful restarts, allowing you to deploy code changes or update the application without disrupting ongoing requests. During a restart, Puma ensures that existing requests are completed before shutting down processes or threads. This zero-downtime deployment capability prevents service interruptions and maintains continuous availability for users. Graceful restarts also enable seamless rollbacks in case of issues with new deployments.

c. Process and thread management:

Puma manages processes and threads to enhance fault tolerance. In clustered mode, the master process monitors its worker processes: if a worker crashes, or stops checking in within the configured `worker_timeout`, the master terminates it if necessary and boots a replacement. This self-healing capability keeps a failure in one worker from taking down the whole application, contributing to its reliability and stability.

d. Fault isolation:

Puma contains failures by running requests in separate threads and, in clustered mode, in separate worker processes. An exception raised in one request does not affect the others, and a crash in one worker leaves the other workers running. Threads in the same worker do share memory, however, so state corrupted by one request can still affect the others in that process. By isolating requests, Puma keeps failures from cascading through the application, minimizing the impact of errors and improving overall reliability.

e. Monitoring and diagnostics:

Puma provides basic monitoring and diagnostic features that support fault tolerance and reliability. It can log each request (the `log_requests` option), writes errors to its log, and exposes runtime statistics, such as thread-pool usage and the request backlog, through `Puma.stats` and its optional control app. Combined with an external error tracker and metrics system, these let developers proactively detect and address bottlenecks or failure points, improving the application's overall reliability.
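An access log is simple enough to sketch as Rack middleware: one line per request with method, path, status, and duration. `AccessLog` and the health-check app are hypothetical; the duration covers only the time the app takes to return its response triple, not the time spent streaming the body to the client.

```ruby
require "stringio"

# Rack middleware writing one access-log line per request.
class AccessLog
  def initialize(app, io: $stdout)
    @app = app
    @io = io
  end

  def call(env)
    start = Process.clock_gettime(Process::CLOCK_MONOTONIC)
    status, headers, body = @app.call(env)
    ms = (Process.clock_gettime(Process::CLOCK_MONOTONIC) - start) * 1000
    @io.puts(format("%s %s %d %.1fms", env["REQUEST_METHOD"], env["PATH_INFO"], status, ms))
    [status, headers, body]
  end
end

health = ->(_env) { [200, { "content-type" => "text/plain" }, ["ok\n"]] }
log = StringIO.new
AccessLog.new(health, io: log).call({ "REQUEST_METHOD" => "GET", "PATH_INFO" => "/health" })
print log.string
```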

Alternatives to Puma

Other Popular Application Servers in Ruby

While Puma is a widely used application server in the Ruby ecosystem, there are alternatives as well, including Unicorn, Thin, and Passenger. Each has its own strengths and features that make it suitable for specific use cases.

Comparison of Different Application Servers

When choosing an application server for a Ruby application, it is essential to consider factors such as performance, scalability, reliability, ease of use, and community support. Comparing different application servers based on these factors can help developers make an informed decision. Factors like concurrency model, memory footprint, monitoring capabilities, and integration with Ruby frameworks should be carefully evaluated.

Conclusion

- Application servers provide infrastructure for high-performance, scalable Ruby web apps.

- They enable concurrent request handling and improved performance through multi-threading or multi-processing.

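- Puma combines multi-threading with optional multi-process (clustered) workers, while Unicorn and Passenger (by default) use a multi-process approach.

- Application servers offer features like process/thread management and graceful restarts, and they work behind load balancers to scale across machines.

- They support performance through memory management and tunable server configuration, and their long-lived processes make caching and connection pooling effective.

- Application servers improve fault tolerance through error handling and fault isolation, complemented by application-level patterns such as request retries.

- Choose the right server based on your workload (I/O-bound or CPU-bound), traffic volume, and fault-tolerance requirements.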