Research Papers on Artificial Intelligence

Overview

Research papers on artificial intelligence (AI) are the main way the field advances its understanding and development of intelligent systems. They cover a wide range of topics, including machine learning, natural language processing, computer vision, and robotics, and they report new methods, empirical results, and breakthroughs. By documenting algorithms, methodologies, and applications, AI papers foster collaboration, drive progress, and serve as references for students, professionals, and enthusiasts. They also shape how AI technology develops and how it affects various industries.

Introduction

Artificial intelligence, as a field of study, emerged from the desire to create intelligent systems capable of performing tasks that typically require human intelligence. Research papers on artificial intelligence have been instrumental in advancing the field and have contributed to the rapid growth and widespread adoption of AI technologies in various domains.

Early AI research focused on foundational concepts and theories such as symbolic reasoning and expert systems, in which knowledge is encoded as hand-written rules. The rise of machine learning, and later deep learning, brought a paradigm shift: instead of relying on hand-coded rules, algorithms learn patterns from data and use them to make predictions. This change transformed both AI research and its applications.

Research papers on artificial intelligence serve as the foundation for further advances and applications in the field. They let researchers communicate their findings, share methodologies, and collaborate with peers, driving progress and innovation in AI research and development.

Discussion of AI Technologies

Research papers on artificial intelligence cover a wide array of AI technologies, each studied through rigorous investigation and experimentation. By sharing new methods and empirical results, these papers drive the continuous evolution and refinement of AI systems. Below are some key AI technologies commonly discussed in research papers:

Machine Learning

Machine learning algorithms form the backbone of many AI applications, and research papers extensively explore their development and optimization. These papers investigate different types of machine learning approaches, such as supervised learning, unsupervised learning, and reinforcement learning. They propose innovative algorithms, architectures, and optimization techniques to enhance the performance and efficiency of machine-learning models. Furthermore, research papers on machine learning often address specific challenges, such as dealing with high-dimensional data, handling imbalanced datasets, and improving interpretability.
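As a minimal illustration of supervised learning, the sketch below fits a straight line to labeled examples by batch gradient descent on the mean squared error. The data, learning rate, and epoch count are hypothetical choices for illustration; real models and optimizers are far more elaborate.

```python
# Minimal supervised-learning sketch: fit y = w*x + b by batch gradient descent
# on mean squared error. Data and hyperparameters are illustrative assumptions.

def fit_line(xs, ys, lr=0.05, epochs=2000):
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

if __name__ == "__main__":
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # labels generated from y = 2x + 1
    w, b = fit_line(xs, ys)
    print(f"w={w:.3f}, b={b:.3f}")  # prints w=2.000, b=1.000
```

The model "learns" only from the labeled pairs; the same loop structure (predict, measure error, follow the gradient) underlies much larger supervised models.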

Natural Language Processing (NLP)

Research papers on artificial intelligence also focus on NLP, a subfield that deals with enabling machines to understand, interpret, and generate human language. These papers explore techniques for tasks such as text classification, sentiment analysis, information retrieval, and language translation. NLP research papers often present state-of-the-art models and methodologies, including deep learning architectures like recurrent neural networks (RNNs) and transformer models. They delve into language representation, word embeddings, syntactic and semantic parsing, and discourse analysis, among other topics.
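To make text classification concrete, here is a minimal sketch of sentiment analysis with a bag-of-words multinomial Naive Bayes classifier. This is a classic baseline, not one of the deep learning architectures mentioned above, and the tiny training set and labels are hypothetical.

```python
import math
from collections import Counter, defaultdict

def train_nb(docs):
    """docs: list of (text, label). Returns class counts, per-class word counts, vocabulary."""
    class_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in docs:
        tokens = text.lower().split()  # naive whitespace tokenization
        class_counts[label] += 1
        word_counts[label].update(tokens)
        vocab.update(tokens)
    return class_counts, word_counts, vocab

def predict_nb(model, text):
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in class_counts.items():
        # log P(label) + sum of log P(word | label), with add-one (Laplace) smoothing
        score = math.log(count / total_docs)
        total_words = sum(word_counts[label].values())
        for token in text.lower().split():
            if token not in vocab:
                continue  # ignore words never seen during training
            score += math.log((word_counts[label][token] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

if __name__ == "__main__":
    model = train_nb([
        ("great movie loved it", "pos"),
        ("what a wonderful film", "pos"),
        ("terrible plot boring film", "neg"),
        ("awful movie hated it", "neg"),
    ])
    print(predict_nb(model, "loved this wonderful movie"))  # prints pos
```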

Computer Vision

Computer vision research papers tackle the challenges of enabling machines to interpret and understand visual information. These papers delve into various computer vision tasks, including object detection, image recognition, image segmentation, and image generation. They propose innovative convolutional neural network (CNN) architectures, feature extraction techniques, and image processing algorithms. Computer vision research papers also explore areas such as video analysis, 3D reconstruction, visual tracking, and scene understanding, contributing to advancements in autonomous vehicles, surveillance systems, and augmented reality.
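The core operation of a CNN layer is a 2D convolution: a small kernel slides over the image and computes a weighted sum at each position. The pure-Python sketch below computes a valid (unpadded), stride-1 cross-correlation, which is what deep learning libraries implement under the name "convolution". The hand-set vertical-edge kernel is an illustrative assumption; in a CNN the kernel weights are learned.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation over lists of rows."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            # Weighted sum of the image patch under the kernel
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out

def relu(feature_map):
    """Element-wise ReLU, the nonlinearity typically applied after a conv layer."""
    return [[max(0.0, v) for v in row] for row in feature_map]

if __name__ == "__main__":
    image = [[0, 0, 1, 1]] * 4          # dark left half, bright right half
    kernel = [[-1, 1], [-1, 1]]         # responds to dark-to-bright vertical edges
    print(relu(conv2d(image, kernel)))  # prints [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The feature map peaks exactly where the edge is, which is the kind of low-level feature early CNN layers tend to learn.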

Robotics

Research papers on artificial intelligence and robotics focus on developing intelligent robots capable of autonomous decision-making, perception, and interaction with the physical world. These papers cover topics like motion planning, sensor fusion, robot learning, and human-robot interaction. They investigate algorithms for robot localization and mapping, object manipulation, grasping, and navigation in complex environments. Robotics research papers often include experimental evaluations using real robots or simulations, showcasing the practical applicability and performance of the proposed approaches.
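Under strong simplifying assumptions, motion planning can be sketched as a shortest-path search on an occupancy grid. The example below uses breadth-first search on a hypothetical 4-connected grid; real planners also deal with continuous space, robot kinematics, and uncertainty, and often use algorithms such as A* or sampling-based methods.

```python
from collections import deque

def plan_path(grid, start, goal):
    """BFS on a 4-connected occupancy grid (0 = free, 1 = obstacle).
    Returns a shortest list of (row, col) cells from start to goal, or None."""
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk parent links back to the start, then reverse
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0 and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # goal unreachable

if __name__ == "__main__":
    grid = [
        [0, 0, 0],
        [1, 1, 0],
        [0, 0, 0],
    ]
    print(plan_path(grid, (0, 0), (2, 0)))
    # prints [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0)]
```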

Ethical and Societal Implications

As AI technologies become more pervasive, research papers also examine the ethical and societal implications of their development and deployment. These papers discuss topics such as bias and fairness in AI algorithms, transparency and interpretability of AI systems, privacy concerns in data collection and usage, and the impact of AI on employment and social structures. Research in this area proposes guidelines, policy recommendations, and frameworks for responsible AI development and use, so that AI technologies align with societal values and benefit humanity as a whole.
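One common way papers quantify bias is demographic parity, which compares the rate of positive predictions across groups. The sketch below computes the demographic parity difference for hypothetical predictions and group labels. Demographic parity is only one of several fairness criteria, and which criterion is appropriate depends on the application.

```python
def positive_rate(predictions, groups, group):
    """Fraction of members of `group` that received a positive (1) prediction."""
    selected = [p for p, g in zip(predictions, groups) if g == group]
    return sum(selected) / len(selected)

def demographic_parity_difference(predictions, groups):
    """Largest gap in positive-prediction rate between any two groups (0 means parity)."""
    rates = [positive_rate(predictions, groups, g) for g in set(groups)]
    return max(rates) - min(rates)

if __name__ == "__main__":
    predictions = [1, 1, 0, 1, 0, 0, 0, 1]       # hypothetical model outputs
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    print(demographic_parity_difference(predictions, groups))  # prints 0.5
```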

Reinforcement Learning

Reinforcement learning (RL) is a major subfield of machine learning in which an agent learns, through trial-and-error interaction with an environment, a policy that maximizes cumulative reward. Research papers in this area study algorithms such as Q-learning and policy gradient methods, as well as deep reinforcement learning, which uses neural networks to approximate value functions or policies. They explore applications including game playing, robotics, autonomous control, and recommendation systems, and they investigate topics such as the exploration-exploitation trade-off, reward shaping, and multi-agent reinforcement learning.
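A minimal sketch of tabular Q-learning, one of the algorithms named above, on a hypothetical five-state chain: the agent starts at state 0 and receives a reward of 1 only when it reaches state 4. Epsilon-greedy action selection handles the exploration-exploitation trade-off. The environment and the hyperparameters (alpha, gamma, epsilon, episode count) are illustrative assumptions.

```python
import random

N_STATES = 5        # states 0..4; state 4 is the terminal goal
ACTIONS = (0, 1)    # 0 = move left, 1 = move right

def step(state, action):
    """Hypothetical chain environment: reward 1 only on reaching the goal."""
    next_state = max(0, state - 1) if action == 0 else min(N_STATES - 1, state + 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def greedy(q_row, rng):
    best = max(q_row)
    return rng.choice([a for a in ACTIONS if q_row[a] == best])  # random tie-break

def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore with probability epsilon, otherwise exploit current estimates
            action = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(q[state], rng)
            next_state, reward, done = step(state, action)
            # Q-learning target: reward plus discounted best value of the next state
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q

if __name__ == "__main__":
    q = q_learning()
    print(["right" if q[s][1] > q[s][0] else "left" for s in range(N_STATES - 1)])
```

After training, the greedy policy should move right in every non-terminal state, and the learned values approach the discounted returns gamma^(3 - s) for moving right from state s.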

Privacy-Preserving Machine Learning

Privacy-preserving machine learning is an emerging area that addresses how to learn from sensitive data while protecting individuals' privacy. Research papers in this area propose techniques such as federated learning, secure multi-party computation, and differential privacy. Federated learning, for example, trains models on data that stays distributed across devices or institutions, so the raw data is never pooled; because the shared model updates can still leak information, it is often combined with differential privacy or secure aggregation. These papers also analyze the trade-off between the strength of privacy guarantees and model performance.
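As a small, concrete instance of differential privacy, the sketch below releases a count using the Laplace mechanism. A counting query has sensitivity 1 (adding or removing one person changes it by at most 1), so adding Laplace noise with scale 1/epsilon gives epsilon-differential privacy. The records and the epsilon value are hypothetical; smaller epsilon means stronger privacy and noisier answers, which is the privacy/utility trade-off described above.

```python
import random

def laplace_noise(scale, rng):
    """Laplace(0, scale) sample: the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng):
    """epsilon-DP count via the Laplace mechanism (sensitivity 1, so scale = 1 / epsilon)."""
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

if __name__ == "__main__":
    rng = random.Random(0)
    ages = [23, 35, 47, 52, 61, 29]  # hypothetical sensitive records
    print(private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng))
```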

Top Research Papers

Research papers shape the field of artificial intelligence (AI) by presenting new ideas, approaches, and significant advances. Here are some of the research papers (and one influential textbook) that have had a substantial impact on AI:

"Deep Residual Learning for Image Recognition" by Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun (2016)

This paper introduced residual networks (ResNets), which transformed image recognition. Plain deep networks suffer from a degradation problem: beyond a certain depth, adding more layers increases training error. ResNets address this with skip (shortcut) connections, so each block learns a residual function F(x) and outputs F(x) + x, which lets information and gradients flow across many layers. With this design the authors trained networks up to 152 layers deep on ImageNet, showed that deeper networks could achieve higher accuracy, and won the ILSVRC 2015 classification task.

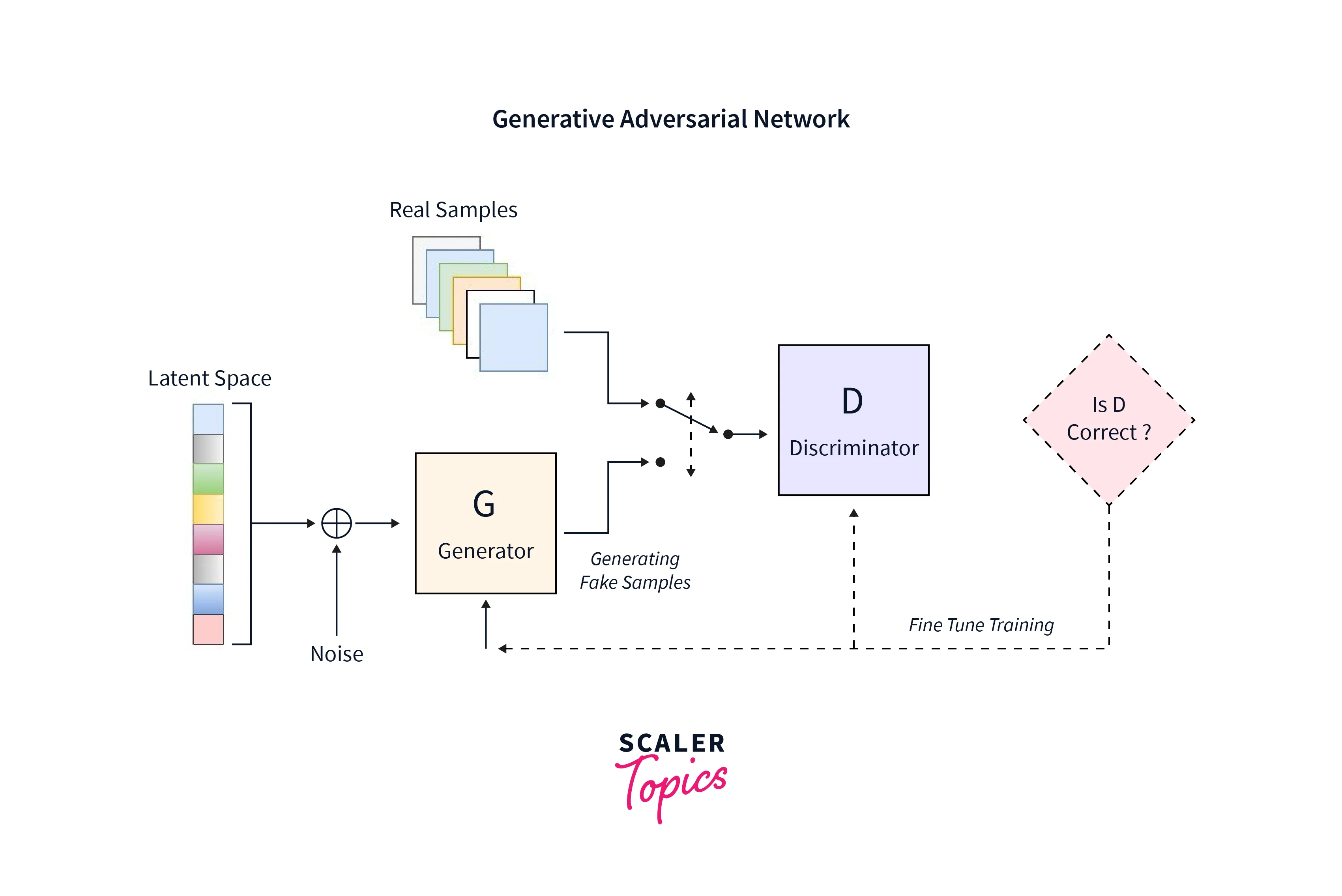
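A residual block computes y = ReLU(F(x) + x), where F is a small stack of layers (here, F(x) = W2 · ReLU(W1 · x)) and the identity shortcut adds the input back. The minimal sketch below uses plain lists and hypothetical weight matrices; it omits convolutions and batch normalization. It shows why identity mappings are easy to represent: if the weights drive F(x) to zero, a non-negative input passes through unchanged.

```python
def relu(v):
    return [max(0.0, x) for x in v]

def matvec(weights, x):
    """Matrix-vector product with the matrix given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def residual_block(x, w1, w2):
    """y = ReLU(F(x) + x) with F(x) = W2 * ReLU(W1 * x).
    Assumes F(x) and x have the same length (identity shortcut)."""
    f = matvec(w2, relu(matvec(w1, x)))              # residual branch F(x)
    return relu([fi + xi for fi, xi in zip(f, x)])   # add shortcut, then ReLU

if __name__ == "__main__":
    zeros = [[0.0, 0.0], [0.0, 0.0]]
    print(residual_block([1.0, 2.0], zeros, zeros))  # prints [1.0, 2.0]
```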

"Generative Adversarial Networks" by Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, et al. (2014)

This seminal paper introduced Generative Adversarial Networks (GANs). A GAN consists of two neural networks, a generator and a discriminator, that are trained in competition with each other. The generator tries to produce synthetic data that resembles the real data distribution, while the discriminator tries to distinguish real samples from synthetic ones. GANs have since become a cornerstone of generative modeling, enabling the creation of realistic images, videos, and other types of data.
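The competition between the two networks is formalized in the GAN paper as a two-player minimax game with value function V(D, G). Here D(x) is the discriminator's estimated probability that x is real, G(z) maps a noise sample z to a synthetic sample, p_data is the real data distribution, and p_z is the noise prior:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator pushes D(x) toward 1 on real data and D(G(z)) toward 0 on generated data, while the generator pushes D(G(z)) toward 1. In practice the paper suggests training G to maximize log D(G(z)) instead, because that gives stronger gradients early in training.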

"Attention Is All You Need" by Vaswani et al. (2017)

This influential paper introduced the Transformer, which revolutionized natural language processing (NLP). Transformers use self-attention to capture contextual relationships between all positions in a sequence, dispensing with recurrent neural networks (RNNs) and convolutions entirely, which also makes training easier to parallelize. In the paper, the Transformer achieved state-of-the-art results on the WMT 2014 English-to-German and English-to-French translation tasks and generalized well to English constituency parsing; it later became the foundation of models such as BERT.
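The core of self-attention is scaled dot-product attention, Attention(Q, K, V) = softmax(QK^T / sqrt(d_k)) V: each query is compared with every key, the scores are turned into weights with a softmax, and the output is the weighted average of the values. The pure-Python sketch below implements only that formula; it omits the learned projections that produce Q, K, and V from the input sequence, as well as multiple heads and masking.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """softmax(Q K^T / sqrt(d_k)) V, with each matrix given as a list of row vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

if __name__ == "__main__":
    out = scaled_dot_product_attention(
        [[1.0, 1.0]],                  # one query
        [[1.0, 0.0], [1.0, 0.0]],      # two identical keys, so equal weights
        [[2.0, 0.0], [0.0, 4.0]],      # values
    )
    print(out)  # prints [[1.0, 2.0]]
```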

"ImageNet Classification with Deep Convolutional Neural Networks" by Krizhevsky, Sutskever, and Hinton (2012)

This groundbreaking paper introduced AlexNet, a deep convolutional neural network (CNN) that greatly improved image classification performance. AlexNet won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) in 2012 with a top-5 test error of 15.3%, compared with 26.2% for the second-best entry, demonstrating the power of deep learning trained on GPUs. The paper's success paved the way for the widespread adoption of deep CNN architectures in computer vision.

"Reinforcement Learning: An Introduction" by Richard S. Sutton and Andrew G. Barto (1998; 2nd edition 2018)

This influential book presents a comprehensive overview of reinforcement learning, a subfield of machine learning concerned with learning optimal behaviors through interactions with an environment. The book provides a theoretical foundation, algorithms, and practical insights into reinforcement learning, serving as a go-to resource for researchers and practitioners in the field. Reinforcement learning has been instrumental in solving complex AI problems, including game playing, robotics, and autonomous control.

"Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks" by Alec Radford, Luke Metz, and Soumith Chintala (2016)

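This paper introduced Deep Convolutional Generative Adversarial Networks (DCGANs), a set of architectural guidelines for building GANs from convolutional networks: strided convolutions instead of pooling, fractionally strided (transposed) convolutions in the generator, batch normalization, and no fully connected hidden layers. DCGANs trained more stably and generated convincing, diverse images from random noise vectors, and the authors showed that the discriminator's learned features are useful for supervised tasks such as image classification. This work advanced unsupervised representation learning and paved the way for subsequent research in image generation and image editing.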
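A DCGAN-style generator turns a noise vector into an image by repeatedly upsampling feature maps with transposed (fractionally strided) convolutions. The sketch below only tracks spatial sizes using the standard transposed-convolution output formula (assuming dilation 1 and no output padding). The kernel size 4, stride 2, padding 1, and 4x4 starting map are illustrative assumptions common in tutorial implementations, not necessarily the paper's exact settings.

```python
def conv_transpose_out(size, kernel, stride, padding):
    """Spatial output size of a transposed convolution (dilation 1, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel

def generator_sizes(start=4, layers=4, kernel=4, stride=2, padding=1):
    """Feature-map side length after each upsampling layer of a hypothetical generator."""
    sizes = [start]
    for _ in range(layers):
        sizes.append(conv_transpose_out(sizes[-1], kernel, stride, padding))
    return sizes

if __name__ == "__main__":
    print(generator_sizes())  # prints [4, 8, 16, 32, 64]
```

With these settings each layer exactly doubles the resolution, so four layers take a 4x4 map projected from the noise vector up to a 64x64 image.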

"Neural Machine Translation by Jointly Learning to Align and Translate" by Bahdanau, Cho, and Bengio (2014)

This influential paper introduced the attention mechanism in sequence-to-sequence models, greatly improving neural machine translation. Instead of compressing the whole source sentence into a single fixed-length vector, the decoder computes, at each output step, a weighted combination of all encoder states. This lets the model learn soft alignments between source and target words and improves translation of long sentences. The work advanced the state of the art in machine translation and inspired attention-based models for many other sequence-to-sequence tasks.
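The attention step can be written out explicitly. In the formulation used in this paper, h_j is the encoder annotation for source position j, s_{i-1} is the decoder's previous hidden state, T_x is the source length, and W_a, U_a, v_a are learned parameters. An alignment score e_{ij} is computed for every source position, normalized with a softmax into weights α_{ij}, and used to form the context vector c_i for output step i:

```latex
e_{ij} = v_a^\top \tanh\left(W_a s_{i-1} + U_a h_j\right), \qquad
\alpha_{ij} = \frac{\exp(e_{ij})}{\sum_{k=1}^{T_x} \exp(e_{ik})}, \qquad
c_i = \sum_{j=1}^{T_x} \alpha_{ij} h_j
```

Because the score combines the two states through a small feed-forward layer, this variant is often called additive attention, in contrast to the scaled dot-product attention used later in the Transformer.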

"BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding" by Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova (2018)

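This paper introduced BERT, a Transformer encoder pre-trained on large unlabeled text corpora with two objectives, masked language modeling and next-sentence prediction, and then fine-tuned for downstream tasks. BERT achieved state-of-the-art results on eleven natural language processing tasks, including the GLUE benchmark and SQuAD question answering.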
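BERT's main pre-training objective is masked language modeling: some input tokens are hidden and the model must predict the originals from bidirectional context. The sketch below follows the corruption scheme described in the BERT paper (select about 15% of tokens; replace a selected token with [MASK] 80% of the time, a random token 10%, and leave it unchanged 10%). Selecting each token independently with probability 0.15 is a simplifying assumption, and the toy vocabulary and whitespace tokens stand in for real WordPiece tokens.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, rng, mask_prob=0.15):
    """Masked-LM corruption. Returns (corrupted tokens, targets), where targets[i]
    is the original token at selected positions and None elsewhere (no loss there)."""
    corrupted, targets = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            targets.append(tok)          # the model must predict this original token
            r = rng.random()
            if r < 0.8:
                corrupted.append(MASK)   # 80%: replace with [MASK]
            elif r < 0.9:
                corrupted.append(rng.choice(vocab))  # 10%: random token
            else:
                corrupted.append(tok)    # 10%: keep unchanged
        else:
            targets.append(None)
            corrupted.append(tok)
    return corrupted, targets

if __name__ == "__main__":
    rng = random.Random(0)
    sentence = "the cat sat on the mat because it was warm".split()
    print(mask_tokens(sentence, sentence, rng))
```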

"DeepFace: Closing the Gap to Human-Level Performance in Face Verification" by Yaniv Taigman, Ming Yang, Marc'Aurelio Ranzato, and Lior Wolf (2014)

This paper presented DeepFace, which combined 3D face alignment with a deep neural network trained on a large collection of labeled face images. DeepFace reached 97.35% accuracy on the Labeled Faces in the Wild (LFW) verification benchmark, close to human-level performance on that dataset.
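Face verification asks whether two face images show the same person. Once a network maps each face to a feature vector (an embedding), the final decision can be as simple as comparing the two vectors against a threshold. The sketch below shows that generic last step with cosine similarity; it does not reproduce DeepFace's alignment or network, and the embeddings and the 0.8 threshold are hypothetical (in practice the threshold is tuned on validation data).

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def same_identity(embedding_a, embedding_b, threshold=0.8):
    """Accept the pair as the same person if the embeddings are similar enough."""
    return cosine_similarity(embedding_a, embedding_b) >= threshold

if __name__ == "__main__":
    print(same_identity([1.0, 0.0], [0.9, 0.1]))  # prints True
    print(same_identity([1.0, 0.0], [0.0, 1.0]))  # prints False
```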

Conclusion

- Research papers in AI contribute to the exchange of knowledge, methodologies, and breakthroughs among researchers, fostering collaboration and innovation.

- Research papers cover a wide range of AI technologies, including machine learning, natural language processing, computer vision, and robotics.

- Several influential research papers have significantly shaped the field of AI, including those on deep learning, generative adversarial networks, attention mechanisms, and reinforcement learning.

- Research papers also explore the ethical and societal implications of AI, addressing issues such as fairness, transparency, and privacy.

- Prominent research papers in AI inspire and guide future research directions, pushing the boundaries of the field and unlocking its potential.